python第一只爬虫:爬豆瓣top250

2016-06-13 16:39

525 查看

这两天不想看论文,不想看书,就学着慕课网上的python爬虫视频课,试着写了个爬豆瓣top250的读书,程序写的较乱,就当记录下。

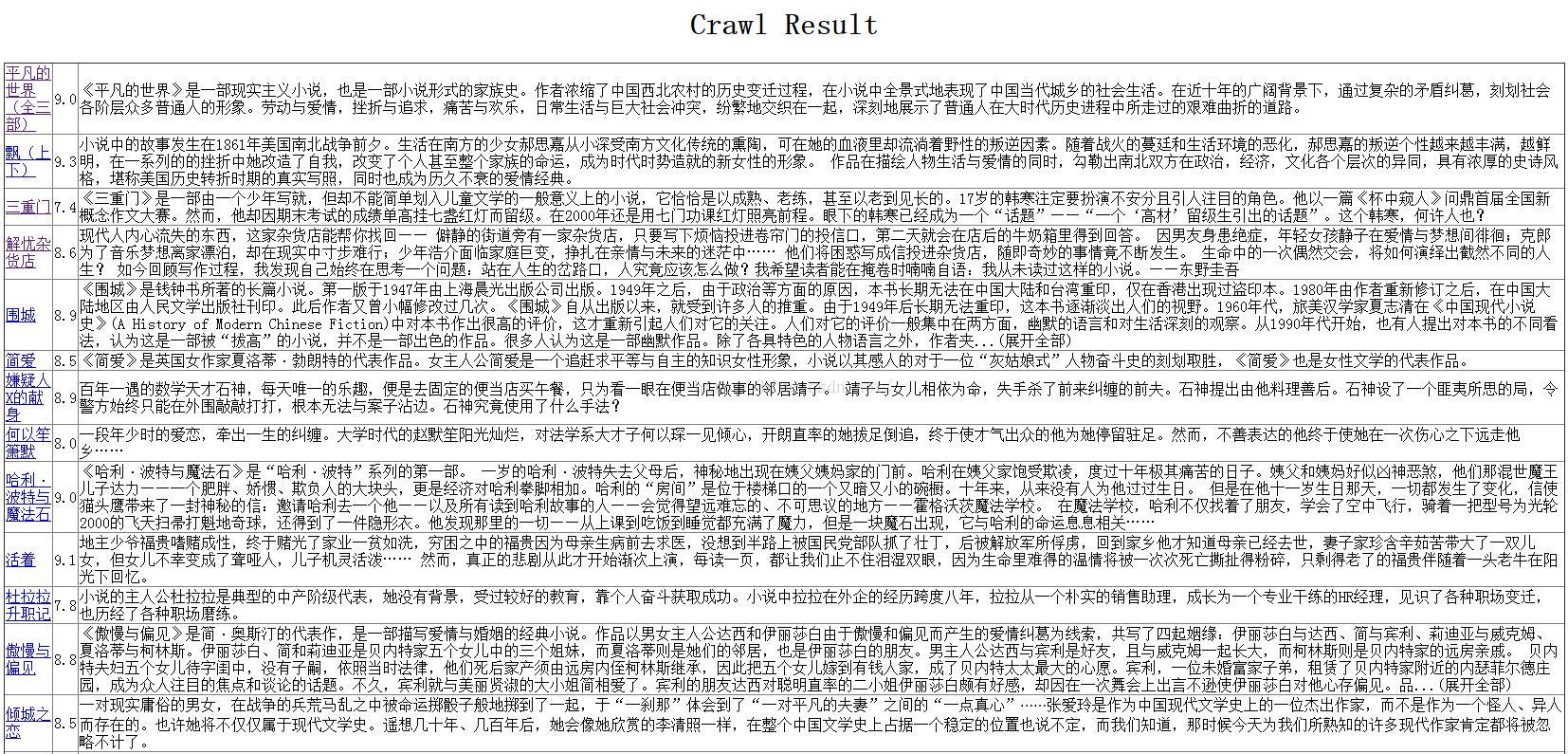

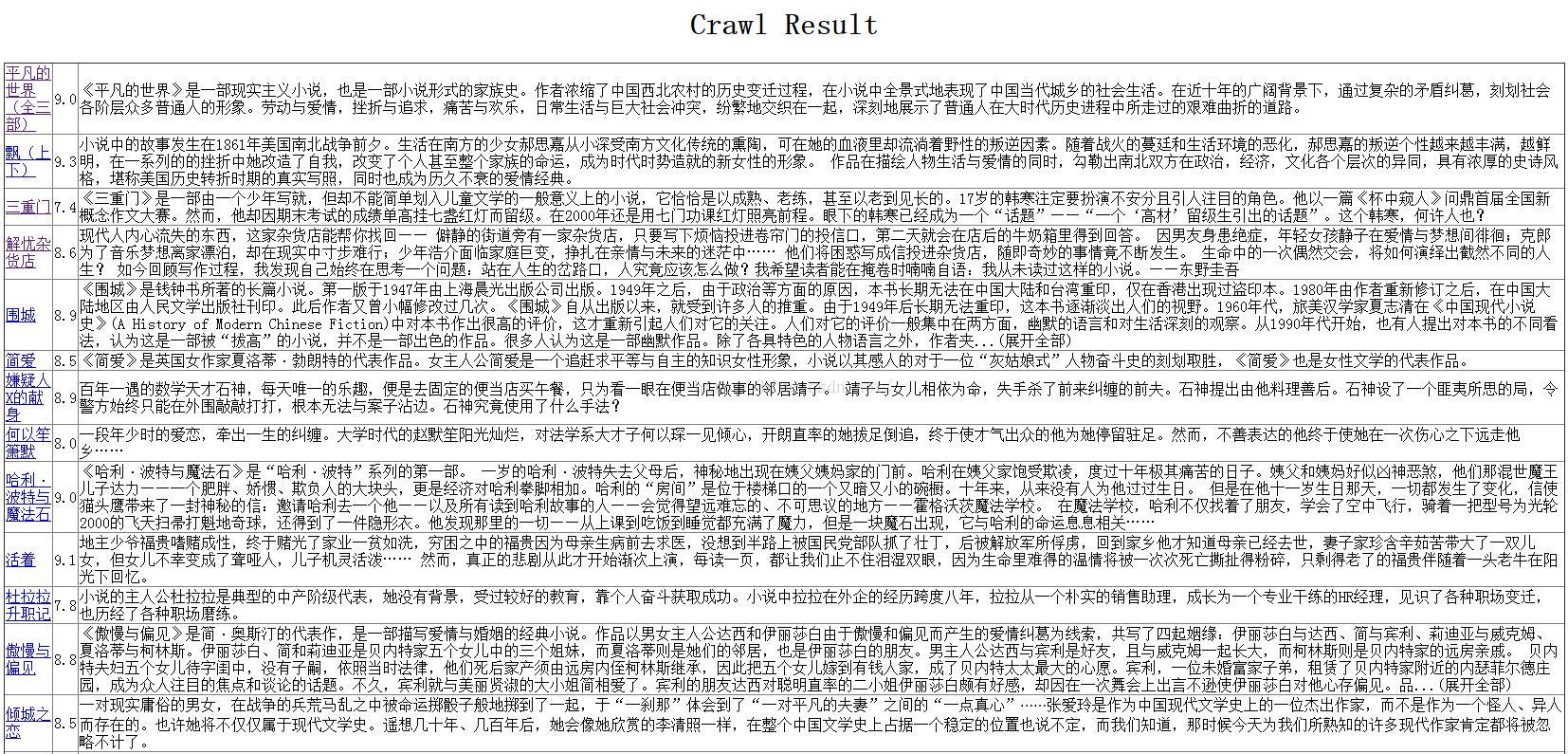

结果以表格形式保存在一个html文件中,第一格是书名,第二个豆瓣评分,第三格是书的简介。

import urllib2

from bs4 import BeautifulSoup

import re

import urlparse

class SpiderMain(object):

def __init__(self):

self.urls=UrlManager()

self.downloader=HtmlDownloader()

self.parser=HtmlParser()

self.outputer=HtmlOutputer()

def craw(self,root_url):

count=1

self.urls.add_new_url(root_url)

while self.urls.has_new_url():

try:

new_url=self.urls.get_new_url()

print 'craw %d : %s'%(count,new_url)

html_cont=self.downloader.download(new_url)

new_urls,new_data=self.parser.parse(new_url,html_cont)

self.urls.add_new_urls(new_urls)

self.outputer.collect_data(new_data)

if count==50:

break

count=count+1

except:

print 'craw failed'

self.outputer.output_html()

class UrlManager(object):

def __init__(self):

self.new_urls=set()

self.old_urls=set()

def add_new_url(self,url):

if url is None:

return

if url not in self.new_urls and url not in self.old_urls:

self.new_urls.add(url)

def add_new_urls(self,urls):

if urls is None or len(urls)==0:

return

for url in urls:

self.add_new_url(url)

def has_new_url(self):

return len(self.new_urls)!=0

def get_new_url(self):

new_url=self.new_urls.pop()

self.old_urls.add(new_url)

return new_url

class HtmlDownloader(object):

def download(self,url):

if url is None:

return None

response =urllib2.urlopen(url)

if response.getcode()!=200:

return None

return response.read()

class HtmlParser(object):

def _get_new_urls(self,page_url,soup):

# new_urls=[]

new_urls=set()

# links=soup.find_all('div',class_="title")

links=soup.find_all(href=re.compile("book.douban.com/subject/[0-9]*"),onclick=re.compile("this,{i:"))

for link in links:

new_url=link['href']

# new_full_url=urlparse.urljoin(page_url,new_url)

new_urls.add(new_url)

return new_urls

# new_urls.append(new_full_url)

def _get_new_data(self,page_url,soup):

res_data={}

res_data['url']=page_url

title_node=soup.find('h1')

if title_node!=None:

res_data['title']=title_node.get_text()

score_node=soup.find("div",id="interest_sectl",class_="")

if score_node!=None:

res_data['score']=score_node.find("strong").get_text()

summary_node=soup.find('div',class_="intro")

if summary_node!=None:

res_data['summary']=summary_node.get_text()

return res_data

def parse(self,page_url,html_cont):

if page_url is None or html_cont is None:

return

soup=BeautifulSoup(html_cont,'html.parser',from_encoding='utf-8')

new_urls=self._get_new_urls(page_url,soup)

new_data=self._get_new_data(page_url,soup)

return new_urls,new_data

class HtmlOutputer(object):

def __init__(self):

self.datas=[]

def collect_data(self,data):

if data is None:

return

self.datas.append(data)

def output_html(self):

fout=open('output.htm','a')

fout.write("<html>")

fout.write("<head>")

fout.write('<meta charset="utf-8"></meta>')

fout.write("<title>Crawl Result</title>")

fout.write("</head>")

fout.write("<body>")

fout.write('<h1 style="text-align:center">Crawl Result</h1>')

fout.write('<table style="border-collapse:collapse;" border="1">')

for data in self.datas:

if data.has_key("title") and data.has_key("summary"):

fout.write("<tr>")

fout.write("<td><a href = '%s'>" % data["url"])

fout.write("%s</a></td>" % data["title"].encode("utf-8"))

fout.write("<td>%s</td>" % data["score"].encode("utf-8"))

fout.write("<td>%s</td>" % data["summary"].encode("utf-8"))

fout.write("<tr>")

fout.write("</table>")

fout.write('<br /><br /><p style="text-align:center">Power By Effortjohn</p>')

fout.write("</body>")

fout.write("</html>")

# def output_html(self):

# fout=open('output.html','w')

# fout.write("<html>")

# fout.write("<body>")

# fout.write("<table>")

# #榛樿ascii

# for data in self.datas:

# fout.write("<tr>")

# fout.write("<td>%s</td>"% data['url'])

# fout.write("<td>%s</td>"% data['title'].encode('utf-8'))

# fout.write("<td>%s</td>"% data['summary'].encode('utf-8'))

# fout.write("<tr>")

# fout.write("</table>")

# fout.write("</body>")

# fout.write("</html>")

#

if __name__=="__main__":

i=0

while(i!=275):

root_url="https://book.douban.com/top250?start=%d"%i

obj_spider=SpiderMain()

obj_spider.craw(root_url)

i=i+25 效果如下:

结果以表格形式保存在一个html文件中,第一格是书名,第二个豆瓣评分,第三格是书的简介。

相关文章推荐

- Python后端[爱伍]

- 详解Python的Flask框架中的signals信号机制

- 学习python出现的问题_类初始化

- python3 urllib post json

- ipython需要2.7版本python, 手动编译; 解决yum在升级python2.7版本后的功能恢复; 解决pip命令失败问题.

- python 中的一些基础算法:递归/冒泡/选择/插入

- 在python中获取时间

- 深入理解 python 中的赋值、引用、拷贝、作用域

- Python的Flask站点中集成xhEditor文本编辑器的教程

- 『Python学习』scipy库学习

- Python特殊语法:filter、map、reduce、lambda [转]

- 有关Python中的division问题

- Python2 抓取百度贴吧图片

- 后台运行python程序输出缓冲区问题

- python+web编程学习总结记录(一)

- python判断字符串

- 实现向 python 脚本中传递列表,字典参数

- Python - 私有方法,专有方法

- Python三维数组

- python客户端监控工具