One-Click Deployment of a High-Availability, Load-Balanced Cluster with SaltStack

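In the previous post we used Salt to push haproxy to a single host. To get both high availability and load balancing, we now add another virtual machine, server4, and build a cluster.

server1 and server4 form the high-availability, load-balancing cluster;

server2 and server3 are the backend real servers that provide the httpd service.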

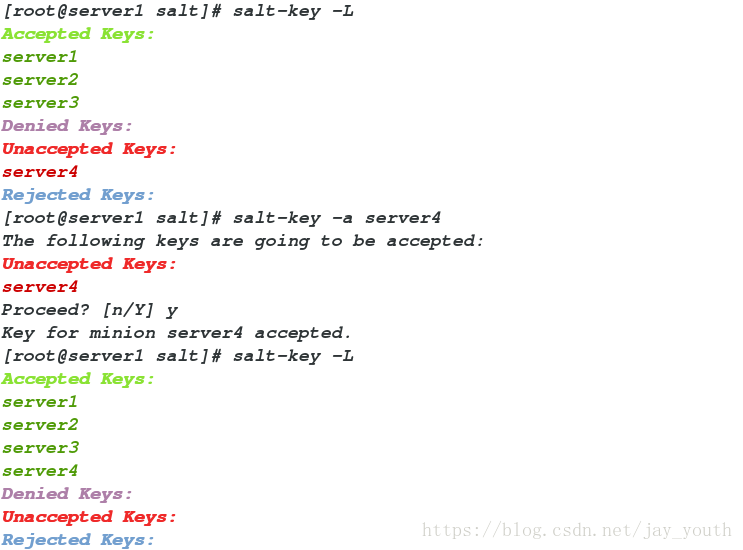

1. Add server4 to the minions managed by server1, and configure the yum repository on server4.

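2. On server1, create a keepalived directory under /srv/salt, change into it, and write the sls state file that installs keepalived:

    vim install.sls

(Write install.sls in stages: first push only the installation, then add the symlinks and the rest. That way you can edit on server1 while verifying each step on server4.)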

    kp-install:
      pkg.installed:
        # dependencies needed to build from source
        - pkgs:
          - openssl-devel
          - gcc
          - mailx
      file.managed:
        # where the source tarball is placed on server4
        - name: /mnt/keepalived-2.0.6.tar.gz
        # where the source tarball lives on server1
        - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
      cmd.run:
        # build and install from source
        - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV &> /dev/null && make &> /dev/null && make install &> /dev/null
        # the build is skipped when this path already exists
        - creates: /usr/local/keepalived

    /etc/keepalived:
      file.directory:
        - mode: 755

    # symlink the keepalived binary and its sysconfig file into the usual
    # system locations so they are easy to use
    /etc/sysconfig/keepalived:
      file.symlink:
        - target: /usr/local/keepalived/etc/sysconfig/keepalived

    /sbin/keepalived:
      file.symlink:
        - target: /usr/local/keepalived/sbin/keepalived
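The `creates` argument is what keeps this state from recompiling keepalived on every run: the command is skipped when the named path already exists. The shell sketch below mimics that behaviour outside Salt. `run_unless_exists` is a hypothetical helper name, and the demo uses a temporary directory instead of /usr/local/keepalived.

```shell
#!/bin/bash
# Sketch of the idea behind cmd.run's "creates" argument:
# run a command only if a marker path does not exist yet.
# run_unless_exists is a hypothetical helper, not part of Salt.
run_unless_exists() {
    marker="$1"
    shift
    if [ -e "$marker" ]; then
        echo "skipped"
        return 0
    fi
    "$@" && echo "ran"
}

# Demo in a throwaway directory instead of /usr/local/keepalived.
demo_dir="$(mktemp -d)"
first="$(run_unless_exists "$demo_dir/keepalived" mkdir "$demo_dir/keepalived")"
second="$(run_unless_exists "$demo_dir/keepalived" mkdir "$demo_dir/keepalived")"
echo "first run: $first, second run: $second"
rm -rf "$demo_dir"
```

The first call creates the directory and the second one is skipped, which is the same reason a repeated `state.sls keepalived.install` does not rebuild an existing installation.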

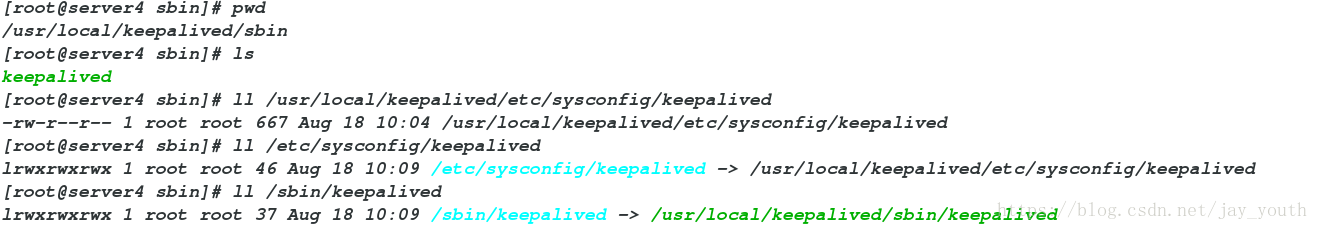

3. On server4, check that keepalived was built and installed from source.

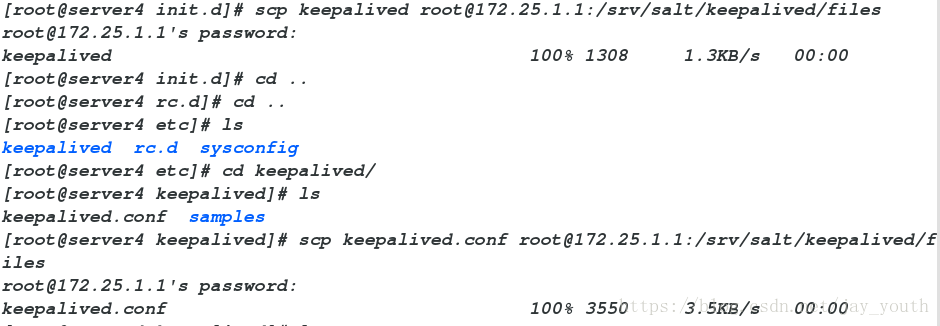

4. Copy the init script and the configuration file from the keepalived build on server4 to /srv/salt/keepalived/files on server1.

5. Run the install state:

    salt server4 state.sls keepalived.install

6. On server1, write service.sls, which manages the configuration file and starts the service:

    include:
      - keepalived.install

    /etc/keepalived/keepalived.conf:
      file.managed:
        - source: salt://keepalived/files/keepalived.conf
        # render the file with the Jinja template engine
        - template: jinja
        # the values below are read from pillar
        - context:
            STATE: {{ pillar['state'] }}
            VRID: {{ pillar['vrid'] }}
            PRIORITY: {{ pillar['priority'] }}

    kp-service:
      file.managed:
        - name: /etc/init.d/keepalived
        - source: salt://keepalived/files/keepalived
        - mode: 755
      service.running:
        - name: keepalived
        - reload: True
        - watch:
          - file: /etc/keepalived/keepalived.conf
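The article does not show the keepalived.conf template itself. As a rough sketch, the keys passed in `context` become template variables, so the template would use `{{ STATE }}`, `{{ VRID }}` and `{{ PRIORITY }}` where the function below uses shell variables. The instance name `VI_1`, the interface `eth0` and the address `192.0.2.100` are placeholders chosen for illustration, not values from this setup.

```shell
#!/bin/bash
# Sketch: print a vrrp_instance block the way the Jinja template would look
# after STATE, VRID and PRIORITY have been filled in from the context.
# VI_1, eth0 and 192.0.2.100 are placeholders, not values from the article.
render_vrrp_instance() {
    state="$1"
    vrid="$2"
    priority="$3"
    cat <<EOF
vrrp_instance VI_1 {
    state $state
    interface eth0
    virtual_router_id $vrid
    priority $priority
    virtual_ipaddress {
        192.0.2.100
    }
}
EOF
}

# What server1 would receive with the pillar values used in this article.
render_vrrp_instance MASTER 1 100
```

Calling `render_vrrp_instance BACKUP 1 50` gives the counterpart for server4; only the state and the priority differ between the two nodes.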

7. Because service.sls uses a Jinja template and pillar values, go to /srv/pillar and write the pillar file that stores the key-value pairs:

    cd /srv/pillar/
    cd keepalived/
    vim install.sls

    # server1 is the MASTER
    {% if grains['fqdn'] == 'server1' %}
    state: MASTER
    vrid: 1
    priority: 100
    # server4 is the BACKUP
    {% elif grains['fqdn'] == 'server4' %}
    state: BACKUP
    vrid: 1
    priority: 50
    {% endif %}
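The conditional above amounts to a lookup from a minion's fqdn to its keepalived role. A minimal shell sketch of the same mapping follows; `pillar_for` is a hypothetical helper used only for illustration.

```shell
#!/bin/bash
# Sketch of the pillar's if/elif logic: map a minion's fqdn to the keys it
# would receive. pillar_for is a hypothetical helper for illustration.
pillar_for() {
    case "$1" in
        server1) echo "state=MASTER vrid=1 priority=100" ;;
        server4) echo "state=BACKUP vrid=1 priority=50" ;;
        *)       echo "" ;;   # no branch matches, so no keys are defined
    esac
}

for host in server1 server4 server2; do
    echo "$host -> $(pillar_for "$host")"
done
```

Hosts that match neither branch, such as server2, get none of the three keys. That is harmless here because keepalived.service is only applied to server1 and server4. On a real master, `salt server1 pillar.items` should list the rendered values.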

8. Write the pillar top.sls in /srv/pillar:

    cd /srv/pillar/
    vim top.sls

    base:
      '*':
        - web.install
        - keepalived.install

9. Under /srv/salt, write the top.sls that assigns states to all nodes:

    cd /srv/salt
    vim top.sls

    base:
      # states applied to server1
      'server1':
        - haproxy.install
        - keepalived.service
      'server4':
        - haproxy.install
        - keepalived.service
      'roles:apach':
        - match: grain
        - httpd.install
      'roles:nginx':
        - match: grain
        - nginx.service

10. Apply top.sls to install and deploy the services on all nodes:

    salt '*' state.highstate

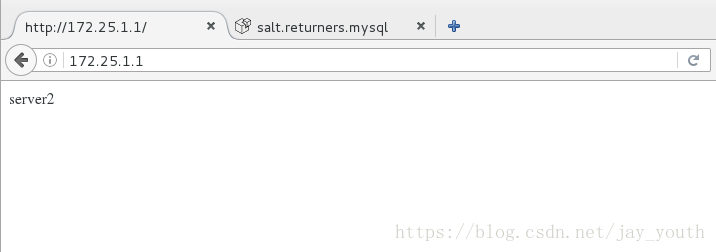

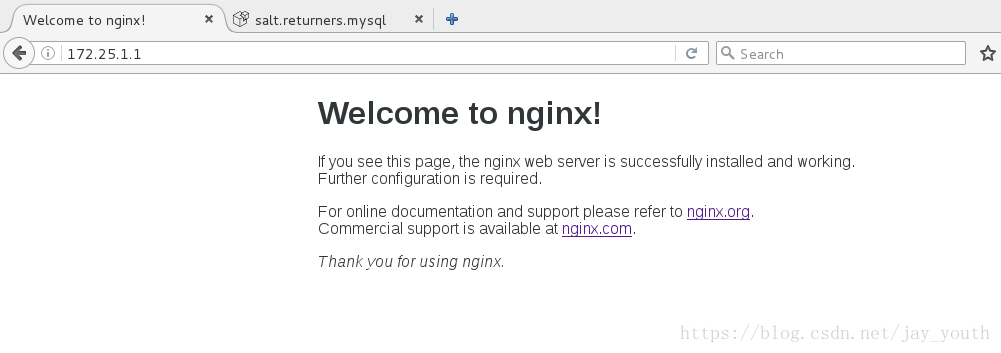

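11. Test load balancing and high availability in a browser.

At this point a problem shows up: if haproxy stops on the host that is currently serving traffic, load balancing fails, yet keepalived does not move the VIP to the other host. We need an extra step to make keepalived fail over in this case: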

1. On server1, write a script that handles this case:

    vim /opt/check_haproxy.sh

    #!/bin/bash
    # If haproxy is running, do nothing; if it is stopped, try to restart it.
    /etc/init.d/haproxy status &> /dev/null || /etc/init.d/haproxy restart &> /dev/null
    # A non-zero exit status from the restart means haproxy is broken, so the
    # script stops keepalived and the service moves to the other node.
    if [ $? -ne 0 ]; then
        /etc/init.d/keepalived stop &> /dev/null
    fi
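The script has three possible outcomes: haproxy is healthy, haproxy was down and came back, or haproxy could not be restarted and keepalived is stopped. The sketch below restates that decision logic with the three commands passed in as arguments, so it can be run without haproxy or keepalived installed. `check_and_failover` is a hypothetical name, and `true`/`false` stand in for the real init scripts.

```shell
#!/bin/bash
# Sketch of the check script's decision logic. The status, restart and stop
# commands are parameters so the logic can be exercised anywhere.
# check_and_failover is a hypothetical name, not part of keepalived.
check_and_failover() {
    status_cmd="$1"
    restart_cmd="$2"
    stop_cmd="$3"
    if $status_cmd > /dev/null 2>&1; then
        echo "healthy"
    elif $restart_cmd > /dev/null 2>&1; then
        echo "restarted"
    else
        # restart failed: give up the VIP by stopping keepalived
        $stop_cmd > /dev/null 2>&1
        echo "failover"
    fi
}

check_and_failover true  true  true    # haproxy is running
check_and_failover false true  true    # stopped, restart succeeds
check_and_failover false false true    # stopped, restart fails
```

On a real node the arguments would be strings such as "/etc/init.d/haproxy status", "/etc/init.d/haproxy restart" and "/etc/init.d/keepalived stop".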

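2. Reference the script in the keepalived configuration file under /srv/salt/keepalived/files (keepalived.conf). The script itself must be present and executable on every node whose configuration refers to it: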

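    vrrp_script check_haproxy {
        script "/opt/check_haproxy.sh"
        interval 2    # run the check every two seconds
        weight 2      # priority adjustment tied to the check result
    }

    ......

    track_script {
        check_haproxy
    }

The weight is not applied cumulatively on each run. With a positive weight, keepalived adds it to the node's priority while the script succeeds and drops it when the script fails. In this setup the actual failover is triggered by the script stopping keepalived once haproxy can no longer be restarted.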
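To make the priority arithmetic concrete, here is a small sketch based on the behaviour described in the keepalived.conf man page for a tracked script's `weight`. `effective_priority` is a hypothetical helper, not a keepalived command, and the value -60 in the closing note is only an illustrative alternative.

```shell
#!/bin/bash
# Sketch of how a tracked script's weight changes VRRP priority:
#   weight > 0: added to the priority while the script succeeds
#   weight < 0: subtracted from the priority while the script fails
# effective_priority is a hypothetical helper, not a keepalived command.
effective_priority() {
    base="$1"
    weight="$2"
    script_ok="$3"    # 1 = script exited 0, 0 = script failed
    if [ "$weight" -gt 0 ] && [ "$script_ok" -eq 1 ]; then
        echo $((base + weight))
    elif [ "$weight" -lt 0 ] && [ "$script_ok" -eq 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# Values from this article: server1 priority 100, server4 priority 50, weight 2.
master_failing="$(effective_priority 100 2 0)"
backup_healthy="$(effective_priority 50 2 1)"
echo "server1 with failing check: $master_failing"
echo "server4 with passing check: $backup_healthy"
```

With these values a failed check leaves server1 at 100 against 52 on server4, so the priorities alone never reorder the two nodes. A negative weight larger than the 50-point gap, for example -60, would lower server1 to 40 and let server4 take over without stopping keepalived.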

3. Push the file to server1 and server4:

    salt server4 state.sls keepalived.service
    salt server1 state.sls keepalived.service

4. Simulate haproxy going down completely. On server1:

    /etc/init.d/haproxy stop
    cd /etc/init.d/
    chmod -x haproxy    # so the check script cannot bring it back

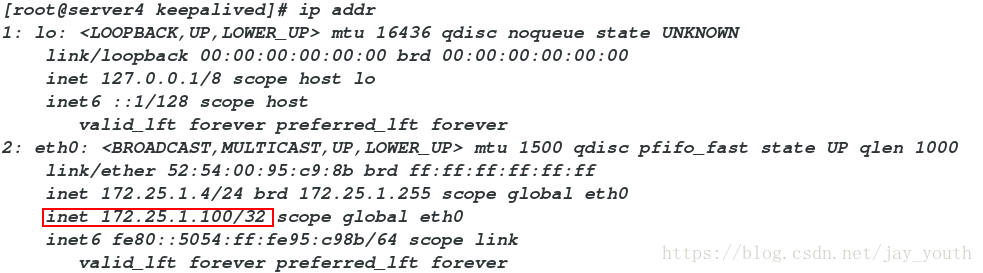

5. After a short while, the VIP has moved to server4.

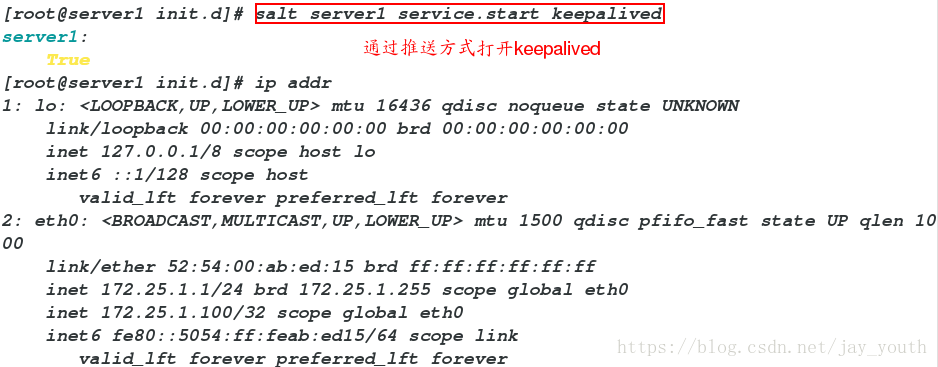
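A quick way to see which node holds the VIP is to look for the address in the output of `ip -o addr show`. The sketch below runs that check against canned sample output so it works anywhere. The address `192.0.2.100` is a placeholder because the article does not state the real VIP, and `holds_vip` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: decide whether a VIP is configured on a host by scanning
# "ip -o addr show" style output read from stdin.
# 192.0.2.100 is a placeholder; the article does not state the real VIP.
holds_vip() {
    # fixed-string match on "inet <address>/" avoids partial-address matches
    grep -Fq "inet $1/"
}

# Canned sample of "ip -o addr show" output, so the sketch runs anywhere.
sample='2: eth0    inet 192.0.2.14/24 brd 192.0.2.255 scope global eth0
2: eth0    inet 192.0.2.100/32 scope global eth0'

if printf '%s\n' "$sample" | holds_vip 192.0.2.100; then
    echo "VIP is on this host"
else
    echo "VIP is elsewhere"
fi
```

On a real node the equivalent call would be `ip -o addr show | holds_vip <your VIP>`, run on server1 and server4 in turn.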

6. Start keepalived again on server1:

    salt server1 service.start keepalived

The VIP now returns to server1:

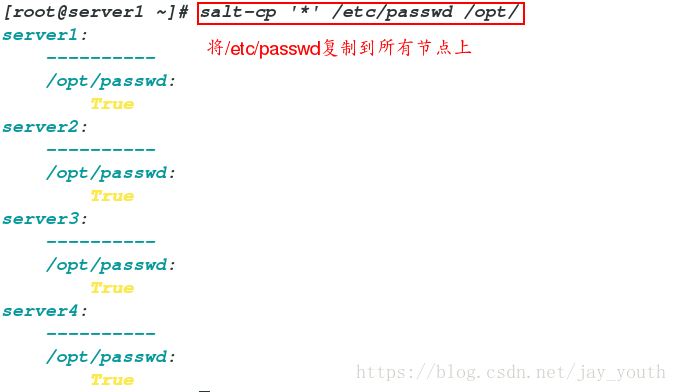

III. Basic usage of two simple Salt modules

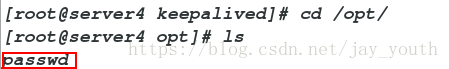

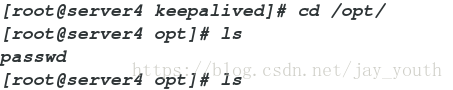

1. Copy a file to the minions:

    salt-cp '*' /etc/passwd /opt/

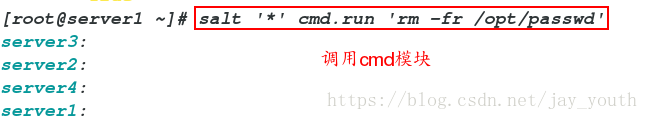

2. Run a command remotely:

    salt '*' cmd.run 'rm -fr /opt/passwd'
