Implementing Backpropagation in Python with Hand-Written Functions

2018-03-25 10:26


Problem description:

The following problems must be solved without using any deep-learning or gradient-descent libraries.

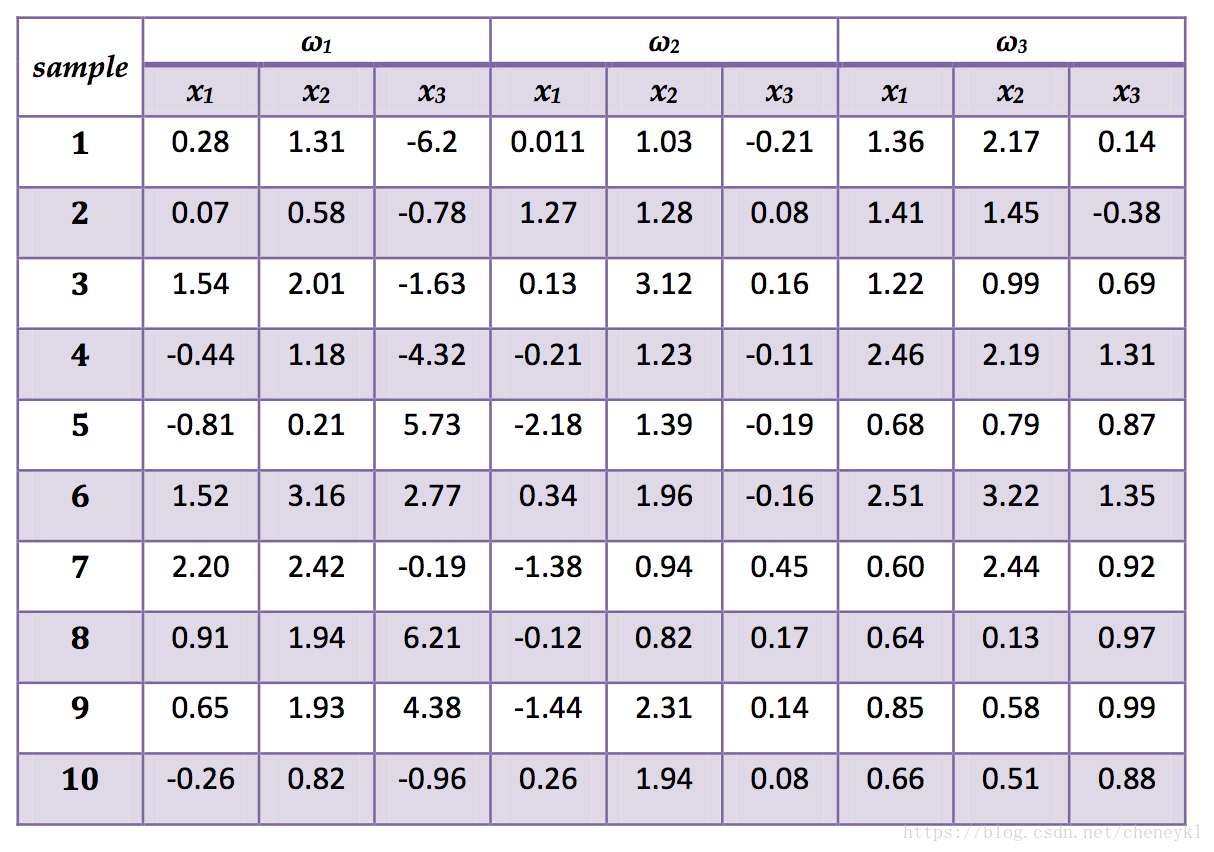

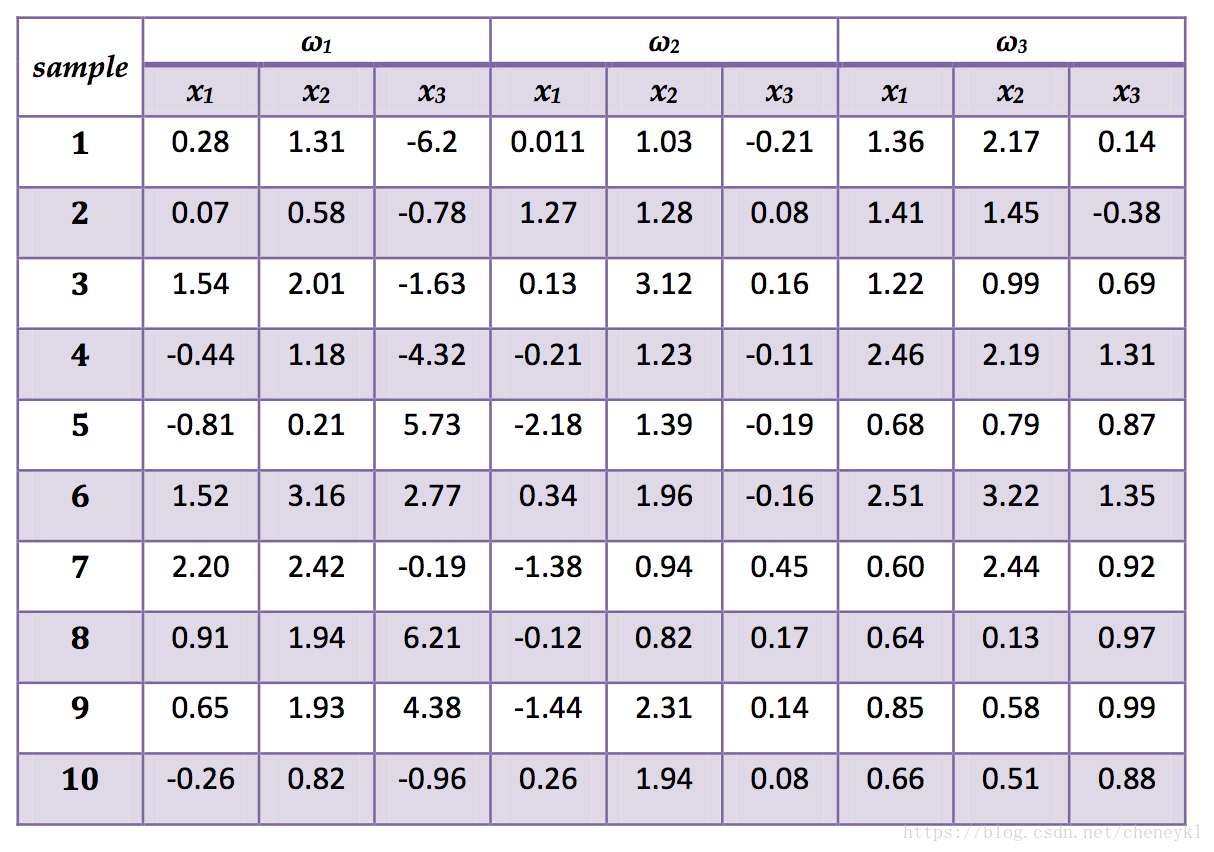

Several exercises will make use of the following three-dimensional data sampled from three categories, denoted $\omega_i$.

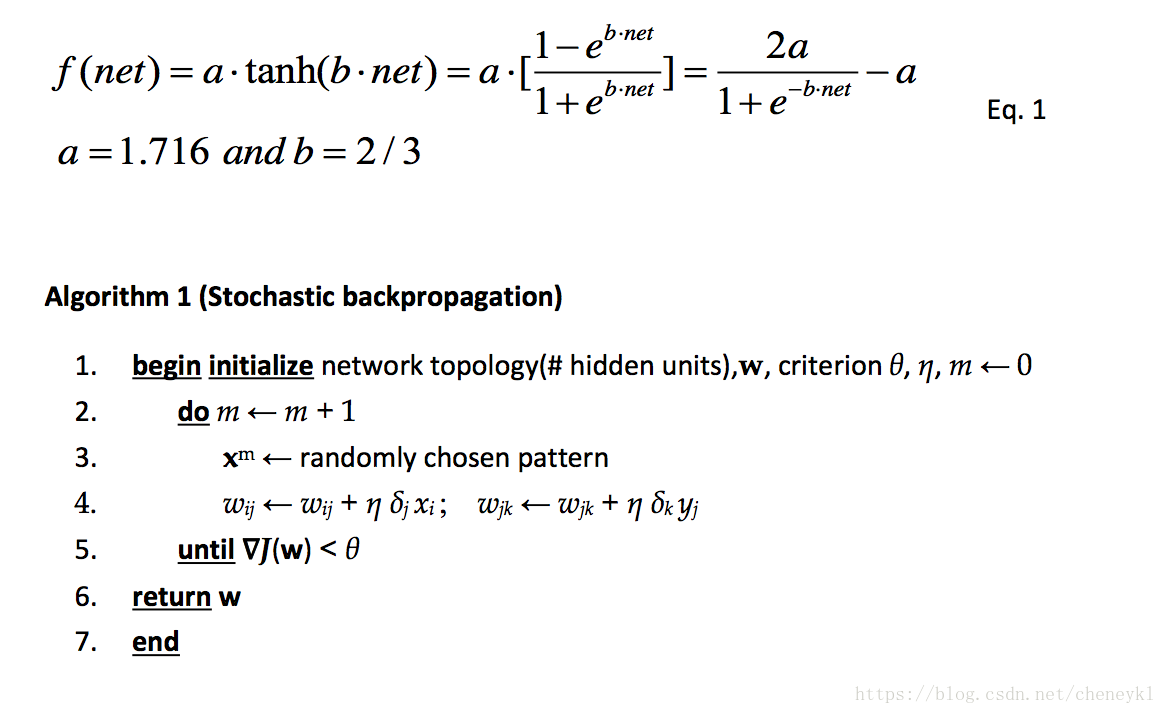

1. Consider a 2-2-1 network with bias, where the activation function at the hidden units and the output unit is a sigmoid $y_j = a\tanh(b\,\mathrm{net}_j)$ with $a = 1.716$ and $b = 2/3$.

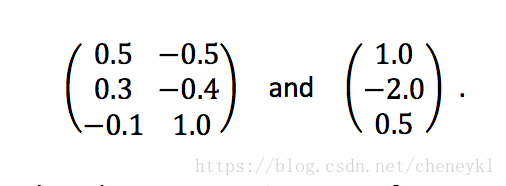

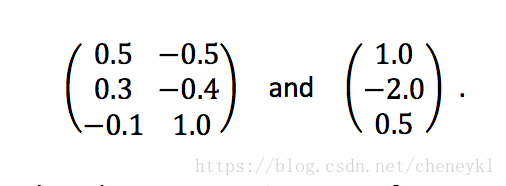
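The same activation can also be written in logistic form: since $\tanh(u) = 2/(1 + e^{-2u}) - 1$, we have $a\tanh(b\,\mathrm{net}) = a\,(2/(1 + e^{-2b\,\mathrm{net}}) - 1)$, and with $b = 2/3$ the exponent constant is $2b = 4/3 \approx 1.333$. Backpropagation also needs the derivative $f'(\mathrm{net}) = ab\,(1 - \tanh^2(b\,\mathrm{net}))$. Its argument is the net activation, not the unit's output. The following minimal sketch checks both facts numerically. The names `f`, `f_logistic_form`, and `f_prime` are illustrative only.

```python
import numpy as np

A, B = 1.716, 2.0 / 3.0   # a and b from the problem statement

def f(net):
    """Activation y = a * tanh(b * net)."""
    return A * np.tanh(B * net)

def f_logistic_form(net):
    """The same function written as a * (2 / (1 + exp(-2b * net)) - 1)."""
    return A * (2.0 / (1.0 + np.exp(-2.0 * B * net)) - 1.0)

def f_prime(net):
    """df/dnet = a * b * (1 - tanh^2(b * net)); the argument is net, not f(net)."""
    return A * B * (1.0 - np.tanh(B * net) ** 2)

net = np.linspace(-5.0, 5.0, 101)
h = 1e-5
numeric = (f(net + h) - f(net - h)) / (2 * h)   # central-difference derivative

print(np.max(np.abs(f(net) - f_logistic_form(net))))   # should be near 0
print(np.max(np.abs(f_prime(net) - numeric)))          # should be near 0
```

At $\mathrm{net} = 0$ the slope is $ab \approx 1.144$, its maximum.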

(a) Suppose the matrices describing the input-to-hidden weights ($w_{ji}$ for $j = 1, 2$ and $i = 0, 1, 2$) and the hidden-to-output weights ($w_{kj}$ for $k = 1$ and $j = 0, 1, 2$) are, respectively,

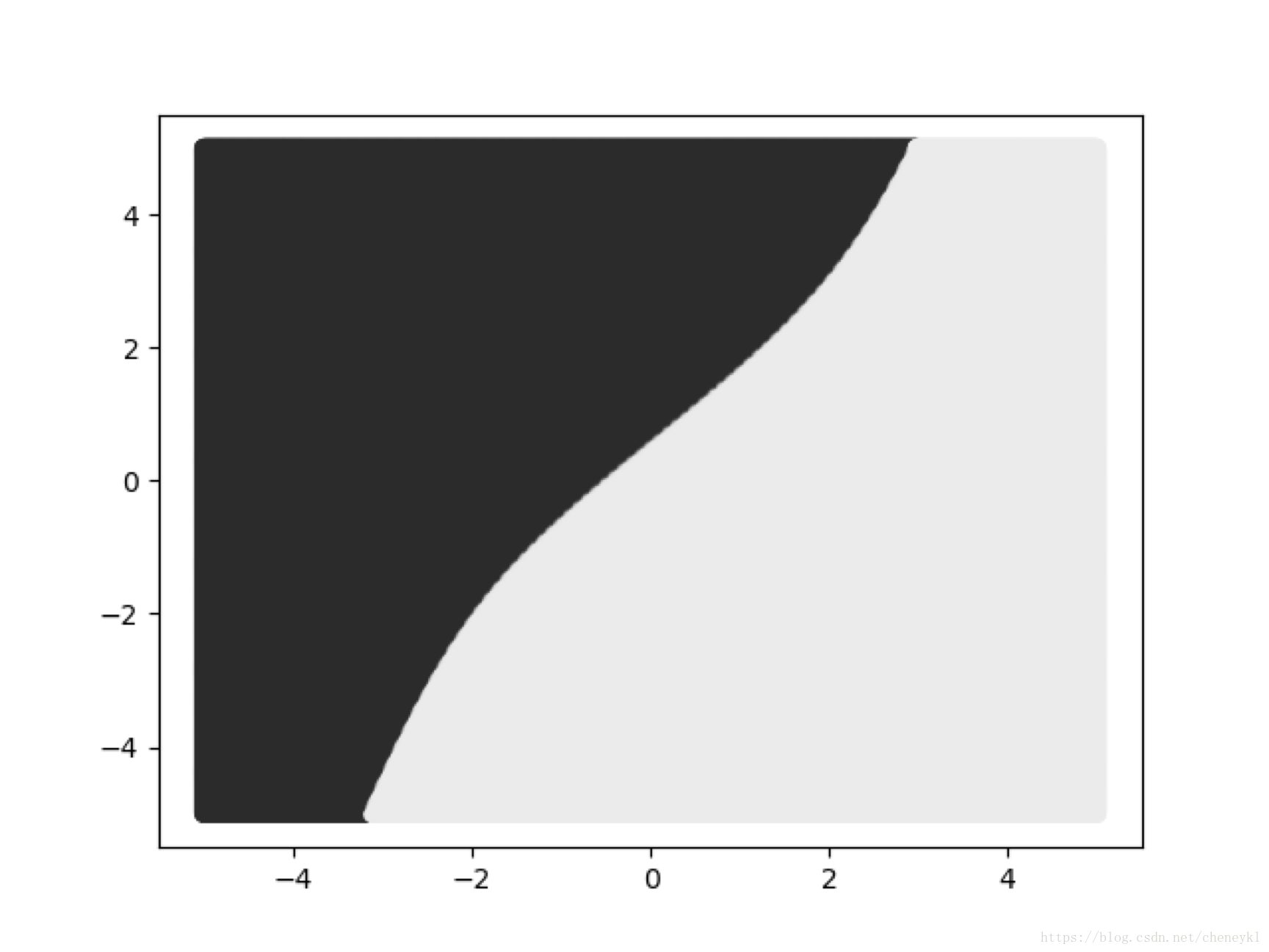

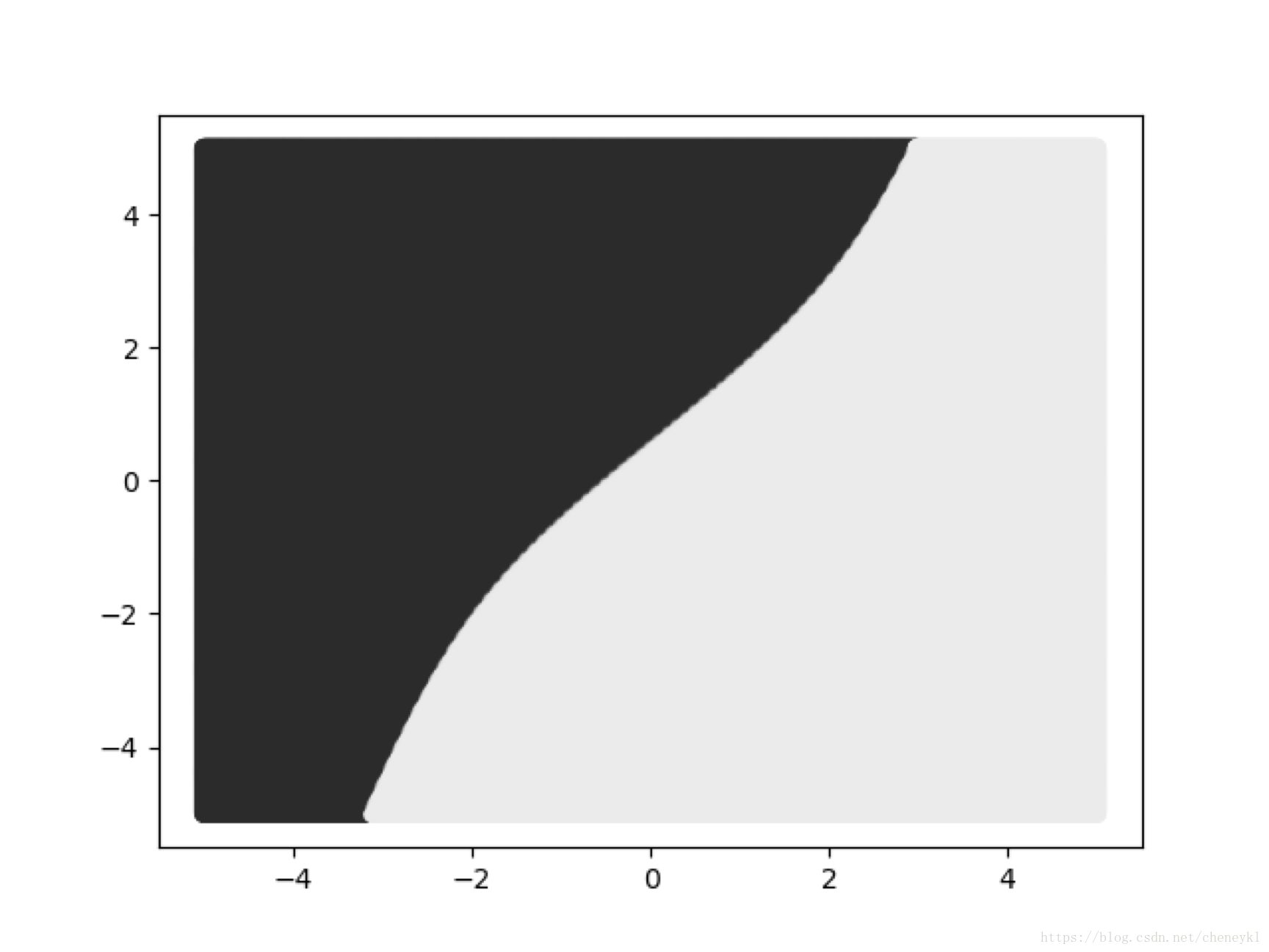

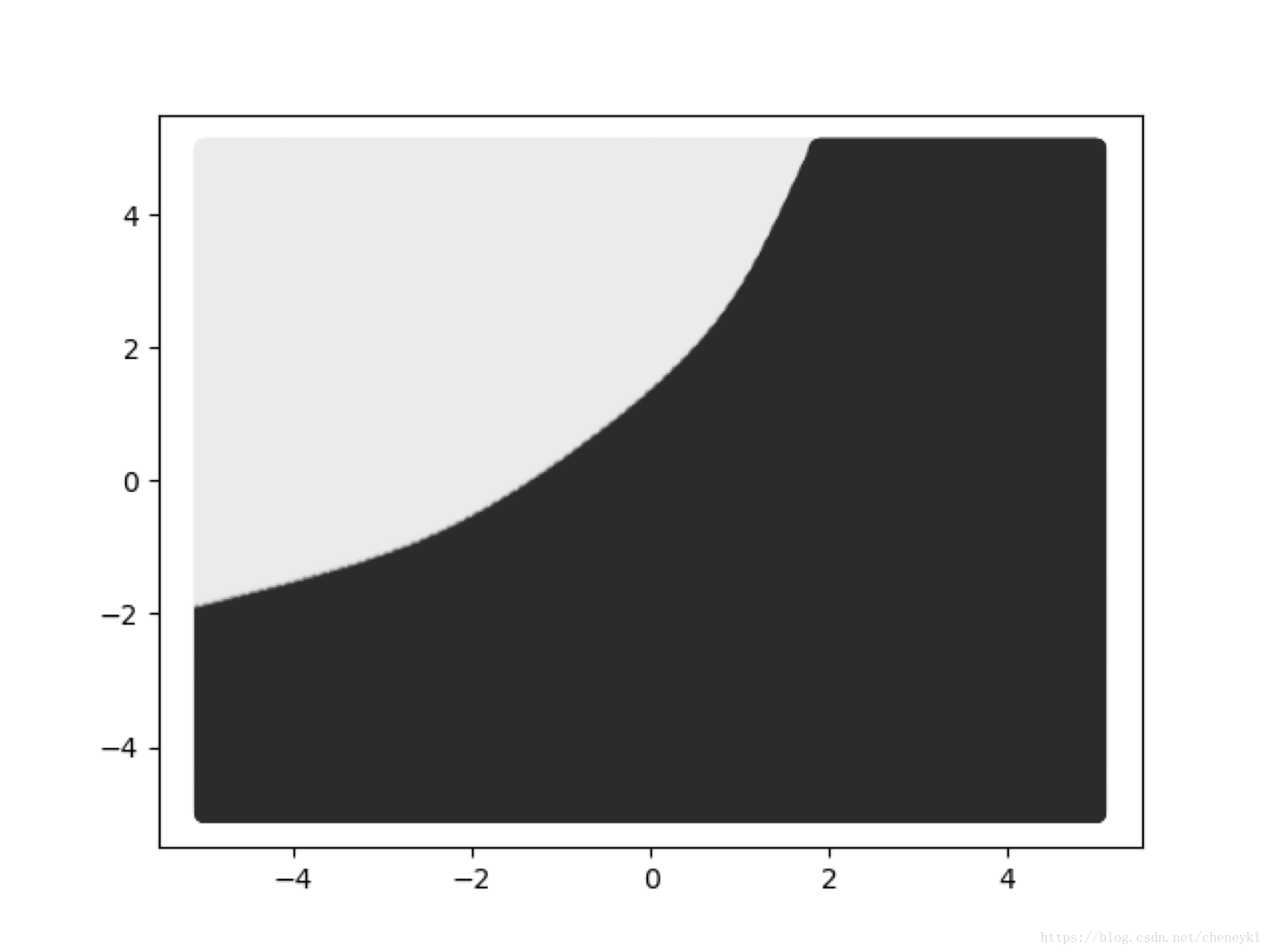

The network is to be used to place patterns into one of two categories, based on the sign of the output unit signal. Shade a two-dimensional $x_1 x_2$ input space ($-5 \le x_1, x_2 \le +5$) black or white according to the category given by the network.

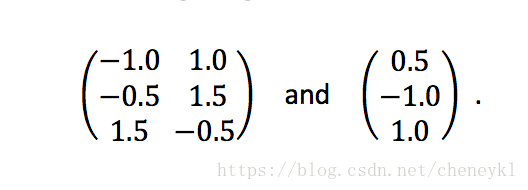

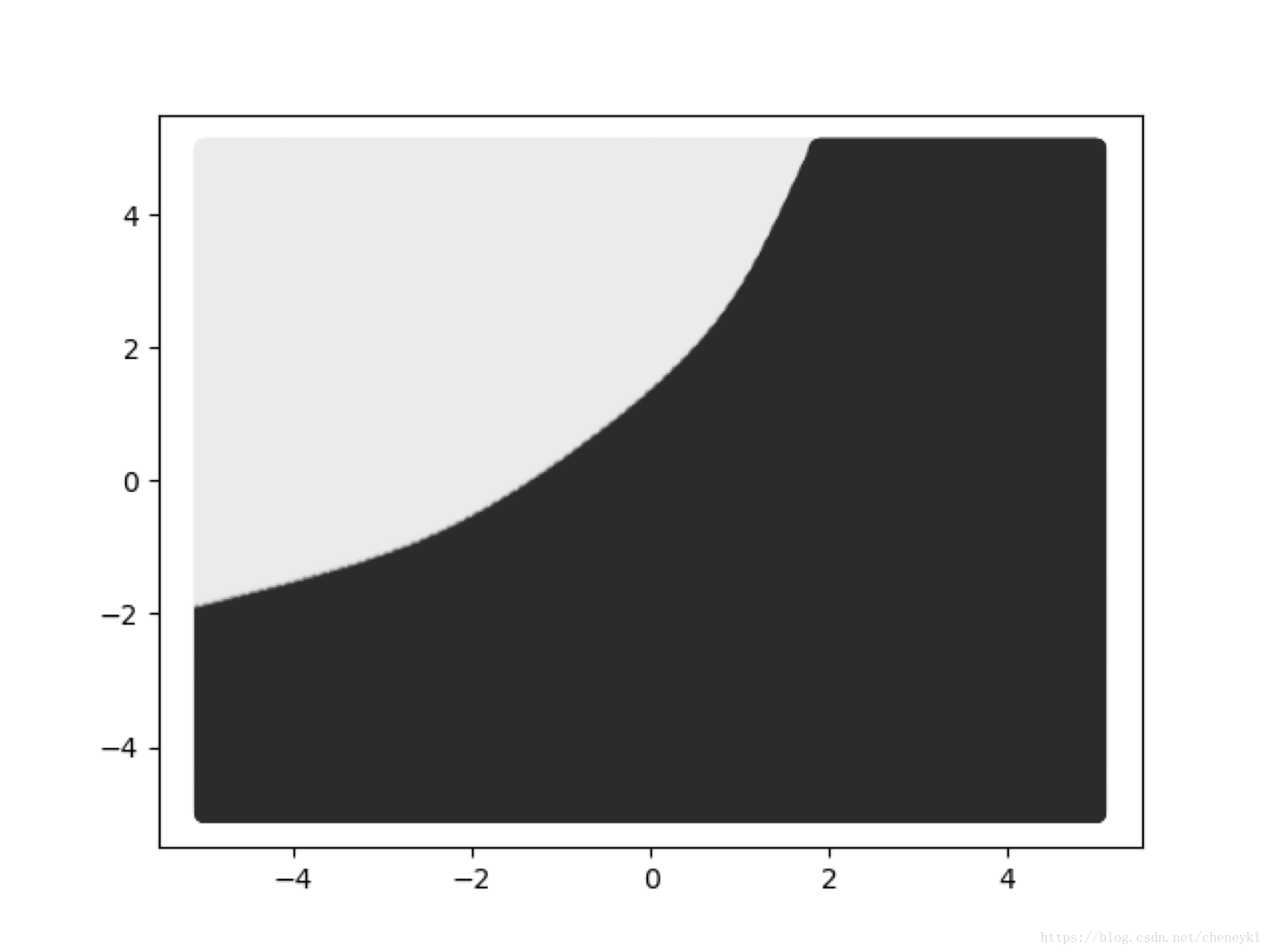

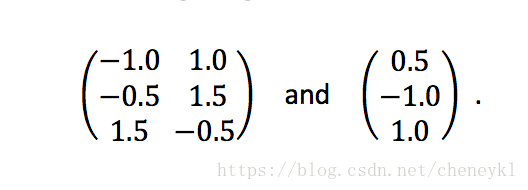
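For one input $(x_1, x_2)$, the 2-2-1 network computes $y_j = f(w_{j0} + w_{j1}x_1 + w_{j2}x_2)$ for $j = 1, 2$. It then computes $z = f(w_{10} + w_{11}y_1 + w_{12}y_2)$, and the category is the sign of $z$. Because $f$ is odd and strictly increasing, that sign equals the sign of the output unit's net activation. Below is a minimal vectorized sketch. The function name `classify` and the weight layout (one row per hidden unit, bias weight first) are assumptions. The example weights are the part (a) values used in the Problem 1 code, transposed into this layout.

```python
import numpy as np

def f(net, a=1.716, b=2.0 / 3.0):
    return a * np.tanh(b * net)

def classify(x1, x2, w_ji, w_kj):
    """Return +1 or -1 (sign of the network output) for scalar or array x1, x2.

    w_ji: shape (2, 3), row j = [w_j0, w_j1, w_j2] (bias weight first)
    w_kj: shape (3,),  [w_k0, w_k1, w_k2]        (bias weight first)
    """
    x1, x2 = np.asarray(x1, dtype=float), np.asarray(x2, dtype=float)
    y1 = f(w_ji[0, 0] + w_ji[0, 1] * x1 + w_ji[0, 2] * x2)   # hidden unit 1
    y2 = f(w_ji[1, 0] + w_ji[1, 1] * x1 + w_ji[1, 2] * x2)   # hidden unit 2
    z = f(w_kj[0] + w_kj[1] * y1 + w_kj[2] * y2)             # output unit
    return np.where(z >= 0, 1, -1)

# Part (a) weights (hypothetical layout: rows = hidden units, columns = [bias, x1, x2])
w_ji = np.array([[0.5, 0.3, -0.1],
                 [-0.5, -0.4, 1.0]])
w_kj = np.array([1.0, -2.0, 0.5])

# Label the whole square on a 0.01 grid
g = np.arange(-5.0, 5.0, 0.01)
X1, X2 = np.meshgrid(g, g)
labels = classify(X1, X2, w_ji, w_kj)
print(classify(0.0, 0.0, w_ji, w_kj), classify(-5.0, 5.0, w_ji, w_kj))
```

Passing `X1, X2, labels` to `plt.pcolormesh` with a two-colour map shades the regions without drawing a million scatter points.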

(b) Repeat part (a) with the following weight matrices:

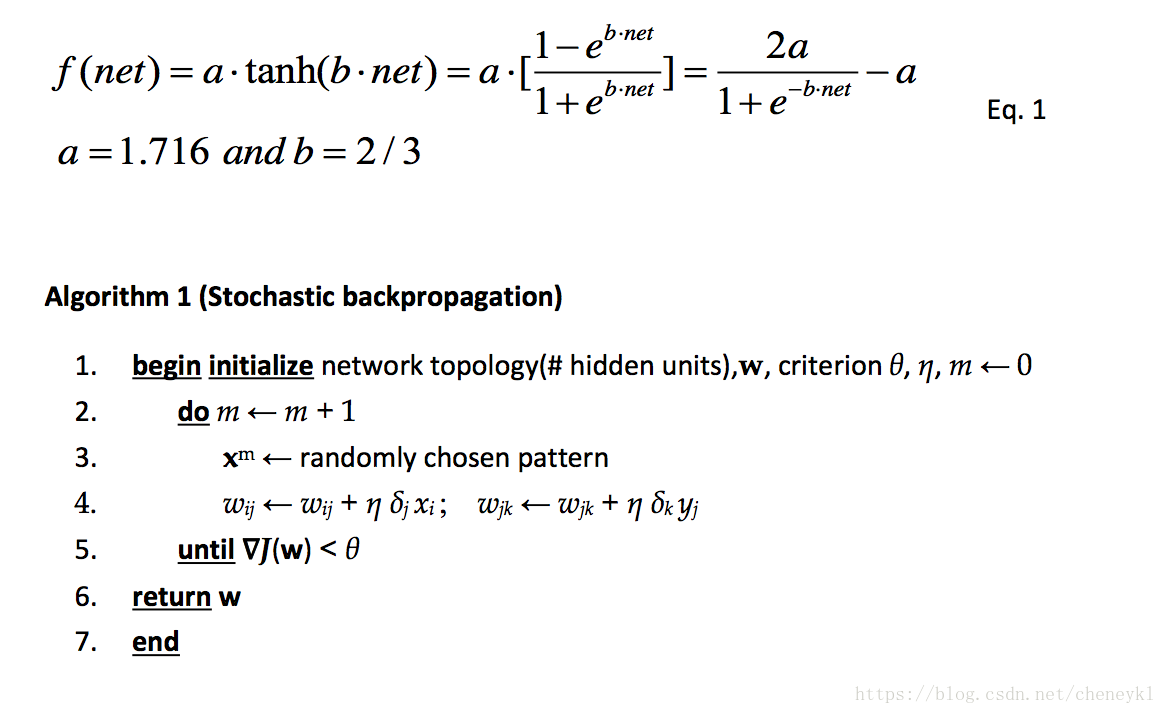

2. Create a 3-1-1 sigmoidal network with bias to be trained to classify patterns from $\omega_1$ and $\omega_2$ in the table above. Use stochastic backpropagation (Algorithm 1) with learning rate $\eta = 0.1$ and the sigmoid described in Eq. 1.

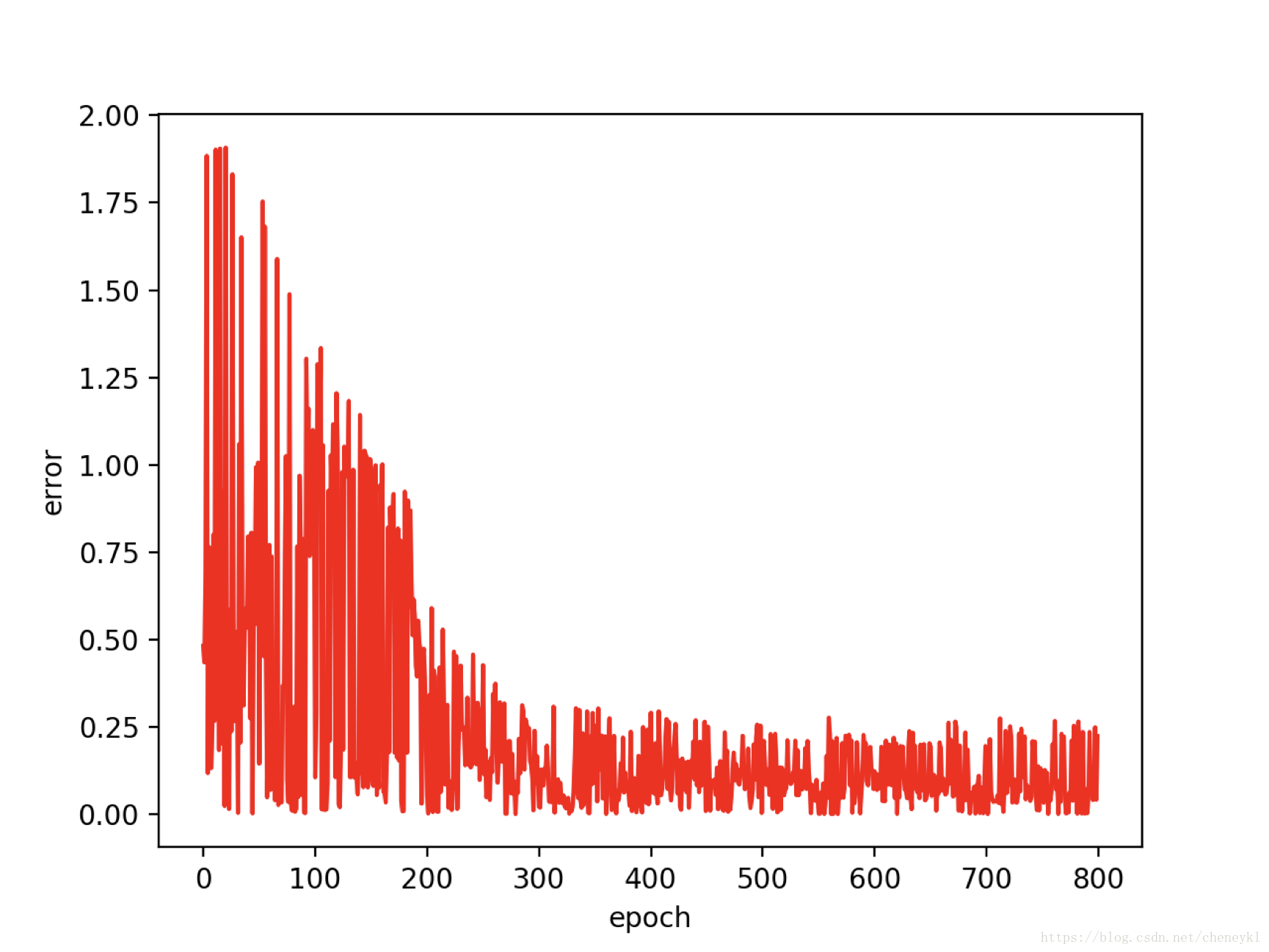

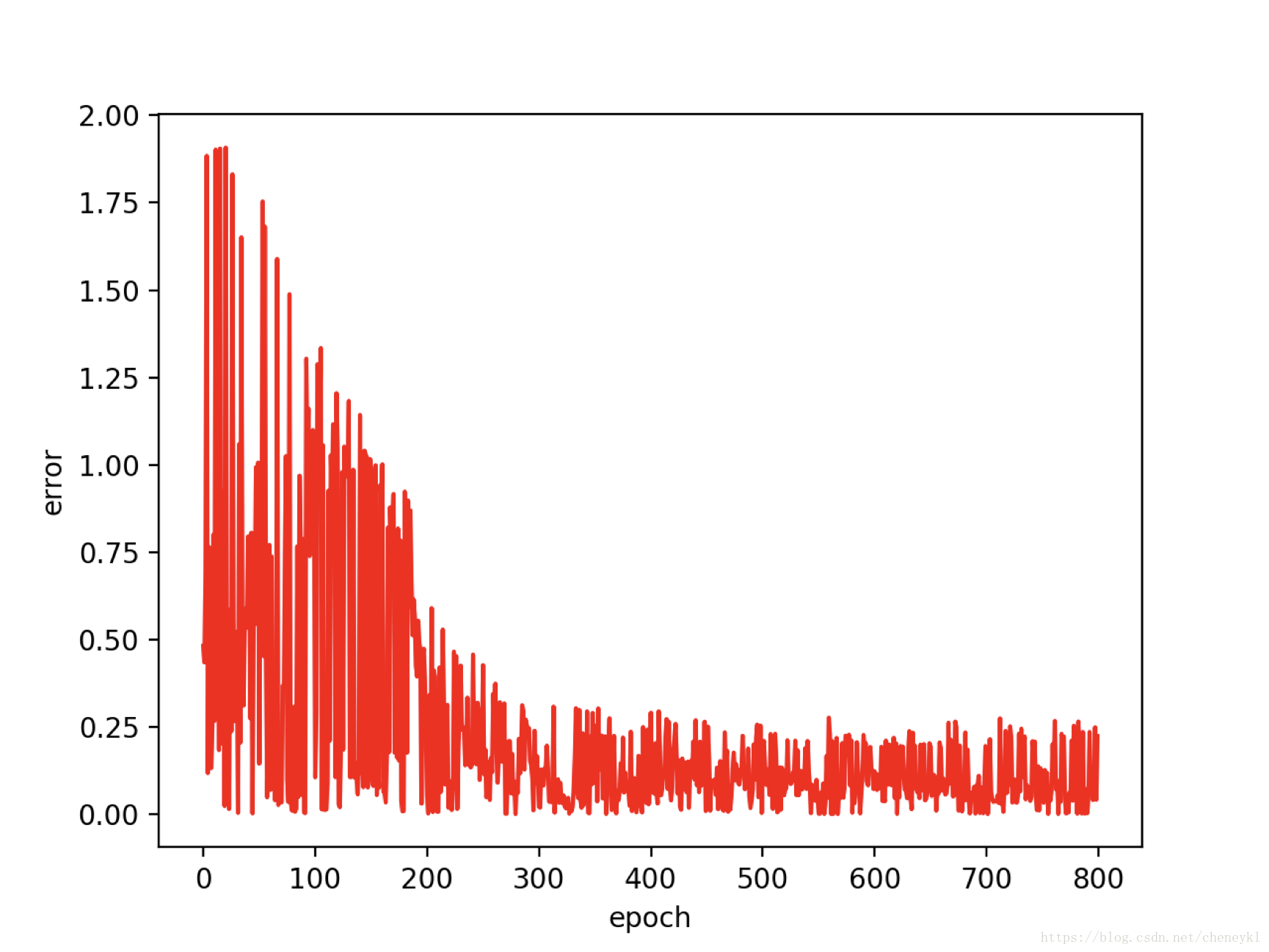
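Stochastic backpropagation updates the weights after each randomly chosen pattern. This sketch assumes Algorithm 1 is ordinary stochastic backpropagation on the squared error $J = \tfrac12 (t - z)^2$; the algorithm itself is only shown as an image and is not reproduced here. For the 3-1-1 network, the sensitivities are $\delta_k = (t - z) f'(\mathrm{net}_k)$ and $\delta_j = f'(\mathrm{net}_j)\, w_{kj}\, \delta_k$. The updates are $\Delta w_{kj} = \eta\, \delta_k y_j$ and $\Delta w_{ji} = \eta\, \delta_j x_i$. The helper names (`forward`, `backprop_step`, `loss`, `numeric_grad`) are hypothetical. The sketch performs one update and compares it with a finite-difference gradient of $J$. This check catches mistakes such as evaluating $f'$ at a unit's output instead of its net activation.

```python
import numpy as np

A, B = 1.716, 2.0 / 3.0

def f(net):
    return A * np.tanh(B * net)

def f_prime(net):
    return A * B * (1.0 - np.tanh(B * net) ** 2)

def forward(x, w_hid, w_out):
    """x = [1, x1, x2, x3] (bias input first); w_hid = [w_j0..w_j3]; w_out = [w_k0, w_kj]."""
    net_j = w_hid @ x
    y = np.array([1.0, f(net_j)])      # hidden output with bias unit y0 = 1
    net_k = w_out @ y
    return net_j, y, net_k, f(net_k)

def backprop_step(x, t, w_hid, w_out, eta):
    """One stochastic backpropagation update; returns new (w_hid, w_out)."""
    net_j, y, net_k, z = forward(x, w_hid, w_out)
    delta_k = (t - z) * f_prime(net_k)              # output sensitivity
    delta_j = f_prime(net_j) * w_out[1] * delta_k   # hidden sensitivity (uses the old w_kj)
    return w_hid + eta * delta_j * x, w_out + eta * delta_k * y

def loss(x, t, w_hid, w_out):
    return 0.5 * (t - forward(x, w_hid, w_out)[3]) ** 2

def numeric_grad(fun, w, h=1e-6):
    """Central-difference gradient of the scalar function fun at w."""
    g = np.zeros_like(w)
    for i in range(w.size):
        e = np.zeros_like(w)
        e[i] = h
        g[i] = (fun(w + e) - fun(w - e)) / (2 * h)
    return g

rng = np.random.default_rng(0)
x = np.concatenate(([1.0], rng.uniform(-1, 1, 3)))   # made-up pattern, only for the check
t = 1.0
w_hid, w_out = rng.uniform(-1, 1, 4), rng.uniform(-1, 1, 2)

# With eta = 1 each weight change should equal minus the gradient of J
new_hid, new_out = backprop_step(x, t, w_hid, w_out, eta=1.0)
grad_hid = numeric_grad(lambda w: loss(x, t, w, w_out), w_hid)
grad_out = numeric_grad(lambda w: loss(x, t, w_hid, w), w_out)
print(np.allclose(new_hid - w_hid, -grad_hid, atol=1e-6),
      np.allclose(new_out - w_out, -grad_out, atol=1e-6))
```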

(a) Initialize all weights randomly in the range $-1 \le w \le +1$. Plot a learning curve: the training error as a function of epoch.

(b) Now repeat (a) but with weights initialized to be the same throughout each level. In particular, let all input-to-hidden weights be initialized with $w_{ji} = 0.5$ and all hidden-to-output weights with $w_{kj} = -0.5$.

(c) Explain the source of the differences between your learning curves.
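An epoch is one pass through the training set. The learning curve is therefore usually the total training error $J = \tfrac12 \sum_m (t_m - z_m)^2$, evaluated over all patterns after each epoch. It is not the error of the single pattern just presented. The following sketch trains the 3-1-1 network under either initialization. Several details are assumptions: the function name `train`, the default epoch count, the seed handling, and sampling patterns with replacement. Separate random generators handle the initial weights and the pattern order. As a result, parts (a) and (b) see the same sequence of patterns, so differences between the two curves come from the initialization alone.

```python
import numpy as np

A, B = 1.716, 2.0 / 3.0

def f(net):
    return A * np.tanh(B * net)

def f_prime(net):
    return A * B * (1.0 - np.tanh(B * net) ** 2)

def train(X, T, init="a", eta=0.1, n_epochs=100, seed=0):
    """Stochastic backprop for a d-1-1 net with bias; returns the training error after each epoch.

    init="a": all weights uniform in [-1, 1]; init="b": all input-to-hidden weights 0.5 and
    all hidden-to-output weights -0.5 (bias weights included in both levels).
    """
    X = np.asarray(X, dtype=float)
    T = np.asarray(T, dtype=float).ravel()
    Xb = np.column_stack((np.ones(len(X)), X))        # prepend bias input x0 = 1
    rng_w = np.random.default_rng(seed)               # initial weights
    rng_order = np.random.default_rng(seed + 1)       # pattern order, shared by (a) and (b)
    if init == "a":
        w_hid, w_out = rng_w.uniform(-1, 1, Xb.shape[1]), rng_w.uniform(-1, 1, 2)
    else:
        w_hid, w_out = np.full(Xb.shape[1], 0.5), np.full(2, -0.5)
    errors = []
    for _ in range(n_epochs):
        for m in rng_order.integers(0, len(Xb), size=len(Xb)):   # n random patterns per epoch
            x, t = Xb[m], T[m]
            net_j = w_hid @ x
            y = np.array([1.0, f(net_j)])
            net_k = w_out @ y
            delta_k = (t - f(net_k)) * f_prime(net_k)
            delta_j = f_prime(net_j) * w_out[1] * delta_k
            w_out = w_out + eta * delta_k * y
            w_hid = w_hid + eta * delta_j * x
        z = f(w_out[0] + w_out[1] * f(Xb @ w_hid))   # outputs for every training pattern
        errors.append(0.5 * np.sum((T - z) ** 2))
    return errors
```

Suppose `X` and `Y` are loaded as in the Problem 2 code. Plotting `train(X, Y, "a")` and `train(X, Y, "b")` on the same axes then gives the two learning curves side by side.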

Problem 1(a):

Code:

```python
# coding=utf-8
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.colors import ListedColormap

# Activation function y = a*tanh(b*net) with a = 1.716, b = 2/3
# (identical to 1.716 * (2 / (1 + exp(-4/3 * x)) - 1))
def sigmoid(x):
    return 1.716 * np.tanh(2.0 / 3.0 * x)

# Input grid over -5 <= x1, x2 < 5 with step 0.01; each row is [1, x1, x2], where 1 is the bias input
grid = np.arange(-5, 5, 0.01)
gx1, gx2 = np.meshgrid(grid, grid)
x = np.column_stack((np.ones(gx1.size), gx1.ravel(), gx2.ravel()))

# Input-to-hidden weights: rows = [bias, x1, x2], columns = hidden units 1 and 2
s0 = np.array([[0.5, -0.5], [0.3, -0.4], [-0.1, 1.0]])      # part (a)
# s0 = np.array([[-1.0, 1.0], [-0.5, 1.5], [1.5, -0.5]])    # part (b)

net1 = x.dot(s0)     # net activations of the hidden units
l1 = sigmoid(net1)   # hidden-layer outputs

# Prepend the hidden-layer bias unit (constant 1)
l1 = np.column_stack((np.ones(len(l1)), l1))

# Hidden-to-output weights: rows = [bias, hidden 1, hidden 2]
s1 = np.array([[1.0], [-2.0], [0.5]])       # part (a)
# s1 = np.array([[0.5], [-1.0], [1.0]])     # part (b)

net2 = l1.dot(s1)    # net activation of the output unit
l2 = sigmoid(net2)   # network output

# Classify by the sign of the output: negative -> 0, non-negative -> 1
y = (l2[:, 0] >= 0).astype(int)

# Shade the two categories light and dark
cmap_bold = ListedColormap(['#EBEBEB', '#2B2B2B'])
plt.figure()
plt.scatter(x[:, 1], x[:, 2], c=y, cmap=cmap_bold, s=1)
plt.xlabel('x1')
plt.ylabel('x2')
plt.show()
```

Result after changing the weights:

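Problem 2 code:

```python
# coding=utf-8
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Activation function y = a*tanh(b*net), a = 1.716, b = 2/3
def sigmoid(x):
    return 1.716 * np.tanh(x * 2.0 / 3.0)

# Derivative of the activation with respect to net (evaluate it at net, not at the unit's output)
def d_sigmoid(x):
    return 1.716 * (2.0 / 3.0) * (1 - np.square(np.tanh(x * 2.0 / 3.0)))

# Read the data: X holds the three features of each ω1/ω2 pattern, Y the targets.
# Assumes the CSV files have no header row; with the default header=0, pandas would
# silently use the first sample as column names and drop it from the data.
X = pd.read_csv("/Users/Cheney/Downloads/X.csv", header=None).values
Y = pd.read_csv("/Users/Cheney/Downloads/Y.csv", header=None).values.ravel()
n = len(X)

# (a) Random initialization in [-1, 1], bias weights included
s0 = 1 - 2 * np.random.random(3)   # input-to-hidden weights
b1 = 1 - 2 * np.random.random()    # hidden bias
s1 = 1 - 2 * np.random.random()    # hidden-to-output weight
b2 = 1 - 2 * np.random.random()    # output bias

# (b) All input-to-hidden weights 0.5, all hidden-to-output weights -0.5
# s0 = np.array([0.5, 0.5, 0.5]); b1 = 0.5
# s1 = -0.5; b2 = -0.5

η = 0.1          # learning rate, as specified in the problem
n_epochs = 100
error = []       # training error after each epoch

for epoch in range(n_epochs):
    for _ in range(n):
        j = np.random.randint(0, n)   # random pattern; the upper bound is exclusive
        l0, y = X[j], Y[j]            # input pattern and its target

        # Forward pass
        net1 = b1 + s0.dot(l0)        # input -> hidden
        l1 = sigmoid(net1)
        net2 = b2 + s1 * l1           # hidden -> output
        l2 = sigmoid(net2)

        # Backward pass: sensitivities of the output and hidden units
        g2 = (y - l2) * d_sigmoid(net2)
        g1 = g2 * s1 * d_sigmoid(net1)   # uses s1 before it is updated

        # Update hidden-to-output weights, then input-to-hidden weights
        s1 += η * g2 * l1
        b2 += η * g2
        s0 += η * g1 * l0
        b1 += η * g1

    # Training error over the whole training set after this epoch
    out = sigmoid(b2 + s1 * sigmoid(b1 + X.dot(s0)))
    error.append(0.5 * np.sum(np.square(Y - out)))

# Plot the learning curve
plt.plot(range(1, n_epochs + 1), error, 'r-')
plt.xlabel('epoch')
plt.ylabel('training error')
plt.show()
```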

Result after changing the initial weights:

