Human Pose Matching on mobile — a fun application using Human Pose Estimation (Part 1 Intro)

2017-12-08 14:06


Human pose estimation, the computational detection of human body posture, is on the rise. Technologies capable of detecting human body joints are becoming effective and accessible. And they look astonishing, have a look:


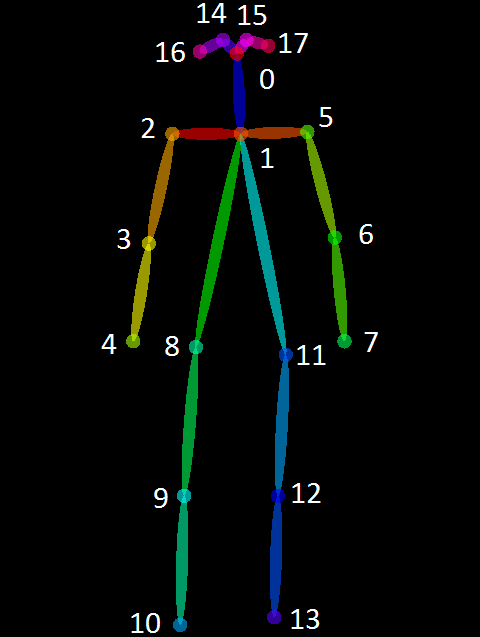

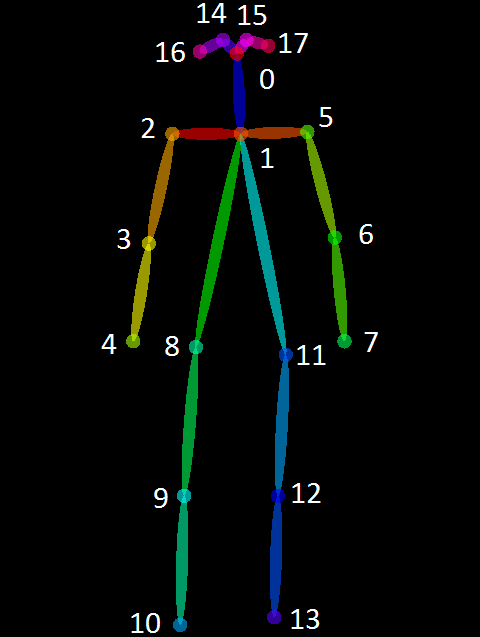


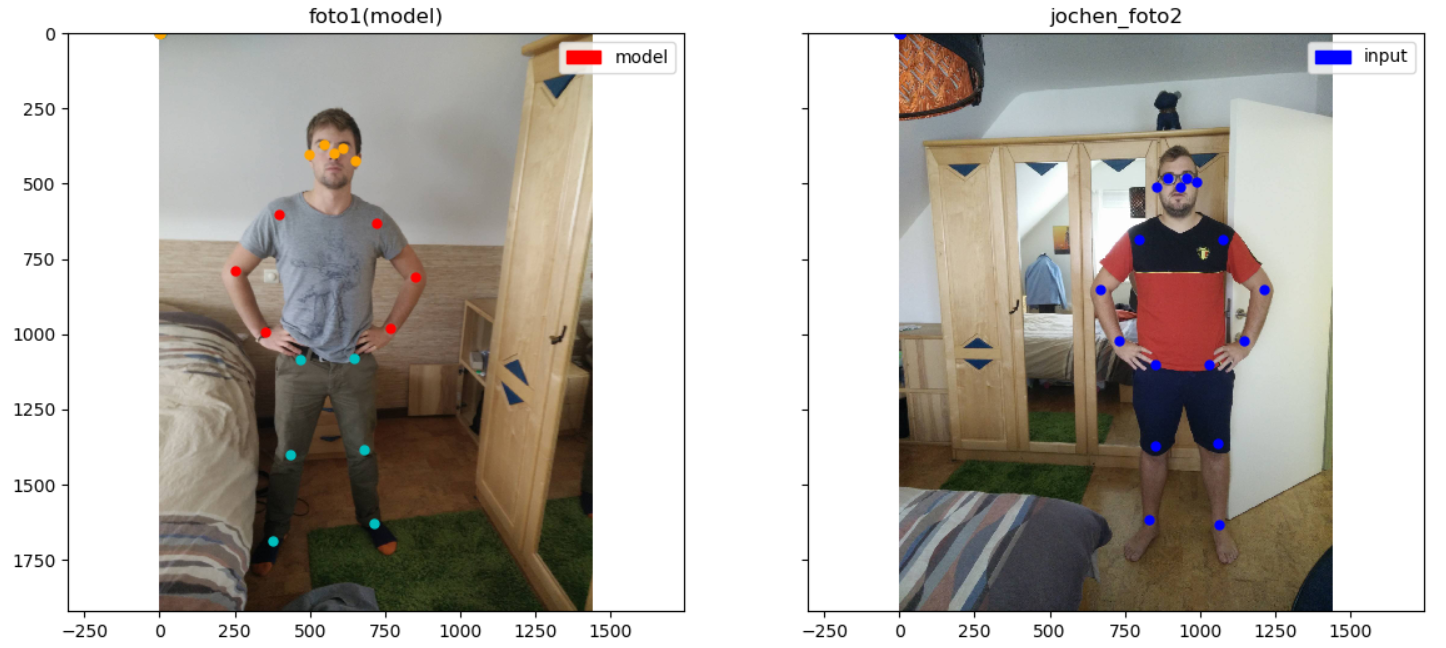

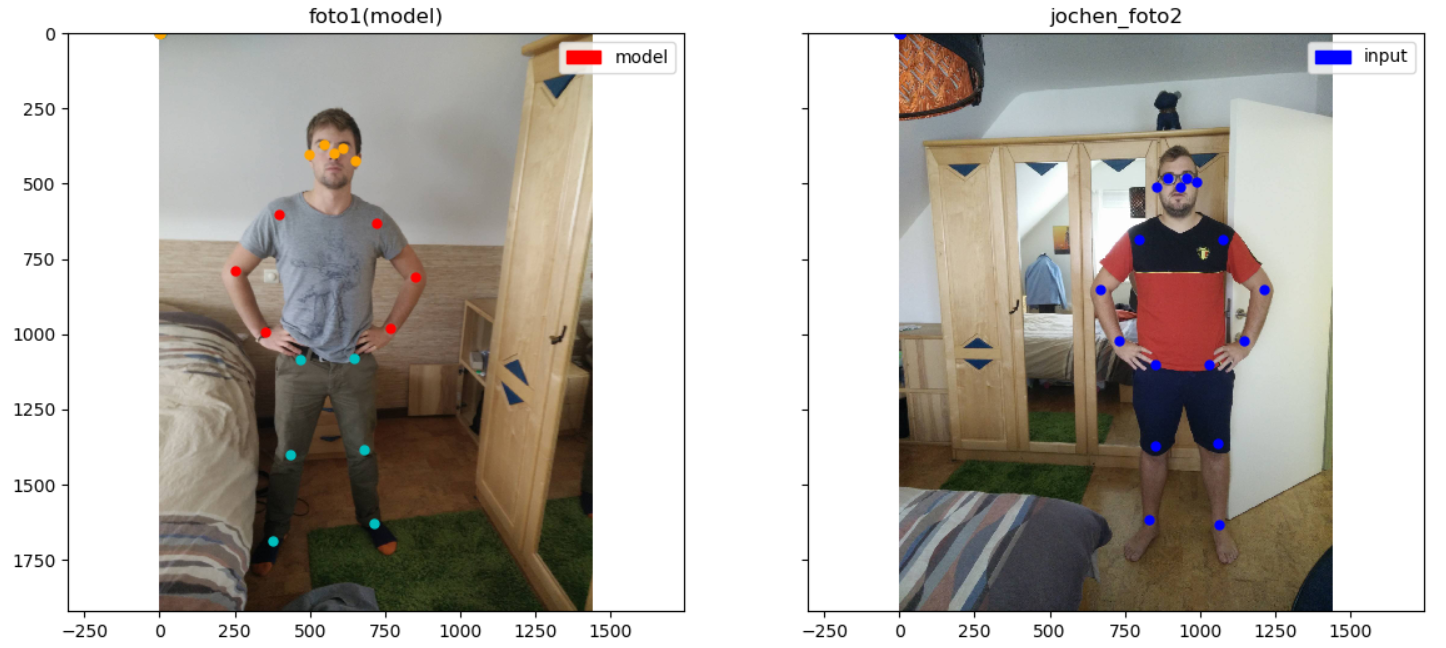



This video was made using OpenPose, and it’s impressive.

OpenPose represents the first real-time system to jointly detect human body, hand and facial keypoints (in total 130 keypoints) on single images. In addition, the system computational performance on body keypoint estimation is invariant to the number of detected people in the image.

Result using OpenPose

The OpenPose library is a wonderful example of *buzzword incoming* Deep Learning. It is built upon a neural network and has been developed by Carnegie Mellon University. OpenPose uses an interesting pipeline to achieve its robust performance. If you want to dig into this topic, the paper “Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields” gives an overview of the inner workings of the system.

If you are looking for alternative algorithms, take a look at DeeperCut.

Here is an implementation in Python.

Result using DeeperCut

Mobile Development — The Opportunities

So, the magic of Deep Learning gives us 18 human body joints. WHAT CAN WE DO WITH IT? A lot actually. Samin does a good job listing the possibilities in his blog.

Pose Output Format OpenPose
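To make the 18-joint output a bit more concrete, here is a minimal sketch of how such a pose could be held in memory. It assumes the estimator hands back a flat list of (x, y, confidence) triples in an 18-keypoint, COCO-style order; the joint names and the helper `to_pose_array` are my own labels for illustration, not part of any library.

```python
import numpy as np

# Assumed joint order for an 18-keypoint, COCO-style body model.
# Check the documentation of your estimator before relying on it.
JOINT_NAMES = [
    "nose", "neck",
    "r_shoulder", "r_elbow", "r_wrist",
    "l_shoulder", "l_elbow", "l_wrist",
    "r_hip", "r_knee", "r_ankle",
    "l_hip", "l_knee", "l_ankle",
    "r_eye", "l_eye", "r_ear", "l_ear",
]


def to_pose_array(flat_keypoints):
    """Split a flat [x0, y0, c0, x1, y1, c1, ...] list into coordinates and confidences.

    Returns an (18, 2) array of 2D coordinates and an (18,) array of confidences.
    """
    kp = np.asarray(flat_keypoints, dtype=float).reshape(-1, 3)
    if kp.shape[0] != len(JOINT_NAMES):
        raise ValueError(f"expected {len(JOINT_NAMES)} joints, got {kp.shape[0]}")
    return kp[:, :2], kp[:, 2]
```

The confidences are kept separately because a joint the estimator could not find is usually better ignored than matched.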

The fact that these pose estimators work with just a normal camera opens doors for mobile development. Imagine using this technology with your shitty smartphone camera. OK, I admit: I want to develop for smartphones, so (ideally?) everything runs embedded on the smartphone itself. This means the implementation must be capable of running on a CPU or a smartphone GPU. BUT, as these estimator algorithms currently run on some pretty decent GPUs, this is surely a point of discussion.

Most new smartphones these days have a GPU on board, but are they capable of running these frameworks? To put this in perspective for my case: the (multi-person) keypoint detection doesn’t need to be real-time, as there is only one picture (no real-time video), and a delay of 1 to 1.5 seconds is acceptable.

However, running embedded is not a must; it’s also possible to outsource the estimation/matching algorithm to a central server with a decent GPU. This choice, embedded or outsourced, involves a lot of parameters (performance/computation power, server cost, accuracy, mobile battery usage, server communication delay, multi-platform support, scalability, and, less importantly, mobile data usage, …).

Similarity of different poses — The Application

I’m working on a project where a person must mimic a predefined pose (call it the model). A picture is taken of the person mimicking this predefined pose. Then, with the help of OpenPose, the human pose of the person is extracted from this image and compared with the predefined pose. Finally, a scoring mechanism decides how well the two poses match, or whether they match at all.

This project raises two big problems though:

1. Porting OpenPose to Mobile Platform

I didn’t tell you yet, but OpenPose is built with Caffe, a Deep Learning framework. Caffe is OK, but it doesn’t support Android, let alone iOS. There are plenty of other Deep Learning libraries, yet the mainstream library is TensorFlow, a Machine Learning framework developed by Google. And surprise surprise, TensorFlow supports Android (of course, it’s made by Google) and iOS! Great!

So, TensorFlow it is then? I don’t know for sure yet. Still considering/researching the options … Hope to get back on this in the near future.

The community working on this topic is quite small, but it is growing. In fact, Ale Solano is currently working on this: he is trying to port the OpenPose Caffe library to TensorFlow, and he is also blogging about it! He’s making good progress.

2. How to determine similarity between 2 sets of matching 2D points

Let’s say the previous step succeeded, and we have the 2D joint points of two poses:

left: model || right: input pose

So we have 2 sets of matching points (each consisting of 18 2D coordinates):

1. The model X (which needs to be mimicked), on the left-hand side

2. The input Y (which needs to be checked for how well it matches), on the right-hand side

Essentially this comes down to checking if the two poses (described by their 18 2D coordinates) are similar.

“Two geometrical objects are called similar if they both have the same shape, or one has the same shape as the mirror image of the other. More precisely, one can be obtained from the other by uniformly scaling (enlarging or reducing), possibly with additional translation, rotation and reflection. This means that either object can be rescaled, repositioned, and reflected, so as to coincide precisely with the other object.” — Wikipedia

This means two poses have the same shape if you can transform one pose into the other using only a combination of translation, uniform scaling and rotation (and possibly reflection). From linear algebra, we know such a combination can be written as an affine transformation (the composition of a linear map and a translation). Strictly speaking, a general affine transformation also allows shear and non-uniform scaling, so it is a bit more permissive than pure similarity.
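Written out, the mapping would look something like the sketch below. The notation is my own: $\mathbf{x}_i$ is joint $i$ of the model X, $\mathbf{y}_i$ is the same joint of the input Y, and the input is assumed to be the pose that gets mapped onto the model.

```latex
\mathbf{x}_i \;=\; A\,\mathbf{y}_i + \mathbf{t}
\;=\;
\begin{bmatrix}
a_{11} & a_{12} & t_1 \\
a_{21} & a_{22} & t_2
\end{bmatrix}
\begin{bmatrix}
y_{i,1} \\ y_{i,2} \\ 1
\end{bmatrix},
\qquad i = 1, \dots, 18

% For a pure similarity (no reflection), the linear part is a scaled rotation:
A \;=\; s
\begin{bmatrix}
\cos\theta & -\sin\theta \\
\sin\theta & \cos\theta
\end{bmatrix},
\qquad s > 0
```

The six entries of the 2x3 matrix are the unknowns. Each joint pair contributes two equations, so three non-collinear pairs would already determine the matrix, and 18 pairs give 36 equations for 6 unknowns.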

This transformation is described by its transformation matrix [2x3]. So this is the first step: find the transformation matrix. You want this matrix? Solve the linear system, et voilà!

You wish. There is no way two different poses are exactly related by an affine transformation (i.e. are similar); what’s the chance? One way or another, some noise will be introduced; these are people in the wild, you know. Hence, we want something that gives us an approximation. Luckily, there is the least-squares method, which will do the job for us. Math to the rescue again!
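Here is a minimal sketch of that least-squares step using NumPy's `np.linalg.lstsq`. The function name `fit_affine` and the choice to map the input pose onto the model are assumptions of mine, not a fixed recipe.

```python
import numpy as np


def fit_affine(input_pts, model_pts):
    """Return the 2x3 affine matrix that best maps input_pts onto model_pts.

    Both arguments are (n, 2) arrays of matching joints, with n >= 3.
    "Best" means least squares over the joint coordinates.
    """
    Y = np.asarray(input_pts, dtype=float)
    X = np.asarray(model_pts, dtype=float)
    # Homogeneous coordinates: append a column of ones for the translation.
    Y_h = np.hstack([Y, np.ones((len(Y), 1))])      # shape (n, 3)
    # Solve Y_h @ W ~= X for W (shape (3, 2)) in the least-squares sense.
    W, *_ = np.linalg.lstsq(Y_h, X, rcond=None)
    return W.T                                       # shape (2, 3)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pose = rng.uniform(0, 100, size=(18, 2))
    # A made-up model: the same pose, scaled and shifted, plus a little noise.
    model = 2.0 * pose + np.array([5.0, -3.0]) + rng.normal(0, 0.1, size=(18, 2))
    print(fit_affine(pose, model))
```

With noise-free data the fit recovers the transformation exactly (up to floating-point error); with real poses it returns the closest affine fit.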

Assuming we have the transformation matrix, the next step is to transform the second pose onto the model. Now we can compare the image of the second pose with the model. If all corresponding points are close to each other, we can conclude the two poses match. As soon as one distance exceeds a certain threshold, they’re different.
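Put together, the fit-transform-compare idea could be sketched like this. The name `poses_match` and the threshold value are hypothetical; a sensible threshold depends on the image scale and has to be found by experiment.

```python
import numpy as np


def poses_match(model_pts, input_pts, max_dist):
    """Map the input pose onto the model and check every joint distance.

    Returns (matched, distances): matched is True only if no joint ends up
    further than max_dist from its counterpart in the model.
    """
    X = np.asarray(model_pts, dtype=float)
    Y = np.asarray(input_pts, dtype=float)
    Y_h = np.hstack([Y, np.ones((len(Y), 1))])
    # Least-squares affine fit of the input onto the model.
    W, *_ = np.linalg.lstsq(Y_h, X, rcond=None)
    mapped = Y_h @ W                                  # image of the input pose
    distances = np.linalg.norm(mapped - X, axis=1)    # Euclidean, per joint
    return bool(np.all(distances <= max_dist)), distances
```

Note that the fit itself absorbs part of an outlier, so a single badly placed joint shows up as a somewhat smaller distance than its true displacement.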

I’ve been quite vague in the last two steps. I know.

That’s because I’m not sure yet about the ‘how’ precisely. I can list you all possible options I figured out, but that’s not useful I guess. I need to make some choices and try to find the best combination.

There are many ways to determine whether the image of the second pose matches the model (first pose). One option is, as suggested above, to cap the maximum (Euclidean) distance between two matching points at a chosen threshold. But as there are many other distance metrics, there are also other ways to compute this transformation error.
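A few candidate error measures side by side, as a rough sketch. The selection and the normalisation by the model's bounding-box diagonal are my own picks for illustration; `pose_errors` is a hypothetical helper.

```python
import numpy as np


def pose_errors(model_pts, mapped_pts):
    """Compute several error measures between the model and the mapped input pose."""
    X = np.asarray(model_pts, dtype=float)
    Z = np.asarray(mapped_pts, dtype=float)
    d = np.linalg.norm(X - Z, axis=1)                 # per-joint Euclidean distance
    # Bounding-box diagonal of the model, used to make the error scale-free.
    diagonal = np.linalg.norm(X.max(axis=0) - X.min(axis=0))
    return {
        "max": float(d.max()),                        # worst joint
        "mean": float(d.mean()),                      # average joint
        "rms": float(np.sqrt(np.mean(d ** 2))),       # penalises large misses more
        "max_rel": float(d.max() / diagonal),         # worst joint relative to pose size
    }
```

The maximum is strict (one bad joint fails the pose), while the mean and RMS forgive a single miss; a relative measure avoids retuning the threshold for every image resolution.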

The same applies to finding the transformation matrix. You can split the problem into multiple smaller problems (e.g. face, torso and legs), keep the body as a whole, or do something else. What’s the way to go?
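The split variant could look roughly like this: one least-squares fit per body part instead of one for the whole body. The index groups assume the 18-keypoint COCO-style ordering and are my own grouping, so treat them as placeholders.

```python
import numpy as np

# Assumed index groups for an 18-keypoint, COCO-style ordering
# (0 nose, 1 neck, 2-4 right arm, 5-7 left arm, 8-10 right leg,
#  11-13 left leg, 14-17 eyes and ears). Adjust to your estimator.
BODY_PARTS = {
    "face": [0, 14, 15, 16, 17],
    "torso": [1, 2, 3, 4, 5, 6, 7],
    "legs": [8, 9, 10, 11, 12, 13],
}


def max_residual(model_pts, input_pts):
    """Fit input -> model by least squares and return the worst joint distance."""
    Y_h = np.hstack([input_pts, np.ones((len(input_pts), 1))])
    W, *_ = np.linalg.lstsq(Y_h, model_pts, rcond=None)
    return float(np.linalg.norm(Y_h @ W - model_pts, axis=1).max())


def per_part_errors(model_pts, input_pts, parts=BODY_PARTS):
    """Return the worst joint distance for each body part, fitted separately."""
    X = np.asarray(model_pts, dtype=float)
    Y = np.asarray(input_pts, dtype=float)
    return {name: max_residual(X[idx], Y[idx]) for name, idx in parts.items()}
```

One trade-off to keep in mind: separate fits ignore how the parts relate to each other, so a pose with a perfect torso and perfect legs at the wrong angle to each other can still pass.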

As always, some approaches are more effective than others.

In the weeks to come, I will try to set up a test case and test all these different parameters.

https://becominghuman.ai/human-pose-matching-on-mobile-a-fun-application-using-human-pose-estimation-part-1-intro-93c5cbe3a096
