Building a Highly Available Load-Balancing Cluster with Keepalived + LVS-DR

2016-10-18 18:06


Host environment: Red Hat 6.5, 64-bit

Lab environment:

Real server 1: IP 172.25.25.113, hostname server3.example.com

Real server 2: IP 172.25.25.114, hostname server4.example.com

Director 2: IP 172.25.25.112, hostname server2.example.com

Director 1: IP 172.25.25.111, hostname server1.example.com

Firewall: disabled

Virtual IP (VIP): 172.25.25.200/24

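An earlier post showed how to add DR to heartbeat in a high-availability (HA) cluster to avoid a single point of failure. This post covers another way to avoid a single point of failure: adding DR to keepalived. Because keepalived health-checks the back-end servers itself, DR can be added directly once keepalived is installed. The DR configuration on the real servers is not repeated here; see the earlier post if you are interested. This post starts from the keepalived installation. The keepalived source tarball can be downloaded from the official site, keepalived.org.

1. Installing keepalived from source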

[root@server1 mnt]# ls

keepalived-1.2.24.tar.gz

[root@server1 mnt]# tar zxf keepalived-1.2.24.tar.gz # extract

[root@server1 mnt]# ls

keepalived-1.2.24

keepalived-1.2.24.tar.gz

[root@server1 mnt]# cd keepalived-1.2.24

[root@server1 keepalived-1.2.24]# ls

aclocal.m4 ChangeLog CONTRIBUTORS genhash keepalived.spec.in missing

ar-lib compile COPYING INSTALL lib README

AUTHOR configure depcomp install-sh Makefile.am TODO

bin_install configure.ac doc keepalived Makefile.in

[root@server1 keepalived-1.2.24]# ./configure --prefix=/usr/local/keepalived

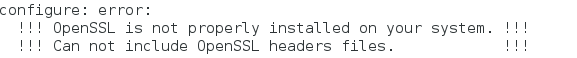

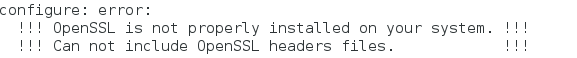

If configure fails with an error (see the screenshots), install openssl-devel and run configure again:

[root@server1 keepalived-1.2.24]# yum install openssl-devel.x86_64 -y

[root@server1 keepalived-1.2.24]# ./configure --prefix=/usr/local/keepalived

[root@server1 keepalived-1.2.24]# make

[root@server1 keepalived-1.2.24]# make install

[root@server1 keepalived-1.2.24]# cd /usr/local/

[root@server1 local]# ls

bin etc games include keepalived lib lib64 libexec sbin share src

[root@server1 local]# scp -r keepalived/ 172.25.25.112:/usr/local/

root@172.25.25.112's password:

# Create symbolic links (the same links are needed on server2 after the copy)

[root@server1 local]# ln -s /usr/local/keepalived/etc/keepalived/ /etc/ # link to the main configuration directory

[root@server1 local]# ln -s /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/ # link to the init script

[root@server1 local]# chmod +x /etc/init.d/keepalived # make the script executable

[root@server1 local]# ln -s /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/ # link to the sysconfig file

[root@server1 local]# ln -s /usr/local/keepalived/sbin/keepalived /sbin/ # link to the binary

[root@server1 local]# /etc/init.d/keepalived start # test: start the service

Starting keepalived: [ OK ]

[root@server1 local]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@server1 local]#

2. Adding DR to keepalived and testing (on the directors)

1. Adding DR to keepalived

[root@server1 local]# cd /etc/keepalived/

[root@server1 keepalived]# ls

keepalived.conf samples

[root@server1 keepalived]# vim keepalived.conf # edit the main configuration file

1 ! Configuration File for keepalived

2

3 global_defs {

4     notification_email {

5         root@localhost # mail recipient

6     }

7     notification_email_from keepalived@server1.example.com # mail sender

8     smtp_server 127.0.0.1 # local loopback

9     smtp_connect_timeout 30 # SMTP connection timeout

10     router_id LVS_DEVEL

11     vrrp_skip_check_adv_addr

12     vrrp_strict

13     vrrp_garp_interval 0

14     vrrp_gna_interval 0

15 }

16

17 vrrp_instance VI_1 {

18     state MASTER # director 1 (server1) is the master

19     interface eth0 # VRRP runs on interface eth0

20     virtual_router_id 25 # virtual router ID (1-255); must be the same on both directors

21     priority 100 # when keepalived starts, the priorities are compared and the node with the higher value becomes master

22     advert_int 1

23     authentication {

24         auth_type PASS

25         auth_pass 1111

26     }

27     virtual_ipaddress {

28         172.25.25.200 # virtual IP

29     }

30 }

31

32 virtual_server 172.25.25.200 80 { # virtual service

33     delay_loop 6

34     lb_algo rr

35     lb_kind DR # direct routing

36     # persistence_timeout 50 # persistence timeout (disabled here)

37     protocol TCP

38

39     real_server 172.25.25.113 80 { # real server 1

40         weight 1 # weight

41         TCP_CHECK {

42             connect_timeout 3

43             nb_get_retry 3

44             delay_before_retry 3

45         }

46     }

47     real_server 172.25.25.114 80 { # real server 2

48         weight 1

49         TCP_CHECK {

50             connect_timeout 3

51             nb_get_retry 3

52             delay_before_retry 3

53         }

54     }

55

56 }

[root@server1 keepalived]# /etc/init.d/keepalived start # start on director 1 (server1)

Starting keepalived: [ OK ]

[root@server1 keepalived]# scp keepalived.conf 172.25.25.112:/etc/keepalived/ # copy the file to director 2 (server2)

root@172.25.25.112's password:

keepalived.conf 100% 1049 1.0KB/s 00:00

[root@server2 keepalived]# vim keepalived.conf # edit the copied file on director 2; only the following lines change

7 notification_email_from keepalived@server2.example.com # mail sender: this host

18 state BACKUP # backup director

21 priority 88 # any value lower than the master's

[root@server2 local]# /etc/init.d/keepalived start # start on director 2 (server2)

Starting keepalived: [ OK ]
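The priority rule can be illustrated with a small sketch. The helper name `elect_master` and the `name:priority` argument format are made up for this example; it mimics only the "higher priority wins" comparison and ignores the rest of VRRP (timers, tie-breaking, preemption settings).

```shell
#!/bin/sh
# Hypothetical helper: print the name of the node with the highest priority.
# Arguments are name:priority pairs. This is an illustration of the
# comparison only, not how keepalived is implemented.
elect_master() {
  # Sort numerically on the second ':'-separated field, highest first,
  # then keep the name part of the first line.
  printf '%s\n' "$@" | sort -t: -k2,2nr | head -n 1 | cut -d: -f1
}

# Priorities taken from the two keepalived.conf files in this post.
elect_master server1:100 server2:88   # prints: server1
```

With the values used here, server1 (100) wins over server2 (88), which matches the behaviour seen in the tests below.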

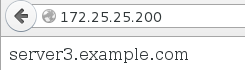

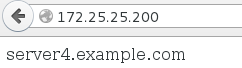

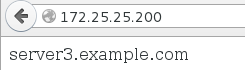

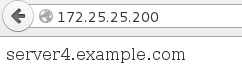

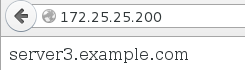

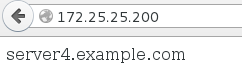

2. Testing

[root@server1 keepalived]# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

  -> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.25.25.200:http rr

  -> server3.example.com:http Route 1 0 0

  -> 172.25.25.114:http Route 1 0 0
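For comparison, the table above corresponds roughly to the `ipvsadm` commands one would type by hand. The sketch below only prints those commands; the function name `lvs_dr_commands` is made up for illustration, and the fixed weight of 1 and the `rr` scheduler are assumptions taken from the configuration in this post. keepalived creates and maintains these entries itself, so nothing here needs to be run in this setup.

```shell
#!/bin/sh
# Print (do not execute) ipvsadm commands roughly equivalent to the
# virtual_server block:
#   -A adds a virtual service, -t names a TCP service, -s rr picks round-robin,
#   -a adds a real server, -r names it, -g selects direct routing (DR),
#   -w sets the weight.
lvs_dr_commands() {
  vip=$1
  port=$2
  shift 2
  echo "ipvsadm -A -t ${vip}:${port} -s rr"
  for rs in "$@"; do
    echo "ipvsadm -a -t ${vip}:${port} -r ${rs}:${port} -g -w 1"
  done
}

# VIP and real servers from this post.
lvs_dr_commands 172.25.25.200 80 172.25.25.113 172.25.25.114
```

Unlike a hand-built table, the one managed by keepalived also follows the TCP_CHECK results, so a real server that stops answering is taken out of rotation.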

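# After a refresh, the request is served by real server 2

[root@server1 keepalived]# ip addr show # the virtual IP is on director 1 (server1)

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

    inet 127.0.0.1/8 scope host lo

    inet6 ::1/128 scope host

       valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

    link/ether 52:54:00:ec:8b:36 brd ff:ff:ff:ff:ff:ff

    inet 172.25.25.111/24 brd 172.25.25.255 scope global eth0

    inet 172.25.25.200/32 scope global eth0 # virtual IP

    inet6 fe80::5054:ff:feec:8b36/64 scope link

       valid_lft forever preferred_lft forever

When keepalived on the master (server1) is stopped, the backup (server2) no longer receives its VRRP advertisements and takes over the virtual IP, so the service moves to the backup. When keepalived on server1 is started again, it reads its configuration file, and because its priority (100) is higher than the backup's (88), it becomes master again and the service moves back to it.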
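One way to follow the failover from the command line is to check whether the VIP is currently bound on a director. The helper `has_vip` below is a hypothetical name introduced for this sketch; it simply searches `ip addr show` output for the address.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given VIP appears as an IPv4 address
# in `ip addr show` output read from standard input.
has_vip() {
  grep -Fq "inet $1/"
}

# On a director, one might poll once per second during a failover test
# (commented out because it needs the `ip` tool and the real hosts):
# while sleep 1; do
#   if ip addr show eth0 | has_vip 172.25.25.200; then
#     echo "VIP is here"
#   else
#     echo "VIP is elsewhere"
#   fi
# done

# Self-contained demonstration on a line in the style of the output above:
echo "    inet 172.25.25.200/32 scope global eth0" | has_vip 172.25.25.200 && echo "VIP is here"
```

Running the polling loop on both directors while stopping and starting keepalived on server1 should show the VIP moving to server2 and back, as the `ip addr show` listings in this section do.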

# Stop keepalived on director 1 (server1) and test

[root@server1 keepalived]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

# The service still works

# After a refresh, the request is served by real server 2

[root@server1 keepalived]# ip addr show # the virtual IP is no longer on director 1 (server1)

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

    inet 127.0.0.1/8 scope host lo

    inet6 ::1/128 scope host

       valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

    link/ether 52:54:00:ec:8b:36 brd ff:ff:ff:ff:ff:ff

    inet 172.25.25.111/24 brd 172.25.25.255 scope global eth0

    inet6 fe80::5054:ff:feec:8b36/64 scope link

       valid_lft forever preferred_lft forever

[root@server2 keepalived]# ip addr show # the virtual IP is now on director 2 (server2)

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

    inet 127.0.0.1/8 scope host lo

    inet6 ::1/128 scope host

       valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

    link/ether 52:54:00:85:1a:3b brd ff:ff:ff:ff:ff:ff

    inet 172.25.25.112/24 brd 172.25.25.255 scope global eth0

    inet 172.25.25.200/32 scope global eth0 # virtual IP

    inet6 fe80::5054:ff:fe85:1a3b/64 scope link

       valid_lft forever preferred_lft forever

# Start keepalived on director 1 (server1) again

[root@server1 keepalived]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

# The service keeps running normally

# After a refresh, the request is served by real server 2

[root@server1 keepalived]# ip addr show # the virtual IP is back on director 1 (server1)

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

    inet 127.0.0.1/8 scope host lo

    inet6 ::1/128 scope host

       valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

    link/ether 52:54:00:ec:8b:36 brd ff:ff:ff:ff:ff:ff

    inet 172.25.25.111/24 brd 172.25.25.255 scope global eth0

    inet 172.25.25.200/32 scope global eth0 # virtual IP

    inet6 fe80::5054:ff:feec:8b36/64 scope link

       valid_lft forever preferred_lft forever

[root@server2 keepalived]# ip addr show # director 2 (server2) no longer holds the virtual IP

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

    inet 127.0.0.1/8 scope host lo

    inet6 ::1/128 scope host

       valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

    link/ether 52:54:00:85:1a:3b brd ff:ff:ff:ff:ff:ff

    inet 172.25.25.112/24 brd 172.25.25.255 scope global eth0

    inet6 fe80::5054:ff:fe85:1a3b/64 scope link

       valid_lft forever preferred_lft forever

