Linux Enterprise Operations: Deploying SaltStack and Using It to Configure a keepalived High-Availability Cluster

1. Introduction to SaltStack

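SaltStack is a client/server (C/S) configuration management tool written in Python. The server side is called the Master and the client side the Minion. Under the hood it communicates over the ZeroMQ message queue using a publish/subscribe pattern. Minions authenticate by public-key exchange: the master must accept each minion's key before that minion can receive commands. ZeroMQ is billed as the world's fastest message queue, and it lets SaltStack run operations quickly across thousands of machines.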
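The following toy model makes the pub/sub idea concrete. It runs in a single process, so it is not Salt's code and uses no ZeroMQ. It assumes a simplified version of Salt's default behaviour: the master publishes each job to every connected minion, and each minion compares the glob target with its own ID before running anything. The `Master` and `Minion` classes and the single supported function are hypothetical.

```python
# Toy model of Salt-style publish/subscribe targeting (illustration only).
from fnmatch import fnmatch


class Minion:
    def __init__(self, minion_id):
        self.minion_id = minion_id

    def on_publish(self, job):
        # Every subscriber sees every job; it skips jobs whose glob
        # target does not match its own minion ID.
        if not fnmatch(self.minion_id, job["tgt"]):
            return None
        # Hypothetical execution step: only test.ping is modelled.
        if job["fun"] == "test.ping":
            return True
        return "unsupported function: " + job["fun"]


class Master:
    def __init__(self):
        # Stands in for the publisher on port 4505.
        self.subscribers = []

    def subscribe(self, minion):
        self.subscribers.append(minion)

    def publish(self, tgt, fun):
        job = {"tgt": tgt, "fun": fun}
        # Stands in for the results minions send back on port 4506.
        returns = {}
        for minion in self.subscribers:
            result = minion.on_publish(job)
            if result is not None:
                returns[minion.minion_id] = result
        return returns


master = Master()
for mid in ("server1", "server2", "web1"):
    master.subscribe(Minion(mid))

print(master.publish("server*", "test.ping"))  # → {'server1': True, 'server2': True}
```

The master sends one message no matter how many minions are connected, and filtering happens on the receiving side. That design keeps the master's publish cost flat as the fleet grows.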

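Main features:

- SaltStack's two core functions are configuration management and remote execution.
- SaltStack is more than a configuration management tool. It is also a powerful tool for orchestrating cloud computing and data center infrastructure.
- SaltStack already ships Docker-related modules.
- SaltStack supports the major cloud platforms well. Combined with the Salt Mine, which shares data collected from minions in near real time, it can be used to auto-scale services on those platforms.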

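2. Installing and Deploying SaltStack

Obtain the installation packages (the Salt 2018.3.3 RPMs and their dependencies) and extract them, then install them all: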

[root@server1 2018]# ls
libsodium-1.0.16-1.el7.x86_64.rpm        PyYAML-3.11-1.el7.x86_64.rpm
openpgm-5.2.122-2.el7.x86_64.rpm         repodata
python2-libcloud-2.0.0-2.el7.noarch.rpm  salt-2018.3.3-1.el7.noarch.rpm
python-cherrypy-5.6.0-2.el7.noarch.rpm   salt-api-2018.3.3-1.el7.noarch.rpm
python-crypto-2.6.1-2.el7.x86_64.rpm     salt-cloud-2018.3.3-1.el7.noarch.rpm
python-futures-3.0.3-1.el7.noarch.rpm    salt-master-2018.3.3-1.el7.noarch.rpm
python-msgpack-0.4.6-1.el7.x86_64.rpm    salt-minion-2018.3.3-1.el7.noarch.rpm
python-psutil-2.2.1-1.el7.x86_64.rpm     salt-ssh-2018.3.3-1.el7.noarch.rpm
python-tornado-4.2.1-1.el7.x86_64.rpm    salt-syndic-2018.3.3-1.el7.noarch.rpm
python-zmq-15.3.0-3.el7.x86_64.rpm       zeromq-4.1.4-7.el7.x86_64.rpm
[root@server1 2018]# yum install -y *.rpm

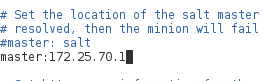

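On server1, edit the minion configuration file so that it points at the master (172.25.70.1). Server1 acts as both master and minion, so start both salt-master and salt-minion: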

[root@server1 2018]# cd /etc/salt/
[root@server1 salt]# vim minion
master: 172.25.70.1

[root@server1 salt]# systemctl start salt-master
[root@server1 salt]# systemctl start salt-minion
[root@server1 salt]# netstat -antlp
Active Internet connections (servers and established)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      643/sshd
tcp        0      0 0.0.0.0:4505            0.0.0.0:*               LISTEN      1182/python
tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      774/master
tcp        0      0 0.0.0.0:4506            0.0.0.0:*               LISTEN      1188/python
tcp        0      0 172.25.70.1:22          172.25.70.250:35090     ESTABLISHED 975/sshd: root@pts/
tcp6       0      0 :::22                   :::*                    LISTEN      643/sshd
tcp6       0      0 ::1:25                  :::*                    LISTEN      774/master

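Port 4505: the publish port. The master publishes jobs over it to all connected minions (pub/sub).

Port 4506: the request/response port, also over ZeroMQ. Minions use it to authenticate and to send job results back to the master.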
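You can script the port check above with a small standard-library helper that scans saved `netstat -antlp` output for the two master ports. The function names are hypothetical. The parser assumes the column layout shown in the transcript: proto, queues, local address, foreign address, state, PID/program.

```python
# Check saved `netstat -antlp` output for the Salt master's two ports.
SALT_PORTS = {4505: "publish (pub/sub)", 4506: "request/return"}


def listening_ports(netstat_output):
    """Return the set of local TCP ports that are in LISTEN state."""
    ports = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Data rows: proto, recv-q, send-q, local, foreign, state, pid/program
        if len(fields) >= 6 and fields[0].startswith("tcp") and fields[5] == "LISTEN":
            # rpartition copes with both "0.0.0.0:4505" and ":::22"
            port = fields[3].rpartition(":")[2]
            if port.isdigit():
                ports.add(int(port))
    return ports


def missing_salt_ports(netstat_output):
    """Return the Salt master ports that are not listening, in order."""
    return sorted(set(SALT_PORTS) - listening_ports(netstat_output))


sample = """\
Active Internet connections (servers and established)
Proto Recv-Q Send-Q Local Address  Foreign Address      State       PID/Program name
tcp        0      0 0.0.0.0:22     0.0.0.0:*            LISTEN      643/sshd
tcp        0      0 0.0.0.0:4505   0.0.0.0:*            LISTEN      1182/python
tcp        0      0 0.0.0.0:4506   0.0.0.0:*            LISTEN      1188/python
tcp        0      0 172.25.70.1:22 172.25.70.250:35090  ESTABLISHED 975/sshd
tcp6       0      0 :::22          :::*                 LISTEN      643/sshd
"""
print(missing_salt_ports(sample))  # → []
```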

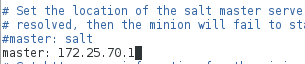

Install the same packages on server2, point its minion configuration file at the master, and start the minion:

[root@server2 2018]# ls
libsodium-1.0.16-1.el7.x86_64.rpm        PyYAML-3.11-1.el7.x86_64.rpm
openpgm-5.2.122-2.el7.x86_64.rpm         repodata
python2-libcloud-2.0.0-2.el7.noarch.rpm  salt-2018.3.3-1.el7.noarch.rpm
python-cherrypy-5.6.0-2.el7.noarch.rpm   salt-api-2018.3.3-1.el7.noarch.rpm
python-crypto-2.6.1-2.el7.x86_64.rpm     salt-cloud-2018.3.3-1.el7.noarch.rpm
python-futures-3.0.3-1.el7.noarch.rpm    salt-master-2018.3.3-1.el7.noarch.rpm
python-msgpack-0.4.6-1.el7.x86_64.rpm    salt-minion-2018.3.3-1.el7.noarch.rpm
python-psutil-2.2.1-1.el7.x86_64.rpm     salt-ssh-2018.3.3-1.el7.noarch.rpm
python-tornado-4.2.1-1.el7.x86_64.rpm    salt-syndic-2018.3.3-1.el7.noarch.rpm
python-zmq-15.3.0-3.el7.x86_64.rpm       zeromq-4.1.4-7.el7.x86_64.rpm
[root@server2 2018]# yum install -y *.rpm
[root@server2 2018]# cd /etc/salt/
[root@server2 salt]# vim minion
master: 172.25.70.1

Note: start only the minion on server2.

[root@server2 salt]# systemctl start salt-minion

Accept the minions on the master. This is the step in which the master and the minions exchange public keys:

[root@server1 rhel7]# salt-key -L    # list minion keys (unaccepted minions are shown in red)
Accepted Keys:
Denied Keys:
Unaccepted Keys:
server1
server2
Rejected Keys:
[root@server1 rhel7]# salt-key -A    # accept all pending keys
The following keys are going to be accepted:
Unaccepted Keys:
server1
server2
Proceed? [n/Y] Y
Key for minion server1 accepted.
Key for minion server2 accepted.
[root@server1 rhel7]# salt-key -L
Accepted Keys:
server1
server2
Denied Keys:
Unaccepted Keys:
Rejected Keys:

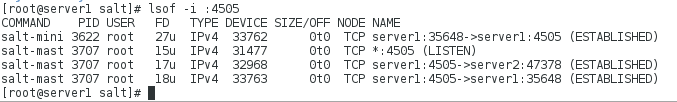

Checking the connections now shows that both minions are connected to the master's publish port:

[root@server1 rhel7]# yum install -y lsof
[root@server1 salt]# lsof -i :4505
salt-mast 3707 root   17u  IPv4  32968      0t0  TCP server1:4505->server2:47378 (ESTABLISHED)
salt-mast 3707 root   18u  IPv4  33763      0t0  TCP server1:4505->server1:35648 (ESTABLISHED)

Show the key directory tree:

[root@server1 salt]# yum install -y tree
[root@server1 salt]# ls
cloud         cloud.deploy.d  cloud.profiles.d   master    minion    minion_id  proxy    roster
cloud.conf.d  cloud.maps.d    cloud.providers.d  master.d  minion.d  pki        proxy.d
[root@server1 salt]# cd pki/
[root@server1 pki]# tree .
.
├── master
│   ├── master.pem
│   ├── master.pub
│   ├── minions
│   │   ├── server1
│   │   └── server2
│   ├── minions_autosign
│   ├── minions_denied
│   ├── minions_pre
│   └── minions_rejected
└── minion
    ├── minion_master.pub
    ├── minion.pem
    └── minion.pub

7 directories, 7 files
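The tree shows how the master tracks key state. A minion's public key waits in `minions_pre` until it is accepted, and afterwards it lives in `minions`. Rejected and denied keys get their own directories. The sketch below models that bookkeeping in a temporary directory instead of `/etc/salt/pki/master`. `accept_all` and `list_keys` are hypothetical stand-ins for `salt-key -A` and `salt-key -L`; the real tool does more than move files.

```python
# Model of the master's key directories (illustration only, not salt-key).
import shutil
import tempfile
from pathlib import Path

KEY_DIRS = ("minions_pre", "minions", "minions_rejected", "minions_denied")


def make_pki(root):
    """Create the key-state directories under root."""
    for name in KEY_DIRS:
        (root / name).mkdir(parents=True, exist_ok=True)
    return root


def list_keys(root):
    """Rough analogue of `salt-key -L`: directory -> sorted minion IDs."""
    return {name: sorted(p.name for p in (root / name).iterdir()) for name in KEY_DIRS}


def accept_all(root):
    """Rough analogue of `salt-key -A`: move pending keys into minions/."""
    accepted = []
    for key_file in sorted((root / "minions_pre").iterdir()):
        shutil.move(str(key_file), str(root / "minions" / key_file.name))
        accepted.append(key_file.name)
    return accepted


with tempfile.TemporaryDirectory() as tmp:
    pki = make_pki(Path(tmp))
    for minion_id in ("server1", "server2"):
        # Placeholder content; a real file holds the minion's public key.
        (pki / "minions_pre" / minion_id).write_text("placeholder public key\n")
    before = list_keys(pki)
    accepted = accept_all(pki)
    after = list_keys(pki)

print(accepted)  # → ['server1', 'server2']
print(after["minions"], after["minions_pre"])  # → ['server1', 'server2'] []
```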

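Compare the keys on the master and minion sides. They match: the master's public key equals the copy cached on the minion (minion_master.pub), and the minion's public key equals the copy stored on the master (minions/server2).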

server1:

[root@server1 master]# pwd
/etc/salt/pki/master
[root@server1 master]# ls
master.pem  master.pub  minions  minions_autosign  minions_denied  minions_pre  minions_rejected
[root@server1 master]# md5sum master.pub
fa6ce0736dfd343dbb341153e5538bd5  master.pub

server2:

[root@server2 minion]# pwd
/etc/salt/pki/minion
[root@server2 minion]# ls
minion_master.pub  minion.pem  minion.pub
[root@server2 minion]# md5sum minion_master.pub
fa6ce0736dfd343dbb341153e5538bd5  minion_master.pub
[root@server2 minion]# md5sum minion.pub
20ddc2caa310ba9f48ba46672547e1a2  minion.pub

server1:

[root@server1 master]# ls
master.pem  master.pub  minions  minions_autosign  minions_denied  minions_pre  minions_rejected
[root@server1 master]# cd minions
[root@server1 minions]# ls
server1  server2
[root@server1 minions]# md5sum server2
20ddc2caa310ba9f48ba46672547e1a2  server2
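The manual comparison above can be scripted with `hashlib`. This sketch assumes both files are available locally, for example after copying one of them over. `md5_of` and `same_key` are hypothetical helpers that produce the same hex digest as the first column of `md5sum`. The built-in alternative is `salt-key -F`, which prints the fingerprints of all keys.

```python
# Compare key files by MD5 digest, like the md5sum checks above.
import hashlib
import tempfile
from pathlib import Path


def md5_of(path):
    """Hex MD5 digest of a file (the first column md5sum prints)."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(8192), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_key(path_a, path_b):
    """True when both files have identical content."""
    return md5_of(path_a) == md5_of(path_b)


with tempfile.TemporaryDirectory() as tmp:
    # Stand-ins for master.pub and the minion's cached minion_master.pub.
    master_pub = Path(tmp, "master.pub")
    cached_pub = Path(tmp, "minion_master.pub")
    master_pub.write_bytes(b"abc")
    cached_pub.write_bytes(b"abc")
    match = same_key(master_pub, cached_pub)
    digest = md5_of(master_pub)

print(match, digest)  # → True 900150983cd24fb0d6963f7d28e17f72
```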

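Install python-setproctitle so that the Salt processes show descriptive names in the process list instead of just python, then check the processes: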

[root@server1 minions]# yum install python-setproctitle -y

[root@server1 minions]# systemctl restart salt-master
[root@server1 minions]# ps ax

A few simple tests:

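[root@server1 minions]# salt '*' test.ping          # check that every minion responds
[root@server1 minions]# salt '*' cmd.run hostname   # run hostname on every minion
[root@server1 minions]# salt '*' cmd.run df         # show disk usage on every minion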
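When these checks run from a script, it helps to turn the default text output of `salt '*' test.ping` into data. The sketch assumes the default layout: an unindented minion ID followed by a colon, then an indented result line. Anything other than `True` counts as a failure; the "did not return" line in the sample is illustrative. `parse_ping` is a hypothetical helper. For machine-readable results, Salt's `--out=json` option is usually the better route.

```python
# Turn the default text output of `salt '*' test.ping` into a dict.
def parse_ping(output):
    """Map each minion ID to True if it answered True, else False."""
    results = {}
    current = None
    for line in output.splitlines():
        if not line.strip():
            continue
        if not line[0].isspace() and line.rstrip().endswith(":"):
            # Unindented "server1:" lines name the minion.
            current = line.rstrip()[:-1]
            results[current] = False
        elif current is not None and line.strip() == "True":
            # The indented line underneath holds that minion's result.
            results[current] = True
    return results


sample = """\
server1:
    True
server2:
    Minion did not return. [No response]
"""
status = parse_ping(sample)
print(status)  # → {'server1': True, 'server2': False}
print([m for m, ok in status.items() if not ok])  # → ['server2']
```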

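3. Configuring High Availability

On server1, create /srv/salt, the default file_roots directory for the base environment. Inside it, create a keepalived directory with a files subdirectory, then write the SLS state file that installs keepalived: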

[root@server1 ~]# mkdir /srv/salt
[root@server1 ~]# cd /srv/salt/
[root@server1 salt]# mkdir keepalived
[root@server1 salt]# ls
keepalived
[root@server1 salt]# cd keepalived/
[root@server1 keepalived]# mkdir files
[root@server1 saltstack]# cp keepalived-1.4.3.tar.gz /srv/salt/keepalived/files/
[root@server1 saltstack]# cd /srv/salt/keepalived/files/
[root@server1 files]# ls
keepalived-1.4.3.tar.gz

[root@server1 keepalived]# vim install.sls
kp-install:
  pkg.installed:
    - pkgs:
      - keepalived
  file.managed:
    - name: /etc/keepalived/keepalived.conf
    - source: salt://keepalived/files/keepalived.conf
    - template: jinja
    {% if grains['fqdn'] == 'server1' %}    # server1 is the MASTER
    - context:
        STATE: MASTER
        VRID: 51
        PRIORITY: 100
    {% elif grains['fqdn'] == 'server2' %}  # server2 is the BACKUP
    - context:
        STATE: BACKUP
        VRID: 51
        PRIORITY: 50
    {% endif %}
  service.running:
    - name: keepalived
    - reload: True
    - watch:
      - file: kp-install

The per-host values must sit under the `context` argument of file.managed. That argument is what turns them into the STATE, VRID and PRIORITY variables inside the template.

[root@server1 yum.repos.d]# salt server2 state.sls keepalived.install    # fails at this point: salt://keepalived/files/keepalived.conf does not exist yet

The failed run above still installed the keepalived package on server2, so its default configuration file now exists. Copy that file to /srv/salt/keepalived/files on server1, then edit it there into a Jinja template:

server2:

[root@server2 keepalived]# pwd
/etc/keepalived
[root@server2 keepalived]# scp keepalived.conf server1:/srv/salt/keepalived/files
The authenticity of host 'server1 (172.25.70.1)' can't be established.
ECDSA key fingerprint is 23:30:b9:0b:bd:7e:c3:05:4e:9a:fb:6f:c5:d6:23:c9.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'server1,172.25.70.1' (ECDSA) to the list of known hosts.
root@server1's password:
keepalived.conf                               100% 3562     3.5KB/s   00:00

server1:

[root@server1 files]# vim keepalived.conf
! Configuration File for keepalived

global_defs {
   notification_email {
     root@localhost
   }
   notification_email_from keepalived@localhost
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.70.100
    }
}
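The next sketch shows what each minion ends up with. It mimics the `if`/`elif` in install.sls with a plain dictionary and fills the `vrrp_instance` part of the template with `str.format`. It only illustrates the per-host substitution: Salt actually renders the file with Jinja on each minion. `VRRP_CONTEXT` and `render_for` are hypothetical names.

```python
# Mimic install.sls: choose VRRP values by fqdn, then fill the template.
VRRP_CONTEXT = {
    # Values copied from the if/elif branches in install.sls.
    "server1": {"STATE": "MASTER", "VRID": 51, "PRIORITY": 100},
    "server2": {"STATE": "BACKUP", "VRID": 51, "PRIORITY": 50},
}

# Only the vrrp_instance block; doubled braces are literal braces for format().
TEMPLATE = """\
vrrp_instance VI_1 {{
    state {STATE}
    interface eth0
    virtual_router_id {VRID}
    priority {PRIORITY}
    advert_int 1
    virtual_ipaddress {{
        172.25.70.100
    }}
}}
"""


def render_for(fqdn):
    """Return the vrrp_instance block a given host would receive."""
    if fqdn not in VRRP_CONTEXT:
        # install.sls only defines values for server1 and server2.
        raise KeyError("no VRRP values defined for " + fqdn)
    return TEMPLATE.format(**VRRP_CONTEXT[fqdn])


print(render_for("server2"))
```

Both hosts share the same virtual_router_id, which puts them in one VRRP group. Only the state and priority differ.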

Run the state again:

[root@server1 yum.repos.d]# salt server2 state.sls keepalived.install

Under /srv/salt, write a top.sls file that assigns the state to every node:

[root@server1 keepalived]# cd /srv/salt/
[root@server1 salt]# ls
keepalived
[root@server1 salt]# vim top.sls
base:
  'server1':
    - keepalived.install
  'server2':
    - keepalived.install
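A top file maps targets to lists of SLS files per environment, and targets are matched as globs by default. This hypothetical `states_for` helper sketches that lookup with `fnmatch`. It ignores the other matchers (grains, lists, compound) and Salt's merging rules.

```python
# Sketch of glob-based top-file lookup (ignores other matchers and merging).
from fnmatch import fnmatch

TOP = {
    "base": {
        "server1": ["keepalived.install"],
        "server2": ["keepalived.install"],
    }
}


def states_for(minion_id, top, saltenv="base"):
    """Collect, without duplicates, the SLS names whose target glob matches."""
    states = []
    for target, sls_list in top.get(saltenv, {}).items():
        if fnmatch(minion_id, target):
            for sls in sls_list:
                if sls not in states:
                    states.append(sls)
    return states


print(states_for("server2", TOP))  # → ['keepalived.install']
# A single glob target would cover both hosts as well:
print(states_for("server2", {"base": {"server*": ["keepalived.install"]}}))  # → ['keepalived.install']
```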

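Apply top.sls with a highstate to install and deploy the service on all nodes. Afterwards, the VIP 172.25.70.100 is bound to eth0 on server1, the MASTER: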

[root@server1 salt]# salt '*' state.highstate

[root@server1 salt]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 52:54:00:f2:25:70 brd ff:ff:ff:ff:ff:ff
    inet 172.25.70.1/24 brd 172.25.70.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 172.25.70.100/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::5054:ff:fef2:2570/64 scope link
       valid_lft forever preferred_lft forever
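To confirm which node currently holds the VIP without reading the whole listing, a hypothetical helper can scan `ip a` output for an `inet` line on the right interface. It assumes the iproute2 layout shown above: numbered interface header lines, each followed by indented address lines.

```python
# Does a given interface in `ip a` output carry the VIP?
import re

HEADER = re.compile(r"^\d+:\s+([^:@\s]+)")      # e.g. "2: eth0: <BROADCAST,...>"
INET = re.compile(r"^\s+inet\s+([\d.]+)/\d+")   # e.g. "    inet 172.25.70.100/32 ..."


def has_vip(ip_addr_output, vip, interface="eth0"):
    """True if `vip` is listed as an IPv4 address of `interface`."""
    current = None
    for line in ip_addr_output.splitlines():
        header = HEADER.match(line)
        if header:
            current = header.group(1)
            continue
        inet = INET.match(line)
        if inet and current == interface and inet.group(1) == vip:
            return True
    return False


sample = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    inet 172.25.70.1/24 brd 172.25.70.255 scope global eth0
    inet 172.25.70.100/32 scope global eth0
"""
print(has_vip(sample, "172.25.70.100"))  # → True
```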

Stop keepalived on server1 and check server2's addresses. The VIP 172.25.70.100 has floated over to server2:

[root@server1 salt]# systemctl stop keepalived
[root@server2 keepalived]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 52:54:00:61:d6:ce brd ff:ff:ff:ff:ff:ff
    inet 172.25.70.2/24 brd 172.25.70.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 172.25.70.100/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::5054:ff:fe61:d6ce/64 scope link
       valid_lft forever preferred_lft forever
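The failover follows VRRP's election rule. Among the nodes still sending advertisements, the highest priority wins; on a tie, the higher primary IP address wins. The function below is a deliberately simplified model of that rule: it ignores advertisement timers, `nopreempt` and authentication. The node names, IPs and priorities come from this setup, and `vip_holder` is a hypothetical name.

```python
# Simplified VRRP election: highest priority among live nodes holds the VIP.
import ipaddress


def vip_holder(nodes):
    """Name of the node that should own the VIP, or None if all are down."""
    alive = [n for n in nodes if n["alive"]]
    if not alive:
        return None
    # Tie-break on the higher primary IPv4 address, compared numerically.
    winner = max(alive, key=lambda n: (n["priority"], ipaddress.IPv4Address(n["ip"])))
    return winner["name"]


nodes = [
    {"name": "server1", "ip": "172.25.70.1", "priority": 100, "alive": True},
    {"name": "server2", "ip": "172.25.70.2", "priority": 50, "alive": True},
]
print(vip_holder(nodes))  # → server1

nodes[0]["alive"] = False  # systemctl stop keepalived on server1
print(vip_holder(nodes))  # → server2

nodes[0]["alive"] = True   # keepalived started again on server1
print(vip_holder(nodes))  # → server1
```

With keepalived's default preemption, starting keepalived on server1 again should move the VIP back to it. The last step of the sketch models that case.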
