Using Ceph as a Unified Storage Solution for OpenStack


Previous articles

"Manually Deploying OpenStack Rocky on Two Nodes"

"Manually Deploying Ceph Mimic on Three Nodes"

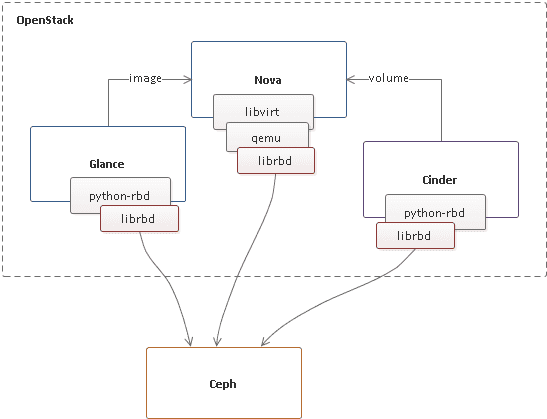

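Unified Storage Solution

Using Ceph as the storage backend for OpenStack brings the following benefits:

- No need to buy expensive commercial storage appliances, which lowers the cost of deploying OpenStack

- Ceph provides block, file and object storage at the same time, fully covering the storage types OpenStack needs

- RBD copy-on-write (COW) cloning lets many OpenStack instances boot quickly and concurrently

- Provides persistent volumes for OpenStack instances by default

- Provides snapshot, backup and replication features for OpenStack volumes

- Provides APIs compatible with the Swift and S3 object storage interfaces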

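In production environments, Nova, Cinder and Glance are commonly integrated with Ceph RBD. In addition, Swift and Manila can be backed by Ceph RGW and CephFS respectively. As a unified storage solution, Ceph effectively reduces the complexity and operating cost of an OpenStack cloud.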

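Configuring Ceph RBD

NOTE: The following steps build on the existing two-node OpenStack deployment and three-node Ceph cluster. See the deployment architecture in the articles listed under Previous articles.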

- Images: OpenStack Glance manages images for VMs. Images are immutable. OpenStack treats images as binary blobs and downloads them accordingly.

- Volumes: Volumes are block devices. OpenStack uses volumes to boot VMs, or to attach volumes to running VMs. OpenStack manages volumes using Cinder services.

- Guest Disks: Guest disks are guest operating system disks. By default, when you boot a virtual machine, its disk appears as a file on the filesystem of the hypervisor (usually under /var/lib/nova/instances//). Prior to OpenStack Havana, the only way to boot a VM in Ceph was to use the boot-from-volume functionality of Cinder. However, now it is possible to boot every virtual machine inside Ceph directly without using Cinder, which is advantageous because it allows you to perform maintenance operations easily with the live-migration process. Additionally, if your hypervisor dies it is also convenient to trigger nova evacuate and run the virtual machine elsewhere almost seamlessly.

Official documentation: http://docs.ceph.com/docs/mimic/rbd/rbd-openstack/

Create dedicated RBD pools for Glance, Nova and Cinder:

```bash
# glance-api
ceph osd pool create images 128
rbd pool init images

# cinder-volume
ceph osd pool create volumes 128
rbd pool init volumes

# cinder-backup [optional]
ceph osd pool create backups 128
rbd pool init backups

# nova-compute
ceph osd pool create vms 128
rbd pool init vms
```
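The value of 128 PGs per pool is a judgment call. The sketch below gives a rough cross-check using the classic rule of thumb from the Ceph placement-group documentation: about 100 PGs per OSD, divided by the replica count, rounded up to a power of two. It also estimates how many PG copies each OSD ends up hosting. A replica size of 3 and a per-OSD target of 100 are assumptions, not values read from this cluster. The 9 OSDs and 544 total PGs come from the `ceph -s` output in this article. Compare the per-OSD figure against your cluster's `mon_max_pg_per_osd` setting.

```python
import math


def suggested_total_pgs(num_osds: int, replica_size: int, target_pgs_per_osd: int = 100) -> int:
    """Rule-of-thumb total PG count for the cluster, rounded up to a power of two."""
    raw = num_osds * target_pgs_per_osd / replica_size
    return 1 << math.ceil(math.log2(raw))


def pg_replicas_per_osd(pgs_per_pool: list[int], replica_size: int, num_osds: int) -> float:
    """Average number of PG copies each OSD hosts across all pools."""
    return sum(pgs_per_pool) * replica_size / num_osds


if __name__ == "__main__":
    # 9 OSDs as reported by `ceph -s`; replica size 3 is an assumption
    print(suggested_total_pgs(9, 3))                   # 512
    # `ceph -s` reports 544 PGs in total across 8 pools
    print(round(pg_replicas_per_osd([544], 3, 9), 1))  # 181.3
```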

Install the Ceph client on the OpenStack nodes:

```bash
ssh-copy-id -i ~/.ssh/id_rsa.pub root@controller
ssh-copy-id -i ~/.ssh/id_rsa.pub root@compute

# Still run from /opt/ceph/deploy
[root@ceph-node1 deploy]# ceph-deploy install controller compute
[root@ceph-node1 deploy]# ceph-deploy --overwrite-conf admin controller compute

[root@controller ~]# ceph -s
  cluster:
    id:     d82f0b96-6a69-4f7f-9d79-73d5bac7dd6c
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph-node1,ceph-node2,ceph-node3
    mgr: ceph-node1(active), standbys: ceph-node2, ceph-node3
    osd: 9 osds: 9 up, 9 in
    rgw: 3 daemons active

  data:
    pools:   8 pools, 544 pgs
    objects: 221 objects, 2.2 KiB
    usage:   9.5 GiB used, 80 GiB / 90 GiB avail
    pgs:     544 active+clean

[root@compute ~]# ceph -s
  cluster:
    id:     d82f0b96-6a69-4f7f-9d79-73d5bac7dd6c
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph-node1,ceph-node2,ceph-node3
    mgr: ceph-node1(active), standbys: ceph-node2, ceph-node3
    osd: 9 osds: 9 up, 9 in
    rgw: 3 daemons active

  data:
    pools:   8 pools, 544 pgs
    objects: 221 objects, 2.2 KiB
    usage:   9.5 GiB used, 80 GiB / 90 GiB avail
    pgs:     544 active+clean
```

- Make sure python-rbd is installed on the glance-api node

```bash
[root@controller ~]# rpm -qa | grep python-rbd
python-rbd-13.2.5-0.el7.x86_64
```

- Make sure ceph-common is installed on the nova-compute and cinder-volume nodes

```bash
[root@controller ~]# rpm -qa | grep ceph-common
ceph-common-13.2.5-0.el7.x86_64
[root@compute ~]# rpm -qa | grep ceph-common
ceph-common-13.2.5-0.el7.x86_64
```

Create cephx users for Glance and Cinder:

```bash
# glance-api
ceph auth get-or-create client.glance mon 'profile rbd' osd 'profile rbd pool=images'

# cinder-volume
ceph auth get-or-create client.cinder mon 'profile rbd' osd 'profile rbd pool=volumes, profile rbd pool=vms, profile rbd pool=images'

# cinder-backup [optional]
ceph auth get-or-create client.cinder-backup mon 'profile rbd' osd 'profile rbd pool=backups'
```
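The OSD caps above follow a simple pattern: one `profile rbd pool=<pool>` clause per pool, joined with commas. The hypothetical helper below rebuilds the `ceph auth get-or-create` arguments from a client-to-pools mapping. That can help when scripting the same setup for more pools. It only builds the command line and does not call Ceph.

```python
import shlex


def auth_get_or_create_args(client: str, pools: list[str]) -> list[str]:
    """Build argv for `ceph auth get-or-create` with rbd profile caps (hypothetical helper)."""
    osd_caps = ", ".join(f"profile rbd pool={pool}" for pool in pools)
    return ["ceph", "auth", "get-or-create", f"client.{client}",
            "mon", "profile rbd", "osd", osd_caps]


# Client-to-pool mapping taken from the commands above
CLIENT_POOLS = {
    "glance": ["images"],
    "cinder": ["volumes", "vms", "images"],
    "cinder-backup": ["backups"],
}

if __name__ == "__main__":
    for client, pools in CLIENT_POOLS.items():
        # shlex.join single-quotes arguments containing spaces, as in the shell commands
        print(shlex.join(auth_get_or_create_args(client, pools)))
```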

Create keyring files for the new users client.cinder and client.glance, so that the OpenStack Cinder and Glance services can access the Ceph cluster as these users:

```bash
# glance-api
ceph auth get-or-create client.glance | ssh root@controller sudo tee /etc/ceph/ceph.client.glance.keyring
ssh root@controller sudo chown glance:glance /etc/ceph/ceph.client.glance.keyring

# cinder-volume
ceph auth get-or-create client.cinder | ssh root@controller sudo tee /etc/ceph/ceph.client.cinder.keyring
ssh root@controller sudo chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring

# cinder-backup [optional]
ceph auth get-or-create client.cinder-backup | ssh root@controller sudo tee /etc/ceph/ceph.client.cinder-backup.keyring
ssh root@controller sudo chown cinder:cinder /etc/ceph/ceph.client.cinder-backup.keyring

# nova-compute
ceph auth get-or-create client.cinder | ssh root@compute sudo tee /etc/ceph/ceph.client.cinder.keyring
```
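Each keyring written above is a small INI-style file: a `[client.<name>]` section containing a `key = ...` line, which is what `ceph auth get-or-create` prints. The sketch below reads such a file with Python's `configparser` and extracts the key. You could use it, for example, to check that a node received the right keyring. The function name is hypothetical. The key in the usage example is a made-up placeholder, not a real cephx key.

```python
import base64
import configparser


def read_cephx_key(keyring_text: str, entity: str) -> str:
    """Return the base64 key for `entity` (e.g. 'client.glance') from keyring text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(keyring_text)
    key = parser[entity]["key"]
    # Raises binascii.Error (a ValueError) if the key is not valid base64
    base64.b64decode(key, validate=True)
    return key


if __name__ == "__main__":
    # Placeholder keyring; a real one comes from `ceph auth get-or-create client.glance`
    sample = "[client.glance]\n\tkey = " + "A" * 38 + "==\n"
    print(read_cephx_key(sample, "client.glance"))
```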

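The libvirt process on a compute node needs to access the Ceph cluster when attaching or detaching a volume provided by Cinder. The key of user client.cinder therefore has to be added to libvirt as a secret. Note that nova-compute runs on both the Controller and the Compute node, so the following steps must be performed on both nodes. Ceph's OpenStack guide recommends using the same secret UUID on every compute node. cinder.conf holds only a single rbd_secret_uuid, and Nova uses the UUID supplied by Cinder when attaching a volume, so a node whose secret has a different UUID may fail to attach Ceph volumes.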

```bash
####### Compute
ceph auth get-key client.cinder | ssh root@compute tee /tmp/client.cinder.key

# Generate a random UUID to identify the libvirt secret
[root@compute ~]# uuidgen
457eb676-33da-42ec-9a8c-9293d545c337

# Create the libvirt secret definition file
[root@compute ~]# cat > /tmp/secret.xml <<EOF
> <secret ephemeral='no' private='no'>
>   <uuid>457eb676-33da-42ec-9a8c-9293d545c337</uuid>
>   <usage type='ceph'>
>     <name>client.cinder secret</name>
>   </usage>
> </secret>
> EOF
[root@compute ~]# ll /tmp/secret.xml
-rw-r--r--. 1 root root 170 Apr 25 05:47 /tmp/secret.xml

# Define the libvirt secret
[root@compute ~]# sudo virsh secret-define --file /tmp/secret.xml
Secret 457eb676-33da-42ec-9a8c-9293d545c337 created

[root@compute ~]# ll /tmp/client.cinder.key
-rw-r--r--. 1 root root 40 Apr 25 05:44 /tmp/client.cinder.key

# Set the secret value to the key of Ceph user client.cinder; with this key,
# libvirt can access the Ceph cluster as the Cinder user
[root@compute ~]# sudo virsh secret-set-value --secret 457eb676-33da-42ec-9a8c-9293d545c337 --base64 $(cat /tmp/client.cinder.key)
Secret value set

####### Controller
ceph auth get-key client.cinder | ssh root@controller tee /tmp/client.cinder.key

[root@controller ~]# uuidgen
4810c760-dc42-4e5f-9d41-7346db7d7da2
[root@controller ~]# cat > /tmp/secret.xml <<EOF
> <secret ephemeral='no' private='no'>
>   <uuid>4810c760-dc42-4e5f-9d41-7346db7d7da2</uuid>
>   <usage type='ceph'>
>     <name>client.cinder secret</name>
>   </usage>
> </secret>
> EOF
[root@controller ~]# sudo virsh secret-define --file /tmp/secret.xml
Secret 4810c760-dc42-4e5f-9d41-7346db7d7da2 created
[root@controller ~]# sudo virsh secret-set-value --secret 4810c760-dc42-4e5f-9d41-7346db7d7da2 --base64 $(cat /tmp/client.cinder.key)
Secret value set
```
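The secret definition above differs between nodes only in its UUID, so this per-node step can be scripted. The sketch below builds the same `<secret>` document with `uuid` and `xml.etree.ElementTree`. If you pass the same UUID on every node, all nodes end up with one consistent secret. The helper name is hypothetical. Writing the result to a file and running `virsh secret-define` / `virsh secret-set-value` is still done as shown above.

```python
import uuid
import xml.etree.ElementTree as ET
from typing import Optional


def build_ceph_secret_xml(secret_uuid: Optional[str] = None,
                          name: str = "client.cinder secret") -> str:
    """Return a libvirt <secret> definition of usage type 'ceph' (hypothetical helper)."""
    secret_uuid = secret_uuid or str(uuid.uuid4())  # plays the role of `uuidgen`
    root = ET.Element("secret", ephemeral="no", private="no")
    ET.SubElement(root, "uuid").text = secret_uuid
    usage = ET.SubElement(root, "usage", type="ceph")
    ET.SubElement(usage, "name").text = name
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_ceph_secret_xml("457eb676-33da-42ec-9a8c-9293d545c337"))
```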

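Integrating Glance

Glance provides image and image-metadata registry services for OpenStack and supports many storage backends. Once Glance is integrated with Ceph, uploaded images are stored as block devices in the Ceph cluster. Newer Glance releases also support enabled_backends, which allows several storage providers to be configured at the same time.

NOTE: Before integrating, check whether existing images need to be kept. Here Ceph replaces the original backend.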

```ini
# /etc/glance/glance-api.conf
[DEFAULT]
# show_image_direct_url is a [DEFAULT] option; Nova and Cinder need it for copy-on-write cloning
show_image_direct_url = True

[glance_store]
# stores = file,http
# default_store = file
# filesystem_store_datadir = /var/lib/glance/images/
stores = rbd
default_store = rbd
rbd_store_pool = images
rbd_store_user = glance
rbd_store_ceph_conf = /etc/ceph/ceph.conf
rbd_store_chunk_size = 8

[database]
connection = mysql+pymysql://glance:fanguiju@controller/glance

[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = fanguiju

[paste_deploy]
flavor = keystone
```

Restart the glance-api service:

```bash
systemctl restart openstack-glance-api
```

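Upload an image in RAW format instead of QCOW2, because Ceph cannot host instance disks from QCOW2 images. Note that --disk-format raw only sets Glance metadata and does not convert the file. cirros-0.3.4-x86_64-disk.img is distributed as QCOW2, so convert it first (for example with qemu-img convert -f qcow2 -O raw) and upload the converted file. The transcript below uploads the original file as-is: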

```bash
[root@controller ~]# openstack image create --container-format bare --disk-format raw --file cirros-0.3.4-x86_64-disk.img --unprotected --public cirros
+------------------+------------------------------------------------------+
| Field            | Value                                                |
+------------------+------------------------------------------------------+
| checksum         | ee1eca47dc88f4879d8a229cc70a07c6                     |
| container_format | bare                                                 |
| created_at       | 2019-04-25T10:40:13Z                                 |
| disk_format      | raw                                                  |
| file             | /v2/images/c72488c0-4dfc-4610-888e-914f6dc095b9/file |
| id               | c72488c0-4dfc-4610-888e-914f6dc095b9                 |
| min_disk         | 0                                                    |
| min_ram          | 0                                                    |
| name             | cirros                                               |
| owner            | a2b55e37121042a1862275a9bc9b0223                     |
| properties       | os_hash_algo='sha512', os_hash_value='1b03ca1bc3fafe448b90583c12f367949f8b0e665685979d95b004e48574b953316799e23240f4f739d1b5eb4c4ca24d38fdc6f4f9d8247a2bc64db25d6bbdb2', os_hidden='False' |
| protected        | False                                                |
| schema           | /v2/schemas/image                                    |
| size             | 13287936                                             |
| status           | active                                               |
| tags             |                                                      |
| updated_at       | 2019-04-25T10:40:16Z                                 |
| virtual_size     | None                                                 |
| visibility       | public                                               |
+------------------+------------------------------------------------------+
```
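Because `--disk-format raw` does not convert anything, it is worth checking a file's real format before uploading it. `qemu-img info` is the usual tool. As a dependency-free sketch, the function below checks for the QCOW2 header magic (`QFI\xfb`) in the first four bytes of the file. A positive result means the file needs `qemu-img convert -f qcow2 -O raw` first. A negative result only rules out QCOW2; it does not prove the file is raw. The script name in the usage comment is hypothetical.

```python
import sys

QCOW2_MAGIC = b"QFI\xfb"  # first 4 bytes of every QCOW2 image header


def is_qcow2(path: str) -> bool:
    """Return True if the file at `path` starts with the QCOW2 magic number."""
    with open(path, "rb") as f:
        return f.read(4) == QCOW2_MAGIC


if __name__ == "__main__":
    # Usage (hypothetical script name): python3 check_format.py cirros-0.3.4-x86_64-disk.img
    for image_path in sys.argv[1:]:
        kind = "QCOW2, convert before uploading as raw" if is_qcow2(image_path) else "not QCOW2"
        print(f"{image_path}: {kind}")
```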

Inspect the objects in pool images:

```bash
[root@ceph-node1 ~]# rbd ls images
c72488c0-4dfc-4610-888e-914f6dc095b9
[root@ceph-node1 ~]# rbd info images/c72488c0-4dfc-4610-888e-914f6dc095b9
rbd image 'c72488c0-4dfc-4610-888e-914f6dc095b9':
	size 13 MiB in 2 objects
	order 23 (8 MiB objects)
	id: 149127d7461e2
	block_name_prefix: rbd_data.149127d7461e2
	format: 2
	features: layering
	op_features:
	flags:
	create_timestamp: Thu Apr 25 06:40:15 2019
[root@ceph-node1 ~]# rados ls -p images
rbd_header.149127d7461e2
rbd_id.c72488c0-4dfc-4610-888e-914f6dc095b9
rbd_directory
rbd_data.149127d7461e2.0000000000000000
rbd_info
rbd_data.149127d7461e2.0000000000000001
```

As shown, a block device named {glance_image_uuid} has been created in pool images. Its object size is 8 MiB (from rbd_store_chunk_size = 8), and its 2 objects are enough to hold the 13 MiB cirros image.
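The figures reported by `rbd info` follow directly from the image size and the object order. Each object is 2^order bytes, so `rbd_store_chunk_size = 8` (MiB) corresponds to order 23, and Cinder's `rbd_store_chunk_size = 4` corresponds to order 22. The sketch below reproduces both the Glance image and the 1 GiB Cinder volume figures. It assumes the default layout, without custom striping.

```python
MiB = 1024 * 1024
GiB = 1024 * MiB


def order_from_chunk_mib(chunk_mib: int) -> int:
    """RBD object order for a chunk size given in MiB (must be a power of two)."""
    chunk_bytes = chunk_mib * MiB
    order = chunk_bytes.bit_length() - 1
    if chunk_mib <= 0 or 1 << order != chunk_bytes:
        raise ValueError(f"{chunk_mib} MiB is not a positive power of two")
    return order


def object_count(size_bytes: int, order: int) -> int:
    """Objects needed to hold size_bytes with 2**order-byte objects, rounded up."""
    object_size = 1 << order
    return (size_bytes + object_size - 1) // object_size


if __name__ == "__main__":
    # Glance image: rbd_store_chunk_size = 8, image size 13287936 bytes
    print(order_from_chunk_mib(8), object_count(13287936, order_from_chunk_mib(8)))  # 23 2
    # Cinder volume: rbd_store_chunk_size = 4, size 1 GiB
    print(order_from_chunk_mib(4), object_count(1 * GiB, 22))  # 22 256
```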

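Integrating Cinder

Cinder provides the volume service for OpenStack and supports a very wide range of storage backends. After integration with Ceph, a volume created by Cinder is essentially a Ceph RBD image exposed to the target node as a block device over the rbd protocol. In addition to cinder-volume, Cinder's backup service can also use Ceph and upload backups to the Ceph cluster as objects or block devices.

NOTE: Before integrating, check whether existing volumes need to be kept. Here Ceph is added as an additional backend.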

```ini
# cat /etc/cinder/cinder.conf
[DEFAULT]
my_ip = 172.18.22.231
enabled_backends = lvm,ceph
auth_strategy = keystone
transport_url = rabbit://openstack:fanguiju@controller
glance_api_version = 2
glance_api_servers = http://controller:9292

[database]
connection = mysql+pymysql://cinder:fanguiju@controller/cinder

[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_id = default
user_domain_id = default
project_name = service
username = cinder
password = fanguiju

[oslo_concurrency]
lock_path = /var/lib/cinder/tmp

[lvm]
volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
volume_backend_name = lvm
volume_group = cinder-volumes
iscsi_protocol = iscsi
iscsi_helper = lioadm

[ceph]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
volume_backend_name = ceph
rbd_pool = volumes
rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_flatten_volume_from_snapshot = false
rbd_max_clone_depth = 5
rbd_store_chunk_size = 4
rados_connect_timeout = -1
# for cephx authentication
rbd_user = cinder
# UUID of the libvirt secret used by rbd_user to access the Ceph cluster
rbd_secret_uuid = 4810c760-dc42-4e5f-9d41-7346db7d7da2
```
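With `enabled_backends = lvm,ceph`, each name refers to a section of cinder.conf. The `volume_backend_name` in that section is the value a volume type's `volume_backend_name` property must match. The sketch below reads a trimmed copy of the configuration above with `configparser` and resolves which backend section a given volume type would map to. It illustrates the matching rule only and is not Cinder's scheduler. The helper names are hypothetical.

```python
import configparser

# Trimmed copy of the cinder.conf shown above
CINDER_CONF = """
[DEFAULT]
enabled_backends = lvm,ceph

[lvm]
volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
volume_backend_name = lvm

[ceph]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
volume_backend_name = ceph
rbd_pool = volumes
"""


def backend_names(conf_text: str) -> dict[str, str]:
    """Map each enabled backend section to its volume_backend_name."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    sections = [s.strip() for s in parser["DEFAULT"]["enabled_backends"].split(",")]
    return {s: parser[s]["volume_backend_name"] for s in sections}


def sections_for_type(conf_text: str, type_backend_name: str) -> list[str]:
    """Backend sections whose volume_backend_name matches a volume type's property."""
    return [s for s, n in backend_names(conf_text).items() if n == type_backend_name]


if __name__ == "__main__":
    print(sections_for_type(CINDER_CONF, "ceph"))  # ['ceph']
```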

Restart the cinder-volume service:

```bash
systemctl restart openstack-cinder-volume
```

Create a volume type for each backend, then create a volume of the ceph_rbd type:

```bash
[root@controller ~]# openstack volume type create --public --property volume_backend_name="ceph" ceph_rbd
+-------------+--------------------------------------+
| Field       | Value                                |
+-------------+--------------------------------------+
| description | None                                 |
| id          | 5cfadfea-df0a-4c2c-917c-2575cc968b5d |
| is_public   | True                                 |
| name        | ceph_rbd                             |
| properties  | volume_backend_name='ceph'           |
+-------------+--------------------------------------+
[root@controller ~]# openstack volume type create --public --property volume_backend_name="lvm" local_lvm
+-------------+--------------------------------------+
| Field       | Value                                |
+-------------+--------------------------------------+
| description | None                                 |
| id          | cb718d85-1abf-43e9-a644-a02365ec6e66 |
| is_public   | True                                 |
| name        | local_lvm                            |
| properties  | volume_backend_name='lvm'            |
+-------------+--------------------------------------+
[root@controller ~]# openstack volume create --type ceph_rbd --size 1 ceph_rbd_vol01
+---------------------+--------------------------------------+
| Field               | Value                                |
+---------------------+--------------------------------------+
| attachments         | []                                   |
| availability_zone   | nova                                 |
| bootable            | false                                |
| consistencygroup_id | None                                 |
| created_at          | 2019-04-25T11:05:43.000000           |
| description         | None                                 |
| encrypted           | False                                |
| id                  | 1f8a3f58-72b2-4c81-958c-d2f15d835fe2 |
| migration_status    | None                                 |
| multiattach         | False                                |
| name                | ceph_rbd_vol01                       |
| properties          |                                      |
| replication_status  | None                                 |
| size                | 1                                    |
| snapshot_id         | None                                 |
| source_volid        | None                                 |
| status              | creating                             |
| type                | ceph_rbd                             |
| updated_at          | None                                 |
| user_id             | 92602c24daa24f019f05ecb95f1ce68e     |
+---------------------+--------------------------------------+
[root@controller ~]# openstack volume list
+--------------------------------------+----------------+-----------+------+-------------+
| ID                                   | Name           | Status    | Size | Attached to |
+--------------------------------------+----------------+-----------+------+-------------+
| 1f8a3f58-72b2-4c81-958c-d2f15d835fe2 | ceph_rbd_vol01 | available |    1 |             |
+--------------------------------------+----------------+-----------+------+-------------+
```

Inspect the objects in pool volumes:

```bash
[root@ceph-node1 ~]# rbd ls volumes
volume-1f8a3f58-72b2-4c81-958c-d2f15d835fe2
[root@ceph-node1 ~]# rbd info volumes/volume-1f8a3f58-72b2-4c81-958c-d2f15d835fe2
rbd image 'volume-1f8a3f58-72b2-4c81-958c-d2f15d835fe2':
	size 1 GiB in 256 objects
	order 22 (4 MiB objects)
	id: 149a77b0d206a
	block_name_prefix: rbd_data.149a77b0d206a
	format: 2
	features: layering
	op_features:
	flags:
	create_timestamp: Thu Apr 25 07:05:45 2019
[root@ceph-node1 ~]# rados ls -p volumes
rbd_directory
rbd_info
rbd_header.149a77b0d206a
rbd_id.volume-1f8a3f58-72b2-4c81-958c-d2f15d835fe2
```

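As shown, a block device named volume-{cinder_volume_uuid} has been created in pool volumes, and it has no data objects yet. The volume does not contain any data, so RBD stores no data objects for it. Once the volume is attached and data is written to it, the number of objects grows gradually, which reflects RBD's thin provisioning.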
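Thin provisioning follows from how RBD maps byte offsets to objects. A write at offset `off` lands in object number `off >> order`. Each data object is named `<block_name_prefix>.<object number as 16 hex digits>`, as seen in the `rados ls` listings in this article. The sketch below computes which objects a list of writes would create. It assumes the default layout without custom striping, and the helper names are hypothetical.

```python
def data_object_name(block_name_prefix: str, object_no: int) -> str:
    """RADOS object name of a data object in a format 2 RBD image."""
    return f"{block_name_prefix}.{object_no:016x}"


def objects_touched(writes: list[tuple[int, int]], order: int) -> set[int]:
    """Object numbers allocated by a list of (offset, length) writes."""
    touched: set[int] = set()
    for offset, length in writes:
        if length <= 0:
            continue
        first = offset >> order
        last = (offset + length - 1) >> order  # a write may span object boundaries
        touched.update(range(first, last + 1))
    return touched


if __name__ == "__main__":
    MiB = 1024 * 1024
    # Two small writes to the 1 GiB volume (order 22, 4 MiB objects)
    for no in sorted(objects_touched([(0, 4096), (10 * MiB, 4096)], 22)):
        print(data_object_name("rbd_data.149a77b0d206a", no))
```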

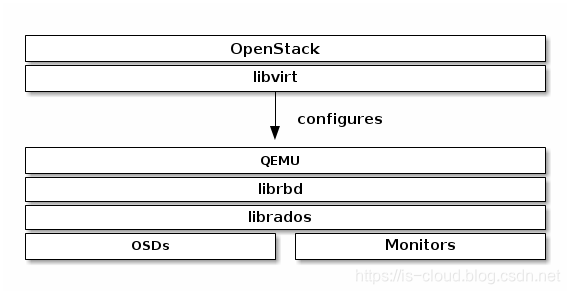

Integrating Nova

Strictly speaking, this step integrates QEMU-KVM/libvirt rather than Nova itself, because librbd has long been natively integrated into them.

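Nova provides the compute service for OpenStack. Integrating it with Ceph is mainly about storing the instances' system disks in the Ceph cluster. When both Glance and Nova use Ceph as their backend, an instance's system disk is essentially a copy-on-write clone of the RBD image uploaded through Glance. If Glance is not backed by Ceph, Nova must first download the image from the Glance backend and import it into Ceph RBD before the instance can boot. RBD clones are not confined to one pool: a child image in pool vms can use a protected snapshot of an image in pool images as its parent. This is why client.cinder was granted access to pool images earlier.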
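To illustrate the copy-on-write behaviour described above, here is a toy model, not librbd. A clone starts with no objects of its own, and reads of missing objects fall through to the parent. The first write to an object copies the parent's data into the clone ("copy-up") before modifying it. RBD also works at object granularity, but the class and its logic are a simplified, hypothetical sketch.

```python
from typing import Optional


class CowImage:
    """Toy copy-on-write image: object number -> bytes, with an optional read-only parent."""

    def __init__(self, object_size: int, parent: Optional["CowImage"] = None):
        self.object_size = object_size
        self.parent = parent
        self.objects: dict[int, bytearray] = {}

    def read_object(self, no: int) -> bytes:
        if no in self.objects:
            return bytes(self.objects[no])
        if self.parent is not None:
            return self.parent.read_object(no)  # fall through to the parent
        return bytes(self.object_size)  # never written: reads as zeros

    def write(self, no: int, offset: int, data: bytes) -> None:
        if offset < 0 or offset + len(data) > self.object_size:
            raise ValueError("write must stay inside one object")
        if no not in self.objects:
            # copy-up: materialise the object from the parent before modifying it
            self.objects[no] = bytearray(self.read_object(no))
        self.objects[no][offset:offset + len(data)] = data


if __name__ == "__main__":
    base = CowImage(8)
    base.write(0, 0, b"BASEDATA")
    clone = CowImage(8, parent=base)
    clone.write(0, 0, b"XY")
    print(clone.read_object(0), base.read_object(0))  # b'XYSEDATA' b'BASEDATA'
```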

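NOTE: There is no need to check for existing VMs before integrating. Because both the Controller and the Compute node act as compute nodes here, the following configuration must be applied on both.

On both nodes, modify the Ceph client configuration to enable the RBD client cache and the admin socket. This improves performance and makes troubleshooting easier: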

```ini
# /etc/ceph/ceph.conf
[client]
rbd cache = true
rbd cache writethrough until flush = true
admin socket = /var/run/ceph/guests/$cluster-$type.$id.$pid.$cctid.asok
log file = /var/log/qemu/qemu-guest-$pid.log
rbd concurrent management ops = 20
```

```bash
mkdir -p /var/run/ceph/guests/ /var/log/qemu/
chown qemu:qemu /var/run/ceph/guests /var/log/qemu/
```
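The admin socket and log file paths use Ceph metavariables ($cluster, $type, $id, $pid, $cctid) that are expanded for each client process. That is why socket and log file names contain the client name, a PID and a context ID. The sketch below shows the expansion with Python's `string.Template`, whose `$name` syntax happens to match. Ceph performs this substitution internally. The PID and cctid values are taken from the paths used later in this article.

```python
from string import Template

ADMIN_SOCKET = "/var/run/ceph/guests/$cluster-$type.$id.$pid.$cctid.asok"
LOG_FILE = "/var/log/qemu/qemu-guest-$pid.log"


def expand(template: str, **values: str) -> str:
    """Substitute Ceph-style $metavariables; raises KeyError if one is missing."""
    return Template(template).substitute(**values)


if __name__ == "__main__":
    print(expand(ADMIN_SOCKET, cluster="ceph", type="client", id="cinder",
                 pid="19195", cctid="32310016"))
    print(expand(LOG_FILE, pid="11843"))
```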

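Modify the Nova configuration on each node. Only rbd_secret_uuid differs, because it must match the libvirt secret defined on that node. Restart openstack-nova-compute afterwards: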

- Controller

```ini
# /etc/nova/nova.conf
...
[libvirt]
virt_type = qemu
images_type = rbd
images_rbd_pool = vms
images_rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_user = cinder
rbd_secret_uuid = 4810c760-dc42-4e5f-9d41-7346db7d7da2
disk_cachemodes="network=writeback"
```

- Compute

```ini
# /etc/nova/nova.conf
...
[libvirt]
virt_type = qemu
images_type = rbd
images_rbd_pool = vms
images_rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_user = cinder
rbd_secret_uuid = 457eb676-33da-42ec-9a8c-9293d545c337
disk_cachemodes="network=writeback"
```

Boot an instance:

```bash
[root@controller ~]# openstack server create --image c72488c0-4dfc-4610-888e-914f6dc095b9 --flavor 66ddc38d-452a-40b6-a0f3-f867658754ff --nic net-id=f31b2060-ccc0-457a-948c-d805a7680faf VM1
+-------------------------------------+-----------------------------------------------+
| Field                               | Value                                         |
+-------------------------------------+-----------------------------------------------+
| OS-DCF:diskConfig                   | MANUAL                                        |
| OS-EXT-AZ:availability_zone         |                                               |
| OS-EXT-SRV-ATTR:host                | None                                          |
| OS-EXT-SRV-ATTR:hypervisor_hostname | None                                          |
| OS-EXT-SRV-ATTR:instance_name       |                                               |
| OS-EXT-STS:power_state              | NOSTATE                                       |
| OS-EXT-STS:task_state               | scheduling                                    |
| OS-EXT-STS:vm_state                 | building                                      |
| OS-SRV-USG:launched_at              | None                                          |
| OS-SRV-USG:terminated_at            | None                                          |
| accessIPv4                          |                                               |
| accessIPv6                          |                                               |
| addresses                           |                                               |
| adminPass                           | 8csFJ7fM6hhP                                  |
| config_drive                        |                                               |
| created                             | 2019-04-25T11:34:36Z                          |
| flavor                              | mini (66ddc38d-452a-40b6-a0f3-f867658754ff)   |
| hostId                              |                                               |
| id                                  | b10b570e-07f9-4fba-864c-52f02f6b9922          |
| image                               | cirros (c72488c0-4dfc-4610-888e-914f6dc095b9) |
| key_name                            | None                                          |
| name                                | VM1                                           |
| progress                            | 0                                             |
| project_id                          | a2b55e37121042a1862275a9bc9b0223              |
| properties                          |                                               |
| security_groups                     | name='default'                                |
| status                              | BUILD                                         |
| updated                             | 2019-04-25T11:34:37Z                          |
| user_id                             | 92602c24daa24f019f05ecb95f1ce68e              |
| volumes_attached                    |                                               |
+-------------------------------------+-----------------------------------------------+
[root@controller ~]# openstack server list
+--------------------------------------+------+--------+---------------------------+--------+--------+
| ID                                   | Name | Status | Networks                  | Image  | Flavor |
+--------------------------------------+------+--------+---------------------------+--------+--------+
| b10b570e-07f9-4fba-864c-52f02f6b9922 | VM1  | ACTIVE | vlan-net-100=192.168.1.20 | cirros | mini   |
+--------------------------------------+------+--------+---------------------------+--------+--------+
```

View the libvirt domain XML of the instance:

```bash
[root@compute ~]# virsh dumpxml instance-00000023
...
<source protocol='rbd' name='vms/b10b570e-07f9-4fba-864c-52f02f6b9922_disk'>
  <host name='172.18.22.234' port='6789'/>
  <host name='172.18.22.235' port='6789'/>
  <host name='172.18.22.236' port='6789'/>
</source>
```
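The `<source protocol='rbd'>` element tells QEMU to open the disk through librbd instead of a local file, using the listed monitors. The sketch below parses such a fragment with `xml.etree.ElementTree` and returns the pool, the image name and the monitor addresses. It wraps the fragment printed above in a `<disk type='network' device='disk'>` element, which is how libvirt describes RBD disks. The other child elements of a real disk definition are omitted, and the helper name is hypothetical.

```python
import xml.etree.ElementTree as ET

DISK_XML = """
<disk type='network' device='disk'>
  <source protocol='rbd' name='vms/b10b570e-07f9-4fba-864c-52f02f6b9922_disk'>
    <host name='172.18.22.234' port='6789'/>
    <host name='172.18.22.235' port='6789'/>
    <host name='172.18.22.236' port='6789'/>
  </source>
</disk>
"""


def rbd_disk_info(disk_xml: str) -> tuple[str, str, list[tuple[str, int]]]:
    """Return (pool, image, [(monitor_host, port), ...]) for an RBD-backed disk."""
    disk = ET.fromstring(disk_xml.strip())
    source = disk.find("source")
    if source is None or source.get("protocol") != "rbd":
        raise ValueError("not an RBD-backed disk")
    pool, image = source.get("name").split("/", 1)
    hosts = [(h.get("name"), int(h.get("port"))) for h in source.findall("host")]
    return pool, image, hosts


if __name__ == "__main__":
    print(rbd_disk_info(DISK_XML))
```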

Access the Ceph client admin socket:

```bash
ceph daemon /var/run/ceph/ceph-client.cinder.19195.32310016.asok help
```

View the QEMU log:

```bash
tailf /var/log/qemu/qemu-guest-11843.log
```

View the status of pool vms:

```bash
[root@ceph-node1 ~]# rbd ls vms
b10b570e-07f9-4fba-864c-52f02f6b9922_disk
[root@ceph-node1 ~]# rbd info vms/b10b570e-07f9-4fba-864c-52f02f6b9922_disk
rbd image 'b10b570e-07f9-4fba-864c-52f02f6b9922_disk':
	size 1 GiB in 256 objects
	order 22 (4 MiB objects)
	id: 14ba06b8b4567
	block_name_prefix: rbd_data.14ba06b8b4567
	format: 2
	features: layering
	op_features:
	flags:
	create_timestamp: Thu Apr 25 07:34:48 2019
[root@ceph-node1 ~]# rados ls -p vms
rbd_data.14ba06b8b4567.0000000000000003
rbd_data.14ba06b8b4567.0000000000000002
rbd_data.14ba06b8b4567.0000000000000005
rbd_data.14ba06b8b4567.0000000000000000
rbd_directory
rbd_header.14ba06b8b4567
rbd_info
rbd_id.b10b570e-07f9-4fba-864c-52f02f6b9922_disk
rbd_data.14ba06b8b4567.0000000000000006
rbd_data.14ba06b8b4567.0000000000000001
rbd_data.14ba06b8b4567.0000000000000004
rbd_data.14ba06b8b4567.0000000000000007
```

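As shown, the block device {nova_instance_uuid}_disk is an RBD image without a parent: the Glance image was copied from pool images into pool vms rather than cloned. Nova clones only when the image's disk_format is raw and Glance exposes the image's RBD location, for example through show_image_direct_url = True in the [DEFAULT] section of glance-api.conf. Otherwise Nova downloads the image and imports it into pool vms. The objects of {nova_instance_uuid}_disk also grow gradually as the VM runs, up to the full provisioned size of the disk.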
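To estimate how much of the 1 GiB disk has actually been allocated, count the rbd_data objects that share the image's block_name_prefix in the `rados ls -p vms` output, then multiply by the object size. The sketch below does this for the listing shown above. The result is an upper bound, because objects can be only partially written. The helper names are hypothetical.

```python
# `rados ls -p vms` output copied from the listing above
RADOS_LS_VMS = """
rbd_data.14ba06b8b4567.0000000000000003
rbd_data.14ba06b8b4567.0000000000000002
rbd_data.14ba06b8b4567.0000000000000005
rbd_data.14ba06b8b4567.0000000000000000
rbd_directory
rbd_header.14ba06b8b4567
rbd_info
rbd_id.b10b570e-07f9-4fba-864c-52f02f6b9922_disk
rbd_data.14ba06b8b4567.0000000000000006
rbd_data.14ba06b8b4567.0000000000000001
rbd_data.14ba06b8b4567.0000000000000004
rbd_data.14ba06b8b4567.0000000000000007
"""


def data_objects(rados_ls_output: str, block_name_prefix: str) -> list[str]:
    """Sorted names of the data objects that belong to one RBD image."""
    wanted = block_name_prefix + "."
    return sorted(n for n in rados_ls_output.split() if n.startswith(wanted))


def allocated_upper_bound(rados_ls_output: str, block_name_prefix: str, order: int) -> int:
    """Bytes covered by existing data objects; real usage can be lower."""
    return len(data_objects(rados_ls_output, block_name_prefix)) << order


if __name__ == "__main__":
    used = allocated_upper_bound(RADOS_LS_VMS, "rbd_data.14ba06b8b4567", 22)
    print(used // (1024 * 1024), "MiB")  # 32 MiB
```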
