Python Web Scraping for Beginners: Hands-On Project (Part 2)

2019-04-11 18:49


- Scraping dynamic data

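The first project scraped static data. Static scraping often ends up returning no data, so in practice dynamic data collection is what we use more. We'll stick with job listings on Lagou (拉勾网): clicking 机器学习 (Machine Learning) on the homepage leads to a static page, but searching for 机器学习 leads to a dynamic page, whose job list is fetched by a separate Ajax request that returns JSON.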

1. First, import the required libraries.

```python
import json
import time

import requests
from bs4 import BeautifulSoup
import pandas as pd
```

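2. Define the main scraping function.

As with static data collection, the first step is to set the request headers:

```python
# Main scraping function
def lagou_dynamic_crawl():
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3650.400 QQBrowser/10.4.3341.400',
        'Host': 'www.lagou.com',
        # The search results page for 机器学习 (percent-encoded)
        'Referer': 'https://www.lagou.com/jobs/list_%E6%9C%BA%E5%99%A8%E5%AD%A6%E4%B9%A0?labelWords=&fromSearch=true&suginput=',
        'X-Anit-Forge-Code': '0',
        # A value of None means requests does not send this header at all
        'X-Anit-Forge-Token': None,
        # Marks the request as an Ajax (XHR) call, as the browser does
        'X-Requested-With': 'XMLHttpRequest',
        # Cookie copied from the browser (partly elided in the original post)
        'Cookie': 'user_trace_token=20190329130619-9fcf5ee7-dcc5-4a9b-b82e-53a0eba6862c;...;SEARCH_ID=ede1206d10e3492eb610370457ddeda8',
    }
```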
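The percent-encoded parts of the Referer (and of the Ajax URL requested in the loop) are simply the UTF-8 encoding of the search keyword 机器学习 and the city 上海 (Shanghai). A minimal sketch using only the standard library shows how to build them for another keyword or city; the helper names `build_referer` and `build_ajax_url` are hypothetical, and the URL patterns are copied from the code in this post rather than from any documented API.

```python
from urllib.parse import quote


def build_referer(keyword):
    # Same pattern as the Referer header, with the keyword percent-encoded as UTF-8
    return ('https://www.lagou.com/jobs/list_' + quote(keyword)
            + '?labelWords=&fromSearch=true&suginput=')


def build_ajax_url(city):
    # Same pattern as the positionAjax.json URL requested in the loop
    return ('https://www.lagou.com/jobs/positionAjax.json?city=' + quote(city)
            + '&needAddtionalResult=false')


if __name__ == '__main__':
    print(build_referer('机器学习'))
    print(build_ajax_url('上海'))
```

Searching for a different keyword would mean changing both the Referer and the `kd` form field; whether the site accepts such combinations has not been tested here.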

然后查看页数,构造循环:

#创建一个职位列表容器

position = []

#6页循环遍历抓取

for page in range(1,7):

print('正在抓取第{}页...'.format(page))

#构建请求表单参数

params = {

'first' : 'true',

'pn' : page,

'kd' : '机器学习'

}

#构造请求并返回结果

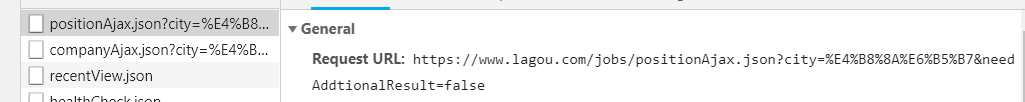

result = requests.post('https://www.lagou.com/jobs/positionAjax.json?city=%E4%B8%8A%E6%B5%B7&needAddtionalResult=false',

headers=headers,data=params)
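`requests` form-encodes the `data` dictionary before sending it, so each page's POST body is an ordinary `application/x-www-form-urlencoded` string. The sketch below reproduces the six bodies with `urllib.parse.urlencode`, which should match what the browser's developer tools show for the Ajax request; the helpers `page_params` and `form_body` are hypothetical and simply mirror the `params` dictionary in the loop.

```python
from urllib.parse import urlencode


def page_params(page, keyword='机器学习'):
    # Same fields as the params dict built inside the crawl loop
    return {'first': 'true', 'pn': page, 'kd': keyword}


def form_body(params):
    # The application/x-www-form-urlencoded string that data=params becomes
    return urlencode(params)


if __name__ == '__main__':
    for page in range(1, 7):
        print(form_body(page_params(page)))
```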

Then parse the response and loop over the job postings on each page:

```python
        # Convert the response to JSON
        json_result = json.loads(result.text)
        # Navigate the JSON structure to the list of postings
        position_info = json_result['content']['positionResult']['result']
        # For each posting on this page, also scrape its detail page
        for position in position_info:
            position_dict = {
                'position_name': position['positionName'],
                'work_year': position['workYear'],
                'education': position['education'],
                'salary': position['salary'],
                'city': position['city'],
                'company_name': position['companyFullName'],
                'address': position['businessZones'],
                'label': position['companyLabelList'],
                'stage': position['financeStage'],
                'size': position['companySize'],
                'advantage': position['positionAdvantage'],
                'industry': position['industryField'],
                'industryLables': position['industryLabels'],
            }
            # Get the job ID
            position_id = position['positionId']
            # Use the job ID to fetch the job description (JD)
            position_dict['position_detail'] = recruit_detail(position_id)
            positions.append(position_dict)
        # Pause between result pages
        time.sleep(8)
    print('All data collected.')
    return positions
```
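The `json_result['content']['positionResult']['result']` lookup raises `KeyError` whenever the server replies with something other than a normal result page; a rejected or throttled request is the likely case, though exactly what the site returns then is an assumption here. A minimal sketch of a more defensive parser, relying only on the nesting used in this post; `extract_positions` and `to_row` are hypothetical helpers, and the sample payload is made up and contains only a few of the fields read above.

```python
import json


def extract_positions(text):
    """Return the list of postings, or [] if the expected keys are missing."""
    data = json.loads(text)
    if not isinstance(data, dict):
        return []
    # Walk content -> positionResult -> result without raising KeyError
    content = data.get('content') or {}
    position_result = content.get('positionResult') or {}
    result = position_result.get('result')
    return result if isinstance(result, list) else []


def to_row(position):
    # A few of the fields copied in lagou_dynamic_crawl; missing keys become None
    return {
        'position_name': position.get('positionName'),
        'salary': position.get('salary'),
        'position_id': position.get('positionId'),
    }


if __name__ == '__main__':
    # Made-up payload with the same nesting as the response used in the post
    sample = json.dumps({'content': {'positionResult': {'result': [
        {'positionName': '机器学习工程师', 'salary': '20k-40k', 'positionId': 123},
    ]}}})
    print([to_row(p) for p in extract_positions(sample)])
    print(extract_positions('{"success": false}'))  # an unexpected reply gives []
```

Inside the crawl loop, `extract_positions(result.text)` returning an empty list is a good moment to print `result.text` and slow down, instead of letting the script stop with a `KeyError`.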

3. Define the function that scrapes the job description (the same as in the static-data project).

```python
# Scrape the job description from a posting's detail page
def recruit_detail(position_id):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3650.400 QQBrowser/10.4.3341.400',
        'Host': 'www.lagou.com',
        'Referer': 'https://www.lagou.com/jobs/list_%E6%9C%BA%E5%99%A8%E5%AD%A6%E4%B9%A0?labelWords=&fromSearch=true&suginput=',
        'Upgrade-Insecure-Requests': '1',
        'Cookie': 'user_trace_token=20190329130619-9fcf5ee7-dcc5-4a9b-b82e-53a0eba6862c;...; SEARCH_ID=ede1206d10e3492eb610370457ddeda8',
    }
    url = 'https://www.lagou.com/jobs/%s.html' % position_id
    result = requests.get(url, headers=headers)
    # Wait between detail-page requests
    time.sleep(10)
    # Parse the job requirements text
    soup = BeautifulSoup(result.text, 'html.parser')
    job_jd = soup.find(class_='job_bt')
    # Testing showed that some postings have no description,
    # so handle the missing case explicitly
    if job_jd is not None:
        job_jd = job_jd.text
    else:
        job_jd = 'null'
    return job_jd
```
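To check the `job_bt` extraction and the empty-description fallback offline, without BeautifulSoup, here is a sketch that does the same job with the standard library's `html.parser`. This is a swapped-in technique, not the author's code: the class `JobBtExtractor`, the function `job_description` and the sample HTML are hypothetical, and unlike BeautifulSoup it assumes every non-void tag inside the element is properly closed.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change the depth count
VOID_TAGS = {'area', 'base', 'br', 'col', 'embed', 'hr', 'img', 'input',
             'link', 'meta', 'param', 'source', 'track', 'wbr'}


class JobBtExtractor(HTMLParser):
    """Collects the text inside the first element whose class list contains 'job_bt'."""

    def __init__(self):
        super().__init__()
        self.depth = 0      # > 0 while the parser is inside the job_bt element
        self.found = False
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif not self.found and 'job_bt' in (dict(attrs).get('class') or '').split():
            self.found = True
            self.depth = 1

    def handle_startendtag(self, tag, attrs):
        # Self-closing tags such as <br/> hold no text and do not nest
        pass

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


def job_description(html):
    parser = JobBtExtractor()
    parser.feed(html)
    parser.close()
    # Mirror recruit_detail: fall back to the string 'null' when the element is missing
    return ''.join(parser.parts) if parser.found else 'null'
```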

Complete code:

```python
#!/usr/bin/env python
# -*- coding: UTF-8 -*-
import json
import time

import requests
from bs4 import BeautifulSoup
import pandas as pd


# Main scraping function
def lagou_dynamic_crawl():
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3650.400 QQBrowser/10.4.3341.400',
        'Host': 'www.lagou.com',
        'Referer': 'https://www.lagou.com/jobs/list_%E6%9C%BA%E5%99%A8%E5%AD%A6%E4%B9%A0?labelWords=&fromSearch=true&suginput=',
        'X-Anit-Forge-Code': '0',
        'X-Anit-Forge-Token': None,
        'X-Requested-With': 'XMLHttpRequest',
        'Cookie': 'user_trace_token=20190329130619-9fcf5ee7-dcc5-4a9b-b82e-53a0eba6862c; ...; SEARCH_ID=ede1206d10e3492eb610370457ddeda8',
    }
    # Container for the scraped job postings
    positions = []
    # Loop over the 6 result pages
    for page in range(1, 7):
        print('Scraping page {}...'.format(page))
        # Form parameters for the POST request
        params = {
            'first': 'true',
            'pn': page,
            'kd': '机器学习',
        }
        # Send the request and get the response
        result = requests.post(
            'https://www.lagou.com/jobs/positionAjax.json?city=%E4%B8%8A%E6%B5%B7&needAddtionalResult=false',
            headers=headers, data=params)
        # Convert the response to JSON
        json_result = json.loads(result.text)
        # Navigate the JSON structure to the list of postings
        position_info = json_result['content']['positionResult']['result']
        # For each posting on this page, also scrape its detail page
        for position in position_info:
            position_dict = {
                'position_name': position['positionName'],
                'work_year': position['workYear'],
                'education': position['education'],
                'salary': position['salary'],
                'city': position['city'],
                'company_name': position['companyFullName'],
                'address': position['businessZones'],
                'label': position['companyLabelList'],
                'stage': position['financeStage'],
                'size': position['companySize'],
                'advantage': position['positionAdvantage'],
                'industry': position['industryField'],
                'industryLables': position['industryLabels'],
            }
            # Get the job ID
            position_id = position['positionId']
            # Use the job ID to fetch the job description (JD)
            position_dict['position_detail'] = recruit_detail(position_id)
            positions.append(position_dict)
        # Pause between result pages
        time.sleep(8)
    print('All data collected.')
    return positions


# Scrape the job description from a posting's detail page
def recruit_detail(position_id):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3650.400 QQBrowser/10.4.3341.400',
        'Host': 'www.lagou.com',
        'Referer': 'https://www.lagou.com/jobs/list_%E6%9C%BA%E5%99%A8%E5%AD%A6%E4%B9%A0?labelWords=&fromSearch=true&suginput=',
        'Upgrade-Insecure-Requests': '1',
        'Cookie': 'user_trace_token=20190329130619-9fcf5ee7-dcc5-4a9b-b82e-53a0eba6862c; ...;SEARCH_ID=ede1206d10e3492eb610370457ddeda8',
    }
    url = 'https://www.lagou.com/jobs/%s.html' % position_id
    result = requests.get(url, headers=headers)
    # Wait between detail-page requests
    time.sleep(10)
    # Parse the job requirements text
    soup = BeautifulSoup(result.text, 'html.parser')
    job_jd = soup.find(class_='job_bt')
    # Testing showed that some postings have no description,
    # so handle the missing case explicitly
    if job_jd is not None:
        job_jd = job_jd.text
    else:
        job_jd = 'null'
    return job_jd


if __name__ == '__main__':
    positions = lagou_dynamic_crawl()
```
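The script imports pandas but never uses it, and `positions` is discarded when the script ends. With pandas, `pd.DataFrame(positions).to_csv('lagou_ml_jobs.csv', index=False, encoding='utf-8-sig')` is one way to keep the data; the sketch below does the same with the standard library's `csv` module. The helper `save_positions` and the file names are hypothetical; `utf-8-sig` writes a byte-order mark so that Excel displays the Chinese text correctly.

```python
import csv


def save_positions(positions, path):
    """Write a list of position dicts to CSV and return the number of rows written."""
    if not positions:
        return 0
    # Use the keys of the first record as the header row
    fieldnames = list(positions[0].keys())
    with open(path, 'w', newline='', encoding='utf-8-sig') as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        # List values such as 'label' or 'address' are written via str()
        writer.writerows(positions)
    return len(positions)
```

Usage: add `save_positions(positions, 'lagou_ml_jobs.csv')` after the call to `lagou_dynamic_crawl()`.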
