TF NN Regression: Using an NN (ReLU) to Predict One Dependent Variable from Three Independent Variables

2018-11-27 03:13


Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/qq_41185868/article/details/84559637

TF NN: using a TF neural network to solve a regression problem that predicts one dependent variable from three independent variables

TF NN regression: using an NN (ReLU) to predict the value of a new sample from 30 training samples (each sample has 18 columns, including the label)

Experimental data

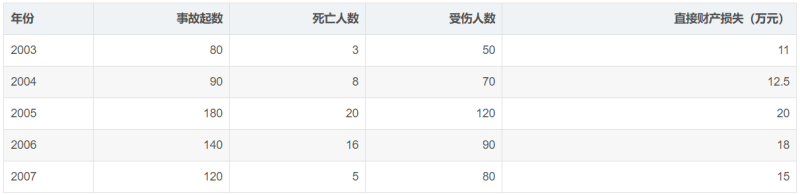

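Note: a regression model is built from the data of the first four years (three input variables and one target per year) and then used to predict the fifth year.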
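With only four training years, even an ordinary least-squares linear model with an intercept (four parameters) passes through all four points exactly. As a comparison baseline that is not part of the original post, here is a small NumPy sketch; the feature and target values are copied from the implementation code, everything else is my own choice.

```python
import numpy as np

# Years 1-4 (three inputs each) and their targets, copied from the post's code
x = np.array([[80, 3, 50], [90, 8, 70], [180, 20, 120], [140, 16, 90]], dtype=float)
y = np.array([11, 12.5, 20, 18], dtype=float)
x_pred = np.array([[120, 5, 85]], dtype=float)  # year 5

# Append an intercept column and solve min ||A w - y||^2
A = np.hstack([x, np.ones((x.shape[0], 1))])
w, *_ = np.linalg.lstsq(A, y, rcond=None)

fitted = A @ w
pred = np.hstack([x_pred, np.ones((1, 1))]) @ w
print("residuals:", y - fitted)  # essentially zero: 4 equations, 4 unknowns
print("year-5 prediction:", pred[0])
```

The residuals are essentially zero and the year-5 value comes out at about 11.56 (exactly 497/43). Because this is exact interpolation of four points, the number says little about generalization; it is only a reference point for the network's prediction.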

Design approach
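Reading the implementation code, the model is one hidden layer of 100 ReLU units followed by a single linear output unit, trained on mean squared error with plain gradient descent (learning rate 0.1). Written out for the three-variable version (the symbols are my notation, not the post's; $\theta$ stands for all trainable parameters and $n = 4$ training rows):

```latex
\begin{aligned}
h &= \mathrm{ReLU}(x W_1 + b_1), && x \in \mathbb{R}^{1\times 3},\ W_1 \in \mathbb{R}^{3\times 100},\ b_1 \in \mathbb{R}^{100}\\
\hat{y} &= h W_2 + b_2, && W_2 \in \mathbb{R}^{100\times 1},\ b_2 \in \mathbb{R}\\
L &= \frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2, && \theta \leftarrow \theta - 0.1\,\nabla_\theta L\\
\#\text{params} &= (3\cdot 100 + 100) + (100\cdot 1 + 1) = 501
\end{aligned}
```

So 501 trainable parameters are fitted to four training rows; in the 17-feature CSV version the first layer grows to $17\cdot 100 + 100$, for 1901 parameters in total.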

Output

Three-variable run:

[code]loss is: 913.6623
loss is: 781206160000.0
loss is: 9006693000.0
loss is: 103840136.0
loss is: 1197209.2
loss is: 13816.644
loss is: 173.0564
loss is: 15.756571
loss is: 13.9430275
loss is: 13.922119
loss is: 13.921878
loss is: 13.921875
loss is: 13.921875
loss is: 13.921875
loss is: 13.921875
input is:[120, 5, 85]
output is:15.375002

30-sample run (17 input features per sample):

[code]y_list is [[65.0], [64.0], [64.0], [61.0], [59.0], [61.0], [62.0], [60.0], [61.0], [61.0], [59.0], [61.0], [61.0], [60.0], [60.0], [60.0], [59.0], [61.0], [60.0], [59.0], [60.0], [60.0], [59.0], [60.0], [60.0], [60.0], [64.0], [61.0], [59.0], [59.0]]
x_pred is [[6.0, 30.0, 0.5, 3.12, 3.13, 364.0, 452.0, 473.0, 1858.0, 1996.0, 2036.0, 0.23, 0.47, 0.5, 0.0, 146.0, 149.0]]
loss is: 747890.9
loss is: 6.603946e+26
loss is: 7.613832e+24
loss is: 8.778148e+22
loss is: 1.0120521e+21
loss is: 1.1668169e+19
loss is: 1.3452473e+17
loss is: 1550963400000000.0
loss is: 17881387000000.0
loss is: 206158180000.0
loss is: 2376841700.0
loss is: 27403098.0
loss is: 315938.72
loss is: 3645.1162
loss is: 44.61735
loss is: 3.1063914
loss is: 2.627804
loss is: 2.6222868
loss is: 2.622223
loss is: 2.6222224
input is:[6.0, 30.0, 0.5, 3.12, 3.13, 364.0, 452.0, 473.0, 1858.0, 1996.0, 2036.0, 0.23, 0.47, 0.5, 0.0, 146.0, 149.0]
output is:60.66666
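Both runs end on a suspicious plateau: the three-variable run settles at loss 13.921875 with prediction 15.375002, and the 30-sample run at 2.6222224 with prediction 60.66666. For a constant prediction c, the MSE is minimized at c = mean(y), and that minimum equals the population variance of y, so the logged numbers can be checked directly against the targets. The helper name `constant_predictor_check` is hypothetical; the target values are copied from the code and from the printed `y_list`.

```python
import numpy as np


def constant_predictor_check(y):
    """Return (mean of y, MSE of always predicting that mean).

    The MSE of a constant prediction is smallest at the mean, and the
    smallest value equals the population variance of y.
    """
    y = np.asarray(y, dtype=np.float64).ravel()
    mean = y.mean()
    mse = np.mean((y - mean) ** 2)
    return float(mean), float(mse)


# Three-variable run: the four training targets
print(constant_predictor_check([11, 12.5, 20, 18]))  # (15.375, 13.921875)

# 30-sample run: the targets printed as y_list
y_list = [65, 64, 64, 61, 59, 61, 62, 60, 61, 61, 59, 61, 61, 60, 60,
          60, 59, 61, 60, 59, 60, 60, 59, 60, 60, 60, 64, 61, 59, 59]
print(constant_predictor_check(y_list))  # about 60.667 and 2.622
```

Both pairs match the logged final loss and prediction, so after the early loss blow-up the network's output no longer depends on its input: it returns the training mean for every sample. The most likely cause is that all hidden ReLU units went inactive ("dead ReLUs") during the divergence, leaving only the output bias to learn. In other words, the year-5 prediction of 15.375 is simply the average of the four training targets.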

Implementation code

[code]import numpy as np
# Written for the TensorFlow 1.x API (tf.placeholder / tf.layers / tf.Session).
# Under TF 2.x the usual shim is: import tensorflow.compat.v1 as tf; tf.disable_v2_behavior()
import tensorflow as tf

# Training data: years 1-4, three independent variables each
x = [[80, 3, 50], [90, 8, 70], [180, 20, 120], [140, 16, 90]]
# Targets must be 2-D ([n, 1]) to match the tf_y placeholder
y = [[11], [12.5], [20], [18]]
# y = [11,12.5,20,18]
# Sample to predict: year 5
x_pred = [[120, 5, 85]]

# Alternative: load the 18-column CSV (17 features + label in the last column)
# dataset = np.loadtxt("data/20181127test04.csv", delimiter=",")
# # split into input (X) and output (Y) variables
# x = dataset[0:7, 0:17]
# y = dataset[0:7, 17:18]       # keep 2-D so it matches tf_y's [None, 1] shape
# x_pred = dataset[7:8, 0:17]   # keep 2-D: a batch containing one sample
# # ...and change the tf_x placeholder shape below to [None, 17]

tf_x = tf.placeholder(tf.float32, [None, 3])  # input x
tf_y = tf.placeholder(tf.float32, [None, 1])  # input y
print(tf_x)

# neural network layers
l1 = tf.layers.dense(tf_x, 100, tf.nn.relu)        # hidden layer: 100 ReLU units
output = tf.layers.dense(l1, 1)                    # output layer: one linear unit
loss = tf.losses.mean_squared_error(tf_y, output)  # compute cost (MSE)
optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1)
train_op = optimizer.minimize(loss)

sess = tf.Session()                            # control training and others
sess.run(tf.global_variables_initializer())    # initialize var in graph

for step in range(150):
    # train and net output
    _, l, pred = sess.run([train_op, loss, output], {tf_x: x, tf_y: y})
    if step % 10 == 0:
        print('loss is: ' + str(l))
        # print('prediction is:' + str(pred))

output_pred = sess.run(output, {tf_x: x_pred})
print('input is:' + str(x_pred[0][:]))
print('output is:' + str(output_pred[0][0]))
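In both runs the logged loss explodes right after the first step (913.6623 at step 0, then 781206160000.0 at step 10 in the three-variable run); raw inputs ranging from single digits to the thousands, combined with `learning_rate=0.1`, are a plausible cause. A common remedy, which the post does not use, is to standardize inputs and targets before training. The sketch below re-implements the same dense(100, ReLU) to dense(1) network in plain NumPy, so it runs without TensorFlow, and adds z-score standardization. The function name `train_relu_regressor`, the seed, and the Glorot-uniform initialization (assumed to mirror the `tf.layers.dense` default) are my assumptions, and the numbers it prints are not the post's results.

```python
import numpy as np


def train_relu_regressor(x, y, hidden=100, lr=0.1, steps=150, seed=0):
    """Sketch: dense(hidden, ReLU) -> dense(1), full-batch gradient descent on MSE.

    Unlike the post's code, inputs and targets are z-score standardized first
    (an added assumption meant to keep gradient descent from diverging).
    Returns per-step training losses (in standardized units) and a predict function.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64).reshape(-1, 1)
    x_mean, x_std = x.mean(axis=0), x.std(axis=0)
    y_mean, y_std = y.mean(), y.std()
    xs = (x - x_mean) / x_std
    ys = (y - y_mean) / y_std

    # Glorot-uniform kernels and zero biases (assumed tf.layers.dense defaults)
    rng = np.random.default_rng(seed)
    n, n_in = xs.shape
    lim1 = np.sqrt(6.0 / (n_in + hidden))
    w1 = rng.uniform(-lim1, lim1, size=(n_in, hidden))
    b1 = np.zeros(hidden)
    lim2 = np.sqrt(6.0 / (hidden + 1))
    w2 = rng.uniform(-lim2, lim2, size=(hidden, 1))
    b2 = np.zeros(1)

    losses = []
    for _ in range(steps):
        # Forward pass
        z1 = xs @ w1 + b1
        h = np.maximum(z1, 0.0)
        err = h @ w2 + b2 - ys
        losses.append(float(np.mean(err ** 2)))
        # Backward pass for L = mean(err^2), using the pre-update weights
        d_out = 2.0 * err / n
        d_z1 = (d_out @ w2.T) * (z1 > 0)
        # Gradient-descent step
        w2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum(axis=0)
        w1 -= lr * (xs.T @ d_z1)
        b1 -= lr * d_z1.sum(axis=0)

    def predict(x_new):
        xn = (np.asarray(x_new, dtype=np.float64) - x_mean) / x_std
        out = np.maximum(xn @ w1 + b1, 0.0) @ w2 + b2
        return out * y_std + y_mean  # undo the target standardization

    return losses, predict


if __name__ == "__main__":
    x = [[80, 3, 50], [90, 8, 70], [180, 20, 120], [140, 16, 90]]
    y = [11, 12.5, 20, 18]
    losses, predict = train_relu_regressor(x, y)
    for step in range(0, len(losses), 10):
        print("loss is:", losses[step])  # standardized units, not comparable to the post's loss
    print("output is:", predict([[120, 5, 85]])[0, 0])
```

Standardizing the target as well means a constant mean prediction corresponds to a standardized loss of 1.0, so a final loss well below 1.0 indicates the network is actually using its inputs rather than collapsing to the mean. With only four training rows, any year-5 value it produces remains an extrapolation from very little data.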
