Android 6.0 Source Code Analysis: Camera2 HAL Analysis

2018-04-02 17:08

Reposted from https://blog.csdn.net/yangzhihuiguming/article/details/51831888

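The previous article gave a brief introduction to the Camera API 2.0 framework. Within that framework, the Camera HAL hides the low-level implementation details and exposes the corresponding interfaces to the upper layers. For the principles of the HAL itself, I find Lao Luo's (老罗) article Android硬件抽象层(HAL)概要介绍和学习计划 (an overview of the Android hardware abstraction layer and a study plan) very thorough, so they are not covered here. This article only analyzes the initialization of the Camera HAL and the related flows.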

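The articles in this Camera2 series are:

Android 6.0 Source Code Analysis: Introduction to Camera API 2.0

Android 6.0 Source Code Analysis: Camera2 HAL Analysis

Android 6.0 Source Code Analysis: Initialization Flow under Camera API 2.0

Android 6.0 Source Code Analysis: Preview Flow under Camera API 2.0

Android 6.0 Source Code Analysis: Capture Flow under Camera API 2.0

Android 6.0 Source Code Analysis: Video Flow under Camera API 2.0

Applications of Camera API 2.0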

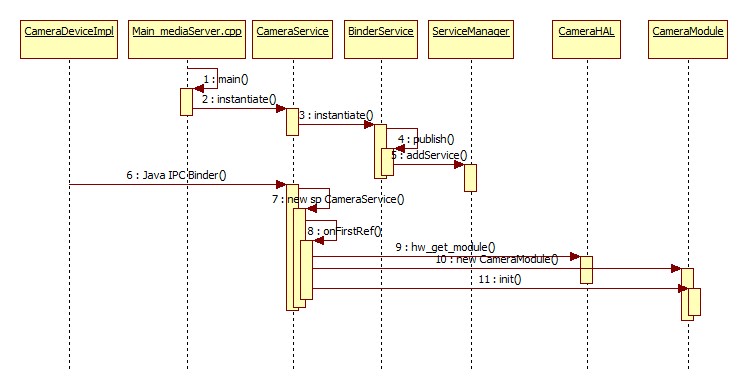

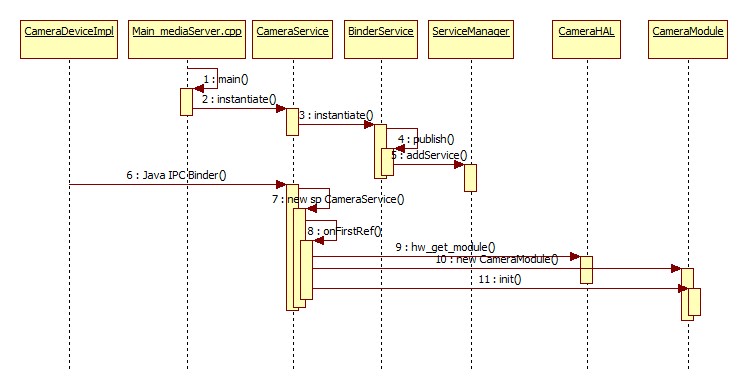

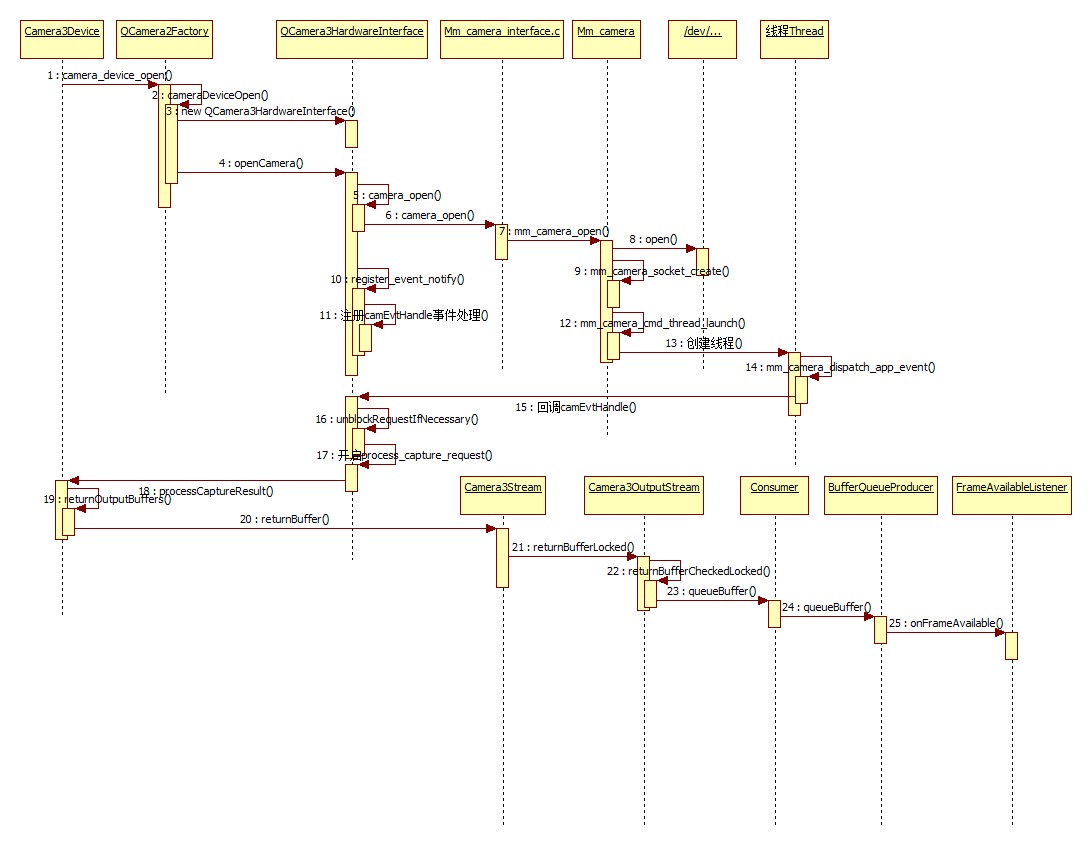

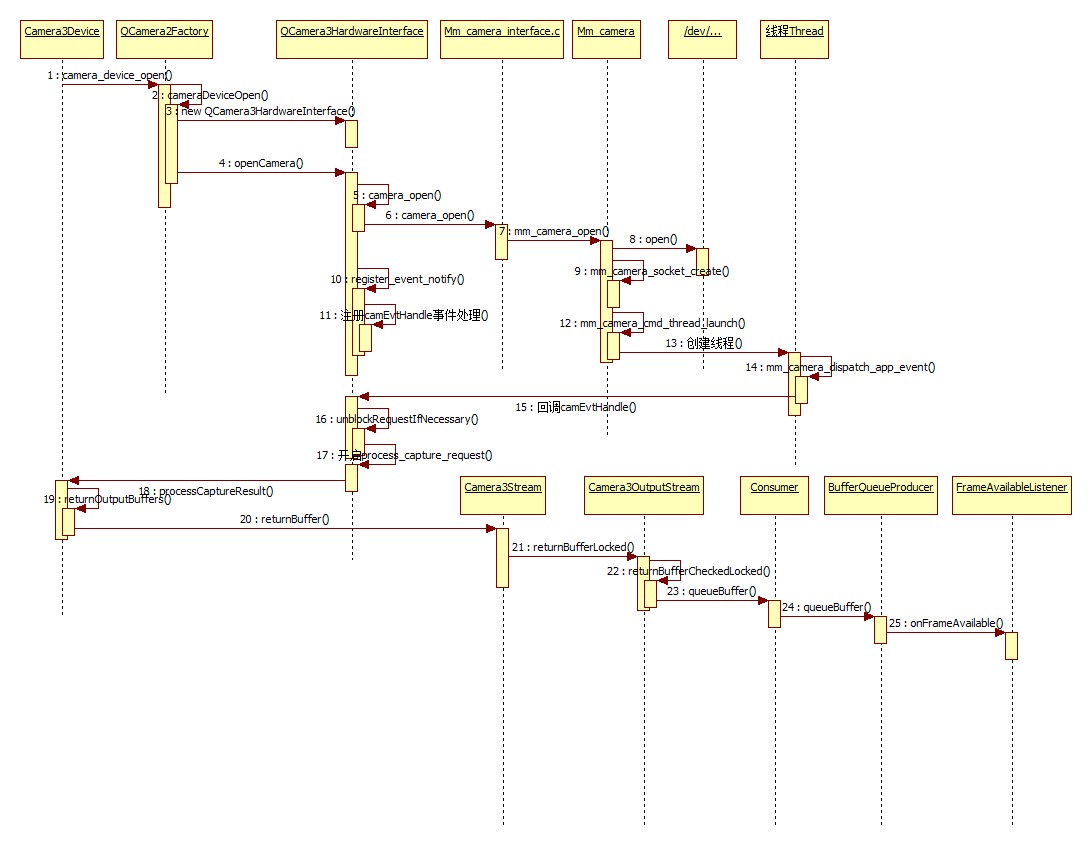

1. Initialization of the Camera HAL

The Camera HAL is first loaded during the initialization of the native CameraService, and that initialization starts in the main function of Main_mediaServer.cpp:

//Main_mediaServer.cpp
int main(int argc __unused, char** argv){
    …
    sp<ProcessState> proc(ProcessState::self());
    // Obtain the ServiceManager
    sp<IServiceManager> sm = defaultServiceManager();
    ALOGI("ServiceManager: %p", sm.get());
    AudioFlinger::instantiate();
    // Initialize the media player service
    MediaPlayerService::instantiate();
    // Initialize the resource manager service
    ResourceManagerService::instantiate();
    // Initialize the camera service
    CameraService::instantiate();
    // Initialize the audio policy service
    AudioPolicyService::instantiate();
    SoundTriggerHwService::instantiate();
    // Initialize the radio service
    RadioService::instantiate();
    registerExtensions();
    // Start the thread pool
    ProcessState::self()->startThreadPool();
    IPCThreadState::self()->joinThreadPool();
}

CameraService inherits from BinderService, and instantiate is also defined in BinderService. That method simply calls publish, so let us look at publish:

// BinderService.h
static status_t publish(bool allowIsolated = false) {
    sp<IServiceManager> sm(defaultServiceManager());
    // Add the service to the ServiceManager
    return sm->addService(String16(SERVICE::getServiceName()), new SERVICE(), allowIsolated);
}

This adds the CameraService service to the ServiceManager, which manages it from then on.
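The publish pattern can be illustrated outside Android. The following is a minimal sketch, not the Binder implementation: Registry, PublishedService and DemoCameraService are hypothetical names, and a std::map stands in for the ServiceManager. It only shows how a template base class can register an instance of the derived service under the name the derived class reports.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for a service object.
struct Service { virtual ~Service() = default; };

// Hypothetical stand-in for ServiceManager: a name -> service registry.
class Registry {
public:
    static Registry& instance() { static Registry r; return r; }
    // Returns false if a service with this name is already registered.
    bool addService(const std::string& name, std::shared_ptr<Service> s) {
        return services_.emplace(name, std::move(s)).second;
    }
    std::shared_ptr<Service> getService(const std::string& name) const {
        auto it = services_.find(name);
        return it == services_.end() ? nullptr : it->second;
    }
private:
    std::map<std::string, std::shared_ptr<Service>> services_;
};

// Template base with the same shape as BinderService<SERVICE>:
// instantiate() calls publish(), which registers a new SERVICE.
template <typename SERVICE>
class PublishedService : public Service {
public:
    static bool publish() {
        assert(SERVICE::getServiceName() != nullptr);
        return Registry::instance().addService(SERVICE::getServiceName(),
                                               std::make_shared<SERVICE>());
    }
    static void instantiate() { publish(); }
};

class DemoCameraService : public PublishedService<DemoCameraService> {
public:
    static const char* getServiceName() { return "demo.camera"; }
};
```

Calling DemoCameraService::instantiate() once makes the service retrievable by name from the registry, which mirrors what CameraService::instantiate() achieves through addService.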

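As described in the earlier article, Android 6.0 Source Code Analysis: Introduction to Camera API 2.0, the Java layer obtains this CameraService object through Binder IPC, and in the process an sp (strong pointer) to CameraService is initialized. sp is not analyzed further here; see Chapter 5 of 深入理解Android卷Ⅰ for details. When the newly constructed CameraService is first held by an sp, its onFirstRef method is called: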

//CameraService.cpp
void CameraService::onFirstRef()
{
    BnCameraService::onFirstRef();
    ...
    camera_module_t *rawModule;
    // Look up the camera_module_t by CAMERA_HARDWARE_MODULE_ID (the string "camera")
    int err = hw_get_module(CAMERA_HARDWARE_MODULE_ID,
            (const hw_module_t **)&rawModule);
    // Create the CameraModule object
    mModule = new CameraModule(rawModule);
    // Initialize the module
    err = mModule->init();
    ...
    // Ask the module for the number of cameras
    mNumberOfCameras = mModule->getNumberOfCameras();
    mNumberOfNormalCameras = mNumberOfCameras;
    // Initialize the flashlight
    mFlashlight = new CameraFlashlight(*mModule, *this);
    status_t res = mFlashlight->findFlashUnits();
    int latestStrangeCameraId = INT_MAX;
    for (int i = 0; i < mNumberOfCameras; i++) {
        // Build the camera ID
        String8 cameraId = String8::format("%d", i);
        struct camera_info info;
        bool haveInfo = true;
        // Get the camera info
        status_t rc = mModule->getCameraInfo(i, &info);
        ...
        // For module API version 2.4 or later, read the resource cost and conflicting devices
        if (mModule->getModuleApiVersion() >= CAMERA_MODULE_API_VERSION_2_4 && haveInfo) {
            cost = info.resource_cost;
            conflicting_devices = info.conflicting_devices;
            conflicting_devices_length = info.conflicting_devices_length;
        }
        // Add the conflicting devices to the conflict set
        std::set<String8> conflicting;
        for (size_t i = 0; i < conflicting_devices_length; i++) {
            conflicting.emplace(String8(conflicting_devices[i]));
        }
        ...
    }
    // For module API version 2.1 or later, set the callbacks
    if (mModule->getModuleApiVersion() >= CAMERA_MODULE_API_VERSION_2_1) {
        mModule->setCallbacks(this);
    }
    // For version 2.2 or later, set up the vendor tags
    if (mModule->getModuleApiVersion() >= CAMERA_MODULE_API_VERSION_2_2) {
        setUpVendorTags();
    }
    // Register this service with CameraDeviceFactory
    CameraDeviceFactory::registerService(this);
    CameraService::pingCameraServiceProxy();
}

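In onFirstRef, hw_get_module from the HAL framework first obtains the camera_module_t, which is wrapped in a CameraModule object and initialized. Several parameters are then set up, such as the number of cameras, the flashlight, and the callbacks. At this point the Camera2 HAL module has finished initializing. The initialization sequence diagram is given below: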

2. Analysis of the Camera HAL open flow

Reading the Android 6.0 source shows that it ships Qualcomm's camera implementation and camera library, and implements the corresponding Camera HAL interfaces for Qualcomm. A Qualcomm camera has a daemon process running in the background; the daemon is a middle layer between the application and the driver that translates ioctl calls (delegated processing). This section uses the camera open flow as an example to analyze how the Camera HAL works. After an application issues an open request for the hardware, the request is made through the Camera HAL, whose open entry point is defined in QCamera2Hal.cpp:

//QCamera2Hal.cpp
camera_module_t HAL_MODULE_INFO_SYM = {
    // Holds the common method information of the module
    common: camera_common,
    get_number_of_cameras: qcamera::QCamera2Factory::get_number_of_cameras,
    get_camera_info: qcamera::QCamera2Factory::get_camera_info,
    set_callbacks: qcamera::QCamera2Factory::set_callbacks,
    get_vendor_tag_ops: qcamera::QCamera3VendorTags::get_vendor_tag_ops,
    open_legacy: qcamera::QCamera2Factory::open_legacy,
    set_torch_mode: NULL,
    init : NULL,
    reserved: {0}
};
static hw_module_t camera_common = {
    tag: HARDWARE_MODULE_TAG,
    module_api_version: CAMERA_MODULE_API_VERSION_2_3,
    hal_api_version: HARDWARE_HAL_API_VERSION,
    id: CAMERA_HARDWARE_MODULE_ID,
    name: "QCamera Module",
    author: "Qualcomm Innovation Center Inc",
    // Its methods table binds the open interface
    methods: &qcamera::QCamera2Factory::mModuleMethods,
    dso: NULL,
    reserved: {0}
};
struct hw_module_methods_t QCamera2Factory::mModuleMethods = {
    // Binding of the open method
    open: QCamera2Factory::camera_device_open,
};

The open entry point of the Camera HAL layer is therefore the camera_device_open method:

// QCamera2Factory.cpp
int QCamera2Factory::camera_device_open(const struct hw_module_t *module, const char *id,
        struct hw_device_t **hw_device){
    ...
    return gQCamera2Factory->cameraDeviceOpen(atoi(id), hw_device);
}

It calls cameraDeviceOpen, and hw_device is the HAL-layer object behind the CameraDeviceImpl that is eventually returned to the application layer. Next, the cameraDeviceOpen method:

// QCamera2Factory.cpp
int QCamera2Factory::cameraDeviceOpen(int camera_id, struct hw_device_t **hw_device){
    ...
    // Camera2 uses Camera HAL version 3.0
    if ( mHalDescriptors[camera_id].device_version == CAMERA_DEVICE_API_VERSION_3_0 ) {
        // Create the QCamera3HardwareInterface object; its constructor binds
        // configure_streams, process_capture_request and the other device operations
        QCamera3HardwareInterface *hw = new QCamera3HardwareInterface(
                mHalDescriptors[camera_id].cameraId, mCallbacks);
        // Open the camera through QCamera3HardwareInterface
        rc = hw->openCamera(hw_device);
        ...
    } else if (mHalDescriptors[camera_id].device_version == CAMERA_DEVICE_API_VERSION_1_0) {
        // Device API version 1.0, handled by QCamera2HardwareInterface
        QCamera2HardwareInterface *hw = new QCamera2HardwareInterface((uint32_t)camera_id);
        rc = hw->openCamera(hw_device);
        ...
    } else {
        ...
    }
    return rc;
}

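This method has two key points. One is the creation of the QCamera3HardwareInterface object, which is the interface through which user space interacts with kernel space. The other is the call to its openCamera method to open the camera. Each is analyzed below.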

2.1 Analysis of the QCamera3HardwareInterface constructor

The constructor contains one key initialization, mCameraDevice.ops = &mCameraOps, which defines the interface for device operations:

//QCamera3HWI.cpp
camera3_device_ops_t QCamera3HardwareInterface::mCameraOps = {
    initialize: QCamera3HardwareInterface::initialize,
    // Configures how the stream data is handled
    configure_streams: QCamera3HardwareInterface::configure_streams,
    register_stream_buffers: NULL,
    construct_default_request_settings:
        QCamera3HardwareInterface::construct_default_request_settings,
    // Entry point for processing capture requests
    process_capture_request:
        QCamera3HardwareInterface::process_capture_request,
    get_metadata_vendor_tag_ops: NULL,
    dump: QCamera3HardwareInterface::dump,
    flush: QCamera3HardwareInterface::flush,
    reserved: {0},
};

Of these, configure_streams sets up the handling of the streams:

//QCamera3HWI.cpp
int QCamera3HardwareInterface::configure_streams(const struct camera3_device *device,
        camera3_stream_configuration_t *stream_list){
    // Recover the QCamera3HardwareInterface object
    QCamera3HardwareInterface *hw = reinterpret_cast<QCamera3HardwareInterface *>(device->priv);
    ...
    // Call its configureStreams to do the configuration
    int rc = hw->configureStreams(stream_list);
    ...
    return rc;
}

Following on to the configureStreams method:

//QCamera3HWI.cpp
int QCamera3HardwareInterface::configureStreams(camera3_stream_configuration_t *streamList){
    ...
    // Initialize the camera HAL version
    hal_version = CAM_HAL_V3;
    ...
    // Begin configuring the streams
    ...
    // Reset the related channels to NULL
    if (mMetadataChannel) {
        delete mMetadataChannel;
        mMetadataChannel = NULL;
    }
    if (mSupportChannel) {
        delete mSupportChannel;
        mSupportChannel = NULL;
    }
    if (mAnalysisChannel) {
        delete mAnalysisChannel;
        mAnalysisChannel = NULL;
    }
    // Create the metadata channel
    mMetadataChannel = new QCamera3MetadataChannel(mCameraHandle->camera_handle,
            mCameraHandle->ops, captureResultCb, &gCamCapability[mCameraId]->padding_info,
            CAM_QCOM_FEATURE_NONE, this);
    ...
    // Initialize it
    rc = mMetadataChannel->initialize(IS_TYPE_NONE);
    ...
    // If hardware analysis is supported, create the channel for the analysis stream
    if (gCamCapability[mCameraId]->hw_analysis_supported) {
        mAnalysisChannel = new QCamera3SupportChannel(mCameraHandle->camera_handle,
                mCameraHandle->ops, &gCamCapability[mCameraId]->padding_info,
                CAM_QCOM_FEATURE_PP_SUPERSET_HAL3, CAM_STREAM_TYPE_ANALYSIS,
                &gCamCapability[mCameraId]->analysis_recommended_res, this);
        ...
    }
    bool isRawStreamRequested = false;
    // Clear the stream configuration info
    memset(&mStreamConfigInfo, 0, sizeof(cam_stream_size_info_t));
    // Allocate the channel objects for the requested streams
    for (size_t i = 0; i < streamList->num_streams; i++) {
        camera3_stream_t *newStream = streamList->streams[i];
        uint32_t stream_usage = newStream->usage;
        mStreamConfigInfo.stream_sizes[mStreamConfigInfo.num_streams].width =
                (int32_t)newStream->width;
        mStreamConfigInfo.stream_sizes[mStreamConfigInfo.num_streams].height =
                (int32_t)newStream->height;
        if ((newStream->stream_type == CAMERA3_STREAM_BIDIRECTIONAL ||
                newStream->usage & GRALLOC_USAGE_HW_CAMERA_ZSL) &&
                newStream->format == HAL_PIXEL_FORMAT_IMPLEMENTATION_DEFINED && jpegStream){
            mStreamConfigInfo.type[mStreamConfigInfo.num_streams] = CAM_STREAM_TYPE_SNAPSHOT;
            mStreamConfigInfo.postprocess_mask[mStreamConfigInfo.num_streams] =
                    CAM_QCOM_FEATURE_NONE;
        } else if(newStream->stream_type == CAMERA3_STREAM_INPUT) {
        } else {
            switch (newStream->format) {
                // Determine the stream type from the format for non-ZSL streams
                ...
            }
        }
        if (newStream->priv == NULL) {
            // Construct a channel for the new stream
            switch (newStream->stream_type) { // construct by stream type
            case CAMERA3_STREAM_INPUT:
                newStream->usage |= GRALLOC_USAGE_HW_CAMERA_READ;
                newStream->usage |= GRALLOC_USAGE_HW_CAMERA_WRITE;//WR for inplace algo's
                break;
            case CAMERA3_STREAM_BIDIRECTIONAL:
                ...
                break;
            case CAMERA3_STREAM_OUTPUT:
                ...
                break;
            default:
                break;
            }
            // Construct each kind of channel from the stream type and format determined above
            if (newStream->stream_type == CAMERA3_STREAM_OUTPUT ||
                    newStream->stream_type == CAMERA3_STREAM_BIDIRECTIONAL) {
                QCamera3Channel *channel = NULL;
                switch (newStream->format) {
                case HAL_PIXEL_FORMAT_IMPLEMENTATION_DEFINED:
                    /* use higher number of buffers for HFR mode */
                    ...
                    // Create the regular channel
                    channel = new QCamera3RegularChannel(mCameraHandle->camera_handle,
                            mCameraHandle->ops, captureResultCb,
                            &gCamCapability[mCameraId]->padding_info, this, newStream,
                            (cam_stream_type_t)mStreamConfigInfo.type[
                                    mStreamConfigInfo.num_streams],
                            mStreamConfigInfo.postprocess_mask[
                                    mStreamConfigInfo.num_streams],
                            mMetadataChannel, numBuffers);
                    ...
                    newStream->max_buffers = channel->getNumBuffers();
                    newStream->priv = channel;
                    break;
                case HAL_PIXEL_FORMAT_YCbCr_420_888:
                    // Create the YUV channel
                    ...
                    break;
                case HAL_PIXEL_FORMAT_RAW_OPAQUE:
                case HAL_PIXEL_FORMAT_RAW16:
                case HAL_PIXEL_FORMAT_RAW10:
                    // Create the raw channel
                    ...
                    break;
                case HAL_PIXEL_FORMAT_BLOB:
                    // Create the QCamera3PicChannel
                    ...
                    break;
                default:
                    break;
                }
            } else if (newStream->stream_type == CAMERA3_STREAM_INPUT) {
                newStream->max_buffers = MAX_INFLIGHT_REPROCESS_REQUESTS;
            } else {
            }
            for (List<stream_info_t*>::iterator it=mStreamInfo.begin();it != mStreamInfo.end();
                    it++) {
                if ((*it)->stream == newStream) {
                    (*it)->channel = (QCamera3Channel*) newStream->priv;
                    break;
                }
            }
        } else {
        }
        if (newStream->stream_type != CAMERA3_STREAM_INPUT)
            mStreamConfigInfo.num_streams++;
    }
    if (isZsl) {
        if (mPictureChannel) {
            mPictureChannel->overrideYuvSize(zslStream->width, zslStream->height);
        }
    } else if (mPictureChannel && m_bIs4KVideo) {
        mPictureChannel->overrideYuvSize(videoWidth, videoHeight);
    }
    //RAW DUMP channel
    if (mEnableRawDump && isRawStreamRequested == false){
        cam_dimension_t rawDumpSize;
        rawDumpSize = getMaxRawSize(mCameraId);
        mRawDumpChannel = new QCamera3RawDumpChannel(mCameraHandle->camera_handle,
                mCameraHandle->ops, rawDumpSize, &gCamCapability[mCameraId]->padding_info,
                this, CAM_QCOM_FEATURE_NONE);
        ...
    }
    // Configure the related channels
    ...
    /* Initialize mPendingRequestInfo and mPendnigBuffersMap */
    for (List<PendingRequestInfo>::iterator i = mPendingRequestsList.begin();
            i != mPendingRequestsList.end(); i++) {
        clearInputBuffer(i->input_buffer);
        i = mPendingRequestsList.erase(i);
    }
    mPendingFrameDropList.clear();
    // Initialize/Reset the pending buffers list
    mPendingBuffersMap.num_buffers = 0;
    mPendingBuffersMap.mPendingBufferList.clear();
    mPendingReprocessResultList.clear();
    return rc;
}

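This method is long, so only its core code is shown. It first initializes the stream configuration according to the HAL version, then creates the appropriate Channel for each stream in stream_list according to the stream type, mainly QCamera3MetadataChannel, QCamera3SupportChannel and so on, and then configures them. QCamera3MetadataChannel is used later when capture requests are processed, so it is not analyzed here. CameraMetadata is the metadata passed between the Java layer and CameraService; see the Camera2 architecture diagram in Android 6.0 Source Code Analysis: Introduction to Camera API 2.0. This concludes the construction of QCamera3HardwareInterface; the part relevant to this article is the setup of mCameraDevice.ops.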
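The way mCameraDevice.ops and the priv pointer work together can be shown in isolation. The following is a minimal sketch under simplifying assumptions: demo_device, demo_device_ops and DemoHardwareInterface are hypothetical names, and the table holds a single entry instead of the full camera3_device_ops_t. It shows a static function in an ops table recovering the C++ object from priv and forwarding to a member function, as configure_streams does.

```cpp
#include <cassert>

struct demo_device;  // hypothetical analogue of camera3_device

// Table of C-style entry points, in the spirit of camera3_device_ops_t.
struct demo_device_ops {
    int (*configure_streams)(const demo_device* dev, int num_streams);
};

struct demo_device {
    const demo_device_ops* ops;  // set by the constructor below
    void* priv;                  // points back to the owning C++ object
};

class DemoHardwareInterface {
public:
    DemoHardwareInterface() {
        mDevice.ops = &sOps;   // same role as mCameraDevice.ops = &mCameraOps
        mDevice.priv = this;
    }
    demo_device* device() { return &mDevice; }
    int configuredStreams() const { return mConfigured; }

private:
    // Static trampoline: recovers the object from priv, then forwards.
    static int configure_streams(const demo_device* dev, int num_streams) {
        assert(dev != nullptr && dev->priv != nullptr);
        auto* hw = static_cast<DemoHardwareInterface*>(dev->priv);
        return hw->configureStreams(num_streams);
    }
    // Member function doing the (here trivial) work.
    int configureStreams(int num_streams) {
        if (num_streams <= 0) return -1;
        mConfigured = num_streams;
        return 0;
    }

    static const demo_device_ops sOps;
    demo_device mDevice{};
    int mConfigured = 0;
};

const demo_device_ops DemoHardwareInterface::sOps = {
    &DemoHardwareInterface::configure_streams,
};
```

A caller that only holds the demo_device pointer can invoke dev->ops->configure_streams(dev, n) without knowing the C++ class, which is the property the framework relies on when it calls into the HAL.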

2.2 Analysis of openCamera

This section analyzes how the module opens the camera. The code of openCamera is as follows:

//QCamera3HWI.cpp
int QCamera3HardwareInterface::openCamera(struct hw_device_t **hw_device){
    int rc = 0;
    if (mCameraOpened) { // Already open: return a NULL device and PERMISSION_DENIED
        *hw_device = NULL;
        return PERMISSION_DENIED;
    }
    // Call the no-argument openCamera to do the actual open
    rc = openCamera();
    // Handle the result of the open
    if (rc == 0) {
        // Hand back the hw_device_t of the opened camera
        *hw_device = &mCameraDevice.common;
    } else {
        *hw_device = NULL;
    }
    return rc;
}

It calls the no-argument openCamera() to open the camera:

// QCamera3HWI.cpp
int QCamera3HardwareInterface::openCamera()
{
    ...
    // Open the camera and obtain mCameraHandle
    mCameraHandle = camera_open((uint8_t)mCameraId);
    ...
    mCameraOpened = true;
    // Register the event handler with mm-camera-interface; camEvtHandle handles the events
    rc = mCameraHandle->ops->register_event_notify(mCameraHandle->camera_handle, camEvtHandle,
            (void *)this);
    return NO_ERROR;
}

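It calls camera_open to open the camera and registers camEvtHandle, the handler for camera events, with the camera handle. We look at camera_open first. From here on we are inside Qualcomm's camera implementation, and Mm_camera_interface.c is the interface Qualcomm provides for these operations. Next, Qualcomm's camera_open method: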

//Mm_camera_interface.c
mm_camera_vtbl_t * camera_open(uint8_t camera_idx)
{
    int32_t rc = 0;
    mm_camera_obj_t* cam_obj = NULL;
    /* opened already */
    if(NULL != g_cam_ctrl.cam_obj[camera_idx]) {
        /* Add reference */
        g_cam_ctrl.cam_obj[camera_idx]->ref_count++;
        pthread_mutex_unlock(&g_intf_lock);
        return &g_cam_ctrl.cam_obj[camera_idx]->vtbl;
    }
    cam_obj = (mm_camera_obj_t *)malloc(sizeof(mm_camera_obj_t));
    ...
    /* initialize camera obj */
    memset(cam_obj, 0, sizeof(mm_camera_obj_t));
    cam_obj->ctrl_fd = -1;
    cam_obj->ds_fd = -1;
    cam_obj->ref_count++;
    cam_obj->my_hdl = mm_camera_util_generate_handler(camera_idx);
    cam_obj->vtbl.camera_handle = cam_obj->my_hdl; /* set handler */
    // mm_camera_ops binds the operation interfaces
    cam_obj->vtbl.ops = &mm_camera_ops;
    pthread_mutex_init(&cam_obj->cam_lock, NULL);
    pthread_mutex_lock(&cam_obj->cam_lock);
    pthread_mutex_unlock(&g_intf_lock);
    // Call mm_camera_open to open the camera
    rc = mm_camera_open(cam_obj);
    pthread_mutex_lock(&g_intf_lock);
    ...
    // Handle the result and return
    ...
}
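The "opened already" branch at the top of camera_open is a reference-counted open. The following is a simplified sketch of that idea only: DemoCamObj, demo_camera_open and demo_camera_close are hypothetical names, and unlike the real function the sketch holds one lock for the whole call rather than releasing it around the device open.

```cpp
#include <array>
#include <cassert>
#include <mutex>

// Hypothetical per-camera object with a reference count.
struct DemoCamObj {
    int ref_count = 0;
    int handle = 0;
};

constexpr int kMaxCameras = 2;
static std::array<DemoCamObj*, kMaxCameras> g_cam_obj{};
static std::mutex g_intf_lock;

// Returns the existing object (with one more reference) if the camera is
// already open; otherwise creates it with a reference count of 1.
DemoCamObj* demo_camera_open(int idx) {
    assert(idx >= 0 && idx < kMaxCameras);
    std::lock_guard<std::mutex> guard(g_intf_lock);
    if (g_cam_obj[idx] != nullptr) {
        g_cam_obj[idx]->ref_count++;
        return g_cam_obj[idx];
    }
    auto* obj = new DemoCamObj();
    obj->ref_count = 1;
    obj->handle = 100 + idx;  // arbitrary illustrative handle value
    g_cam_obj[idx] = obj;
    return obj;
}

// Drops one reference and frees the object when the last one is gone.
void demo_camera_close(int idx) {
    assert(idx >= 0 && idx < kMaxCameras);
    std::lock_guard<std::mutex> guard(g_intf_lock);
    DemoCamObj* obj = g_cam_obj[idx];
    if (obj != nullptr && --obj->ref_count == 0) {
        delete obj;
        g_cam_obj[idx] = nullptr;
    }
}
```

Two opens of the same index return the same object, and the object is only destroyed after a matching number of closes.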

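As the camera_open code shows, an mm_camera_obj_t object is initialized here. In it, ds_fd is the socket fd, and mm_camera_ops binds the relevant interface functions. Finally mm_camera_open is called to open the camera. First, the methods bound in mm_camera_ops: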

//Mm_camera_interface.c
static mm_camera_ops_t mm_camera_ops = {
    .query_capability = mm_camera_intf_query_capability,
    // Registers the event notification callback
    .register_event_notify = mm_camera_intf_register_event_notify,
    .close_camera = mm_camera_intf_close,
    .set_parms = mm_camera_intf_set_parms,
    .get_parms = mm_camera_intf_get_parms,
    .do_auto_focus = mm_camera_intf_do_auto_focus,
    .cancel_auto_focus = mm_camera_intf_cancel_auto_focus,
    .prepare_snapshot = mm_camera_intf_prepare_snapshot,
    .start_zsl_snapshot = mm_camera_intf_start_zsl_snapshot,
    .stop_zsl_snapshot = mm_camera_intf_stop_zsl_snapshot,
    .map_buf = mm_camera_intf_map_buf,
    .unmap_buf = mm_camera_intf_unmap_buf,
    .add_channel = mm_camera_intf_add_channel,
    .delete_channel = mm_camera_intf_del_channel,
    .get_bundle_info = mm_camera_intf_get_bundle_info,
    .add_stream = mm_camera_intf_add_stream,
    .link_stream = mm_camera_intf_link_stream,
    .delete_stream = mm_camera_intf_del_stream,
    // Configures a stream
    .config_stream = mm_camera_intf_config_stream,
    .qbuf = mm_camera_intf_qbuf,
    .get_queued_buf_count = mm_camera_intf_get_queued_buf_count,
    .map_stream_buf = mm_camera_intf_map_stream_buf,
    .unmap_stream_buf = mm_camera_intf_unmap_stream_buf,
    .set_stream_parms = mm_camera_intf_set_stream_parms,
    .get_stream_parms = mm_camera_intf_get_stream_parms,
    .start_channel = mm_camera_intf_start_channel,
    .stop_channel = mm_camera_intf_stop_channel,
    .request_super_buf = mm_camera_intf_request_super_buf,
    .cancel_super_buf_request = mm_camera_intf_cancel_super_buf_request,
    .flush_super_buf_queue = mm_camera_intf_flush_super_buf_queue,
    .configure_notify_mode = mm_camera_intf_configure_notify_mode,
    // Handles advanced capture
    .process_advanced_capture = mm_camera_intf_process_advanced_capture
};

Next, the mm_camera_open method:

//Mm_camera.c
int32_t mm_camera_open(mm_camera_obj_t *my_obj){
    ...
    do{
        n_try--;
        // Open the device driver fd that corresponds to the device name
        my_obj->ctrl_fd = open(dev_name, O_RDWR | O_NONBLOCK);
        if((my_obj->ctrl_fd >= 0) || (errno != EIO) || (n_try <= 0 )) {
            break;
        }
        usleep(sleep_msec * 1000U);
    }while (n_try > 0);
    ...
    // Open the domain socket
    n_try = MM_CAMERA_DEV_OPEN_TRIES;
    do {
        n_try--;
        my_obj->ds_fd = mm_camera_socket_create(cam_idx, MM_CAMERA_SOCK_TYPE_UDP);
        // Stop retrying once the socket is created or the tries are used up
        if((my_obj->ds_fd >= 0) || (n_try <= 0 )) {
            break;
        }
        usleep(sleep_msec * 1000U);
    } while (n_try > 0);
    ...
    // Initialize the locks
    pthread_mutex_init(&my_obj->msg_lock, NULL);
    pthread_mutex_init(&my_obj->cb_lock, NULL);
    pthread_mutex_init(&my_obj->evt_lock, NULL);
    pthread_cond_init(&my_obj->evt_cond, NULL);
    // Launch the thread whose body is mm_camera_dispatch_app_event
    mm_camera_cmd_thread_launch(&my_obj->evt_thread,
            mm_camera_dispatch_app_event,
            (void *)my_obj);
    mm_camera_poll_thread_launch(&my_obj->evt_poll_thread,
            MM_CAMERA_POLL_TYPE_EVT);
    mm_camera_evt_sub(my_obj, TRUE);
    return rc;
    ...
}
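The two do/while loops in mm_camera_open follow a bounded-retry pattern: try, stop on success or when the tries run out, otherwise sleep and try again. The following is a generic sketch of that pattern, assuming a hypothetical helper openWithRetry that takes the open attempt as a callable; it deliberately leaves out the errno != EIO check that the device-open loop also applies.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

// Calls tryOpen up to maxTries times and returns the first non-negative
// result, sleeping for `delay` between failed attempts. Returns the last
// (negative) result if every attempt fails.
int openWithRetry(const std::function<int()>& tryOpen, int maxTries,
                  std::chrono::milliseconds delay) {
    assert(maxTries > 0);
    int fd = -1;
    for (int n = maxTries; n > 0; --n) {
        fd = tryOpen();
        if (fd >= 0) {
            break;  // opened, stop retrying
        }
        if (n > 1) {
            std::this_thread::sleep_for(delay);
        }
    }
    return fd;
}
```

For example, a callable that fails twice and then returns 42 makes openWithRetry return 42 after exactly three attempts, provided maxTries is at least 3.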

As the mm_camera_open code shows, it opens the camera device file and then starts the dispatch_app_event thread. The thread body, mm_camera_dispatch_app_event, is as follows:

//Mm_camera.c
static void mm_camera_dispatch_app_event(mm_camera_cmdcb_t *cmd_cb,void* user_data){
    mm_camera_cmd_thread_name("mm_cam_event");
    int i;
    mm_camera_event_t *event = &cmd_cb->u.evt;
    mm_camera_obj_t * my_obj = (mm_camera_obj_t *)user_data;
    if (NULL != my_obj) {
        pthread_mutex_lock(&my_obj->cb_lock);
        for(i = 0; i < MM_CAMERA_EVT_ENTRY_MAX; i++) {
            if(my_obj->evt.evt[i].evt_cb) {
                // Invokes the registered callback, i.e. camEvtHandle
                my_obj->evt.evt[i].evt_cb(
                        my_obj->my_hdl,
                        event,
                        my_obj->evt.evt[i].user_data);
            }
        }
        pthread_mutex_unlock(&my_obj->cb_lock);
    }
}
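The pairing of register_event_notify with this dispatch loop is a fixed-size callback table guarded by a lock. The following is a minimal sketch of that arrangement, with hypothetical names (DemoEventHub, DemoEvent, DemoListener) and std::mutex in place of the pthread calls; it is not the mm-camera-interface code.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <mutex>

struct DemoEvent { int type; };

// C-style callback signature: handle, event, and the opaque user_data
// that was supplied at registration time.
using DemoEventCb = void (*)(std::uint32_t handle, const DemoEvent* evt, void* user_data);

class DemoEventHub {
public:
    static constexpr int kMaxEntries = 4;

    explicit DemoEventHub(std::uint32_t handle) : mHandle(handle) {}

    // Stores the callback in the first free slot; false if the table is full.
    bool registerEventNotify(DemoEventCb cb, void* user_data) {
        assert(cb != nullptr);
        std::lock_guard<std::mutex> guard(mCbLock);
        for (auto& e : mEntries) {
            if (e.cb == nullptr) {
                e.cb = cb;
                e.user_data = user_data;
                return true;
            }
        }
        return false;
    }

    // Calls every registered callback while holding the callback lock.
    void dispatch(const DemoEvent& evt) {
        std::lock_guard<std::mutex> guard(mCbLock);
        for (auto& e : mEntries) {
            if (e.cb != nullptr) {
                e.cb(mHandle, &evt, e.user_data);
            }
        }
    }

private:
    struct Entry {
        DemoEventCb cb = nullptr;
        void* user_data = nullptr;
    };
    std::uint32_t mHandle;
    std::mutex mCbLock;
    std::array<Entry, kMaxEntries> mEntries{};
};

// A listener whose static function recovers `this` from user_data,
// in the same way camEvtHandle recovers its object.
struct DemoListener {
    int lastType = -1;
    static void onEvent(std::uint32_t, const DemoEvent* evt, void* user_data) {
        auto* self = static_cast<DemoListener*>(user_data);
        if (self != nullptr && evt != nullptr) {
            self->lastType = evt->type;
        }
    }
};
```

Registering &DemoListener::onEvent with the listener's address as user_data and then dispatching an event updates that listener's lastType.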

Finally, mm_camera_dispatch_app_event calls the event handler evt_cb registered with mm-camera-interface, which is the camEvtHandle registered earlier:

//QCamera3HWI.cpp
void QCamera3HardwareInterface::camEvtHandle(uint32_t /*camera_handle*/,mm_camera_event_t *evt,
        void *user_data){
    // Recover the QCamera3HardwareInterface pointer
    QCamera3HardwareInterface *obj = (QCamera3HardwareInterface *)user_data;
    if (obj && evt) {
        switch(evt->server_event_type) {
        case CAM_EVENT_TYPE_DAEMON_DIED:
            camera3_notify_msg_t notify_msg;
            memset(&notify_msg, 0, sizeof(camera3_notify_msg_t));
            notify_msg.type = CAMERA3_MSG_ERROR;
            notify_msg.message.error.error_code = CAMERA3_MSG_ERROR_DEVICE;
            notify_msg.message.error.error_stream = NULL;
            notify_msg.message.error.frame_number = 0;
            obj->mCallbackOps->notify(obj->mCallbackOps, &notify_msg);
            break;
        case CAM_EVENT_TYPE_DAEMON_PULL_REQ:
            pthread_mutex_lock(&obj->mMutex);
            obj->mWokenUpByDaemon = true;
            // Unblock process_capture_request
            obj->unblockRequestIfNecessary();
            pthread_mutex_unlock(&obj->mMutex);
            break;
        default:
            break;
        }
    } else {
    }
}

As the camEvtHandle code shows, it calls unblockRequestIfNecessary on QCamera3HardwareInterface so that request processing can continue:

//QCamera3HWI.cpp
void QCamera3HardwareInterface::unblockRequestIfNecessary()
{
    // Unblock process_capture_request
    pthread_cond_signal(&mRequestCond);
}
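The signal in unblockRequestIfNecessary only makes sense together with a waiter on the same condition variable. The following is a small sketch of that hand-off, assuming a hypothetical DemoRequestGate class built on std::condition_variable; in the excerpt above the caller already holds mMutex and sets mWokenUpByDaemon, whereas the sketch folds both into one method for brevity.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>

class DemoRequestGate {
public:
    // Event-handler side: record the wake-up and signal the waiter.
    void unblockRequestIfNecessary() {
        std::lock_guard<std::mutex> guard(mMutex);
        mWokenUp = true;
        mRequestCond.notify_one();
    }

    // Request side: block until a wake-up has been recorded, consume it,
    // then continue with the request (here it just returns the frame number).
    int processCaptureRequest(int frameNumber) {
        assert(frameNumber >= 0);
        std::unique_lock<std::mutex> lock(mMutex);
        mRequestCond.wait(lock, [this] { return mWokenUp; });
        mWokenUp = false;
        return frameNumber;
    }

private:
    std::mutex mMutex;
    std::condition_variable mRequestCond;
    bool mWokenUp = false;
};
```

Waiting on a predicate rather than on the bare signal means a wake-up that arrives before the waiter starts waiting is not lost.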

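When the QCamera3HardwareInterface object was initialized, captureResultCb was bound as the callback that handles metadata; it mainly processes the data source. The capture request itself is still handled by process_capture_request, and unblockRequestIfNecessary is called here to let process_capture_request proceed. In the camera framework, issuing requests starts a RequestThread, whose threadLoop method repeatedly calls process_capture_request to process them. The results are finally delivered through the processCaptureResult method of Camera3Device: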

//Camera3Device.cpp
void Camera3Device::processCaptureResult(const camera3_capture_result *result) {
    ...
    {
        ...
        if (mUsePartialResult && result->result != NULL) {
            if (mDeviceVersion >= CAMERA_DEVICE_API_VERSION_3_2) {
                ...
                if (isPartialResult) {
                    request.partialResult.collectedResult.append(result->result);
                }
            } else {
                camera_metadata_ro_entry_t partialResultEntry;
                res = find_camera_metadata_ro_entry(result->result,
                        ANDROID_QUIRKS_PARTIAL_RESULT, &partialResultEntry);
                if (res != NAME_NOT_FOUND && partialResultEntry.count > 0 &&
                        partialResultEntry.data.u8[0] == ANDROID_QUIRKS_PARTIAL_RESULT_PARTIAL) {
                    isPartialResult = true;
                    request.partialResult.collectedResult.append(
                            result->result);
                    request.partialResult.collectedResult.erase(
                            ANDROID_QUIRKS_PARTIAL_RESULT);
                }
            }
            if (isPartialResult) {
                // Fire off a 3A-only result if possible
                if (!request.partialResult.haveSent3A) {
                    // Process the 3A result
                    request.partialResult.haveSent3A = processPartial3AResult(frameNumber,
                            request.partialResult.collectedResult, request.resultExtras);
                }
            }
        }
        ...
        // Look up the sensor timestamp entry in the camera metadata
        camera_metadata_ro_entry_t entry;
        res = find_camera_metadata_ro_entry(result->result,
                ANDROID_SENSOR_TIMESTAMP, &entry);
        if (shutterTimestamp == 0) {
            request.pendingOutputBuffers.appendArray(result->output_buffers,
                    result->num_output_buffers);
        } else {
            // Key step: return the processed output buffers
            returnOutputBuffers(result->output_buffers,
                    result->num_output_buffers, shutterTimestamp);
        }
        if (result->result != NULL && !isPartialResult) {
            if (shutterTimestamp == 0) {
                request.pendingMetadata = result->result;
                request.partialResult.collectedResult = collectedPartialResult;
            } else {
                CameraMetadata metadata;
                metadata = result->result;
                // Send the capture result, i.e. invoke the notification callback
                sendCaptureResult(metadata, request.resultExtras,
                        collectedPartialResult, frameNumber, hasInputBufferInRequest,
                        request.aeTriggerCancelOverride);
            }
        }
        removeInFlightRequestIfReadyLocked(idx);
    } // scope for mInFlightLock
    if (result->input_buffer != NULL) {
        if (hasInputBufferInRequest) {
            Camera3Stream *stream =
                    Camera3Stream::cast(result->input_buffer->stream);
            // Key step: return the processed input buffer
            res = stream->returnInputBuffer(*(result->input_buffer));
        } else {}
    }
}

Next, the returnOutputBuffers method; the flow of returnInputBuffer for the input buffer is similar:

//Camera3Device.cpp
void Camera3Device::returnOutputBuffers(const camera3_stream_buffer_t *outputBuffers,
        size_t numBuffers, nsecs_t timestamp) {
    for (size_t i = 0; i < numBuffers; i++)
    {
        Camera3Stream *stream = Camera3Stream::cast(outputBuffers[i].stream);
        status_t res = stream->returnBuffer(outputBuffers[i], timestamp);
        ...
    }
}

The method calls returnBuffer:

//Camera3Stream.cpp
status_t Camera3Stream::returnBuffer(const camera3_stream_buffer &buffer,nsecs_t timestamp) {
    // Return the buffer
    status_t res = returnBufferLocked(buffer, timestamp);
    if (res == OK) {
        fireBufferListenersLocked(buffer, /*acquired*/false, /*output*/true);
        mOutputBufferReturnedSignal.signal();
    }
    return res;
}

Continuing with returnBufferLocked: it calls returnAnyBufferLocked, which in turn calls returnBufferCheckedLocked. Now consider returnBufferCheckedLocked:

// Camera3OutputStream.cpp
status_t Camera3OutputStream::returnBufferCheckedLocked(const camera3_stream_buffer &buffer,
        nsecs_t timestamp,bool output,/*out*/sp<Fence> *releaseFenceOut) {
    ...
    // Fence management - always honor release fence from HAL
    sp<Fence> releaseFence = new Fence(buffer.release_fence);
    int anwReleaseFence = releaseFence->dup();
    if (buffer.status == CAMERA3_BUFFER_STATUS_ERROR) {
        // Cancel buffer
        res = currentConsumer->cancelBuffer(currentConsumer.get(),
                container_of(buffer.buffer, ANativeWindowBuffer, handle),
                anwReleaseFence);
        ...
    } else {
        ...
        res = currentConsumer->queueBuffer(currentConsumer.get(),
                container_of(buffer.buffer, ANativeWindowBuffer, handle),
                anwReleaseFence);
        ...
    }
    ...
    return res;
}

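As the returnBufferCheckedLocked code shows, if the buffer is not in an error state, queueBuffer is called on currentConsumer. The concrete consumer is bound when the application layer initializes the camera; typical consumers are SurfaceTexture, ImageReader and so on. In the native layer this ends up in the queueBuffer method of BufferQueueProducer: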

// BufferQueueProducer.cpp
status_t BufferQueueProducer::queueBuffer(int slot,
        const QueueBufferInput &input, QueueBufferOutput *output) {
    ...
    // Listeners to notify once a frame is available or replaced
    sp<IConsumerListener> frameAvailableListener;
    sp<IConsumerListener> frameReplacedListener;
    int callbackTicket = 0;
    BufferItem item;
    { // Autolock scope
        ...
        const sp<GraphicBuffer>& graphicBuffer(mSlots[slot].mGraphicBuffer);
        Rect bufferRect(graphicBuffer->getWidth(), graphicBuffer->getHeight());
        Rect croppedRect;
        crop.intersect(bufferRect, &croppedRect);
        ...
        // If the queue is empty
        if (mCore->mQueue.empty()) {
            mCore->mQueue.push_back(item);
            frameAvailableListener = mCore->mConsumerListener;
        } else {
            // Otherwise the queue is not empty: either replace a droppable front
            // item or append, and pick the matching listener
            BufferQueueCore::Fifo::iterator front(mCore->mQueue.begin());
            if (front->mIsDroppable) {
                if (mCore->stillTracking(front)) {
                    mSlots[front->mSlot].mBufferState = BufferSlot::FREE;
                    mCore->mFreeBuffers.push_front(front->mSlot);
                }
                *front = item;
                frameReplacedListener = mCore->mConsumerListener;
            } else {
                mCore->mQueue.push_back(item);
                frameAvailableListener = mCore->mConsumerListener;
            }
        }
        mCore->mBufferHasBeenQueued = true;
        mCore->mDequeueCondition.broadcast();
        output->inflate(mCore->mDefaultWidth, mCore->mDefaultHeight,mCore->mTransformHint,
                static_cast<uint32_t>(mCore->mQueue.size()));
        // Take a ticket for the callback functions
        callbackTicket = mNextCallbackTicket++;
        mCore->validateConsistencyLocked();
    } // Autolock scope
    ...
    {
        ...
        if (frameAvailableListener != NULL) {
            // Call onFrameAvailable on the IConsumerListener set up by the consumer
            // (for example SurfaceTexture) so that it can process the data
            frameAvailableListener->onFrameAvailable(item);
        } else if (frameReplacedListener != NULL) {
            frameReplacedListener->onFrameReplaced(item);
        }
        ++mCurrentCallbackTicket;
        mCallbackCondition.broadcast();
    }
    return NO_ERROR;
}
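The listener hand-off at the end of queueBuffer can be reduced to a few lines. The following is a simplified sketch with hypothetical names (DemoProducer, DemoConsumerListener, CountingListener): it keeps only the "append or replace a droppable front item" decision and the matching callback, and omits the locking, slots and callback tickets of the real BufferQueueProducer.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

struct DemoItem {
    int slot;
    bool droppable;
};

// Consumer-side interface, in the spirit of IConsumerListener.
struct DemoConsumerListener {
    virtual ~DemoConsumerListener() = default;
    virtual void onFrameAvailable(const DemoItem& item) = 0;
    virtual void onFrameReplaced(const DemoItem& item) = 0;
};

class DemoProducer {
public:
    explicit DemoProducer(DemoConsumerListener* listener) : mListener(listener) {}

    // Queues an item and notifies the consumer. If the item at the front of
    // the queue is droppable it is overwritten and the consumer is told a
    // frame was replaced; otherwise the item is appended.
    void queueBuffer(const DemoItem& item) {
        assert(mListener != nullptr);
        if (!mQueue.empty() && mQueue.front().droppable) {
            mQueue.front() = item;
            mListener->onFrameReplaced(item);
        } else {
            mQueue.push_back(item);
            mListener->onFrameAvailable(item);
        }
    }

    std::size_t size() const { return mQueue.size(); }

private:
    DemoConsumerListener* mListener;
    std::deque<DemoItem> mQueue;
};

// Example consumer that only counts the callbacks it receives.
struct CountingListener : DemoConsumerListener {
    int available = 0;
    int replaced = 0;
    void onFrameAvailable(const DemoItem&) override { ++available; }
    void onFrameReplaced(const DemoItem&) override { ++replaced; }
};
```

An application-side consumer plays the role of CountingListener here: whatever it does in onFrameAvailable is the point at which a new camera frame becomes visible to it.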

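As the queueBuffer code shows, it finally invokes the onFrameAvailable method of the consumer's FrameAvailableListener callback. This makes it clear why, when writing a camera application and setting up its Surface, we need to override FrameAvailableListener: the results are handled inside this method. This completes the analysis of the Camera HAL open flow. The sequence diagram of the flow is given below: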
