Kafka Study Notes: Installing, Configuring, and Verifying Kafka on CentOS 7

2017-09-16 14:13


Introduction

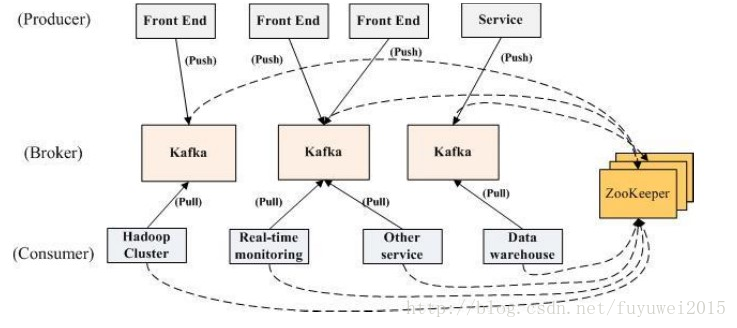

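First, let's look at the official Kafka distributed architecture diagram.

Multiple brokers work together, producers and consumers are embedded in the various pieces of business logic and invoked frequently, and all three are coordinated through ZooKeeper. Together they form a high-performance distributed publish/subscribe messaging system.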

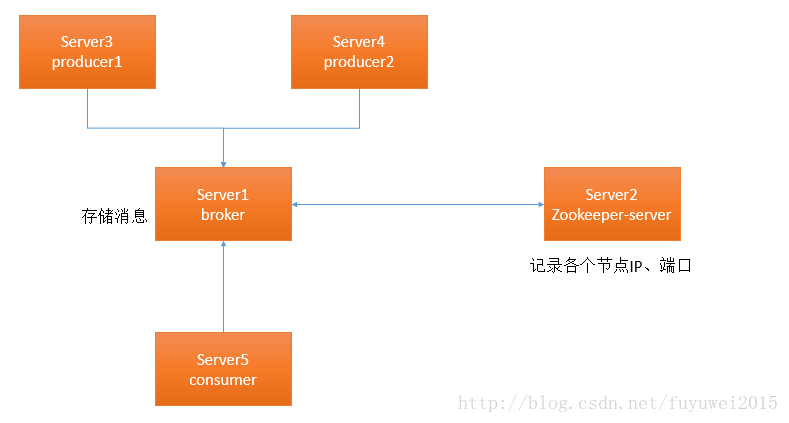

Using a single broker as an example, here is how the whole messaging system starts up.

The order in which the system runs:

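1. Start the ZooKeeper server.

2. Start the Kafka server.

3. When a producer has data, it locates a broker and stores the data on it. In this version the producer finds brokers from the addresses it is given (`--broker-list` for the console producer used below) rather than through ZooKeeper.

4. When a consumer wants data, it locates the corresponding broker and consumes from it. The old consumer (selected by the `--zookeeper` option used below) does this through ZooKeeper.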
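The start order above can be scripted so that Kafka is only started once ZooKeeper accepts connections. A minimal sketch, assuming it runs under bash from kafka/bin with the default ports used in this article (ZooKeeper 2181, Kafka 9092); `wait_for_port` and `start_single_node` are hypothetical helper names, and unlike the manual steps below it also starts Kafka with `-daemon`:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: start ZooKeeper, wait for its client port,
# then start Kafka and wait for the broker port.

# Returns 0 once HOST:PORT accepts a TCP connection, 1 after TIMEOUT
# seconds. Uses bash's /dev/tcp redirection, so it needs bash, not sh.
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-30}" i
  for ((i = 0; i < timeout; i++)); do
    # The subshell exits non-zero if the connection is refused.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Assumes the current directory is kafka/bin.
start_single_node() {
  sh zookeeper-server-start.sh -daemon ../config/zookeeper.properties
  wait_for_port localhost 2181 30 || { echo "ZooKeeper did not come up" >&2; return 1; }
  sh kafka-server-start.sh -daemon ../config/server.properties
  wait_for_port localhost 9092 60 || { echo "Kafka did not come up" >&2; return 1; }
}
```

Polling the port rather than sleeping a fixed time means the broker is not started while ZooKeeper is still initializing, in which case it can fail to connect at startup.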

Setting Up the Distributed Environment

Single-Node Setup

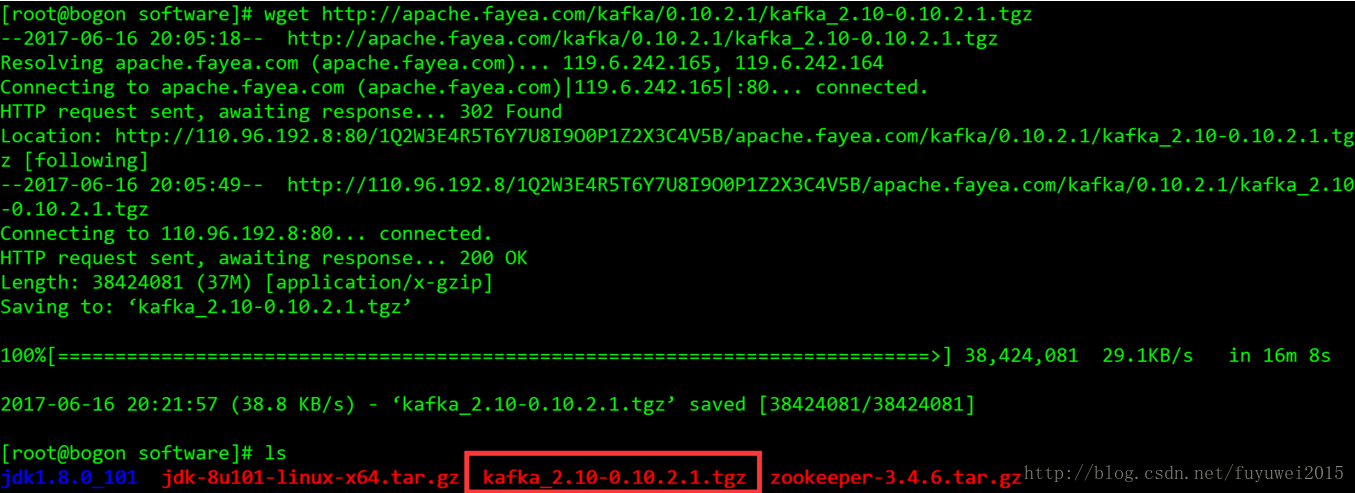

First, download the Kafka package (version 0.10.2.1, built for Scala 2.10) from an Apache mirror:

```
[root@bogon software]# wget http://apache.fayea.com/kafka/0.10.2.1/kafka_2.10-0.10.2.1.tgz
```

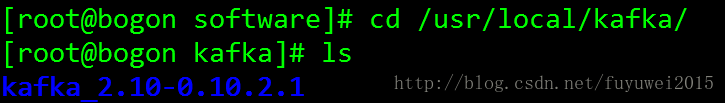

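Then extract Kafka into the installation directory (`tar -C` requires the target directory to exist, so create it first with `mkdir -p /usr/local/kafka` if necessary):

```
[root@bogon software]# tar -zvxf kafka_2.10-0.10.2.1.tgz -C /usr/local/kafka/
```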

Rename the extracted directory so the paths are easier to configure:

```
[root@bogon kafka]# mv kafka_2.10-0.10.2.1 kafka
```
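The download, extract, and rename steps can be wrapped in one function. A minimal sketch, assuming the tarball is already downloaded and contains a single top-level directory (as kafka_2.10-0.10.2.1.tgz does); `install_kafka` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract a Kafka tarball under PREFIX and rename the
# top-level directory to PREFIX/kafka (e.g. /usr/local/kafka/kafka).
install_kafka() {
  local tarball="$1" prefix="$2" top
  if [ -e "$prefix/kafka" ]; then
    echo "$prefix/kafka already exists" >&2
    return 1
  fi
  # tar -C fails if the target directory does not exist, so create it.
  mkdir -p "$prefix" || return 1
  # Top-level directory name taken from the first archive entry,
  # e.g. "kafka_2.10-0.10.2.1/" -> "kafka_2.10-0.10.2.1".
  top="$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)"
  [ -n "$top" ] || return 1
  tar -xzf "$tarball" -C "$prefix" || return 1
  mv "$prefix/$top" "$prefix/kafka"
}

# Usage:
#   install_kafka kafka_2.10-0.10.2.1.tgz /usr/local/kafka
```

Refusing to run when `kafka` already exists avoids `mv` silently moving the new release inside an old installation.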

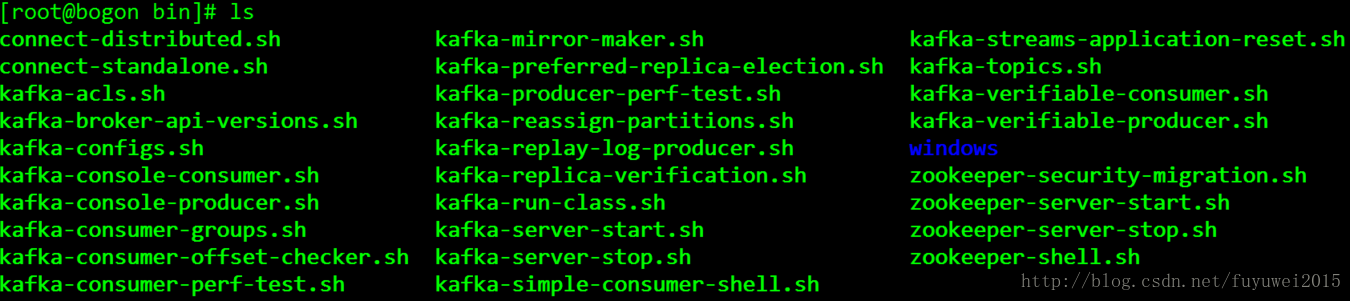

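Go into the kafka/bin directory. It contains the utility scripts: sending and consuming messages, creating and listing topics, and starting and stopping the services.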

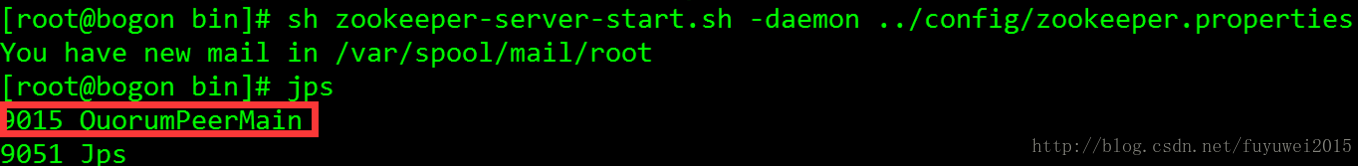

Start ZooKeeper (`-daemon` runs it in the background):

```
[root@bogon bin]# sh zookeeper-server-start.sh -daemon ../config/zookeeper.properties
```

Start the Kafka server:

```
[root@bogon bin]# sh kafka-server-start.sh ../config/server.properties
```

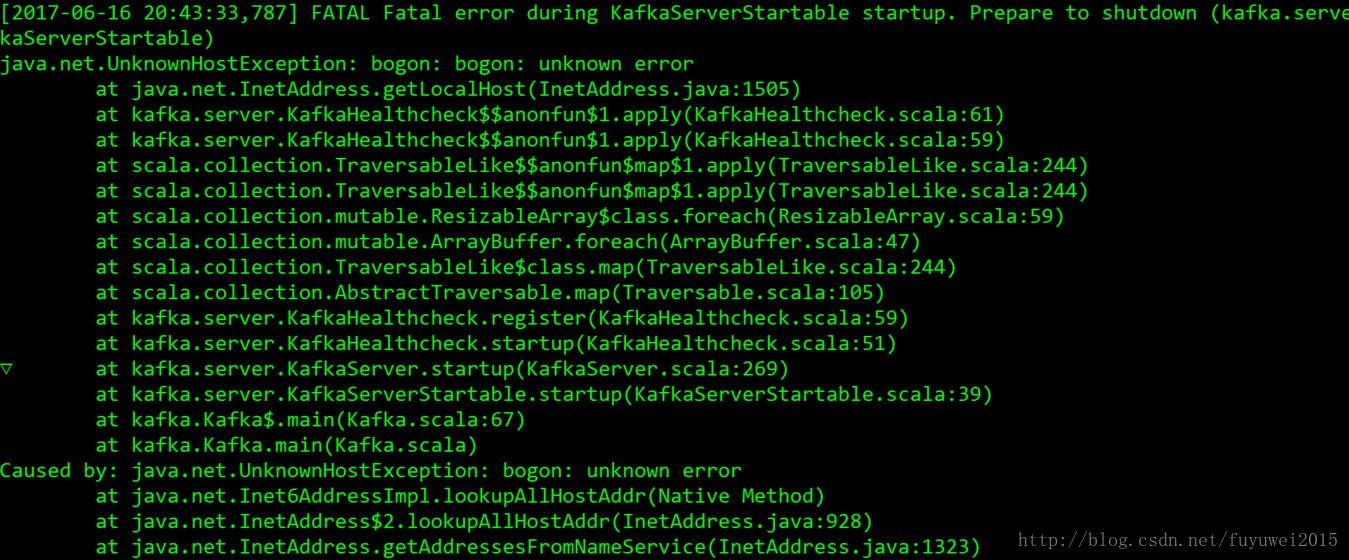

It fails with the following error:

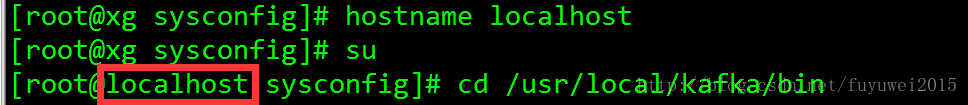

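At this point we need to change the hostname (`hostname` only changes it until the next reboot; `su` opens a new shell so the prompt shows the new name):

```
[root@xg sysconfig]# hostname localhost
[root@xg sysconfig]# su
```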
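A more permanent alternative is to make the existing hostname resolve through an /etc/hosts entry, which survives a reboot. A minimal sketch, assuming the error above is a hostname-resolution failure; `host_resolves` and `suggest_hosts_entry` are hypothetical names, and `getent` resolves names through nsswitch (files, then DNS), roughly as most programs on Linux do:

```bash
#!/usr/bin/env bash
# Succeeds if NAME resolves to an address.
host_resolves() {
  getent hosts "$1" >/dev/null
}

# Prints an /etc/hosts line mapping NAME (default: the current hostname)
# to the loopback address, or a note if NAME already resolves.
# It only prints; review the line before appending it to /etc/hosts.
suggest_hosts_entry() {
  local name="${1:-$(hostname)}"
  if host_resolves "$name"; then
    echo "# $name already resolves; no change needed"
  else
    printf '127.0.0.1   %s\n' "$name"
  fi
}

# Usage:
#   host_resolves "$(hostname)" || suggest_hosts_entry
```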

Run it again:

```
[root@localhost bin]# sh kafka-server-start.sh ../config/server.properties
[2017-06-16 20:49:10,581] INFO KafkaConfig values: 
	advertised.host.name = null
	advertised.listeners = null
	advertised.port = null
	authorizer.class.name = 
	auto.create.topics.enable = true
	auto.leader.rebalance.enable = true
	background.threads = 10
	broker.id = 0
	broker.id.generation.enable = true
	broker.rack = null
	compression.type = producer
	connections.max.idle.ms = 600000
	controlled.shutdown.enable = true
	controlled.shutdown.max.retries = 3
	controlled.shutdown.retry.backoff.ms = 5000
	controller.socket.timeout.ms = 30000
	create.topic.policy.class.name = null
	default.replication.factor = 1
	delete.topic.enable = false
	fetch.purgatory.purge.interval.requests = 1000
	group.max.session.timeout.ms = 300000
	group.min.session.timeout.ms = 6000
	host.name = 
	inter.broker.listener.name = null
	inter.broker.protocol.version = 0.10.2-IV0
	leader.imbalance.check.interval.seconds = 300
	leader.imbalance.per.broker.percentage = 10
	listener.security.protocol.map = SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,TRACE:TRACE,SASL_SSL:SASL_SSL,PLAINTEXT:PLAINTEXT
	listeners = null
	log.cleaner.backoff.ms = 15000
	log.cleaner.dedupe.buffer.size = 134217728
	log.cleaner.delete.retention.ms = 86400000
	log.cleaner.enable = true
	log.cleaner.io.buffer.load.factor = 0.9
	log.cleaner.io.buffer.size = 524288
	log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308
	log.cleaner.min.cleanable.ratio = 0.5
	log.cleaner.min.compaction.lag.ms = 0
	log.cleaner.threads = 1
	log.cleanup.policy = [delete]
	log.dir = /tmp/kafka-logs
	log.dirs = /tmp/kafka-logs
	log.flush.interval.messages = 9223372036854775807
	log.flush.interval.ms = null
	log.flush.offset.checkpoint.interval.ms = 60000
	log.flush.scheduler.interval.ms = 9223372036854775807
	log.index.interval.bytes = 4096
	log.index.size.max.bytes = 10485760
	log.message.format.version = 0.10.2-IV0
	log.message.timestamp.difference.max.ms = 9223372036854775807
	log.message.timestamp.type = CreateTime
	log.preallocate = false
	log.retention.bytes = -1
	log.retention.check.interval.ms = 300000
	log.retention.hours = 168
	log.retention.minutes = null
	log.retention.ms = null
	log.roll.hours = 168
	log.roll.jitter.hours = 0
	log.roll.jitter.ms = null
	log.roll.ms = null
	log.segment.bytes = 1073741824
	log.segment.delete.delay.ms = 60000
	max.connections.per.ip = 2147483647
	max.connections.per.ip.overrides = 
	message.max.bytes = 1000012
	metric.reporters = []
	metrics.num.samples = 2
	metrics.recording.level = INFO
	metrics.sample.window.ms = 30000
	min.insync.replicas = 1
	num.io.threads = 8
	num.network.threads = 3
	num.partitions = 1
	num.recovery.threads.per.data.dir = 1
	num.replica.fetchers = 1
	offset.metadata.max.bytes = 4096
	offsets.commit.required.acks = -1
	offsets.commit.timeout.ms = 5000
	offsets.load.buffer.size = 5242880
	offsets.retention.check.interval.ms = 600000
	offsets.retention.minutes = 1440
	offsets.topic.compression.codec = 0
	offsets.topic.num.partitions = 50
	offsets.topic.replication.factor = 3
	offsets.topic.segment.bytes = 104857600
	port = 9092
	principal.builder.class = class org.apache.kafka.common.security.auth.DefaultPrincipalBuilder
	producer.purgatory.purge.interval.requests = 1000
	queued.max.requests = 500
	quota.consumer.default = 9223372036854775807
	quota.producer.default = 9223372036854775807
	quota.window.num = 11
	quota.window.size.seconds = 1
	replica.fetch.backoff.ms = 1000
	replica.fetch.max.bytes = 1048576
	replica.fetch.min.bytes = 1
	replica.fetch.response.max.bytes = 10485760
	replica.fetch.wait.max.ms = 500
	replica.high.watermark.checkpoint.interval.ms = 5000
	replica.lag.time.max.ms = 10000
	replica.socket.receive.buffer.bytes = 65536
	replica.socket.timeout.ms = 30000
	replication.quota.window.num = 11
	replication.quota.window.size.seconds = 1
	request.timeout.ms = 30000
	reserved.broker.max.id = 1000
	sasl.enabled.mechanisms = [GSSAPI]
	sasl.kerberos.kinit.cmd = /usr/bin/kinit
	sasl.kerberos.min.time.before.relogin = 60000
	sasl.kerberos.principal.to.local.rules = [DEFAULT]
	sasl.kerberos.service.name = null
	sasl.kerberos.ticket.renew.jitter = 0.05
	sasl.kerberos.ticket.renew.window.factor = 0.8
	sasl.mechanism.inter.broker.protocol = GSSAPI
	security.inter.broker.protocol = PLAINTEXT
	socket.receive.buffer.bytes = 102400
	socket.request.max.bytes = 104857600
	socket.send.buffer.bytes = 102400
	ssl.cipher.suites = null
	ssl.client.auth = none
	ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]
	ssl.endpoint.identification.algorithm = null
	ssl.key.password = null
	ssl.keymanager.algorithm = SunX509
	ssl.keystore.location = null
	ssl.keystore.password = null
	ssl.keystore.type = JKS
	ssl.protocol = TLS
	ssl.provider = null
	ssl.secure.random.implementation = null
	ssl.trustmanager.algorithm = PKIX
	ssl.truststore.location = null
	ssl.truststore.password = null
	ssl.truststore.type = JKS
	unclean.leader.election.enable = true
	zookeeper.connect = localhost:2181
	zookeeper.connection.timeout.ms = 6000
	zookeeper.session.timeout.ms = 6000
	zookeeper.set.acl = false
	zookeeper.sync.time.ms = 2000
 (kafka.server.KafkaConfig)
[2017-06-16 20:49:10,678] INFO starting (kafka.server.KafkaServer)
[2017-06-16 20:49:10,681] INFO Connecting to zookeeper on localhost:2181 (kafka.server.KafkaServer)
[2017-06-16 20:49:10,706] INFO Starting ZkClient event thread. (org.I0Itec.zkclient.ZkEventThread)
[2017-06-16 20:49:10,729] INFO Client environment:zookeeper.version=3.4.9-1757313, built on 08/23/2016 06:50 GMT (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,729] INFO Client environment:host.name=localhost (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,729] INFO Client environment:java.version=1.8.0_101 (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,729] INFO Client environment:java.vendor=Oracle Corporation (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,729] INFO Client environment:java.home=/usr/local/java/jdk1.8.0_101/jre (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,729] INFO Client environment:java.class.path=.:/usr/local/java/jdk1.8.0_101/lib/dt.jar:/usr/local/java/jdk1.8.0_101/lib/tools.jar:/usr/local/kafka/kafka/bin/../libs/aopalliance-repackaged-2.5.0-b05.jar:/usr/local/kafka/kafka/bin/../libs/argparse4j-0.7.0.jar:/usr/local/kafka/kafka/bin/../libs/connect-api-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/connect-file-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/connect-json-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/connect-runtime-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/connect-transforms-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/guava-18.0.jar:/usr/local/kafka/kafka/bin/../libs/hk2-api-2.5.0-b05.jar:/usr/local/kafka/kafka/bin/../libs/hk2-locator-2.5.0-b05.jar:/usr/local/kafka/kafka/bin/../libs/hk2-utils-2.5.0-b05.jar:/usr/local/kafka/kafka/bin/../libs/jackson-annotations-2.8.0.jar:/usr/local/kafka/kafka/bin/../libs/jackson-annotations-2.8.5.jar:/usr/local/kafka/kafka/bin/../libs/jackson-core-2.8.5.jar:/usr/local/kafka/kafka/bin/../libs/jackson-databind-2.8.5.jar:/usr/local/kafka/kafka/bin/../libs/jackson-jaxrs-base-2.8.5.jar:/usr/local/kafka/kafka/bin/../libs/jackson-jaxrs-json-provider-2.8.5.jar:/usr/local/kafka/kafka/bin/../libs/jackson-module-jaxb-annotations-2.8.5.jar:/usr/local/kafka/kafka/bin/../libs/javassist-3.20.0-GA.jar:/usr/local/kafka/kafka/bin/../libs/javax.annotation-api-1.2.jar:/usr/local/kafka/kafka/bin/../libs/javax.inject-1.jar:/usr/local/kafka/kafka/bin/../libs/javax.inject-2.5.0-b05.jar:/usr/local/kafka/kafka/bin/../libs/javax.servlet-api-3.1.0.jar:/usr/local/kafka/kafka/bin/../libs/javax.ws.rs-api-2.0.1.jar:/usr/local/kafka/kafka/bin/../libs/jersey-client-2.24.jar:/usr/local/kafka/kafka/bin/../libs/jersey-common-2.24.jar:/usr/local/kafka/kafka/bin/../libs/jersey-container-servlet-2.24.jar:/usr/local/kafka/kafka/bin/../libs/jersey-container-servlet-core-2.24.jar:/usr/local/kafka/kafka/bin/../libs/jersey-guava-2.24.jar:/usr/local/kafka/kafka/bin/../libs/jersey-media-jaxb-2.24.jar:/usr/local/kafka/kafka/bin/../libs/jersey-server-2.24.jar:/usr/local/kafka/kafka/bin/../libs/jetty-continuation-9.2.15.v20160210.jar:/usr/local/kafka/kafka/bin/../libs/jetty-http-9.2.15.v20160210.jar:/usr/local/kafka/kafka/bin/../libs/jetty-io-9.2.15.v20160210.jar:/usr/local/kafka/kafka/bin/../libs/jetty-security-9.2.15.v20160210.jar:/usr/local/kafka/kafka/bin/../libs/jetty-server-9.2.15.v20160210.jar:/usr/local/kafka/kafka/bin/../libs/jetty-servlet-9.2.15.v20160210.jar:/usr/local/kafka/kafka/bin/../libs/jetty-servlets-9.2.15.v20160210.jar:/usr/local/kafka/kafka/bin/../libs/jetty-util-9.2.15.v20160210.jar:/usr/local/kafka/kafka/bin/../libs/jopt-simple-5.0.3.jar:/usr/local/kafka/kafka/bin/../libs/kafka_2.10-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/kafka_2.10-0.10.2.1-sources.jar:/usr/local/kafka/kafka/bin/../libs/kafka_2.10-0.10.2.1-test-sources.jar:/usr/local/kafka/kafka/bin/../libs/kafka-clients-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/kafka-log4j-appender-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/kafka-streams-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/kafka-streams-examples-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/kafka-tools-0.10.2.1.jar:/usr/local/kafka/kafka/bin/../libs/log4j-1.2.17.jar:/usr/local/kafka/kafka/bin/../libs/lz4-1.3.0.jar:/usr/local/kafka/kafka/bin/../libs/metrics-core-2.2.0.jar:/usr/local/kafka/kafka/bin/../libs/osgi-resource-locator-1.0.1.jar:/usr/local/kafka/kafka/bin/../libs/reflections-0.9.10.jar:/usr/local/kafka/kafka/bin/../libs/rocksdbjni-5.0.1.jar:/usr/local/kafka/kafka/bin/../libs/scala-library-2.10.6.jar:/usr/local/kafka/kafka/bin/../libs/slf4j-api-1.7.21.jar:/usr/local/kafka/kafka/bin/../libs/slf4j-log4j12-1.7.21.jar:/usr/local/kafka/kafka/bin/../libs/snappy-java-1.1.2.6.jar:/usr/local/kafka/kafka/bin/../libs/validation-api-1.1.0.Final.jar:/usr/local/kafka/kafka/bin/../libs/zkclient-0.10.jar:/usr/local/kafka/kafka/bin/../libs/zookeeper-3.4.9.jar (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:java.io.tmpdir=/tmp (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:java.compiler=<NA> (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:os.name=Linux (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:os.version=3.10.0-327.el7.x86_64 (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:user.name=root (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:user.home=/root (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,730] INFO Client environment:user.dir=/usr/local/kafka/kafka/bin (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,732] INFO Initiating client connection, connectString=localhost:2181 sessionTimeout=6000 watcher=org.I0Itec.zkclient.ZkClient@21de60b4 (org.apache.zookeeper.ZooKeeper)
[2017-06-16 20:49:10,759] INFO Waiting for keeper state SyncConnected (org.I0Itec.zkclient.ZkClient)
[2017-06-16 20:49:10,766] INFO Opening socket connection to server localhost/0:0:0:0:0:0:0:1:2181. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)
[2017-06-16 20:49:10,903] INFO Socket connection established to localhost/0:0:0:0:0:0:0:1:2181, initiating session (org.apache.zookeeper.ClientCnxn)
[2017-06-16 20:49:10,926] INFO Session establishment complete on server localhost/0:0:0:0:0:0:0:1:2181, sessionid = 0x15cb42456980001, negotiated timeout = 6000 (org.apache.zookeeper.ClientCnxn)
[2017-06-16 20:49:10,931] INFO zookeeper state changed (SyncConnected) (org.I0Itec.zkclient.ZkClient)
[2017-06-16 20:49:11,200] INFO Cluster ID = qln2xViYSCGEl63misidng (kafka.server.KafkaServer)
[2017-06-16 20:49:11,206] WARN No meta.properties file under dir /tmp/kafka-logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)
[2017-06-16 20:49:11,246] INFO [ThrottledRequestReaper-Fetch], Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)
[2017-06-16 20:49:11,250] INFO [ThrottledRequestReaper-Produce], Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)
[2017-06-16 20:49:11,314] INFO Loading logs. (kafka.log.LogManager)
[2017-06-16 20:49:11,327] INFO Logs loading complete in 11 ms. (kafka.log.LogManager)
[2017-06-16 20:49:11,436] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)
[2017-06-16 20:49:11,439] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)
[2017-06-16 20:49:11,520] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.Acceptor)
[2017-06-16 20:49:11,526] INFO [Socket Server on Broker 0], Started 1 acceptor threads (kafka.network.SocketServer)
[2017-06-16 20:49:11,560] INFO [ExpirationReaper-0], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2017-06-16 20:49:11,564] INFO [ExpirationReaper-0], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2017-06-16 20:49:11,644] INFO Creating /controller (is it secure? false) (kafka.utils.ZKCheckedEphemeral)
[2017-06-16 20:49:11,660] INFO Result of znode creation is: OK (kafka.utils.ZKCheckedEphemeral)
[2017-06-16 20:49:11,660] INFO 0 successfully elected as leader (kafka.server.ZookeeperLeaderElector)
[2017-06-16 20:49:11,821] INFO [ExpirationReaper-0], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2017-06-16 20:49:11,842] INFO [ExpirationReaper-0], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2017-06-16 20:49:11,848] INFO New leader is 0 (kafka.server.ZookeeperLeaderElector$LeaderChangeListener)
[2017-06-16 20:49:11,851] INFO [ExpirationReaper-0], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2017-06-16 20:49:11,866] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.GroupCoordinator)
[2017-06-16 20:49:11,868] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.GroupCoordinator)
[2017-06-16 20:49:11,871] INFO [Group Metadata Manager on Broker 0]: Removed 0 expired offsets in 2 milliseconds. (kafka.coordinator.GroupMetadataManager)
[2017-06-16 20:49:11,948] INFO Will not load MX4J, mx4j-tools.jar is not in the classpath (kafka.utils.Mx4jLoader$)
[2017-06-16 20:49:12,035] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.utils.ZKCheckedEphemeral)
[2017-06-16 20:49:12,043] INFO Result of znode creation is: OK (kafka.utils.ZKCheckedEphemeral)
[2017-06-16 20:49:12,046] INFO Registered broker 0 at path /brokers/ids/0 with addresses: EndPoint(localhost,9092,ListenerName(PLAINTEXT),PLAINTEXT) (kafka.utils.ZkUtils)
[2017-06-16 20:49:12,048] WARN No meta.properties file under dir /tmp/kafka-logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)
[2017-06-16 20:49:12,130] INFO Kafka version : 0.10.2.1 (org.apache.kafka.common.utils.AppInfoParser)
[2017-06-16 20:49:12,130] INFO Kafka commitId : e89bffd6b2eff799 (org.apache.kafka.common.utils.AppInfoParser)
[2017-06-16 20:49:12,132] INFO [Kafka Server 0], started (kafka.server.KafkaServer)
```

Kafka has now started successfully; the last log line, `[Kafka Server 0], started`, confirms it.
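The same check can be automated by searching the broker's output for that line. A minimal sketch, assuming the output was saved to a file (for example logs/server.log under the Kafka directory, which is an assumption about the stock log4j setup); `broker_started` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if LOGFILE contains the
# "[Kafka Server N], started" line for broker id N (default 0).
broker_started() {
  local logfile="$1" id="${2:-0}"
  grep -Eq "\[Kafka Server ${id}\], started" "$logfile"
}

# Usage (path is an assumption):
#   broker_started /usr/local/kafka/kafka/logs/server.log 0 && echo "broker 0 is up"
```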

Testing

Create a topic:

```
[root@localhost bin]# sh kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic sunwukong
Created topic "sunwukong".
```

The topic was created. Now list the topics to check:

```
[root@localhost bin]# sh kafka-topics.sh --list --zookeeper localhost:2181
sunwukong
```
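In a script, the listing can be checked instead of read by eye, since `--list` prints one topic name per line. A minimal sketch; `topic_listed` is a hypothetical name and reads the listing from stdin:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if TOPIC appears as a whole line on stdin.
# -x matches whole lines and -F disables regex, so "sunwukong" does not
# match "sunwukong2".
topic_listed() {
  grep -qxF -- "$1"
}

# Usage (from kafka/bin):
#   sh kafka-topics.sh --list --zookeeper localhost:2181 | topic_listed sunwukong && echo "topic exists"
```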

Send messages. Each line typed into the console producer is sent as one message:

```
[root@localhost bin]# sh kafka-console-producer.sh --broker-list localhost:9092 --topic sunwukong
hello sunwukong,haha
this is a test message
```
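Because the console producer reads its messages from stdin, the same messages can be sent non-interactively through a pipe. A minimal sketch; `send_messages` and the `KAFKA_BIN` variable (the path to kafka/bin, defaulting to the current directory) are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: send each remaining argument as one message.
# KAFKA_BIN is an assumed variable pointing at kafka/bin.
send_messages() {
  local brokers="$1" topic="$2"
  shift 2
  printf '%s\n' "$@" |
    sh "${KAFKA_BIN:-.}/kafka-console-producer.sh" --broker-list "$brokers" --topic "$topic"
}

# Usage (from kafka/bin):
#   send_messages localhost:9092 sunwukong "hello sunwukong,haha" "this is a test message"
```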

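Receive the messages with the console consumer:

```
[root@localhost bin]# sh kafka-console-consumer.sh --zookeeper localhost:2181 --topic sunwukong --from-beginning
Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].
hello sunwukong,haha
this is a test message
```

The warning is harmless: `--zookeeper` selects the old consumer. To use the new consumer, pass `--bootstrap-server localhost:9092` instead.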
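For a scripted check, the consumer can use the new consumer and exit after a fixed number of messages instead of waiting indefinitely. A minimal sketch; `read_messages` and `KAFKA_BIN` (the path to kafka/bin) are hypothetical, while `--bootstrap-server`, `--from-beginning`, and `--max-messages` are console-consumer options:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read COUNT messages from the start of TOPIC using
# the new consumer, then exit. KAFKA_BIN is an assumed variable.
read_messages() {
  local brokers="$1" topic="$2" count="$3"
  sh "${KAFKA_BIN:-.}/kafka-console-consumer.sh" \
    --bootstrap-server "$brokers" --topic "$topic" \
    --from-beginning --max-messages "$count"
}

# Usage (from kafka/bin):
#   read_messages localhost:9092 sunwukong 2
```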

Cluster Configuration

To deploy several brokers on a single node, give each broker its own id, listening port, and log directory. For example:

Single-Node, Multi-Broker Cluster

Copy the broker configuration (from the kafka directory):

```
cp config/server.properties config/server-1.properties
```

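Edit server-1.properties. The stock server.properties already sets `log.dirs=/tmp/kafka-logs`, and `log.dirs` takes precedence over `log.dir`, so change the existing `log.dirs` line rather than adding `log.dir`:

```
config/server-1.properties:
    broker.id=1
    port=9093
    log.dirs=/tmp/kafka-logs-1
```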
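Generating server-N.properties avoids editing each copy by hand. A minimal sketch, assuming the source file has uncommented `broker.id=` and `log.dirs=` lines and that `listeners` stays commented out (the log above shows `listeners = null`), since `port` is ignored once `listeners` is set; `make_broker_config` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy SRC to DST with a new broker.id, log.dirs,
# and port. Any existing port= line is dropped and the new one appended.
make_broker_config() {
  local src="$1" dst="$2" id="$3" port="$4" logdir="$5"
  sed -e "s|^broker\.id=.*|broker.id=${id}|" \
      -e "s|^log\.dirs=.*|log.dirs=${logdir}|" \
      -e '/^port=/d' \
      "$src" > "$dst" || return 1
  printf 'port=%s\n' "$port" >> "$dst"
}

# Usage (from the kafka directory):
#   make_broker_config config/server.properties config/server-1.properties 1 9093 /tmp/kafka-logs-1
#   make_broker_config config/server.properties config/server-2.properties 2 9094 /tmp/kafka-logs-2
```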

Start the new broker (from kafka/bin):

```
sh kafka-server-start.sh ../config/server-1.properties &
```

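Start any further brokers the same way as in the single-node setup. I used VMs: you can clone three VMs to simulate the installation and configuration.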

Multi-Node, Multi-Broker Cluster

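Install Kafka on each node as described above, and configure and start one ZooKeeper instance per node. Configure zookeeper.properties as follows:

```
initLimit=5
syncLimit=2
server.1=192.168.1.102:2888:3888
server.2=192.168.1.103:2888:3888
server.3=192.168.1.104:2888:3888
```

Each ZooKeeper node also needs a `myid` file in its `dataDir` containing its own server number (1, 2, or 3), matching its `server.N` line.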
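The per-node ZooKeeper setup (ensemble lines plus `myid`) can be scripted so the ids stay consistent with the `server.N` order. A minimal sketch, assuming zookeeper.properties already has a `dataDir=` line (the stock file does); `configure_zk_node` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the ensemble settings to PROPS and write
# MYID into the dataDir that PROPS points at.
# Remaining arguments are the ensemble hosts, in server.N order.
configure_zk_node() {
  local props="$1" myid="$2" datadir host n=1
  shift 2
  datadir="$(sed -n 's/^dataDir=//p' "$props" | tail -n 1)"
  [ -n "$datadir" ] || { echo "no dataDir in $props" >&2; return 1; }
  {
    echo "initLimit=5"
    echo "syncLimit=2"
    for host in "$@"; do
      echo "server.${n}=${host}:2888:3888"
      n=$((n + 1))
    done
  } >> "$props" || return 1
  mkdir -p "$datadir" && printf '%s\n' "$myid" > "$datadir/myid"
}

# Usage on 192.168.1.103 (server.2), from the kafka directory:
#   configure_zk_node config/zookeeper.properties 2 192.168.1.102 192.168.1.103 192.168.1.104
```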

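Configure the Kafka service on each machine with a different broker.id, and set zookeeper.connect in server.properties to all three ZooKeeper nodes:

```
zookeeper.connect=192.168.1.102:2181,192.168.1.103:2181,192.168.1.104:2181
```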
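Building zookeeper.connect from the same host list used for the ZooKeeper ensemble helps avoid typos such as one address repeated three times. A minimal sketch; `zk_connect_string` and `set_cluster_props` are hypothetical names, and the in-place edit uses GNU `sed -i`, as shipped with CentOS 7:

```bash
#!/usr/bin/env bash
# Hypothetical helper: join hosts as host:port,host:port,...
zk_connect_string() {
  local port="$1" out="" host
  shift
  for host in "$@"; do
    out="${out:+$out,}${host}:${port}"
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: set broker.id and zookeeper.connect in PROPS in place.
set_cluster_props() {
  local props="$1" id="$2" connect="$3"
  sed -i -e "s|^broker\.id=.*|broker.id=${id}|" \
         -e "s|^zookeeper\.connect=.*|zookeeper.connect=${connect}|" "$props"
}

# Usage on the second machine (from the kafka directory):
#   set_cluster_props config/server.properties 2 \
#     "$(zk_connect_string 2181 192.168.1.102 192.168.1.103 192.168.1.104)"
```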

Finally, start the ZooKeeper service and then the Kafka service on each machine, and verify the cluster.
