Linear Regression and Polynomial Regression (TensorFlow Implementation)

2017-08-07 11:58


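# Linear regression
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (placeholders and sessions)
import matplotlib.pyplot as plt

# 100 evenly spaced points on [-3, 3]; targets are sin(x) plus uniform noise in [-0.5, 0.5]
n_observations = 100
xs = np.linspace(-3, 3, n_observations)
ys = np.sin(xs) + np.random.uniform(-0.5, 0.5, n_observations)
plt.scatter(xs, ys)
plt.show()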
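No random seed is set in the data step, so each run draws fresh noise and the fitted W and b will differ slightly between runs. A minimal pure-Python sketch of the same data generation makes the structure explicit: 100 evenly spaced points on [-3, 3] (both endpoints included, as with `np.linspace`), with targets sin(x) plus uniform noise in [-0.5, 0.5]. The helper name `make_data` and the fixed seed are illustrative assumptions, not part of the original code.

```python
import math
import random


def make_data(n=100, lo=-3.0, hi=3.0, noise=0.5, seed=0):
    """Hypothetical helper mirroring np.linspace + np.random.uniform."""
    rng = random.Random(seed)  # assumed fixed seed so runs are reproducible
    step = (hi - lo) / (n - 1)  # linspace spacing: both endpoints are included
    xs = [lo + i * step for i in range(n)]
    ys = [math.sin(x) + rng.uniform(-noise, noise) for x in xs]
    return xs, ys


xs, ys = make_data()
print(len(xs), xs[0], xs[-1])
```

With NumPy, calling `np.random.seed(...)` before generating `ys` would serve the same purpose in the original script.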

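X = tf.placeholder(tf.float32, name='X')
Y = tf.placeholder(tf.float32, name='Y')

# Declare the two trainable parameters, weight and bias (randomly initialized)
W = tf.Variable(tf.random_normal([1]), name='weight')
b = tf.Variable(tf.random_normal([1]), name='bias')

Y_pred = tf.add(tf.multiply(X, W), b, name='y_pred')
# Squared error for the single (x, y) pair fed at each step
loss = tf.square(Y - Y_pred, name='loss')

learning_rate = 0.01
# With its L1/L2 regularization strengths left at the default 0.0,
# the proximal optimizer performs plain gradient descent updates
optimizer = tf.train.ProximalGradientDescentOptimizer(learning_rate).minimize(loss)

n_samples = xs.shape[0]

# Create the initializer op; the variables only get values once it is run in a session
init = tf.global_variables_initializer()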
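Because every `sess.run` call feeds a single (x, y) pair, each optimizer step is one stochastic gradient descent update on the per-sample loss (y - (Wx + b))^2, whose gradients are 2(Wx + b - y)x for W and 2(Wx + b - y) for b. The sketch below reproduces that loop without TensorFlow, assuming plain gradient descent (which is what the proximal optimizer does with zero regularization). The function name `sgd_linear` and the noise-free test line y = 2x + 1 are illustrative assumptions.

```python
def sgd_linear(xs, ys, lr=0.01, epochs=50, w=0.0, b=0.0):
    """Per-sample SGD on (y - (w*x + b))**2, one update per (x, y) pair."""
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            err = (w * x + b) - y  # prediction minus target, computed once
            # d/dw err**2 = 2*err*x ; d/db err**2 = 2*err (both use the same err)
            w -= lr * 2.0 * err * x
            b -= lr * 2.0 * err
    return w, b


# Noise-free check: 100 points on y = 2x + 1 over [-3, 3]
xs = [-3.0 + 6.0 * i / 99 for i in range(100)]
ys = [2.0 * x + 1.0 for x in xs]
w, b = sgd_linear(xs, ys)
print(w, b)  # both very close to 2.0 and 1.0
```

Computing `err` once and applying both updates from it matches how TensorFlow evaluates all gradients before applying them in a single `minimize` step.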

with tf.Session() as sess:
    sess.run(init)
    writer = tf.summary.FileWriter('./graphs/linear_reg', sess.graph)
    for i in range(50):
        total_loss = 0
        # One optimizer step per (x, y) pair
        for x, y in zip(xs, ys):
            _, l = sess.run([optimizer, loss], feed_dict={X: x, Y: y})
            total_loss += l
        if i % 5 == 0:
            print('Epoch {0}: {1}'.format(i, total_loss / n_samples))
    writer.close()

    # The variables must be evaluated with sess.run to read out the learned W and b
    W, b = sess.run([W, b])
    print(W, b)
    print("W:" + str(W[0]))
    print("b:" + str(b[0]))

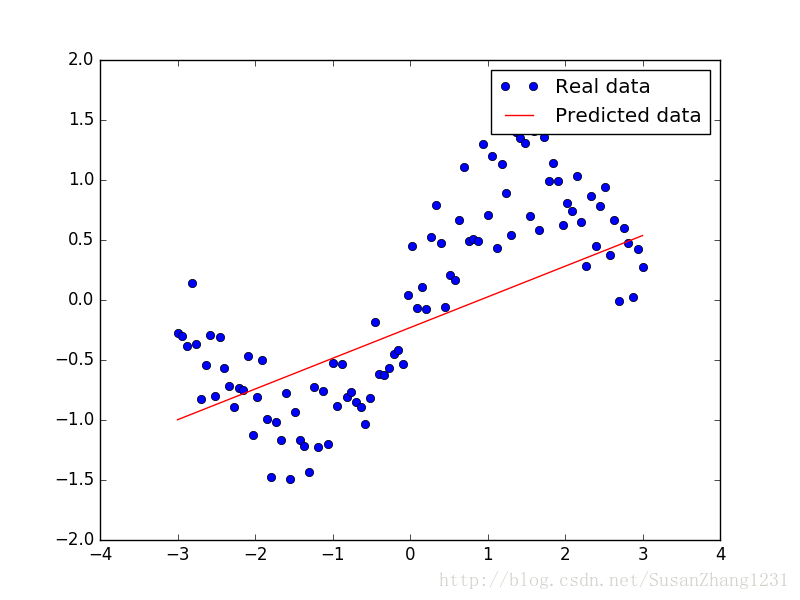

plt.plot(xs, ys, 'bo', label='Real data')
plt.plot(xs, xs * W + b, 'r', label='Predicted data')
plt.legend()
plt.show()
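For a single input, the least-squares line also has a closed form, W = Σ(x - x̄)(y - ȳ) / Σ(x - x̄)² and b = ȳ - W·x̄, which gives a convenient reference for the W and b printed after training. This is the standard ordinary-least-squares formula, not part of the original code; `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ~ W*x + b with a single feature."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    W = sxy / sxx
    b = y_mean - W * x_mean
    return W, b


# Noise-free check: points on y = 2x + 1 over [-3, 3]
xs = [-3.0 + 6.0 * i / 99 for i in range(100)]
ys = [2.0 * x + 1.0 for x in xs]
print(fit_line(xs, ys))  # approximately (2.0, 1.0)
```

Applied to the script's noisy `xs` and `ys`, the closed-form values should land near the SGD estimates; constant-step per-sample SGD keeps fluctuating in a small neighbourhood of the least-squares solution rather than settling exactly on it.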

# Polynomial regression
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt

n_observation = 100
xs = np.linspace(-3, 3, n_observation)
ys = np.sin(xs) + np.random.uniform(-0.5, 0.5, n_observation)
plt.scatter(xs, ys)
plt.show()

X = tf.placeholder(tf.float32, name="X")
Y = tf.placeholder(tf.float32, name="Y")

# Build Y_pred = b + W*X + W_2*X^2 + W_3*X^3 one term at a time
W = tf.Variable(tf.random_uniform([1]), name="weights")
b = tf.Variable(tf.random_uniform([1]), name="bias")
Y_pred = tf.add(tf.multiply(X, W), b)

W_2 = tf.Variable(tf.random_uniform([1]), name="weights_2")
Y_pred = tf.add(tf.multiply(tf.pow(X, 2), W_2), Y_pred)

W_3 = tf.Variable(tf.random_uniform([1]), name="weights_3")
# Cubic term: the exponent must be 3 (not 2) to match the x^3 term used when plotting
Y_pred = tf.add(tf.multiply(tf.pow(X, 3), W_3), Y_pred)

sample_num = xs.shape[0]
loss = tf.reduce_sum(tf.pow(Y_pred - Y, 2)) / sample_num

learning_rate = 0.01
optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss)

init = tf.global_variables_initializer()
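Written out, the cubic model this graph is meant to build (consistent with the plotting line `np.power(xs,3)*W_3` at the end of the script) and the per-step loss are as follows, where W_1 denotes the variable `W` and N is `sample_num`:

```latex
\hat{y}(x) = b + W_1 x + W_2 x^2 + W_3 x^3,
\qquad
\mathcal{L}_i = \frac{\left(\hat{y}(x_i) - y_i\right)^2}{N},
\qquad N = 100

\frac{\partial \mathcal{L}_i}{\partial W_k} = \frac{2}{N}\left(\hat{y}(x_i) - y_i\right) x_i^{k}\quad (k = 1, 2, 3),
\qquad
\frac{\partial \mathcal{L}_i}{\partial b} = \frac{2}{N}\left(\hat{y}(x_i) - y_i\right)
```

Since each step feeds one pair (x_i, y_i), `reduce_sum` runs over a single element, and dividing by N scales every gradient by 1/N. With `learning_rate = 0.01`, the effective step on the plain squared error is 0.01 / 100 = 10^-4, which is worth keeping in mind when comparing the 1000-epoch schedule here with the 50 epochs used for the linear model.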

with tf.Session() as sess:

sess.run(init)

writer=tf.summary.FileWriter('./graphs/polynomial_reg',sess.graph)

for i in range(1000):

total_loss=0

for x,y in zip(xs,ys):

_,l=sess.run([optimizer,loss],feed_dict={X:x,Y:y})

total_loss+=l

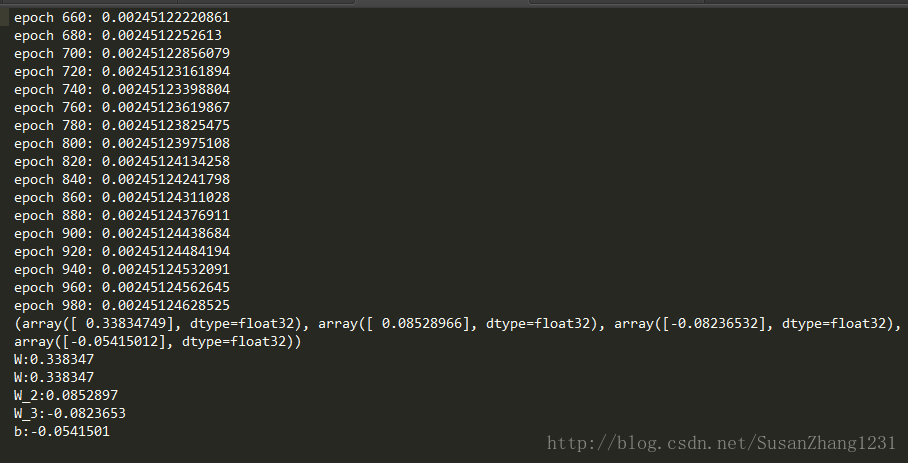

if i%20 ==0:

print ('epoch {0}: {1}'.format(i,total_loss/sample_num))

writer.close()

W, W_2, W_3, b=sess.run([W, W_2, W_3, b])

print(W, W_2, W_3, b)

print ("W:" + str(W[0]))

# print(W, b)

print ("W:" + str(W[0]))

print ("W_2:" + str(W_2[0]))

print ("W_3:" + str(W_3[0]))

print ("b:" + str(b[0]))

plt.plot(xs, ys, 'bo', label='real_data')
plt.plot(xs, xs * W + np.power(xs, 2) * W_2 + np.power(xs, 3) * W_3 + b, 'r', label='Predicted data')
plt.legend()
plt.show()
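As a cross-check on the learned W, W_2, W_3 and b, the cubic least-squares fit can also be computed directly by solving the 4×4 normal equations (AᵀA)θ = Aᵀy, where each row of A is [1, x, x², x³]. This pure-Python sketch uses Gaussian elimination with partial pivoting; `fit_poly` and `solve` are hypothetical helper names. With NumPy available, `np.polyfit(xs, ys, 3)` performs the same fit but returns the coefficients highest degree first.

```python
def solve(M, v):
    """Solve M @ t = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    A = [row[:] + [v[i]] for i, row in enumerate(M)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= f * A[col][c]
    t = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(A[r][c] * t[c] for c in range(r + 1, n))
        t[r] = (A[r][n] - s) / A[r][r]
    return t


def fit_poly(xs, ys, degree=3):
    """Least-squares polynomial fit; returns [b, W, W_2, W_3] (lowest power first)."""
    k = degree + 1
    # Normal equations (A^T A) theta = A^T y, with A[i][j] = xs[i] ** j
    ata = [[sum(x ** (i + j) for x in xs) for j in range(k)] for i in range(k)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(k)]
    return solve(ata, aty)


# Noise-free check on a known cubic over [-3, 3]
xs = [-3.0 + 6.0 * i / 99 for i in range(100)]
ys = [1.0 + 2.0 * x - 0.5 * x ** 2 + 0.1 * x ** 3 for x in xs]
print(fit_poly(xs, ys))  # approximately [1.0, 2.0, -0.5, 0.1]
```

On the script's noisy sin(x) data, the gradient-descent coefficients should approach these closed-form values only as training converges, so a visible gap after 1000 epochs would suggest the 10^-4 effective step is still too small.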
