How to use Sqoop to import/export data

2016-11-25 09:35


Sqoop is a tool designed for efficiently transferring data between an RDBMS and HDFS. We can easily import data from MySQL, Oracle, and other databases into HDFS, and we can export data from HDFS back into a database. For details, refer to the Sqoop documentation.

Before using Sqoop, make sure it is installed and set up correctly.

Sqoop - Import

The general syntax of the import command is:

sqoop import (generic-args) (import-args)

Suppose we have a table named stock_info with the following schema:

Case 1: use the command below to import the stock_info data into HDFS:

sqoop import --connect jdbc:mysql://host:port/dbname --username loginuser --password loginuser --table stock_info -m 1

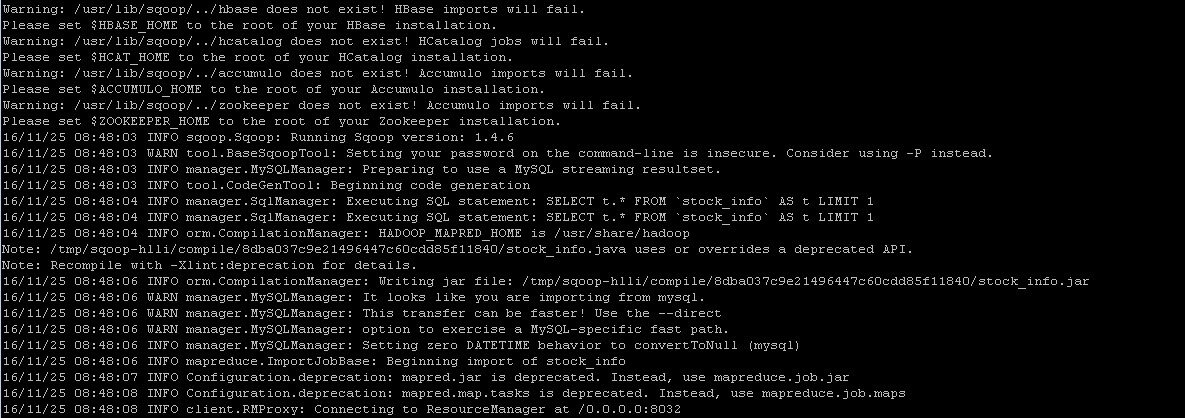

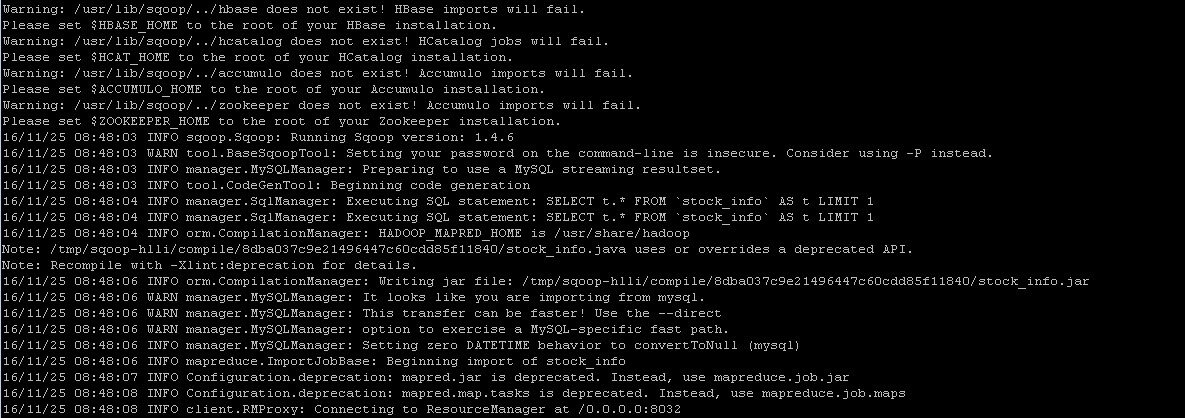

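The result looks like:

We can verify the result in HDFS by running the command below. Without --target-dir, Sqoop writes to a directory named after the table under the user's HDFS home directory:

hadoop fs -cat stock_info/part-m-*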
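As a quick sanity check, the number of imported records can be compared with the row count of the source table. The following is a minimal sketch, assuming the import was written as text files with one record per line; the function name count_imported_rows is made up for this example.

```shell
# Count the records Sqoop wrote under an HDFS directory.
# Assumes text output where each record occupies exactly one line.
count_imported_rows() {
    hdfs_dir=$1
    # Quote the glob so that HDFS expands it, not the local shell.
    hadoop fs -cat "$hdfs_dir/part-m-*" | wc -l | tr -d ' '
}
```

For example, `count_imported_rows stock_info` should print the same number as `select count(*) from stock_info;` in MySQL, provided the table did not change in between.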

Case 2: specify the target directory in HDFS with --target-dir:

sqoop import --connect jdbc:mysql://host:port/dbname --username loginuser --password loginuser --table stock_info -m 1 --target-dir /temp

Then verify the result with the same hadoop fs -cat command as above, using /temp as the directory.
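Passing --password on the command line leaves the password visible in the shell history and the process list. Sqoop also accepts --password-file, which reads the password from a file. The sketch below wraps the Case 2 import in a small function; import_table and its parameter names are hypothetical, and the password file path is whatever you choose. Note that Sqoop uses the entire file content as the password, so the file should not end with a newline.

```shell
# Sketch: import one table into a chosen HDFS directory without putting
# the password on the command line. All argument values are placeholders.
import_table() {
    jdbc_url=$1      # e.g. jdbc:mysql://host:port/dbname
    db_user=$2       # database login
    pw_file=$3       # file that contains only the password
    table=$4         # source table name
    target_dir=$5    # HDFS directory to write to

    sqoop import \
        --connect "$jdbc_url" \
        --username "$db_user" \
        --password-file "$pw_file" \
        --table "$table" \
        --target-dir "$target_dir" \
        -m 1
}
```

A call would look like `import_table jdbc:mysql://host:port/dbname loginuser /user/hlli/.mysql.pw stock_info /temp`, where the password file path is only an example.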

Case 3: incremental import, using the --incremental, --check-column and --append arguments. Replace last_chg_date with the appropriate timestamp column when applying this to other tables.

sqoop import --connect jdbc:mysql://host:port/dbname --username loginuser --password loginuser --table stock_info -m 1 --target-dir /temp --incremental lastmodified --check-column last_chg_date --append
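Sqoop can also remember where the previous incremental run stopped. A saved job, created with sqoop job --create, records the last imported value of the check column and reuses it the next time the job is executed. The sketch below shows one way to set this up; the function names and the job name are hypothetical, and it assumes a password file so that the job can run without prompting.

```shell
# Sketch: define the Case 3 import as a saved Sqoop job.
create_incremental_job() {
    job_name=$1      # any name you like, e.g. stock_info_incr
    jdbc_url=$2      # e.g. jdbc:mysql://host:port/dbname
    db_user=$3       # database login
    pw_file=$4       # file that contains only the password

    sqoop job --create "$job_name" -- import \
        --connect "$jdbc_url" \
        --username "$db_user" \
        --password-file "$pw_file" \
        --table stock_info \
        --target-dir /temp \
        --incremental lastmodified \
        --check-column last_chg_date \
        --append \
        -m 1
}

# Run the saved job; Sqoop updates the stored last value after a successful run.
run_incremental_job() {
    sqoop job --exec "$1"
}
```

After creating the job once, each later call to `run_incremental_job stock_info_incr` should only pick up rows whose last_chg_date is newer than the previous run.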

Case 4: write the output as Parquet files by adding the --as-parquetfile argument:

sqoop import --connect jdbc:mysql://host:port/dbname --username loginuser --password loginuser --table stock_info -m 1 --target-dir /temp --incremental lastmodified --check-column last_chg_date --append --as-parquetfile

Case 5: import all tables

sqoop import-all-tables --connect jdbc:mysql://host:port/dbname --username loginuser --password loginuser
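When importing a whole database it is often useful to choose the parent directory in HDFS and to skip some tables. The sketch below uses the --warehouse-dir and --exclude-tables arguments of import-all-tables; the function name and all argument values are hypothetical. It keeps a single mapper, as in the cases above, so tables do not need to be split across mappers.

```shell
# Sketch: import every table of a database except a few, under one HDFS parent directory.
import_all_tables() {
    jdbc_url=$1        # e.g. jdbc:mysql://host:port/dbname
    db_user=$2         # database login
    pw_file=$3         # file that contains only the password
    warehouse_dir=$4   # HDFS parent directory; one subdirectory per table
    excluded=$5        # comma-separated list of tables to skip

    sqoop import-all-tables \
        --connect "$jdbc_url" \
        --username "$db_user" \
        --password-file "$pw_file" \
        --warehouse-dir "$warehouse_dir" \
        --exclude-tables "$excluded" \
        -m 1
}
```

For instance, `import_all_tables jdbc:mysql://host:port/dbname loginuser /user/hlli/.mysql.pw /warehouse audit_log,tmp_data` would import everything except the two named tables, which are made-up names here.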

Sqoop - Export

Export means writing data from HDFS back into MySQL, Oracle, or another database. The general syntax is:

sqoop export (generic-args) (export-args)

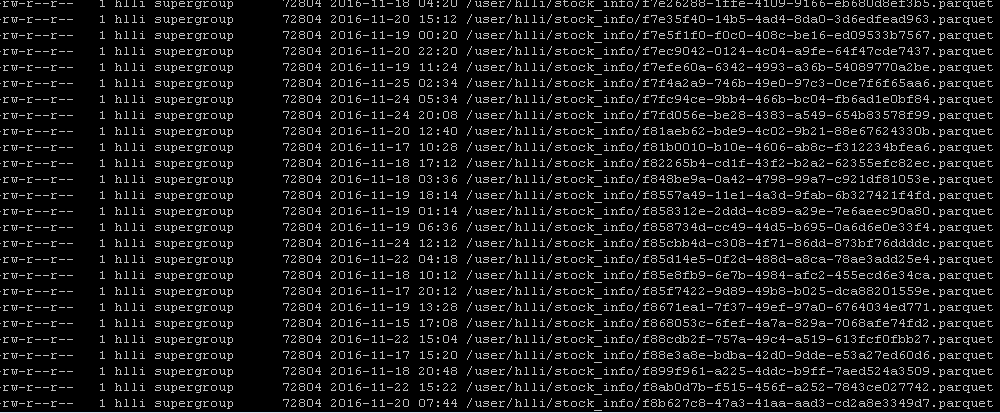

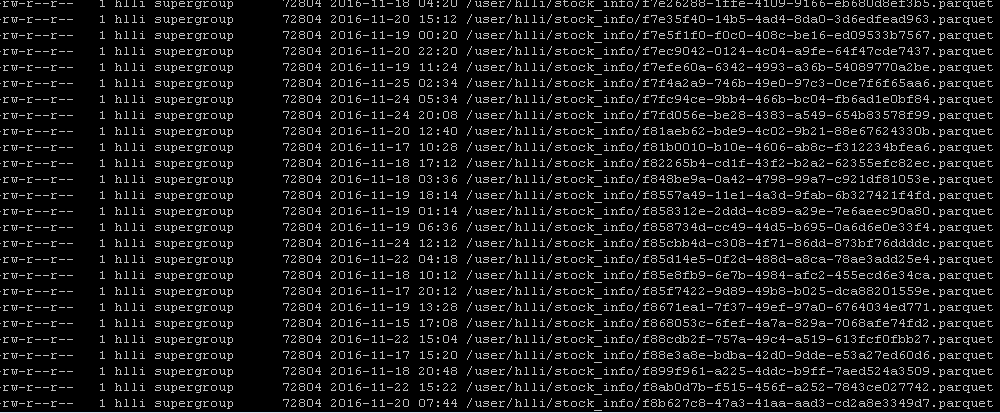

Suppose the stock_info folder contains many Parquet files produced by the incremental sqoop import command.

To write this data back into the MySQL database, use the following command:

sqoop export --connect jdbc:mysql://host:port/dbname --username loginuser --password loginuser --table stock_info --export-dir /user/hlli/stock_info

Finally, verify the data from the MySQL command line:

select * from stock_info;
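An export fails late if the export directory does not exist, so it can help to check for it first. The following sketch does that with hadoop fs -test -d before calling sqoop export; export_table and its parameters are hypothetical names, and the target table is assumed to exist already in the database.

```shell
# Sketch: export an HDFS directory into a database table,
# but only if the directory actually exists in HDFS.
export_table() {
    jdbc_url=$1      # e.g. jdbc:mysql://host:port/dbname
    db_user=$2       # database login
    pw_file=$3       # file that contains only the password
    table=$4         # existing target table
    export_dir=$5    # HDFS directory holding the data files

    if ! hadoop fs -test -d "$export_dir"; then
        echo "export directory not found in HDFS: $export_dir" >&2
        return 1
    fi

    sqoop export \
        --connect "$jdbc_url" \
        --username "$db_user" \
        --password-file "$pw_file" \
        --table "$table" \
        --export-dir "$export_dir"
}
```

With the values used above, the call would be `export_table jdbc:mysql://host:port/dbname loginuser /user/hlli/.mysql.pw stock_info /user/hlli/stock_info`.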


Importing data incrementally

We can use the Linux scheduler crontab to run the import periodically. First create a crontab file for your user (replace hlli with your own user name):

cd /var/spool/cron

touch hlli

vi hlli

Then add an entry such as the following, which runs the incremental import every 5 minutes:

*/5 * * * * /usr/lib/sqoop/bin/sqoop import --connect jdbc:mysql://host:port/dbname --username loginuser --password loginuser --table stock_info -m 1 --target-dir /temp --incremental lastmodified --check-column last_chg_date --append --as-parquetfile

If it works, cron mails the job output to /var/spool/mail/hlli. We can also check the imported data by running:

hadoop fs -ls /
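Cron mail is easy to overlook, so it can be convenient to keep the output of each run in a log file as well. The sketch below is one possible wrapper: it runs any command, appends its output to a log file together with a timestamp, and returns the command's exit status. The function name run_logged and the log path in the usage note are hypothetical.

```shell
# Sketch: run a command and append its output and exit status to a log file.
run_logged() {
    log_file=$1
    shift
    status=0
    {
        echo "[$(date '+%Y-%m-%d %H:%M:%S')] start: $*"
        # Run the command; remember a non-zero exit status instead of aborting.
        "$@" || status=$?
        echo "[$(date '+%Y-%m-%d %H:%M:%S')] exit status: $status"
    } >>"$log_file" 2>&1
    return "$status"
}
```

A script called from cron could define this function and end with a line such as `run_logged /home/hlli/sqoop_import.log /usr/lib/sqoop/bin/sqoop import ...`, followed by the same arguments as in the crontab entry.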

Commonly used Sqoop commands

sqoop help import

sqoop help export

sqoop help job

sqoop help codegen

sqoop help eval

sqoop help list-tables

sqoop help list-databases

sqoop help import-all-tables
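Two of these tools are handy before an import: list-tables shows which tables the login can see, and eval runs a SQL statement and prints the result. The sketch below wraps both; the function names are hypothetical, and the LIMIT clause assumes a database such as MySQL that supports it.

```shell
# Sketch: list the tables visible to the given login.
list_db_tables() {
    sqoop list-tables \
        --connect "$1" \
        --username "$2" \
        --password-file "$3"
}

# Sketch: print the first few rows of a table to check the connection and the data.
preview_table() {
    sqoop eval \
        --connect "$1" \
        --username "$2" \
        --password-file "$3" \
        --query "SELECT * FROM $4 LIMIT 5"
}
```

For example, `preview_table jdbc:mysql://host:port/dbname loginuser /user/hlli/.mysql.pw stock_info` prints up to five rows of stock_info.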

References:

http://sqoop.apache.org/

http://man.linuxde.net/crontab
