UFLDL Tutorial Answers (7): Exercise: Learning color features with Sparse Autoencoders

2015-12-23 21:09


Tutorial: http://deeplearning.stanford.edu/wiki/index.php/%E7%BA%BF%E6%80%A7%E8%A7%A3%E7%A0%81%E5%99%A8

Exercise: http://deeplearning.stanford.edu/wiki/index.php/Exercise:Learning_color_features_with_Sparse_Autoencoders

PCA 白化处理的输入并不满足[0,1] 范围要求,也不清楚是否有最好的办法可以将数据缩放到特定范围中。

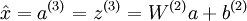

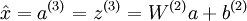

a(3) = f(z(3)) =z(3)

线性激励函数(上式)可以解决这个问题。需要注意,神经网络中隐含层的神经元依然使用S型(或者tanh)激励函数。

改变W(2) ,可以使输出值a(3)

大于 1 或者小于 0,不用再缩放输入数据。

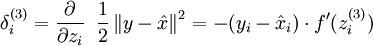

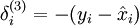

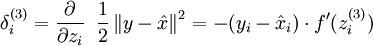

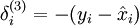

末尾层残差:

linear decoder (a sparse autoencoder whose output layer uses a linear activation function). You will then apply it to learn features on color images from the STL-10 dataset. These features will be used in an laterexercise

on convolution and pooling for classifying STL-10 images.

对于彩色图片:

You can just combine the intensities from all the color channels for the pixels into one long vector, as if you were working with a grayscale image with 3x the number of pixels as the original image.

save('STL10Features.mat', 'optTheta', 'ZCAWhite', 'meanPatch'); 这几个被保存了起来,后面练习会用到。

结果图:

练习地址:http://deeplearning.stanford.edu/wiki/index.php/Exercise:Learning_color_features_with_Sparse_Autoencoders

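1. Key points

The sigmoid activation function has output range [0,1]. When $f(z^{(3)})$ uses it, the inputs must be constrained or scaled to lie in [0,1], because an autoencoder's target output is its own input. Some datasets, such as MNIST, fit this scaling easily. Others are hard to fit: after PCA whitening, for example, the input no longer lies in [0,1], and it is not clear what the best way to scale the data into a fixed range would be.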

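$a^{(3)} = f(z^{(3)}) = z^{(3)}$

The linear activation function above solves this problem. Note that the neurons in the hidden layer still use a sigmoid (or tanh) activation function.

By varying $W^{(2)}$, the output $a^{(3)}$ can take values greater than 1 or less than 0, so the input data no longer needs to be rescaled.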
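To make the range argument concrete, here is a minimal sketch comparing the two output activations. The values in `z3` are hypothetical and chosen only for illustration.

```matlab
% Minimal sketch: sigmoid vs. linear activation on the output layer.
% The pre-activation values below are hypothetical.
sigmoid = @(z) 1 ./ (1 + exp(-z));

z3 = [-2.0; 0.5; 3.0];        % hypothetical output-layer pre-activations
a3_sigmoid = sigmoid(z3);     % always strictly between 0 and 1
a3_linear  = z3;              % linear decoder: a3 = z3, unbounded

% Columns: z3, sigmoid output, linear output
disp([z3, a3_sigmoid, a3_linear]);
```

With a sigmoid output, targets such as -2 or 3 can never be reconstructed exactly; with the linear output they can.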

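Residual (error term) of the output layer. Because the linear activation has derivative 1:

$\delta^{(3)} = -(x - a^{(3)}) \cdot f'(z^{(3)}) = a^{(3)} - x$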
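With a linear activation, the output-layer residual reduces to `a3 - x`, which can be checked numerically with a central difference. The sketch below does this on a tiny example; the vectors `x` and `z3` are hypothetical values, not data from the exercise.

```matlab
% Numerical check that d/dz3 of 0.5*sum((x - a3).^2) equals (a3 - x) when a3 = z3.
x  = [0.3; -1.2; 2.5];             % hypothetical targets, deliberately outside [0,1]
z3 = [0.1;  0.4; 1.7];             % hypothetical output-layer pre-activations
J  = @(z) 0.5 * sum((x - z).^2);   % squared error with linear output a3 = z

epsilon = 1e-4;
numgrad = zeros(size(z3));
for i = 1:numel(z3)
    e = zeros(size(z3));
    e(i) = epsilon;
    numgrad(i) = (J(z3 + e) - J(z3 - e)) / (2 * epsilon);   % central difference
end

delta3 = z3 - x;                   % analytic residual (a3 - x with a3 = z3)
disp(max(abs(numgrad - delta3)));  % should be very close to zero
```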

2. The exercise

In this exercise, you will implement a linear decoder (a sparse autoencoder whose output layer uses a linear activation function). You will then apply it to learn features on color images from the STL-10 dataset. These features will be used in a later exercise on convolution and pooling for classifying STL-10 images.

For color images:

You can just combine the intensities from all the color channels for the pixels into one long vector, as if you were working with a grayscale image with 3x the number of pixels as the original image.
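A minimal sketch of that flattening follows. The 8x8 patch size and the random pixel values are assumptions made for illustration; only the idea of stacking the three channels into one vector comes from the text above.

```matlab
% Flatten a color patch into one long vector, as for a grayscale image
% with 3x the number of pixels. Patch size and pixel values are hypothetical.
patchDim = 8;
imageChannels = 3;
patch = rand(patchDim, patchDim, imageChannels);   % stand-in for a real RGB patch

x = patch(:);   % column-major: all of channel 1, then channel 2, then channel 3
visibleSize = patchDim * patchDim * imageChannels; % length of x

% The flattening is reversible, which is handy when visualizing learned features
restored = reshape(x, patchDim, patchDim, imageChannels);
```

The autoencoder then sees `x` as an ordinary input vector of length `visibleSize` and needs no knowledge of the channel layout.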

Step 1: Modify your sparse autoencoder to use a linear decoder

Only a few places needed changing:

```matlab
% Unpack the parameter vector theta into weights and biases
W1 = reshape(theta(1:hiddenSize*visibleSize), hiddenSize, visibleSize);
W2 = reshape(theta(hiddenSize*visibleSize+1:2*hiddenSize*visibleSize), visibleSize, hiddenSize);
b1 = theta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize);
b2 = theta(2*hiddenSize*visibleSize+hiddenSize+1:end);

cost = 0;
W1grad = zeros(size(W1));
W2grad = zeros(size(W2));
b1grad = zeros(size(b1));
b2grad = zeros(size(b2));

m = size(data, 2);
B1 = repmat(b1, 1, m);
B2 = repmat(b2, 1, m);

% Forward pass
z2 = W1*data + B1;
a2 = sigmoid(z2);   % hiddenSize x m
z3 = W2*a2 + B2;
a3 = z3;            % visibleSize x m; changed: linear activation on the output layer

% Cost: reconstruction error + weight decay + sparsity penalty
rho = sparsityParam;
rho_hat = sum(a2, 2)/m;
KL = rho.*log(rho./rho_hat) + (1-rho).*log((1-rho)./(1-rho_hat));
cost = 1/m*sum(sum((data-a3).^2)/2) + lambda/2*(sum(sum(W1.^2)) + sum(sum(W2.^2))) + beta*sum(KL);

% Backpropagation
delta_sparsity = beta*(-rho./rho_hat + ((1-rho)./(1-rho_hat)));
delta3 = (a3 - data);   % changed: the derivative of the linear activation is 1
delta2 = (W2'*delta3 + repmat(delta_sparsity, 1, m)).*a2.*(1-a2);

W2grad = delta3*a2'/m + lambda*W2;
W1grad = delta2*data'/m + lambda*W1;
b2grad = sum(delta3, 2)/m;
b1grad = sum(delta2, 2)/m;

grad = [W1grad(:) ; W2grad(:) ; b1grad(:) ; b2grad(:)];
```
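In equation form, the code above amounts to the following. This is a restatement of the code rather than text from the tutorial: $m$ is the number of examples, $\odot$ is the elementwise product, and the superscript $(i)$ indexes examples.

```latex
\begin{aligned}
\hat\rho &= \frac{1}{m}\sum_{i=1}^{m} a^{(2)(i)} \\
J &= \frac{1}{2m}\sum_{i=1}^{m}\bigl\lVert x^{(i)} - a^{(3)(i)}\bigr\rVert^2
   + \frac{\lambda}{2}\Bigl(\lVert W^{(1)}\rVert_F^2 + \lVert W^{(2)}\rVert_F^2\Bigr)
   + \beta\sum_{j}\Bigl[\rho\log\frac{\rho}{\hat\rho_j} + (1-\rho)\log\frac{1-\rho}{1-\hat\rho_j}\Bigr] \\
\delta^{(3)(i)} &= a^{(3)(i)} - x^{(i)} \\
\delta^{(2)(i)} &= \Bigl((W^{(2)})^{T}\delta^{(3)(i)}
   + \beta\Bigl(-\frac{\rho}{\hat\rho} + \frac{1-\rho}{1-\hat\rho}\Bigr)\Bigr)
   \odot a^{(2)(i)} \odot \bigl(1 - a^{(2)(i)}\bigr) \\
\nabla_{W^{(2)}} J &= \frac{1}{m}\sum_{i=1}^{m}\delta^{(3)(i)}\bigl(a^{(2)(i)}\bigr)^{T} + \lambda W^{(2)},
\qquad \nabla_{b^{(2)}} J = \frac{1}{m}\sum_{i=1}^{m}\delta^{(3)(i)} \\
\nabla_{W^{(1)}} J &= \frac{1}{m}\sum_{i=1}^{m}\delta^{(2)(i)}\bigl(x^{(i)}\bigr)^{T} + \lambda W^{(1)},
\qquad \nabla_{b^{(1)}} J = \frac{1}{m}\sum_{i=1}^{m}\delta^{(2)(i)}
\end{aligned}
```

The only differences from the sigmoid-output autoencoder are $a^{(3)} = z^{(3)}$ and the missing $f'(z^{(3)})$ factor in $\delta^{(3)}$. The hidden layer keeps its $a^{(2)} \odot (1 - a^{(2)})$ factor because it is still sigmoid.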

Step 2: Learn features on small patches
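The names `ZCAWhite` and `meanPatch` saved in this step suggest that the patches are mean-centered and ZCA-whitened before training. The sketch below shows one common way to compute such quantities. The random data, the small sizes, and the regularization constant `epsilon` are all assumptions for illustration and are not values confirmed by this write-up.

```matlab
% Sketch of ZCA whitening; random data stands in for the STL-10 patches.
visibleSize = 12;                 % hypothetical (small, for a quick run)
numPatches  = 500;                % hypothetical
patches = rand(visibleSize, numPatches);
epsilon = 0.1;                    % assumed regularization constant

meanPatch = mean(patches, 2);                    % per-dimension mean
patches   = bsxfun(@minus, patches, meanPatch);  % zero-mean the data
sigma     = patches * patches' / numPatches;     % covariance matrix
[u, s, ~] = svd(sigma);
ZCAWhite  = u * diag(1 ./ sqrt(diag(s) + epsilon)) * u';
patchesWhite = ZCAWhite * patches;               % whitened training data

% New data needs the same preprocessing before the learned weights are applied
newPatch = rand(visibleSize, 1);
newPatchWhite = ZCAWhite * (newPatch - meanPatch);
```

The whitened values are centered on zero and so fall outside [0,1], which is why this step is paired with a linear decoder rather than a sigmoid output layer.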

save('STL10Features.mat', 'optTheta', 'ZCAWhite', 'meanPatch'); These variables are saved because later exercises use them.

Result figure:
