Very Brief Introduction to Machine Learning for AI

2014-08-15 09:22

Intelligence

The notion of intelligence can be defined in many ways. Here we define it as the ability to take the right decisions, according to some criterion (e.g. survival and reproduction, for most animals). To take better decisions requires knowledge, in a form that is operational, i.e., can be used to interpret sensory data and use that information to take decisions.

Artificial Intelligence

Computers already possess some intelligence thanks to all the programs that humans have crafted and which allow them to “do things” that we consider useful (and that is basically what we mean for a computer to take the right decisions). But there are many tasks which animals and humans are able to do rather easily but which remain out of reach of computers, at the beginning of the 21st century. Many of these tasks fall under the label of Artificial Intelligence, and include many perception and control tasks. Why is it that we have failed to write programs for these tasks? I believe that it is mostly because we do not know explicitly (formally) how to do these tasks, even though our brain (coupled with a body) can do them. Doing those tasks involves knowledge that is currently implicit, but we have information about those tasks through data and examples (e.g. observations of what a human would do given a particular request or input). How do we get machines to acquire that kind of intelligence? Using data and examples to build operational knowledge is what learning is about.

Machine Learning

Machine learning has a long history and numerous textbooks have been written that do a good job of covering its main principles. Among the recent ones I suggest:

- Chris Bishop, “Pattern Recognition and Machine Learning”, 2007

- Simon Haykin, “Neural Networks: a Comprehensive Foundation”, 2009 (3rd edition)

- Richard O. Duda, Peter E. Hart and David G. Stork, “Pattern Classification”, 2001 (2nd edition)

Here we focus on a few concepts that are most relevant to this course.

Formalization of Learning

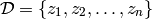

First, let us formalize the most common mathematical framework for learning.We are given training examples

with the

being examples sampled from an

unknown process

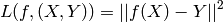

.We are also given a loss functional

which takes as argumenta decision function

and an example

, and returnsa real-valued scalar. We want to minimize the expected value of

under the unknown generating process

.
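Since the generating process is unknown, the expected loss cannot be computed directly; in practice it is approximated by the average loss over the training examples. The sketch below illustrates that idea only. The generating process (`sample_example`), the squared loss, and the two candidate decision functions are assumptions made up for the illustration, and each example is taken to be an (input, target) pair.

```python
import random

def squared_loss(f, z):
    """Loss L(f, z) for an example z = (x, y): squared error of f(x) against y."""
    x, y = z
    return (f(x) - y) ** 2

def empirical_risk(loss, f, examples):
    """Average of loss(f, z_i) over the training set D = {z_1, ..., z_n}."""
    return sum(loss(f, z) for z in examples) / len(examples)

def sample_example(rng):
    """Hypothetical generating process P(Z): y = 2x plus Gaussian noise."""
    x = rng.uniform(-1.0, 1.0)
    return (x, 2.0 * x + rng.gauss(0.0, 0.1))

rng = random.Random(0)
D = [sample_example(rng) for _ in range(1000)]

good_f = lambda x: 2.0 * x   # matches the hypothetical process up to noise
bad_f = lambda x: 0.0        # ignores the input entirely

risk_good = empirical_risk(squared_loss, good_f, D)
risk_bad = empirical_risk(squared_loss, bad_f, D)
print(risk_good, risk_bad)   # the first value should be much smaller
```

A learner only ever sees `D`, so it can compare decision functions through `empirical_risk`; how well that average tracks the true expected loss is exactly the generalization question discussed later.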

Supervised Learning

In supervised learning, each examples is an (input,target) pair:

and

takes an

as argument.The most common examples are

regression:

is a real-valued scalar or vector, the output of

is in the same set of values as

, and we oftentake as loss functional the squared error

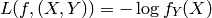

classification:

is a finite integer (e.g. a symbol) corresponding toa class index, and we often take

as loss function the negative conditional log-likelihood,with the interpretation that

estimates

:

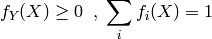

where we have the constraints
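The two losses can be written out in a few lines. This is a minimal sketch in plain Python. The `softmax` helper is an assumption added here: it is one common way to turn arbitrary scores into outputs that are non-negative and sum to 1, not something the text prescribes.

```python
import math

def squared_error(prediction, target):
    """Regression loss ||f(X) - Y||^2 for vector-valued outputs."""
    return sum((p - t) ** 2 for p, t in zip(prediction, target))

def softmax(scores):
    """Map real-valued scores to probabilities that are >= 0 and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def negative_log_likelihood(probs, target_class):
    """Classification loss -log f_Y(X), where probs[i] estimates P(Y = i | X)."""
    return -math.log(probs[target_class])

# Hypothetical classifier scores for one input and three classes.
probs = softmax([2.0, 0.5, -1.0])
loss_if_class_0 = negative_log_likelihood(probs, 0)  # target is the likeliest class
loss_if_class_2 = negative_log_likelihood(probs, 2)  # target is the least likely class
```

The loss is small when the classifier puts high probability on the observed class and grows without bound as that probability approaches zero.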

Unsupervised Learning

In unsupervised learning we are learning a function $f$ which helps to characterize the unknown distribution $P(Z)$. Sometimes $f$ is directly an estimator of $P(Z)$ itself (this is called density estimation). In many other cases $f$ is an attempt to characterize where the density concentrates. Clustering algorithms divide up the input space in regions (often centered around a prototype example or centroid). Some clustering algorithms create a hard partition (e.g. the k-means algorithm) while others construct a soft partition (e.g. a Gaussian mixture model) which assigns to each $Z$ a probability of belonging to each cluster. Another kind of unsupervised learning algorithm constructs a new representation for $Z$. Many deep learning algorithms fall in this category, and so does Principal Components Analysis.
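The difference between a hard and a soft partition can be seen on a one-dimensional toy case. This sketch assumes the centroids are already known (no fitting is performed). The soft assignment also assumes equal mixing weights and a shared standard deviation `sigma`, which is a simplification of a full Gaussian mixture model.

```python
import math

def hard_assign(z, centroids):
    """k-means style: index of the nearest centroid (a hard partition)."""
    return min(range(len(centroids)), key=lambda k: abs(z - centroids[k]))

def soft_assign(z, centroids, sigma=1.0):
    """Gaussian-mixture style: probability of each cluster given z.

    Assumes equal mixing weights and a shared standard deviation sigma.
    """
    weights = [math.exp(-0.5 * ((z - c) / sigma) ** 2) for c in centroids]
    total = sum(weights)
    return [w / total for w in weights]

centroids = [0.0, 4.0]   # hypothetical, already-learned prototypes
z = 1.5

hard = hard_assign(z, centroids)   # a single cluster index
soft = soft_assign(z, centroids)   # one probability per cluster
```

The hard assignment commits to one cluster, while the soft assignment keeps a small but non-zero probability for the farther one.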

Local Generalization

The vast majority of learning algorithms exploit a single principle for achieving generalization: local generalization. It assumes that if input example $x_i$ is close to input example $x_j$, then the corresponding outputs $f(x_i)$ and $f(x_j)$ should also be close. This is basically the principle used to perform local interpolation. This principle is very powerful, but it has limitations: what if we have to extrapolate? Or equivalently, what if the target unknown function has many more variations than the number of training examples? In that case there is no way that local generalization will work, because we need at least as many examples as there are ups and downs of the target function, in order to cover those variations and be able to generalize by this principle.

This issue is deeply connected to the so-called curse of dimensionality for the following reason. When the input space is high-dimensional, it is easy for it to have a number of variations of interest that is exponential in the number of input dimensions. For example, imagine that we want to distinguish between 10 different values of each input variable (each element of the input vector), and that we care about all the $10^d$ configurations of these $d$ variables. Using only local generalization, we need to see at least one example of each of these $10^d$ configurations in order to be able to generalize to all of them.
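To get a feel for how quickly the number of configurations outgrows any realistic training set, the sketch below draws a fixed budget of random examples and measures what fraction of the grid of configurations they cover. The uniform sampling, the budget of 10,000 examples, and the function name are assumptions chosen for illustration.

```python
import random

def fraction_of_cells_covered(d, n_examples, values_per_dim=10, seed=0):
    """Fraction of the values_per_dim**d grid cells hit by n random examples."""
    rng = random.Random(seed)
    seen = {tuple(rng.randrange(values_per_dim) for _ in range(d))
            for _ in range(n_examples)}
    return len(seen) / values_per_dim ** d

# Same budget of examples, increasing input dimension.
coverage = {d: fraction_of_cells_covered(d, n_examples=10_000)
            for d in (1, 2, 3, 6)}
for d, frac in coverage.items():
    print(f"d={d}: {10 ** d} configurations, fraction seen = {frac:.4f}")
```

With six input variables there are a million configurations, so 10,000 examples can cover at most 1% of them. A purely local learner has nothing to go on for the rest.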

Distributed versus Local Representation and Non-Local Generalization

A simple-minded binary local representation of integer $N$ is a sequence of $B$ bits such that $N < B$, and all bits are 0 except the $N$-th one. A simple-minded binary distributed representation of integer $N$ is a sequence of $\log_2 B$ bits with the usual binary encoding for $N$. In this example we see that distributed representations can be exponentially more efficient than local ones. In general, for learning algorithms, distributed representations have the potential to capture exponentially more variations than local ones for the same number of free parameters. They hence offer the potential for better generalization because learning theory shows that the number of examples needed (to achieve a desired degree of generalization performance) to tune $O(B)$ effective degrees of freedom is $O(B)$.
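The two encodings are easy to compare side by side. In this sketch the function names are made up for illustration, bit positions are counted from 0, and the distributed code rounds the number of bits up to a whole number when the number of representable integers is not a power of two.

```python
def local_code(n, b):
    """Local (one-hot) code: b bits, all 0 except the n-th; requires n < b."""
    if not 0 <= n < b:
        raise ValueError("n must satisfy 0 <= n < b")
    return [1 if i == n else 0 for i in range(b)]

def distributed_code(n, b):
    """Usual binary code for n in ceil(log2(b)) bits, most significant bit first."""
    if not 0 <= n < b:
        raise ValueError("n must satisfy 0 <= n < b")
    n_bits = max(1, (b - 1).bit_length())
    return [(n >> i) & 1 for i in reversed(range(n_bits))]

print(local_code(5, 8))         # [0, 0, 0, 0, 0, 1, 0, 0]
print(distributed_code(5, 8))   # [1, 0, 1]
```

For 1024 possible integers the local code needs 1024 bits and the distributed code needs 10, which is the exponential gap described above.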

Another illustration of the difference between distributed and local representation (and corresponding local and non-local generalization) is with (traditional) clustering versus Principal Component Analysis (PCA) or Restricted Boltzmann Machines (RBMs). The former is local while the latter two are distributed. With k-means clustering we maintain a vector of parameters for each prototype, i.e., one for each of the regions distinguishable by the learner. With PCA we represent the distribution by keeping track of its major directions of variation. Now imagine a simplified interpretation of PCA in which we care mostly, for each direction of variation, whether the projection of the data in that direction is above or below a threshold. With $d$ directions, we can thus distinguish between $2^d$ regions. RBMs are similar in that they define $d$ hyper-planes and associate a bit to an indicator of being on one side or the other of each hyper-plane. An RBM therefore associates one input region to each configuration of the representation bits (these bits are called the hidden units, in neural network parlance). The number of parameters of the RBM is roughly equal to the number of these bits times the input dimension.

Again, we see that the number of regions representable by an RBM or a PCA (distributed representation) can grow exponentially in the number of parameters, whereas the number of regions representable by traditional clustering (e.g. k-means or Gaussian mixture, local representation) grows only linearly with the number of parameters. Another way to look at this is to realize that an RBM can generalize to a new region corresponding to a configuration of its hidden unit bits for which no example was seen, something not possible for clustering algorithms (except in the trivial sense of locally generalizing to that new region what has been learned for the nearby regions for which examples have been seen).
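The region-counting argument can be checked on a small case. The sketch below is not an RBM or PCA implementation. It only uses hand-picked, axis-aligned hyper-planes (an assumption that makes every sign pattern reachable) and compares the number of regions they distinguish with the number of prototypes that the same parameter budget would buy a clustering method.

```python
import itertools

def sign_code(x, hyperplanes):
    """Distributed code: one bit per hyper-plane (w, b), set when w.x + b > 0."""
    return tuple(int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)
                 for w, b in hyperplanes)

d = 4  # number of hyper-planes, and also the input dimension in this toy case

# Hypothetical hyper-planes: the i-th one thresholds coordinate i at 0.
hyperplanes = [([1.0 if j == i else 0.0 for j in range(d)], 0.0)
               for i in range(d)]

# One probe point in each orthant of the input space.
points = list(itertools.product([-1.0, 1.0], repeat=d))
distinct_codes = {sign_code(x, hyperplanes) for x in points}

n_parameters = d * (d + 1)                  # d weight vectors of size d, plus d offsets
regions_distributed = len(distinct_codes)   # one region per bit configuration
regions_local = n_parameters // d           # prototypes of size d for the same budget

print(n_parameters, regions_distributed, regions_local)
```

Doubling `d` squares the number of regions on the distributed side, while the number of prototypes on the local side grows only in proportion to the parameter count.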
