Linux内核--网络栈实现分析(二)--数据包的传递过程(上)

2012-04-24 12:42

621 查看

本文分析基于Linux Kernel 1.2.13

原创作品,转载请标明http://blog.csdn.net/yming0221/article/details/7492423

更多请看专栏,地址http://blog.csdn.net/column/details/linux-kernel-net.html

作者:闫明

注:标题中的”(上)“,”(下)“表示分析过程基于数据包的传递方向:”(上)“表示分析是从底层向上分析、”(下)“表示分析是从上向下分析。

上一篇博文中我们从宏观上分析了Linux内核中网络栈的初始化过程,这里我们再从宏观上分析一下一个数据包在各网络层的传递的过程。

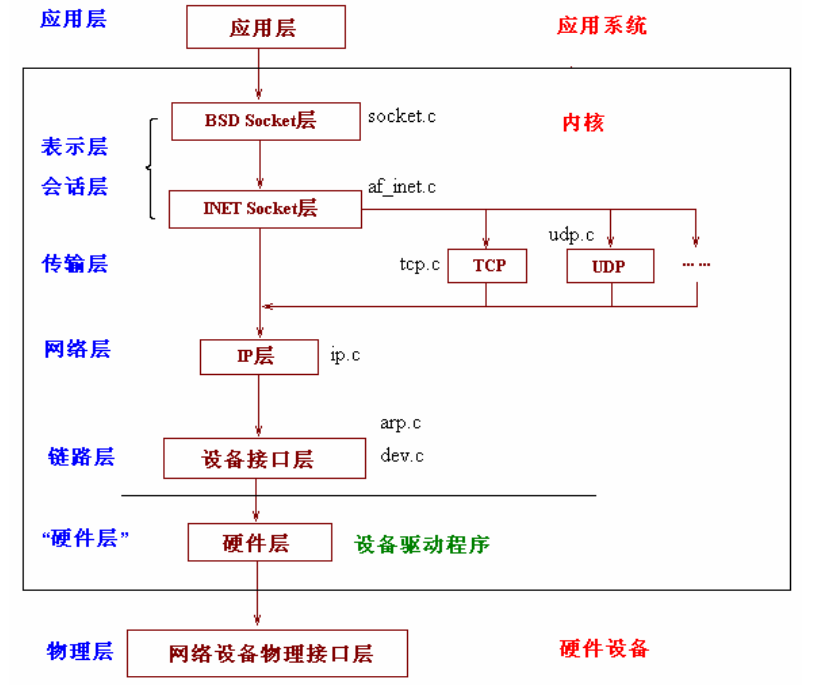

我们知道网络的OSI模型和TCP/IP模型层次结构如下:

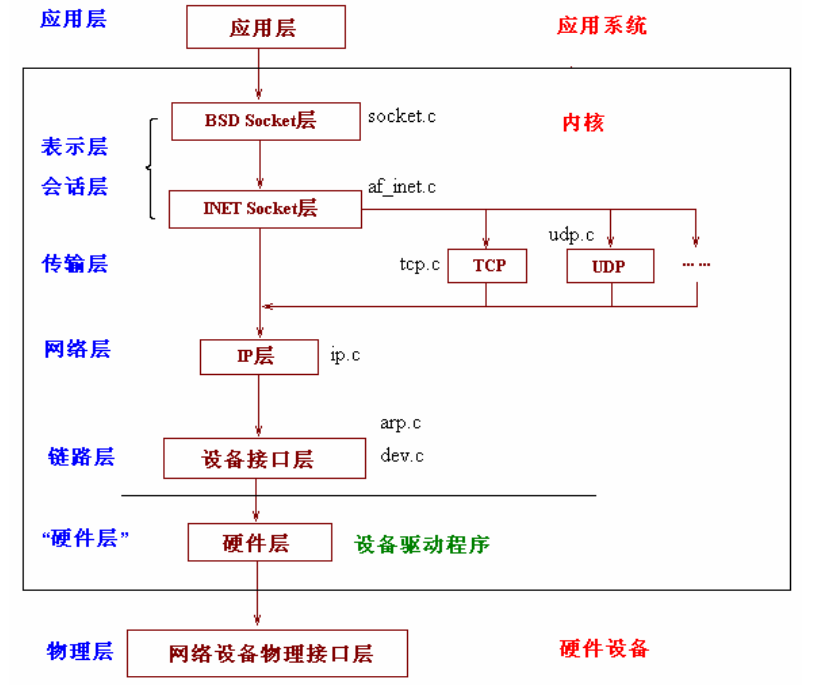

上文中我们看到了网络栈的层次结构:

我们就从最底层开始追溯一个数据包的传递流程。

1、网络接口层

* 硬件监听物理介质,进行数据的接收,当接收的数据填满了缓冲区,硬件就会产生中断,中断产生后,系统会转向中断服务子程序。

* 在中断服务子程序中,数据会从硬件的缓冲区复制到内核的空间缓冲区,并包装成一个数据结构(sk_buff),然后调用对驱动层的接口函数netif_rx()将数据包发送给链路层。该函数的实现在net/inet/dev.c中,(在整个网络栈实现中dev.c文件的作用重大,它衔接了其下的驱动层和其上的网络层,可以称它为链路层模块的实现)

该函数的实现如下:

该函数中用到了bootom half技术,该技术的原理是将中断处理程序人为的分为两部分,上半部分是实时性要求较高的任务,后半部分可以稍后完成,这样就可以节省中断程序的处理时间。可整体的提高系统的性能。该技术将会在后续的博文中详细分析。

我们从上一篇分析中知道,在网络栈初始化的时候,已经将NET的下半部分执行函数定义成了net_bh(在socket.c文件中1375行左右)

* 函数net_bh的实现在net/inet/dev.c中

2、网络层

* 就以IP数据包为例来说明,那么从链路层向网络层传递时将调用ip_rcv函数。该函数完成本层的处理后会根据IP首部中使用的传输层协议来调用相应协议的处理函数。UDP对应udp_rcv、TCP对应tcp_rcv、ICMP对应icmp_rcv、IGMP对应igmp_rcv(虽然这里的ICMP,IGMP一般成为网络层协议,但是实际上他们都封装在IP协议里面,作为传输层对待)

这个函数比较复杂,后续会详细分析。这里粘贴一下,让我们对整体了解更清楚

3、传输层

如果在IP数据报的首部标明的是使用TCP传输数据,则在上述函数中会调用tcp_rcv函数。该函数的大体处理流程为:

“所有使用TCP 协议的套接字对应sock 结构都被挂入tcp_prot 全局变量表示的proto 结构之sock_array 数组中,采用以本地端口号为索引的插入方式,所以当tcp_rcv 函数接收到一个数据包,在完成必要的检查和处理后,其将以TCP 协议首部中目的端口号(对于一个接收的数据包而言,其目的端口号就是本地所使用的端口号)为索引,在tcp_prot 对应sock 结构之sock_array 数组中得到正确的sock 结构队列,在辅之以其他条件遍历该队列进行对应sock 结构的查询,在得到匹配的sock 结构后,将数据包挂入该sock 结构中的缓存队列中(由sock 结构中receive_queue 字段指向),从而完成数据包的最终接收。”

该函数的实现也会比较复杂,这是由TCP协议的复杂功能决定的。附代码如下:

4、应用层

当用户需要接收数据时,首先根据文件描述符inode得到socket结构和sock结构,然后从sock结构中指向的队列recieve_queue中读取数据包,将数据包COPY到用户空间缓冲区。数据就完整的从硬件中传输到用户空间。这样也完成了一次完整的从下到上的传输。

原创作品,转载请标明http://blog.csdn.net/yming0221/article/details/7492423

更多请看专栏,地址http://blog.csdn.net/column/details/linux-kernel-net.html

作者:闫明

注:标题中的”(上)“,”(下)“表示分析过程基于数据包的传递方向:”(上)“表示分析是从底层向上分析、”(下)“表示分析是从上向下分析。

上一篇博文中我们从宏观上分析了Linux内核中网络栈的初始化过程,这里我们再从宏观上分析一下一个数据包在各网络层的传递的过程。

我们知道网络的OSI模型和TCP/IP模型层次结构如下:

上文中我们看到了网络栈的层次结构:

我们就从最底层开始追溯一个数据包的传递流程。

1、网络接口层

* 硬件监听物理介质,进行数据的接收,当接收的数据填满了缓冲区,硬件就会产生中断,中断产生后,系统会转向中断服务子程序。

* 在中断服务子程序中,数据会从硬件的缓冲区复制到内核的空间缓冲区,并包装成一个数据结构(sk_buff),然后调用对驱动层的接口函数netif_rx()将数据包发送给链路层。该函数的实现在net/inet/dev.c中,(在整个网络栈实现中dev.c文件的作用重大,它衔接了其下的驱动层和其上的网络层,可以称它为链路层模块的实现)

该函数的实现如下:

/*

* Receive a packet from a device driver and queue it for the upper

* (protocol) levels. It always succeeds. This is the recommended

* interface to use.

* 从设备驱动层接受到的数据发送到协议的

* 上层,该函数实际是一个接口。

*/

void netif_rx(struct sk_buff *skb)

{

static int dropping = 0;

/*

* Any received buffers are un-owned and should be discarded

* when freed. These will be updated later as the frames get

* owners.

*/

skb->sk = NULL;

skb->free = 1;

if(skb->stamp.tv_sec==0)

skb->stamp = xtime;

/*

* Check that we aren't overdoing things.

*/

if (!backlog_size)

dropping = 0;

else if (backlog_size > 300)

dropping = 1;

if (dropping)

{

kfree_skb(skb, FREE_READ);

return;

}

/*

* Add it to the "backlog" queue.

*/

#ifdef CONFIG_SKB_CHECK

IS_SKB(skb);

#endif

skb_queue_tail(&backlog,skb);//加入队列backlog

backlog_size++;

/*

* If any packet arrived, mark it for processing after the

* hardware interrupt returns.

*/

mark_bh(NET_BH);//下半部分bottom half技术可以减少中断处理程序的执行时间

return;

}该函数中用到了bootom half技术,该技术的原理是将中断处理程序人为的分为两部分,上半部分是实时性要求较高的任务,后半部分可以稍后完成,这样就可以节省中断程序的处理时间。可整体的提高系统的性能。该技术将会在后续的博文中详细分析。

我们从上一篇分析中知道,在网络栈初始化的时候,已经将NET的下半部分执行函数定义成了net_bh(在socket.c文件中1375行左右)

bh_base[NET_BH].routine= net_bh;//设置NET 下半部分的处理函数为net_bh

* 函数net_bh的实现在net/inet/dev.c中

/*

* When we are called the queue is ready to grab, the interrupts are

* on and hardware can interrupt and queue to the receive queue a we

* run with no problems.

* This is run as a bottom half after an interrupt handler that does

* mark_bh(NET_BH);

*/

void net_bh(void *tmp)

{

struct sk_buff *skb;

struct packet_type *ptype;

struct packet_type *pt_prev;

unsigned short type;

/*

* Atomically check and mark our BUSY state.

*/

if (set_bit(1, (void*)&in_bh))//标记BUSY状态

return;

/*

* Can we send anything now? We want to clear the

* decks for any more sends that get done as we

* process the input.

*/

dev_transmit();//调用dev_tinit()函数发送数据

/*

* Any data left to process. This may occur because a

* mark_bh() is done after we empty the queue including

* that from the device which does a mark_bh() just after

*/

cli();//防止队列操作错误,需要关中断和开中断

/*

* While the queue is not empty

*/

while((skb=skb_dequeue(&backlog))!=NULL)//出队直到队列为空

{

/*

* We have a packet. Therefore the queue has shrunk

*/

backlog_size--;//队列元素个数减一

sti();

/*

* Bump the pointer to the next structure.

* This assumes that the basic 'skb' pointer points to

* the MAC header, if any (as indicated by its "length"

* field). Take care now!

*/

skb->h.raw = skb->data + skb->dev->hard_header_len;

skb->len -= skb->dev->hard_header_len;

/*

* Fetch the packet protocol ID. This is also quite ugly, as

* it depends on the protocol driver (the interface itself) to

* know what the type is, or where to get it from. The Ethernet

* interfaces fetch the ID from the two bytes in the Ethernet MAC

* header (the h_proto field in struct ethhdr), but other drivers

* may either use the ethernet ID's or extra ones that do not

* clash (eg ETH_P_AX25). We could set this before we queue the

* frame. In fact I may change this when I have time.

*/

type = skb->dev->type_trans(skb, skb->dev);//取出该数据包所属的协议类型

/*

* We got a packet ID. Now loop over the "known protocols"

* table (which is actually a linked list, but this will

* change soon if I get my way- FvK), and forward the packet

* to anyone who wants it.

*

* [FvK didn't get his way but he is right this ought to be

* hashed so we typically get a single hit. The speed cost

* here is minimal but no doubt adds up at the 4,000+ pkts/second

* rate we can hit flat out]

*/

pt_prev = NULL;

for (ptype = ptype_base; ptype != NULL; ptype = ptype->next) //遍历ptype_base所指向的网络协议队列

{

//判断协议号是否匹配

if ((ptype->type == type || ptype->type == htons(ETH_P_ALL)) && (!ptype->dev || ptype->dev==skb->dev))

{

/*

* We already have a match queued. Deliver

* to it and then remember the new match

*/

if(pt_prev)

{

struct sk_buff *skb2;

skb2=skb_clone(skb, GFP_ATOMIC);//复制数据包结构

/*

* Kick the protocol handler. This should be fast

* and efficient code.

*/

if(skb2)

pt_prev->func(skb2, skb->dev, pt_prev);//调用相应协议的处理函数,

//这里和网络协议的种类有关系

//如IP 协议的处理函数就是ip_rcv

}

/* Remember the current last to do */

pt_prev=ptype;

}

} /* End of protocol list loop */

/*

* Is there a last item to send to ?

*/

if(pt_prev)

pt_prev->func(skb, skb->dev, pt_prev);

/*

* Has an unknown packet has been received ?

*/

else

kfree_skb(skb, FREE_WRITE);

/*

* Again, see if we can transmit anything now.

* [Ought to take this out judging by tests it slows

* us down not speeds us up]

*/

dev_transmit();

cli();

} /* End of queue loop */

/*

* We have emptied the queue

*/

in_bh = 0;//BUSY状态还原

sti();

/*

* One last output flush.

*/

dev_transmit();//清空缓冲区

}2、网络层

* 就以IP数据包为例来说明,那么从链路层向网络层传递时将调用ip_rcv函数。该函数完成本层的处理后会根据IP首部中使用的传输层协议来调用相应协议的处理函数。UDP对应udp_rcv、TCP对应tcp_rcv、ICMP对应icmp_rcv、IGMP对应igmp_rcv(虽然这里的ICMP,IGMP一般成为网络层协议,但是实际上他们都封装在IP协议里面,作为传输层对待)

这个函数比较复杂,后续会详细分析。这里粘贴一下,让我们对整体了解更清楚

/*

* This function receives all incoming IP datagrams.

*/

int ip_rcv(struct sk_buff *skb, struct device *dev, struct packet_type *pt)

{

struct iphdr *iph = skb->h.iph;

struct sock *raw_sk=NULL;

unsigned char hash;

unsigned char flag = 0;

unsigned char opts_p = 0; /* Set iff the packet has options. */

struct inet_protocol *ipprot;

static struct options opt; /* since we don't use these yet, and they

take up stack space. */

int brd=IS_MYADDR;

int is_frag=0;

#ifdef CONFIG_IP_FIREWALL

int err;

#endif

ip_statistics.IpInReceives++;

/*

* Tag the ip header of this packet so we can find it

*/

skb->ip_hdr = iph;

/*

* Is the datagram acceptable?

*

* 1. Length at least the size of an ip header

* 2. Version of 4

* 3. Checksums correctly. [Speed optimisation for later, skip loopback checksums]

* (4. We ought to check for IP multicast addresses and undefined types.. does this matter ?)

*/

if (skb->len<sizeof(struct iphdr) || iph->ihl<5 || iph->version != 4 ||

skb->len<ntohs(iph->tot_len) || ip_fast_csum((unsigned char *)iph, iph->ihl) !=0)

{

ip_statistics.IpInHdrErrors++;

kfree_skb(skb, FREE_WRITE);

return(0);

}

/*

* See if the firewall wants to dispose of the packet.

*/

#ifdef CONFIG_IP_FIREWALL

if ((err=ip_fw_chk(iph,dev,ip_fw_blk_chain,ip_fw_blk_policy, 0))!=1)

{

if(err==-1)

icmp_send(skb, ICMP_DEST_UNREACH, ICMP_PORT_UNREACH, 0, dev);

kfree_skb(skb, FREE_WRITE);

return 0;

}

#endif

/*

* Our transport medium may have padded the buffer out. Now we know it

* is IP we can trim to the true length of the frame.

*/

skb->len=ntohs(iph->tot_len);

/*

* Next analyse the packet for options. Studies show under one packet in

* a thousand have options....

*/

if (iph->ihl != 5)

{ /* Fast path for the typical optionless IP packet. */

memset((char *) &opt, 0, sizeof(opt));

if (do_options(iph, &opt) != 0)

return 0;

opts_p = 1;

}

/*

* Remember if the frame is fragmented.

*/

if(iph->frag_off)

{

if (iph->frag_off & 0x0020)

is_frag|=1;

/*

* Last fragment ?

*/

if (ntohs(iph->frag_off) & 0x1fff)

is_frag|=2;

}

/*

* Do any IP forwarding required. chk_addr() is expensive -- avoid it someday.

*

* This is inefficient. While finding out if it is for us we could also compute

* the routing table entry. This is where the great unified cache theory comes

* in as and when someone implements it

*

* For most hosts over 99% of packets match the first conditional

* and don't go via ip_chk_addr. Note: brd is set to IS_MYADDR at

* function entry.

*/

if ( iph->daddr != skb->dev->pa_addr && (brd = ip_chk_addr(iph->daddr)) == 0)

{

/*

* Don't forward multicast or broadcast frames.

*/

if(skb->pkt_type!=PACKET_HOST || brd==IS_BROADCAST)

{

kfree_skb(skb,FREE_WRITE);

return 0;

}

/*

* The packet is for another target. Forward the frame

*/

#ifdef CONFIG_IP_FORWARD

ip_forward(skb, dev, is_frag);

#else

/* printk("Machine %lx tried to use us as a forwarder to %lx but we have forwarding disabled!\n",

iph->saddr,iph->daddr);*/

ip_statistics.IpInAddrErrors++;

#endif

/*

* The forwarder is inefficient and copies the packet. We

* free the original now.

*/

kfree_skb(skb, FREE_WRITE);

return(0);

}

#ifdef CONFIG_IP_MULTICAST

if(brd==IS_MULTICAST && iph->daddr!=IGMP_ALL_HOSTS && !(dev->flags&IFF_LOOPBACK))

{

/*

* Check it is for one of our groups

*/

struct ip_mc_list *ip_mc=dev->ip_mc_list;

do

{

if(ip_mc==NULL)

{

kfree_skb(skb, FREE_WRITE);

return 0;

}

if(ip_mc->multiaddr==iph->daddr)

break;

ip_mc=ip_mc->next;

}

while(1);

}

#endif

/*

* Account for the packet

*/

#ifdef CONFIG_IP_ACCT

ip_acct_cnt(iph,dev, ip_acct_chain);

#endif

/*

* Reassemble IP fragments.

*/

if(is_frag)

{

/* Defragment. Obtain the complete packet if there is one */

skb=ip_defrag(iph,skb,dev);

if(skb==NULL)

return 0;

skb->dev = dev;

iph=skb->h.iph;

}

/*

* Point into the IP datagram, just past the header.

*/

skb->ip_hdr = iph;

skb->h.raw += iph->ihl*4;

/*

* Deliver to raw sockets. This is fun as to avoid copies we want to make no surplus copies.

*/

hash = iph->protocol & (SOCK_ARRAY_SIZE-1);

/* If there maybe a raw socket we must check - if not we don't care less */

if((raw_sk=raw_prot.sock_array[hash])!=NULL)

{

struct sock *sknext=NULL;

struct sk_buff *skb1;

raw_sk=get_sock_raw(raw_sk, hash, iph->saddr, iph->daddr);

if(raw_sk) /* Any raw sockets */

{

do

{

/* Find the next */

sknext=get_sock_raw(raw_sk->next, hash, iph->saddr, iph->daddr);

if(sknext)

skb1=skb_clone(skb, GFP_ATOMIC);

else

break; /* One pending raw socket left */

if(skb1)

raw_rcv(raw_sk, skb1, dev, iph->saddr,iph->daddr);

raw_sk=sknext;

}

while(raw_sk!=NULL);

/* Here either raw_sk is the last raw socket, or NULL if none */

/* We deliver to the last raw socket AFTER the protocol checks as it avoids a surplus copy */

}

}

/*

* skb->h.raw now points at the protocol beyond the IP header.

*/

hash = iph->protocol & (MAX_INET_PROTOS -1);

for (ipprot = (struct inet_protocol *)inet_protos[hash];ipprot != NULL;ipprot=(struct inet_protocol *)ipprot->next)

{

struct sk_buff *skb2;

if (ipprot->protocol != iph->protocol)

continue;

/*

* See if we need to make a copy of it. This will

* only be set if more than one protocol wants it.

* and then not for the last one. If there is a pending

* raw delivery wait for that

*/

if (ipprot->copy || raw_sk)

{

skb2 = skb_clone(skb, GFP_ATOMIC);

if(skb2==NULL)

continue;

}

else

{

skb2 = skb;

}

flag = 1;

/*

* Pass on the datagram to each protocol that wants it,

* based on the datagram protocol. We should really

* check the protocol handler's return values here...

*/

ipprot->handler(skb2, dev, opts_p ? &opt : 0, iph->daddr,

(ntohs(iph->tot_len) - (iph->ihl * 4)),

iph->saddr, 0, ipprot);

}

/*

* All protocols checked.

* If this packet was a broadcast, we may *not* reply to it, since that

* causes (proven, grin) ARP storms and a leakage of memory (i.e. all

* ICMP reply messages get queued up for transmission...)

*/

if(raw_sk!=NULL) /* Shift to last raw user */

raw_rcv(raw_sk, skb, dev, iph->saddr, iph->daddr);

else if (!flag) /* Free and report errors */

{

if (brd != IS_BROADCAST && brd!=IS_MULTICAST)

icmp_send(skb, ICMP_DEST_UNREACH, ICMP_PROT_UNREACH, 0, dev);

kfree_skb(skb, FREE_WRITE);

}

return(0);

}3、传输层

如果在IP数据报的首部标明的是使用TCP传输数据,则在上述函数中会调用tcp_rcv函数。该函数的大体处理流程为:

“所有使用TCP 协议的套接字对应sock 结构都被挂入tcp_prot 全局变量表示的proto 结构之sock_array 数组中,采用以本地端口号为索引的插入方式,所以当tcp_rcv 函数接收到一个数据包,在完成必要的检查和处理后,其将以TCP 协议首部中目的端口号(对于一个接收的数据包而言,其目的端口号就是本地所使用的端口号)为索引,在tcp_prot 对应sock 结构之sock_array 数组中得到正确的sock 结构队列,在辅之以其他条件遍历该队列进行对应sock 结构的查询,在得到匹配的sock 结构后,将数据包挂入该sock 结构中的缓存队列中(由sock 结构中receive_queue 字段指向),从而完成数据包的最终接收。”

该函数的实现也会比较复杂,这是由TCP协议的复杂功能决定的。附代码如下:

/*

* A TCP packet has arrived.

*/

int tcp_rcv(struct sk_buff *skb, struct device *dev, struct options *opt,

unsigned long daddr, unsigned short len,

unsigned long saddr, int redo, struct inet_protocol * protocol)

{

struct tcphdr *th;

struct sock *sk;

int syn_ok=0;

if (!skb)

{

printk("IMPOSSIBLE 1\n");

return(0);

}

if (!dev)

{

printk("IMPOSSIBLE 2\n");

return(0);

}

tcp_statistics.TcpInSegs++;

if(skb->pkt_type!=PACKET_HOST)

{

kfree_skb(skb,FREE_READ);

return(0);

}

th = skb->h.th;

/*

* Find the socket.

*/

sk = get_sock(&tcp_prot, th->dest, saddr, th->source, daddr);

/*

* If this socket has got a reset it's to all intents and purposes

* really dead. Count closed sockets as dead.

*

* Note: BSD appears to have a bug here. A 'closed' TCP in BSD

* simply drops data. This seems incorrect as a 'closed' TCP doesn't

* exist so should cause resets as if the port was unreachable.

*/

if (sk!=NULL && (sk->zapped || sk->state==TCP_CLOSE))

sk=NULL;

if (!redo)

{

if (tcp_check(th, len, saddr, daddr ))

{

skb->sk = NULL;

kfree_skb(skb,FREE_READ);

/*

* We don't release the socket because it was

* never marked in use.

*/

return(0);

}

th->seq = ntohl(th->seq);

/* See if we know about the socket. */

if (sk == NULL)

{

/*

* No such TCB. If th->rst is 0 send a reset (checked in tcp_reset)

*/

tcp_reset(daddr, saddr, th, &tcp_prot, opt,dev,skb->ip_hdr->tos,255);

skb->sk = NULL;

/*

* Discard frame

*/

kfree_skb(skb, FREE_READ);

return(0);

}

skb->len = len;

skb->acked = 0;

skb->used = 0;

skb->free = 0;

skb->saddr = daddr;

skb->daddr = saddr;

/* We may need to add it to the backlog here. */

cli();

if (sk->inuse)

{

skb_queue_tail(&sk->back_log, skb);

sti();

return(0);

}

sk->inuse = 1;

sti();

}

else

{

if (sk==NULL)

{

tcp_reset(daddr, saddr, th, &tcp_prot, opt,dev,skb->ip_hdr->tos,255);

skb->sk = NULL;

kfree_skb(skb, FREE_READ);

return(0);

}

}

if (!sk->prot)

{

printk("IMPOSSIBLE 3\n");

return(0);

}

/*

* Charge the memory to the socket.

*/

if (sk->rmem_alloc + skb->mem_len >= sk->rcvbuf)

{

kfree_skb(skb, FREE_READ);

release_sock(sk);

return(0);

}

skb->sk=sk;

sk->rmem_alloc += skb->mem_len;

/*

* This basically follows the flow suggested by RFC793, with the corrections in RFC1122. We

* don't implement precedence and we process URG incorrectly (deliberately so) for BSD bug

* compatibility. We also set up variables more thoroughly [Karn notes in the

* KA9Q code the RFC793 incoming segment rules don't initialise the variables for all paths].

*/

if(sk->state!=TCP_ESTABLISHED) /* Skip this lot for normal flow */

{

/*

* Now deal with unusual cases.

*/

if(sk->state==TCP_LISTEN)

{

if(th->ack) /* These use the socket TOS.. might want to be the received TOS */

tcp_reset(daddr,saddr,th,sk->prot,opt,dev,sk->ip_tos, sk->ip_ttl);

/*

* We don't care for RST, and non SYN are absorbed (old segments)

* Broadcast/multicast SYN isn't allowed. Note - bug if you change the

* netmask on a running connection it can go broadcast. Even Sun's have

* this problem so I'm ignoring it

*/

if(th->rst || !th->syn || th->ack || ip_chk_addr(daddr)!=IS_MYADDR)

{

kfree_skb(skb, FREE_READ);

release_sock(sk);

return 0;

}

/*

* Guess we need to make a new socket up

*/

tcp_conn_request(sk, skb, daddr, saddr, opt, dev, tcp_init_seq());

/*

* Now we have several options: In theory there is nothing else

* in the frame. KA9Q has an option to send data with the syn,

* BSD accepts data with the syn up to the [to be] advertised window

* and Solaris 2.1 gives you a protocol error. For now we just ignore

* it, that fits the spec precisely and avoids incompatibilities. It

* would be nice in future to drop through and process the data.

*/

release_sock(sk);

return 0;

}

/* retransmitted SYN? */

if (sk->state == TCP_SYN_RECV && th->syn && th->seq+1 == sk->acked_seq)

{

kfree_skb(skb, FREE_READ);

release_sock(sk);

return 0;

}

/*

* SYN sent means we have to look for a suitable ack and either reset

* for bad matches or go to connected

*/

if(sk->state==TCP_SYN_SENT)

{

/* Crossed SYN or previous junk segment */

if(th->ack)

{

/* We got an ack, but it's not a good ack */

if(!tcp_ack(sk,th,saddr,len))

{

/* Reset the ack - its an ack from a

different connection [ th->rst is checked in tcp_reset()] */

tcp_statistics.TcpAttemptFails++;

tcp_reset(daddr, saddr, th,

sk->prot, opt,dev,sk->ip_tos,sk->ip_ttl);

kfree_skb(skb, FREE_READ);

release_sock(sk);

return(0);

}

if(th->rst)

return tcp_std_reset(sk,skb);

if(!th->syn)

{

/* A valid ack from a different connection

start. Shouldn't happen but cover it */

kfree_skb(skb, FREE_READ);

release_sock(sk);

return 0;

}

/*

* Ok.. it's good. Set up sequence numbers and

* move to established.

*/

syn_ok=1; /* Don't reset this connection for the syn */

sk->acked_seq=th->seq+1;

sk->fin_seq=th->seq;

tcp_send_ack(sk->sent_seq,sk->acked_seq,sk,th,sk->daddr);

tcp_set_state(sk, TCP_ESTABLISHED);

tcp_options(sk,th);

sk->dummy_th.dest=th->source;

sk->copied_seq = sk->acked_seq;

if(!sk->dead)

{

sk->state_change(sk);

sock_wake_async(sk->socket, 0);

}

if(sk->max_window==0)

{

sk->max_window = 32;

sk->mss = min(sk->max_window, sk->mtu);

}

}

else

{

/* See if SYN's cross. Drop if boring */

if(th->syn && !th->rst)

{

/* Crossed SYN's are fine - but talking to

yourself is right out... */

if(sk->saddr==saddr && sk->daddr==daddr &&

sk->dummy_th.source==th->source &&

sk->dummy_th.dest==th->dest)

{

tcp_statistics.TcpAttemptFails++;

return tcp_std_reset(sk,skb);

}

tcp_set_state(sk,TCP_SYN_RECV);

/*

* FIXME:

* Must send SYN|ACK here

*/

}

/* Discard junk segment */

kfree_skb(skb, FREE_READ);

release_sock(sk);

return 0;

}

/*

* SYN_RECV with data maybe.. drop through

*/

goto rfc_step6;

}

/*

* BSD has a funny hack with TIME_WAIT and fast reuse of a port. There is

* a more complex suggestion for fixing these reuse issues in RFC1644

* but not yet ready for general use. Also see RFC1379.

*/

#define BSD_TIME_WAIT

#ifdef BSD_TIME_WAIT

if (sk->state == TCP_TIME_WAIT && th->syn && sk->dead &&

after(th->seq, sk->acked_seq) && !th->rst)

{

long seq=sk->write_seq;

if(sk->debug)

printk("Doing a BSD time wait\n");

tcp_statistics.TcpEstabResets++;

sk->rmem_alloc -= skb->mem_len;

skb->sk = NULL;

sk->err=ECONNRESET;

tcp_set_state(sk, TCP_CLOSE);

sk->shutdown = SHUTDOWN_MASK;

release_sock(sk);

sk=get_sock(&tcp_prot, th->dest, saddr, th->source, daddr);

if (sk && sk->state==TCP_LISTEN)

{

sk->inuse=1;

skb->sk = sk;

sk->rmem_alloc += skb->mem_len;

tcp_conn_request(sk, skb, daddr, saddr,opt, dev,seq+128000);

release_sock(sk);

return 0;

}

kfree_skb(skb, FREE_READ);

return 0;

}

#endif

}

/*

* We are now in normal data flow (see the step list in the RFC)

* Note most of these are inline now. I'll inline the lot when

* I have time to test it hard and look at what gcc outputs

*/

if(!tcp_sequence(sk,th,len,opt,saddr,dev))

{

kfree_skb(skb, FREE_READ);

release_sock(sk);

return 0;

}

if(th->rst)

return tcp_std_reset(sk,skb);

/*

* !syn_ok is effectively the state test in RFC793.

*/

if(th->syn && !syn_ok)

{

tcp_reset(daddr,saddr,th, &tcp_prot, opt, dev, skb->ip_hdr->tos, 255);

return tcp_std_reset(sk,skb);

}

/*

* Process the ACK

*/

if(th->ack && !tcp_ack(sk,th,saddr,len))

{

/*

* Our three way handshake failed.

*/

if(sk->state==TCP_SYN_RECV)

{

tcp_reset(daddr, saddr, th,sk->prot, opt, dev,sk->ip_tos,sk->ip_ttl);

}

kfree_skb(skb, FREE_READ);

release_sock(sk);

return 0;

}

rfc_step6: /* I'll clean this up later */

/*

* Process urgent data

*/

if(tcp_urg(sk, th, saddr, len))

{

kfree_skb(skb, FREE_READ);

release_sock(sk);

return 0;

}

/*

* Process the encapsulated data

*/

if(tcp_data(skb,sk, saddr, len))

{

kfree_skb(skb, FREE_READ);

release_sock(sk);

return 0;

}

/*

* And done

*/

release_sock(sk);

return 0;

}4、应用层

当用户需要接收数据时,首先根据文件描述符inode得到socket结构和sock结构,然后从sock结构中指向的队列recieve_queue中读取数据包,将数据包COPY到用户空间缓冲区。数据就完整的从硬件中传输到用户空间。这样也完成了一次完整的从下到上的传输。

相关文章推荐

- Linux内核--网络栈实现分析(二)--数据包的传递过程(上)

- Linux内核--网络栈实现分析(七)--数据包的传递过程(下)

- Linux内核--网络栈实现分析(二)--数据包的传递过程(上)

- Linux内核--网络栈实现分析(二)--数据包的传递过程(上)

- Linux内核--网络栈实现分析(二)--数据包的传递过程--转

- Linux内核--网络栈实现分析(二)--数据包的传递过程(上)

- Linux内核--网络栈实现分析(二)--数据包的传递过程(上)

- Linux内核--网络栈实现分析(七)--数据包的传递过程(下)

- Linux内核--网络栈实现分析(七)--数据包的传递过程(下)

- Linux内核--网络栈实现分析(七)--数据包的传递过程(下)

- Linux内核--网络栈实现分析(七)--数据包的传递过程(下)

- Linux内核--网络栈实现分析(七)--数据包的传递过程(下)

- Linux内核--网络栈实现分析(六)--应用层获取数据包(上)

- Linux内核--网络栈实现分析(六)--应用层获取数据包(上)

- Linux内核--网络栈实现分析(六)--应用层获取数据包(上)

- Linux内核--网络栈实现分析(十一)--驱动程序层(下)

- Linux内核--网络栈实现分析(一)--网络栈初始化--转

- 消息传递机制的具体实现过程(分析源码之后的总结)

- Linux内核--网络栈实现分析(八)--应用层发送数据(下)

- Linux内核源码分析--内核启动命令行的传递过程(Linux-3.0 ARMv7)