Big Data in Practice: How to Deploy an HBase Cluster on 14 Servers


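I. Environment Overview

Platform: physical machines

Operating system: CentOS 6.5

Software versions: hadoop-2.5.2, hbase-1.1.2-bin, jdk-7u79-linux-x64, protobuf-2.5.0, snappy-1.1.1, zookeeper-3.4.6, hadoop-snappy-0.0.1-SNAPSHOT

Deployment user: hadoop

Package (tarball) location: /opt/soft

Installation directory: /opt/server

Data directory: /opt/data

Log directory: /opt/var/logs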

| Hostname | IP address | Processes |
|---|---|---|
| INVOICE-GL-01 | 10.162.16.6 | QuorumPeerMain, HMaster |
| INVOICE-GL-02 | 10.162.16.7 | QuorumPeerMain, HMaster |
| INVOICE-GL-03 | 10.162.16.8 | QuorumPeerMain, HMaster |
| INVOICE-23 | 10.162.16.227 | NameNode, DFSZKFailoverController |
| INVOICE-24 | 10.162.16.228 | NameNode, DFSZKFailoverController |
| INVOICE-25 | 10.162.16.229 | JournalNode, DataNode, HRegionServer |
| INVOICE-26 | 10.162.16.230 | JournalNode, DataNode, HRegionServer |
| INVOICE-27 | 10.162.16.231 | JournalNode, DataNode, HRegionServer |
| INVOICE-DN-01 | 10.162.16.232 | DataNode, HRegionServer |
| INVOICE-DN-02 | 10.162.16.233 | DataNode, HRegionServer |
| INVOICE-DN-03 | 10.162.16.234 | DataNode, HRegionServer |
| INVOICE-DN-04 | 10.162.16.235 | DataNode, HRegionServer |
| INVOICE-DN-05 | 10.162.16.236 | DataNode, HRegionServer |
| INVOICE-DN-06 | 10.162.16.237 | DataNode, HRegionServer |
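The Processes column is what the slaves, regionservers and backup-masters files further down are built from. The sketch below derives those host lists from the table instead of typing them by hand. It assumes a hypothetical helper, hosts_with_role, which holds a copy of the table as plain text.

```shell
# Hypothetical copy of the table above: "hostname ip role,role,..." per line.
CLUSTER_TABLE='INVOICE-GL-01 10.162.16.6 QuorumPeerMain,HMaster
INVOICE-GL-02 10.162.16.7 QuorumPeerMain,HMaster
INVOICE-GL-03 10.162.16.8 QuorumPeerMain,HMaster
INVOICE-23 10.162.16.227 NameNode,DFSZKFailoverController
INVOICE-24 10.162.16.228 NameNode,DFSZKFailoverController
INVOICE-25 10.162.16.229 JournalNode,DataNode,HRegionServer
INVOICE-26 10.162.16.230 JournalNode,DataNode,HRegionServer
INVOICE-27 10.162.16.231 JournalNode,DataNode,HRegionServer
INVOICE-DN-01 10.162.16.232 DataNode,HRegionServer
INVOICE-DN-02 10.162.16.233 DataNode,HRegionServer
INVOICE-DN-03 10.162.16.234 DataNode,HRegionServer
INVOICE-DN-04 10.162.16.235 DataNode,HRegionServer
INVOICE-DN-05 10.162.16.236 DataNode,HRegionServer
INVOICE-DN-06 10.162.16.237 DataNode,HRegionServer'

# Print, one per line, every hostname whose role list contains exactly ROLE.
hosts_with_role() {
  printf '%s\n' "$CLUSTER_TABLE" |
    awk -v r="$1" '{ n = split($3, roles, ","); for (i = 1; i <= n; i++) if (roles[i] == r) { print $1; break } }'
}
```

For example, `hosts_with_role DataNode` yields the nine entries of the Hadoop slaves file, and `hosts_with_role HRegionServer` yields the HBase regionservers file.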

II. Installation Steps

1. Disable the firewall and SELinux

##sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config

##setenforce 0

##chkconfig iptables off

##/etc/init.d/iptables stop
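These commands affect two different scopes. The sed edit changes the boot-time setting, and setenforce 0 changes only the running system. The hypothetical helper below reads back the configured mode so each machine can be checked. It assumes the standard CentOS 6 file layout, where the active line starts with `SELINUX=`.

```shell
# Hypothetical helper: print the SELINUX= mode stored in an SELinux config file
# (default /etc/selinux/config). Comment lines and SELINUXTYPE= are ignored.
selinux_config_mode() {
  awk -F= '/^SELINUX=/ { print $2 }' "${1:-/etc/selinux/config}"
}

# After the commands above:
#   selinux_config_mode        -> disabled (takes effect at the next reboot)
#   getenforce                 -> Permissive (setenforce 0 cannot fully disable SELinux at runtime)
#   chkconfig --list iptables  -> every runlevel should show "off"
```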

2. Synchronize all clocks with an NTP server

##vim /etc/ntp.conf

server NTP_SERVER_ADDRESS

driftfile /var/lib/ntp/drift

logfile /var/log/ntp

##/etc/init.d/ntpd start
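Clock sync matters for HBase in particular. The master rejects a RegionServer whose clock differs from its own by more than hbase.master.maxclockskew, which is set to 180000 ms in hbase-site.xml later in this guide. `ntpq -p` marks the peer that ntpd is currently synchronized to with a leading `*`. The helper name below is hypothetical.

```shell
# Hypothetical helper: succeed if the `ntpq -p` listing on stdin shows a
# selected system peer (a line starting with '*').
ntp_has_sync_peer() {
  grep -q '^\*'
}

# On each node, after ntpd has been running for a few minutes:
#   ntpq -p | ntp_has_sync_peer && echo "synced" || echo "not synced yet"
```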

3. On all machines, set the hostname and add every cluster host to /etc/hosts
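This step gives no commands, so here is a minimal sketch. It assumes a hypothetical pair of helpers that turn the host table into /etc/hosts entries and append only the entries that are missing, so rerunning it is harmless. On CentOS 6 the persistent hostname lives in HOSTNAME= in /etc/sysconfig/network.

```shell
# Hypothetical helper: the 14 host entries from the table, in /etc/hosts format.
cluster_hosts_entries() {
  cat <<'EOF'
10.162.16.6 INVOICE-GL-01
10.162.16.7 INVOICE-GL-02
10.162.16.8 INVOICE-GL-03
10.162.16.227 INVOICE-23
10.162.16.228 INVOICE-24
10.162.16.229 INVOICE-25
10.162.16.230 INVOICE-26
10.162.16.231 INVOICE-27
10.162.16.232 INVOICE-DN-01
10.162.16.233 INVOICE-DN-02
10.162.16.234 INVOICE-DN-03
10.162.16.235 INVOICE-DN-04
10.162.16.236 INVOICE-DN-05
10.162.16.237 INVOICE-DN-06
EOF
}

# Append every entry whose hostname is not yet listed in FILE
# (default /etc/hosts, which needs root). Running it twice changes nothing.
add_missing_hosts() {
  local file=${1:-/etc/hosts}
  cluster_hosts_entries | while read -r ip name; do
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) f = 1 } END { exit !f }' "$file"; then
      echo "$ip $name" >> "$file"
    fi
  done
}

# Then set the machine's own name, e.g. on INVOICE-23 (as root):
#   hostname INVOICE-23   and   HOSTNAME=INVOICE-23 in /etc/sysconfig/network
```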

4. On all machines, create the hadoop user and the required directories

##useradd hadoop

##echo "hadoop" | passwd --stdin hadoop

##mkdir -p /opt/server

##mkdir -p /opt/soft

##mkdir -p /opt/data

##mkdir -p /opt/var/logs

##chown -R hadoop:hadoop /opt/server

##chown -R hadoop:hadoop /opt/soft

##chown -R hadoop:hadoop /opt/data

##chown -R hadoop:hadoop /opt/var/logs

5. Passwordless SSH (run on the four management nodes INVOICE-GL-01, INVOICE-23, INVOICE-24 and INVOICE-25):

##su - hadoop

##ssh-keygen

##ssh-copy-id INVOICE-GL-01

##ssh-copy-id INVOICE-GL-02

##ssh-copy-id INVOICE-GL-03

##ssh-copy-id INVOICE-23

##ssh-copy-id INVOICE-24

.....

## ssh-copy-id INVOICE-DN-05

## ssh-copy-id INVOICE-DN-06

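Make sure that, as the hadoop user, each of these four machines can log in to every machine without a password.

For example, ssh INVOICE-23 should log in directly without asking for a password.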
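Typing fourteen ssh-copy-id commands on each of the four management nodes is error-prone, so a loop over the host table is one option. The sketch below assumes hypothetical helpers copy_keys and verify_keys, plus a DRY_RUN switch that only prints the commands. The verification uses `-o BatchMode=yes`, which makes ssh fail instead of prompting when key authentication is not working.

```shell
# All 14 hosts from the table above.
ALL_HOSTS="INVOICE-GL-01 INVOICE-GL-02 INVOICE-GL-03 INVOICE-23 INVOICE-24 INVOICE-25 INVOICE-26 INVOICE-27 INVOICE-DN-01 INVOICE-DN-02 INVOICE-DN-03 INVOICE-DN-04 INVOICE-DN-05 INVOICE-DN-06"

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Push the hadoop user's public key to every host (asks for each password once).
copy_keys() {
  for h in $ALL_HOSTS; do
    run ssh-copy-id "$h"
  done
}

# Confirm passwordless login works everywhere; failures are reported on stderr.
verify_keys() {
  for h in $ALL_HOSTS; do
    run ssh -o BatchMode=yes "$h" true || echo "passwordless login FAILED: $h" >&2
  done
}
```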

#####################################################################

##################All of the following steps are run as the hadoop user##########################

#####################################################################

6. Environment variables (all machines):

## vim /home/hadoop/.bash_profile

################set java home##################

export JAVA_HOME=/opt/server/jdk1.7.0_79

export PATH=$JAVA_HOME/bin:$PATH

###############set zk home##################

export ZOOKEEPER_HOME=/opt/server/zookeeper-3.4.6

export PATH=$ZOOKEEPER_HOME/bin:$PATH

############set hadoop home###################

export HADOOP_HOME=/opt/server/hadoop-2.5.2

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

###############set hbase home################

export HBASE_HOME=/opt/server/hbase-1.1.2

export PATH=$HBASE_HOME/bin:$PATH

##source /home/hadoop/.bash_profile

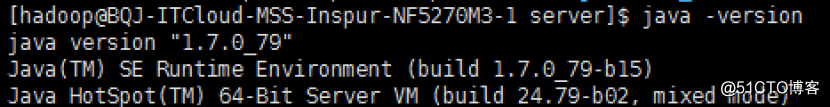

7. Deploy the JDK:

##cd /opt/soft

##tar -zxvf jdk-7u79-linux-x64.tar.gz

##Copy to the other machines (this machine is INVOICE-GL-01; its own copy is moved into /opt/server with mv at the end)

##scp -r jdk1.7.0_79 INVOICE-GL-02:/opt/server/

##scp -r jdk1.7.0_79 INVOICE-GL-03:/opt/server/

##scp -r jdk1.7.0_79 INVOICE-23:/opt/server/

...

## scp -r jdk1.7.0_79 INVOICE-DN-05:/opt/server/

## scp -r jdk1.7.0_79 INVOICE-DN-06:/opt/server/

##mv jdk1.7.0_79 /opt/server

##java -version
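The same "copy to every other host" pattern recurs for the JDK, Hadoop and HBase. The local machine should be skipped, because its own copy is moved into /opt/server with mv. The sketch below uses a hypothetical distribute helper. It assumes LOCAL_HOST defaults to the output of hostname and that DRY_RUN=1 only prints the scp commands.

```shell
ALL_HOSTS="INVOICE-GL-01 INVOICE-GL-02 INVOICE-GL-03 INVOICE-23 INVOICE-24 INVOICE-25 INVOICE-26 INVOICE-27 INVOICE-DN-01 INVOICE-DN-02 INVOICE-DN-03 INVOICE-DN-04 INVOICE-DN-05 INVOICE-DN-06"

# Copy DIR to /opt/server/ on every host in ALL_HOSTS except the local one.
distribute() {
  local dir=$1 self=${LOCAL_HOST:-$(hostname)} h
  for h in $ALL_HOSTS; do
    [ "$h" = "$self" ] && continue
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $dir $h:/opt/server/"
    else
      scp -r "$dir" "$h:/opt/server/" || echo "copy to $h FAILED" >&2
    fi
  done
}

# Usage on INVOICE-GL-01, in /opt/soft:
#   distribute jdk1.7.0_79 && mv jdk1.7.0_79 /opt/server
```

For ZooKeeper, only the three GL hosts need the files. Override the list for that call, e.g. `ALL_HOSTS="INVOICE-GL-02 INVOICE-GL-03" distribute zookeeper-3.4.6`.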

8. Deploy ZooKeeper:

##cd /opt/soft

##tar -zxvf zookeeper-3.4.6.tar.gz

##cp zookeeper-3.4.6/conf/zoo_sample.cfg zookeeper-3.4.6/conf/zoo.cfg

##vim zookeeper-3.4.6/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/opt/data/zookeeper

dataLogDir=/opt/var/logs/zookeeper

clientPort=2181

maxClientCnxns=10000

autopurge.snapRetainCount=3

autopurge.purgeInterval=2

server.1=INVOICE-GL-01:2888:3888

server.2=INVOICE-GL-02:2888:3888

server.3=INVOICE-GL-03:2888:3888

##vim zookeeper-3.4.6/conf/java.env

#!/bin/bash

export JAVA_HOME=/opt/server/jdk1.7.0_79

export JVMFLAGS="-Xms2048m -Xmx10240m $JVMFLAGS"

##vim zookeeper-3.4.6/bin/zkEnv.sh

(change the following parameter, which sets where zookeeper.out is written)

ZOO_LOG_DIR="/opt/var/logs/zookeeper"

##Copy to the other machines (this machine is INVOICE-GL-01)

##scp -r zookeeper-3.4.6 INVOICE-GL-02:/opt/server/

##scp -r zookeeper-3.4.6 INVOICE-GL-03:/opt/server/

## mv zookeeper-3.4.6 /opt/server/

##On INVOICE-GL-01: create the directories, set myid to 1 and start ZooKeeper

##mkdir -p /opt/data/zookeeper

##mkdir -p /opt/var/logs/zookeeper

##echo "1" > /opt/data/zookeeper/myid

##zkServer.sh start

##On INVOICE-GL-02: create the directories, set myid to 2 and start ZooKeeper

##mkdir -p /opt/data/zookeeper

##mkdir -p /opt/var/logs/zookeeper

##echo "2" > /opt/data/zookeeper/myid

##zkServer.sh start

##On INVOICE-GL-03: create the directories, set myid to 3 and start ZooKeeper

##mkdir -p /opt/data/zookeeper

##mkdir -p /opt/var/logs/zookeeper

##echo "3" > /opt/data/zookeeper/myid

##zkServer.sh start
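Each node's myid must equal the N of its own server.N line in zoo.cfg. If they disagree, that node cannot join the ensemble. Instead of hard-coding 1, 2 and 3, the value can be read from zoo.cfg itself. The sketch below uses a hypothetical zk_myid helper and tolerates the stray spaces after "=" that are easy to leave in that file.

```shell
# Hypothetical helper: print N from the "server.N=HOST:2888:3888" line in CFG
# whose hostname equals HOST (prints nothing if HOST is not in the ensemble).
zk_myid() {
  awk -F'=' -v h="$2" '
    /^server\.[0-9]+[ \t]*=/ {
      split($1, k, ".")            # k[2] is N
      v = $2; gsub(/[ \t]/, "", v)
      split(v, parts, ":")         # parts[1] is the hostname
      if (parts[1] == h) { gsub(/[ \t]/, "", k[2]); print k[2] }
    }' "$1"
}

# On each ZooKeeper node, as hadoop:
#   mkdir -p /opt/data/zookeeper /opt/var/logs/zookeeper
#   zk_myid /opt/server/zookeeper-3.4.6/conf/zoo.cfg "$(hostname)" > /opt/data/zookeeper/myid
```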

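9. Deploy Hadoop:

###On all machines, install Snappy compression support for Hadoop (yum, make install and editing /etc/ld.so.conf must be run as root)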

##yum -y install gcc gcc-c++ automake autoconf libtool

##cd /opt/soft/

##tar -zxvf snappy-1.1.1.tar.gz

## cd snappy-1.1.1

##./configure && make && make install

##cd /opt/soft/

##tar -xvf protobuf-2.5.0.tar

##cd protobuf-2.5.0

##./configure && make && make install

##echo "/usr/local/lib" >>/etc/ld.so.conf

##ldconfig
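Both builds install into /usr/local/lib, which is the default autotools prefix. That is why the directory is added to /etc/ld.so.conf. A quick sanity check is to look for the libraries in the `ldconfig -p` cache listing. The helper name below is hypothetical.

```shell
# Hypothetical helper: succeed if the `ldconfig -p` listing on stdin contains
# a library whose name starts with NAME (e.g. libsnappy.so, libprotobuf.so).
has_shared_lib() {
  awk -v n="$1" 'index($1, n) == 1 { found = 1 } END { exit !found }'
}

# Usage on each machine after ldconfig:
#   ldconfig -p | has_shared_lib libsnappy.so   || echo "snappy not visible"
#   ldconfig -p | has_shared_lib libprotobuf.so || echo "protobuf not visible"
#   protoc --version    # should report libprotoc 2.5.0
```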

###Install Hadoop on INVOICE-25

##cd /opt/soft

##tar -zxvf hadoop-2.5.2.tar.gz

##vim hadoop-2.5.2/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/opt/server/jdk1.7.0_79

export HADOOP_HOME=/opt/server/hadoop-2.5.2

export HADOOP_LOG_DIR=/opt/var/logs/hadoop

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native/Linux-amd64-64/:/usr/local/lib/

##vim hadoop-2.5.2/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/data/hadoop</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>120</value>

</property>

<property>

<name>io.compression.codecs</name>

<value>org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.SnappyCodec</value></property>

</configuration>

##vim hadoop-2.5.2/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>INVOICE-23:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>INVOICE-24:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>INVOICE-23:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>INVOICE-24:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://INVOICE-25:8485;INVOICE-26:8485;INVOICE-27:8485/mycluster</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/opt/data/journal/local/data</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>INVOICE-GL-01:2181,INVOICE-GL-02:2181,INVOICE-GL-03:2181</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/data/hadoop1,/opt/data/hadoop2</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>40</value>

</property>

</configuration>

##vim hadoop-2.5.2/etc/hadoop/slaves

INVOICE-25

INVOICE-26

INVOICE-27

INVOICE-DN-01

INVOICE-DN-02

INVOICE-DN-03

INVOICE-DN-04

INVOICE-DN-05

INVOICE-DN-06

###Copy the hadoop-snappy jar and native libraries into the Hadoop directory

##tar -zxvf hadoop-snappy-0.0.1-SNAPSHOT.tar.gz

## cp -r hadoop-snappy-0.0.1-SNAPSHOT/lib/hadoop-snappy-0.0.1-SNAPSHOT.jar hadoop-2.5.2/lib/

## cp -r hadoop-snappy-0.0.1-SNAPSHOT/lib/native/Linux-amd64-64 hadoop-2.5.2/lib/native/

##Copy to the other machines (this machine is INVOICE-25)

##scp -r hadoop-2.5.2 INVOICE-GL-01:/opt/server/

##scp -r hadoop-2.5.2 INVOICE-GL-02:/opt/server/

##scp -r hadoop-2.5.2 INVOICE-GL-03:/opt/server/

##scp -r hadoop-2.5.2 INVOICE-23:/opt/server/

...

##scp -r hadoop-2.5.2 INVOICE-DN-05:/opt/server/

##scp -r hadoop-2.5.2 INVOICE-DN-06:/opt/server/

## mv hadoop-2.5.2 /opt/server/

###On all machines, create the directories

##mkdir -p /opt/data/hadoop

##mkdir -p /opt/var/logs/hadoop

##mkdir -p /opt/data/journal/local/data

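###Initialize the Hadoop cluster (one time only)

###Start the JournalNodes on INVOICE-25, INVOICE-26 and INVOICE-27

##hadoop-daemon.sh start journalnode

###On INVOICE-23, format the NameNode and start it

##hdfs namenode -format

##hadoop-daemon.sh start namenode

###On INVOICE-24, initialize the standby NameNode by copying the metadata from INVOICE-23 (instead of formatting it)

##hdfs namenode -bootstrapStandby

###On INVOICE-23, initialize the failover controller (ZKFC) state in ZooKeeper

##hdfs zkfc -formatZK

###On INVOICE-25, stop and then restart the whole cluster

##stop-dfs.sh

##start-dfs.sh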
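The order of these one-time steps matters. The JournalNodes must be up before the first format, and nn2 can only bootstrap from a running nn1. The sketch below encodes the sequence and stops at the first failure. It assumes hypothetical helpers run_on and bootstrap_hdfs_ha, and a DRY_RUN=1 mode that only prints the steps. Formatting again later would wipe the namespace, so this is for a brand-new cluster only.

```shell
# Run a command on HOST over ssh, or print "HOST: command" when DRY_RUN=1.
run_on() {
  local host=$1; shift
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$host: $*"
  else
    # Non-interactive ssh does not read .bash_profile, so load PATH explicitly.
    ssh "$host" ". ~/.bash_profile; $*"
  fi
}

bootstrap_hdfs_ha() {
  # 1. JournalNodes must be running before any NameNode is formatted.
  for jn in INVOICE-25 INVOICE-26 INVOICE-27; do
    run_on "$jn" hadoop-daemon.sh start journalnode || return 1
  done
  # 2. Format nn1 and start it, so that nn2 has something to copy from.
  run_on INVOICE-23 hdfs namenode -format || return 1
  run_on INVOICE-23 hadoop-daemon.sh start namenode || return 1
  # 3. nn2 copies the freshly formatted namespace from nn1.
  run_on INVOICE-24 hdfs namenode -bootstrapStandby || return 1
  # 4. Create the ZooKeeper znode used by the failover controllers.
  run_on INVOICE-23 hdfs zkfc -formatZK || return 1
  # 5. Restart all HDFS daemons (NameNodes, DataNodes, JournalNodes, ZKFCs).
  run_on INVOICE-25 stop-dfs.sh || return 1
  run_on INVOICE-25 start-dfs.sh
}
```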

Adding the YARN service

# Configure on the master (INVOICE-23)

# su - hadoop

# cd /opt/server/hadoop-2.5.2/etc/hadoop

# cat ./yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>nn1,nn2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.nn1</name>

<value>INVOICE-23</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.nn2</name>

<value>INVOICE-24</value>

</property>

<property>

<name>yarn.resourcemanager.ha.id</name>

<value>nn1</value> <!-- on master2 (INVOICE-24) change this to nn2 -->

</property>

<property>

<name>yarn.resourcemanager.address.nn1</name>

<value>${yarn.resourcemanager.hostname.nn1}:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.nn1</name>

<value>${yarn.resourcemanager.hostname.nn1}:8030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.nn1</name>

<value>${yarn.resourcemanager.hostname.nn1}:8089</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.nn1</name>

<value>${yarn.resourcemanager.hostname.nn1}:8088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.nn1</name>

<value>${yarn.resourcemanager.hostname.nn1}:8025</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.nn1</name>

<value>${yarn.resourcemanager.hostname.nn1}:8041</value>

</property>

<property>

<name>yarn.resourcemanager.address.nn2</name>

<value>${yarn.resourcemanager.hostname.nn2}:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.nn2</name>

<value>${yarn.resourcemanager.hostname.nn2}:8030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.nn2</name>

<value>${yarn.resourcemanager.hostname.nn2}:8089</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.nn2</name>

<value>${yarn.resourcemanager.hostname.nn2}:8088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.nn2</name>

<value>${yarn.resourcemanager.hostname.nn2}:8025</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.nn2</name>

<value>${yarn.resourcemanager.hostname.nn2}:8041</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/opt/data/hadoop/yarn</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/opt/var/logs/hadoop</value>

</property>

<property>

<name>yarn.client.failover-proxy-provider</name>

<value>org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider</value>

</property>

<property>

<name>yarn.resourcemanager.zk-state-store.address</name>

<value>INVOICE-GL-01:2181,INVOICE-GL-02:2181,INVOICE-GL-03:2181</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>INVOICE-GL-01:2181,INVOICE-GL-02:2181,INVOICE-GL-03:2181</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>cluster</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

</configuration>
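yarn.resourcemanager.ha.id is the only value that differs between the two ResourceManager hosts. After copying yarn-site.xml to INVOICE-24, that one value can be patched in place. The sketch below uses a hypothetical set_rm_ha_id helper that uses GNU sed. It restricts the substitution to the property block that begins at that `<name>` line, so nn1,nn2 in rm-ids is left alone.

```shell
# Hypothetical helper: set the <value> of yarn.resourcemanager.ha.id in FILE to ID.
set_rm_ha_id() {
  sed -i "/<name>yarn\.resourcemanager\.ha\.id<\/name>/,/<\/property>/ s|<value>[^<]*</value>|<value>$2</value>|" "$1"
}

# On INVOICE-24, as hadoop:
#   set_rm_ha_id /opt/server/hadoop-2.5.2/etc/hadoop/yarn-site.xml nn2
```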

# cat mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

# start-yarn.sh
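In Hadoop 2.x, start-yarn.sh starts a ResourceManager only on the machine it runs on (here assumed to be INVOICE-23), plus the NodeManagers. The second ResourceManager has to be started on INVOICE-24 by hand. Afterwards, exactly one of the two should report active. The sketch below uses `yarn rmadmin -getServiceState` with the rm-ids nn1/nn2 from yarn-site.xml. The helper names are hypothetical.

```shell
# Start the standby ResourceManager on INVOICE-24:
#   ssh INVOICE-24 '. ~/.bash_profile; yarn-daemon.sh start resourcemanager'

# Succeed only if exactly one of the given HA states is "active".
exactly_one_active() {
  local n=0 s
  for s in "$@"; do
    if [ "$s" = "active" ]; then n=$((n + 1)); fi
  done
  [ "$n" -eq 1 ]
}

# Query both ResourceManagers by their rm-ids.
check_rm_ha() {
  exactly_one_active "$(yarn rmadmin -getServiceState nn1)" "$(yarn rmadmin -getServiceState nn2)"
}
```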

10. Deploy HBase:

###Install HBase on INVOICE-25

##cd /opt/soft

##tar -zxvf hbase-1.1.2-bin.tar.gz

##vim hbase-1.1.2/conf/hbase-env.sh

export JAVA_HOME=/opt/server/jdk1.7.0_79

export HADOOP_HOME=/opt/server/hadoop-2.5.2

export HBASE_HOME=/opt/server/hbase-1.1.2

export HBASE_MANAGES_ZK=false

export HBASE_LOG_DIR=/opt/var/logs/hbase

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native/Linux-amd64-64/:/usr/local/lib/

export HBASE_LIBRARY_PATH=$HBASE_LIBRARY_PATH:$HBASE_HOME/lib/native/Linux-amd64-64/:/usr/local/lib/

export CLASSPATH=$CLASSPATH:$HBASE_LIBRARY_PATH

## vim hbase-1.1.2/conf/hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://mycluster/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>INVOICE-GL-01,INVOICE-GL-02,INVOICE-GL-03</value>

</property>

<property>

<name>hbase.regionserver.codecs</name>

<value>snappy</value>

</property>

<property>

<name>hbase.regionserver.handler.count</name>

<value>500</value>

</property>

<property>

<name>dfs.support.append</name>

<value>true</value>

</property>

<property>

<name>zookeeper.session.timeout</name>

<value>60000</value>

</property>

<property>

<name>hbase.master.distributed.log.splitting</name>

<value>false</value>

</property>

<property>

<name>hbase.rpc.timeout</name>

<value>600000</value>

</property>

<property>

<name>hbase.client.scanner.timeout.period</name>

<value>60000</value>

</property>

<property>

<name>hbase.snapshot.master.timeoutMillis</name>

<value>600000</value>

</property>

<property>

<name>hbase.snapshot.region.timeout</name>

<value>600000</value>

</property>

<property>

<name>hbase.hregion.max.filesize</name>

<value>107374182400</value>

</property>

<property>

<name>hbase.master.maxclockskew</name>

<value>180000</value>

</property>


<property>

<name>hbase.zookeeper.property.maxClientCnxns</name>

<value>10000</value>

</property>

<property>

<name>hbase.regionserver.region.split.policy</name>

<value>org.apache.hadoop.hbase.regionserver.ConstantSizeRegionSplitPolicy</value>

</property>

</configuration>
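Several values in hbase-site.xml are easier to judge once converted. hbase.hregion.max.filesize is in bytes, and with ConstantSizeRegionSplitPolicy a region splits only once one of its stores exceeds that size. The timeouts are in milliseconds. The ZooKeeper 3.4 server also caps every session at maxSessionTimeout, which defaults to 20 × tickTime. With tickTime=2000 in the zoo.cfg above, the 60000 ms requested through zookeeper.session.timeout would be negotiated down to 40000 ms unless maxSessionTimeout is raised. A small arithmetic sketch:

```shell
# hbase.hregion.max.filesize is given in bytes: 100 GiB = 100 * 1024^3.
max_filesize=$((100 * 1024 * 1024 * 1024))
echo "hbase.hregion.max.filesize: $max_filesize bytes = $((max_filesize / 1024 / 1024 / 1024)) GiB"

# The timeouts in hbase-site.xml are in milliseconds.
for pair in hbase.rpc.timeout=600000 zookeeper.session.timeout=60000 hbase.master.maxclockskew=180000; do
  name=${pair%%=*}
  ms=${pair#*=}
  echo "$name: $ms ms = $((ms / 1000)) s"
done

# ZooKeeper 3.4 default maxSessionTimeout = 20 * tickTime (tickTime=2000 in zoo.cfg).
zk_max_session=$((20 * 2000))
echo "default ZooKeeper maxSessionTimeout: $zk_max_session ms"
```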

##vim hbase-1.1.2/conf/regionservers

INVOICE-25

INVOICE-26

INVOICE-27

INVOICE-DN-01

INVOICE-DN-02

INVOICE-DN-03

INVOICE-DN-04

INVOICE-DN-05

INVOICE-DN-06

##vim hbase-1.1.2/conf/backup-masters

INVOICE-GL-02

INVOICE-GL-03

##ln -s /opt/server/hadoop-2.5.2/etc/hadoop/hdfs-site.xml hbase-1.1.2/conf/

##Copy to the other machines (this machine is INVOICE-25)

##scp -r hbase-1.1.2 INVOICE-GL-01:/opt/server/

##scp -r hbase-1.1.2 INVOICE-GL-02:/opt/server/

##scp -r hbase-1.1.2 INVOICE-GL-03:/opt/server/

##scp -r hbase-1.1.2 INVOICE-23:/opt/server/

...

##scp -r hbase-1.1.2 INVOICE-DN-05:/opt/server/

##scp -r hbase-1.1.2 INVOICE-DN-06:/opt/server/

##mv hbase-1.1.2 /opt/server/

###On all machines, create the HBase log directory

##mkdir -p /opt/var/logs/hbase

###Start HBase (this machine is INVOICE-GL-01)

##start-hbase.sh

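11. Basic maintenance commands:

(INVOICE-GL-01, INVOICE-GL-02 and INVOICE-GL-03 are used to start and stop services; the other machines are only used to check status.)

ZooKeeper start, status and stop:

On INVOICE-GL-01, INVOICE-GL-02 and INVOICE-GL-03: zkServer.sh start, zkServer.sh status, zkServer.sh stop

Checking ZooKeeper health:

The ensemble is healthy when one of the three nodes reports leader and the other two report follower.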
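Instead of logging in to all three nodes, each server's role can be queried remotely with ZooKeeper's `stat` four-letter command, whose output contains a `Mode:` line. The sketch below assumes nc is installed. The helper names are hypothetical.

```shell
ZK_HOSTS="INVOICE-GL-01 INVOICE-GL-02 INVOICE-GL-03"

# Print the role of one server (leader, follower or standalone); empty if unreachable.
zk_mode() {
  echo stat | nc "$1" 2181 | awk '/^Mode:/ { print $2 }'
}

# Read one mode per line on stdin; healthy means exactly 1 leader and 2 followers.
zk_quorum_healthy() {
  awk '$1 == "leader" { l++ } $1 == "follower" { f++ } END { exit !(l == 1 && f == 2) }'
}

# Usage:
#   for h in $ZK_HOSTS; do zk_mode "$h"; done | zk_quorum_healthy && echo "ZooKeeper OK"
```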

Hadoop start and stop:

On INVOICE-25:

Start: start-dfs.sh

Stop: stop-dfs.sh

Status: hdfs fsck /

hdfs dfsadmin -report

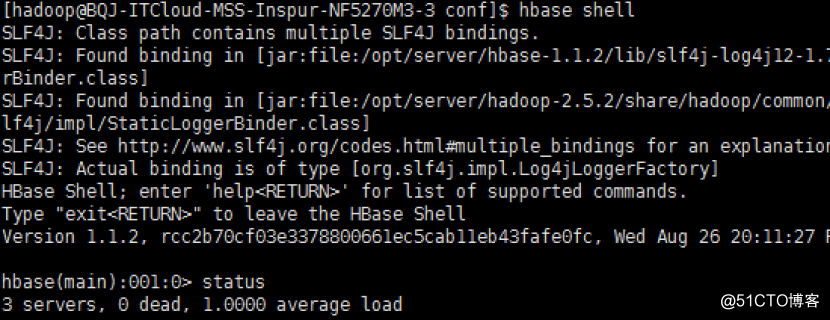
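For scripted checks, two signals are convenient. hdfs fsck ends with a verdict line ("The filesystem under path '/' is HEALTHY", or CORRUPT). `hdfs haadmin -getServiceState` reports active or standby for each NameNode ID from dfs.ha.namenodes.mycluster. The helper names below are hypothetical.

```shell
# Succeed if the fsck output on stdin contains the HEALTHY verdict.
fsck_is_healthy() {
  grep -q "is HEALTHY"
}

# Print the HA state of both NameNodes in mycluster; expect one active, one standby.
namenode_states() {
  local id
  for id in nn1 nn2; do
    echo "$id $(hdfs haadmin -getServiceState "$id")"
  done
}

# Usage on INVOICE-25:
#   hdfs fsck / | fsck_is_healthy && echo "HDFS healthy"
#   namenode_states
```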

HBase start and stop:

On INVOICE-GL-01:

Start: start-hbase.sh

Stop: stop-hbase.sh

Status: hbase shell
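The shell's `status` command can also be run non-interactively by piping it into hbase shell, which is handy for monitoring. The sketch below extracts the number of dead RegionServers. It assumes the status summary contains the text "N servers, M dead", and that format should be checked against the output of your HBase version. The helper name is hypothetical.

```shell
# Print M from a "... N servers, M dead ..." summary line read on stdin.
hbase_dead_servers() {
  sed -n 's/.*[0-9][0-9]* servers, \([0-9][0-9]*\) dead.*/\1/p'
}

# Usage on INVOICE-GL-01:
#   echo "status" | hbase shell 2>/dev/null | hbase_dead_servers
```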
