Study Notes on Basic Image Processing Libraries (2): Training and Predicting on the MNIST Dataset with SVM, MATLAB, and TensorFlow

2020-04-01 12:14


SVM

A support vector machine (SVM) is a generalized linear classifier that performs binary classification of data in a supervised-learning setting. Its decision boundary is the maximum-margin hyperplane solved from the training samples.
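To make "maximum-margin hyperplane" concrete, here is a minimal sketch on a hypothetical, linearly separable toy dataset. It uses scikit-learn's `svm.SVC` with a linear kernel and a very large `C`, which approximates a hard-margin SVM. The fitted normal vector `w` gives the margin width `2 / ||w||`.

For the 10-class MNIST problem later in this post, note that scikit-learn's `SVC` builds on this binary case by training one-vs-one classifiers internally.

```python
import numpy as np
from sklearn import svm

# Hypothetical toy data: two linearly separable classes in 2-D
X = np.array([[0.0, 0.0], [1.0, 1.0], [0.0, 1.0],
              [3.0, 3.0], [4.0, 3.0], [3.0, 4.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# A very large C leaves almost no room for margin violations (close to hard margin)
clf = svm.SVC(kernel="linear", C=1e6)
clf.fit(X, y)

w = clf.coef_[0]                   # normal vector of the separating hyperplane
b = clf.intercept_[0]              # offset: the hyperplane is w . x + b = 0
margin = 2.0 / np.linalg.norm(w)   # width of the maximum margin

print("w =", w, "b =", b)
print("margin width =", margin)
print("support vectors:\n", clf.support_vectors_)
print(clf.predict([[0.5, 0.5], [3.5, 3.5]]))
```

Here the closest points of the two classes are (1, 1) and (3, 3), so the margin should come out close to their distance, 2√2 ≈ 2.83.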

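**Workflow**

1. Collect data: use the provided data files.
2. Prepare data: construct data vectors from the digit images.
3. Analyze data: visually inspect the image vectors.
4. Train the algorithm: use three different methods with different parameters, namely a linear classifier, a second-degree polynomial kernel, and a radial basis function (RBF) kernel.
5. Test and compute the error rate.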
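Steps 4 and 5 can be sketched as follows. This is a hedged illustration, not the author's experiment. It uses scikit-learn's bundled 8x8 `load_digits` set as a small, fast stand-in for MNIST (an assumption, chosen so the example runs in seconds).

The sketch trains one `SVC` per kernel named above and reports each error rate as `1 - accuracy` on a held-out split. The split size and `random_state` are arbitrary choices.

```python
from sklearn import svm
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Small bundled digit dataset, used here only as a stand-in for MNIST
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

# Fit the scaler on the training split only, then apply it to both splits
scaler = StandardScaler().fit(X_train)
X_train_s = scaler.transform(X_train)
X_test_s = scaler.transform(X_test)

# Step 4: the three kernels from the workflow
models = {
    "linear": svm.SVC(kernel="linear"),
    "poly (degree 2)": svm.SVC(kernel="poly", degree=2),
    "rbf": svm.SVC(kernel="rbf"),
}

# Step 5: error rate = 1 - accuracy on the held-out split
error_rates = {}
for name, model in models.items():
    model.fit(X_train_s, y_train)
    error_rates[name] = 1.0 - model.score(X_test_s, y_test)
    print(f"{name}: error rate = {error_rates[name]:.2%}")
```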

The following code uses the algorithms in the `sklearn.svm` module directly to train on the MNIST dataset and make predictions:

import numpy as np

import struct

import matplotlib.pyplot as plt

import osimport pickle

##加载svm模型

from sklearn import svm

###用于做数据预处理

from sklearn import preprocessing

import time

##读取数据集

path='E:\\数据挖掘\\MNIST_data'

def load_mnist_train(path, kind='train'):

labels_path = os.path.join(path,'%s-labels.idx1-ubyte'% kind)

images_path = os.path.join(path,'%s-images.idx3-ubyte'% kind)

with open(labels_path, 'rb') as lbpath:

magic, n = struct.unpack('>II',lbpath.read(8))

labels = np.fromfile(lbpath,dtype=np.uint8)

with open(images_path, 'rb') as imgpath:

magic, num, rows, cols = struct.unpack('>IIII',imgpath.read(16))

images = np.fromfile(imgpath,dtype=np.uint8).reshape(len(labels), 784)

return images, labels

def load_mnist_test(path, kind='t10k'):

labels_path = os.path.join(path,'%s-labels.idx1-ubyte'% kind)

images_path = os.path.join(path,'%s-images.idx3-ubyte'% kind)

with open(labels_path, 'rb') as lbpath:

magic, n = struct.unpack('>II',lbpath.read(8))

labels = np.fromfile(lbpath,dtype=np.uint8)

with open(images_path, 'rb') as imgpath:

magic, num, rows, cols = struct.unpack('>IIII',imgpath.read(16))

images = np.fromfile(imgpath,dtype=np.uint8).reshape(len(labels), 784)

return images, labels

train_images,train_labels=load_mnist_train(path)

test_images,test_labels=load_mnist_test(path)

##标准化

X=preprocessing.StandardScaler().fit_transform(train_images)

X_train=X[0:60000]

y_train=train_labels[0:60000]

##定义并训练模型

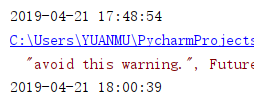

print(time.strftime('%Y-%m-%d %H:%M:%S'))

model_svc = svm.SVC()

model_svc.fit(X_train,y_train)

print(time.strftime('%Y-%m-%d %H:%M:%S'))##保存模型

file = open("model.pickle", "wb")

pickle.dump(model_svc, file)

file.close()

##读取模型

file = open("model.pickle", "rb")

model_svc = pickle.load(file)

file.close()

##评分并预测

x=preprocessing.StandardScaler().fit_transform(test_images)

x_test=x[0:10000]

y_pred=test_labels[0:10000]

print(model_svc.score(x_test,y_pred))

y=model_svc.predict(x_test)

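Training took nearly 12 minutes.

Test accuracy: 96.57%.

Printing the predictions shows they match the true labels for the samples inspected.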
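The loading functions above depend on MNIST's idx file layout. Each file starts with a big-endian header: the label file (idx1) stores the magic number 2049 and an item count, and the image file (idx3) stores the magic number 2051, an image count, rows, and columns. The raw `uint8` data follows the header.

The sketch below checks that layout on a tiny synthetic file built in memory, so it needs no dataset. The helper names `read_idx_labels` and `read_idx_images` are hypothetical. The sketch uses `np.frombuffer`, because `np.fromfile` does not accept in-memory streams.

```python
import io
import struct

import numpy as np


def read_idx_labels(f):
    # idx1 header: magic number (2049) and item count, big-endian uint32 ('>II')
    magic, n = struct.unpack('>II', f.read(8))
    assert magic == 2049, "not an idx1 label file"
    # The remaining n bytes are the labels, one uint8 each
    return np.frombuffer(f.read(n), dtype=np.uint8)


def read_idx_images(f):
    # idx3 header: magic number (2051), image count, rows, cols
    magic, num, rows, cols = struct.unpack('>IIII', f.read(16))
    assert magic == 2051, "not an idx3 image file"
    data = np.frombuffer(f.read(num * rows * cols), dtype=np.uint8)
    # One flattened row per image (28 * 28 = 784 columns for real MNIST)
    return data.reshape(num, rows * cols)


# Synthetic idx pair: 2 images of 2x3 pixels with labels 7 and 3
fake_labels = struct.pack('>II', 2049, 2) + bytes([7, 3])
fake_images = struct.pack('>IIII', 2051, 2, 2, 3) + bytes(range(12))

labels = read_idx_labels(io.BytesIO(fake_labels))
images = read_idx_images(io.BytesIO(fake_images))
print(labels)        # [7 3]
print(images.shape)  # (2, 6)
```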

MATLAB

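Automatic recognition of the MNIST handwritten digit database

Run cnet tool from the command line. When the GUI appears, select t10k-images.idx3-ubyte.

Testing directly with handwritten input also gives correct results.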

TensorFlow

Recognition with TensorFlow also works well.

Training the model

```python
from tensorflow.examples.tutorials.mnist import input_data
import tensorflow as tf  # TensorFlow 1.x API (placeholders and sessions)

# Path to the MNIST data; a raw string keeps the Windows backslashes literal
mnist = input_data.read_data_sets(r'E:\数据挖掘\MNIST_data', one_hot=True)

x = tf.placeholder(tf.float32, [None, 784])  # flattened 28x28 images
y_ = tf.placeholder(tf.float32, [None, 10])  # one-hot labels


def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)


def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')


def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')


# Conv layer 1: 5x5 kernels, 1 -> 32 channels, then 2x2 pooling (28x28 -> 14x14)
W_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
x_image = tf.reshape(x, [-1, 28, 28, 1])
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)

# Conv layer 2: 5x5 kernels, 32 -> 64 channels, then 2x2 pooling (14x14 -> 7x7)
W_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
h_pool2 = max_pool_2x2(h_conv2)

# Fully connected layer with 1024 units, followed by dropout
W_fc1 = weight_variable([7 * 7 * 64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
keep_prob = tf.placeholder(tf.float32)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# Output layer: 10 logits, one per digit
W_fc2 = weight_variable([1024, 10])
b_fc2 = bias_variable([10])
logits = tf.matmul(h_fc1_drop, W_fc2) + b_fc2
y_conv = tf.nn.softmax(logits)

# Loss computed from the logits, avoiding log(0) = -inf when a softmax output underflows
cross_entropy = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits_v2(labels=y_, logits=logits))
train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)
correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

saver = tf.train.Saver()  # saves the trained variables

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for i in range(600):
        batch = mnist.train.next_batch(50)
        if i % 100 == 0:
            train_accuracy = accuracy.eval(feed_dict={
                x: batch[0], y_: batch[1], keep_prob: 1.0})
            print('step %d, training accuracy %g' % (i, train_accuracy))
        train_step.run(feed_dict={x: batch[0], y_: batch[1], keep_prob: 0.5})
    saver.save(sess, 'C:/Users/YUANMU/PycharmProjects/tensorflow')  # checkpoint location
    print('test accuracy %g' % accuracy.eval(feed_dict={
        x: mnist.test.images, y_: mnist.test.labels, keep_prob: 1.0}))
```
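The `7 * 7 * 64` input size of the fully connected layer follows from the layer shapes. With `padding='SAME'`, TensorFlow's output size is `ceil(input / stride)`. The stride-1 convolutions therefore keep 28x28, and each stride-2 pooling halves the size.

This framework-free sketch repeats that arithmetic. The helper `same_output_size` is hypothetical.

```python
import math


def same_output_size(size, stride):
    # With padding='SAME', the output size is ceil(input / stride)
    return math.ceil(size / stride)


size = 28                          # MNIST images are 28x28
size = same_output_size(size, 1)   # conv1, stride 1 -> 28
size = same_output_size(size, 2)   # pool1, stride 2 -> 14
size = same_output_size(size, 1)   # conv2, stride 1 -> 14
size = same_output_size(size, 2)   # pool2, stride 2 -> 7

channels = 64                      # conv2 outputs 64 feature maps
flat_features = size * size * channels
print(size, flat_features)         # 7 3136
```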

Testing the model

```python
from PIL import Image
import tensorflow as tf  # TensorFlow 1.x API
import matplotlib.pyplot as plt


def imageprepare():
    # Path of the image to recognize; it should be 28x28 pixels
    im = Image.open('C:/Users/YUANMU/PycharmProjects/tensorflow/4.png')
    plt.imshow(im)  # show the image to be recognized
    plt.show()
    # Convert to 8-bit grayscale; resize in case the image is not already 28x28
    im = im.convert('L').resize((28, 28))
    tv = list(im.getdata())
    # Invert and scale to [0, 1]: MNIST digits are light strokes on a dark
    # background, while this input is expected to be a dark digit on a light one
    tva = [(255 - x) * 1.0 / 255.0 for x in tv]
    return tva


result = imageprepare()

# Rebuild the same network as in training so the variable names match the checkpoint
x = tf.placeholder(tf.float32, [None, 784])


def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)


def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')


def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')


W_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
x_image = tf.reshape(x, [-1, 28, 28, 1])
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)

W_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
h_pool2 = max_pool_2x2(h_conv2)

W_fc1 = weight_variable([7 * 7 * 64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
keep_prob = tf.placeholder(tf.float32)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

W_fc2 = weight_variable([1024, 10])
b_fc2 = bias_variable([10])
y_conv = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)

# Only inference is needed here, so no loss or optimizer is defined
saver = tf.train.Saver()

with tf.Session() as sess:
    # restore() assigns every variable in this graph, so no initializer is needed
    saver.restore(sess, 'C:/Users/YUANMU/PycharmProjects/tensorflow')  # same path as in training
    prediction = tf.argmax(y_conv, 1)
    predint = prediction.eval(feed_dict={x: [result], keep_prob: 1.0}, session=sess)
    print('Recognition result:')
    print(predint[0])
```
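The inversion in `imageprepare()` matters because MNIST stores light digits on a dark background with values scaled to [0, 1]. A digit drawn in dark ink on a white canvas has to be flipped to match.

This minimal sketch applies the same `(255 - x) / 255` transform with NumPy to a hypothetical synthetic 28x28 image, a white canvas with one black vertical stroke. No real input file is involved.

```python
import numpy as np
from PIL import Image, ImageDraw

# Hypothetical input: white 28x28 canvas with a black vertical stroke
im = Image.new('L', (28, 28), color=255)
ImageDraw.Draw(im).line([(14, 4), (14, 23)], fill=0, width=3)

# Same transform as imageprepare(): invert and scale to [0, 1], row-major order
pixels = np.asarray(im, dtype=np.float32).reshape(784)
tva = (255.0 - pixels) / 255.0

print(tva.shape)  # (784,)
# The background becomes 0.0 (like MNIST's dark background), the stroke becomes 1.0
print(tva[0], tva[10 * 28 + 14])
```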


来一块葱花饼

Published 17 original articles · 12 likes · 1926 views
