学习笔记:python+CNN识别手写数字(自己的数据集)2.0

2020-01-13 23:38

393 查看

由1.0升级的这个2.0其实也非常的简单,无非是按照1.0预处理那样的方式,处理好图片,准确率确实提高了

1.数据集

我是做成黑底白字的图片放在data文件夹里

命名格式是0_,1_这种,_前代表label,图片来源于网上

2.训练,细心的朋友可以发现我省略了验证集的部分

import os

os.environ['TF_CPP_MIN_LOG_LEVEL']='2'

import tensorflow as tf

old_v = tf.compat.v1.logging.get_verbosity()

tf.compat.v1.logging.set_verbosity(old_v)

tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)

import tensorflow.examples.tutorials.mnist.input_data as input_data

from PIL import Image

import numpy as np

import random

import cv2

data_dir="data"

MODEL_SAVE_PATH = "model_data/"

MODEL_NAME = "save_net.ckpt"

def weight_variable(shape):

initial = tf.truncated_normal(shape, stddev=0.1)

return tf.Variable(initial)

def bias_variable(shape):

initial = tf.constant(0.1, shape=shape)

return tf.Variable(initial)

def conv2d(x, W):

return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding="SAME")

def max_pool_2x2(x):

return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

def read_data(data_dir):

datas = []

labes = []

fnames = []

for fname in os.listdir(data_dir):

fpath = os.path.join(data_dir, fname)

fnames.append(fname)

image = Image.open(fpath)

data = np.array(image) /255.0

data=cv2.resize(data,(28,28),interpolation=cv2.INTER_AREA)

label = int(fname.split("_",1)[0])

datas.append(data)

labes.append(label)

datas = np.array(datas)

datas = np.reshape(datas, (-1, 784))

labels=np.zeros((200,10))

for i in range(0, 200):

m = labes[i]

labels[i][m] = 1

print("shape of datas: {}\tshape of labels: {}".format(datas.shape,labels.shape))

return fnames, datas, labels

fnames, datas, labels = read_data(data_dir)

x = tf.placeholder(tf.float32, [None, 784])

w_conv1 = weight_variable([5, 5, 1, 32])

b_conv1 = bias_variable([32])

x_image = tf.reshape(x, [-1, 28, 28, 1])

y_ = tf.placeholder("float", [None, 10])

h_conv1 = tf.nn.relu(conv2d(x_image, w_conv1) + b_conv1)

h_pool1 = max_pool_2x2(h_conv1)

w_conv2 = weight_variable([5, 5, 32, 64])

b_conv2 = bias_variable([64])

h_conv2 = tf.nn.relu(conv2d(h_pool1, w_conv2) + b_conv2)

h_pool2 = max_pool_2x2(h_conv2)

w_fc1 = weight_variable([7 * 7 * 64, 1024])

b_fc1 = bias_variable([1024])

h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])

h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, w_fc1) + b_fc1)

keep_prob = tf.placeholder("float")

h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

w_fc2 = weight_variable([1024, 10])

b_fc2 = bias_variable([10])

y_conv = tf.nn.softmax(tf.matmul(h_fc1_drop, w_fc2) + b_fc2)

cross_entropy = -tf.reduce_sum(y_ * tf.log(y_conv))

train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))

saver = tf.train.Saver()

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

for i in range(4000):

batchdata = []

batchlabel = []

for j in range(0, 50):

a = random.randint(0, 199)

batchdata.append(datas[a])

batchlabel.append(labels[a])

batchdata = np.array(batchdata)

batchlabel = np.array(batchlabel)

if i % 100 == 0:

train_accuracy = accuracy.eval(feed_dict={x: batchdata, y_: batchlabel, keep_prob: 1})

print("step %d,training accuracy %g" % (i, train_accuracy))

train_step.run(feed_dict={x: batchdata, y_: batchlabel, keep_prob: 0.5})

print("test accuracy %g" % accuracy.eval(feed_dict={x: datas, y_: labels, keep_prob: 0.5}))

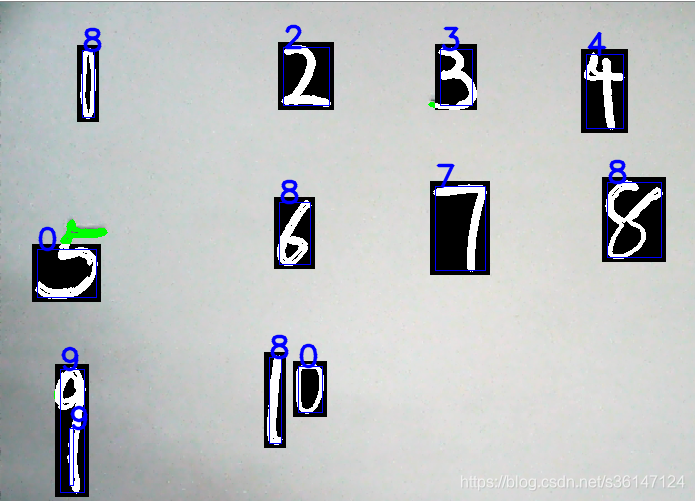

3.测试效果,和1.0相比没变化

import os

os.environ['TF_CPP_MIN_LOG_LEVEL']='2'

import tensorflow as tf

old_v = tf.compat.v1.logging.get_verbosity()

tf.compat.v1.logging.set_verbosity(old_v)

tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)

import numpy as np

import imutils

import cv2

def weight_variable(shape):

initial = tf.truncated_normal(shape,stddev = 0.1)

return tf.Variable(initial)

def bias_variable(shape):

initial = tf.constant(0.1,shape = shape)

return tf.Variable(initial)

def conv2d(x,W):

return tf.nn.conv2d(x, W, strides = [1,1,1,1], padding = 'SAME')

def max_pool_2x2(x):

return tf.nn.max_pool(x, ksize=[1,2,2,1], strides=[1,2,2,1], padding='SAME')

im=cv2.imread('yuanshi.png')

im=imutils.resize(im,height=500)

gray=cv2.cvtColor(im,cv2.COLOR_BGR2GRAY)

imgG=cv2.GaussianBlur(gray,(5,5),0)

erosion=cv2.erode(imgG,(3,3),iterations=3)

dilate=cv2.dilate(erosion,(3,3),iterations=3)

edged=cv2.Canny(dilate,80,200,255)

aa,contours, hierarchy=cv2.findContours(edged,cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

cv2.drawContours(im,contours,-1,(0,255,0),3)

digitcnts=[]

for i in contours:

(x,y,w,h)=cv2.boundingRect(i)

if w <100 and h > 45 and h <160:

digitcnts.append(i)

m = 0

for c in digitcnts:

(x, y, w, h) = cv2.boundingRect(c)

m += 1

roi = im[y-5:y + h+5, x-5:x + w+5]

height, width, channel = roi.shape

for i in range(height):

for j in range(width):

b, g, r = roi[i, j]

if g > 250:

b = 255

r = 255

g = 255

else:

b = 0

g = 0

r = 0

roi[i, j] = [r, g, b]

cv2.imwrite('%d.png' % m, roi)

x = tf.placeholder(tf.float32, [None, 784])

y_ = tf.placeholder(tf.float32, [None, 10])

W_conv1 = weight_variable([5, 5, 1, 32])

b_conv1 = bias_variable([32])

x_image = tf.reshape(x,[-1,28,28,1])

h_conv1 = tf.nn.relu(conv2d(x_image,W_conv1) + b_conv1)

h_pool1 = max_pool_2x2(h_conv1)

W_conv2 = weight_variable([5, 5, 32, 64])

b_conv2 = bias_variable([64])

h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)

h_pool2 = max_pool_2x2(h_conv2)

W_fc1 = weight_variable([7 * 7 * 64, 1024])

b_fc1 = bias_variable([1024])

h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])

h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

keep_prob = tf.placeholder("float")

h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

W_fc2 = weight_variable([1024, 10])

b_fc2 = bias_variable([10])

y_conv=tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)

cross_entropy = -tf.reduce_sum(y_*tf.log(y_conv))

train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y_conv,1), tf.argmax(y_,1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))

saver = tf.train.Saver()

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

saver.restore(sess, "model_data/save_net.ckpt") # 使用模型,参数和之前的代码保持一致

outputlist=[]

k=0

for c in digitcnts:

k=k+1

img = cv2.imread('%d.png' % k)

img = cv2.resize(img, (28, 28), interpolation=cv2.INTER_CUBIC)

gray = (cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)) / 255

img = np.reshape(gray, [-1, 784])

prediction = tf.argmax(y_conv, 1)

predint=prediction.eval(feed_dict={x: img,keep_prob: 1.0}, session=sess)

print('the predict is : ', predint[0])

outputlist.append(predint[0])

p = 0

for c in digitcnts:

(x, y, w, h) = cv2.boundingRect(c)

d=outputlist

p= p+1

font = cv2.FONT_HERSHEY_SIMPLEX

cv2.rectangle(im, (x, y), (x + w, y + h), (255, 0, 0), 0)

cv2.putText(im,str(d),(x,y),font,1,(255,0,0),2,cv2.LINE_AA)

cv2.imshow('img', im)

cv2.waitKey(0)

cv2.destroyAllWindows()

[p]

其实可以发现,对于书写清楚,筛选范围内数字准确率祥比mnist数据集提高了两成吧,比较我的数据集只有200个图片

- 点赞

- 收藏

- 分享

- 文章举报

该死的碳酸饮料呀

发布了18 篇原创文章 · 获赞 0 · 访问量 177

私信

关注

该死的碳酸饮料呀

发布了18 篇原创文章 · 获赞 0 · 访问量 177

私信

关注

相关文章推荐

- 【CNTK】CNTK学习笔记之制作自己的数据集(以MNIST手写数字数据集为例)

- Python 学习笔记(Machine Learning In Action)K-近邻算法识别手写数字

- 手写数字识别-(卷积神经网络)-基于keras的python学习笔记(十一)

- Python scikit-learn 学习笔记—手写数字识别

- caffe学习笔记——python+mnist手写数字识别

- caffe的python接口学习(4):mnist实例---手写数字识别

- TensorFlow学习-基于CNN实现手写数字识别

- 5/12 cnn 手写字体识别代码学习笔记

- [Python]基于CNN的MNIST手写数字识别

- 学习笔记TF024:TensorFlow实现Softmax Regression(回归)识别手写数字

- Caffe——Python接口学习(4):mnist实例——手写数字识别

- 深度学习笔记(四)用Torch实现MNIST手写数字识别

- 【深度学习】笔记2_caffe自带的第一个例子,Mnist手写数字识别代码,过程,网络详解

- TensorFlow学习_02_CNN卷积神经网络_Mnist手写数字识别

- 深度学习框架Caffe学习笔记(2)-MNIST手写数字识别例程

- Tensorflow深度学习之八:再探CNN解决mnist手写数字识别问题

- 学习笔记(五)Tensorflow实现Soft Regression简单识别MNIST手写数字

- Keras_深度学习_MNIST数据集手写数字识别之各种调参

- DL之LiR&DNN&CNN:利用LiR、DNN、CNN算法对MNIST手写数字图片(csv)识别数据集实现(10)分类预测

- 深度学习笔记(一):mnist 数据集手写数字图片识别