[Python] Web scraper: fetching the five-level administrative divisions (2018)

2019-07-07 09:38

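Copyright notice: this is an original article by the blogger, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.

Article link: https://blog.csdn.net/weixin_43826590/article/details/94963563

Updated 2019-07-07. Corrections and criticism are welcome.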

Known issues:

(1) Retrying after the connection is reset

(2) The character set is GBK
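
For issue (1), one possible approach is to retry a bounded number of times with a growing delay. The following is only a minimal sketch: the names `fetch_with_retry`, `max_attempts`, `base_delay` and `sleep` are assumptions made for illustration and do not appear in the script. The helper accepts any zero-argument callable, so it can be tried without network access.

```python
import time


def fetch_with_retry(fetch, exceptions=(ConnectionError,), max_attempts=4,
                     base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it succeeds or max_attempts is exhausted.

    The delay doubles after every failure (base_delay, 2 * base_delay, ...).
    If every attempt fails, the last exception is re-raised.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except exceptions:
            if attempt == max_attempts:
                raise
            # Wait longer after each consecutive failure.
            sleep(base_delay * 2 ** (attempt - 1))
```

With `requests`, the call could look like `fetch_with_retry(lambda: requests.get(url, headers=headers), exceptions=(requests.exceptions.ConnectionError,))`. The exception type has to be passed explicitly, because `requests.exceptions.ConnectionError` is not a subclass of the built-in `ConnectionError`.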

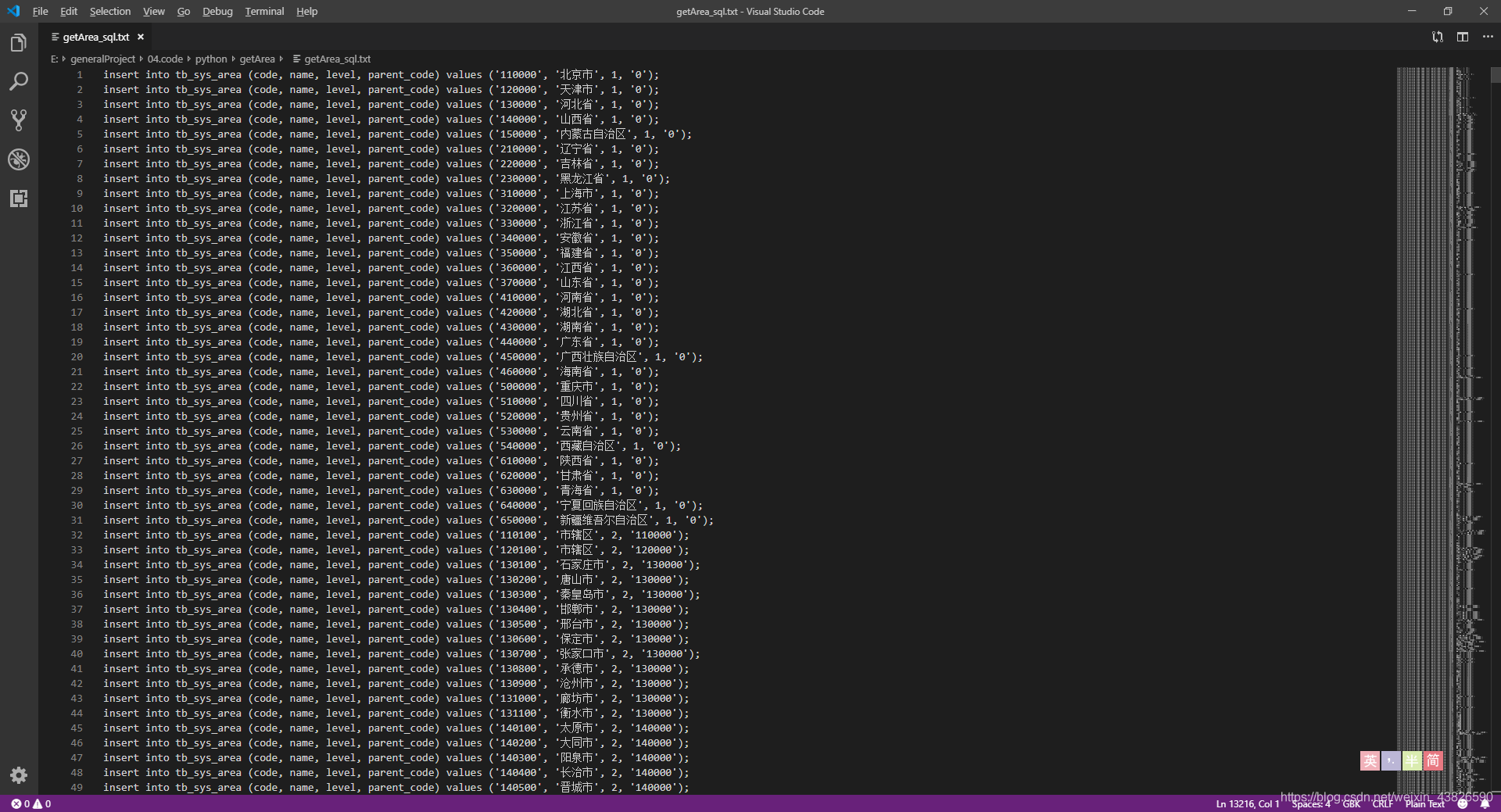

Final result (note the character set)
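
On the character set: the script decodes the pages as GBK and opens the output file without naming an encoding, so the encoding of the file depends on the platform default. If UTF-8 output is wanted, the encoding can be stated explicitly when the file is opened. A small sketch under that assumption; `write_statements` is a hypothetical helper, and the sample row is made up for illustration.

```python
import os
import tempfile


def write_statements(path, statements, encoding="utf-8"):
    """Write SQL statements to path using an explicit text encoding."""
    with open(path, "w", encoding=encoding, newline="\n") as out:
        for statement in statements:
            out.write(statement + ";\n")


# Bytes as a GBK-encoded page would deliver them, decoded explicitly.
raw = "北京市".encode("gbk")
name = raw.decode("gbk")

path = os.path.join(tempfile.mkdtemp(), "getArea_sql.txt")
write_statements(path, [
    "insert into tb_sys_area (code, name, level, parent_code) "
    "values ('110000', '%s', 1, '0')" % name
])

# Read the file back as raw bytes to inspect what was written.
with open(path, "rb") as check:
    data = check.read()
```

The bytes in `data` are UTF-8 regardless of the operating system's default encoding, which is the point of passing `encoding` explicitly.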

Source code:

[code]# -*- coding: UTF-8 -*-
"""
Fetch the statistical division codes and urban-rural classification codes
for 2018.
http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2018/
"""
import re
import time

import requests
from bs4 import BeautifulSoup

headers = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36"
}

# Output file for the generated INSERT statements. No encoding is given,
# so the platform default applies.
f = open('getArea_sql.txt', 'w')

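def getItem(itemData, dataArray, parentRequestUrl, type):
    """Build one division record from a table row and write its INSERT statement."""
    item = {}
    # Village rows (type 5) hold <td> cells: code, urban-rural class, name.
    # Rows of the higher levels hold two <a> tags: code, name.
    if type == 5:
        item['name'] = str(dataArray[2].get_text())
    else:
        item['name'] = str(dataArray[1].get_text())
    # URL of the next level, relative to the directory of the parent page
    href = re.findall('(.*)/', parentRequestUrl)
    if type != 5:
        item['href'] = href[0] + '/' + dataArray[0].get('href')
    # Parent code
    item['parentCode'] = itemData.get('code')
    # Level (2 = city, 3 = county, 4 = town, 5 = village)
    item['type'] = type
    # Code: 6 digits down to county level, the full 12 digits below that
    if type <= 3:
        item['code'] = str(dataArray[0].get_text())[0:6]
    else:
        item['code'] = str(dataArray[0].get_text())[0:12]
    # SQL statement
    f.write('insert into tb_sys_area (code, name, level, parent_code) values (\'%s\', \'%s\', %s, \'%s\')' % (
        item['code'], item['name'], item['type'], item['parentCode']
    ) + ";\n")
    return item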

# Fetch a page and return it as a BeautifulSoup object
def getSoup(requestUrl):
    # The crawl is large, so the connection may be aborted; wait and retry once
    try:
        htmls = requests.get(requestUrl, headers=headers)
    except requests.exceptions.ConnectionError:
        time.sleep(5)
        htmls = requests.get(requestUrl, headers=headers)
    # Decode the response body as GBK
    htmls.encoding = 'GBK'
    soup = BeautifulSoup(htmls.text, 'html.parser')
    return soup

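# Process every matching row of the current page
def loopItem(label, labelClass, labelChild, item, requestUrl, type, lists):
    # Reads the module-level `soup` that the caller assigns before each call
    for link in soup.find_all(label, labelClass):
        array = link.find_all(labelChild, class_='')
        # Skip rows without the expected child elements
        if not len(array):
            continue
        itemData = getItem(item, array, requestUrl, type)
        lists.append(itemData)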

requestProvinceUrl = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2018/index.html'
soup = getSoup(requestProvinceUrl)

# Province list
provinceList = []
for link in soup.find_all('a', class_=''):
    item = {}
    # Name
    item['name'] = str(link.get_text())
    # URL of the next level
    href = str(link.get('href'))
    item['href'] = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2018/' + href
    # Parent code
    item['parentCode'] = '0'
    # Level
    item['type'] = '1'
    # Code: the file name of the province page padded with '0000'
    item['code'] = (href.split('.'))[0] + '0000'
    provinceList.append(item)
    f.write('insert into tb_sys_area (code, name, level, parent_code) values (\'%s\', \'%s\', %s, \'%s\')' % (
        item['code'], item['name'], item['type'], item['parentCode']
    ) + ";\n")

# City list
cityList = []
for item in provinceList:
    cityRequestUrl = str(item.get('href'))
    soup = getSoup(cityRequestUrl)
    loopItem('tr', 'citytr', 'a', item, cityRequestUrl, 2, cityList)

# County list
countyList = []
for item in cityList:
    countyRequestUrl = str(item.get('href'))
    soup = getSoup(countyRequestUrl)
    loopItem('tr', 'countytr', 'a', item, countyRequestUrl, 3, countyList)

# Town list
townList = []
for item in countyList:
    townRequestUrl = str(item.get('href'))
    soup = getSoup(townRequestUrl)
    loopItem('tr', 'towntr', 'a', item, townRequestUrl, 4, townList)

# Village list
villageList = []
for item in townList:
    villageRequestUrl = str(item.get('href'))
    soup = getSoup(villageRequestUrl)
    loopItem('tr', 'villagetr', 'td', item, villageRequestUrl, 5, villageList)

# Flush and close the output file
f.close()
[/code]
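
The script builds each child URL by cutting the parent URL at its last slash with a regular expression and appending the relative `href`. The standard library's `urllib.parse.urljoin` performs the same resolution and may be easier to read. A minimal sketch: `child_url` is a hypothetical helper, and the sample `href` values are assumptions that follow the directory pattern the code relies on.

```python
from urllib.parse import urljoin


def child_url(parent_url, href):
    """Resolve a relative href against the page it was found on."""
    return urljoin(parent_url, href)


base = "http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2018/"
index = base + "index.html"

# Each level's href is relative to the directory of the page that lists it.
province_11 = child_url(index, "11.html")
city_1101 = child_url(province_11, "11/1101.html")
county_110101 = child_url(city_1101, "01/110101.html")
```

Here `county_110101` resolves to `base + "11/01/110101.html"`, the same result as stripping the file name from the parent URL and appending the `href`.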

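
The slicing in `getItem` (6 digits down to county level, 12 digits for towns and villages) follows the layout of the 12-digit statistical division code: two digits each for province, city and county, then three digits each for town and village. The sketch below makes that layout explicit; `split_division_code` is a hypothetical helper and the sample code is chosen only for illustration.

```python
def split_division_code(code):
    """Return the cumulative prefix of a 12-digit code for each level."""
    if len(code) != 12 or not code.isdigit():
        raise ValueError("expected a 12-digit code, got %r" % code)
    return {
        "province": code[0:2],
        "city": code[0:4],
        "county": code[0:6],
        "town": code[0:9],
        "village": code[0:12],
    }


parts = split_division_code("110101001001")
```

Because every prefix identifies the enclosing division, a record's parent could also be derived from its own code, as an alternative to passing `parentCode` down from the parent page.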