FFmpeg: test code for decoding and displaying a camera video stream (old interface)

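Here a video stream is captured from a camera (note: the built-in camera on Windows 7/10, an ordinary Logitech USB camera on Linux), decoded, and finally displayed with OpenCV. The modules used are avformat, avcodec and avdevice, plus swscale for pixel-format conversion. libavdevice is a complementary library to libavformat. The main interface functions involved are listed below. Note: some of them are deprecated old interfaces.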

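1. avdevice_register_all: initializes libavdevice and registers all input and output devices;

2. av_find_input_format: finds an AVInputFormat by the short name of the input format. The test code uses "vfwcap" (Video for Windows capture) on Windows and "video4linux2" (Video4Linux2, also registered under the short name "v4l2") on Linux;

3. avformat_alloc_context: allocates an AVFormatContext;

4. avformat_open_input: opens the input stream and reads its header;

5. avformat_find_stream_info: reads packets from the media input to obtain stream information;

6. Find the video/audio stream index through the AVStream entries of the AVFormatContext. On Windows 10 the codec type obtained here is mjpeg (AV_CODEC_ID_MJPEG) with pixel format yuvj422p (AV_PIX_FMT_YUVJ422P); on Linux or Windows 7 the codec type is rawvideo (AV_CODEC_ID_RAWVIDEO) with pixel format yuyv422 (AV_PIX_FMT_YUYV422). So the codec type and pixel format may differ from camera to camera;

7. avcodec_find_decoder: finds a registered decoder by codec ID;

8. avcodec_open2: initializes the AVCodecContext to use the given decoder;

9. av_frame_alloc: allocates an AVFrame and sets its fields to default values;

10. av_malloc: allocates a memory block, here for an AVPacket;

11. sws_getContext: allocates a SwsContext;

12. av_read_frame: reads the next chunk of the stream as a packet (AVPacket);

13. avcodec_decode_video2: decodes a video frame, from stream data to image data (deprecated; newer FFmpeg versions use avcodec_send_packet/avcodec_receive_frame instead);

14. sws_scale: converts the image format;

15. av_free_packet: releases the data held by the AVPacket (deprecated; newer FFmpeg versions use av_packet_unref instead);

16. av_frame_free: frees the AVFrame allocated by av_frame_alloc;

17. sws_freeContext: frees the SwsContext allocated by sws_getContext;

18. av_free: frees the AVPacket memory allocated by av_malloc;

19. avformat_close_input: closes the opened AVFormatContext and frees it.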
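For the rawvideo case in item 6, the format conversion in item 14 amounts to unpacking YUYV422 into BGR24. The block below is a minimal sketch of that idea, not the swscale implementation. The names `yuyv422_to_bgr24` and `clamp_u8` are hypothetical, and the code assumes BT.601 limited-range input, a common integer approximation of the coefficients, and an even width; a particular camera may use a different range or matrix.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Clamp an intermediate value to the 0..255 range of an 8-bit channel.
static uint8_t clamp_u8(int v)
{
    return static_cast<uint8_t>(std::min(255, std::max(0, v)));
}

// Convert a packed YUYV422 image to packed BGR24 (hypothetical helper).
// Strides are in bytes per row. Assumes BT.601 limited range and an even width.
void yuyv422_to_bgr24(const uint8_t* src, int src_stride,
                      uint8_t* dst, int dst_stride, int width, int height)
{
    assert(width % 2 == 0);
    for (int y = 0; y < height; ++y) {
        const uint8_t* s = src + y * src_stride;
        uint8_t* d = dst + y * dst_stride;
        for (int x = 0; x < width; x += 2) {
            // Every 4 source bytes hold two pixels: Y0 U Y1 V (U and V are shared).
            const int u = s[1] - 128;
            const int v = s[3] - 128;
            for (int k = 0; k < 2; ++k) {
                const int c = 298 * (s[2 * k] - 16);
                d[0] = clamp_u8((c + 516 * u + 128) / 256);           // B
                d[1] = clamp_u8((c - 100 * u - 208 * v + 128) / 256); // G
                d[2] = clamp_u8((c + 409 * v + 128) / 256);           // R
                d += 3;
            }
            s += 4;
        }
    }
}
```

In the test code this work is left to sws_scale, which also covers the yuvj422p frames produced by the MJPEG decoder, so a hand-written loop like this is only useful for seeing what the packed layout looks like.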

Test code (test_ffmpeg_decode_show.cpp):

[code]#include "funset.hpp"
#include <stdio.h>
#include <iostream>
#include <memory>
#include <fstream>

#ifdef __cplusplus
extern "C" {
#endif

#include <libavdevice/avdevice.h>
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libswscale/swscale.h>
#include <libavutil/mem.h>
#include <libavutil/imgutils.h>

#ifdef __cplusplus
}
#endif

#include <opencv2/opencv.hpp>

int test_ffmpeg_decode_show_old()
{
    // register all input/output devices
    avdevice_register_all();

#ifdef _MSC_VER
    const char* input_format_name = "vfwcap";
    const char* url = "";
#else
    const char* input_format_name = "video4linux2";
    const char* url = "/dev/video0";
#endif

    AVInputFormat* input_fmt = av_find_input_format(input_format_name);
    AVFormatContext* format_ctx = avformat_alloc_context();

    // open the capture device and read the header
    int ret = avformat_open_input(&format_ctx, url, input_fmt, nullptr);
    if (ret != 0) {
        fprintf(stderr, "fail to open url: %s, return value: %d\n", url, ret);
        return -1;
    }

    ret = avformat_find_stream_info(format_ctx, nullptr);
    if (ret < 0) {
        fprintf(stderr, "fail to get stream information: %d\n", ret);
        return -1;
    }

    // look for the video stream (nb_streams is unsigned)
    int video_stream_index = -1;
    for (unsigned int i = 0; i < format_ctx->nb_streams; ++i) {
        const AVStream* stream = format_ctx->streams[i];
        if (stream->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            video_stream_index = static_cast<int>(i);
            fprintf(stdout, "type of the encoded data: %d, dimensions of the video frame in pixels: width: %d, height: %d, pixel format: %d\n",
                stream->codecpar->codec_id, stream->codecpar->width, stream->codecpar->height, stream->codecpar->format);
        }
    }

    if (video_stream_index == -1) {
        fprintf(stderr, "no video stream\n");
        return -1;
    }

    // old interface: use the codec context embedded in the stream
    AVCodecContext* codec_ctx = format_ctx->streams[video_stream_index]->codec;
    AVCodec* codec = avcodec_find_decoder(codec_ctx->codec_id);
    if (!codec) {
        fprintf(stderr, "no decoder was found\n");
        return -1;
    }

    ret = avcodec_open2(codec_ctx, codec, nullptr);
    if (ret != 0) {
        fprintf(stderr, "fail to init AVCodecContext: %d\n", ret);
        return -1;
    }

    AVFrame* frame = av_frame_alloc();
    AVPacket* packet = (AVPacket*)av_malloc(sizeof(AVPacket));
    // converts the decoder's pixel format to BGR24 at the same size
    SwsContext* sws_ctx = sws_getContext(codec_ctx->width, codec_ctx->height, codec_ctx->pix_fmt, codec_ctx->width, codec_ctx->height, AV_PIX_FMT_BGR24, 0, nullptr, nullptr, nullptr);
    if (!frame || !packet || !sws_ctx) {
        fprintf(stderr, "fail to alloc\n");
        return -1;
    }

    int got_picture = -1;
    // tightly packed BGR24 buffer; mat is only a header wrapping it
    std::unique_ptr<uint8_t[]> data(new uint8_t[codec_ctx->width * codec_ctx->height * 3]);
    cv::Mat mat(codec_ctx->height, codec_ctx->width, CV_8UC3, data.get());
    int width_new = 320, height_new = 240;
    cv::Mat dst(height_new, width_new, CV_8UC3);
    const char* winname = "usb video1";
    cv::namedWindow(winname);

    while (1) {
        ret = av_read_frame(format_ctx, packet);
        if (ret < 0) {
            fprintf(stderr, "fail to av_read_frame: %d\n", ret);
            break; // stop instead of spinning forever if the device can no longer be read
        }

        if (packet->stream_index == video_stream_index) {
            ret = avcodec_decode_video2(codec_ctx, frame, &got_picture, packet);
            if (ret < 0) {
                fprintf(stderr, "fail to avcodec_decode_video2: %d\n", ret);
                av_free_packet(packet);
                continue;
            }

            if (got_picture) {
                uint8_t* p[1] = { data.get() };
                int dst_stride[1] = { frame->width * 3 };
                sws_scale(sws_ctx, frame->data, frame->linesize, 0, codec_ctx->height, p, dst_stride);
                cv::resize(mat, dst, cv::Size(width_new, height_new));
                cv::imshow(winname, dst);
            }
        }

        av_free_packet(packet);

        int key = cv::waitKey(25);
        if (key == 27) break; // ESC quits
    }

    cv::destroyWindow(winname);
    av_frame_free(&frame);
    sws_freeContext(sws_ctx);
    av_free(packet);
    avformat_close_input(&format_ctx);

    fprintf(stdout, "test finish\n");
    return 0;
}
[/code]
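The test code passes `dst_stride = width * 3` to sws_scale so that the BGR24 rows come out tightly packed and can be viewed directly as a `CV_8UC3` cv::Mat. The rows of a decoded frame, by contrast, are `linesize` bytes apart, which may be more than the visible row because of alignment padding. The sketch below shows the difference with a plain row-by-row copy. `pack_rows` is a hypothetical helper written for illustration and is not part of FFmpeg or OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy an image whose rows start src_linesize bytes apart into a buffer
// with no padding between rows (hypothetical helper).
std::vector<uint8_t> pack_rows(const uint8_t* src, int src_linesize,
                               int width, int height, int bytes_per_pixel)
{
    const size_t row_bytes = static_cast<size_t>(width) * bytes_per_pixel;
    assert(static_cast<size_t>(src_linesize) >= row_bytes);

    std::vector<uint8_t> packed(row_bytes * height);
    for (int y = 0; y < height; ++y) {
        // Only the visible part of each source row is copied; padding is skipped.
        std::memcpy(packed.data() + y * row_bytes,
                    src + static_cast<size_t>(y) * src_linesize,
                    row_bytes);
    }
    return packed;
}
```

For a 2x2 BGR24 image stored with a linesize of 8, each source row holds 6 image bytes followed by 2 padding bytes, and the packed result holds the 12 image bytes back to back.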

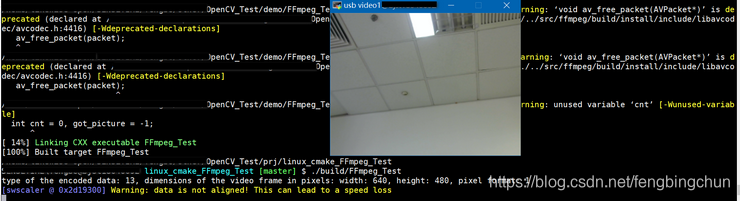

执行结果如下:
