爬虫学习Day7:实战项目

2019-03-06 23:16

127 查看

文章目录

任务

【Task7 实战大项目】:(1天)

实战大项目:模拟登录丁香园,并抓取论坛页面所有的人员基本信息与回复帖子内容。

丁香园论坛:http://www.dxy.cn/bbs/thread/626626#626626 。

思路

- 找到登录页面

- selenium模拟登录

- 抓取回帖内容

找到登录页面

登录网页:

https://auth.dxy.cn/accounts/login?service=http://www.dxy.cn/bbs/

browser = webdriver.Firefox() return browser def web_login(browser, url): browser.get(url) sleep(random.random()*3) #等待一段随机时间

需要点击“返回电脑登录”,才会显示用户名、密码框

login_link = browser.find_element_by_link_text('返回电脑登录')

login_link.click()

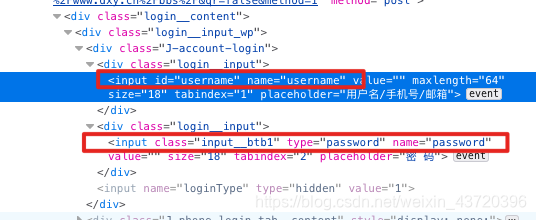

Inspect:

继续,定位用户名、密码框:

username = browser.find_element_by_name('username')

username.clear()

username.send_keys('username')

sleep(random.random()*3) # 等待一段随机时间

password = browser.find_element_by_name('password')

password.send_keys('********')

sleep(random.random()*3) # 等待一段随机时间

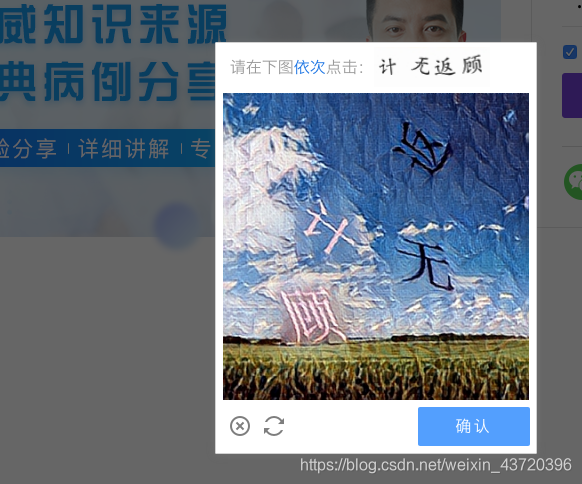

browser.find_element_by_class_name('button').click()

sleep(10)

出现需要点击图片验证,搞不定,手工点击:

获取网页内容

大致参照前几天BS4的内容:

https://blog.csdn.net/weixin_43720396/article/details/88087934

def get_web_detail(browser, url):

browser.get(url)

sleep(random.random()*8) # 等待一段随机时间

data = {}

soup = BeautifulSoup(browser.page_source, 'html.parser')

data['title'] = soup.title.string

data['replies'] = soup.select('.postbody')

data['authors'] = soup.select('.auth')

return data

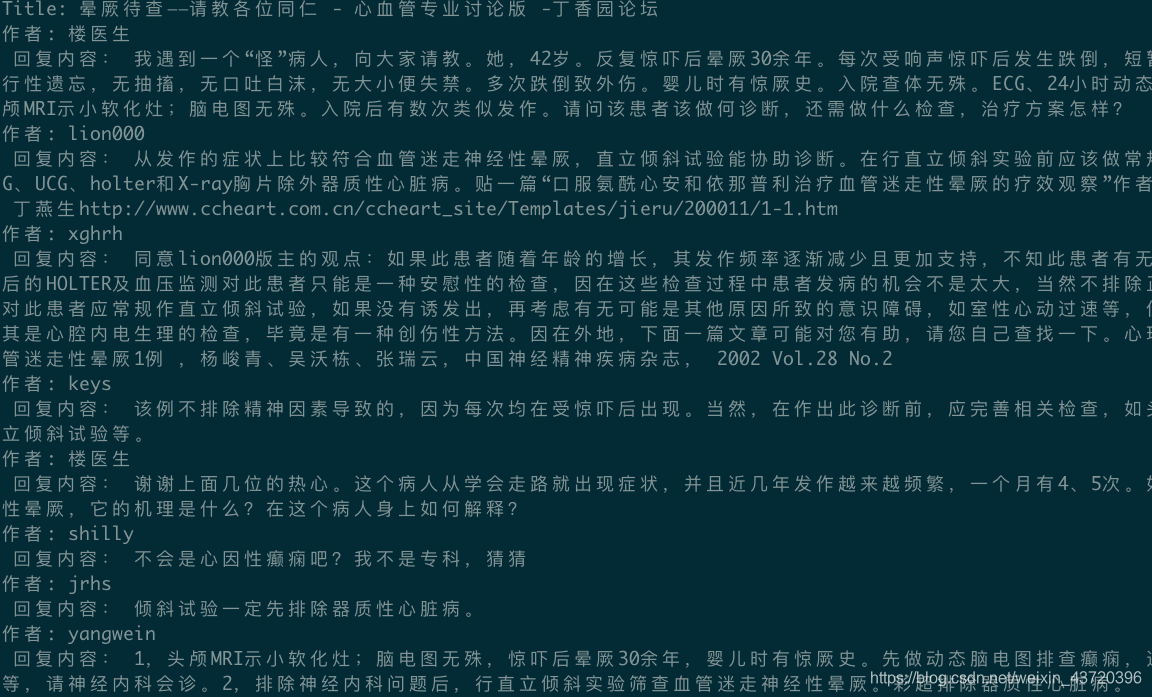

def show_data(data):

print(f'Title: {data["title"]}')

replies = []

for reply in data['replies']:

replies.append(reply.text.strip())

authors = []

for author in data['authors']:

authors.append(author.text)

for i in range(len(replies)):

print(f'作者: {authors[i]} \n 回复内容: {replies[i]}')

可以得到以下结果

全部代码如下:

from selenium import webdriver

from time import sleep

import random

from bs4 import BeautifulSoup

import json

def open():

browser = webdriver.Firefox()

# browser = webdriver.Chrome()

return browser

def web_login(browser, url):

browser.get(url)

sleep(random.random()*3) # 等待一段随机时间

login_link = browser.find_element_by_link_text('返回电脑登录')

login_link.click()

sleep(random.random()*3) # 等待一段随机时间

username = browser.find_element_by_name('username')

username.clear()

username.send_keys('username')

sleep(random.random()*3) # 等待一段随机时间

password = browser.find_element_by_name('password')

password.send_keys('password')

sleep(random.random()*3) # 等待一段随机时间

browser.find_element_by_class_name('button').click()

sleep(10)

cookie = browser.get_cookies()

return {i['name']: i['value'] for i in cookie}

def get_web_detail(browser, url):

browser.get(url)

sleep(random.random()*8) # 等待一段随机时间

data = {}

soup = BeautifulSoup(browser.page_source, 'html.parser')

data['title'] = soup.title.string

data['replies'] = soup.select('.postbody')

data['authors'] = soup.select('.auth')

return data

def show_data(data):

print(f'Title: {data["title"]}')

replies = []

for reply in data['replies']:

replies.append(reply.text.strip())

authors = []

for author in data['authors']:

authors.append(author.text)

for i in range(len(replies)):

print(f'作者: {authors[i]} \n 回复内容: {replies[i]}')def main():

browser = open()

web_login(browser, login_url)

data = get_web_detail(browser, url)

show_data(data)

if __name__ == '__main__':

login_url = 'https://auth.dxy.cn/accounts/login'

url = 'http://www.dxy.cn/bbs/thread/626626# 626626'

main()

相关文章推荐

- 苏嵌项目实战 学习日志

- JavaWeb 学习(Java Web 典型模块与项目实战大全)

- 基础爬虫框架及运行(选自范传辉Python爬虫开发与项目实战)

- Android 项目实战视频资料 学习充电必备

- Python网络爬虫实战项目代码大全

- 开源学习 百度推送实战项目 友聊 (二)

- python 爬虫学习三(Scrapy 实战,豆瓣爬取电影信息)

- 【备忘】深度学习实战项目-利用RNN与LSTM网络原理进行唐诗生成视频课程

- SpringMVC+Mybatis+Mysql实战项目学习--环境搭建【转】

- Python爬虫框架Scrapy 学习笔记 10.1 -------【实战】 抓取天猫某网店所有宝贝详情

- 学习《第一行代码》之实战项目-天气预报API实例

- Vue.js学习之路—项目中实战学习(二)编码空格

- 精通 Python 网络爬虫:核心技术、框架与项目实战

- 30天学习Swift项目实战第二天--------自定义字体

- swift学习第八天 项目实战-知乎日报之UIScollView和UIpageConrolView使用

- 爬虫实战项目

- asp.net4.0网站开发与项目实战—学习笔记1

- Python的学习笔记DAY7---关于爬虫(2)之Scrapy初探

- Python大型网络爬虫项目开发实战

- 学习贪吃蛇JS项目实战笔记2