[Python] Web Crawler Example (Static Website)

2018-10-12 21:01

Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/m0_37811192/article/details/83032620

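Features of this crawler:

1. Target: a static website

2. Depth: two levels (the chapter index page, then each chapter page)

3. Threading: single-threaded (no synchronization is implemented, so a single thread is used to keep the chapters in order)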

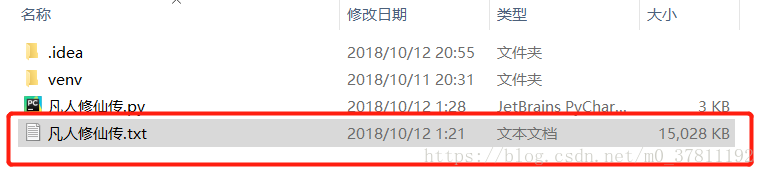

4. Result: crawls a web novel and merges its separate chapters into a single txt file
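On the single-thread choice in point 3: ordering can also be preserved with several threads, because `ThreadPoolExecutor.map` from the standard library yields results in input order no matter which task finishes first. This is not what the crawler in this post does; the sketch below only illustrates the idea, and `fake_fetch` and `fetch_in_order` are hypothetical names standing in for real chapter downloads.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(chapter_no):
    # Hypothetical stand-in for a network request: later chapters finish sooner.
    time.sleep(0.01 * (5 - chapter_no))
    return "chapter %d" % chapter_no


def fetch_in_order(chapter_numbers, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the order of the inputs,
        # regardless of the order in which the tasks complete.
        return list(pool.map(fake_fetch, chapter_numbers))


print(fetch_in_order([1, 2, 3, 4]))
```

With this approach the chapters would still have to be written to the file from a single place, after `map` hands them back in order.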

Template for fetching a web page:

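[code]import requests
from requests.exceptions import RequestException


def get_url(url):
    """Fetch a page and return its text, or None on failure."""
    try:
        response = requests.get(url)
        # Show the declared encoding and the one detected from the content.
        print(response.encoding)
        print(response.apparent_encoding)
        # Decode with the detected encoding to avoid garbled Chinese text.
        response.encoding = response.apparent_encoding
        if response.status_code == 200:
            return response.text
        else:
            print("url Error:", url)
    except RequestException:
        print("URL RequestException Error:", url)
    return None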
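Why `response.encoding` is overwritten with `response.apparent_encoding`: if a page is decoded with the wrong codec, the bytes that cannot be decoded turn into the replacement character U+FFFD, which is the character `parse_url` later swaps for `*`. The sketch below reproduces the effect with the standard library only. It assumes, purely for illustration, that the page is GBK-encoded; the post does not say which encoding the site uses.

```python
# Bytes as a GBK-encoded page would send them (GBK is an assumption here).
raw = "凡人修仙传".encode("gbk")

# Decoding with the wrong codec: undecodable bytes become U+FFFD.
wrong = raw.decode("utf-8", errors="replace")

# Decoding with the right codec recovers the original text.
right = raw.decode("gbk")

print("\ufffd" in wrong)
print(right)
```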

Parse-and-save function:

[code]def parse_url(html):

count = 0

essay = ""

pattern = re.compile('<td class="L"><a href="(.*?)">(.*?)</a></td>', re.S)

items = re.findall(pattern, html)

pattern_page = re.compile('<meta property="og:url" content="(.*?)"/>', re.S)

item_page = re.findall(pattern_page, html)

print(items)

print(items.__len__())

for item in items:

count += 1

if count <= 2416:

continue

this_url = item_page[0] + item[0]

this_title = item[1]

essay = get_book(this_url, this_title).replace("\ufffd", "*")

try:

if count % 100 == 1:

file = open(sys.path[0]+"凡人修仙传.txt", "a")

file.write(essay)

if count % 100 == 0 or count == items.__len__():

file.close()

print("前"+str(count)+"章保存完毕!")

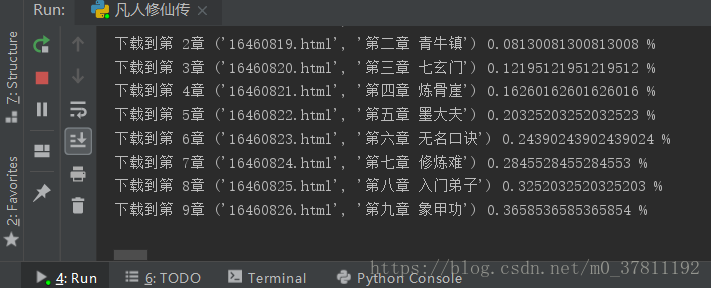

print("下载到第 " + str(count) + "章", item, count / items.__len__() * 100, "%")

except RequestException:

# print("Error", item)

print(essay)
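The two regular expressions in `parse_url` can be tried without touching the network. The sketch below runs them on a made-up index-page fragment; `SAMPLE_HTML`, the file names `1.html` and `2.html`, and the helper `chapter_links` are assumptions for illustration, not content from the real site. It also uses `urllib.parse.urljoin` instead of string concatenation, which is a swapped-in alternative that copes with a base URL lacking a trailing slash.

```python
import re
from urllib.parse import urljoin

# Hypothetical index-page fragment in the layout the patterns expect.
SAMPLE_HTML = '''
<meta property="og:url" content="https://www.x23us.com/html/0/328/"/>
<table><tr>
<td class="L"><a href="1.html">Chapter 1</a></td>
<td class="L"><a href="2.html">Chapter 2</a></td>
</tr></table>
'''

LINK_PATTERN = re.compile('<td class="L"><a href="(.*?)">(.*?)</a></td>', re.S)
BASE_PATTERN = re.compile('<meta property="og:url" content="(.*?)"/>', re.S)


def chapter_links(html):
    """Return (absolute_url, title) pairs for every chapter link found."""
    base = BASE_PATTERN.findall(html)[0]
    return [(urljoin(base, href), title)
            for href, title in LINK_PATTERN.findall(html)]


for url, title in chapter_links(SAMPLE_HTML):
    print(title, url)
```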

Complete code:

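[code]import os
import re
import sys

import requests
from requests.exceptions import RequestException


def get_url(url):
    """Fetch a page and return its text, or None on failure."""
    try:
        response = requests.get(url)
        # Show the declared encoding and the one detected from the content.
        print(response.encoding)
        print(response.apparent_encoding)
        # Decode with the detected encoding to avoid garbled Chinese text.
        response.encoding = response.apparent_encoding
        if response.status_code == 200:
            return response.text
        else:
            print("url Error:", url)
    except RequestException:
        print("URL RequestException Error:", url)
    return None


def parse_url(html):
    """Parse the chapter index page and append every chapter to one txt file."""
    count = 0
    # Chapter links on the index page: (relative href, chapter title).
    pattern = re.compile('<td class="L"><a href="(.*?)">(.*?)</a></td>', re.S)
    items = re.findall(pattern, html)
    # The og:url meta tag holds the index page URL, the base for chapter links.
    pattern_page = re.compile('<meta property="og:url" content="(.*?)"/>', re.S)
    item_page = re.findall(pattern_page, html)
    print(items)
    print(len(items))
    file = None
    for item in items:
        count += 1
        # Resume point: skip the chapters saved by an earlier run.
        if count <= 2416:
            continue
        this_url = item_page[0] + item[0]
        this_title = item[1]
        essay = get_book(this_url, this_title).replace("\ufffd", "*")
        try:
            # Open the output file on first use and after each batch of 100.
            if file is None:
                file = open(os.path.join(sys.path[0], "凡人修仙传.txt"), "a", encoding="utf-8")
            file.write(essay)
            if count % 100 == 0 or count == len(items):
                file.close()
                file = None
                print("Saved the first " + str(count) + " chapters!")
            print("Downloaded up to chapter " + str(count), item, count / len(items) * 100, "%")
        except OSError:
            # Writing failed: print the chapter so the text is not lost.
            print(essay)


def get_book(url, title):
    """Download one chapter and return it as plain text, headed by its title."""
    data = "\n" + str(title) + "\n"
    pattern = re.compile('<dd id="contents">(.*?)</dd>', re.S)
    html = get_url(url)
    essay = re.findall(pattern, html) if html else []
    if not essay:
        # The request failed or the page layout differs; keep only the title.
        print("No chapter content found:", url)
        return data
    essay_str = str(essay[0])
    # Assumes the page uses "&nbsp;" for indentation and "<br />" for line breaks.
    data = data + essay_str.replace("&nbsp;", " ").replace("<br />", "\n")
    return data


if __name__ == '__main__':
    index_html = get_url("https://www.x23us.com/html/0/328/")
    if index_html:
        parse_url(index_html)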
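The resume point in `parse_url` is hard-coded (`count <= 2416`), so it has to be edited by hand after every interrupted run. One possible alternative, sketched below, stores the number of the last saved chapter in a small progress file. The names `load_checkpoint`, `save_checkpoint`, `crawl`, and the `fetch` callback are hypothetical and not part of the original code; `fetch` plays the role of `get_book`.

```python
import os
import tempfile


def load_checkpoint(path):
    """Return the number of the last saved chapter, or 0 if none is recorded."""
    try:
        with open(path, encoding="utf-8") as f:
            return int(f.read().strip() or 0)
    except FileNotFoundError:
        return 0


def save_checkpoint(path, count):
    with open(path, "w", encoding="utf-8") as f:
        f.write(str(count))


def crawl(chapters, out_path, checkpoint_path, fetch):
    """Append every chapter after the checkpoint to out_path, in order."""
    done = load_checkpoint(checkpoint_path)
    for count, (href, title) in enumerate(chapters, start=1):
        if count <= done:
            continue  # already saved by an earlier run
        text = fetch(href, title)
        with open(out_path, "a", encoding="utf-8") as out:
            out.write(text)
        # Record progress only after the chapter is safely written.
        save_checkpoint(checkpoint_path, count)


# Demo with a fake fetch function and a temporary directory.
demo_dir = tempfile.mkdtemp()
demo_out = os.path.join(demo_dir, "book.txt")
demo_ckpt = os.path.join(demo_dir, "progress.txt")
crawl([("1.html", "c1"), ("2.html", "c2")], demo_out, demo_ckpt,
      lambda href, title: title + "\n")
print(load_checkpoint(demo_ckpt))
```

Opening the file once per chapter is slower than the batch-of-100 approach in the post, but it keeps the file and the checkpoint consistent if the run is interrupted.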
