TensorFlow Study Notes: CNN Series (3), Implementing a CNN on the CIFAR-10 Dataset

2018-02-11 18:40


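Preface

The previous posts applied a convolutional neural network to the MNIST dataset. MNIST, however, covers only handwritten digits, which are far simpler than natural objects and photographs and contain little noise or variation. This post trains a CNN on the CIFAR-10 dataset and also compares how different parameter settings affect the network's accuracy.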

The CIFAR-10 Dataset

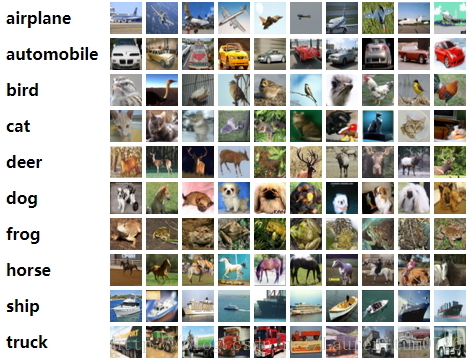

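As the home page of the official CIFAR-10 site shows, the dataset provides images of real-world objects in 10 classes. Compared with the well-studied handwritten digit recognition covered earlier, recognizing real-world objects is much harder: the images contain many features and a good deal of noise, and the objects appear at different scales, all of which make the task challenging.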

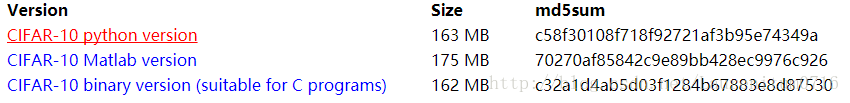

The official site provides the dataset for download; this post uses the Python version.

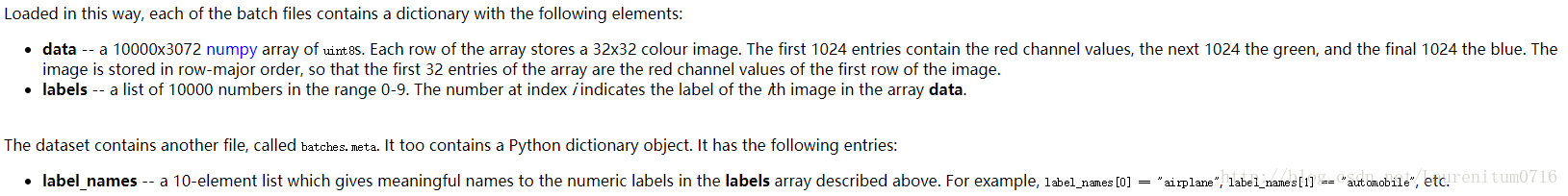

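In addition, the official site describes the data layout of CIFAR-10:

As shown, each batch has two parts. The first is the feature part, stored as a [10000, 3072] uint8 matrix. Each row is one 32x32 image with 3 channels, laid out as 1024 red values, then 1024 green, then 1024 blue, which corresponds to the shape [3, 32, 32] in row-major order. The second is the label part, a list of 10000 entries, each a number from 0 to 9 giving the object class. The Python version also provides a list named "label_names" (in the batches.meta file), for example label_names[0] == "airplane".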
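To make the row layout concrete, the following NumPy sketch turns one 3072-value row into a 32x32x3 image array. Per the dataset description on the official site, a row stores the red plane first, then green, then blue. The sketch uses a synthetic row rather than the real dataset, and the helper name row_to_image is hypothetical, introduced only for illustration.

```python
import numpy as np

def row_to_image(row):
    """Convert one CIFAR-10 row (3072 uint8 values) into a [32, 32, 3] image."""
    # The row holds three 32x32 planes in order: red, green, blue.
    planes = np.asarray(row, dtype=np.uint8).reshape(3, 32, 32)
    # Move the channel axis last to get height x width x channel.
    return planes.transpose(1, 2, 0)

# Synthetic row: red plane all 10, green plane all 20, blue plane all 30.
row = np.concatenate([
    np.full(1024, 10, dtype=np.uint8),
    np.full(1024, 20, dtype=np.uint8),
    np.full(1024, 30, dtype=np.uint8),
])
image = row_to_image(row)
print(image.shape)   # (32, 32, 3)
print(image[0, 0])   # [10 20 30]
```

Every pixel of the result carries one value from each plane, which is the layout image viewers and most convolution ops expect.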

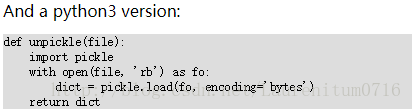

The official site also provides code for reading the data:

Code Example

1. Reading the data

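As noted above, the labels are a list of integers from 0 to 9. Following the one-hot encoding used in earlier posts, each label becomes a vector of 10 values in which only the entry for the correct class is 1 and all others are 0, so the label list has to be converted into the corresponding one-hot matrix.

    import pickle

    import numpy as np

    def unpickle(filename):
        '''Load one pickled CIFAR-10 batch file into a dict.'''
        with open(filename, 'rb') as f:
            d = pickle.load(f, encoding='latin1')
        return d

    def onehot(labels):
        '''One-hot encode a sequence of integer labels.'''
        n_sample = len(labels)
        n_class = max(labels) + 1
        onehot_labels = np.zeros((n_sample, n_class))
        onehot_labels[np.arange(n_sample), labels] = 1
        return onehot_labels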

    # Training set
    data1 = unpickle('cifar10-dataset/data_batch_1')
    data2 = unpickle('cifar10-dataset/data_batch_2')
    data3 = unpickle('cifar10-dataset/data_batch_3')
    data4 = unpickle('cifar10-dataset/data_batch_4')
    data5 = unpickle('cifar10-dataset/data_batch_5')
    X_train = np.concatenate((data1['data'], data2['data'], data3['data'], data4['data'], data5['data']), axis=0)
    y_train = np.concatenate((data1['labels'], data2['labels'], data3['labels'], data4['labels'], data5['labels']), axis=0)
    y_train = onehot(y_train)

    # Test set
    test = unpickle('cifar10-dataset/test_batch')
    X_test = test['data'][:5000, :]
    y_test = onehot(test['labels'])[:5000, :]

    print('Training dataset shape:', X_train.shape)
    print('Training labels shape:', y_train.shape)
    print('Testing dataset shape:', X_test.shape)
    print('Testing labels shape:', y_test.shape)

Here the unpickle function reads the 5 training batches in turn, producing 5 dicts whose entries include data and labels. The 5 feature arrays and the 5 label arrays are then concatenated to form the final training set, and the first 5000 samples of the test batch are used as the test set.
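As a quick check of the one-hot conversion described above, the sketch below applies the same indexing trick to a tiny label list. The sample labels are made up for illustration, and the number of classes is passed explicitly, which is an assumption that differs from the listing (there it is inferred from the largest label).

```python
import numpy as np

def onehot(labels, n_class):
    """Return an [n_sample, n_class] matrix with a single 1 per row."""
    labels = np.asarray(labels)
    onehot_labels = np.zeros((len(labels), n_class))
    # Row i gets a 1 in column labels[i].
    onehot_labels[np.arange(len(labels)), labels] = 1
    return onehot_labels

sample_labels = [3, 0, 9]          # made-up labels
encoded = onehot(sample_labels, n_class=10)
print(encoded.shape)               # (3, 10)
print(encoded.argmax(axis=1))      # [3 0 9]
```

Passing n_class explicitly keeps the matrix at 10 columns even when a subset of labels happens not to contain class 9; inferring the width from max(labels) + 1 would silently produce fewer columns in that case.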

2. Model parameters

    learning_rate = 1e-3
    training_iters = 200
    batch_size = 50
    display_step = 5
    n_features = 3072  # 32*32*3
    n_classes = 10
    n_fc1 = 384
    n_fc2 = 192
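To see what these settings imply for the amount of training, this small sketch works out the number of mini-batches per epoch and the total number of optimizer steps. It assumes the 50,000 training images obtained from the five batches of 10,000, and that training_iters counts epochs, which matches the 200 passes made by the training loop.

```python
batch_size = 50
training_iters = 200          # treated here as the number of epochs
n_train = 5 * 10000           # five training batches of 10,000 images

batches_per_epoch = n_train // batch_size
total_steps = batches_per_epoch * training_iters
print(batches_per_epoch)      # 1000
print(total_steps)            # 200000
```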

3. Building the model

    # Build the model
    x = tf.placeholder(tf.float32, [None, n_features])
    y = tf.placeholder(tf.float32, [None, n_classes])

    W_conv = {
        'conv1': tf.Variable(tf.truncated_normal([5, 5, 3, 32], stddev=0.0001)),
        'conv2': tf.Variable(tf.truncated_normal([5, 5, 32, 64], stddev=0.01)),
        'fc1': tf.Variable(tf.truncated_normal([8*8*64, n_fc1], stddev=0.1)),
        'fc2': tf.Variable(tf.truncated_normal([n_fc1, n_fc2], stddev=0.1)),
        'fc3': tf.Variable(tf.truncated_normal([n_fc2, n_classes], stddev=0.1))
    }

    b_conv = {
        'conv1': tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[32])),
        'conv2': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[64])),
        'fc1': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[n_fc1])),
        'fc2': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[n_fc2])),
        'fc3': tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[n_classes]))
    }

    # Each row stores the three colour planes one after another (channel-first),
    # so reshape to [N, 3, 32, 32] and transpose to the NHWC layout conv2d expects.
    x_image = tf.transpose(tf.reshape(x, [-1, 3, 32, 32]), [0, 2, 3, 1])

    # Convolution layer 1
    conv1 = tf.nn.conv2d(x_image, W_conv['conv1'], strides=[1, 1, 1, 1], padding='SAME')
    conv1 = tf.nn.bias_add(conv1, b_conv['conv1'])
    conv1 = tf.nn.relu(conv1)
    # Pooling layer 1
    pool1 = tf.nn.avg_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')
    # LRN layer (Local Response Normalization)
    norm1 = tf.nn.lrn(pool1, 4, bias=1.0, alpha=0.001/9.0, beta=0.75)

    # Convolution layer 2
    conv2 = tf.nn.conv2d(norm1, W_conv['conv2'], strides=[1, 1, 1, 1], padding='SAME')
    conv2 = tf.nn.bias_add(conv2, b_conv['conv2'])
    conv2 = tf.nn.relu(conv2)
    # LRN layer (Local Response Normalization)
    norm2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001/9.0, beta=0.75)
    # Pooling layer 2
    pool2 = tf.nn.avg_pool(norm2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')

    # Flatten, then fully connected layer 1
    reshape = tf.reshape(pool2, [-1, 8*8*64])
    fc1 = tf.add(tf.matmul(reshape, W_conv['fc1']), b_conv['fc1'])
    fc1 = tf.nn.relu(fc1)
    # Fully connected layer 2
    fc2 = tf.add(tf.matmul(fc1, W_conv['fc2']), b_conv['fc2'])
    fc2 = tf.nn.relu(fc2)
    # Fully connected layer 3, the classification layer. It outputs raw logits;
    # softmax is applied inside softmax_cross_entropy_with_logits.
    fc3 = tf.add(tf.matmul(fc2, W_conv['fc3']), b_conv['fc3'])

    # Define the loss
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=fc3, labels=y))
    optimizer = tf.train.GradientDescentOptimizer(learning_rate=learning_rate).minimize(loss)

    # Evaluate the model
    correct_pred = tf.equal(tf.argmax(fc3, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        c = []
        total_batch = int(X_train.shape[0] / batch_size)
        start_time = time.time()
        for i in range(training_iters):
            for batch in range(total_batch):
                batch_x = X_train[batch*batch_size : (batch+1)*batch_size, :]
                batch_y = y_train[batch*batch_size : (batch+1)*batch_size, :]
                sess.run(optimizer, feed_dict={x: batch_x, y: batch_y})
            # Accuracy on the last mini-batch of this epoch
            acc = sess.run(accuracy, feed_dict={x: batch_x, y: batch_y})
            print(acc)
            c.append(acc)
            end_time = time.time()
            print('time: ', (end_time - start_time))
            start_time = end_time
            print("---------------%d epoch is finished-------------------" % i)
        print("Optimization Finished!")

        # Test
        test_acc = sess.run(accuracy, feed_dict={x: X_test, y: y_test})
        print("Testing Accuracy:", test_acc)

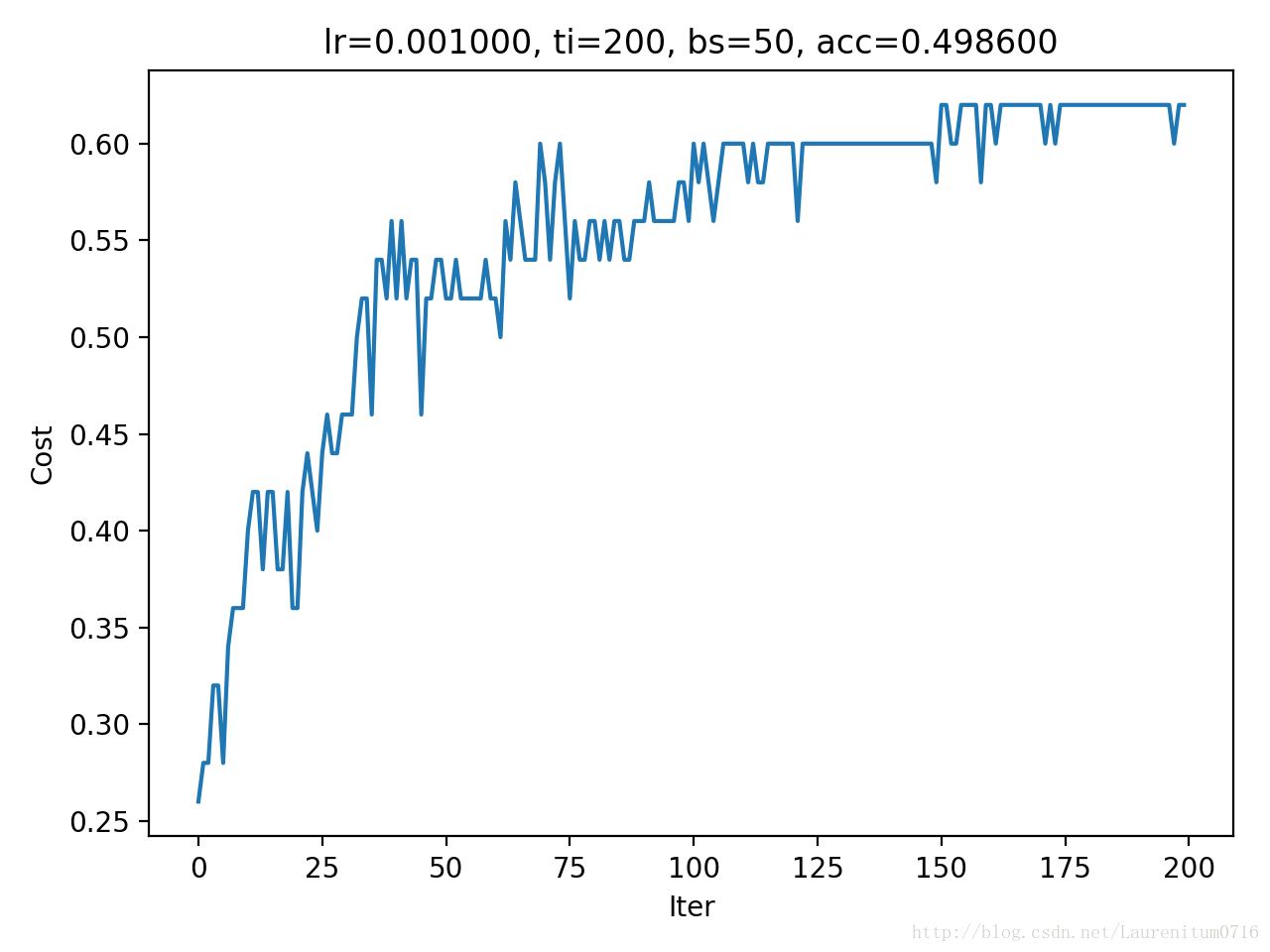

    # c holds the recorded training accuracy values
    plt.plot(c)
    plt.xlabel('Iter')
    plt.ylabel('Accuracy')
    plt.title('lr=%f, ti=%d, bs=%d, acc=%f' % (learning_rate, training_iters, batch_size, test_acc))
    plt.tight_layout()
    plt.savefig('cnn-tf-cifar10-%s.png' % test_acc, dpi=200)

Results

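Speed depends on the machine. On the author's computer (GPU: GTX 1060 6G), one epoch takes about 10 s. After 200 epochs, the training accuracy was 0.62 and the test accuracy was 0.498.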
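The 8*8*64 input size of the first fully connected layer follows from the padding and stride settings in the model. With padding='SAME', TensorFlow produces an output of size ceil(input / stride) along each spatial dimension, so the two stride-1 convolutions keep the size and the two stride-2 pooling layers each halve it. The helper below, same_out, is a hypothetical illustration of that arithmetic and is not part of the original code.

```python
import math

def same_out(size, stride):
    """Spatial output size of a conv/pool layer with padding='SAME'."""
    return math.ceil(size / stride)

size = 32                      # CIFAR-10 images are 32x32
size = same_out(size, 1)       # conv1, stride 1 -> 32
size = same_out(size, 2)       # pool1, stride 2 -> 16
size = same_out(size, 1)       # conv2, stride 1 -> 16
size = same_out(size, 2)       # pool2, stride 2 -> 8
flat = size * size * 64        # conv2 has 64 output channels
print(size, flat)              # 8 4096
```

If the input resolution or the number of pooling layers changes, the first dimension of the fc1 weight matrix has to change with it.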

Complete code

    # coding: utf-8
    import pickle
    import time

    import matplotlib.pyplot as plt
    import numpy as np
    import tensorflow as tf

    def unpickle(filename):
        '''Load one pickled CIFAR-10 batch file into a dict.'''
        with open(filename, 'rb') as f:
            d = pickle.load(f, encoding='latin1')
        return d

    def onehot(labels):
        '''One-hot encode a sequence of integer labels.'''
        n_sample = len(labels)
        n_class = max(labels) + 1
        onehot_labels = np.zeros((n_sample, n_class))
        onehot_labels[np.arange(n_sample), labels] = 1
        return onehot_labels

    # Training set
    data1 = unpickle('cifar10-dataset/data_batch_1')
    data2 = unpickle('cifar10-dataset/data_batch_2')
    data3 = unpickle('cifar10-dataset/data_batch_3')
    data4 = unpickle('cifar10-dataset/data_batch_4')
    data5 = unpickle('cifar10-dataset/data_batch_5')
    X_train = np.concatenate((data1['data'], data2['data'], data3['data'], data4['data'], data5['data']), axis=0)
    y_train = np.concatenate((data1['labels'], data2['labels'], data3['labels'], data4['labels'], data5['labels']), axis=0)
    y_train = onehot(y_train)

    # Test set
    test = unpickle('cifar10-dataset/test_batch')
    X_test = test['data'][:5000, :]
    y_test = onehot(test['labels'])[:5000, :]

    print('Training dataset shape:', X_train.shape)
    print('Training labels shape:', y_train.shape)
    print('Testing dataset shape:', X_test.shape)
    print('Testing labels shape:', y_test.shape)

    # Model parameters
    learning_rate = 1e-3
    training_iters = 200
    batch_size = 50
    display_step = 5
    n_features = 3072  # 32*32*3
    n_classes = 10
    n_fc1 = 384
    n_fc2 = 192

    # Build the model
    x = tf.placeholder(tf.float32, [None, n_features])
    y = tf.placeholder(tf.float32, [None, n_classes])

    W_conv = {
        'conv1': tf.Variable(tf.truncated_normal([5, 5, 3, 32], stddev=0.0001)),
        'conv2': tf.Variable(tf.truncated_normal([5, 5, 32, 64], stddev=0.01)),
        'fc1': tf.Variable(tf.truncated_normal([8*8*64, n_fc1], stddev=0.1)),
        'fc2': tf.Variable(tf.truncated_normal([n_fc1, n_fc2], stddev=0.1)),
        'fc3': tf.Variable(tf.truncated_normal([n_fc2, n_classes], stddev=0.1))
    }

    b_conv = {
        'conv1': tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[32])),
        'conv2': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[64])),
        'fc1': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[n_fc1])),
        'fc2': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[n_fc2])),
        'fc3': tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[n_classes]))
    }

    # Each row stores the three colour planes one after another (channel-first),
    # so reshape to [N, 3, 32, 32] and transpose to the NHWC layout conv2d expects.
    x_image = tf.transpose(tf.reshape(x, [-1, 3, 32, 32]), [0, 2, 3, 1])

    # Convolution layer 1
    conv1 = tf.nn.conv2d(x_image, W_conv['conv1'], strides=[1, 1, 1, 1], padding='SAME')
    conv1 = tf.nn.bias_add(conv1, b_conv['conv1'])
    conv1 = tf.nn.relu(conv1)
    # Pooling layer 1
    pool1 = tf.nn.avg_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')
    # LRN layer (Local Response Normalization)
    norm1 = tf.nn.lrn(pool1, 4, bias=1.0, alpha=0.001/9.0, beta=0.75)

    # Convolution layer 2
    conv2 = tf.nn.conv2d(norm1, W_conv['conv2'], strides=[1, 1, 1, 1], padding='SAME')
    conv2 = tf.nn.bias_add(conv2, b_conv['conv2'])
    conv2 = tf.nn.relu(conv2)
    # LRN layer (Local Response Normalization)
    norm2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001/9.0, beta=0.75)
    # Pooling layer 2
    pool2 = tf.nn.avg_pool(norm2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')

    # Flatten, then fully connected layer 1
    reshape = tf.reshape(pool2, [-1, 8*8*64])
    fc1 = tf.add(tf.matmul(reshape, W_conv['fc1']), b_conv['fc1'])
    fc1 = tf.nn.relu(fc1)
    # Fully connected layer 2
    fc2 = tf.add(tf.matmul(fc1, W_conv['fc2']), b_conv['fc2'])
    fc2 = tf.nn.relu(fc2)
    # Fully connected layer 3, the classification layer. It outputs raw logits;
    # softmax is applied inside softmax_cross_entropy_with_logits.
    fc3 = tf.add(tf.matmul(fc2, W_conv['fc3']), b_conv['fc3'])

    # Define the loss
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=fc3, labels=y))
    optimizer = tf.train.GradientDescentOptimizer(learning_rate=learning_rate).minimize(loss)

    # Evaluate the model
    correct_pred = tf.equal(tf.argmax(fc3, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        c = []
        total_batch = int(X_train.shape[0] / batch_size)
        start_time = time.time()
        for i in range(training_iters):
            for batch in range(total_batch):
                batch_x = X_train[batch*batch_size : (batch+1)*batch_size, :]
                batch_y = y_train[batch*batch_size : (batch+1)*batch_size, :]
                sess.run(optimizer, feed_dict={x: batch_x, y: batch_y})
            # Accuracy on the last mini-batch of this epoch
            acc = sess.run(accuracy, feed_dict={x: batch_x, y: batch_y})
            print(acc)
            c.append(acc)
            end_time = time.time()
            print('time: ', (end_time - start_time))
            start_time = end_time
            print("---------------%d epoch is finished-------------------" % i)
        print("Optimization Finished!")

        # Test
        test_acc = sess.run(accuracy, feed_dict={x: X_test, y: y_test})
        print("Testing Accuracy:", test_acc)

    # c holds the recorded training accuracy values
    plt.plot(c)
    plt.xlabel('Iter')
    plt.ylabel('Accuracy')
    plt.title('lr=%f, ti=%d, bs=%d, acc=%f' % (learning_rate, training_iters, batch_size, test_acc))
    plt.tight_layout()
    plt.savefig('cnn-tf-cifar10-%s.png' % test_acc, dpi=200)
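One detail of the loss is worth checking in isolation: tf.nn.softmax_cross_entropy_with_logits applies softmax internally, so it expects raw, unscaled scores. The NumPy sketch below uses made-up scores and hypothetical helper names (softmax, cross_entropy) to show how applying softmax a second time flattens the predicted distribution and keeps the loss well above zero even for a confident, correct prediction.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def cross_entropy(probs, onehot):
    """Mean cross-entropy between predicted probabilities and one-hot labels."""
    return float(-(onehot * np.log(probs)).sum(axis=-1).mean())

logits = np.array([[8.0, 0.0, 0.0]])   # made-up scores, class 0 strongly preferred
label = np.array([[1.0, 0.0, 0.0]])

once = softmax(logits)                 # what the loss op computes internally
twice = softmax(once)                  # softmax output fed back in as if it were logits

loss_once = cross_entropy(once, label)
loss_twice = cross_entropy(twice, label)
print(loss_once < loss_twice)          # True
```

Because softmax outputs lie in [0, 1], a second softmax can never give the winning class much more weight than the others, which limits how far the loss can fall during training.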
