Big Data: Data Collection Engines (Sqoop and Flume)

2018-01-14 21:36


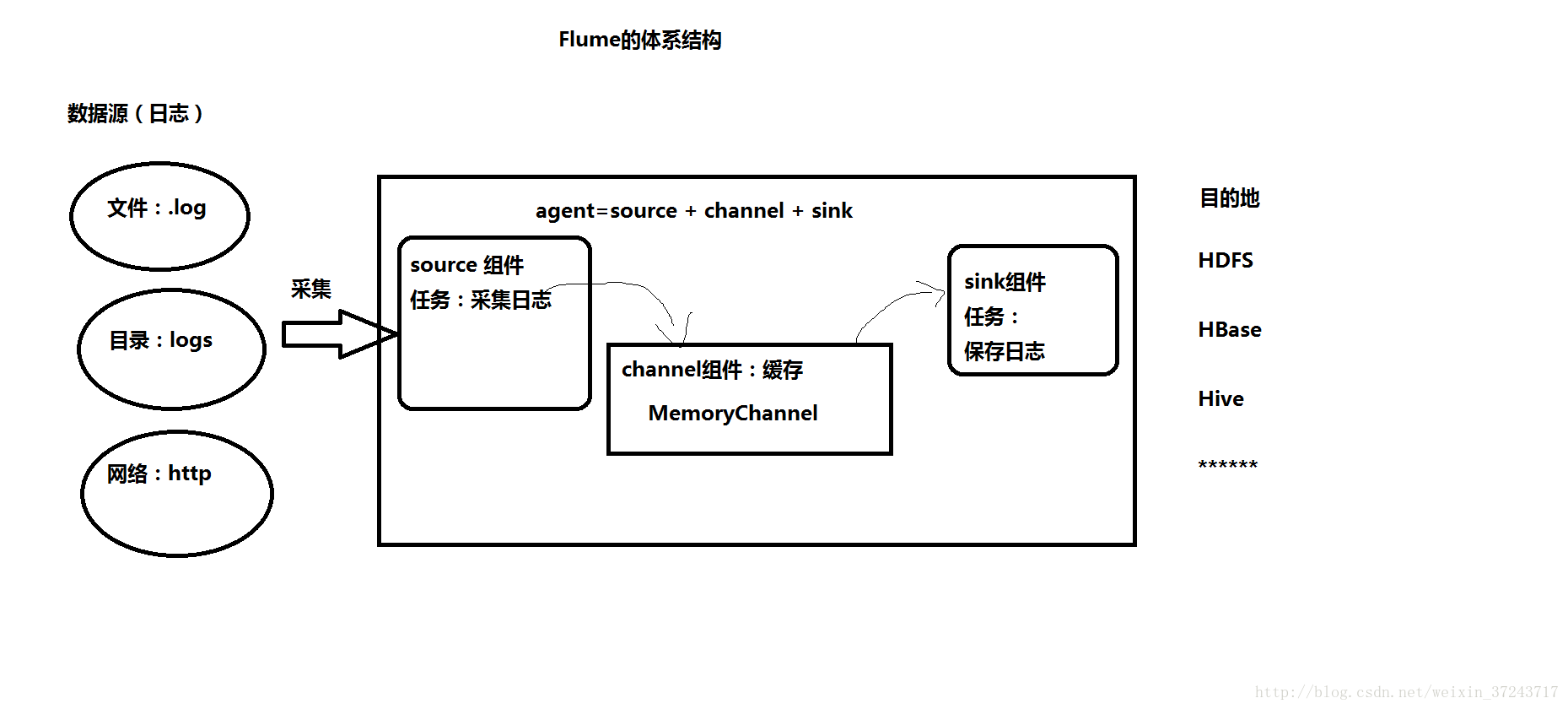

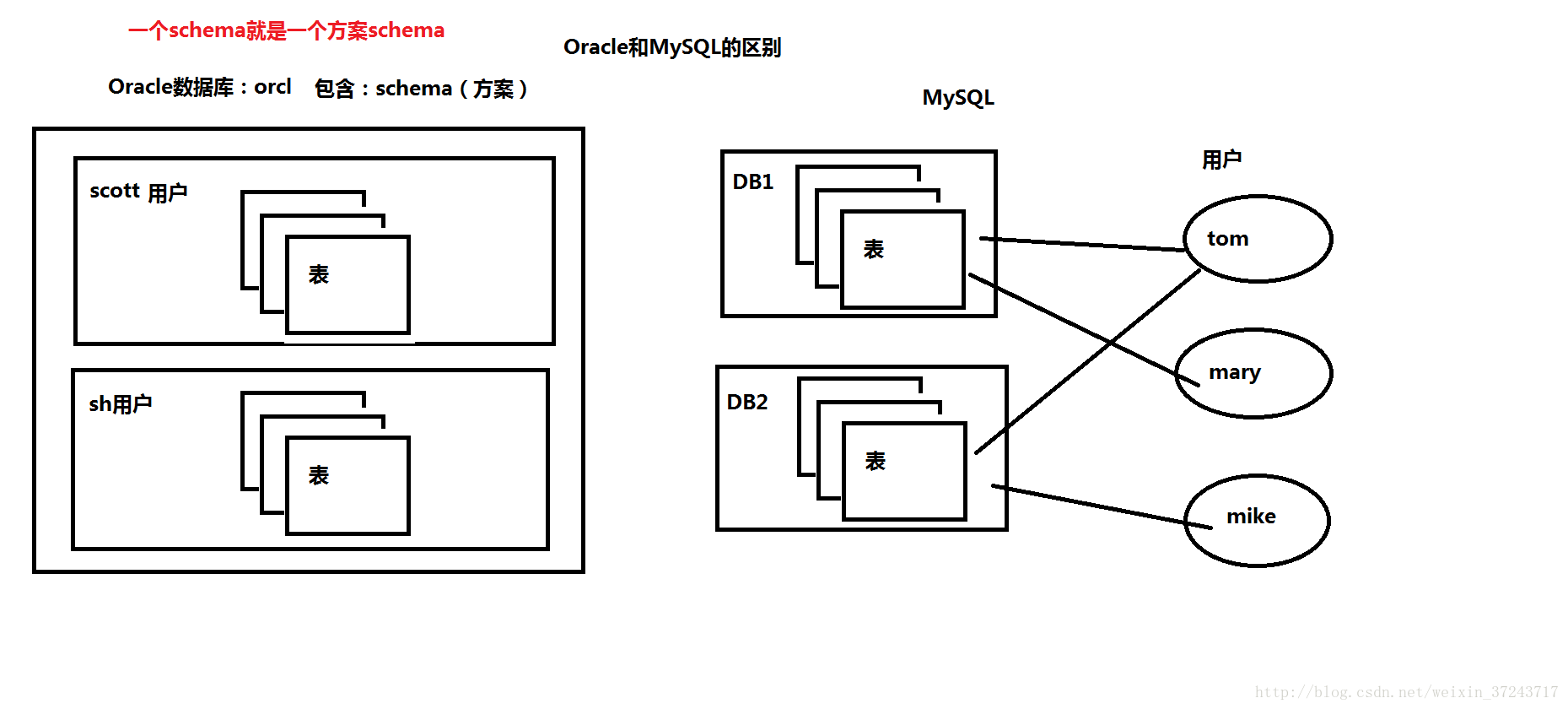

I. Data Collection Engines

1. Prepare the lab environment: an Oracle database with user sh and table sales (the orders table, about 920,000 rows).

2. Sqoop: collects data from relational databases. It is used in offline computing applications, and the emphasis is on batch transfer.

(1) A data exchange engine: RDBMS <---> Sqoop <---> HDFS, HBase, Hive
(2) Runs on MapReduce underneath
(3) Connects to the database through JDBC
(4) Installation:

tar -zxvf sqoop-1.4.5.bin__hadoop-0.23.tar.gz -C ~/training/

Set the environment variables:

SQOOP_HOME=/root/training/sqoop-1.4.5.bin__hadoop-0.23
export SQOOP_HOME
PATH=$SQOOP_HOME/bin:$PATH
export PATH

Note: with an Oracle database, user names, table names, and column names must be written in uppercase.

(*) codegen: Generate code to interact with database records. Generates a Java class that matches the table structure.

sqoop codegen --connect jdbc:oracle:thin:@192.168.157.163:1521/orcl --username SCOTT --password tiger --table EMP --outdir /root/sqoop

(*) create-hive-table: Import a table definition into Hive

(*) eval: Evaluate a SQL statement and display the results. Runs SQL from within Sqoop.

sqoop eval --connect jdbc:oracle:thin:@192.168.157.163:1521/orcl --username SCOTT --password tiger --query 'select * from emp'

(*) export: Export an HDFS directory to a database table

(*) help: List available commands

(*) import: Import a table from a database to HDFS. Examples:

(1) Import all rows of the EMP table into HDFS

sqoop import --connect jdbc:oracle:thin:@192.168.157.163:1521/orcl --username SCOTT --password tiger --table EMP --target-dir /sqoop/import/emp1

(2) Import only selected columns

sqoop import --connect jdbc:oracle:thin:@192.168.157.163:1521/orcl --username SCOTT --password tiger --table EMP --columns ENAME,SAL --target-dir /sqoop/import/emp2

(3) Import the orders table

sqoop import --connect jdbc:oracle:thin:@192.168.157.163:1521/orcl --username SH --password sh --table SALES --target-dir /sqoop/import/sales -m 1

Without -m 1, this import fails with the error below, because SALES has no primary key that Sqoop can use to split the work across mappers:

ERROR tool.ImportTool: Error during import: No primary key could be found for table SALES. Please specify one with --split-by or perform a sequential import with '-m 1'.

Either name a split column with --split-by, or run a sequential import with a single mapper (-m 1) as in the command above.
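Why does SALES need --split-by or -m 1? Sqoop parallelizes an import by choosing a split column (the primary key by default, or the column named with --split-by), looking up its minimum and maximum, and giving each of the -m mappers its own range of values. The Python below is a simplified sketch of that range-splitting idea, assuming an integer split column; it is not Sqoop's actual code, and `split_ranges`, `range_predicates`, and the column name `PROD_ID` are hypothetical choices for illustration.

```python
def split_ranges(lo: int, hi: int, num_mappers: int) -> list[tuple[int, int]]:
    """Divide the inclusive key range [lo, hi] into at most num_mappers
    contiguous, non-overlapping inclusive ranges of near-equal size."""
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    if lo > hi:
        return []  # empty table: nothing to split
    total = hi - lo + 1
    parts = min(num_mappers, total)  # never more ranges than distinct keys
    base, extra = divmod(total, parts)
    ranges = []
    start = lo
    for i in range(parts):
        # The first `extra` ranges each take one additional key
        end = start + base + (1 if i < extra else 0) - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


def range_predicates(column: str, ranges: list[tuple[int, int]]) -> list[str]:
    """Build one WHERE condition per mapper; each mapper would then read
    only the rows whose split-column value falls inside its range."""
    return [f"{column} >= {s} AND {column} <= {e}" for s, e in ranges]


if __name__ == "__main__":
    # Hypothetical numbers: split column with MIN = 1 and MAX = 10, 4 mappers
    for cond in range_predicates("PROD_ID", split_ranges(1, 10, 4)):
        print(cond)
```

With -m 1 there is a single range covering the whole table, which is why a sequential import needs no split column at all. The parallel alternative for SALES would be something like `--split-by PROD_ID -m 4`, assuming that column exists and its values are spread fairly evenly; a skewed column gives some mappers far more rows than others.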
(*) import-all-tables: Import tables from a database to HDFS. Imports every table owned by the given user; the default HDFS path is /user/root.

sqoop import-all-tables --connect jdbc:oracle:thin:@192.168.157.163:1521/orcl --username SCOTT --password tiger

(*) job: Work with saved jobs

(*) list-databases: List available databases on a server
For MySQL, these are the database names.
For Oracle, these are the names of all users in the database.

sqoop list-databases --connect jdbc:oracle:thin:@192.168.157.163:1521/orcl --username SYSTEM --password password

(*) list-tables: List available tables in a database

(*) merge: Merge results of incremental imports

(*) metastore: Run a standalone Sqoop metastore

(*) version: Display version information

3. Flume: collects logs. It is used in real-time (stream) computing applications, and the emphasis is on real time.

#bin/flume-ng agent -n a4 -f myagent/a4.conf -c conf -Dflume.root.logger=INFO,console

#Name the agent's source, channel, and sink
a4.sources = r1
a4.channels = c1
a4.sinks = k1

#Define the source: watch a spooling directory
a4.sources.r1.type = spooldir
a4.sources.r1.spoolDir = /root/training/logs

#Define the channel
a4.channels.c1.type = memory
a4.channels.c1.capacity = 10000
a4.channels.c1.transactionCapacity = 100

#Define an interceptor that adds a timestamp header to each event; the %Y%m%d escapes in hdfs.path are resolved from it
a4.sources.r1.interceptors = i1
a4.sources.r1.interceptors.i1.type = org.apache.flume.interceptor.TimestampInterceptor$Builder

#Define the sink
a4.sinks.k1.type = hdfs
a4.sinks.k1.hdfs.path = hdfs://192.168.157.11:9000/flume/%Y%m%d
a4.sinks.k1.hdfs.filePrefix = events-
a4.sinks.k1.hdfs.fileType = DataStream
#Do not roll files based on the number of events
a4.sinks.k1.hdfs.rollCount = 0
#Roll to a new file when the current HDFS file reaches 128 MB
a4.sinks.k1.hdfs.rollSize = 134217728
#Roll to a new file every 60 seconds
a4.sinks.k1.hdfs.rollInterval = 60

#Wire the source, channel, and sink together
a4.sources.r1.channels = c1
a4.sinks.k1.channel = c1
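The three roll settings of the HDFS sink work together: the current file is closed and a new one started as soon as any enabled threshold is reached, and a value of 0 disables that threshold. The sketch below models that decision, plus how the %Y%m%d escapes in hdfs.path are filled in from the timestamp header added by the interceptor. It is a simplified illustration, not Flume's sink code; `RollPolicy` and `bucket_path` are hypothetical names, and resolving dates in UTC is an assumption made so the result is deterministic.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class RollPolicy:
    """Mirrors hdfs.rollInterval / rollSize / rollCount; 0 disables a trigger."""
    roll_interval_s: int = 60          # hdfs.rollInterval (seconds)
    roll_size_bytes: int = 134217728   # hdfs.rollSize (128 MB)
    roll_count: int = 0                # hdfs.rollCount (disabled)

    def should_roll(self, bytes_written: int, events_written: int,
                    seconds_open: float) -> bool:
        """Return True if any enabled threshold has been reached."""
        if self.roll_interval_s > 0 and seconds_open >= self.roll_interval_s:
            return True
        if self.roll_size_bytes > 0 and bytes_written >= self.roll_size_bytes:
            return True
        if self.roll_count > 0 and events_written >= self.roll_count:
            return True
        return False


def bucket_path(template: str, timestamp_ms: int) -> str:
    """Expand %Y, %m and %d in an hdfs.path template from an event's
    timestamp header (epoch milliseconds).
    Assumption: UTC. Flume itself uses hdfs.timeZone (local time by default)."""
    dt = datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)
    return (template.replace("%Y", f"{dt.year:04d}")
                    .replace("%m", f"{dt.month:02d}")
                    .replace("%d", f"{dt.day:02d}"))


if __name__ == "__main__":
    policy = RollPolicy()
    # A million small events in 30 s: no roll, because rollCount = 0
    print(policy.should_roll(bytes_written=10_000, events_written=1_000_000,
                             seconds_open=30))
    print(bucket_path("hdfs://192.168.157.11:9000/flume/%Y%m%d", 1515936960000))
```

With the a4 settings, event count never triggers a roll, so files are cut at whichever comes first, 128 MB or 60 seconds, and each day's events land in their own dated directory.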

Flume architecture
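A Flume agent chains three components: a source that receives events, a channel that buffers them, and a sink that writes them out. In the a4 agent configured above these are spooldir, memory, and hdfs. The memory channel's capacity limits how many events it can hold at once, and transactionCapacity limits how many events a single transaction may move from the source or to the sink. The Python below is a toy model of that pipeline under those assumptions; `MemoryChannel` and `run_agent` are hypothetical names, and this is not Flume's API.

```python
from collections import deque


class MemoryChannel:
    """Bounded in-memory buffer between a source and a sink (toy model)."""

    def __init__(self, capacity: int = 10000, transaction_capacity: int = 100):
        self.capacity = capacity                          # like channels.c1.capacity
        self.transaction_capacity = transaction_capacity  # like transactionCapacity
        self._events = deque()

    def put_batch(self, events: list) -> None:
        """Source side: add a whole batch or nothing at all."""
        if len(events) > self.transaction_capacity:
            raise ValueError("batch larger than transactionCapacity")
        if len(self._events) + len(events) > self.capacity:
            raise OverflowError("channel full; nothing from this batch was added")
        self._events.extend(events)

    def take_batch(self, max_events: int) -> list:
        """Sink side: remove up to max_events, bounded by transactionCapacity."""
        n = min(max_events, self.transaction_capacity, len(self._events))
        return [self._events.popleft() for _ in range(n)]

    def __len__(self) -> int:
        return len(self._events)


def run_agent(lines: list, channel: MemoryChannel, batch_size: int) -> list:
    """The source puts lines into the channel in batches and the sink drains
    it after each put; the returned list stands in for what reached HDFS."""
    written = []
    for i in range(0, len(lines), batch_size):
        channel.put_batch(lines[i:i + batch_size])
        while batch := channel.take_batch(batch_size):
            written.extend(batch)
    return written


if __name__ == "__main__":
    ch = MemoryChannel(capacity=10, transaction_capacity=2)
    print(run_agent(["log line 1", "log line 2", "log line 3"], ch, batch_size=2))
```

The channel is what decouples the two ends: if the sink falls behind, events pile up until capacity is reached and further puts are rejected, which is also why a memory channel loses its buffered events if the agent process dies.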

II. HUE

III. ZooKeeper

Differences between Oracle and MySQL
