Lua Quick Start and Torch Tutorial

2017-12-31 10:10


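Lua

Variables and control flow

Functions

Hash tables

Class-like tables and inheritance

Modules

Torch

Tensor

Math functions

CNN-related functions in Torch

Singular values, SVD, and linear systems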

单行: –

多行:

num = 42 – 所有的数字都是double,整数有52bits来存储。

s = ‘first expression’

s2 = ”second expression of string”

muli_line_strings = [[ass

ssss]]

t = nil – 未定义的t;会有垃圾回收

while 循环:

IF语句:

Undefined Variable will be nil

继承:继承了所有的变量和函数

还有一些标准库:

String library,【11】

Table Library 【12】:

math library【13】:

OS Library:

IO Library【14】:

Lua的安装:

另外在Lua社区有一个reference,还算比较全,可以看看【9】,中文的手册【15】,另外有一份绝世高手秘籍,学习X在Y minutes以内【10】。

没有source file的时候,参考【19】

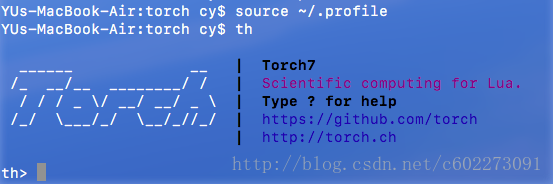

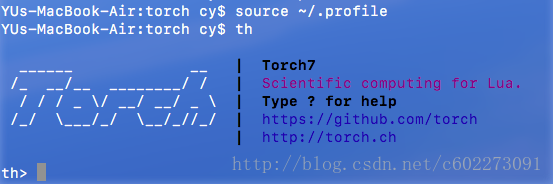

然后学习Torch的时候,在命令行输入th:

退出的话输入两次ctrl c,或者输入:os.exit()

运行lua的文件的话: th file.lua 或者 th -h

另外,可以看官方的cheatsheet:【20】,还有Torch的教程可以看:【16】【17】【18】

这里我介绍Torch的基本的东西。

首先是开辟数组和存储:

存储:

逻辑操作:

rnn和nn不能共存的问题:class nn.SpatialGlimpse has been already assigned a parent class 【21】

转载请注明出处: http://blog.csdn.net/c602273091/article/details/78940781

Ref Links:

【1】Lua官网:http://www.lua.org/start.html

【2】15 Min搞定Lua系列: http://tylerneylon.com/a/learn-lua/

【3】Lua菜鸟教程: http://www.runoob.com/lua/lua-tutorial.html

【4】Torch教程: https://www.cs.ox.ac.uk/people/nando.defreitas/machinelearning/

【5】更多参考资料: https://www.cs.ox.ac.uk/people/nando.defreitas/machinelearning/practicals/practical1.pdf

【6】Torch官网: http://torch.ch/docs/getting-started.html

【7】Torch 添加新的层: https://zhuanlan.zhihu.com/p/21550685

【8】小福利PyTorch: https://zhuanlan.zhihu.com/p/29779361

【9】Lua reference: http://lua-users.org/files/wiki_insecure/users/thomasl/luarefv51.pdf

【10】绝世高手秘籍: https://learnxinyminutes.com

【11】String Library:http://lua-users.org/wiki/StringLibraryTutorial

【12】Table Library: http://lua-users.org/wiki/TableLibraryTutorial

【13】Math Library: http://lua-users.org/wiki/MathLibraryTutorial

【14】IO Library: http://lua-users.org/wiki/IoLibraryTutorial

【15】Lua中文手册: http://www.runoob.com/manual/lua53doc/contents.html

【16】Torch Tensor的教程: https://github.com/torch/torch7/blob/master/doc/tensor.md

【17】Torch Math Function: https://github.com/torch/torch7/blob/master/doc/maths.md

【18】使用Torch实现的practice: https://github.com/oxford-cs-ml-2015/

【19】No Source File: http://www.voidcn.com/article/p-oeithnnf-bqk.html

【20】Torch Cheatsheet: https://github.com/torch/torch7/wiki/Cheatsheet

【21】rnn nn: https://github.com/Element-Research/dpnn/issues/95


Lua

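The most effective introduction is still the one in 【2】, "Learn Lua in 15 Minutes". Lua is a scripting language written in standard C and released as open source. It is designed to be embedded in applications, giving them flexible extension and customization. Anyone who already knows Perl, Python, or shell scripting should pick it up quickly.

Variables and control flow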

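Comments: single-line: --

Multi-line:

--[[ --]]

num = 42 -- in Lua 5.1/5.2 and LuaJIT all numbers are doubles; a 64-bit double has 52 bits for storing exact integer values

s = 'first expression'

s2 = "second expression of string"

multi_line_strings = [[ass

ssss]]

t = nil -- undefines t; Lua has garbage collection

while loop:

while num < 50 do num = num + 1 end

if statement: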

if num > 40 then

print('Over 40')

elseif s~= 'hello' then

io.write('Not over 40\n')

else

thisIsGlobal = 5

local line = io.read()

print('Winter is coming,' .. line)

end

An undefined variable evaluates to nil:

foo = anUnknownVariable

aBoolValue = false

-- only nil and false are falsy

if not aBoolValue then

print('twas false')

end
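The rule that only nil and false are falsy is easy to get wrong when coming from C or Python, so here is a minimal sketch. The helper name truthy is my own, purely for illustration.

```lua
-- Only nil and false are falsy; 0 and the empty string count as true.
local function truthy(v)
  if v then
    return true
  else
    return false
  end
end

print(truthy(nil))   -- false
print(truthy(false)) -- false
print(truthy(0))     -- true
print(truthy(''))    -- true
```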

karlSum = 0

for i = 1, 100 do

karlSum = karlSum + i

end

fredSum = 0

for j = 100, 1, -1 do

fredSum = fredSum + j

end

repeat

print('The way of the future')

num = num - 1

until num == 0

Functions

function fib(n) if n < 2 then return 1 end return fib(n-2) + fib(n-1) end

-- closures and anonymous functions

function adder(x)

return function(y) return x + y end

end

a1 = adder(9)

a2 = adder(36)

print(a1(16)) --> 25

print(a2(64)) --> 100
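A closure does more than capture a constant: each call to the outer function creates its own private state. The sketch below shows this with a counter. The name makeCounter is hypothetical and does not come from the original tutorial.

```lua
-- Each call to makeCounter creates a fresh `count` that only the
-- returned function can see and modify.
local function makeCounter()
  local count = 0
  return function()
    count = count + 1
    return count
  end
end

local c1 = makeCounter()
local c2 = makeCounter()
print(c1()) -- 1
print(c1()) -- 2
print(c2()) -- 1 (c2 has its own count)
```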

x, y, z = 1,2,3,4 -- the 4 is thrown away

function bar(a,b,c)

print(a,b,c)

return 4,8,15,16,23,42

end

x,y = bar('zaphod') -- prints: zaphod nil nil

-- x = 4

-- y = 8

-- global function.

function f(x)

return x*x

end

f = function (x) return x*x end

-- local function

local function g(x)

return math.sin(x)

end

local g;

g = function(x)

return math.sin(x)

end

Hash tables

t = {key1 = 'value1', key2 = false}

print(t.key1)

t.newkey = {}

t.key2 = nil -- remove key2 from the table.

u = {['@!#'] = 'qbert', [{}] = 1729, [6.28] = 'tau'}

-- use any value as key

print(u[6.28])

a = u['@!#']

b = u[{}] -- b = nil since lookup fails.

function h(x) print(x.key1) end

h{key1 = 'Sonmi~451'} -- Prints 'Sonmi~451'

for key, val in pairs(u) do -- table iteration

print(key, val)

end

print(_G['_G'] == _G) -- Print 'true'

v = {'value1', 'value2', 1.21, 'gigawatts'}

for i = 1, #v do

print(v[i])

end
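For list-like tables, ipairs is a common alternative to the numeric for loop, while pairs is needed for hash-style tables because # only counts the array part. A small sketch of both, where countKeys is a hypothetical helper:

```lua
-- ipairs walks the array part in order: 1, 2, 3, ...
local v = {'value1', 'value2', 1.21, 'gigawatts'}
local items = {}
for i, val in ipairs(v) do
  items[#items + 1] = i .. '=' .. tostring(val)
end
print(table.concat(items, ', ')) -- 1=value1, 2=value2, 3=1.21, 4=gigawatts

-- # is 0 for a table that only has string keys, so count with pairs.
local function countKeys(t)
  local n = 0
  for _ in pairs(t) do
    n = n + 1
  end
  return n
end

local u = {a = 1, b = 2, c = 3}
print(#u, countKeys(u)) -- 0	3
```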

f1 = {a = 1, b = 2} -- represents the fraction a/b = 1/2

f2 = {a = 2, b = 3} -- s = f1 + f2 would fail here: no __add metamethod yet

metafraction = {}

function metafraction.__add(f1, f2)

local sum = {}

sum.b = f1.b * f2.b

sum.a = f1.a * f2.b + f2.a * f1.b

return sum

end

setmetatable(f1, metafraction)

setmetatable(f2, metafraction)

s = f1 + f2 -- calls __add(f1, f2) on f1's metatable

defaultFavs = {animal = 'gru', food = 'donuts'}

myFavs = {food = 'pizza'}

setmetatable(myFavs, {__index = defaultFavs})

eatenBy = myFavs.animal
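To tie the metatable ideas together, here is a sketch of a small fraction type that combines __add, __eq and __tostring in one metatable. The Fraction table, its new constructor and the gcd helper are names I made up for this illustration, not part of any library.

```lua
local Fraction = {}
Fraction.__index = Fraction

-- Greatest common divisor, used to keep fractions in lowest terms.
local function gcd(a, b)
  while b ~= 0 do
    a, b = b, a % b
  end
  return a
end

function Fraction.new(a, b)
  local g = gcd(a, b)
  -- math.floor keeps the fields integral on Lua 5.3+, where / yields a float.
  return setmetatable({a = math.floor(a / g), b = math.floor(b / g)}, Fraction)
end

-- a/b + c/d = (a*d + c*b) / (b*d)
Fraction.__add = function(f1, f2)
  return Fraction.new(f1.a * f2.b + f2.a * f1.b, f1.b * f2.b)
end

Fraction.__eq = function(f1, f2)
  return f1.a == f2.a and f1.b == f2.b
end

Fraction.__tostring = function(f)
  return f.a .. '/' .. f.b
end

local s = Fraction.new(1, 2) + Fraction.new(2, 3)
print(tostring(s))                              -- 7/6
print(Fraction.new(2, 4) == Fraction.new(1, 2)) -- true
```

Because every fraction shares the same metatable, the operators work on any pair of fractions without calling setmetatable by hand each time.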

-- __add(a, b) for a + b

-- __sub(a, b) for a - b

-- __mul(a, b) for a * b

-- __div(a, b) for a / b

-- __mod(a, b) for a % b

-- __pow(a, b) for a ^ b

-- __unm(a) for -a

-- __concat(a, b) for a .. b

-- __len(a) for #a

-- __eq(a, b) for a == b

-- __lt(a, b) for a < b

-- __le(a, b) for a <= b

-- __index(a, b) <fn or a table> for a.b

-- __newindex(a, b, c) for a.b = c

-- __call(a, ...) for a(...)

Class-like tables and inheritance

Dog = {}

function Dog:new()

newObj = {sound = 'woof'}

self.__index = self

return setmetatable(newObj, self)

end

function Dog:makeSound()

print('I say ' .. self.sound)

end

mrDog = Dog:new()

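mrDog:makeSound() -- prints 'I say woof'

-- equivalent to mrDog.makeSound(mrDog)

-- Dog looks like a class here, but it is really just a table.

Inheritance: the derived table inherits all variables and functions.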

LoudDog = Dog:new()

function LoudDog:makeSound()

s = self.sound .. ' '

print(s .. s .. s)

end

seymour = LoudDog:new()

seymour:makeSound() -- 'woof woof woof'
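A derived "class" often wants to reuse the base implementation instead of replacing it outright. The sketch below shows one common way to do that with chained __index lookups. Animal and Cat are hypothetical names chosen so they do not clash with the Dog example.

```lua
local Animal = {}
Animal.__index = Animal

function Animal.new(name, sound)
  return setmetatable({name = name, sound = sound}, Animal)
end

function Animal:getName()
  return self.name
end

function Animal:speak()
  return self.name .. ' says ' .. self.sound
end

-- Cat inherits from Animal: keys missing on Cat are looked up in Animal.
local Cat = setmetatable({}, {__index = Animal})
Cat.__index = Cat

function Cat.new(name)
  local obj = Animal.new(name, 'meow')
  return setmetatable(obj, Cat)
end

function Cat:speak()
  -- Call the base implementation explicitly, then extend its result.
  return Animal.speak(self) .. '!'
end

local tom = Cat.new('Tom')
print(tom:speak())   -- Tom says meow!
print(tom:getName()) -- Tom (inherited from Animal)
```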

-- If needed, a subclass's new() looks just like the base class's:

function LoudDog:new()

newObj = {}

self.__index = self

return setmetatable(newObj, self)

end

Modules

Create a file named mod.lua:

local M = {}

local function sayMyName()

print('Hrunkner')

end

function M.sayHello()

print('Why hello there')

sayMyName()

end

return M

-- Another file can use the functionality of mod.lua:

local mod = require('mod') -- runs the file mod.lua

local mod = (function ()

<contents of mod.lua>

end) () -- only the non-local functions of mod.lua are accessible

mod.sayHello() -- works fine

mod.sayMyName() -- error: sayMyName is local to mod.lua

-- Suppose mod2.lua contains "print('Hi!')"

local a = require('mod2')

local b = require('mod2') -- does not run a second time, because the result is cached

dofile('mod2.lua')

dofile('mod2.lua') -- 可以运行第二遍
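The caching behaviour of require can be observed without creating any files by registering a loader in package.preload. This is only a sketch: the module name demo_mod and the loadCount counter are invented for the demonstration.

```lua
-- Register an in-memory module so the example needs no extra file.
local loadCount = 0
package.preload['demo_mod'] = function()
  loadCount = loadCount + 1
  return {greet = function() return 'hi' end}
end

local a = require('demo_mod')
local b = require('demo_mod')   -- served from package.loaded, loader not re-run
print(loadCount, a == b)        -- 1	true

-- Clearing the cache entry forces the loader to run again.
package.loaded['demo_mod'] = nil
local c = require('demo_mod')
print(loadCount, a == c)        -- 2	false
```

This is the same cache that makes the second require of a module a no-op, whereas dofile bypasses it and always re-executes the file.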

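-- loadfile loads a Lua file into memory but does not run it yet

f = loadfile('mod2.lua')

g = loadstring('print(343)') -- loadstring is the Lua 5.1/LuaJIT name; Lua 5.2+ uses load

g() -- prints 343

There are also some standard libraries: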

String library 【11】:

> = string.byte("ABCDE") -- no index, so the first character

65

> = string.byte("ABCDE",1) -- indexes start at 1

65

> = string.byte("ABCDE",0) -- we're not using C

> = string.byte("ABCDE",100) -- index out of range, no value returned

> = string.byte("ABCDE",3,4)

67 68

> s = "ABCDE"

> = s:byte(3,4)

> -- can apply directly to string variable

67 68

> = string.char(65,66,67)

ABC

> = string.char() -- empty string

Table library 【12】:

table.concat(table [, sep [, i [, j]]])

> = table.concat({ 1, 2, "three", 4, "five" })

12three4five

> = table.concat({ 1, 2, "three", 4, "five" }, ", ")

1, 2, three, 4, five

> = table.concat({ 1, 2, "three", 4, "five" }, ", ", 2)

2, three, 4, five

> = table.concat({ 1, 2, "three", 4, "five" }, ", ", 2, 4)

2, three, 4

table.foreach(table, f) -- deprecated in Lua 5.1 and removed in 5.2; newer versions use a pairs() loop instead

> table.foreach({1,"two",3}, print) -- print the key-value pairs

1 1

2 two

3 3

> table.foreach({1,"two",3,"four"}, function(k,v) print(string.rep(v,k)) end)

1

twotwo

333

fourfourfourfour

table.sort(table [, comp])

> t = { 3,2,5,1,4 }

> table.sort(t)

> = table.concat(t, ", ") -- display sorted values

1, 2, 3, 4, 5
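The optional comp argument of table.sort takes a function that returns true when its first argument should come before the second. A short sketch with made-up data:

```lua
-- Sort numbers in descending order.
local nums = {3, 2, 5, 1, 4}
table.sort(nums, function(x, y) return x > y end)
print(table.concat(nums, ', ')) -- 5, 4, 3, 2, 1

-- Sort words by length, breaking ties alphabetically.
local words = {'pear', 'fig', 'banana', 'kiwi'}
table.sort(words, function(x, y)
  if #x ~= #y then
    return #x < #y
  end
  return x < y
end)
print(table.concat(words, ', ')) -- fig, kiwi, pear, banana
```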

table.insert(table, [pos,] value)

> t = { 1,3,"four" }

> table.insert(t, 2, "two") -- insert "two" at position before element 2

> = table.concat(t, ", ")

1, two, 3, four

table.remove(table [, pos])

> t = { 1,"two",3,"four" } -- create a table

> = # t -- find the size

4

> table.foreach(t, print) -- have a look at the elements

1 1

2 two

3 3

4 four

> = table.remove(t,2) -- remove element number 2 and display it

two

> table.foreach(t, print) -- display the updated table contents

1 1

2 3

3 four

> = # t -- find the size

3

Math library 【13】:

math.abs math.acos math.asin math.atan math.ceil math.cos math.deg math.exp math.floor math.fmod math.huge math.log math.max math.maxinteger math.min math.mininteger math.modf math.pi math.rad math.random math.randomseed math.sin math.sqrt math.tan math.tointeger math.type math.ult

OS Library:

os.clock() -- CPU time

os.date([format [, time]])

> = os.date("%d.%m.%Y")

06.10.2012

os.difftime(t2, t1)

> t1 = os.time()

> -- wait a little while then type....

> = os.difftime(os.time(), t1)

31

> = os.difftime(os.time(), t1)

38

os.execute([command]) -- run a shell command

> = os.execute("echo hello")

hello

0

> = os.execute("mmmmm") -- generate an error

'mmmmm' is not recognized as an internal or external command,

operable program or batch file.

1

os.exit([code]) -- exit the current environment

> os.exit(0) -- kill the Lua shell we are in and pass 0 back to parent shell

os.getenv(varname) -- get an environment variable

> = os.getenv("BANANA")

nil

> = os.getenv("USERNAME")

Nick

os.remove(filename) -- delete a file

> os.execute("echo hello > banana.txt")

> = os.remove("banana.txt")

true

> = os.remove("banana.txt")

nil banana.txt: No such file or directory 2

os.rename(oldname, newname) -- rename a file

> os.execute("echo hello > banana.txt")

> = os.rename("banana.txt", "apple.txt")

true

> = os.rename("banana.txt", "apple.txt")

nil banana.txt: No such file or directory 2

os.setlocale(locale [, category])

os.tmpname () -- generate a name that can be used for a temporary file

> = os.tmpname() -- on windows

\s2js.

> = os.tmpname() -- on debian

/tmp/lua_5xPi18

IO library 【14】:

file = io.open (filename [, mode])

io.close ([file]) -- f:close ()

io.flush ()

io.input ([file])

io.lines ([filename])

for line in io.lines(filename) do ... end

io.write (value1, ...) -- io.output():write

f:seek ([whence] [, offset])
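A minimal round trip through these IO functions might look like the sketch below. It assumes os.tmpname() returns a writable path, which holds on typical Linux and macOS setups but may not on Windows.

```lua
-- Write two lines to a temporary file, then read them back.
local path = os.tmpname()

local f = assert(io.open(path, 'w'))
f:write('first line\n', 'second line\n')
f:close()

local lines = {}
for line in io.lines(path) do   -- io.lines strips the trailing newline
  lines[#lines + 1] = line
end
print(#lines, lines[1])         -- 2	first line

os.remove(path)                 -- clean up the temporary file
```

Wrapping io.open in assert turns the nil-plus-message return value into an immediate error, which is usually what a short script wants.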

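Installing Lua:

curl -R -O http://www.lua.org/ftp/lua-5.3.0.tar.gz

tar zxf lua-5.3.0.tar.gz

cd lua-5.3.0

make macosx test

make install

After that, typing lua on the command line starts the interpreter.

The Lua community also has a fairly complete reference 【9】, there is a Chinese manual 【15】, and there is a "secret manual for masters", Learn X in Y Minutes 【10】.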

Torch

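Installing Torch (Mac):

git clone https://github.com/torch/distro.git ~/torch --recursive

cd ~/torch; bash install-deps;

./install.sh

source ~/.profile

Once Torch is installed, additional packages are installed with luarocks install [packagename], for example rnn or dp.

If there is no file to source, see 【19】.

Then, to start learning Torch, type th on the command line:

To quit, press Ctrl+C twice or type: os.exit()

To run a Lua file: th file.lua (see th -h for the available options)

See also the official cheatsheet 【20】 and the Torch tutorials 【16】【17】【18】.

Here I introduce the basics of Torch.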

Tensor

A Tensor is an n-dimensional array, the same as in TF (TensorFlow). First, allocating arrays and their storage:

--- creation of a 4D-tensor 4x5x6x2

z = torch.Tensor(4,5,6,2)

--- for more dimensions, (here a 6D tensor) one can do:

s = torch.LongStorage(6)

s[1] = 4; s[2] = 5; s[3] = 6; s[4] = 2; s[5] = 7; s[6] = 3;

x = torch.Tensor(s)

--- get the number of dimensions and the size of a Tensor

x:nDimension()

x:size()

-- access an element

x = torch.Tensor(7,7,7)

x[3][4][5]

-- equivalent to:

x:storage()[x:storageOffset() +(3-1)*x:stride(1)+(4-1)*x:stride(2)+(5-1)*x:stride(3)]
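The storage-offset formula above can be tried out in plain Lua without Torch installed. The sketch below assumes a contiguous row-major layout. The helpers contiguousStrides and storageIndex are hypothetical names and are not part of the Torch API.

```lua
-- Strides of a contiguous row-major array: the last dimension has stride 1.
local function contiguousStrides(sizes)
  local strides = {}
  local step = 1
  for d = #sizes, 1, -1 do
    strides[d] = step
    step = step * sizes[d]
  end
  return strides
end

-- 1-based storage position of the element at the given 1-based indices.
local function storageIndex(offset, strides, indices)
  local pos = offset
  for d = 1, #indices do
    pos = pos + (indices[d] - 1) * strides[d]
  end
  return pos
end

local strides = contiguousStrides({7, 7, 7})
print(table.concat(strides, ', '))          -- 49, 7, 1
print(storageIndex(1, strides, {3, 4, 5}))  -- 124 = 1 + 2*49 + 3*7 + 4*1
```

With a storage offset of 1, element [3][4][5] of a 7x7x7 array therefore lives at position 124 of the flat storage.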

Storage:

x = torch.Tensor(4,5)

s = x:storage()

for i=1,s:size() do -- fill up the Storage

s[i] = i

end

> x -- s is interpreted by x as a 2D matrix

1 2 3 4 5

6 7 8 9 10

11 12 13 14 15

16 17 18 19 20

[torch.DoubleTensor of dimension 4x5]

> x:stride()

5

1 -- element in the last dimension are contiguous!

[torch.LongStorage of size 2]

Depending on the data type, the following Tensor types are available:

ByteTensor -- contains unsigned chars

CharTensor -- contains signed chars

ShortTensor -- contains shorts

IntTensor -- contains ints

LongTensor -- contains longs

FloatTensor -- contains floats

DoubleTensor -- contains doubles

x = torch.Tensor(5):zero() -- fill the tensor with zeros

> x:narrow(1, 2, 3):fill(1) -- narrow() returns a Tensor

-- referencing the same Storage as x

> x

0

1

1

1

0

[torch.Tensor of dimension 5]

--- copy a Tensor

y = torch.Tensor(x:size()):copy(x)

y = x:clone()

x = torch.Tensor(2,5):fill(3.14)

> x

3.1400 3.1400 3.1400 3.1400 3.1400

3.1400 3.1400 3.1400 3.1400 3.1400

[torch.DoubleTensor of dimension 2x5]

y = torch.Tensor(x)

> y

3.1400 3.1400 3.1400 3.1400 3.1400

3.1400 3.1400 3.1400 3.1400 3.1400

[torch.DoubleTensor of dimension 2x5]

y:zero()

> x -- elements of x are the same as y!

0 0 0 0 0

0 0 0 0 0

[torch.DoubleTensor of dimension 2x5]

Setting every element to 1:

x = torch.Tensor(torch.LongStorage({4}), torch.LongStorage({0})):zero() -- zeroes the tensor

x[1] = 1 -- all elements point to the same address!

> x

1

1

1

1

[torch.DoubleTensor of dimension 4]

a = torch.LongStorage({1,2}) -- We have a torch.LongStorage containing the values 1 and 2

-- General case for TYPE ~= Long, e.g. for TYPE = Float:

b = torch.FloatTensor(a)

-- Creates a new torch.FloatTensor with 2 dimensions, the first of size 1 and the second of size 2

> b:size()

1

2

[torch.LongStorage of size 2]

-- Special case of torch.LongTensor

c = torch.LongTensor(a)

-- Creates a new torch.LongTensor that uses a as storage and thus contains the values 1 and 2

> c

1

2

[torch.LongTensor of size 2]

-- creates a storage with 10 elements

s = torch.Storage(10):fill(1)

-- we want to see it as a 2x5 tensor

x = torch.Tensor(s, 1, torch.LongStorage{2,5})

> x

1 1 1 1 1

1 1 1 1 1

[torch.DoubleTensor of dimension 2x5]

x:zero()

> s -- the storage contents have been modified

0

0

0

0

0

0

0

0

0

0

[torch.DoubleStorage of size 10]

i = 0

z = torch.Tensor(3,3)

z:apply(function(x)

i = i + 1

return i

end) -- fill up the tensor

> z

1 2 3

4 5 6

7 8 9

[torch.DoubleTensor of dimension 3x3]

z:apply(math.sin) -- apply the sin function

> z

0.8415 0.9093 0.1411

-0.7568 -0.9589 -0.2794

0.6570 0.9894 0.4121

[torch.DoubleTensor of dimension 3x3]

sum = 0

z:apply(function(x)

sum = sum + x

end) -- compute the sum of the elements

> sum

1.9552094821074

> z:sum() -- it is indeed correct!

1.9552094821074

Math functions

The interface here is largely the same as Matlab's, so it is fairly simple.

torch.log(x, x)

x:log()

> x = torch.rand(100, 100)

> k = torch.rand(10, 10)

> res1 = torch.conv2(x, k) -- case 1

> res2 = torch.Tensor()

> torch.conv2(res2, x, k) -- case 2

> res2:dist(res1)

0

Case 2 is better because no new memory has to be allocated for the result.

Concatenating two Tensors:

> torch.cat(torch.ones(3), torch.zeros(2))

1

1

1

0

0

[torch.DoubleTensor of size 5]

> torch.cat(torch.ones(3, 2), torch.zeros(2, 2), 1)

1 1

1 1

1 1

0 0

0 0

[torch.DoubleTensor of size 5x2]

> torch.cat(torch.ones(2, 2), torch.zeros(2, 2), 1)

1 1

1 1

0 0

0 0

[torch.DoubleTensor of size 4x2]

> torch.cat(torch.ones(2, 2), torch.zeros(2, 2), 2)

1 1 0 0

1 1 0 0

[torch.DoubleTensor of size 2x4]

> torch.cat(torch.cat(torch.ones(2, 2), torch.zeros(2, 2), 1), torch.rand(3, 2), 1)

1.0000 1.0000

1.0000 1.0000

0.0000 0.0000

0.0000 0.0000

0.3227 0.0493

0.9161 0.1086

0.2206 0.7449

[torch.DoubleTensor of size 7x2]

> torch.cat({torch.ones(2, 2), torch.zeros(2, 2), torch.rand(3, 2)}, 1)

1.0000 1.0000

1.0000 1.0000

0.0000 0.0000

0.0000 0.0000

0.3227 0.0493

0.9161 0.1086

0.2206 0.7449

[torch.DoubleTensor of size 7x2]

> torch.cat({torch.Tensor(), torch.rand(3, 2)}, 1)

0.3227 0.0493

0.9161 0.1086

0.2206 0.7449

[torch.DoubleTensor of size 3x2]

Return the diagonal elements:

y = torch.diag(x, k)

y = torch.diag(x)

Identity matrix:

y = torch.eye(n, m)

y = torch.eye(n)

Histogram:

y = torch.histc(x)

y = torch.histc(x, n)

y = torch.histc(x, n, min, max)

Generate evenly spaced values:

y = torch.linspace(x1, x2)

y = torch.linspace(x1, x2, n)

Generate logarithmically spaced values:

y = torch.logspace(x1, x2)

y = torch.logspace(x1, x2, n)

y = torch.ones(m, n) -- returns an m × n Tensor filled with ones

Generate random numbers:

y = torch.rand(m, n)

torch.range(2, 5)

Element-wise operations: the interface is essentially the same as Matlab's

y = torch.abs(x)

y = torch.sign(x)

y = torch.acos(x)

y = torch.asin(x)

x:asin() -- replaces all elements in-place with the arcsine of the elements of x

y = torch.atan(x)

There are many more operations like these.

CNN-related functions in Torch

torch.conv2([res,] x, k, [, 'F' or 'V']) -- 'F' means full convolution; 'V' means valid convolution

x = torch.rand(100, 100)

k = torch.rand(10, 10)

c = torch.conv2(x, k)

> c:size()

91

91

[torch.LongStorage of size 2]

c = torch.conv2(x, k, 'F')

> c:size()

109

109

[torch.LongStorage of size 2]

torch.xcorr2([res,] x, k, [, 'F' or 'V']) -- like conv2, but computes the cross-correlation of the input with the kernel

torch.conv3([res,] x, k, [, 'F' or 'V'])

x = torch.rand(100, 100, 100)

k = torch.rand(10, 10, 10)

c = torch.conv3(x, k)

> c:size()

91

91

91

[torch.LongStorage of size 3]

c = torch.conv3(x, k, 'F')

> c:size()

109

109

109

[torch.LongStorage of size 3]

torch.xcorr3([res,] x, k, [, 'F' or 'V'])
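The output sizes 91 and 109 follow from simple arithmetic: a valid convolution yields n - k + 1 elements per dimension and a full one yields n + k - 1. The plain-Lua sketch below checks that, and uses a 1D toy case to show how convolution (kernel flipped) differs from cross-correlation (kernel not flipped). The functions outputSize and valid1d are illustrative helpers of mine, not Torch functions.

```lua
-- Output length per dimension for input size n and kernel size k.
local function outputSize(n, k, mode)
  if mode == 'F' then
    return n + k - 1   -- full: every partial overlap counts
  end
  return n - k + 1     -- valid: kernel must fit entirely inside the input
end

print(outputSize(100, 10, 'V')) -- 91
print(outputSize(100, 10, 'F')) -- 109

-- 1D "valid" sliding product; flip = true gives convolution,
-- flip = false gives cross-correlation.
local function valid1d(x, k, flip)
  local n, m = #x, #k
  local out = {}
  for i = 1, n - m + 1 do
    local acc = 0
    for j = 1, m do
      local w = flip and k[m - j + 1] or k[j]
      acc = acc + x[i + j - 1] * w
    end
    out[i] = acc
  end
  return out
end

local x = {1, 2, 3, 4}
local k = {1, 0, -1}
local conv = valid1d(x, k, true)
local xcorr = valid1d(x, k, false)
print(table.concat(conv, ', '))  -- 2, 2
print(table.concat(xcorr, ', ')) -- -2, -2
```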

Logical operations:

torch.lt(a, b)

torch.le(a, b)

torch.gt(a, b)

torch.ge(a, b)

torch.eq(a, b)

torch.ne(a, b)

torch.all(a)

torch.any(a)

Singular values, SVD, and linear systems

-- LU decomposition; solves AX = B

torch.gesv([resb, resa,] B, A)

> a = torch.Tensor({{6.80, -2.11, 5.66, 5.97, 8.23},

{-6.05, -3.30, 5.36, -4.44, 1.08},

{-0.45, 2.58, -2.70, 0.27, 9.04},

{8.32, 2.71, 4.35, -7.17, 2.14},

{-9.67, -5.14, -7.26, 6.08, -6.87}}):t()

> b = torch.Tensor({{4.02, 6.19, -8.22, -7.57, -3.03},

{-1.56, 4.00, -8.67, 1.75, 2.86},

{9.81, -4.09, -4.57, -8.61, 8.99}}):t()

> b

4.0200 -1.5600 9.8100

6.1900 4.0000 -4.0900

-8.2200 -8.6700 -4.5700

-7.5700 1.7500 -8.6100

-3.0300 2.8600 8.9900

[torch.DoubleTensor of dimension 5x3]

> a

6.8000 -6.0500 -0.4500 8.3200 -9.6700

-2.1100 -3.3000 2.5800 2.7100 -5.1400

5.6600 5.3600 -2.7000 4.3500 -7.2600

5.9700 -4.4400 0.2700 -7.1700 6.0800

8.2300 1.0800 9.0400 2.1400 -6.8700

[torch.DoubleTensor of dimension 5x5]

> x = torch.gesv(b, a)

> x

-0.8007 -0.3896 0.9555

-0.6952 -0.5544 0.2207

0.5939 0.8422 1.9006

1.3217 -0.1038 5.3577

0.5658 0.1057 4.0406

[torch.DoubleTensor of dimension 5x3]

> b:dist(a * x)

1.1682163181673e-14

-- torch.trtrs([resb, resa,] b, a [, 'U' or 'L'] [, 'N' or 'T'] [, 'N' or 'U'])

X = torch.trtrs(B, A)

-- returns the solution of AX = B where A is upper-triangular.

-- torch.potrf([res,] A [, 'U' or 'L'] )

-- Cholesky decomposition of a 2D Tensor

> A = torch.Tensor({

{1.2705, 0.9971, 0.4948, 0.1389, 0.2381},

{0.9971, 0.9966, 0.6752, 0.0686, 0.1196},

{0.4948, 0.6752, 1.1434, 0.0314, 0.0582},

{0.1389, 0.0686, 0.0314, 0.0270, 0.0526},

{0.2381, 0.1196, 0.0582, 0.0526, 0.3957}})

> chol = torch.potrf(A)

> chol

1.1272 0.8846 0.4390 0.1232 0.2112

0.0000 0.4626 0.6200 -0.0874 -0.1453

0.0000 0.0000 0.7525 0.0419 0.0738

0.0000 0.0000 0.0000 0.0491 0.2199

0.0000 0.0000 0.0000 0.0000 0.5255

[torch.DoubleTensor of size 5x5]

> torch.potrf(chol, A, 'L')

> chol

1.1272 0.0000 0.0000 0.0000 0.0000

0.8846 0.4626 0.0000 0.0000 0.0000

0.4390 0.6200 0.7525 0.0000 0.0000

0.1232 -0.0874 0.0419 0.0491 0.0000

0.2112 -0.1453 0.0738 0.2199 0.5255

[torch.DoubleTensor of size 5x5]
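To see what the upper-triangular factor means, here is a plain-Lua sketch of the textbook Cholesky algorithm on a 2x2 matrix. It is not how Torch implements potrf, which delegates to LAPACK. The function name choleskyUpper and the test matrix are my own choices.

```lua
-- Returns upper-triangular U with A = U^T * U.
-- A must be symmetric positive definite, given as a table of rows.
local function choleskyUpper(A)
  local n = #A
  local U = {}
  for i = 1, n do
    U[i] = {}
    for j = 1, n do
      U[i][j] = 0
    end
  end
  for i = 1, n do
    for j = i, n do
      -- Subtract the contributions of the rows already computed.
      local sum = A[i][j]
      for k = 1, i - 1 do
        sum = sum - U[k][i] * U[k][j]
      end
      if i == j then
        assert(sum > 0, 'matrix is not positive definite')
        U[i][i] = math.sqrt(sum)
      else
        U[i][j] = sum / U[i][i]
      end
    end
  end
  return U
end

local U = choleskyUpper({{4, 2}, {2, 3}})
for i = 1, #U do
  print(string.format('%.4f %.4f', U[i][1], U[i][2]))
end
-- 2.0000 1.0000
-- 0.0000 1.4142
```

The lower-triangular factor requested with 'L' is simply the transpose of this U, which matches the two chol outputs shown above.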

-- torch.eig([rese, resv,] a [, 'N' or 'V'])

-- eigenvalue decomposition

> a = torch.Tensor({{ 1.96, 0.00, 0.00, 0.00, 0.00},

{-6.49, 3.80, 0.00, 0.00, 0.00},

{-0.47, -6.39, 4.17, 0.00, 0.00},

{-7.20, 1.50, -1.51, 5.70, 0.00},

{-0.65, -6.34, 2.67, 1.80, -7.10}}):t()

> a

1.9600 -6.4900 -0.4700 -7.2000 -0.6500

0.0000 3.8000 -6.3900 1.5000 -6.3400

0.0000 0.0000 4.1700 -1.5100 2.6700

0.0000 0.0000 0.0000 5.7000 1.8000

0.0000 0.0000 0.0000 0.0000 -7.1000

[torch.DoubleTensor of dimension 5x5]

> b = a + torch.triu(a, 1):t()

> b

1.9600 -6.4900 -0.4700 -7.2000 -0.6500

-6.4900 3.8000 -6.3900 1.5000 -6.3400

-0.4700 -6.3900 4.1700 -1.5100 2.6700

-7.2000 1.5000 -1.5100 5.7000 1.8000

-0.6500 -6.3400 2.6700 1.8000 -7.1000

[torch.DoubleTensor of dimension 5x5]

> e = torch.eig(b)

> e

16.0948 0.0000

-11.0656 0.0000

-6.2287 0.0000

0.8640 0.0000

8.8655 0.0000

[torch.DoubleTensor of dimension 5x2]

> e, v = torch.eig(b, 'V')

> e

16.0948 0.0000

-11.0656 0.0000

-6.2287 0.0000

0.8640 0.0000

8.8655 0.0000

[torch.DoubleTensor of dimension 5x2]

> v

-0.4896 0.2981 -0.6075 -0.4026 -0.3745

0.6053 0.5078 -0.2880 0.4066 -0.3572

-0.3991 0.0816 -0.3843 0.6600 0.5008

0.4564 0.0036 -0.4467 -0.4553 0.6204

-0.1622 0.8041 0.4480 -0.1725 0.3108

[torch.DoubleTensor of dimension 5x5]

> v * torch.diag(e:select(2, 1))*v:t()

1.9600 -6.4900 -0.4700 -7.2000 -0.6500

-6.4900 3.8000 -6.3900 1.5000 -6.3400

-0.4700 -6.3900 4.1700 -1.5100 2.6700

-7.2000 1.5000 -1.5100 5.7000 1.8000

-0.6500 -6.3400 2.6700 1.8000 -7.1000

[torch.DoubleTensor of dimension 5x5]

> b:dist(v * torch.diag(e:select(2, 1)) * v:t())

3.5423944346685e-14

-- SVD (singular value decomposition)

> a = torch.Tensor({{8.79, 6.11, -9.15, 9.57, -3.49, 9.84},

{9.93, 6.91, -7.93, 1.64, 4.02, 0.15},

{9.83, 5.04, 4.86, 8.83, 9.80, -8.99},

{5.45, -0.27, 4.85, 0.74, 10.00, -6.02},

{3.16, 7.98, 3.01, 5.80, 4.27, -5.31}}):t()

> a

8.7900 9.9300 9.8300 5.4500 3.1600

6.1100 6.9100 5.0400 -0.2700 7.9800

-9.1500 -7.9300 4.8600 4.8500 3.0100

9.5700 1.6400 8.8300 0.7400 5.8000

-3.4900 4.0200 9.8000 10.0000 4.2700

9.8400 0.1500 -8.9900 -6.0200 -5.3100

> u, s, v = torch.svd(a)

> u

-0.5911 0.2632 0.3554 0.3143 0.2299

-0.3976 0.2438 -0.2224 -0.7535 -0.3636

-0.0335 -0.6003 -0.4508 0.2334 -0.3055

-0.4297 0.2362 -0.6859 0.3319 0.1649

-0.4697 -0.3509 0.3874 0.1587 -0.5183

0.2934 0.5763 -0.0209 0.3791 -0.6526

[torch.DoubleTensor of dimension 6x5]

> s

27.4687

22.6432

8.5584

5.9857

2.0149

[torch.DoubleTensor of dimension 5]

> v

-0.2514 0.8148 -0.2606 0.3967 -0.2180

-0.3968 0.3587 0.7008 -0.4507 0.1402

-0.6922 -0.2489 -0.2208 0.2513 0.5891

-0.3662 -0.3686 0.3859 0.4342 -0.6265

-0.4076 -0.0980 -0.4933 -0.6227 -0.4396

[torch.DoubleTensor of dimension 5x5]

> u * torch.diag(s) * v:t()

8.7900 9.9300 9.8300 5.4500 3.1600

6.1100 6.9100 5.0400 -0.2700 7.9800

-9.1500 -7.9300 4.8600 4.8500 3.0100

9.5700 1.6400 8.8300 0.7400 5.8000

-3.4900 4.0200 9.8000 10.0000 4.2700

9.8400 0.1500 -8.9900 -6.0200 -5.3100

[torch.DoubleTensor of dimension 6x5]

> a:dist(u * torch.diag(s) * v:t())

2.8923773593204e-14

The problem of rnn and nn not being able to coexist: class nn.SpatialGlimpse has been already assigned a parent class 【21】

Please credit the source when reposting: http://blog.csdn.net/c602273091/article/details/78940781

Ref Links:

【1】Lua official site: http://www.lua.org/start.html

【2】Learn Lua in 15 Minutes: http://tylerneylon.com/a/learn-lua/

【3】Runoob Lua tutorial: http://www.runoob.com/lua/lua-tutorial.html

【4】Torch tutorial: https://www.cs.ox.ac.uk/people/nando.defreitas/machinelearning/

【5】More reference material: https://www.cs.ox.ac.uk/people/nando.defreitas/machinelearning/practicals/practical1.pdf

【6】Torch official site: http://torch.ch/docs/getting-started.html

【7】Adding a new layer in Torch: https://zhuanlan.zhihu.com/p/21550685

【8】A small bonus, PyTorch: https://zhuanlan.zhihu.com/p/29779361

【9】Lua reference: http://lua-users.org/files/wiki_insecure/users/thomasl/luarefv51.pdf

【10】Learn X in Y Minutes: https://learnxinyminutes.com

【11】String Library: http://lua-users.org/wiki/StringLibraryTutorial

【12】Table Library: http://lua-users.org/wiki/TableLibraryTutorial

【13】Math Library: http://lua-users.org/wiki/MathLibraryTutorial

【14】IO Library: http://lua-users.org/wiki/IoLibraryTutorial

【15】Lua manual in Chinese: http://www.runoob.com/manual/lua53doc/contents.html

【16】Torch Tensor tutorial: https://github.com/torch/torch7/blob/master/doc/tensor.md

【17】Torch Math Function: https://github.com/torch/torch7/blob/master/doc/maths.md

【18】Practicals implemented with Torch: https://github.com/oxford-cs-ml-2015/

【19】No Source File: http://www.voidcn.com/article/p-oeithnnf-bqk.html

【20】Torch Cheatsheet: https://github.com/torch/torch7/wiki/Cheatsheet

【21】rnn nn: https://github.com/Element-Research/dpnn/issues/95
