[OVS 2.5 Source Code Walkthrough] The datapath's netlink mechanism

2017-12-01 22:42


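The datapath is the OVS kernel module and does the actual switching. It matches packets received on an ingress port against the flow table and executes the actions of the matching entry.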

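A datapath can have multiple vports, and a vport is analogous to a port on a physical switch. Each datapath is associated with one flow table. The flow table holds multiple entries, and each entry has two parts: a match (key) and an action.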
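The relationships above can be made concrete with a minimal user-space model. It is not the kernel's real data structures. All names (`struct toy_datapath`, `toy_dp_lookup`, `toy_dp_add_flow`, and so on) are hypothetical, and the key has just two fields. The lookup is a linear scan for readability rather than the hash lookup the kernel uses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, simplified types; NOT the real OVS kernel structures. */
struct toy_key {                 /* the "match/key" half of an entry */
	uint32_t in_port;
	uint8_t eth_dst[6];
};

enum toy_action_type { TOY_OUTPUT, TOY_DROP };

struct toy_action {              /* the "action" half of an entry */
	enum toy_action_type type;
	uint32_t out_port;       /* used only by TOY_OUTPUT */
};

struct toy_flow {
	struct toy_key key;
	struct toy_action action;
};

#define TOY_MAX_VPORTS 8
#define TOY_MAX_FLOWS 16

struct toy_datapath {
	const char *vports[TOY_MAX_VPORTS];   /* one datapath, many vports */
	size_t n_vports;
	struct toy_flow flows[TOY_MAX_FLOWS]; /* exactly one flow table */
	size_t n_flows;
};

static int toy_key_equal(const struct toy_key *a, const struct toy_key *b)
{
	return a->in_port == b->in_port &&
	       memcmp(a->eth_dst, b->eth_dst, sizeof a->eth_dst) == 0;
}

void toy_dp_add_flow(struct toy_datapath *dp, const struct toy_key *key,
		     struct toy_action action)
{
	assert(dp->n_flows < TOY_MAX_FLOWS);
	dp->flows[dp->n_flows].key = *key;
	dp->flows[dp->n_flows].action = action;
	dp->n_flows++;
}

/* Linear scan for clarity; returns the matching action, or NULL on a miss. */
const struct toy_action *toy_dp_lookup(const struct toy_datapath *dp,
				       const struct toy_key *key)
{
	for (size_t i = 0; i < dp->n_flows; i++)
		if (toy_key_equal(&dp->flows[i].key, key))
			return &dp->flows[i].action;
	return NULL;  /* a miss: this is the case that leads to an upcall */
}
```

A `NULL` return is the "no matching flow entry" case discussed next.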

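First, consider why the upcall function gets called. Readers of the earlier source-code analysis will know the answer: upcall is called only when a packet finds no matching flow entry. A typical case is the first packet of a flow entering the kernel, before any flow rule has been installed for it. Calling upcall amounts to sending the packet's information up to user space, and kernel-to-user-space communication uses Linux's Netlink mechanism. Understanding Netlink therefore explains what parameters upcall needs and what the function does as a whole.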

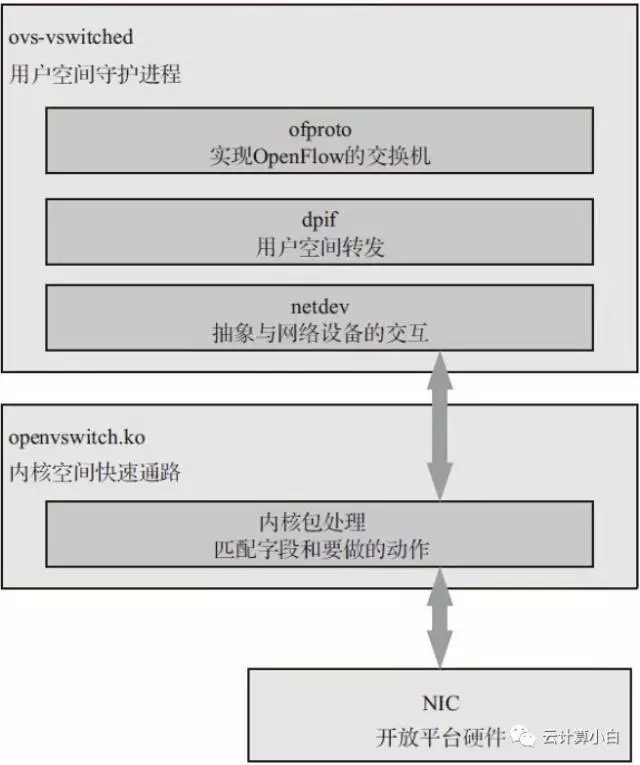

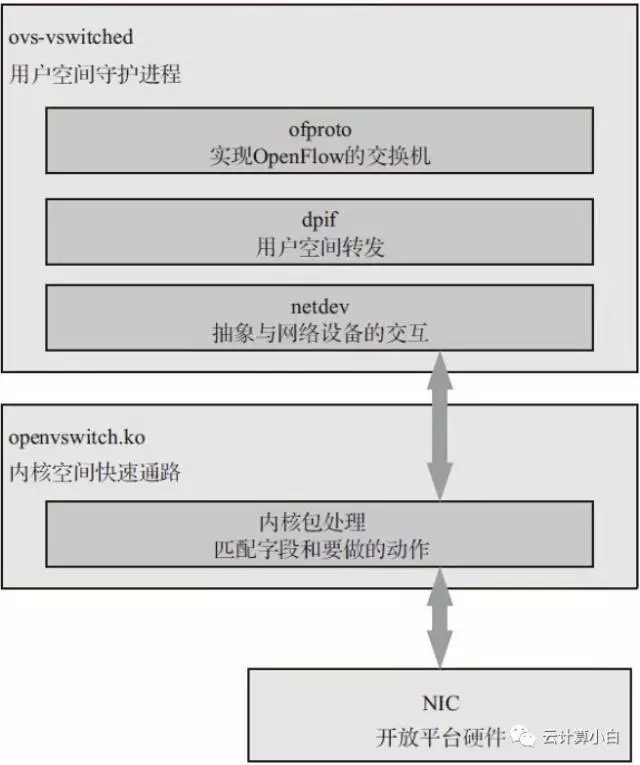

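The following example shows how OVS forwards a packet:

1) The OVS datapath receives a packet from one of the network ports attached to OVS. It extracts the source/destination IP, source/destination MAC, ports, and other fields from the packet.

2) OVS looks up the kernel flow table (via a hash). On a hit, the packet is forwarded on the fast path.

3) On a miss, the kernel does not know what to do with the packet. It notifies user space through the netlink upcall mechanism and hands the packet to the ovs-vswitchd component.

4) ovs-vswitchd looks up the user-space exact-match and wildcard flow tables. If these also miss and an SDN controller is attached, ovs-vswitchd reports the packet to the controller over the OpenFlow protocol and lets the controller handle it.

5) On a wildcard hit, ovs-vswitchd updates both the user-space exact-match table and the kernel exact-match table. On an exact hit, it updates only the kernel flow table.

6) After the update, the packet is re-injected into the kernel datapath module.

7) The datapath makes the forwarding decision again: it looks up the kernel flow table, finds the match, and forwards the packet. Done.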
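Steps 2 to 7 can be condensed into a small single-process simulation. Everything in it is hypothetical:

- The "kernel" table is a plain array keyed by a simplified four-field key.
- The upcall is an ordinary function call standing in for the netlink message to ovs-vswitchd.
- The user-space "decision" is an arbitrary fixed rule.

It only shows that the first packet of a flow takes the slow path and later packets hit the kernel table.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical key: the fields step 1 extracts from the packet. */
struct pkt_key {
	uint32_t src_ip, dst_ip;
	uint16_t src_port, dst_port;
};

struct kflow { struct pkt_key key; uint32_t out_port; };

#define KTABLE_SIZE 64

struct kernel_dp {
	struct kflow table[KTABLE_SIZE]; /* stand-in for the kernel flow table */
	size_t n;
	unsigned upcalls;                /* misses sent up to "user space" */
};

static int same_key(const struct pkt_key *a, const struct pkt_key *b)
{
	return a->src_ip == b->src_ip && a->dst_ip == b->dst_ip &&
	       a->src_port == b->src_port && a->dst_port == b->dst_port;
}

/* Step 2: kernel lookup (linear scan standing in for the hash lookup). */
static int kernel_lookup(const struct kernel_dp *dp, const struct pkt_key *k,
			 uint32_t *out_port)
{
	for (size_t i = 0; i < dp->n; i++) {
		if (same_key(&dp->table[i].key, k)) {
			*out_port = dp->table[i].out_port;
			return 1;
		}
	}
	return 0;
}

/* Steps 4-5 (hypothetical policy): decide an output port, install the flow. */
static void userspace_handle_upcall(struct kernel_dp *dp, const struct pkt_key *k)
{
	assert(dp->n < KTABLE_SIZE);
	dp->table[dp->n].key = *k;
	dp->table[dp->n].out_port = k->dst_port % 4; /* arbitrary rule */
	dp->n++;
}

/* Full path for one packet; returns the output port. */
uint32_t datapath_receive(struct kernel_dp *dp, const struct pkt_key *k)
{
	uint32_t port;

	if (kernel_lookup(dp, k, &port))   /* step 2: fast-path hit */
		return port;

	dp->upcalls++;                     /* step 3: miss -> upcall */
	userspace_handle_upcall(dp, k);    /* steps 4-5 */

	int hit = kernel_lookup(dp, k, &port); /* steps 6-7: re-inject, look up again */
	assert(hit);                           /* the upcall installed the flow */
	(void)hit;
	return port;
}
```

Only the first call for a given key increments `upcalls`. A real deployment also involves flow eviction, wildcarding, and the controller path, which this model leaves out.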



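Using Netlink

A Netlink program has two parts: one in user space and one in the kernel. The user-space part is written like most socket programs, except that the protocol family is AF_NETLINK; the remaining steps are essentially the same.

The OVS datapath communicates with user space over netlink to operate on datapaths, ports, flows, and packets. The netlink registration happens in the datapath module's initialization function.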
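On the user-space side, a Generic Netlink client opens `socket(AF_NETLINK, SOCK_RAW, NETLINK_GENERIC)`. It then exchanges messages made of three parts:

- a `struct nlmsghdr`,
- a `struct genlmsghdr`,
- a sequence of attributes.

The minimal sketch below lays out a request asking the kernel's controller (`GENL_ID_CTRL`) to resolve a family name such as `"ovs_datapath"` (the value of `OVS_DATAPATH_FAMILY`). It uses the Linux UAPI headers. The helper name `build_getfamily_req` is hypothetical, and the message is only built, not sent.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <linux/genetlink.h>
#include <linux/netlink.h>

/*
 * Build a CTRL_CMD_GETFAMILY request for family `name` into buf.
 * Returns the message length, or 0 if buf is too small.
 * buf must be 4-byte aligned. Hypothetical helper: it does not send anything.
 */
size_t build_getfamily_req(void *buf, size_t buflen, const char *name,
			   uint32_t seq)
{
	size_t name_len = strlen(name) + 1;          /* include the NUL */
	size_t attr_len = NLA_HDRLEN + name_len;     /* unpadded attribute length */
	size_t total = NLMSG_HDRLEN + GENL_HDRLEN + NLA_ALIGN(attr_len);

	if (total > buflen)
		return 0;
	memset(buf, 0, total);

	struct nlmsghdr *nlh = buf;
	nlh->nlmsg_len = total;
	nlh->nlmsg_type = GENL_ID_CTRL;              /* the nlctrl family */
	nlh->nlmsg_flags = NLM_F_REQUEST;
	nlh->nlmsg_seq = seq;

	struct genlmsghdr *gnlh = NLMSG_DATA(nlh);
	gnlh->cmd = CTRL_CMD_GETFAMILY;
	gnlh->version = 1;

	struct nlattr *nla = (struct nlattr *)((char *)gnlh + GENL_HDRLEN);
	nla->nla_type = CTRL_ATTR_FAMILY_NAME;
	nla->nla_len = attr_len;
	memcpy((char *)nla + NLA_HDRLEN, name, name_len);
	return total;
}
```

To actually send it, the buffer would be written to a `NETLINK_GENERIC` socket. The controller's reply (`CTRL_CMD_NEWFAMILY`) carries the numeric family ID in `CTRL_ATTR_FAMILY_ID`.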

1. The dp_init function

```c
static int __init dp_init(void)
{
	int err;

	BUILD_BUG_ON(sizeof(struct ovs_skb_cb) > FIELD_SIZEOF(struct sk_buff, cb));

	pr_info("Open vSwitch switching datapath %s\n", VERSION);

	err = compat_init();
	if (err)
		goto error;

	err = action_fifos_init();
	if (err)
		goto error_compat_exit;

	err = ovs_internal_dev_rtnl_link_register();
	if (err)
		goto error_action_fifos_exit;

	err = ovs_flow_init();
	if (err)
		goto error_unreg_rtnl_link;

	err = ovs_vport_init();
	if (err)
		goto error_flow_exit;

	err = register_pernet_device(&ovs_net_ops);
	if (err)
		goto error_vport_exit;

	err = register_netdevice_notifier(&ovs_dp_device_notifier);
	if (err)
		goto error_netns_exit;

	err = ovs_netdev_init();
	if (err)
		goto error_unreg_notifier;

	err = dp_register_genl(); // register the netlink handlers
	if (err < 0)
		goto error_unreg_netdev;

	return 0;

error_unreg_netdev:
	ovs_netdev_exit();
error_unreg_notifier:
	unregister_netdevice_notifier(&ovs_dp_device_notifier);
error_netns_exit:
	unregister_pernet_device(&ovs_net_ops);
error_vport_exit:
	ovs_vport_exit();
error_flow_exit:
	ovs_flow_exit();
error_unreg_rtnl_link:
	ovs_internal_dev_rtnl_link_unregister();
error_action_fifos_exit:
	action_fifos_exit();
error_compat_exit:
	compat_exit();
error:
	return err;
}
```

dp_register_genl() iterates over the dp_genl_families array (listed further below) and calls genl_register_family() on each entry. If one registration fails, it unregisters the families already registered.

2. The genl_register_family function

```c
#define genl_register_family rpl_genl_register_family

static inline int rpl_genl_register_family(struct genl_family *family)
{
	family->module = THIS_MODULE;
	return rpl___genl_register_family(family); // register with netlink
}
```

3. The rpl___genl_register_family function

```c
int rpl___genl_register_family(struct rpl_genl_family *f)
{
	int err;

	f->compat_family.id = f->id;
	f->compat_family.hdrsize = f->hdrsize;
	strncpy(f->compat_family.name, f->name, GENL_NAMSIZ);
	f->compat_family.version = f->version;
	f->compat_family.maxattr = f->maxattr;
	f->compat_family.netnsok = f->netnsok;
#ifdef HAVE_PARALLEL_OPS
	f->compat_family.parallel_ops = f->parallel_ops;
#endif

	// Calls the kernel API. The message handling itself can be ignored here:
	// just treat ops as the netlink entry points.
	err = genl_register_family_with_ops(&f->compat_family,
					    (struct genl_ops *) f->ops, f->n_ops);
	if (err)
		goto error;

	if (f->mcgrps) {
		/* Need to Fix GROUP_ID() for more than one group. */
		BUG_ON(f->n_mcgrps > 1);
		// Calls the kernel API; so far only seen in use by ovs_notify in the dp code.
		err = genl_register_mc_group(&f->compat_family,
					     (struct genl_multicast_group *) f->mcgrps);
		if (err)
			goto error;
	}

error:
	return err;
}
```

The netlink operations are defined by dp_genl_families, which lists four families.

```c
static struct genl_family *dp_genl_families[] = {
	&dp_datapath_genl_family,
	&dp_vport_genl_family,
	&dp_flow_genl_family,
	&dp_packet_genl_family,
};
```

1. Datapath netlink definition:

```c
static struct genl_family dp_datapath_genl_family = {
	.id = GENL_ID_GENERATE,
	.hdrsize = sizeof(struct ovs_header),
	.name = OVS_DATAPATH_FAMILY,
	.version = OVS_DATAPATH_VERSION,
	.maxattr = OVS_DP_ATTR_MAX,
	.netnsok = true,
	.parallel_ops = true,
	.ops = dp_datapath_genl_ops,
	.n_ops = ARRAY_SIZE(dp_datapath_genl_ops),
	.mcgrps = &ovs_dp_datapath_multicast_group,
	.n_mcgrps = 1,
};
```
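Because `.id = GENL_ID_GENERATE`, the family has no fixed numeric ID; the kernel assigns one when the family is registered. User space therefore has to look the ID up by name (`OVS_DATAPATH_FAMILY`) through the nlctrl controller before it can send `OVS_DP_CMD_*` messages.

The sketch below walks the attributes of a controller reply and extracts `CTRL_ATTR_FAMILY_ID`, following the `NLA_ALIGN` padding rules. The helper name `parse_family_id` is hypothetical, and in the test the reply is assembled by hand rather than received from a socket.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <linux/genetlink.h>
#include <linux/netlink.h>

/*
 * Scan the attributes of a CTRL_CMD_NEWFAMILY reply and return the value
 * of CTRL_ATTR_FAMILY_ID, or -1 if it is missing or the message is malformed.
 * Hypothetical helper for illustration.
 */
int parse_family_id(const struct nlmsghdr *nlh)
{
	if (nlh->nlmsg_len < NLMSG_HDRLEN + GENL_HDRLEN)
		return -1;

	/* Attributes start right after the nlmsghdr and the genlmsghdr. */
	const char *attrs = (const char *)nlh + NLMSG_HDRLEN + GENL_HDRLEN;
	size_t remaining = nlh->nlmsg_len - NLMSG_HDRLEN - GENL_HDRLEN;

	while (remaining >= NLA_HDRLEN) {
		const struct nlattr *nla = (const struct nlattr *)attrs;

		if (nla->nla_len < NLA_HDRLEN || nla->nla_len > remaining)
			return -1;                 /* malformed attribute */
		if ((nla->nla_type & NLA_TYPE_MASK) == CTRL_ATTR_FAMILY_ID &&
		    nla->nla_len >= NLA_HDRLEN + sizeof(uint16_t)) {
			uint16_t id;
			memcpy(&id, attrs + NLA_HDRLEN, sizeof id);
			return id;
		}
		size_t step = NLA_ALIGN(nla->nla_len); /* skip payload + padding */
		if (step >= remaining)
			break;
		attrs += step;
		remaining -= step;
	}
	return -1;
}
```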

```c
static struct genl_ops dp_datapath_genl_ops[] = {
	{ .cmd = OVS_DP_CMD_NEW,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = datapath_policy,
	  .doit = ovs_dp_cmd_new /* create a datapath */
	},
	{ .cmd = OVS_DP_CMD_DEL,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = datapath_policy,
	  .doit = ovs_dp_cmd_del /* delete a datapath */
	},
	{ .cmd = OVS_DP_CMD_GET,
	  .flags = 0, /* OK for unprivileged users. */
	  .policy = datapath_policy,
	  .doit = ovs_dp_cmd_get,
	  .dumpit = ovs_dp_cmd_dump /* dump datapaths */
	},
	{ .cmd = OVS_DP_CMD_SET,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = datapath_policy,
	  .doit = ovs_dp_cmd_set, /* modify a datapath */
	},
};
```

2. Vport netlink definition:

```c
struct genl_family dp_vport_genl_family = {
	.id = GENL_ID_GENERATE,
	.hdrsize = sizeof(struct ovs_header),
	.name = OVS_VPORT_FAMILY,
	.version = OVS_VPORT_VERSION,
	.maxattr = OVS_VPORT_ATTR_MAX,
	.netnsok = true,
	.parallel_ops = true,
	.ops = dp_vport_genl_ops,
	.n_ops = ARRAY_SIZE(dp_vport_genl_ops),
	.mcgrps = &ovs_dp_vport_multicast_group,
	.n_mcgrps = 1,
};
```
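All the ops tables in this section follow one pattern: dp_datapath_genl_ops above, and dp_vport_genl_ops, dp_flow_genl_ops and dp_packet_genl_ops below. For each incoming request, the generic netlink core does the following:

1. It picks the entry whose `.cmd` matches the command in the `genlmsghdr`.
2. It rejects the request with `-EPERM` if the entry has `GENL_ADMIN_PERM` and the sender lacks `CAP_NET_ADMIN`.
3. It calls `.dumpit` for dump requests (`NLM_F_DUMP`) and `.doit` otherwise.

The user-space model below is a simplification. All names are hypothetical and the command numbers are illustrative. The real kernel path also handles attribute policies, locking, and network namespaces.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <linux/genetlink.h>
#include <linux/netlink.h>

/* Simplified model of one genl_ops entry (hypothetical type). */
struct model_op {
	uint8_t cmd;
	unsigned int flags;          /* e.g. GENL_ADMIN_PERM */
	int (*doit)(void);
	int (*dumpit)(void);
};

/* Model of the dispatch performed for one incoming request. */
int model_dispatch(const struct model_op *ops, size_t n_ops, uint8_t cmd,
		   uint16_t nlmsg_flags, bool has_net_admin)
{
	const struct model_op *op = NULL;

	for (size_t i = 0; i < n_ops; i++) {
		if (ops[i].cmd == cmd) {
			op = &ops[i];
			break;
		}
	}
	if (!op)
		return -EOPNOTSUPP;          /* no handler for this command */
	if ((op->flags & GENL_ADMIN_PERM) && !has_net_admin)
		return -EPERM;               /* caller lacks CAP_NET_ADMIN */
	if ((nlmsg_flags & NLM_F_DUMP) == NLM_F_DUMP)
		return op->dumpit ? op->dumpit() : -EOPNOTSUPP;
	return op->doit ? op->doit() : -EOPNOTSUPP;
}

/* Stub handlers returning tags, standing in for ovs_dp_cmd_new/get/dump. */
int stub_new(void)  { return 1; }
int stub_get(void)  { return 2; }
int stub_dump(void) { return 3; }

/* Command numbers are illustrative only. */
enum { MODEL_CMD_NEW = 1, MODEL_CMD_GET = 3 };

/* Shaped like the NEW and GET entries of dp_datapath_genl_ops. */
const struct model_op example_ops[] = {
	{ .cmd = MODEL_CMD_NEW, .flags = GENL_ADMIN_PERM, .doit = stub_new },
	{ .cmd = MODEL_CMD_GET, .flags = 0, .doit = stub_get, .dumpit = stub_dump },
};
```

This explains why the GET entries are the only ones marked "OK for unprivileged users" and the only ones with a `.dumpit`. Listing datapaths, vports or flows is a dump request, while NEW/DEL/SET change state and need `CAP_NET_ADMIN`.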

```c
static struct genl_ops dp_vport_genl_ops[] = {
	{ .cmd = OVS_VPORT_CMD_NEW,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = vport_policy,
	  .doit = ovs_vport_cmd_new /* create a vport */
	},
	{ .cmd = OVS_VPORT_CMD_DEL,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = vport_policy,
	  .doit = ovs_vport_cmd_del /* delete a vport */
	},
	{ .cmd = OVS_VPORT_CMD_GET,
	  .flags = 0, /* OK for unprivileged users. */
	  .policy = vport_policy,
	  .doit = ovs_vport_cmd_get,
	  .dumpit = ovs_vport_cmd_dump /* dump vports */
	},
	{ .cmd = OVS_VPORT_CMD_SET,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = vport_policy,
	  .doit = ovs_vport_cmd_set, /* modify a vport */
	},
};
```

3. Exact-match flow table netlink definition:

```c
static struct genl_family dp_flow_genl_family = {
	.id = GENL_ID_GENERATE,
	.hdrsize = sizeof(struct ovs_header),
	.name = OVS_FLOW_FAMILY,
	.version = OVS_FLOW_VERSION,
	.maxattr = OVS_FLOW_ATTR_MAX,
	.netnsok = true,
	.parallel_ops = true,
	.ops = dp_flow_genl_ops, /* entry points for datapath flow table updates */
	.n_ops = ARRAY_SIZE(dp_flow_genl_ops),
	.mcgrps = &ovs_dp_flow_multicast_group,
	.n_mcgrps = 1,
};
```

```c
static struct genl_ops dp_flow_genl_ops[] = {
	{ .cmd = OVS_FLOW_CMD_NEW,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = flow_policy,
	  .doit = ovs_flow_cmd_new /* add an exact-match flow */
	},
	{ .cmd = OVS_FLOW_CMD_DEL,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = flow_policy,
	  .doit = ovs_flow_cmd_del /* delete an exact-match flow */
	},
	{ .cmd = OVS_FLOW_CMD_GET,
	  .flags = 0, /* OK for unprivileged users. */
	  .policy = flow_policy,
	  .doit = ovs_flow_cmd_get,
	  .dumpit = ovs_flow_cmd_dump /* dump exact-match flows */
	},
	{ .cmd = OVS_FLOW_CMD_SET,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = flow_policy,
	  .doit = ovs_flow_cmd_set, /* modify an exact-match flow */
	},
};
```

4. Packet netlink definition:

```c
static struct genl_family dp_packet_genl_family = {
	.id = GENL_ID_GENERATE,
	.hdrsize = sizeof(struct ovs_header),
	.name = OVS_PACKET_FAMILY,
	.version = OVS_PACKET_VERSION,
	.maxattr = OVS_PACKET_ATTR_MAX,
	.netnsok = true,
	.parallel_ops = true,
	.ops = dp_packet_genl_ops,
	.n_ops = ARRAY_SIZE(dp_packet_genl_ops),
};
```
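All four families set `.hdrsize = sizeof(struct ovs_header)`. Every OVS message therefore carries a small fixed header after the `genlmsghdr` and before the attributes. In `linux/openvswitch.h` that header holds `dp_ifindex`, the ifindex of the datapath's local port.

The packet family's `OVS_PACKET_CMD_EXECUTE` (handled by `ovs_packet_cmd_execute` below) is how user space hands a packet back to the datapath together with the actions to apply. The sketch below lays out a skeleton of such a request. It assumes the UAPI header `<linux/openvswitch.h>` is installed, and the helpers `put_attr` and `build_execute` are hypothetical. It is only a layout illustration: `family_id` would come from the nlctrl lookup, and the `OVS_PACKET_ATTR_KEY` attribute that a real request carries is left out.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <linux/genetlink.h>
#include <linux/netlink.h>
#include <linux/openvswitch.h>

/* Append one attribute at offset *off and advance *off past its padding. */
struct nlattr *put_attr(char *buf, size_t *off, uint16_t type,
			const void *data, size_t len)
{
	struct nlattr *nla = (struct nlattr *)(buf + *off);

	nla->nla_type = type;
	nla->nla_len = NLA_HDRLEN + len;
	if (len)
		memcpy(buf + *off + NLA_HDRLEN, data, len);
	*off += NLA_ALIGN(nla->nla_len);
	return nla;
}

/*
 * Skeleton OVS_PACKET_CMD_EXECUTE: nlmsghdr, genlmsghdr, struct ovs_header,
 * then PACKET and a nested ACTIONS list holding one OUTPUT action.
 * buf must be zeroed, 4-byte aligned and large enough (not checked here).
 */
size_t build_execute(char *buf, uint16_t family_id, int dp_ifindex,
		     const void *frame, size_t frame_len, uint32_t out_port)
{
	struct nlmsghdr *nlh = (struct nlmsghdr *)buf;
	struct genlmsghdr *g = (struct genlmsghdr *)(buf + NLMSG_HDRLEN);
	struct ovs_header *oh = (struct ovs_header *)(buf + NLMSG_HDRLEN + GENL_HDRLEN);
	size_t off = NLMSG_HDRLEN + GENL_HDRLEN + NLMSG_ALIGN(sizeof *oh);

	nlh->nlmsg_type = family_id;
	nlh->nlmsg_flags = NLM_F_REQUEST;
	g->cmd = OVS_PACKET_CMD_EXECUTE;
	g->version = OVS_PACKET_VERSION;
	oh->dp_ifindex = dp_ifindex;     /* the family's hdrsize header */

	put_attr(buf, &off, OVS_PACKET_ATTR_PACKET, frame, frame_len);

	/* Nested attribute: open it, append children, then fix up its length. */
	size_t nest_off = off;
	struct nlattr *actions = put_attr(buf, &off, OVS_PACKET_ATTR_ACTIONS, NULL, 0);
	put_attr(buf, &off, OVS_ACTION_ATTR_OUTPUT, &out_port, sizeof out_port);
	actions->nla_len = off - nest_off;

	nlh->nlmsg_len = off;
	return off;
}
```

The layout for a 14-byte frame is as follows:

| Part | Bytes |
|---|---|
| `nlmsghdr` | 16 |
| `genlmsghdr` | 4 |
| `ovs_header` | 4 |
| PACKET attribute (4 + 14, padded) | 20 |
| ACTIONS nest (4 + one 8-byte OUTPUT) | 12 |
| Total | 56 |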

```c
static struct genl_ops dp_packet_genl_ops[] = {
	{ .cmd = OVS_PACKET_CMD_EXECUTE,
	  .flags = GENL_ADMIN_PERM, /* Requires CAP_NET_ADMIN privilege. */
	  .policy = packet_policy,
	  .doit = ovs_packet_cmd_execute /* execute actions on a packet */
	}
};
```

Upcall threads and netlink

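Suppose there are two upcall handler threads and four vports. Each vport then holds information about two Netlink connections, which are used to send upcall messages up to user space. Each Netlink connection is taken over by its corresponding upcall handler thread. Within one thread, the Netlink connections of the different vports are managed together with epoll.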
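With the numbers from the paragraph above, the arrangement can be modeled as a 4 x 2 table of channels, one per (vport, handler) pair:

- Handler `h` owns column `h`, so it listens on one channel from every vport.
- For each upcall, the kernel chooses one of the vport's channels.

On the kernel side, `ovs_vport_find_upcall_portid()` in vport.c makes that choice from the packet's skb hash. The sketch approximates it with a plain modulo. All names here (`channel_id`, `pick_channel`, `owner_handler`) are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define N_VPORTS   4   /* four vports, as in the text */
#define N_HANDLERS 2   /* two upcall handler threads */

/* channel_id[v][h]: hypothetical ID of the netlink socket vport v uses
 * for handler h (each one is a separate connection). */
static uint32_t channel_id[N_VPORTS][N_HANDLERS];

void init_channels(void)
{
	uint32_t next = 1;

	for (int v = 0; v < N_VPORTS; v++)
		for (int h = 0; h < N_HANDLERS; h++)
			channel_id[v][h] = next++;
}

/* Kernel side (approximation): pick one of the vport's channels by hash. */
uint32_t pick_channel(int vport, uint32_t pkt_hash)
{
	assert(vport >= 0 && vport < N_VPORTS);
	return channel_id[vport][pkt_hash % N_HANDLERS];
}

/* User side: which handler thread owns (epolls on) a given channel. */
int owner_handler(uint32_t chan)
{
	for (int v = 0; v < N_VPORTS; v++)
		for (int h = 0; h < N_HANDLERS; h++)
			if (channel_id[v][h] == chan)
				return h;
	return -1;
}
```

Each handler ends up owning exactly `N_VPORTS` channels, one per vport. That is why a single epoll set per thread is enough.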

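1: Each vport has several Netlink connections attached, as many as there are upcall handler threads.

2: The thread's routine function is udpif_upcall_handler. In pseudocode:

```
routine {
    while (the thread's exit flag is not set) {
        if (some upcall messages were received via epoll, process them) {
            continue;
        } else {
            register a poll: wake this thread when epoll_fd has activity
        }
        block the current thread
    }
}
```

3: Each Netlink connection is called an upcall channel.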
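Inside one handler thread, the channels of all vports are registered in a single epoll instance, so one wait call reports whichever channels have pending upcalls. The blocking part of the loop then only needs to wait on the epoll fd itself.

The sketch below shows the non-blocking "receive upcalls via epoll" step of the pseudocode. Pipes stand in for the netlink sockets, an assumption made so it runs without the OVS kernel module. The helpers `add_channel` and `drain_ready` are hypothetical, and a real handler would parse each message instead of just counting it.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/epoll.h>
#include <unistd.h>

#define MAX_EVENTS 16

/* Register a channel fd in the handler's epoll set. */
int add_channel(int epfd, int fd)
{
	struct epoll_event ev = { .events = EPOLLIN, .data.fd = fd };

	return epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev);
}

/* One non-blocking pass: read one message from each ready channel.
 * Returns the number of messages read, or -1 on error. */
int drain_ready(int epfd)
{
	struct epoll_event events[MAX_EVENTS];
	int n = epoll_wait(epfd, events, MAX_EVENTS, 0); /* timeout 0: don't block */
	int handled = 0;

	if (n < 0)
		return -1;
	for (int i = 0; i < n; i++) {
		char msg[256];
		ssize_t len = read(events[i].data.fd, msg, sizeof msg);

		if (len > 0)
			handled++;   /* a real handler would parse the upcall here */
	}
	return handled;
}
```

When `drain_ready` returns 0, the loop falls through to the "register a poll and block" branch.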
