Spark - Configuration Parameters Explained

2017-11-04 17:25


Spark Configuration (official documentation)

Spark Configuration (Chinese documentation)

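System configuration:

Spark properties: control most application parameters. They can be set through a SparkConf object or through Java system properties.

Environment variables: per-machine settings such as the IP address and ports. They can be set through the conf/spark-env.sh script on each node.

Logging: configured through log4j.properties.

1. Spark Properties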


These properties can be set directly on a SparkConf passed to your SparkContext. SparkConf lets you configure some of the common properties (e.g. master URL and application name), as well as arbitrary key-value pairs through the set() method. For example, we could initialize an application with two threads as follows:

    import org.apache.spark.{SparkConf, SparkContext}

    // local[2] runs Spark locally with two worker threads
    val conf = new SparkConf()
      .setMaster("local[2]")
      .setAppName("CountingSheep")
    val sc = new SparkContext(conf)

Note that we run with local[2], meaning two threads. This is "minimal" parallelism, which can help detect bugs that only exist when we run in a distributed context.

bin/spark-submit will also read configuration options from conf/spark-defaults.conf, in which each line consists of a key and a value separated by whitespace. For example:

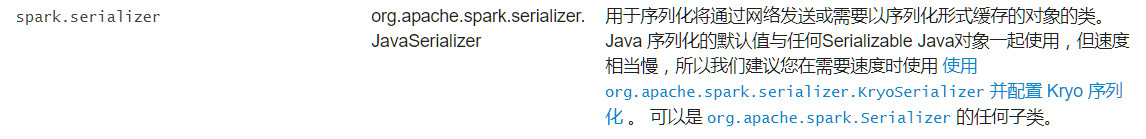

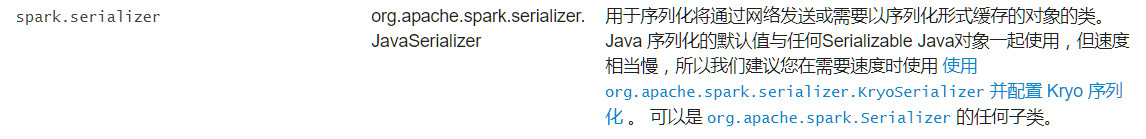

    spark.master            spark://5.6.7.8:7077
    spark.executor.memory   4g
    spark.eventLog.enabled  true
    spark.serializer        org.apache.spark.serializer.KryoSerializer
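The same four properties could also be supplied in code through set(), as described earlier in this section. The following is only a minimal sketch, assuming spark-core is on the classpath; the object name and application name `ConfSketch` are hypothetical and not from the original post.

```scala
import org.apache.spark.SparkConf

object ConfSketch {
  // loadDefaults = false: do not pick up spark.* Java system properties,
  // so the result depends only on the values set below.
  val conf: SparkConf = new SparkConf(false)
    .setMaster("spark://5.6.7.8:7077")   // stored under the key spark.master
    .setAppName("ConfSketch")            // hypothetical application name
    .set("spark.executor.memory", "4g")
    .set("spark.eventLog.enabled", "true")
    .set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

  def main(args: Array[String]): Unit =
    // getAll returns every configured (key, value) pair
    conf.getAll.sortBy(_._1).foreach { case (k, v) => println(s"$k=$v") }
}
```

Values hard-coded this way cannot be changed at submit time without recompiling, which is one reason the master URL is often left to spark-submit or spark-defaults.conf instead.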

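Precedence (highest first):

SparkConf > CLI > spark-defaults.conf

Properties set directly on the SparkConf take precedence over flags passed to spark-submit or spark-shell, which in turn take precedence over the options in spark-defaults.conf.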
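The precedence rule can be modelled as a merge of three key-value maps in which later sources overwrite earlier ones. This is an illustrative sketch in plain Scala, not Spark's actual implementation; `PrecedenceSketch`, `resolve`, and the sample values are hypothetical.

```scala
object PrecedenceSketch {
  type Props = Map[String, String]

  // With ++, the right-hand map wins on duplicate keys, so the sources
  // are combined from lowest to highest precedence.
  def resolve(defaultsFile: Props, cliFlags: Props, sparkConf: Props): Props =
    defaultsFile ++ cliFlags ++ sparkConf

  def main(args: Array[String]): Unit = {
    val defaultsFile = Map(
      "spark.master"          -> "spark://master:7077",
      "spark.executor.memory" -> "4g"
    )
    val cliFlags  = Map("spark.executor.memory" -> "2g")
    val sparkConf = Map("spark.master" -> "local[2]")

    val effective = resolve(defaultsFile, cliFlags, sparkConf)
    println(effective("spark.master"))          // prints local[2]
    println(effective("spark.executor.memory")) // prints 2g
  }
}
```

Here the master URL comes from the SparkConf, and the executor memory comes from the command line because the SparkConf does not set it.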

    cat spark-env.sh
    JAVA_HOME=/data/jdk1.8.0_111
    SCALA_HOME=/data/scala-2.11.8
    SPARK_MASTER_IP=192.168.1.10
    HADOOP_CONF_DIR=/data/hadoop-2.6.5/etc/hadoop
    SPARK_LOCAL_DIRS=/data/spark-1.6.3-bin-hadoop2.6/spark_data
    SPARK_WORKER_DIR=/data/spark-1.6.3-bin-hadoop2.6/spark_data/spark_works

    cat slaves
    master
    slave1
    slave2

    cat spark-defaults.conf
    spark.master      spark://master:7077
    spark.serializer  org.apache.spark.serializer.KryoSerializer
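To make the "key and value separated by whitespace" layout of spark-defaults.conf concrete, here is a simplified parser sketch in plain Scala. It does not reproduce Spark's own loader; `DefaultsFileSketch` and `parse` are hypothetical names, and treating lines that start with `#` as comments is an assumption of this sketch.

```scala
object DefaultsFileSketch {
  // Splits each non-empty, non-comment line at the first run of whitespace.
  // Lines that contain only a key are skipped.
  def parse(text: String): Map[String, String] =
    text.split("\n")
      .map(_.trim)
      .filter(line => line.nonEmpty && !line.startsWith("#"))
      .map(_.split("\\s+", 2))
      .collect { case Array(key, value) => key -> value.trim }
      .toMap

  def main(args: Array[String]): Unit = {
    val sample =
      """# sample file contents
        |spark.master      spark://master:7077
        |spark.serializer  org.apache.spark.serializer.KryoSerializer
        |""".stripMargin

    parse(sample).toSeq.sortBy(_._1).foreach { case (k, v) => println(s"$k -> $v") }
  }
}
```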
