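1 Implementing Autoencoders in TensorFlow - 1.6 Implementing a Single-Hidden-Layer Autoencoder in TensorFlow: Launching a Session and Training the Model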

2017-10-31 10:59


```python
# 1.6 Implementing a single-hidden-layer autoencoder in TensorFlow: launching a session and training the model
# Note: this code targets the TensorFlow 1.x API (placeholders, sessions, tensorflow.examples.tutorials)
import os

# Must be set before TensorFlow is imported to hide its C++ INFO and WARNING logs
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
from tensorflow.examples.tutorials.mnist import input_data

# Parameters that control training
learning_rate = 0.01
training_epochs = 20
batch_size = 256
display_step = 1
examples_to_show = 10

# Network parameters
n_hidden_units = 256  # number of hidden units (the encoder and decoder share one hidden layer of this size)
n_input_units = 784   # number of input units: MNIST images are 28*28 pixels
n_output_units = n_input_units  # the decoder's output layer must have as many units as the input


# Return a named weight Variable, initialized for the given numbers of input and output units
def WeightsVariable(n_in, n_out, name_str):
    return tf.Variable(tf.random_normal([n_in, n_out]), dtype=tf.float32, name=name_str)


# Return a named bias Variable, initialized for the given number of output units
def BiasesVariable(n_out, name_str):
    return tf.Variable(tf.random_normal([n_out]), dtype=tf.float32, name=name_str)


# Build the encoder
def Encoder(x_origin, activate_func=tf.nn.sigmoid):
    # Encoder hidden layer: 784 -> 256
    with tf.name_scope('Layer'):
        weights = WeightsVariable(n_input_units, n_hidden_units, 'weights')
        biases = BiasesVariable(n_hidden_units, 'biases')
        x_code = activate_func(tf.add(tf.matmul(x_origin, weights), biases))
    return x_code


# Build the decoder
def Decoder(x_code, activate_func):
    # Decoder output layer: 256 -> 784
    with tf.name_scope('Layer'):
        weights = WeightsVariable(n_hidden_units, n_output_units, 'weights')
        biases = BiasesVariable(n_output_units, 'biases')
        x_decode = activate_func(tf.add(tf.matmul(x_code, weights), biases))
    return x_decode
```
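To make the shapes concrete, here is a minimal NumPy sketch (not TensorFlow) of the forward pass that `Encoder` and `Decoder` build, plus the mean-squared-error loss that the graph below computes. The helper names `sigmoid`, `forward` and `mse`, and the random stand-in input, are illustrative assumptions rather than part of the original code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, W_enc, b_enc, W_dec, b_dec):
    """Single-hidden-layer autoencoder forward pass: 784 -> 256 -> 784."""
    code = sigmoid(x @ W_enc + b_enc)       # like Encoder(): (batch, 784) @ (784, 256)
    recon = sigmoid(code @ W_dec + b_dec)   # like Decoder(): (batch, 256) @ (256, 784)
    return code, recon


def mse(x, recon):
    # Same quantity as tf.reduce_mean(tf.pow(X_Origin - X_decode, 2))
    return np.mean((x - recon) ** 2)


rng = np.random.default_rng(0)
n_input_units, n_hidden_units = 784, 256
# Standard-normal initialization, mirroring tf.random_normal in WeightsVariable/BiasesVariable
W_enc = rng.standard_normal((n_input_units, n_hidden_units))
b_enc = rng.standard_normal(n_hidden_units)
W_dec = rng.standard_normal((n_hidden_units, n_input_units))
b_dec = rng.standard_normal(n_input_units)

x = rng.random((4, n_input_units))  # 4 stand-in "images" with pixel values in [0, 1)
code, recon = forward(x, W_enc, b_enc, W_dec, b_dec)
print(code.shape, recon.shape, mse(x, recon))
```

Because both the input pixels and the sigmoid outputs lie in [0, 1], this loss is always between 0 and 1.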

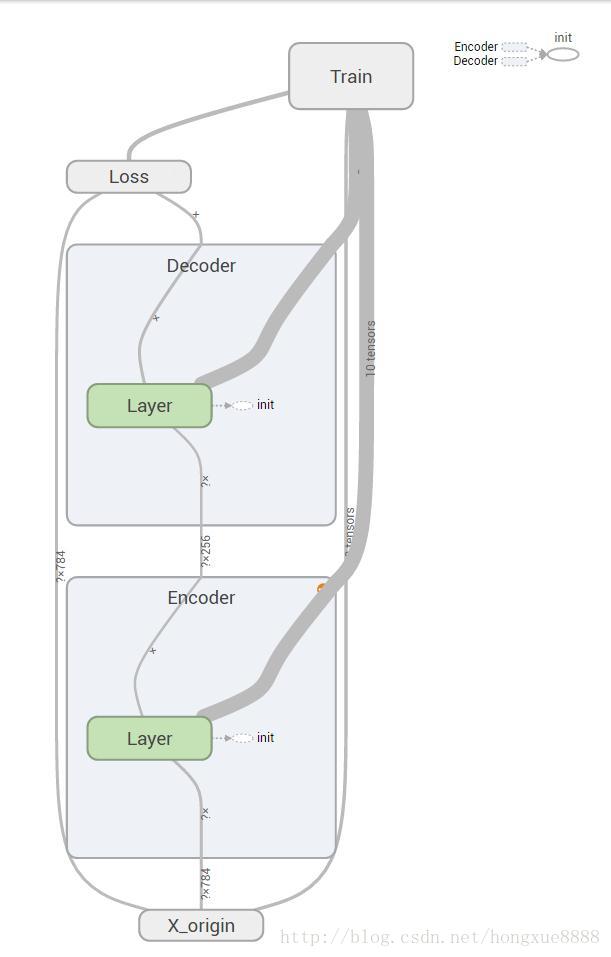

```python
# Use the functions above to build the computation graph
with tf.Graph().as_default():
    # Graph input
    with tf.name_scope('X_origin'):
        X_Origin = tf.placeholder(tf.float32, [None, n_input_units])

    # Build the encoder
    with tf.name_scope('Encoder'):
        X_code = Encoder(X_Origin, activate_func=tf.nn.sigmoid)

    # Build the decoder
    with tf.name_scope('Decoder'):
        X_decode = Decoder(X_code, activate_func=tf.nn.sigmoid)

    # Loss node: mean squared error between the reconstruction and the original input
    with tf.name_scope('Loss'):
        Loss = tf.reduce_mean(tf.pow(X_Origin - X_decode, 2))

    # Optimizer and training node
    with tf.name_scope('Train'):
        Optimizer = tf.train.RMSPropOptimizer(learning_rate)
        Train = Optimizer.minimize(Loss)

    # Node that initializes all variables
    Init = tf.global_variables_initializer()

    print('Writing the computation graph to an event file; view it in TensorBoard')
    summary_writer = tf.summary.FileWriter(logdir='logs', graph=tf.get_default_graph())
    summary_writer.flush()

    # Load the dataset
    mnist = input_data.read_data_sets('MNIST_data/', one_hot=True)

    with tf.Session() as sess:
        sess.run(Init)
        total_batch = int(mnist.train.num_examples / batch_size)

        # Train for the given number of epochs; each epoch consists of several batches
        for epoch in range(training_epochs):
            # Every epoch runs through all of the batches
            for i in range(total_batch):
                batch_xs, _ = mnist.train.next_batch(batch_size)  # labels are not needed
                # Run the Train node (backprop) and the Loss node (to fetch the loss value)
                _, loss = sess.run([Train, Loss], feed_dict={X_Origin: batch_xs})

            # After each epoch, print the loss of the last batch
            if epoch % display_step == 0:
                print("Epoch:", '%04d' % (epoch + 1), "Loss=", "{:.9f}".format(loss))

        # Close the summary writer
        summary_writer.close()
        print("Training finished")
```
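The `Train` node wraps `tf.train.RMSPropOptimizer(learning_rate)`. As a rough illustration of what each step does, the sketch below applies the textbook RMSProp rule with momentum 0 (TensorFlow 1.x documents the defaults `decay=0.9`, `momentum=0.0`, `epsilon=1e-10`) to a toy quadratic instead of the autoencoder. The function `rmsprop_step` and the toy objective are hypothetical, and the accumulator here starts at zero, which may differ from how TensorFlow initializes its own slot variable.

```python
import numpy as np


def rmsprop_step(w, grad, mean_square, lr=0.01, decay=0.9, eps=1e-10):
    """One RMSProp update with momentum 0; returns new weights and accumulator."""
    # Running average of squared gradients
    mean_square = decay * mean_square + (1.0 - decay) * grad ** 2
    # Scale each coordinate's step by the root of its running average
    w = w - lr * grad / np.sqrt(mean_square + eps)
    return w, mean_square


# Hypothetical toy objective: mean squared distance to a fixed target vector
target = np.array([0.5, -1.0, 2.0])
w = np.zeros(3)
ms = np.zeros_like(w)
losses = []
for step in range(1000):
    grad = 2.0 * (w - target) / w.size  # gradient of np.mean((w - target) ** 2)
    w, ms = rmsprop_step(w, grad, ms, lr=0.01)
    losses.append(np.mean((w - target) ** 2))
print(losses[0], losses[-1])
```

Dividing by the running root-mean-square makes each step roughly `lr` in size regardless of the raw gradient scale, which is why the learning rate of 0.01 behaves reasonably here.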

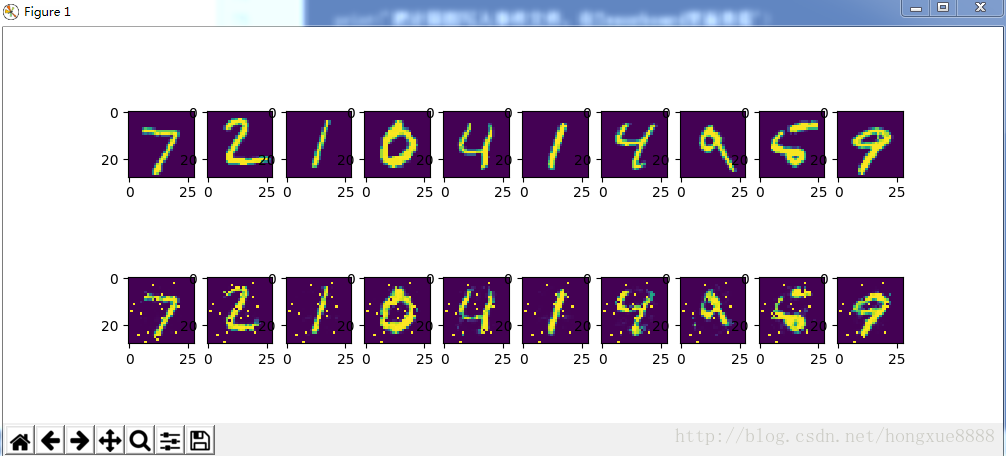

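```python
        # (Continues inside the `with tf.Session() as sess:` block above)
        # Apply the trained encoder-decoder model to the test set and get the reconstructed samples
        reconstructions = sess.run(X_decode, feed_dict={X_Origin: mnist.test.images[:examples_to_show]})

        # Compare the original images (top row) with the reconstructions (bottom row)
        f, a = plt.subplots(2, examples_to_show, figsize=(examples_to_show, 2))
        for i in range(examples_to_show):
            a[0][i].imshow(np.reshape(mnist.test.images[i], (28, 28)))
            a[1][i].imshow(np.reshape(reconstructions[i], (28, 28)))
        f.show()
        plt.draw()
        plt.waitforbuttonpress()
```

Output: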

```
Writing the computation graph to an event file; view it in TensorBoard
Extracting MNIST_data/train-images-idx3-ubyte.gz
Extracting MNIST_data/train-labels-idx1-ubyte.gz
Extracting MNIST_data/t10k-images-idx3-ubyte.gz
Extracting MNIST_data/t10k-labels-idx1-ubyte.gz
Epoch: 0001 Loss= 0.139829680
Epoch: 0002 Loss= 0.080248259
Epoch: 0003 Loss= 0.068188742
Epoch: 0004 Loss= 0.058322784
Epoch: 0005 Loss= 0.052633412
Epoch: 0006 Loss= 0.050207470
Epoch: 0007 Loss= 0.046964142
Epoch: 0008 Loss= 0.045169286
Epoch: 0009 Loss= 0.044805367
Epoch: 0010 Loss= 0.041068591
Epoch: 0011 Loss= 0.041089140
Epoch: 0012 Loss= 0.038747020
Epoch: 0013 Loss= 0.039305769
Epoch: 0014 Loss= 0.038323842
Epoch: 0015 Loss= 0.037289225
Epoch: 0016 Loss= 0.036899529
Epoch: 0017 Loss= 0.036632173
Epoch: 0018 Loss= 0.035721958
Epoch: 0019 Loss= 0.036257636
Epoch: 0020 Loss= 0.035449140
Training finished
```
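Two details of this log are easy to miss. First, `total_batch = int(mnist.train.num_examples / batch_size)` truncates: assuming the 55,000-image training split that `input_data.read_data_sets` returns with its default 5,000-image validation hold-out, each epoch runs 214 batches and draws 54,784 examples. Second, the value printed per epoch is the loss of the last mini-batch only, which is why it occasionally rises (for example from epoch 10 to epoch 11). A per-epoch mean gives a smoother signal. The helpers below are a hypothetical sketch of both computations, not part of the original code.

```python
def batches_per_epoch(num_examples, batch_size):
    """Number of full batches; equals int(num_examples / batch_size) for positive ints."""
    return num_examples // batch_size


def epoch_mean_loss(batch_losses):
    """Mean of the per-batch losses collected during one epoch."""
    if not batch_losses:
        raise ValueError("no batch losses recorded")
    return sum(batch_losses) / len(batch_losses)


total_batch = batches_per_epoch(55000, 256)
print(total_batch)                    # 214
print(total_batch * 256)              # 54784 examples drawn per epoch
print(epoch_mean_loss([0.5, 0.25]))   # 0.375 (illustrative values)
```

To use the mean in the training loop, one would append each `loss` returned by `sess.run` to a list inside the inner `for` loop and print `epoch_mean_loss(...)` of that list at the end of the epoch instead of the last value.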
