Android Camera2 + OpenGL ES 2.0 Real-Time Filters (Cool/Warm Tone, Magnifier, Blur, Beauty)

2017-10-27 12:08


demo:

http://download.csdn.net/download/keen_zuxwang/10041423

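1. Create the vertex position and texture coordinate arrays.

2. Create, compile and link the shader program, then get handles to the shader variables (for example, the handle of a sampler2D texture sampler).

3. Pass parameters to the shaders through the program object: vertex positions, texture coordinates, the active/bound textures, model/view/projection matrices, and so on. Then draw the primitives with glDrawArrays()/glDrawElements(). The fragment shader computes each pixel's value from these inputs, and the result is rendered to the corresponding ANativeWindow through the underlying EGL.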
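Step 1 mostly means copying the Java arrays into direct, native-order NIO buffers that the GL calls can read. The sketch below uses only java.nio (no GL calls). It uses the same quad and index order as setupVertexBuffer() in the Activity code. The class name QuadBuffers is hypothetical:

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;
import java.nio.ShortBuffer;

// Hypothetical helper: builds the direct, native-order buffers that
// glVertexAttribPointer()/glDrawElements() read from.
final class QuadBuffers {
    // Full-screen quad in normalized device coordinates (x, y per vertex).
    static final float[] SQUARE_COORDS = {
        -1.0f,  1.0f,   // top left
        -1.0f, -1.0f,   // bottom left
         1.0f, -1.0f,   // bottom right
         1.0f,  1.0f    // top right
    };
    // Two triangles: (0, 1, 2) and (0, 2, 3).
    static final short[] DRAW_ORDER = {0, 1, 2, 0, 2, 3};

    static FloatBuffer toFloatBuffer(float[] data) {
        // 4 bytes per float, direct memory, platform byte order.
        FloatBuffer fb = ByteBuffer.allocateDirect(data.length * 4)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        fb.put(data);
        fb.position(0); // rewind so reading starts at the first element
        return fb;
    }

    static ShortBuffer toShortBuffer(short[] data) {
        // 2 bytes per short index.
        ShortBuffer sb = ByteBuffer.allocateDirect(data.length * 2)
                .order(ByteOrder.nativeOrder())
                .asShortBuffer();
        sb.put(data);
        sb.position(0);
        return sb;
    }
}
```

Android's GLES20.glVertexAttribPointer() expects a direct buffer in native byte order. That is why the Activity code also uses ByteBuffer.allocateDirect() and ByteOrder.nativeOrder() instead of wrapping a heap array.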

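Displaying the Camera2 camera image with OpenGL ES:

1. Generate a texture

GLES20.glGenTextures(1, textures, 0); // generate a texture ID

GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textures[0]); // bind the ID to the external (OES) texture target

2. Create a SurfaceTexture

SurfaceTexture videoTexture = new SurfaceTexture(textures[0]); // create a SurfaceTexture backed by that texture ID

3. Create a Surface

Surface surface0 = new Surface(videoTexture); // create a Surface from the SurfaceTexture

4. Add that Surface as a Camera2 preview output, so camera frames are delivered into it (camera image -> Surface)

mPreviewBuilder = camera.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW); // create a Camera2 capture request from the preview template

// Add the preview output Surfaces (camera image -> Surface): surface belongs to the on-screen TextureView, surface0 to videoTexture

mPreviewBuilder.addTarget(surface);

mPreviewBuilder.addTarget(surface0);

camera.createCaptureSession(Arrays.asList(surface, surface0), mSessionStateCallback, null); // create the capture session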

So the complete camera rendering pipeline is:

camera image -> Surface -> videoTexture / videoTexture.updateTexImage() -> GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textures[0]) -> GLES20.glDrawElements()
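updateTexImage() must run on the GL thread, but onFrameAvailable() is delivered on another thread. The Activity code bridges the two with a synchronized frameAvailable flag. Below is a minimal plain-Java model of that handshake. FrameGate is a hypothetical name, and the Runnable stands in for videoTexture.updateTexImage() plus getTransformMatrix():

```java
// Hypothetical model of the frameAvailable handshake between
// onFrameAvailable() (producer thread) and onDrawFrame() (GL thread).
final class FrameGate {
    private boolean frameAvailable = false;

    // Called from SurfaceTexture.OnFrameAvailableListener.onFrameAvailable().
    synchronized void onFrameAvailable() {
        frameAvailable = true;
    }

    // Called at the start of onDrawFrame(). Runs the latch (standing in for
    // videoTexture.updateTexImage()) only if a new frame has arrived, then
    // clears the flag. Returns true if a frame was latched.
    synchronized boolean latchIfAvailable(Runnable latch) {
        if (!frameAvailable) {
            return false;
        }
        latch.run();
        frameAvailable = false;
        return true;
    }
}
```

The flag is a boolean rather than a counter, so several frames that arrive between two draws are latched only once. That is acceptable here: updateTexImage() always moves to the most recent frame, so intermediate frames are simply dropped.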

1. Vertex shader

2. Fragment shader

3. Shader utility class

4. GLViewMediaActivity: the class that drives the shaders and implements the GLSurfaceView.Renderer interface


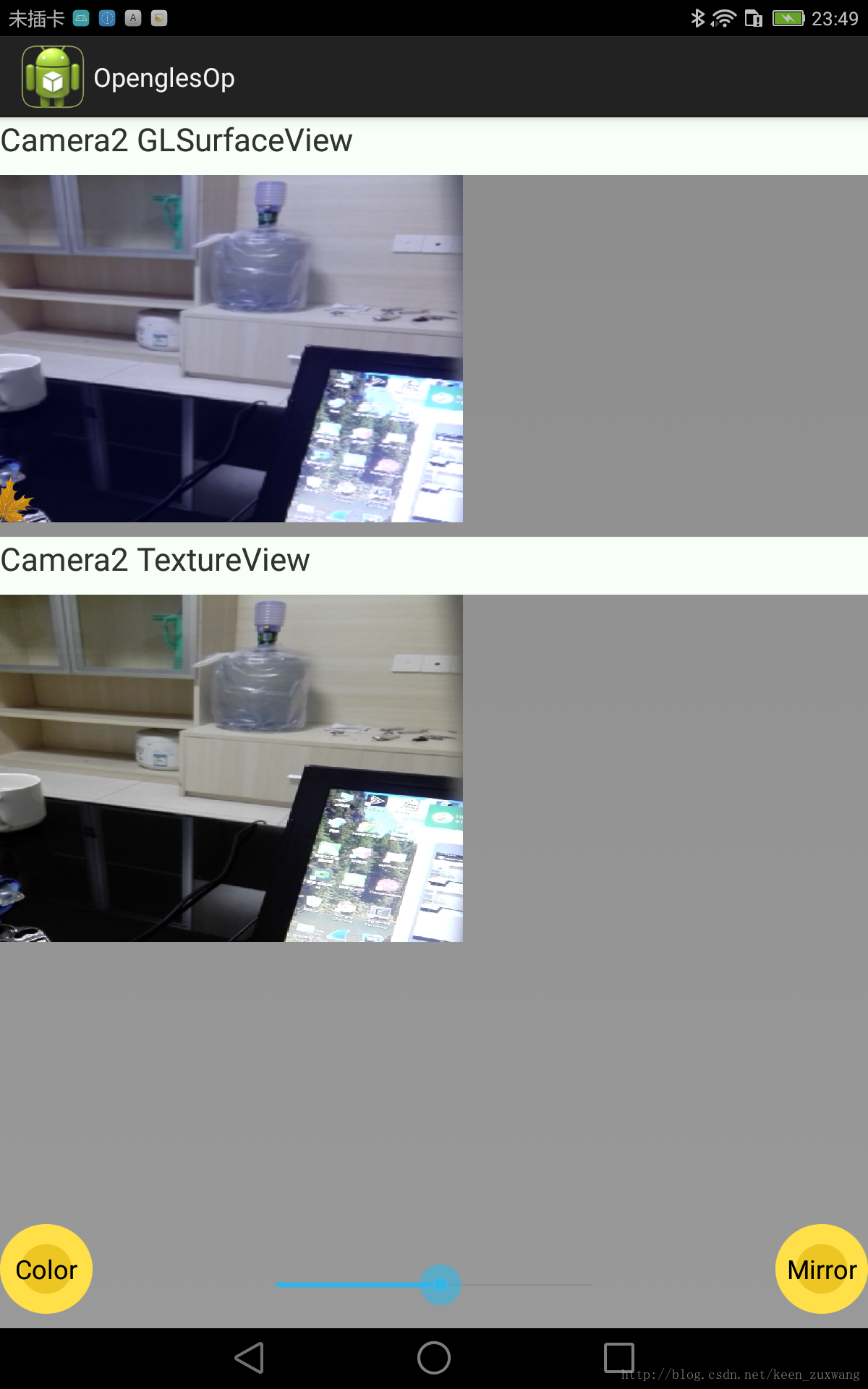

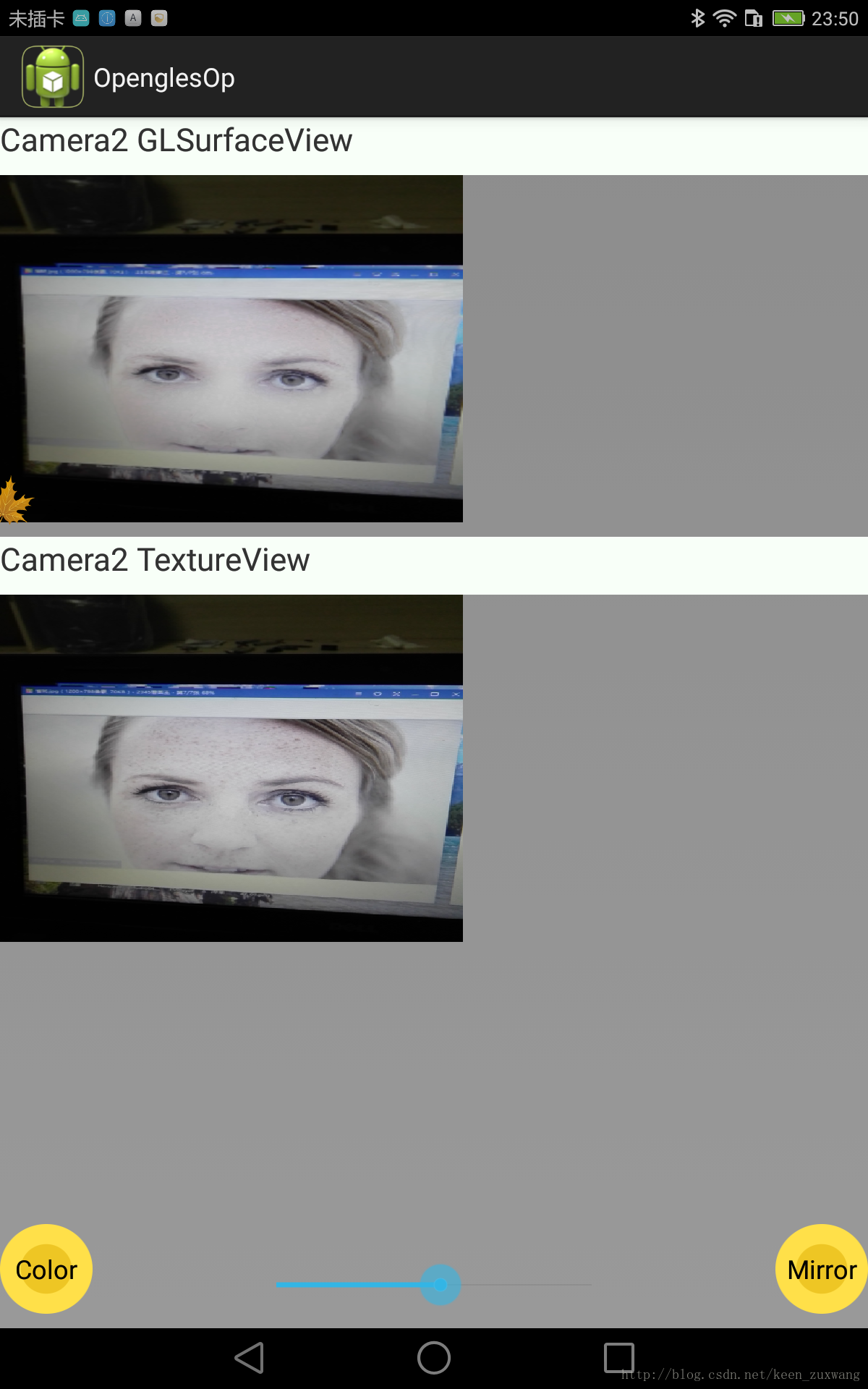

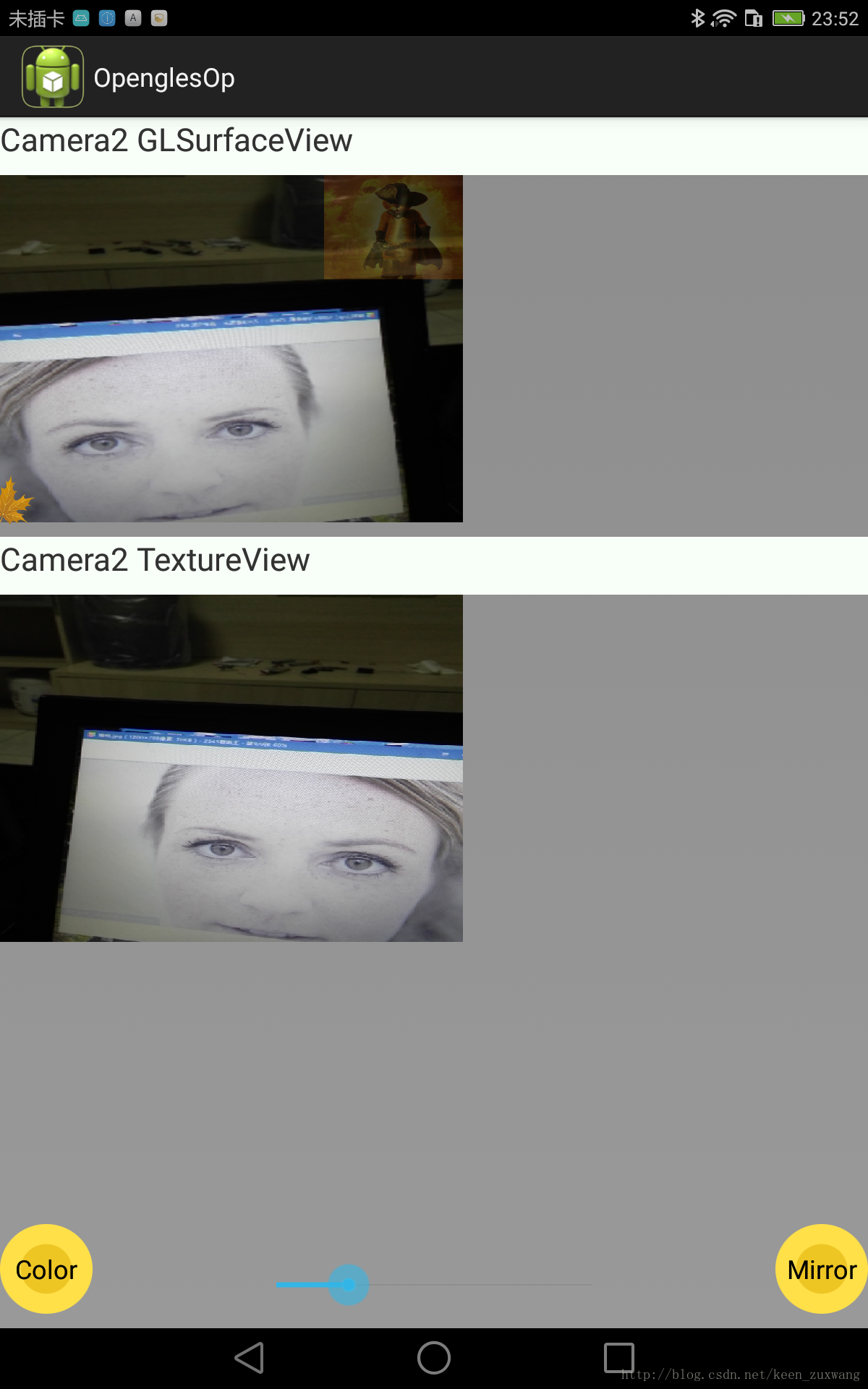

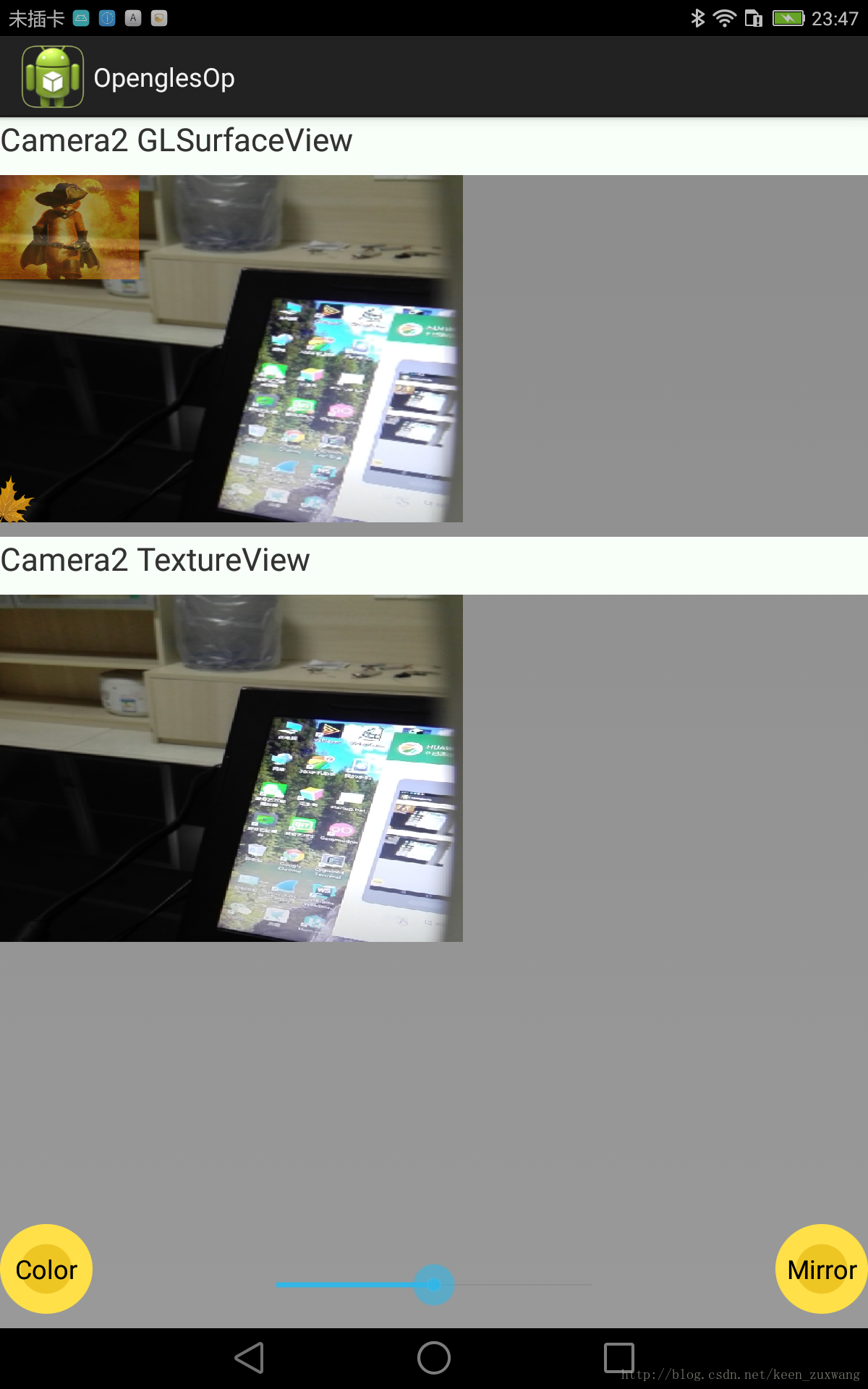

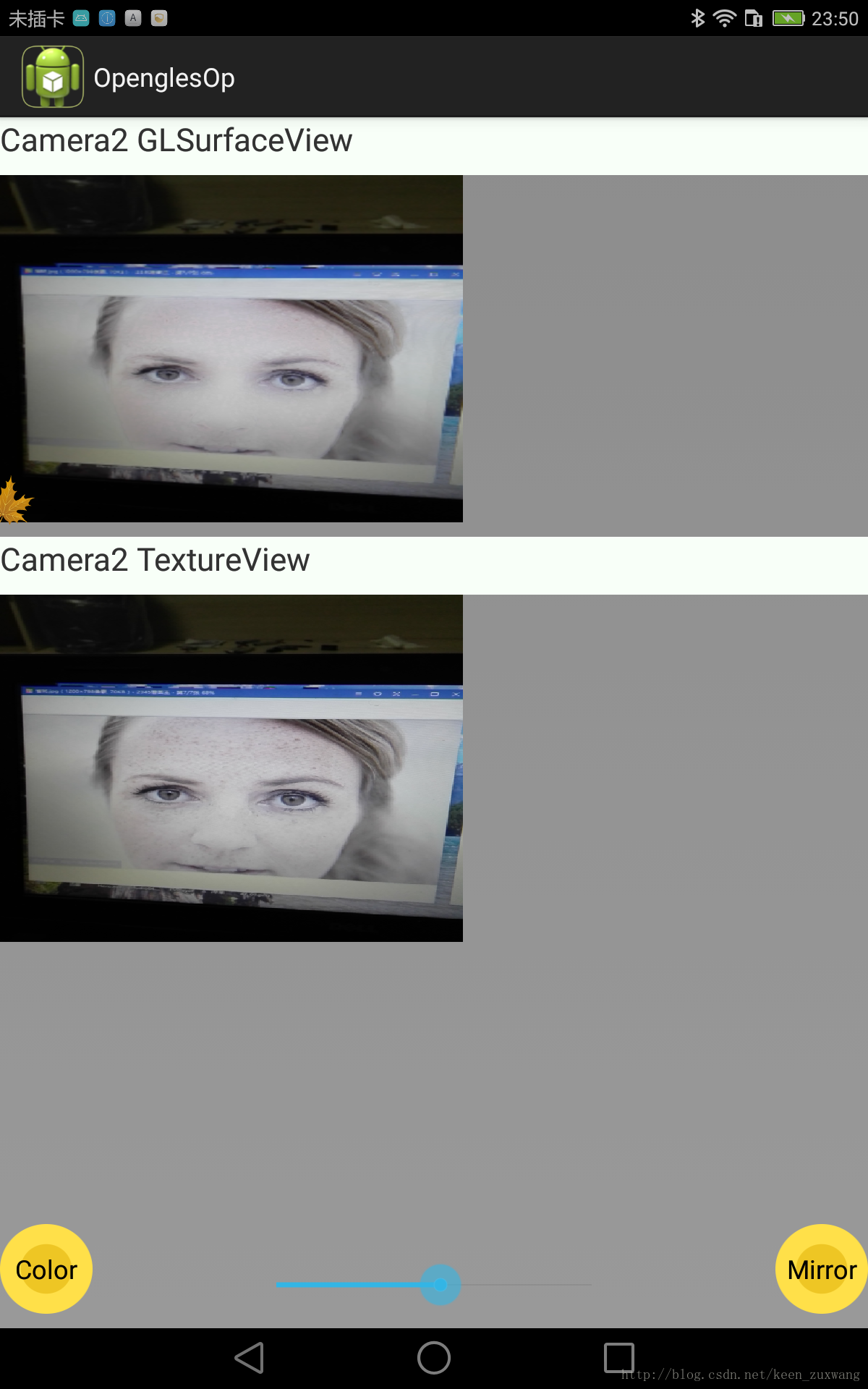

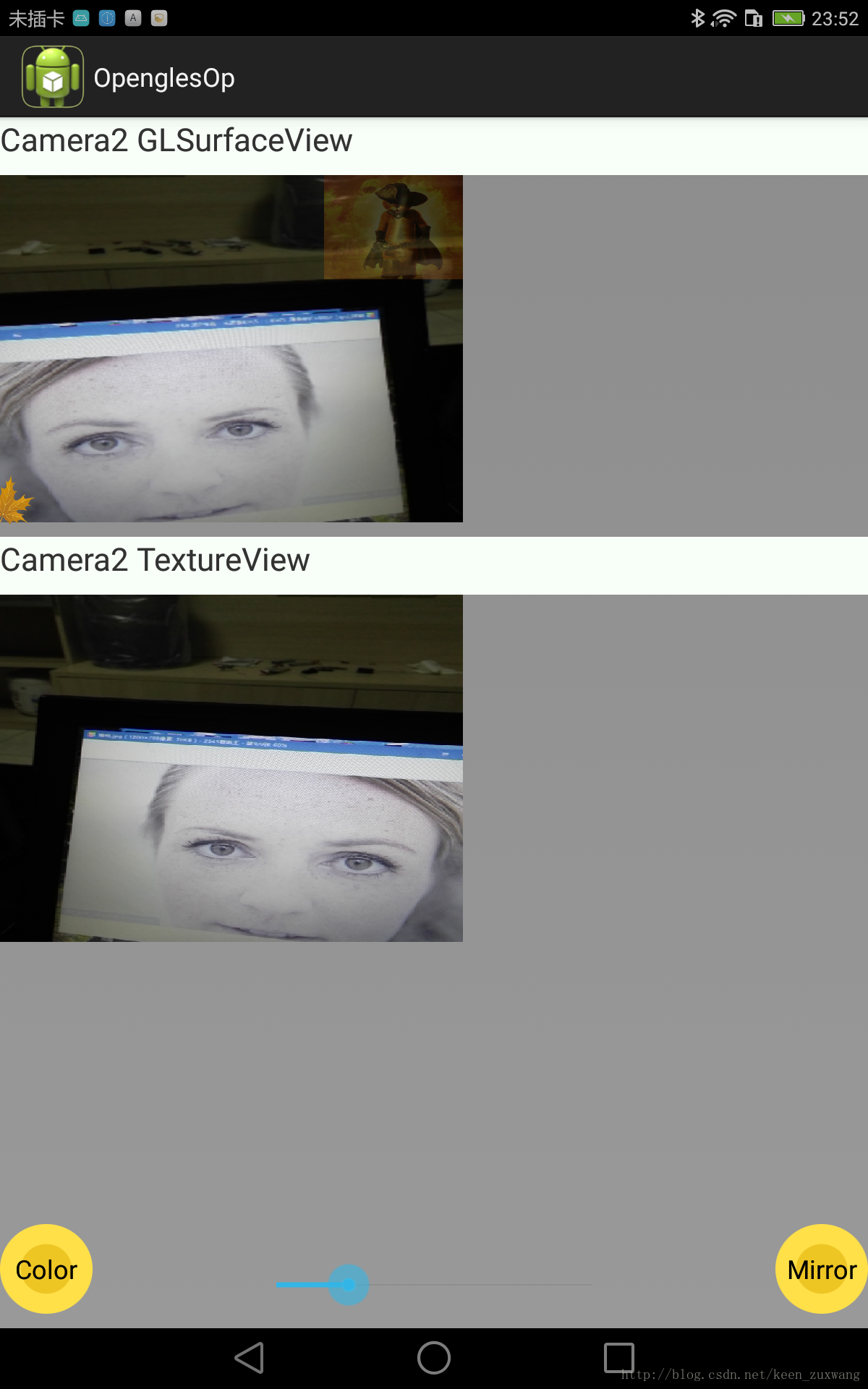

Demo results:

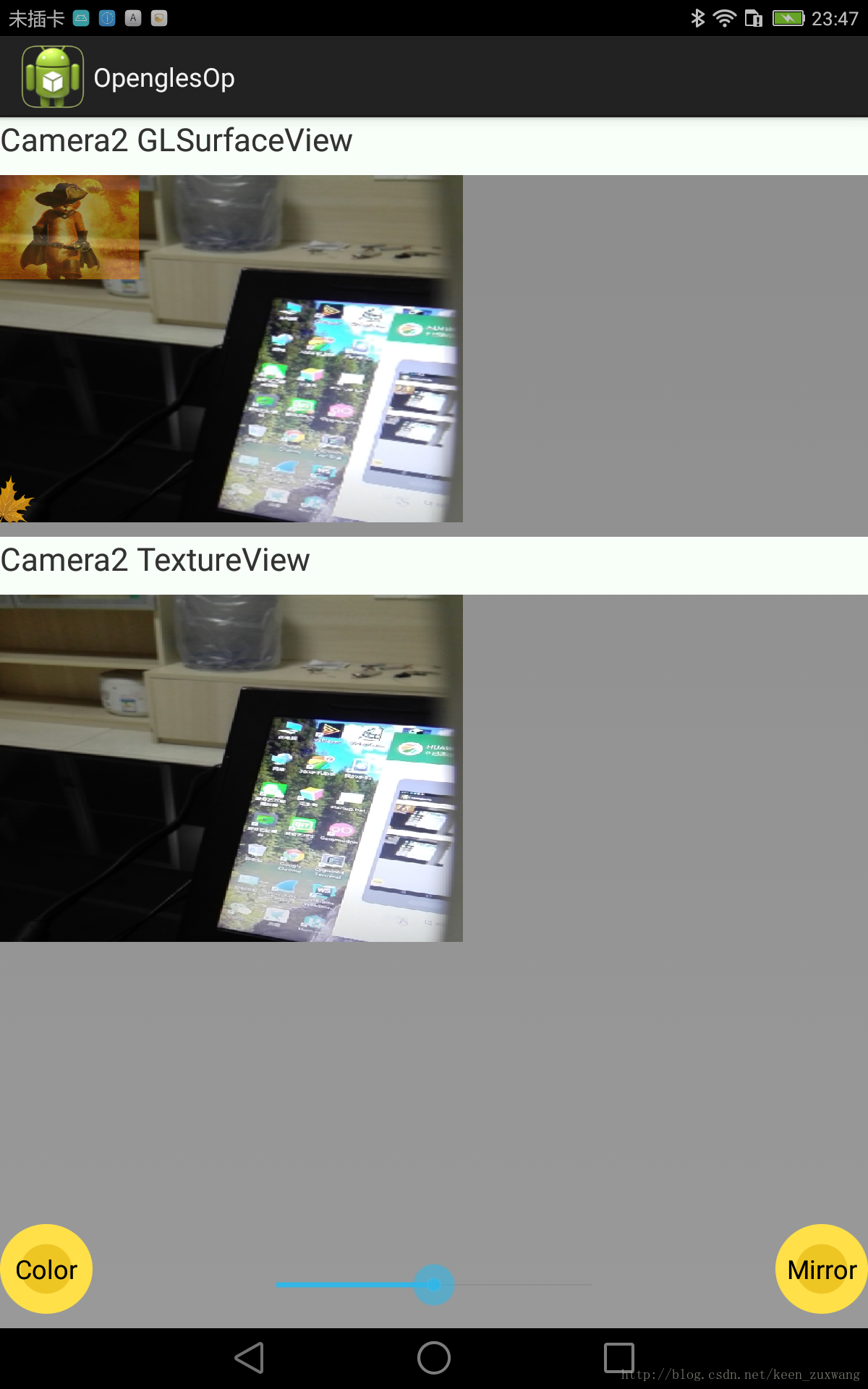

Texture blending:

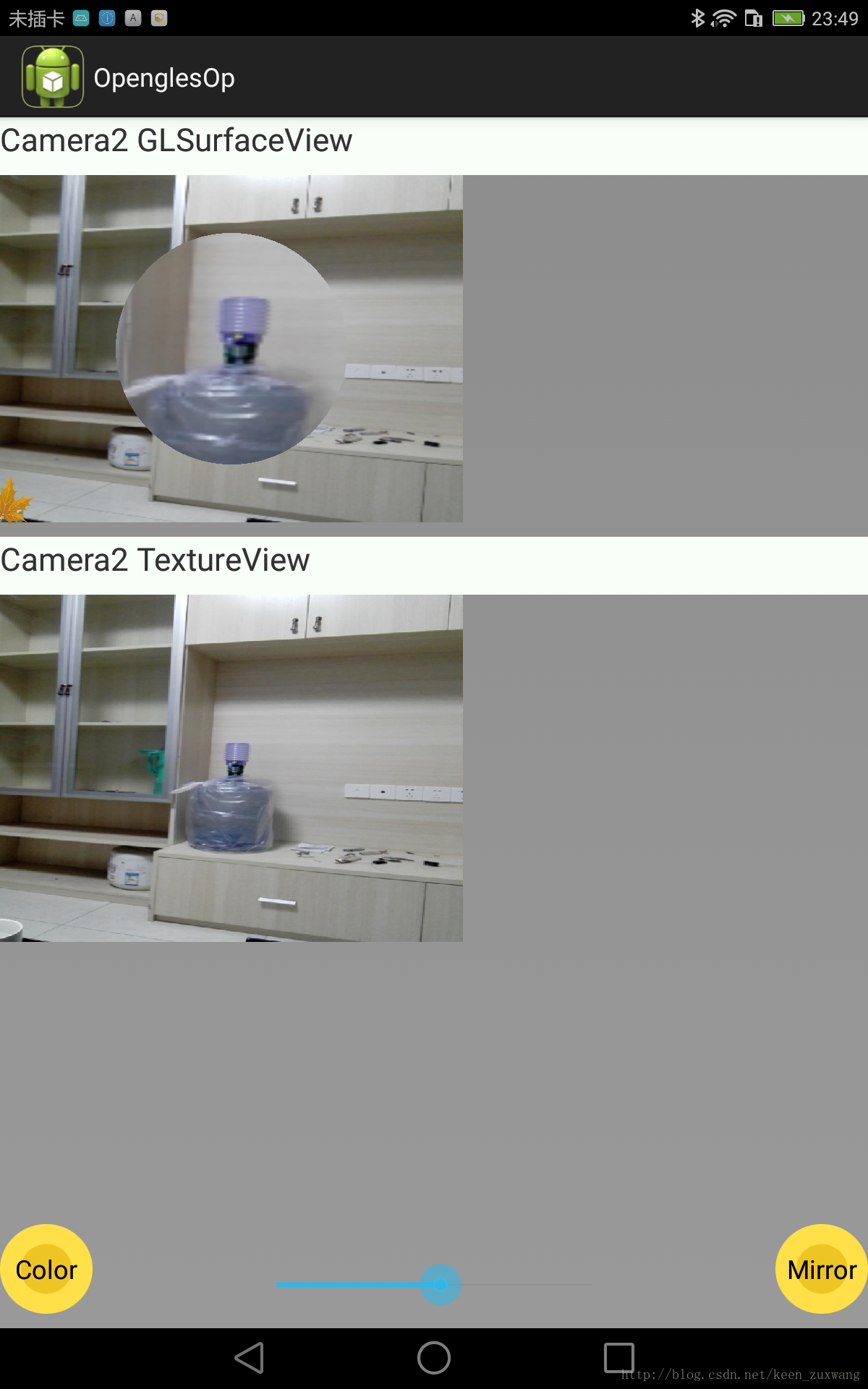

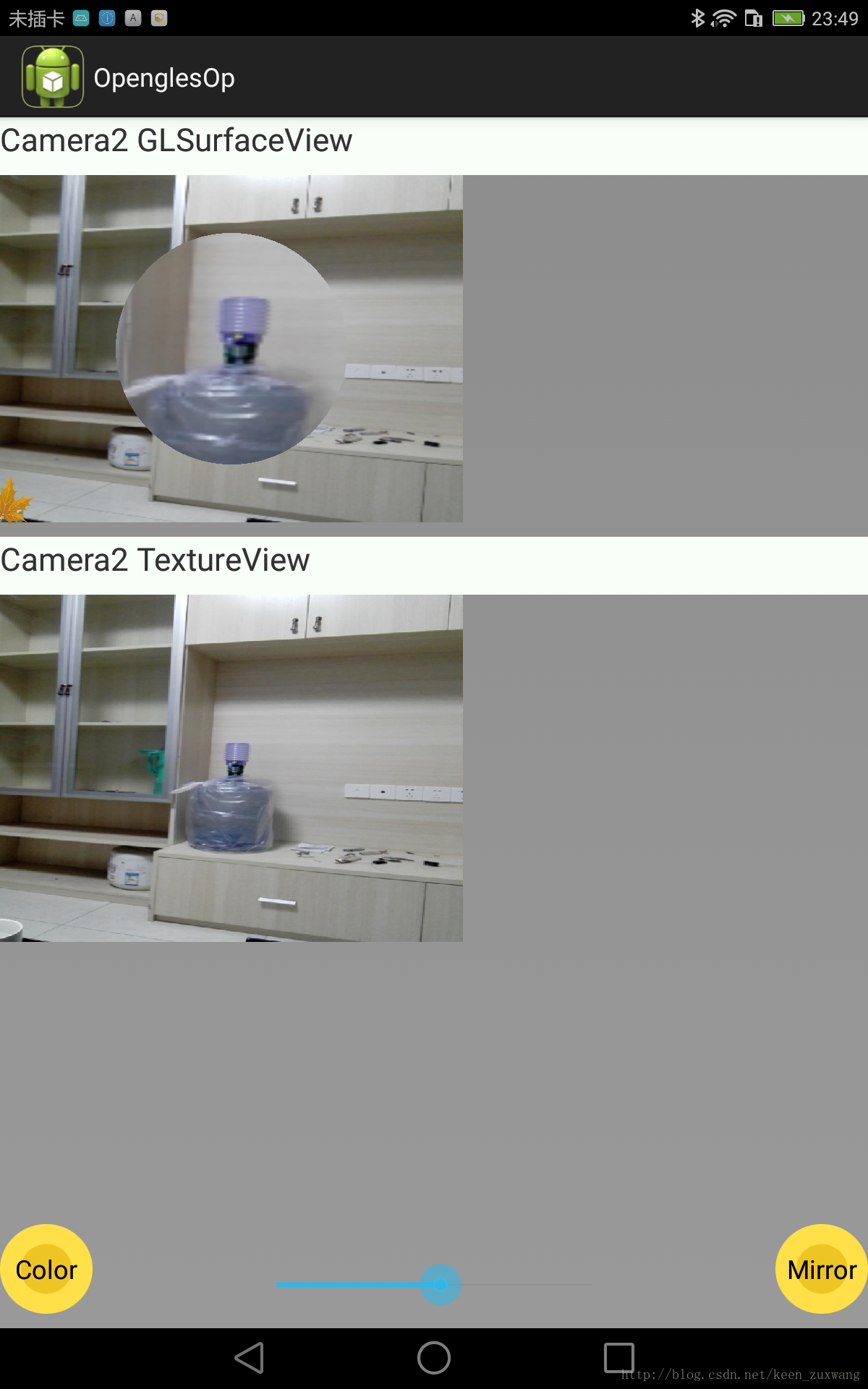
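Texture blending is colorFlag 0 in the fragment shader. Inside the rectangle from leftBottom (-1.0, 0.40) to rightTop (-0.40, 1.0), in normalized device coordinates, the sticker texture is mixed with the camera image by mratio (the SeekBar progress divided by 100). The CPU sketch below covers the two formulas, with colors as float[]{r, g, b, a}. The class and method names are hypothetical:

```java
// Hypothetical CPU model of the colorFlag == 0 blend in the fragment shader.
final class RectBlend {
    static final float LB_X = -1.0f, LB_Y = 0.40f;  // leftBottom
    static final float RT_X = -0.40f, RT_Y = 1.0f;  // rightTop

    // Same bounds test as the shader, applied to the NDC position varyPostion.
    static boolean inRect(float x, float y) {
        return x >= LB_X && x <= RT_X && y >= LB_Y && y <= RT_Y;
    }

    // Map a position inside the rectangle to sticker texture coordinates.
    // t is flipped so the top edge of the rectangle samples t = 0, which is
    // the top row of a bitmap uploaded with GLUtils.texImage2D().
    static float[] stickerCoord(float x, float y) {
        float s = (x - LB_X) / (RT_X - LB_X);
        float t = 1.0f - (y - LB_Y) / (RT_Y - LB_Y);
        return new float[] {s, t};
    }

    // sticker * ratio + camera * (1 - ratio), i.e. GLSL mix(camera, sticker, ratio).
    static float[] blend(float[] sticker, float[] camera, float ratio) {
        float[] out = new float[4];
        for (int i = 0; i < 4; i++) {
            out[i] = sticker[i] * ratio + camera[i] * (1.0f - ratio);
        }
        return out;
    }
}
```

In the shader, only intermediate SeekBar values blend. An mratio below 0.0000001 switches the rectangle to the warm tint, and one above 0.99 switches it to the 2x magnifier.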

Magnifier:

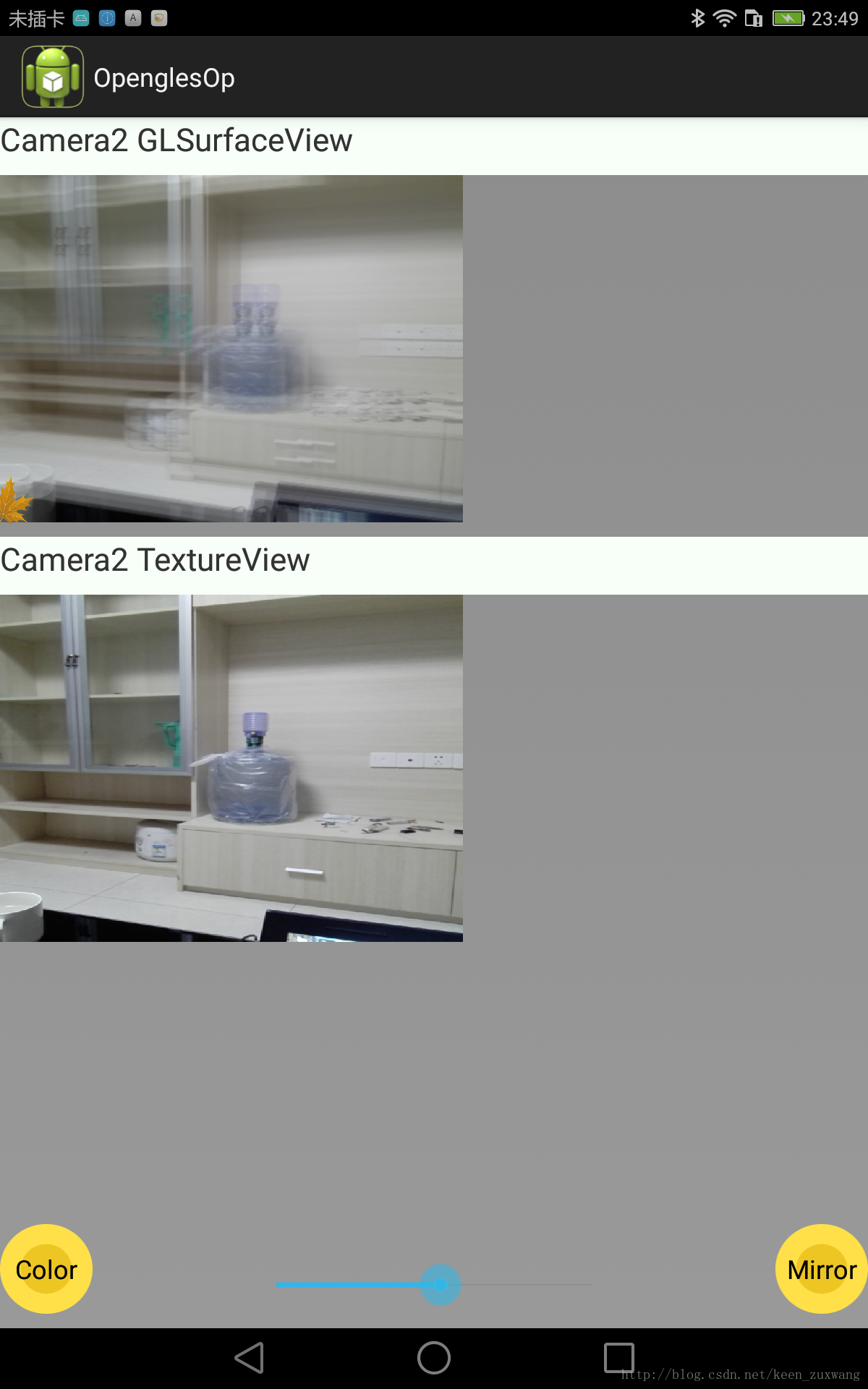

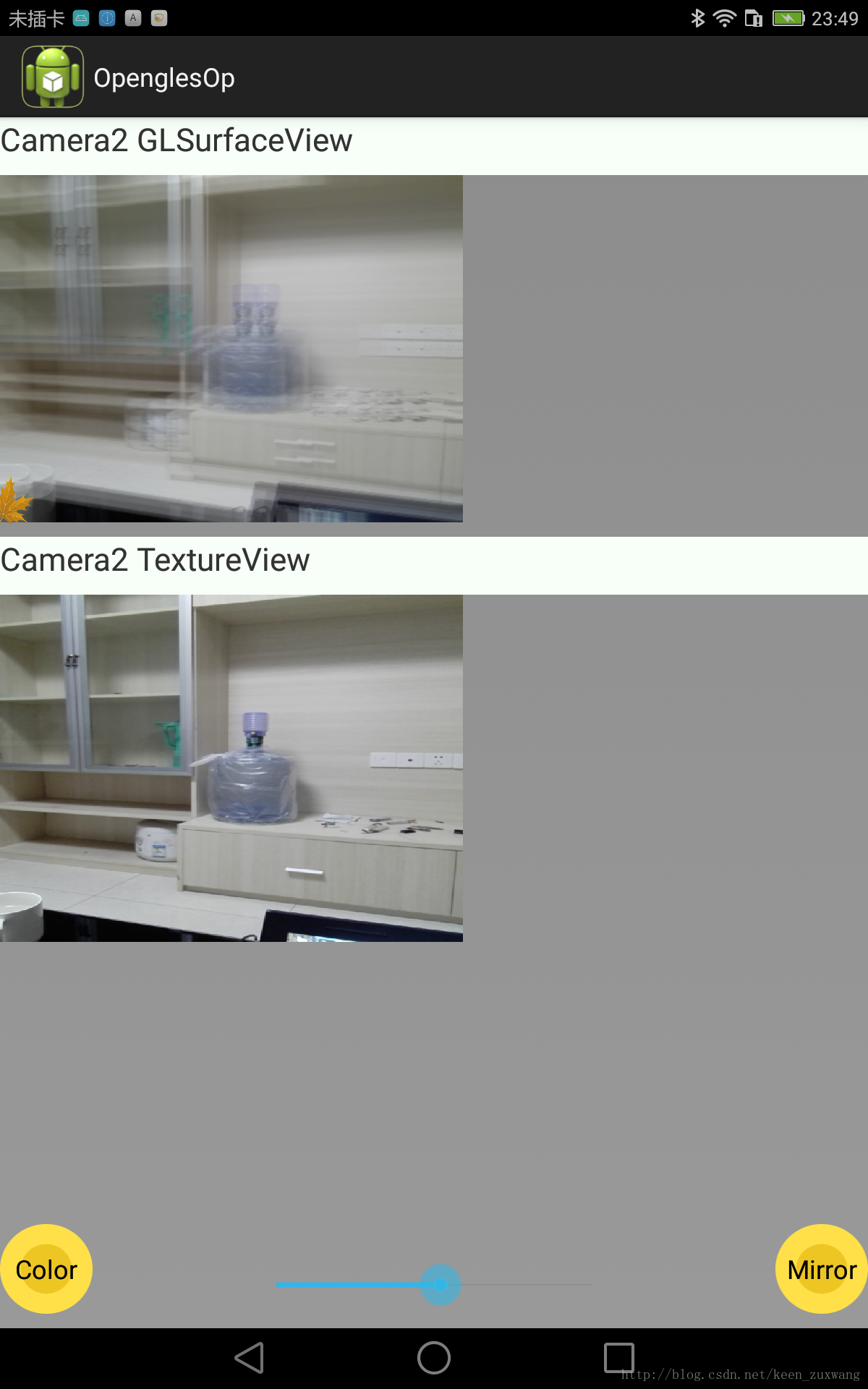
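The magnifier (colorFlag 4) first divides clip-space y by mWidth/mHeight (640/480). It then tests whether the fragment lies within distance 0.5 of the screen center. Inside that circle it samples (s/2 + 0.25, t/2 + 0.25), which stretches the central half of the image to 2x. The CPU sketch below covers both steps. The class name Magnifier is hypothetical:

```java
// Hypothetical CPU model of the colorFlag == 4 magnifier.
final class Magnifier {
    static final float WIDTH = 640.0f, HEIGHT = 480.0f; // mWidth, mHeight in the shader

    // Same test as the shader: divide clip-space y by the aspect ratio,
    // then compare the distance from the center (0, 0) with 0.5.
    static boolean insideLens(float clipX, float clipY) {
        float aspect = WIDTH / HEIGHT;
        float dx = clipX;
        float dy = clipY / aspect;
        return (float) Math.sqrt(dx * dx + dy * dy) < 0.5f;
    }

    // Inside the lens the shader samples (s/2 + 0.25, t/2 + 0.25): the
    // central half of the texture covers the full range, a 2x zoom.
    static float[] lensCoord(float s, float t) {
        return new float[] {s / 2.0f + 0.25f, t / 2.0f + 0.25f};
    }
}
```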

Blur:

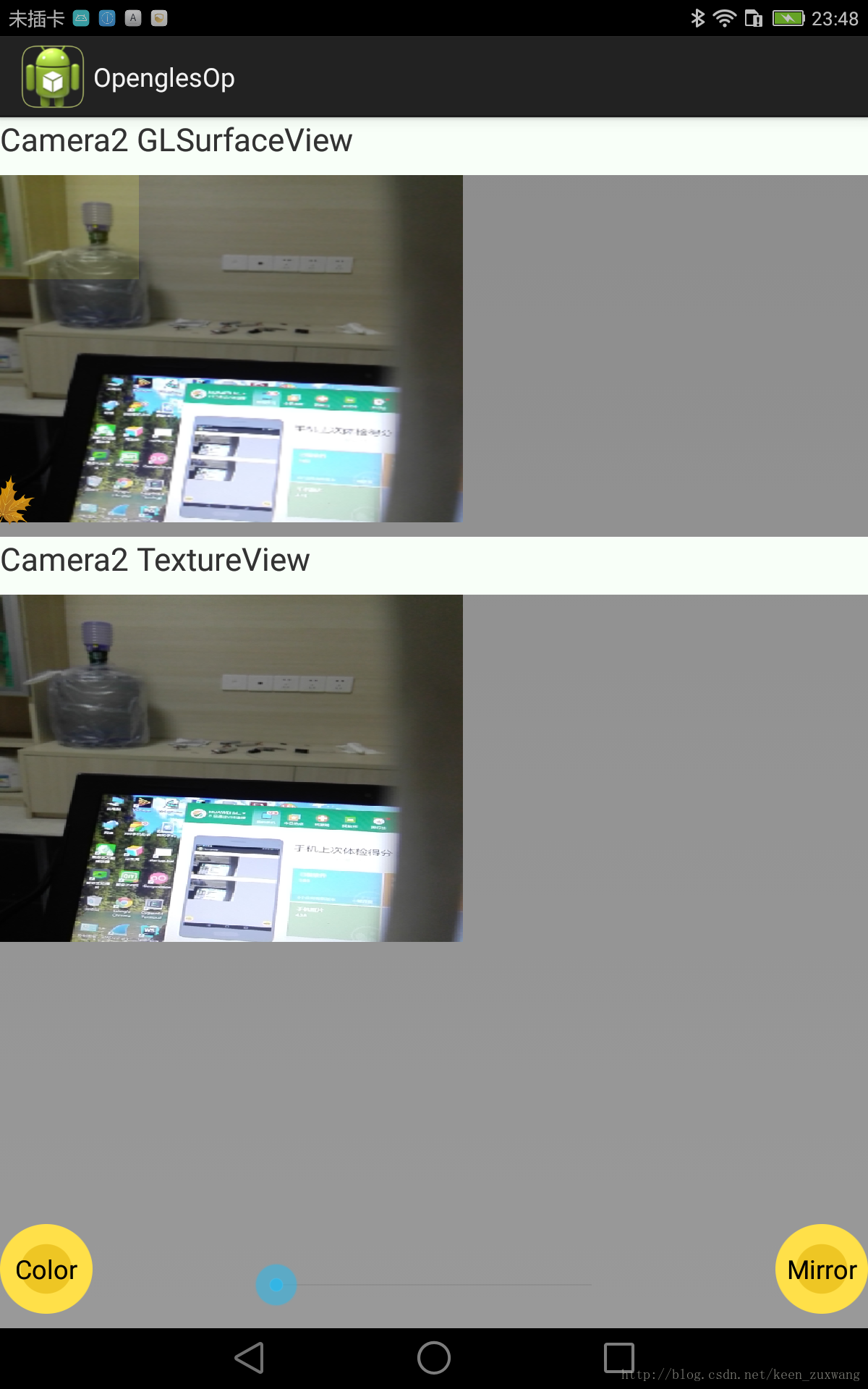

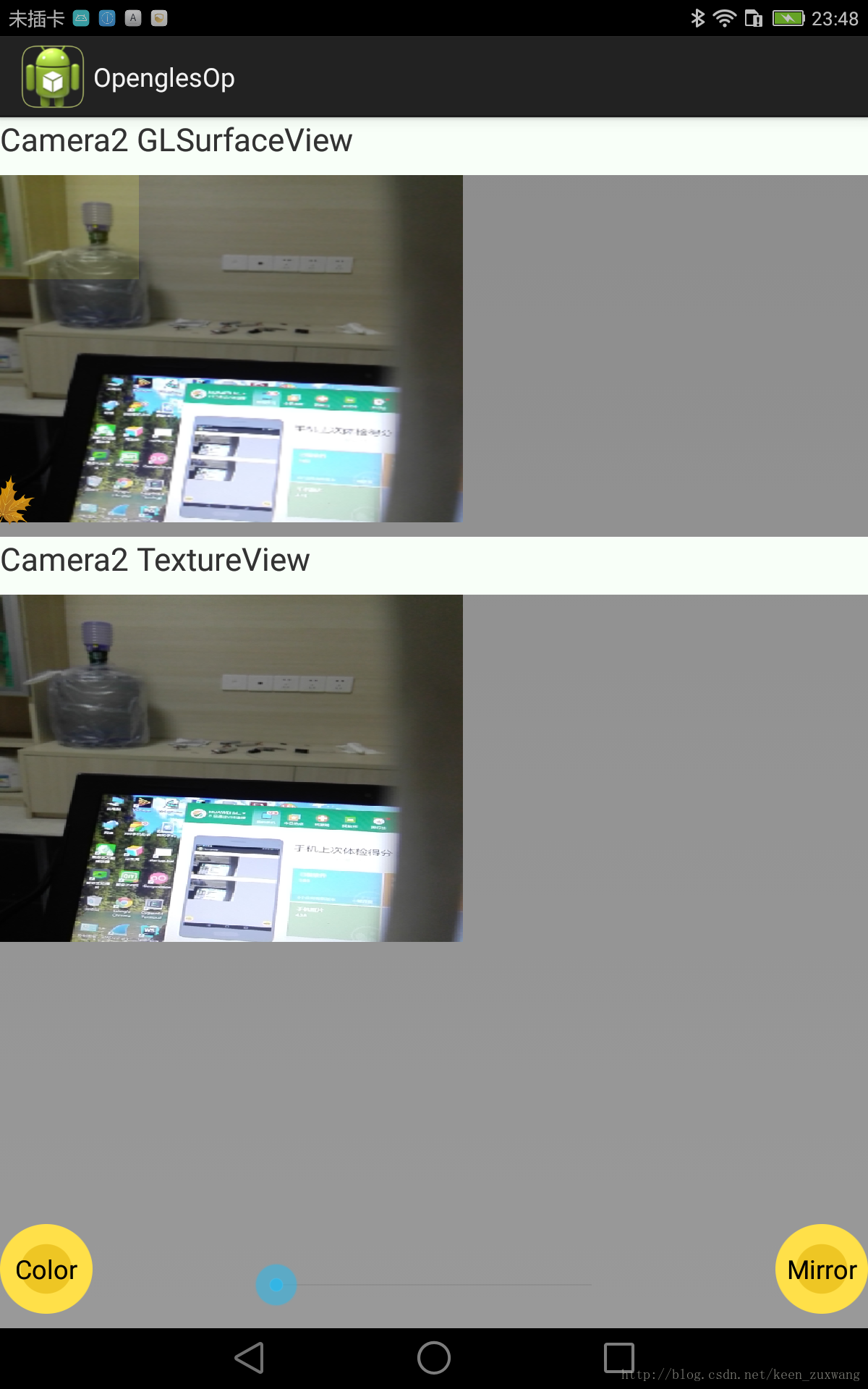
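The blur (colorFlag 5) averages the center texel with 12 taps at (±0.025, ±0.025). vChangeColor.r, .g and .b are all 0.025, so those 12 taps hit only 4 distinct diagonal positions, three times each. The effective weights are therefore 1/13 for the center and 3/13 for each diagonal. That makes it a small diagonal box filter rather than a true Gaussian or radial blur. The single-channel CPU sketch below assumes a BiFunction as a stand-in for texture2D(); the real shader samples with GPU filtering. DiagonalBlur is a hypothetical name:

```java
import java.util.function.BiFunction;

// Hypothetical CPU model of the colorFlag == 5 blur on one color channel.
final class DiagonalBlur {
    static final float OFFSET = 0.025f; // vChangeColor.r == .g == .b in the shader

    // Literal translation of the shader: center plus 12 diagonal taps, divided by 13.
    static float blur(BiFunction<Float, Float, Float> tex, float s, float t) {
        float sum = tex.apply(s, t);
        float[] offsets = {OFFSET, OFFSET, OFFSET}; // the .r, .g and .b offsets
        for (float d : offsets) {
            sum += tex.apply(s - d, t - d);
            sum += tex.apply(s - d, t + d);
            sum += tex.apply(s + d, t - d);
            sum += tex.apply(s + d, t + d);
        }
        return sum / 13.0f;
    }
}
```

Using three different offsets in vChangeColor, for example 0.01, 0.02 and 0.03, would spread the taps over 12 distinct positions at the same cost.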

Warm tone:

Cool tone:
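The warm and cool demos (colorFlag 2 and 3) add 0.1 to red and green, or to blue, and then clamp. GLSL passes function arguments by value, so a helper such as modifyColor() clamps the caller's color only when its parameter is declared inout. On an ordinary fixed-point framebuffer the output is clamped to [0, 1] when written anyway. The sketch below models these two filters plus the gray (colorFlag 1) and saturation (colorFlag 7) filters on {r, g, b, a} float arrays. ToneFilters is a hypothetical name:

```java
// Hypothetical CPU model of the tone filters; colors are {r, g, b, a} in [0, 1].
final class ToneFilters {
    // colorFlag 2 (warm): add 0.1 to red and green, then clamp each channel.
    static float[] warm(float[] c) {
        return clamp(new float[] {c[0] + 0.1f, c[1] + 0.1f, c[2], c[3]});
    }

    // colorFlag 3 (cool): add 0.1 to blue, then clamp.
    static float[] cool(float[] c) {
        return clamp(new float[] {c[0], c[1], c[2] + 0.1f, c[3]});
    }

    // colorFlag 1 (gray): 0.3 R + 0.59 G + 0.11 B, alpha forced to 1.
    static float[] gray(float[] c) {
        float y = 0.3f * c[0] + 0.59f * c[1] + 0.11f * c[2];
        return new float[] {y, y, y, 1.0f};
    }

    // colorFlag 7 (saturation): mix(luma, color, saturation) with the
    // shader's luminanceWeighting constants.
    static float[] saturate(float[] c, float saturation) {
        float luma = 0.2125f * c[0] + 0.7154f * c[1] + 0.0721f * c[2];
        float[] out = new float[4];
        for (int i = 0; i < 3; i++) {
            out[i] = luma + (c[i] - luma) * saturation; // GLSL mix(luma, c, saturation)
        }
        out[3] = c[3];
        return out;
    }

    private static float[] clamp(float[] c) {
        for (int i = 0; i < c.length; i++) {
            c[i] = Math.max(0.0f, Math.min(1.0f, c[i]));
        }
        return c;
    }
}
```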

Beauty (skin smoothing):

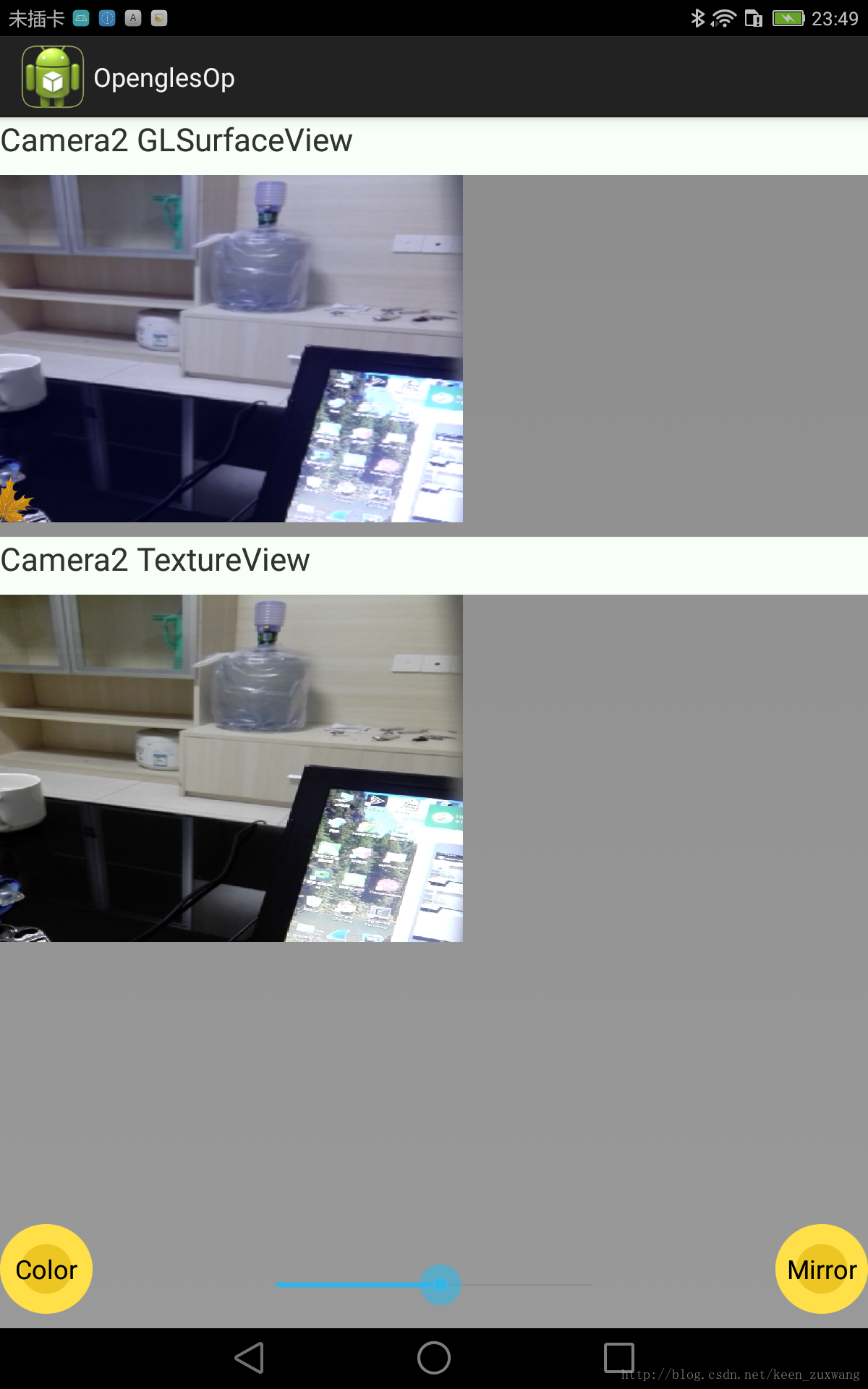
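The beauty filter (colorFlag 6) works in four steps:

1. It blurs only the green channel, with weight 22 for the center and 1, 2 or 3 for the 24 taps (total 62).
2. It forms a high-pass value, centralColor.g - blur + 0.5.
3. It pushes that value toward 0 or 1 with five hardLight() passes.
4. It adjusts the color.

With pParams = 0.0, alpha = pow(luminance, 0.0) is 1 for any non-zero luminance (GLSL leaves pow(0.0, 0.0) undefined). The final mix() therefore reduces to max(smoothColor, centralColor). The per-pixel CPU sketch below takes the already-sampled green values as input and does not model texture fetching. BeautyMath is a hypothetical name:

```java
// Hypothetical CPU model of the colorFlag == 6 "beauty" math for one pixel.
final class BeautyMath {
    // Weights the shader applies to blurCoordinates[0..23]: 12 x 1, 8 x 2, 4 x 3.
    static final float[] TAP_WEIGHTS = {
        1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,
        2, 2, 2, 2, 2, 2, 2, 2,
        3, 3, 3, 3
    };
    static final float CENTER_WEIGHT = 22.0f; // 22 + 12 + 16 + 12 = 62

    static float hardLight(float c) {
        return c <= 0.5f ? c * c * 2.0f : 1.0f - (1.0f - c) * (1.0f - c) * 2.0f;
    }

    // Weighted average of the green channel, like the shader's sampleColor.
    static float blurredGreen(float centerG, float[] tapG) {
        float sum = centerG * CENTER_WEIGHT;
        for (int i = 0; i < TAP_WEIGHTS.length; i++) {
            sum += tapG[i] * TAP_WEIGHTS[i];
        }
        return sum / 62.0f;
    }

    // High-pass (detail) value, pushed toward 0 or 1 by five hard-light passes.
    static float highPass(float centerG, float blurred) {
        float h = centerG - blurred + 0.5f;
        for (int i = 0; i < 5; i++) {
            h = hardLight(h);
        }
        return h;
    }

    // Final color with alpha = 1: max(smoothColor, centralColor) per channel.
    static float[] combine(float[] centralRgb, float highPass) {
        float[] out = new float[3];
        for (int i = 0; i < 3; i++) {
            float smooth = centralRgb[i] + (centralRgb[i] - highPass) * 0.1f;
            out[i] = Math.max(smooth, centralRgb[i]);
        }
        return out;
    }
}
```

In effect, a pixel darker than its neighborhood gets a small high-pass value and is lifted by up to about 10%. Pixels brighter than their surroundings are left unchanged, so small dark blemishes are evened out.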

Mirror:
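In the vertex shader, xyFlag 1 and 2 left-multiply vPosition by uProjMatrix or uProjMatrix0. Through flip(), setSize() ends up filling these with identity matrices scaled by -1 along x or along y. Moving the quad's vertices while keeping their texture coordinates mirrors the picture. The plain-Java sketch below shows that column-major multiply, the layout used by android.opengl.Matrix and GLSL. MirrorMath is a hypothetical name. vPosition is supplied with only x and y, and GL fills in z = 0 and w = 1:

```java
// Hypothetical CPU model of the vertex-shader mirror: gl_Position = M * vPosition,
// with M stored column-major like android.opengl.Matrix and GLSL uniforms.
final class MirrorMath {
    // Same result as Matrix.setIdentityM() followed by Matrix.scaleM(m, 0, sx, sy, 1).
    static float[] flipMatrix(boolean x, boolean y) {
        float[] m = new float[16];
        m[0] = x ? -1.0f : 1.0f;  // column 0, row 0: x scale
        m[5] = y ? -1.0f : 1.0f;  // column 1, row 1: y scale
        m[10] = 1.0f;
        m[15] = 1.0f;
        return m;
    }

    // result[row] = sum over col of m[col * 4 + row] * v[col] (column-major).
    static float[] multiply(float[] m, float[] v) {
        float[] r = new float[4];
        for (int row = 0; row < 4; row++) {
            for (int col = 0; col < 4; col++) {
                r[row] += m[col * 4 + row] * v[col];
            }
        }
        return r;
    }
}
```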


1. Vertex shader

attribute vec4 vPosition;

attribute vec4 vTexCoordinate;

uniform mat4 textureTransform;

uniform mat4 uProjMatrix;

uniform mat4 uProjMatrix0;

uniform int xyFlag; // mirror mode: 0 none, 1 X mirror, 2 Y mirror

// values passed on to the fragment shader

varying vec2 v_TexCoordinate;

varying vec4 gPosition;

varying vec2 varyPostion;

void main () {

v_TexCoordinate = (textureTransform * vTexCoordinate).xy;

//gl_Position = vPosition;

if(xyFlag==0){

gl_Position = vPosition;

}else if(xyFlag==1){

gl_Position = uProjMatrix*vPosition; // left-multiply by the transform matrix

}else if(xyFlag==2){

gl_Position = uProjMatrix0*vPosition;

}

gPosition = gl_Position;

varyPostion = vPosition.xy;

}

2. Fragment shader

#extension GL_OES_EGL_image_external : require // enable the external (EGL image) texture extension

precision mediump float;

uniform samplerExternalOES texture; // sampler for the external camera texture

uniform sampler2D texture0; // sampler for the sticker (overlay) texture

uniform int colorFlag; // filter type

uniform float mratio; // blend factor

const highp float mWidth=640.0;

const highp float mHeight=480.0;

const highp vec3 W = vec3(0.299,0.587,0.114);

const mediump vec3 luminanceWeighting = vec3(0.2125, 0.7154, 0.0721); // luminance weights for R, G, B: they sum to 1 and each is that channel's share; green, the middle value, gets about 70% because that looks best.

const lowp float saturation=0.5;

const highp float radius = 1.41;

const highp vec2 center = vec2(0.5, 0.5);

const highp float refractiveIndex=0.5;

// rectangular blend region in normalized device coordinates (the top-left part of the screen)

const vec2 leftBottom = vec2(-1.0, 0.40);

const vec2 rightTop = vec2(-0.40, 1.0);

// sample coordinates used by the beauty filter's blur

vec2 blurCoordinates[24];

// values passed in from the vertex shader

varying vec4 gPosition;

varying vec2 v_TexCoordinate;

varying vec2 varyPostion;

float hardLight(float color)

{

if(color <= 0.5)

color = color * color * 2.0;

else

color = 1.0 - ((1.0 - color)*(1.0 - color) * 2.0);

return color;

}

// Clamp every channel to [0, 1]. The parameter is inout because GLSL passes

// arguments by value: with a plain "vec4 color" the clamped result was discarded.

void modifyColor(inout vec4 color){

color = clamp(color, 0.0, 1.0);

}

void main(){

if(colorFlag==0){

// blend inside the rectangle

if (varyPostion.x >= leftBottom.x && varyPostion.x <= rightTop.x

&& varyPostion.y >= leftBottom.y && varyPostion.y <= rightTop.y) {

if(mratio < 0.0000001){ // SeekBar at 0: warm tint

vec4 color = texture2D(texture, v_TexCoordinate);

vec4 deltaColor = color + vec4(0.1, 0.1, 0.0, 0.0); // warm: boost red and green

modifyColor(deltaColor);

gl_FragColor=deltaColor;

}else if(mratio > 0.99){ // SeekBar near 100: magnifier (2x zoom)

gl_FragColor= texture2D(texture, vec2(v_TexCoordinate.x/2.0+0.25, v_TexCoordinate.y/2.0+0.25));

}else{

vec2 tex0 = vec2((varyPostion.x-leftBottom.x)/(rightTop.x-leftBottom.x),

1.0-(varyPostion.y-leftBottom.y)/(rightTop.y-leftBottom.y));

vec4 color = texture2D(texture0, tex0);

gl_FragColor = color*mratio + texture2D(texture,v_TexCoordinate)*(1.0-mratio); //1.0-v_TexCoordinate

//gl_FragColor = texture2D(texture, 1.0-v_TexCoordinate);

}

}else{

//vec4 color1 = texture2D(texture, v_TexCoordinate);

//vec4 color2 = texture2D(texture0, v_TexCoordinate);//vec2(v_TexCoordinate.s/10, v_TexCoordinate.t/10));

// gl_FragColor = mix(color1, color2, mratio);

gl_FragColor = texture2D(texture, v_TexCoordinate);

}

}

else if(colorFlag==7){ // saturation adjustment

// weight R, G and B by luminanceWeighting (defined above)

vec4 textureColor = texture2D(texture, v_TexCoordinate);

float luminance = dot(textureColor.rgb, luminanceWeighting); // GLSL dot product: multiply each channel by its luminance weight and add the three products to get this pixel's luminance.

vec3 greyScaleColor = vec3(luminance);

gl_FragColor = vec4(mix(greyScaleColor, textureColor.rgb, saturation), textureColor.w); // mix() combines the gray value and the original color according to the saturation factor.

}

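else if(colorFlag==8){ // glass-sphere refraction (the filter button in the Activity never selects 8)

float aspectRatio = mWidth/mHeight;

vec2 textureCoordinateToUse = vec2(v_TexCoordinate.x, (v_TexCoordinate.y * aspectRatio + 0.5 - 0.5 * aspectRatio));

// Normalized texture space treats the screen as one unit wide and one unit tall, so y is rescaled by the aspect ratio.

float distanceFromCenter = distance(center, textureCoordinateToUse);

// How far this pixel is from the sphere's center: GLSL's built-in distance() applies the Pythagorean theorem to the center and the aspect-corrected coordinate.

float checkForPresenceWithinSphere = step(distanceFromCenter, radius); // 1.0 if the fragment lies inside the sphere, else 0.0

distanceFromCenter = distanceFromCenter / radius; // normalize the distance by the sphere radius

float normalizedDepth = radius * sqrt(1.0 - distanceFromCenter * distanceFromCenter); // to simulate a glass sphere, compute the sphere's "depth" at this point

vec3 sphereNormal = normalize(vec3(textureCoordinateToUse - center, normalizedDepth)); // unit surface normal

vec3 refractedVector = refract(vec3(0.0, 0.0, -1.0), sphereNormal, refractiveIndex);

// GLSL's refract() uses the sphere normal and the refractive index to compute how a ray looking straight into the screen bends as it passes through the sphere.

gl_FragColor = texture2D(texture, (refractedVector.xy + 1.0) * 0.5) * checkForPresenceWithinSphere; // sample at the refracted position; fragments outside the sphere become zero (transparent black)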

}

else if(colorFlag==1){ // grayscale: use the gray value as R, G and B, giving a black-and-white filter

vec4 color = texture2D(texture, v_TexCoordinate);

float fGrayColor = (0.3*color.r + 0.59*color.g + 0.11*color.b); // gray value

gl_FragColor = vec4(fGrayColor, fGrayColor, fGrayColor, 1.0);

}

else if(colorFlag==2){ // warm tone

vec4 color = texture2D(texture, v_TexCoordinate);

vec4 deltaColor = color + vec4(0.1, 0.1, 0.0, 0.0); // warm: boost red and green

modifyColor(deltaColor);

gl_FragColor=deltaColor;

}

else if(colorFlag==3){ // cool tone (channel offsets like this can also raise or lower brightness)

vec4 color = texture2D(texture, v_TexCoordinate);

vec4 deltaColor = color + vec4(0.0, 0.0, 0.1, 0.0); //vec4(0.006, 0.004, 0.002, 0.0); // boost blue

modifyColor(deltaColor);

gl_FragColor=deltaColor;

}

else if(colorFlag==4){ // magnifier effect

vec4 nColor=texture2D(texture, v_TexCoordinate);

float uXY = mWidth/mHeight;

vec2 vChange = vec2(0.0, 0.0);

float dis = distance(vec2(gPosition.x, gPosition.y/uXY), vChange);

if(dis < 0.5){ // circular magnified region

nColor=texture2D(texture,vec2(v_TexCoordinate.x/2.0+0.25, v_TexCoordinate.y/2.0+0.25));

}

gl_FragColor=nColor;

}

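else if(colorFlag==5){ // simple blur (a rough stand-in for Gaussian or radial blur)

vec4 nColor=texture2D(texture, v_TexCoordinate);

vec3 vChangeColor = vec3(0.025, 0.025, 0.025); // sampling offset; .r, .g and .b are equal, so the 12 taps below hit only 4 distinct diagonal positions

// average the center texel with the surrounding diagonal texels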

nColor+=texture2D(texture,vec2(v_TexCoordinate.x-vChangeColor.r,v_TexCoordinate.y-vChangeColor.r));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x-vChangeColor.r,v_TexCoordinate.y+vChangeColor.r));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x+vChangeColor.r,v_TexCoordinate.y-vChangeColor.r));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x+vChangeColor.r,v_TexCoordinate.y+vChangeColor.r));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x-vChangeColor.g,v_TexCoordinate.y-vChangeColor.g));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x-vChangeColor.g,v_TexCoordinate.y+vChangeColor.g));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x+vChangeColor.g,v_TexCoordinate.y-vChangeColor.g));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x+vChangeColor.g,v_TexCoordinate.y+vChangeColor.g));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x-vChangeColor.b,v_TexCoordinate.y-vChangeColor.b));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x-vChangeColor.b,v_TexCoordinate.y+vChangeColor.b));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x+vChangeColor.b,v_TexCoordinate.y-vChangeColor.b));

nColor+=texture2D(texture,vec2(v_TexCoordinate.x+vChangeColor.b,v_TexCoordinate.y+vChangeColor.b));

nColor/=13.0;

gl_FragColor=nColor;

}

else if(colorFlag==6)

{

float mul_x = 2.0 / mWidth;

float mul_y = 2.0 / mHeight;

float pParams = 0.0;

vec2 pStepOffset = vec2(mul_x, mul_y);

vec3 centralColor = texture2D(texture, v_TexCoordinate).rgb;

blurCoordinates[0] = v_TexCoordinate.xy + pStepOffset * vec2(0.0, -10.0);

blurCoordinates[1] = v_TexCoordinate.xy + pStepOffset * vec2(0.0, 10.0);

blurCoordinates[2] = v_TexCoordinate.xy + pStepOffset * vec2(-10.0, 0.0);

blurCoordinates[3] = v_TexCoordinate.xy + pStepOffset * vec2(10.0, 0.0);

blurCoordinates[4] = v_TexCoordinate.xy + pStepOffset * vec2(5.0, -8.0);

blurCoordinates[5] = v_TexCoordinate.xy + pStepOffset * vec2(5.0, 8.0);

blurCoordinates[6] = v_TexCoordinate.xy + pStepOffset * vec2(-5.0, 8.0);

blurCoordinates[7] = v_TexCoordinate.xy + pStepOffset * vec2(-5.0, -8.0);

blurCoordinates[8] = v_TexCoordinate.xy + pStepOffset * vec2(8.0, -5.0);

blurCoordinates[9] = v_TexCoordinate.xy + pStepOffset * vec2(8.0, 5.0);

blurCoordinates[10] = v_TexCoordinate.xy + pStepOffset * vec2(-8.0, 5.0);

blurCoordinates[11] = v_TexCoordinate.xy + pStepOffset * vec2(-8.0, -5.0);

blurCoordinates[12] = v_TexCoordinate.xy + pStepOffset * vec2(0.0, -6.0);

blurCoordinates[13] = v_TexCoordinate.xy + pStepOffset * vec2(0.0, 6.0);

blurCoordinates[14] = v_TexCoordinate.xy + pStepOffset * vec2(6.0, 0.0);

blurCoordinates[15] = v_TexCoordinate.xy + pStepOffset * vec2(-6.0, 0.0);

blurCoordinates[16] = v_TexCoordinate.xy + pStepOffset * vec2(-4.0, -4.0);

blurCoordinates[17] = v_TexCoordinate.xy + pStepOffset * vec2(-4.0, 4.0);

blurCoordinates[18] = v_TexCoordinate.xy + pStepOffset * vec2(4.0, -4.0);

blurCoordinates[19] = v_TexCoordinate.xy + pStepOffset * vec2(4.0, 4.0);

blurCoordinates[20] = v_TexCoordinate.xy + pStepOffset * vec2(-2.0, -2.0);

blurCoordinates[21] = v_TexCoordinate.xy + pStepOffset * vec2(-2.0, 2.0);

blurCoordinates[22] = v_TexCoordinate.xy + pStepOffset * vec2(2.0, -2.0);

blurCoordinates[23] = v_TexCoordinate.xy + pStepOffset * vec2(2.0, 2.0);

float sampleColor = centralColor.g * 22.0;

sampleColor += texture2D(texture, blurCoordinates[0]).g;

sampleColor += texture2D(texture, blurCoordinates[1]).g;

sampleColor += texture2D(texture, blurCoordinates[2]).g;

sampleColor += texture2D(texture, blurCoordinates[3]).g;

sampleColor += texture2D(texture, blurCoordinates[4]).g;

sampleColor += texture2D(texture, blurCoordinates[5]).g;

sampleColor += texture2D(texture, blurCoordinates[6]).g;

sampleColor += texture2D(texture, blurCoordinates[7]).g;

sampleColor += texture2D(texture, blurCoordinates[8]).g;

sampleColor += texture2D(texture, blurCoordinates[9]).g;

sampleColor += texture2D(texture, blurCoordinates[10]).g;

sampleColor += texture2D(texture, blurCoordinates[11]).g;

sampleColor += texture2D(texture, blurCoordinates[12]).g * 2.0;

sampleColor += texture2D(texture, blurCoordinates[13]).g * 2.0;

sampleColor += texture2D(texture, blurCoordinates[14]).g * 2.0;

sampleColor += texture2D(texture, blurCoordinates[15]).g * 2.0;

sampleColor += texture2D(texture, blurCoordinates[16]).g * 2.0;

sampleColor += texture2D(texture, blurCoordinates[17]).g * 2.0;

sampleColor += texture2D(texture, blurCoordinates[18]).g * 2.0;

sampleColor += texture2D(texture, blurCoordinates[19]).g * 2.0;

sampleColor += texture2D(texture, blurCoordinates[20]).g * 3.0;

sampleColor += texture2D(texture, blurCoordinates[21]).g * 3.0;

sampleColor += texture2D(texture, blurCoordinates[22]).g * 3.0;

sampleColor += texture2D(texture, blurCoordinates[23]).g * 3.0;

sampleColor = sampleColor / 62.0;

float highPass = centralColor.g - sampleColor + 0.5;

for(int i = 0; i < 5;i++)

{

highPass = hardLight(highPass);

}

float luminance = dot(centralColor, W);

float alpha = pow(luminance, pParams);

vec3 smoothColor = centralColor + (centralColor-vec3(highPass))*alpha*0.1;

gl_FragColor = vec4(mix(smoothColor.rgb, max(smoothColor, centralColor), alpha), 1.0);

}

}

3. Shader utility class

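import android.content.Context;

import android.content.res.Resources;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.opengl.GLES20;

import android.opengl.GLUtils;

import android.util.Log;

import java.io.BufferedReader;

import java.io.ByteArrayOutputStream;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

public class HelpUtils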

{

private static final String TAG = "ShaderHelper";

public static int compileShader(final int shaderType, final String shaderSource)

{

int shaderHandle = GLES20.glCreateShader(shaderType);

if (shaderHandle != 0)

{

// Pass in the shader source.

GLES20.glShaderSource(shaderHandle, shaderSource);

// Compile the shader.

GLES20.glCompileShader(shaderHandle);

// Get the compilation status.

final int[] compileStatus = new int[1];

GLES20.glGetShaderiv(shaderHandle, GLES20.GL_COMPILE_STATUS, compileStatus, 0);

// If the compilation failed, delete the shader.

if (compileStatus[0] == 0)

{

Log.e(TAG, "Error compiling shader: " + GLES20.glGetShaderInfoLog(shaderHandle));

GLES20.glDeleteShader(shaderHandle);

shaderHandle = 0;

}

}

if (shaderHandle == 0){

throw new RuntimeException("Error creating shader.");

}

return shaderHandle;

}

public static int createAndLinkProgram(final int vertexShaderHandle, final int fragmentShaderHandle, final String[] attributes)

{

int programHandle = GLES20.glCreateProgram();

if (programHandle != 0)

{

// Bind the vertex shader to the program.

GLES20.glAttachShader(programHandle, vertexShaderHandle);

// Bind the fragment shader to the program.

GLES20.glAttachShader(programHandle, fragmentShaderHandle);

// Bind attributes

if (attributes != null){

final int size = attributes.length;

for (int i = 0; i < size; i++){

GLES20.glBindAttribLocation(programHandle, i, attributes[i]);

}

}

// Link the two shaders together into a program.

GLES20.glLinkProgram(programHandle);

// Get the link status.

final int[] linkStatus = new int[1];

GLES20.glGetProgramiv(programHandle, GLES20.GL_LINK_STATUS, linkStatus, 0);

// If the link failed, delete the program.

if (linkStatus[0] == 0) {

Log.e(TAG, "Error linking program: " + GLES20.glGetProgramInfoLog(programHandle));

GLES20.glDeleteProgram(programHandle);

programHandle = 0;

}

}

if (programHandle == 0){

throw new RuntimeException("Error creating program.");

}

return programHandle;

}

public static int loadTexture(final Context context, final int resourceId) {

final int[] textureHandle = new int[1];

GLES20.glGenTextures(1, textureHandle, 0);

if (textureHandle[0] != 0) {

final BitmapFactory.Options options = new BitmapFactory.Options();

options.inScaled = false; // No pre-scaling

// Read in the resource

final Bitmap bitmap = BitmapFactory.decodeResource(

context.getResources(), resourceId, options);

// Bind to the texture in OpenGL

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureHandle[0]);

// Set filtering

GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D,

GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_NEAREST);

GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D,

GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_NEAREST);

// Load the bitmap into the bound texture.

GLUtils.texImage2D(GLES20.GL_TEXTURE_2D, 0, bitmap, 0);

// Recycle the bitmap, since its data has been loaded into OpenGL.

bitmap.recycle();

}

if (textureHandle[0] == 0) {

throw new RuntimeException("Error loading texture.");

}

return textureHandle[0];

}

public static String readTextFileFromRawResource(final Context context,

final int resourceId)

{

final InputStream inputStream = context.getResources().openRawResource(

resourceId);

final InputStreamReader inputStreamReader = new InputStreamReader(

inputStream);

final BufferedReader bufferedReader = new BufferedReader(

inputStreamReader);

String nextLine;

final StringBuilder body = new StringBuilder();

try{

while ((nextLine = bufferedReader.readLine()) != null){

body.append(nextLine);

body.append('\n');

}

}

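catch (IOException e){

return null;

}

finally {

try {

bufferedReader.close(); // closing the outer reader also closes the raw-resource stream

} catch (IOException ignored) {

// nothing useful to do if close fails

}

}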

return body.toString();

}

// Load shader source from a file in the assets folder

public static String loadFromAssetsFile(String fname,Resources r)

{

String result=null;

try {

InputStream in=r.getAssets().open(fname);

int ch=0;

ByteArrayOutputStream baos = new ByteArrayOutputStream();

while((ch=in.read())!=-1) {

baos.write(ch);

}

byte[] buff=baos.toByteArray();

baos.close();

in.close();

result=new String(buff,"UTF-8");

result=result.replaceAll("\\r\\n","\n");

}

catch(Exception e){

e.printStackTrace();

}

return result;

}

}

4. GLViewMediaActivity: the class that drives the shaders and implements the GLSurfaceView.Renderer interface

The Surface is created from the SurfaceTexture videoTexture, which is backed by textures[0]. The complete camera rendering pipeline is therefore:

camera image -> Surface -> videoTexture / videoTexture.updateTexImage() -> GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textures[0]) -> GLES20.glDrawElements()
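After updateTexImage(), onDrawFrame() fetches the SurfaceTexture transform with getTransformMatrix(). The vertex shader applies the textureTransform uniform as (textureTransform * vTexCoordinate).xy. Each row of textureCoords is (s, t, 0, 1), and the matrix is a column-major 4x4. The plain-Java sketch below shows that product. TexTransform is a hypothetical name. The vertical-flip matrix is only an example input, since the real matrix depends on the device and can also crop or rotate:

```java
// Hypothetical sketch of the vertex shader's
//   v_TexCoordinate = (textureTransform * vTexCoordinate).xy;
// with a column-major 4x4 matrix like the one from SurfaceTexture.getTransformMatrix().
final class TexTransform {
    static float[] apply(float[] m, float s, float t) {
        float[] v = {s, t, 0.0f, 1.0f}; // each textureCoords row is (s, t, 0, 1)
        float[] out = new float[2];
        for (int row = 0; row < 2; row++) {       // only .xy is kept
            for (int col = 0; col < 4; col++) {
                out[row] += m[col * 4 + row] * v[col];
            }
        }
        return out;
    }

    // Example input only: a vertical flip (t' = 1 - t).
    static float[] verticalFlip() {
        return new float[] {
            1, 0, 0, 0,   // column 0
            0, -1, 0, 0,  // column 1
            0, 0, 1, 0,   // column 2
            0, 1, 0, 1    // column 3 (translation)
        };
    }
}
```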

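import android.Manifest;

import android.app.Activity;

import android.content.Context;

import android.content.pm.PackageManager;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.graphics.SurfaceTexture;

import android.hardware.camera2.CameraAccessException;

import android.hardware.camera2.CameraCaptureSession;

import android.hardware.camera2.CameraCharacteristics;

import android.hardware.camera2.CameraDevice;

import android.hardware.camera2.CameraManager;

import android.hardware.camera2.CaptureFailure;

import android.hardware.camera2.CaptureRequest;

import android.hardware.camera2.CaptureResult;

import android.hardware.camera2.TotalCaptureResult;

import android.opengl.GLES11Ext;

import android.opengl.GLES20;

import android.opengl.GLSurfaceView;

import android.opengl.GLUtils;

import android.opengl.Matrix;

import android.os.Bundle;

import android.os.Environment;

import android.support.v4.app.ActivityCompat; // androidx.core.app.ActivityCompat in AndroidX projects

import android.util.Log;

import android.view.Surface;

import android.view.TextureView;

import android.view.View;

import android.view.View.OnClickListener;

import android.widget.Button;

import android.widget.RelativeLayout;

import android.widget.SeekBar;

import android.widget.Toast;

import java.io.IOException;

import java.io.InputStream;

import java.nio.ByteBuffer;

import java.nio.ByteOrder;

import java.nio.FloatBuffer;

import java.nio.ShortBuffer;

import java.util.Arrays;

import javax.microedition.khronos.egl.EGLConfig;

import javax.microedition.khronos.opengles.GL10;

public class GLViewMediaActivity extends Activity implements TextureView.SurfaceTextureListener, GLSurfaceView.Renderer, SurfaceTexture.OnFrameAvailableListener {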

public static final String videoPath = Environment.getExternalStorageDirectory()+"/live.mp4";

public static final String TAG = "GLViewMediaActivity";

private static float squareCoords[] = {

-1.0f, 1.0f, // top left

-1.0f, -1.0f, // bottom left

1.0f, -1.0f, // bottom right

1.0f, 1.0f // top right

};

private static short drawOrder[] = {0, 1, 2, 0, 2, 3}; // index order: two triangles that form the full-screen quad

private float textureCoords[] = {

0.0f, 1.0f, 0.0f, 1.0f,

0.0f, 0.0f, 0.0f, 1.0f,

1.0f, 0.0f, 0.0f, 1.0f,

1.0f, 1.0f, 0.0f, 1.0f

};

private int[] textures = new int[1];

private int width, height;

private int shaderProgram;

private FloatBuffer vertexBuffer;

private FloatBuffer textureBuffer;

private ShortBuffer drawListBuffer;

private float[] videoTextureTransform = new float[16];

private SurfaceTexture videoTexture;

private GLSurfaceView glView;

private Context context;

private RelativeLayout previewLayout = null;

private boolean frameAvailable = false;

int textureParamHandle;

int textureCoordinateHandle;

int positionHandle;

int textureTranformHandle;

public int mRatio;

public float ratio=0.5f;

public int mColorFlag=0;

public int xyFlag=0;

TextureView mPreviewView;

CameraCaptureSession mSession;

CaptureRequest.Builder mPreviewBuilder;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main_1);

context = this;

glView = new GLSurfaceView(this);

previewLayout = (RelativeLayout)findViewById(R.id.previewLayout);

//RelativeLayout.LayoutParams layoutParams = new RelativeLayout.LayoutParams(640,480 );

previewLayout.addView(glView);//, layoutParams);

mPreviewView = (TextureView) findViewById(R.id.id_textureview);

mPreviewView.setSurfaceTextureListener(this);

glView.setEGLContextClientVersion(2);

glView.setRenderer(this);

//glView.setRenderMode(GLSurfaceView.RENDERMODE_WHEN_DIRTY);

SeekBar seekBar = (SeekBar) findViewById(R.id.id_seekBar);

seekBar.setOnSeekBarChangeListener(new SeekBar.OnSeekBarChangeListener() {

@Override

public void onProgressChanged(SeekBar seekBar, int progress, boolean fromUser) {

// TODO Auto-generated method stub

ratio = progress/100.0f; // blend factor between the sticker texture and the camera's external texture

}

@Override

public void onStartTrackingTouch(SeekBar seekBar) {

// TODO Auto-generated method stub

}

@Override

public void onStopTrackingTouch(SeekBar seekBar) {

// TODO Auto-generated method stub

}

});

Button btn_color = (Button)findViewById(R.id.btn_color);

Button btn_mirror = (Button)findViewById(R.id.btn_mirror);

btn_color.setOnClickListener(new OnClickListener() {

@Override

public void onClick(View v) {

// TODO Auto-generated method stub

// cycle to the next filter type

if(mColorFlag == 0) {

mColorFlag = 7;

Toast.makeText(GLViewMediaActivity.this, "Saturation adjust!", Toast.LENGTH_SHORT).show();

}else if(mColorFlag == 7) {

mColorFlag = 1;

Toast.makeText(GLViewMediaActivity.this, "Gray Color!", Toast.LENGTH_SHORT).show();

}else if(mColorFlag == 1) {

mColorFlag = 2;

Toast.makeText(GLViewMediaActivity.this, "Warm Color!", Toast.LENGTH_SHORT).show();

}else if(mColorFlag == 2){

mColorFlag = 3;

Toast.makeText(GLViewMediaActivity.this, "Cool Color!", Toast.LENGTH_SHORT).show();

}else if(mColorFlag == 3){

mColorFlag = 4;

Toast.makeText(GLViewMediaActivity.this, "Amplify!", Toast.LENGTH_SHORT).show();

}else if(mColorFlag == 4){

mColorFlag = 5;

Toast.makeText(GLViewMediaActivity.this, "Blur!", Toast.LENGTH_SHORT).show();

}else if(mColorFlag == 5){

mColorFlag = 6;

Toast.makeText(GLViewMediaActivity.this, "Beauty!", Toast.LENGTH_SHORT).show();

}else if(mColorFlag ==6){

mColorFlag = 0;

Toast.makeText(GLViewMediaActivity.this, "Original Color!", Toast.LENGTH_SHORT).show();

}

}

});

btn_mirror.setOnClickListener(new OnClickListener() {

@Override

public void onClick(View v) {

// TODO Auto-generated method stub

// cycle through no mirror, X mirror and Y mirror

if(xyFlag == 0) {

Toast.makeText(GLViewMediaActivity.this, "X Mirror!", Toast.LENGTH_SHORT).show();

xyFlag = 1;

}else if(xyFlag == 1){

xyFlag = 2;

Toast.makeText(GLViewMediaActivity.this, "Y Mirror!", Toast.LENGTH_SHORT).show();

}else if(xyFlag == 2) {

xyFlag = 0;

Toast.makeText(GLViewMediaActivity.this, "Normal!", Toast.LENGTH_SHORT).show();

}

}

});

}

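@Override

protected void onResume() {

super.onResume();

glView.onResume(); // GLSurfaceView must be told when the activity resumes

}

@Override

protected void onPause() {

glView.onPause(); // pause the GL rendering thread

super.onPause();

}

@Override

protected void onDestroy() {

if (mCameraDevice != null) {

mCameraDevice.close(); // release the camera so other apps can use it

mCameraDevice = null;

}

super.onDestroy();

}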

@Override

public void onSurfaceTextureAvailable(SurfaceTexture surface, int width, int height) {

CameraManager cameraManager = (CameraManager) getSystemService(CAMERA_SERVICE);

try {

Log.i(TAG, "onSurfaceTextureAvailable: width = " + width + ", height = " + height);

String[] CameraIdList = cameraManager.getCameraIdList();

CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(CameraIdList[0]);

characteristics.get(CameraCharacteristics.INFO_SUPPORTED_HARDWARE_LEVEL);

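// Android 6.0+ runtime permission check. This demo assumes CAMERA was already granted and simply returns otherwise; it does not request the permission.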

if (ActivityCompat.checkSelfPermission(this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED) {

return;

}

//startCodec();

cameraManager.openCamera(CameraIdList[0], mCameraDeviceStateCallback, null);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onSurfaceTextureSizeChanged(SurfaceTexture surface, int width, int height) {}

@Override

public boolean onSurfaceTextureDestroyed(SurfaceTexture surface) {

return false;

}

@Override

public void onSurfaceTextureUpdated(SurfaceTexture surface) {}

CameraDevice mCameraDevice;

private CameraDevice.StateCallback mCameraDeviceStateCallback = new CameraDevice.StateCallback() {

@Override

public void onOpened(CameraDevice camera) {

Log.i(TAG, " CameraDevice.StateCallback onOpened ");

try {

mCameraDevice = camera;

startPreview(camera);

} catch (CameraAccessException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

@Override

public void onDisconnected(CameraDevice camera) {

if (null != mCameraDevice) {

mCameraDevice.close();

GLViewMediaActivity.this.mCameraDevice = null;

}

}

@Override

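public void onError(CameraDevice camera, int error) {

Log.e(TAG, "CameraDevice error: " + error);

camera.close(); // a device in the error state should be closed

mCameraDevice = null;

}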

};

private void startPreview(CameraDevice camera) throws CameraAccessException {

SurfaceTexture texture = mPreviewView.getSurfaceTexture();

texture.setDefaultBufferSize(mPreviewView.getWidth(), mPreviewView.getHeight());

Surface surface = new Surface(texture); // TextureView -> SurfaceTexture -> Surface

Surface surface0 = new Surface(videoTexture); // Surface backed by videoTexture, which setupTexture() creates from textures[0] on the GL thread; this assumes onSurfaceCreated() has already run, otherwise videoTexture is still null here

Log.i(TAG, " startPreview ");

mPreviewBuilder = camera.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW); // preview template (TEMPLATE_STILL_CAPTURE is for still shots); a CameraAccessException propagates to the caller, since startPreview() declares it

// Add the preview output Surfaces: camera image -> Surface

mPreviewBuilder.addTarget(surface);

mPreviewBuilder.addTarget(surface0);

camera.createCaptureSession(Arrays.asList(surface, surface0), mSessionStateCallback, null);

}

// 1. CameraCaptureSession.StateCallback

private CameraCaptureSession.StateCallback mSessionStateCallback = new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(CameraCaptureSession session) {

try {

Log.i(TAG, " onConfigured ");

//session.capture(mPreviewBuilder.build(), mSessionCaptureCallback, mHandler);

mSession = session;

// continuous autofocus

mPreviewBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

// auto-exposure, firing the flash automatically when needed

mPreviewBuilder.set(CaptureRequest.CONTROL_AE_MODE, CaptureRequest.CONTROL_AE_MODE_ON_AUTO_FLASH);

//int rotation = getWindowManager().getDefaultDisplay().getRotation();

//mPreviewBuilder.set(CaptureRequest.JPEG_ORIENTATION, ORIENTATIONS.get(rotation));

session.setRepeatingRequest(mPreviewBuilder.build(), null, null); // no CaptureCallback is passed, so mSessionCaptureCallback below is never invoked

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onConfigureFailed(CameraCaptureSession session) {}

};

int callback_time;

// 2. CameraCaptureSession.CaptureCallback

private CameraCaptureSession.CaptureCallback mSessionCaptureCallback =new CameraCaptureSession.CaptureCallback() {

@Override

public void onCaptureCompleted(CameraCaptureSession session, CaptureRequest request, TotalCaptureResult result) {

//Toast.makeText(GLViewMediaActivity.this, "picture success!", Toast.LENGTH_SHORT).show();

callback_time++;

Log.i(TAG, " CaptureCallback = "+callback_time);

}

@Override

public void onCaptureFailed(CameraCaptureSession session, CaptureRequest request, CaptureFailure failure) {

// onCaptureFailed reports a failed capture; onCaptureProgressed, used here before, only delivers partial results

Toast.makeText(GLViewMediaActivity.this, "picture failed!", Toast.LENGTH_SHORT).show();

}

};

public int initTexture(int drawableId)

{

// generate a texture ID

int[] textures = new int[1];

GLES20.glGenTextures

(

1, // number of texture IDs to generate

textures, // array that receives the IDs

0 // offset into the array

);

int textureId = textures[0];

Log.i(TAG, " initTexture textureId = " + textureId);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId);

GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MIN_FILTER,GLES20.GL_NEAREST);

GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D,GLES20.GL_TEXTURE_MAG_FILTER,GLES20.GL_LINEAR);

GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_WRAP_S,GLES20.GL_CLAMP_TO_EDGE);

GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_WRAP_T,GLES20.GL_CLAMP_TO_EDGE);

// load the image

InputStream is = this.getResources().openRawResource(drawableId);

Bitmap bitmapTmp;

try {

bitmapTmp = BitmapFactory.decodeStream(is);

} finally {

try {

is.close();

}

catch(IOException e) {

e.printStackTrace();

}

}

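// upload the bitmap into the texture

GLUtils.texImage2D

(

GLES20.GL_TEXTURE_2D, // texture target: GL_TEXTURE_2D for an ordinary 2D image

0, // mipmap level; 0 is the base image (other levels hold mipmaps)

bitmapTmp, // texture image

0 // border; must be 0 in OpenGL ES

);

bitmapTmp.recycle(); // the bitmap can be released once it has been uploaded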

return textureId;

}

int textureIdOne;

int textureHandle;

@Override

public void onSurfaceCreated(GL10 gl, EGLConfig config) {

setupGraphics();

setupVertexBuffer();

setupTexture();

textureIdOne= initTexture(R.drawable.bg); // create the sticker (overlay) texture

}

@Override

public void onSurfaceChanged(GL10 gl, int width, int height) {

this.width = width;

this.height = height;

GLES20.glViewport(0, 0, width, height);

setSize(width, height); //根据高、宽设置模型/视图/投影矩阵

}

@Override

public void onDrawFrame(GL10 gl) {

synchronized (this) {

if (frameAvailable) {

videoTexture.updateTexImage(); // latch the newest camera frame into the GL_TEXTURE_EXTERNAL_OES texture; only then does drawing show the new image

videoTexture.getTransformMatrix(videoTextureTransform); // texture-coordinate transform for the latched frame

frameAvailable = false;

}

}

GLES20.glClearColor(0.0f, 0.0f, 0.0f, 0.0f); // set the clear color

GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT | GLES20.GL_DEPTH_BUFFER_BIT);

// GL_COLOR_BUFFER_BIT clears the color buffer to the clear color

// GL_DEPTH_BUFFER_BIT clears the depth buffer, setting every pixel's depth to the maximum (normally the far clipping plane)

drawTexture();

}

@Override

public void onFrameAvailable(SurfaceTexture surfaceTexture) {

synchronized (this) {

frameAvailable = true;

}

}

private float[] mViewMatrix=new float[16];

private float[] mProjectMatrix=new float[16];

private float[] mModelMatrix=new float[16];

private float[] mModelMatrix0=new float[16];

private float[] matrix=new float[16];

private float[] matrix0=new float[16];

private int gHWidth;

private int gHHeight;

public void setSize(int width,int height){

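float aspect=(float)width/height; // local aspect ratio (not the blend-factor field ratio)

// projection matrix: the view frustum

Matrix.frustumM(mProjectMatrix, 0, -aspect, aspect, -1, 1, 1, 3);

// view matrix: eye position, look-at target, up vector

Matrix.setLookAtM(mViewMatrix, 0,

0.0f, 0.0f, 1.0f, //0f, 0.0f, 0.0f,

0.0f, 0.0f, 0.0f, //0.0f, 0.0f,-1.0f,

0f, 1.0f, 0.0f);

// model matrices: the object's own position and orientation

Matrix.setIdentityM(mModelMatrix,0);

Matrix.setIdentityM(mModelMatrix0,0);

//Matrix.scaleM(mModelMatrix,0,2,2,2);

Matrix.multiplyMM(matrix,0,mProjectMatrix,0,mViewMatrix,0); // projection x view

Matrix.multiplyMM(matrix0,0,mProjectMatrix,0,mViewMatrix,0);

// Note: the two products above are replaced straight away. flip() scales the identity

// model matrices in place and returns them, so the shader receives plain mirror matrices

// (x scaled by -1, or y scaled by -1); the quad is already in NDC, so no projection is needed.

matrix = flip(mModelMatrix, true, false);

matrix0 = flip(mModelMatrix0, false, true);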

}

public static float[] rotate(float[] m,float angle){

Matrix.rotateM(m,0,angle,0,0,1);

return m;

}

// mirror: scale x and/or y by -1

public float[] flip(float[] m,boolean x,boolean y){

if(x||y){

Matrix.scaleM(m,0,x?-1:1,y?-1:1,1);

}

return m;

}

private void setupGraphics() {

final String vertexShader = HelpUtils.readTextFileFromRawResource(context, R.raw.vetext_sharder);

final String fragmentShader = HelpUtils.readTextFileFromRawResource(context, R.raw.fragment_sharder);

final int vertexShaderHandle = HelpUtils.compileShader(GLES20.GL_VERTEX_SHADER, vertexShader);

final int fragmentShaderHandle = HelpUtils.compileShader(GLES20.GL_FRAGMENT_SHADER, fragmentShader);

shaderProgram = HelpUtils.createAndLinkProgram(vertexShaderHandle, fragmentShaderHandle,

new String[]{"texture", "vPosition", "vTexCoordinate", "textureTransform"});

GLES20.glUseProgram(shaderProgram);

textureParamHandle = GLES20.glGetUniformLocation(shaderProgram, "texture"); // external texture holding the camera image

textureCoordinateHandle = GLES20.glGetAttribLocation(shaderProgram, "vTexCoordinate"); // per-vertex texture coordinate

positionHandle = GLES20.glGetAttribLocation(shaderProgram, "vPosition"); // vertex position

textureTranformHandle = GLES20.glGetUniformLocation(shaderProgram, "textureTransform");

textureHandle = GLES20.glGetUniformLocation(shaderProgram, "texture0"); // location of the sticker texture's sampler uniform

mRatio = GLES20.glGetUniformLocation(shaderProgram, "mratio"); // blend factor

// mWidth and mHeight are compile-time constants (640.0 x 480.0) in the fragment shader, not

// uniforms, so glGetUniformLocation() returns -1 for them and glUniform* calls are silently

// ignored (width and height are also still 0 here on the first call, because onSurfaceChanged()

// runs after onSurfaceCreated()). To use the real view size, declare them as

// "uniform highp float" in the shader and set them with glUniform1f() in onSurfaceChanged().

}

private void setupVertexBuffer() {

// Draw list buffer

ByteBuffer dlb = ByteBuffer.allocateDirect(drawOrder.length * 2); // 2 bytes per short index

dlb.order(ByteOrder.nativeOrder()); // use the platform's native byte order

drawListBuffer = dlb.asShortBuffer();

drawListBuffer.put(drawOrder);

drawListBuffer.position(0);

// Vertex positions

ByteBuffer bb = ByteBuffer.allocateDirect(squareCoords.length * 4); // 4 bytes per float

bb.order(ByteOrder.nativeOrder()); // use the platform's native byte order

vertexBuffer = bb.asFloatBuffer();

vertexBuffer.put(squareCoords);

vertexBuffer.position(0);

// Texture coordinates

ByteBuffer texturebb = ByteBuffer.allocateDirect(textureCoords.length * 4); // 4 bytes per float

texturebb.order(ByteOrder.nativeOrder()); // use the platform's native byte order

textureBuffer = texturebb.asFloatBuffer();

textureBuffer.put(textureCoords);

textureBuffer.position(0);

}

private void setupTexture() {

// Generate the actual texture

GLES20.glActiveTexture(GLES20.GL_TEXTURE0); // activate texture unit 0

GLES20.glGenTextures(1, textures, 0); // generate one texture id

checkGlError("Texture generate");

GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textures[0]);

// Bind the texture id to the active texture unit. A unit can hold textures of several targets, e.g. GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_CUBE_MAP and GLES11Ext.GL_TEXTURE_EXTERNAL_OES (plus GLES30.GL_TEXTURE_3D on OpenGL ES 3.0); OpenGL ES has no 1D textures

checkGlError("Texture bind");

videoTexture = new SurfaceTexture(textures[0]); // create a SurfaceTexture backed by this texture id

videoTexture.setOnFrameAvailableListener(this); // notified each time the camera delivers a new frame

}

private void drawTexture() {

int mHProjMatrix=GLES20.glGetUniformLocation(shaderProgram,"uProjMatrix");

GLES20.glUniformMatrix4fv(mHProjMatrix,1,false,matrix,0);

int mHProjMatrix0=GLES20.glGetUniformLocation(shaderProgram,"uProjMatrix0");

GLES20.glUniformMatrix4fv(mHProjMatrix0,1,false,matrix0,0);

int mXyFlag = GLES20.glGetUniformLocation(shaderProgram, "xyFlag"); // mirror type (x or y): the shader left-multiplies the vertex position by the matching matrix, e.g. uProjMatrix * vPosition

GLES20.glUniform1i(mXyFlag, xyFlag);

int mColorFlagHandle = GLES20.glGetUniformLocation(shaderProgram, "colorFlag"); // filter type: saturation / grayscale / warm-cool tone / magnifier / blur / beauty / texture blending

GLES20.glUniform1i(mColorFlagHandle, mColorFlag);

// Vertex attributes typically include position, color, normal and texture coordinates

GLES20.glEnableVertexAttribArray(positionHandle); // enable the attribute array for vertex positions

GLES20.glVertexAttribPointer(positionHandle, 2, GLES20.GL_FLOAT, false, 0, vertexBuffer); // positions: 2 floats per vertex, tightly packed, read from vertexBuffer

GLES20.glActiveTexture(GLES20.GL_TEXTURE0);

GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textures[0]); // camera image texture on unit 0

GLES20.glUniform1i(textureParamHandle, 0); // sampler "texture" reads unit 0

GLES20.glActiveTexture(GLES20.GL_TEXTURE1);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureIdOne); // overlay image texture on unit 1

GLES20.glUniform1i(textureHandle, 1); // sampler "texture0" reads unit 1

GLES20.glEnableVertexAttribArray(textureCoordinateHandle);

GLES20.glVertexAttribPointer(textureCoordinateHandle, 4, GLES20.GL_FLOAT, false, 0, textureBuffer); // texture coordinates: 4 floats per vertex

GLES20.glUniformMatrix4fv(textureTranformHandle, 1, false, videoTextureTransform, 0); // transform matrix for the GL_TEXTURE_EXTERNAL_OES texture

GLES20.glUniform1f(mRatio, ratio); // texture blend factor

GLES20.glDrawElements(GLES20.GL_TRIANGLE_STRIP, drawOrder.length, GLES20.GL_UNSIGNED_SHORT, drawListBuffer); // draw the indexed vertices; the fragment shader then computes each pixel

GLES20.glDisableVertexAttribArray(positionHandle);

GLES20.glDisableVertexAttribArray(textureCoordinateHandle);

}

public void checkGlError(String op) {

int error;

while ((error = GLES20.glGetError()) != GLES20.GL_NO_ERROR) {

Log.e("SurfaceTest", op + ": glError " + android.opengl.GLU.gluErrorString(error)); // GLU names GL error codes; GLUtils.getEGLErrorString() only names EGL error codes

}

}

}

Demo results:

Texture blending, magnifier, blur, warm tone, cool tone, beauty filter, and mirror.
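The mirror effect comes from `flip()`, which is called in `setSize()`. `Matrix.scaleM()` with a factor of -1 negates x (or y) for every vertex the shader transforms with `uProjMatrix * vPosition`.

`android.opengl.Matrix` stores 4×4 matrices as 16-float column-major arrays, and `multiplyMM(result, 0, lhs, 0, rhs, 0)` computes lhs × rhs. The plain-Java sketch below uses a hypothetical `Mat4` class, which is not an Android API. It reproduces just that convention so it can run off-device. It shows two things:

- The mirror maps the top-left vertex (-1, 1) to (1, 1).
- In a product such as view × model, the model matrix (here the mirror) acts on the vertex first.

If the projection and view matrices from `setSize()` were meant to be kept, the mirrored model matrix would therefore go on the right-hand side of `multiplyMM()`.

```java
import java.util.Arrays;

// Hypothetical helper (not an Android API) that follows android.opengl.Matrix's
// column-major layout: element (row r, column c) is stored at index c * 4 + r.
final class Mat4 {
    static float get(float[] m, int r, int c) {
        return m[c * 4 + r];
    }

    static float[] identity() {
        float[] m = new float[16];
        for (int i = 0; i < 4; i++) m[i * 4 + i] = 1f;
        return m;
    }

    // Same effect as Matrix.scaleM(m, 0, x, y, z): scales columns 0, 1 and 2 in place.
    static void scale(float[] m, float x, float y, float z) {
        for (int r = 0; r < 4; r++) {
            m[r] *= x;
            m[4 + r] *= y;
            m[8 + r] *= z;
        }
    }

    // Same result as Matrix.multiplyMM(out, 0, lhs, 0, rhs, 0): out = lhs * rhs.
    static float[] multiply(float[] lhs, float[] rhs) {
        float[] out = new float[16];
        for (int c = 0; c < 4; c++) {
            for (int r = 0; r < 4; r++) {
                float sum = 0f;
                for (int k = 0; k < 4; k++) sum += get(lhs, r, k) * get(rhs, k, c);
                out[c * 4 + r] = sum;
            }
        }
        return out;
    }

    // Same result as Matrix.multiplyMV(out, 0, m, 0, v, 0): out = m * v.
    static float[] transform(float[] m, float[] v) {
        float[] out = new float[4];
        for (int r = 0; r < 4; r++) {
            for (int k = 0; k < 4; k++) out[r] += get(m, r, k) * v[k];
        }
        return out;
    }

    public static void main(String[] args) {
        float[] topLeft = {-1f, 1f, 0f, 1f}; // a quad corner (x, y, z, w)

        float[] model = identity();
        scale(model, -1f, 1f, 1f); // what flip(m, true, false) does
        System.out.println(Arrays.toString(transform(model, topLeft))); // [1.0, 1.0, 0.0, 1.0]

        float[] view = identity();
        view[12] = 0.5f; // stand-in view matrix: translate x by +0.5 (column 3, row 0)
        float[] viewModel = multiply(view, model); // model acts first, then view
        System.out.println(Arrays.toString(transform(viewModel, topLeft))); // [1.5, 1.0, 0.0, 1.0]
    }
}
```

Swapping the operands (`multiply(model, view)`) would translate first and then mirror, producing x = -0.5 instead of 1.5. Operand order is the usual source of "the image moved the wrong way" bugs when combining a mirror with other transforms.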
