Deploying and Scaling a Kubernetes Cluster with Rancher: Basics, Part 2

2017-07-12 23:03

This post continues from the previous one, "Deploying and Scaling a Kubernetes Cluster with Rancher: Basics, Part 1".

7. Configuring Redis with a ConfigMap

https://github.com/kubernetes/kubernetes.github.io/blob/master/docs/user-guide/configmap/redis/redis-config

redis-config

    maxmemory 2mb
    maxmemory-policy allkeys-lru

    # kubectl create configmap example-redis-config --from-file=./redis-config
    # kubectl get configmap example-redis-config -o yaml
    apiVersion: v1
    data:
      redis-config: |
        maxmemory 2mb
        maxmemory-policy allkeys-lru
    kind: ConfigMap
    metadata:
      creationTimestamp: 2017-07-12T13:27:56Z
      name: example-redis-config
      namespace: default
      resourceVersion: "45707"
      selfLink: /api/v1/namespaces/default/configmaps/example-redis-config
      uid: eab522fd-6705-11e7-94da-02672b869d7f

redis-pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: redis
    spec:
      containers:
      - name: redis
        image: kubernetes/redis:v1
        env:
        - name: MASTER
          value: "true"
        ports:
        - containerPort: 6379
        resources:
          limits:
            cpu: "0.1"
        volumeMounts:
        - mountPath: /redis-master-data
          name: data
        - mountPath: /redis-master
          name: config
      volumes:
        - name: data
          emptyDir: {}
        - name: config
          configMap:
            name: example-redis-config
            items:
            - key: redis-config
              path: redis.conf

    # kubectl create -f redis-pod.yaml
    pod "redis" created

    # kubectl exec -it redis redis-cli
    127.0.0.1:6379> config get maxmemory
    1) "maxmemory"
    2) "2097152"
    127.0.0.1:6379> config get maxmemory-policy
    1) "maxmemory-policy"
    2) "allkeys-lru"
    127.0.0.1:6379>
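The value 2097152 returned by config get maxmemory is the 2mb from redis-config expressed in bytes, since Redis reads the mb suffix as 1024*1024 bytes. A minimal shell sketch of that conversion follows; the helper name redis_mb_to_bytes is made up for illustration and handles only the mb suffix.

```shell
# Hypothetical helper: convert a Redis "<n>mb" size into bytes.
# Redis treats "mb" as 1024*1024 bytes (plain "m" would be 1,000,000 bytes).
redis_mb_to_bytes() {
  n=${1%mb}                    # strip the "mb" suffix, e.g. "2mb" -> "2"
  echo $(( n * 1024 * 1024 ))
}

redis_mb_to_bytes 2mb          # prints 2097152
```

This is only a quick sanity check that the pod picked up the mounted redis.conf rather than a default configuration.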

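8. Managing Kubernetes Objects with kubectl

    # kubectl run nginx --image nginx
    deployment "nginx" created

or

    # kubectl create deployment nginx --image nginx

Use kubectl create to create the objects defined in a configuration file:

    # kubectl create -f nginx.yaml

Delete the objects defined in two configuration files:

    # kubectl delete -f nginx.yaml -f redis.yaml

Update an object:

    # kubectl replace -f nginx.yaml

Process all object configuration files in the configs directory, creating new objects or patching existing ones:

    # kubectl apply -f configs/

Recursively process the object configuration files in subdirectories as well:

    # kubectl apply -R -f configs/

9. Deploying Stateless Applications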

9.1 Running a Stateless Application with a Deployment

deployment.yaml

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 2 # tells deployment to run 2 pods matching the template
      template: # create pods using pod definition in this template
        metadata:
          # unlike pod-nginx.yaml, the name is not included in the meta data as a unique name is
          # generated from the deployment name
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80

    # kubectl create -f https://k8s.io/docs/tasks/run-application/deployment.yaml

Note that Rancher 1.6.2 deploys Kubernetes 1.5.4, so the apiVersion in this file has to be changed to extensions/v1beta1. Kubernetes introduced apps/v1beta1.Deployment in 1.6 as the replacement for extensions/v1beta1.Deployment.
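The apiVersion change described above can be scripted instead of edited by hand. The following is a sketch only: it works on a trimmed copy of the manifest in a scratch directory, and the output file name deployment-1.5.yaml is an assumption chosen for illustration.

```shell
# Work in a scratch directory so nothing in the current directory is touched.
workdir=$(mktemp -d)

# A trimmed copy of the manifest (illustration only, not a complete Deployment).
cat > "$workdir/deployment.yaml" <<'EOF'
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
EOF

# Rewrite only the top-level apiVersion line for a Kubernetes 1.5.x cluster.
sed 's|^apiVersion: apps/v1beta1$|apiVersion: extensions/v1beta1|' \
  "$workdir/deployment.yaml" > "$workdir/deployment-1.5.yaml"

head -n 1 "$workdir/deployment-1.5.yaml"   # prints: apiVersion: extensions/v1beta1
```

With the full manifest saved locally, the rewritten file would then be passed to kubectl create -f in place of the upstream URL.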

Display information about the deployment:

    # kubectl describe deployment nginx-deployment
    # kubectl get pods -l app=nginx

Updating the deployment

deployment-update.yaml

This file is identical to deployment.yaml except for the image:

    image: nginx:1.8

    # kubectl apply -f deployment-update.yaml

After the update is applied, Kubernetes first creates the new pods, then stops and deletes the old ones:

    # kubectl get pods -l app=nginx
    # kubectl get pod nginx-deployment-148880595-9l07p -o yaml|grep image:
    - image: nginx:1.8
    image: nginx:1.8

The update succeeded.

Scale the application by increasing the number of replicas:

    # cp deployment-update.yaml deployment-scale.yaml

Edit deployment-scale.yaml and set:

    replicas: 4

    # kubectl apply -f deployment-scale.yaml
    # kubectl get pods -l app=nginx
    NAME                               READY     STATUS    RESTARTS   AGE
    nginx-deployment-148880595-1tb5m   1/1       Running   0          26s
    nginx-deployment-148880595-9l07p   1/1       Running   0          10m
    nginx-deployment-148880595-lc113   1/1       Running   0          26s
    nginx-deployment-148880595-z8l51   1/1       Running   0          10m
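To confirm the scale-out without counting rows by eye, the pod listing can be piped through a small filter. This is a rough sketch: count_running is a hypothetical helper, and it assumes the column layout shown in the listing above, with STATUS as the third column.

```shell
# Hypothetical helper: count pods whose STATUS column is "Running".
# Reads `kubectl get pods` style output on stdin and skips the header row.
count_running() {
  awk 'NR > 1 && $3 == "Running" { n++ } END { print n + 0 }'
}

# Sample output copied from the listing above.
count_running <<'EOF'
NAME                               READY     STATUS    RESTARTS   AGE
nginx-deployment-148880595-1tb5m   1/1       Running   0          26s
nginx-deployment-148880595-9l07p   1/1       Running   0          10m
nginx-deployment-148880595-lc113   1/1       Running   0          26s
nginx-deployment-148880595-z8l51   1/1       Running   0          10m
EOF
# prints 4
```

Against a live cluster the same helper would be fed from kubectl get pods -l app=nginx, and the result compared with the replicas value in deployment-scale.yaml.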

Delete the deployment:

    # kubectl delete deployment nginx-deployment
    deployment "nginx-deployment" deleted

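The preferred way to create a replicated application is a Deployment, which in turn uses a ReplicaSet. Before Deployments and ReplicaSets were added to Kubernetes, replicated applications were configured with a ReplicationController.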

9.2 Example: Deploying a PHP Guestbook Backed by Redis

Step 1: Start a Redis master service

redis-master-deployment.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: redis-master
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: redis
            role: master
            tier: backend
        spec:
          containers:
          - name: master
            image: gcr.io/google_containers/redis:e2e  # or just image: redis
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
            ports:
            - containerPort: 6379

    # kubectl create -f redis-master-deployment.yaml

redis-master-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: redis-master
      labels:
        app: redis
        role: master
        tier: backend
    spec:
      ports:
      - port: 6379
        targetPort: 6379
      selector:
        app: redis
        role: master
        tier: backend

    # kubectl create -f redis-master-service.yaml
    # kubectl get services redis-master
    NAME           CLUSTER-IP    EXTERNAL-IP   PORT(S)    AGE
    redis-master   10.43.10.96   <none>        6379/TCP   2m

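targetPort is the port on which the backend container receives traffic; port is the port that other pods use to reach the service.

Kubernetes supports two primary modes of discovering a service: environment variables and DNS.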

Check the cluster DNS:

    # kubectl --namespace=kube-system get rs -l k8s-app=kube-dns
    NAME                  DESIRED   CURRENT   READY     AGE
    kube-dns-1208858260   1         1         1         1d
    # kubectl get pods -n=kube-system -l k8s-app=kube-dns
    NAME                        READY     STATUS    RESTARTS   AGE
    kube-dns-1208858260-c8dbv   4/4       Running   8          1d

Step 2: Start a Redis slave service

redis-slave.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: redis-slave
      labels:
        app: redis
        role: slave
        tier: backend
    spec:
      ports:
      - port: 6379
      selector:
        app: redis
        role: slave
        tier: backend
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: redis-slave
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            app: redis
            role: slave
            tier: backend
        spec:
          containers:
          - name: slave
            image: gcr.io/google_samples/gb-redisslave:v1
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
            env:
            - name: GET_HOSTS_FROM
              value: dns
              # If your cluster config does not include a dns service, then to
              # instead access an environment variable to find the master
              # service's host, comment out the 'value: dns' line above, and
              # uncomment the line below:
              # value: env
            ports:
            - containerPort: 6379

    # kubectl create -f redis-slave.yaml
    service "redis-slave" created
    deployment "redis-slave" created
    # kubectl get deployments -l app=redis
    NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    redis-master   1         1         1            1           35m
    redis-slave    2         2         2            2           5m
    # kubectl get services -l app=redis
    NAME           CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    redis-master   10.43.10.96     <none>        6379/TCP   31m
    redis-slave    10.43.231.209   <none>        6379/TCP   6m
    # kubectl get pods -l app=redis
    NAME                           READY     STATUS    RESTARTS   AGE
    redis-master-343230949-sc78h   1/1       Running   0          37m
    redis-slave-132015689-rc1xf    1/1       Running   0          7m
    redis-slave-132015689-vb0vl    1/1       Running   0          7m

Step 3: Start the guestbook frontend

frontend.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: frontend
      labels:
        app: guestbook
        tier: frontend
    spec:
      # if your cluster supports it, uncomment the following to automatically create
      # an external load-balanced IP for the frontend service.
      # type: LoadBalancer
      ports:
      - port: 80
      selector:
        app: guestbook
        tier: frontend
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: frontend
    spec:
      replicas: 3
      template:
        metadata:
          labels:
            app: guestbook
            tier: frontend
        spec:
          containers:
          - name: php-redis
            image: gcr.io/google-samples/gb-frontend:v4
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
            env:
            - name: GET_HOSTS_FROM
              value: dns
              # If your cluster config does not include a dns service, then to
              # instead access environment variables to find service host
              # info, comment out the 'value: dns' line above, and uncomment the
              # line below:
              # value: env
            ports:
            - containerPort: 80

    # kubectl create -f frontend.yaml
    service "frontend" created
    deployment "frontend" created
    # kubectl get services -l tier
    NAME           CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    frontend       10.43.39.188    <none>        80/TCP     3m
    redis-master   10.43.10.96     <none>        6379/TCP   47m
    redis-slave    10.43.231.209   <none>        6379/TCP   22m
    # kubectl get deployments -l tier
    NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    frontend       3         3         3            3           3m
    redis-master   1         1         1            1           53m
    redis-slave    2         2         2            2           22m
    # kubectl get pods -l tier
    NAME                           READY     STATUS    RESTARTS   AGE
    frontend-88237173-b7x03        1/1       Running   0          13m
    frontend-88237173-qg6d7        1/1       Running   0          13m
    frontend-88237173-zb827        1/1       Running   0          13m
    redis-master-343230949-sc78h   1/1       Running   0          1h
    redis-slave-132015689-rc1xf    1/1       Running   0          32m
    redis-slave-132015689-vb0vl    1/1       Running   0          32m

guestbook.php

    <?php

    error_reporting(E_ALL);
    ini_set('display_errors', 1);

    require 'Predis/Autoloader.php';

    Predis\Autoloader::register();

    if (isset($_GET['cmd']) === true) {
      $host = 'redis-master';
      if (getenv('GET_HOSTS_FROM') == 'env') {
        $host = getenv('REDIS_MASTER_SERVICE_HOST');
      }
      header('Content-Type: application/json');
      if ($_GET['cmd'] == 'set') {
        $client = new Predis\Client([
          'scheme' => 'tcp',
          'host'   => $host,
          'port'   => 6379,
        ]);

        $client->set($_GET['key'], $_GET['value']);
        print('{"message": "Updated"}');
      } else {
        $host = 'redis-slave';
        if (getenv('GET_HOSTS_FROM') == 'env') {
          $host = getenv('REDIS_SLAVE_SERVICE_HOST');
        }
        $client = new Predis\Client([
          'scheme' => 'tcp',
          'host'   => $host,
          'port'   => 6379,
        ]);

        $value = $client->get($_GET['key']);
        print('{"data": "' . $value . '"}');
      }
    } else {
      phpinfo();
    } ?>

The redis-slave container has a /run.sh script:

    if [[ ${GET_HOSTS_FROM:-dns} == "env" ]]; then
      redis-server --slaveof ${REDIS_MASTER_SERVICE_HOST} 6379
    else
      redis-server --slaveof redis-master 6379
    fi

With GET_HOSTS_FROM set to dns, the name redis-master is resolved through kube-dns.
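Both guestbook.php and /run.sh make the same choice between the two discovery modes. The sketch below restates that logic in plain shell; the helper names service_host_var and resolve_host are hypothetical. The variable name follows the Kubernetes convention of upper-casing the service name and replacing dashes with underscores, as in REDIS_MASTER_SERVICE_HOST.

```shell
# Hypothetical helper: derive the environment variable name Kubernetes injects
# for a service's cluster IP, e.g. redis-master -> REDIS_MASTER_SERVICE_HOST.
service_host_var() {
  printf '%s_SERVICE_HOST\n' "$(printf '%s' "$1" | tr 'a-z-' 'A-Z_')"
}

# Hypothetical helper mirroring run.sh: read the injected variable when
# GET_HOSTS_FROM=env, otherwise return the service name for DNS lookup.
resolve_host() {
  service=$1
  if [ "${GET_HOSTS_FROM:-dns}" = "env" ]; then
    # printenv only sees exported variables, which is the case inside a pod.
    printenv "$(service_host_var "$service")"
  else
    printf '%s\n' "$service"
  fi
}

GET_HOSTS_FROM=dns resolve_host redis-master   # prints redis-master
```

The DNS mode is the simpler of the two because the service name is used as-is. The environment variables are only injected into pods that start after the service exists.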

There are two ways to reach the guestbook from outside the cluster: NodePort and LoadBalancer.

Update frontend.yaml and set type: NodePort:

    # kubectl apply -f frontend.yaml
    # kubectl get services -l tier
    NAME           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    frontend       10.43.39.188    <nodes>       80:31338/TCP   4h
    redis-master   10.43.10.96     <none>        6379/TCP       5h
    redis-slave    10.43.231.209   <none>        6379/TCP       5h

The frontend service now exposes port 31338 outside the cluster, and it can be reached through any cluster node:

http://172.30.30.217:31338/
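The URL above combines a node address with the node port from the PORT(S) column, where 80:31338/TCP reads as servicePort:nodePort/protocol. A minimal sketch of pulling the node port out of that value; node_port is a hypothetical helper and the node IP is the one used in this post.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value
# such as "80:31338/TCP" (servicePort:nodePort/protocol).
node_port() {
  ports=${1#*:}          # drop the service port: "31338/TCP"
  echo "${ports%%/*}"    # drop the protocol:     "31338"
}

# Node address taken from this post; any cluster node should work.
node_ip=172.30.30.217
echo "http://${node_ip}:$(node_port 80:31338/TCP)/"
# prints http://172.30.30.217:31338/
```

On a live cluster the port can also be read directly with kubectl get service frontend -o jsonpath='{.spec.ports[0].nodePort}', which avoids parsing the table output.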

Update frontend.yaml again and set type: LoadBalancer:

    # kubectl apply -f frontend.yaml

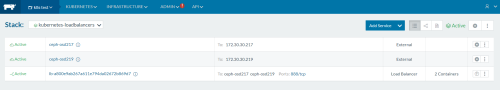

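When Kubernetes is deployed with Rancher, setting type to LoadBalancer makes the following entry appear in Rancher:

kubernetes-loadbalancers

The default external port is 80. It can be changed, and the number of Rancher LB containers can be adjusted as well.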

http://172.30.30.217:888/

http://172.30.30.219:888/

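10. Deploying Stateful Applications

StatefulSets are used to deploy stateful applications and distributed systems. Dynamically provisioned persistent storage must be in place before a stateful application is deployed.

10.1 StatefulSet Basics

Using a Ceph cluster as dynamically provisioned persistent storage for Kubernetes

10.2 Running a Single-Instance Stateful Application

10.3 Running a Replicated Stateful Application

10.4 Example: Deploying WordPress and MySQL

10.5 Example: Deploying Cassandra

10.6 Example: Deploying ZooKeeper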

References:

http://blog.kubernetes.io/2016/10/dynamic-provisioning-and-storage-in-kubernetes.html
