A log collection stack: logstash_forward + Flume + Elasticsearch + Kibana

2017-07-04 11:48

Summary: Notes on why we chose this architecture, plus the problems we ran into during load testing and how we solved them.

最开始架构定的是采用elk来做日志的收集,但是测试一段时间后,由于logstash的性能很差,对cpu和内存消耗很大,放弃了logstash。为什么没有直接使用flume的agent来收集日志,这主要是根据实际的需求,众所周知,flume对目录的收集无法针对文件的动态变化,在传完文件之后,将会修改文件的后缀,变为.COMPLETED,无论是收集应用日志还是系统日志,我们都不希望改变原有的日志文件,最终收集日志使用了用go开发的更轻量级的logstash_forward,logstash_forward功能比较单一,目前只能用来收集文件。

1.安装logstash-forwarder。

https://github.com/elasticsearch/logstash-forwarder

2.flume安装很简单,解压包即可。

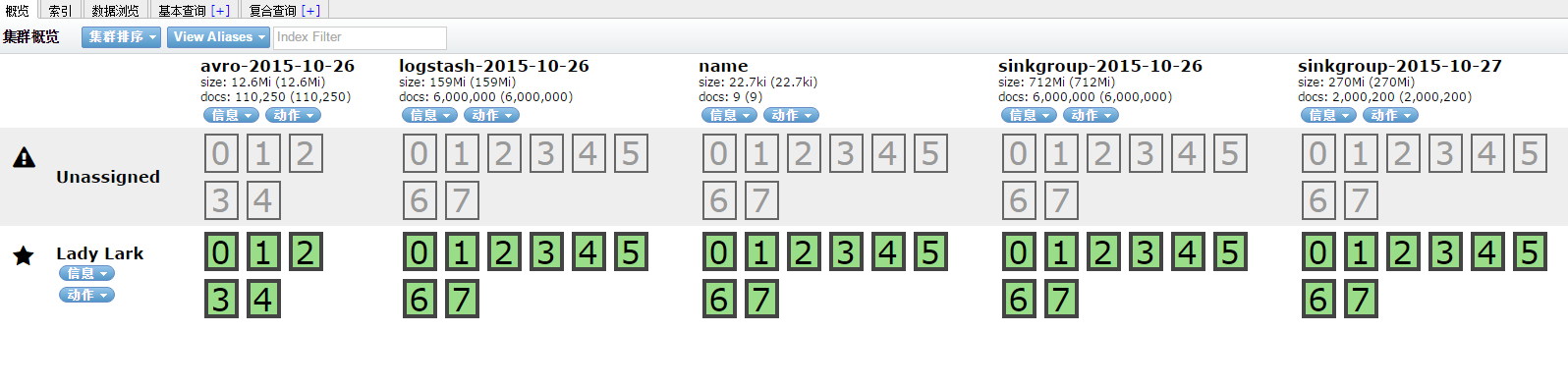

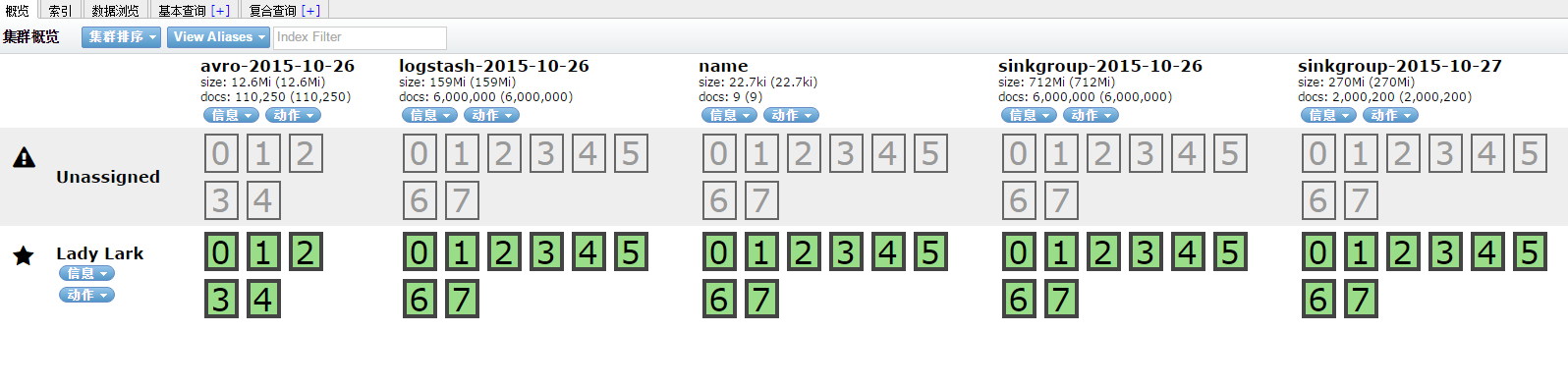

3.安装elasticsearch,测试只安装es单节点

下载https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-1.3.2.tar.gz

http://master:9200/_plugin/head/

4. 安装kibana

下载地址 : https://www.elastic.co/products/kibana

将kibana-4.1.1-linux-x64.tar.gz解压到apache或tomcat下,修改elasticsearch_url,

启动kibana,bin/kibana

页面:http://master:5601

5. 架构图

经测试,单台agent,入库速率可以达到约1.5w条/s。

在压测的过程中,agent批量发送的数据量大的时候,会导致flume OOM,调整flume JVM 启动参数

flume中心节点的配置:

参考文档:

http://blog.qiniu.com/archives/3928

http://mp.weixin.qq.com/s?__biz=MzA5OTAyNzQ2OA==&mid=207036526&idx=1&sn=b0de410e0d1026cd100ac2658e093160&scene=23&srcid=10228P1jGvZC20dC2FGAdoqh#rd

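The original plan was to use ELK for log collection. After a period of testing we dropped Logstash, because it performed poorly and consumed a lot of CPU and memory.

Why not collect the logs directly with a Flume agent? That came down to our actual requirements. Flume's directory collection (the spooling directory source) cannot follow files that are still changing, and once a file has been transferred it renames the file with a .COMPLETED suffix. Whether we are collecting application logs or system logs, we do not want the original log files to be modified.

For collection we therefore settled on logstash_forward (logstash-forwarder), a more lightweight tool written in Go. logstash_forward is fairly single-purpose: at present it can only collect from files.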

1. Install logstash-forwarder.

https://github.com/elasticsearch/logstash-forwarder

2.flume安装很简单,解压包即可。

3. Install Elasticsearch. For testing, only a single ES node is installed.

Download: https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-1.3.2.tar.gz

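Unpack the archive and look in the config directory:

```shell
tar zxf elasticsearch-1.3.2.tar.gz
cd elasticsearch-1.3.2/
cd config
```

You should see two files, elasticsearch.yml and logging.yml. Create them if they are missing. Edit elasticsearch.yml (`vi elasticsearch.yml`) and set the cluster name:

```yaml
cluster.name: elasticsearch
```

From the elasticsearch-1.3.2 directory, start Elasticsearch:

```shell
bin/elasticsearch
```

Install the elasticsearch-head plugin:

```shell
bin/plugin -install mobz/elasticsearch-head
```

The head page is then available at:

http://master:9200/_plugin/head/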
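Besides opening the head page, the node can be checked from a script. The following is a minimal sketch, assuming the node answers on `http://master:9200` as above; the helper names `cluster_health` and `is_usable` are made up for illustration. It calls Elasticsearch's `_cluster/health` endpoint and treats both `green` and `yellow` as usable, since a single-node test cluster typically reports `yellow` once an index has replicas configured that cannot be allocated.

```python
import json
from urllib.request import urlopen


def cluster_health(base_url="http://master:9200", timeout=5):
    """Fetch /_cluster/health from the node and return the parsed JSON."""
    with urlopen(f"{base_url}/_cluster/health", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def is_usable(health):
    """Return True for green or yellow; red means primary shards are missing."""
    return health.get("status") in ("green", "yellow")


# Usage against a running node (hostname is an assumption from the text):
#   health = cluster_health()
#   print(health["cluster_name"], health["status"], is_usable(health))
```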

4. Install Kibana

Download: https://www.elastic.co/products/kibana

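Extract kibana-4.1.1-linux-x64.tar.gz under Apache or Tomcat (Kibana 4 ships with its own web server, so any directory works), then set elasticsearch_url:

```shell
cd kibana-4.1.1-linux-x64
vi config/kibana.yml
```

```yaml
elasticsearch_url: "http://master:9200"
```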

Start Kibana:

```shell
bin/kibana
```

Web UI: http://master:5601

5. Architecture diagram

In testing, a single agent reached an ingest rate of roughly 15,000 events per second.
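To put that rate in capacity-planning terms, here is a small back-of-the-envelope sketch. The 15,000 events/s figure is the measured rate from the test; the average event size of 200 bytes is purely a hypothetical value for illustration and should be replaced with a number measured from your own logs.

```python
EVENTS_PER_SECOND = 15_000   # measured single-agent rate from the load test
AVG_EVENT_BYTES = 200        # hypothetical average log line size


def daily_volume(events_per_second, avg_event_bytes):
    """Return (events per day, raw gigabytes per day) at a sustained rate."""
    events_per_day = events_per_second * 86_400  # seconds in a day
    gigabytes_per_day = events_per_day * avg_event_bytes / 1e9
    return events_per_day, gigabytes_per_day


events, gigabytes = daily_volume(EVENTS_PER_SECOND, AVG_EVENT_BYTES)
print(f"{events:,} events/day, about {gigabytes:.1f} GB/day of raw log data")
```

This counts raw log bytes only; the space Elasticsearch needs after indexing will differ.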

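During load testing, when the agents sent large batches of data, Flume ran out of memory (OOM). We fixed this by adjusting the Flume JVM startup options in bin/flume-ng (`vi bin/flume-ng`):

```shell
JAVA_OPTS="-Xms2048m -Xmx2048m"
```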

Configuration of the central Flume node:

```properties
# The configuration file needs to define the sources,
# the channels and the sinks.
# Sources, channels and sinks are defined per agent,
# in this case called 'a1'
a1.sources = r1
a1.sinks = k1 k2
a1.channels = c1

# sinks group
a1.sinkgroups = g1

# For each one of the sources, the type is defined
a1.sources.r1.type = http
a1.sources.r1.bind = 192.168.137.118
a1.sources.r1.port = 5858

# The channel can be defined as follows.
a1.channels.c1.type = SPILLABLEMEMORY
a1.channels.c1.checkpointDir=/home/hadoop/.flume/channel1/file-channel/checkpoint
a1.channels.c1.dataDirs=/home/hadoop/.flume/channel1/file-channel/data
a1.channels.c1.keep-alive = 30

# Each sink's type must be defined
# k1 sink
a1.sinks.k1.channel = c1
a1.sinks.k1.type = avro
# connect to CollectorMainAgent
a1.sinks.k1.hostname = 192.168.137.119
a1.sinks.k1.port = 5858

# k2 sink
a1.sinks.k2.channel = c1
a1.sinks.k2.type = avro
# connect to CollectorBackupAgent
a1.sinks.k2.hostname = 192.168.137.120
a1.sinks.k2.port = 5858

a1.sinkgroups.g1.sinks = k1 k2

# load_balance type
a1.sinkgroups.g1.processor.type = load_balance
a1.sinkgroups.g1.processor.backoff = true
a1.sinkgroups.g1.processor.selector = ROUND_ROBIN

a1.sources.r1.channels = c1
```
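One way to smoke-test the HTTP source in this configuration is to post a few events by hand. The sketch below assumes the source uses Flume's default JSON handler (the configuration does not set a handler), which expects a JSON array of events, each with a `headers` map and a `body` string. The address comes from the `bind` and `port` settings above; the function names and the `host` header are made up for illustration.

```python
import json
from urllib.request import Request, urlopen

# bind address and port of source r1 in the configuration above
FLUME_URL = "http://192.168.137.118:5858"


def build_payload(lines, headers=None):
    """Encode log lines as the JSON array the default HTTP source handler expects."""
    events = [{"headers": dict(headers or {}), "body": line} for line in lines]
    return json.dumps(events).encode("utf-8")


def send_events(lines, headers=None, url=FLUME_URL, timeout=5):
    """POST the events to the Flume HTTP source and return the HTTP status code."""
    request = Request(
        url,
        data=build_payload(lines, headers),  # supplying data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urlopen(request, timeout=timeout) as response:
        return response.status


# Usage against a running agent (the header value is hypothetical):
#   send_events(["test line 1", "test line 2"], {"host": "web01"})
```

Sending a handful of events this way before starting the real agents makes it easier to tell source problems apart from sink or channel problems.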

References:

http://blog.qiniu.com/archives/3928

http://mp.weixin.qq.com/s?__biz=MzA5OTAyNzQ2OA==&mid=207036526&idx=1&sn=b0de410e0d1026cd100ac2658e093160&scene=23&srcid=10228P1jGvZC20dC2FGAdoqh#rd
