A few cases from running Kafka

2017-02-06 00:00


Environment:

Kafka version: 0.10.1.0

## Case 1

Symptoms:

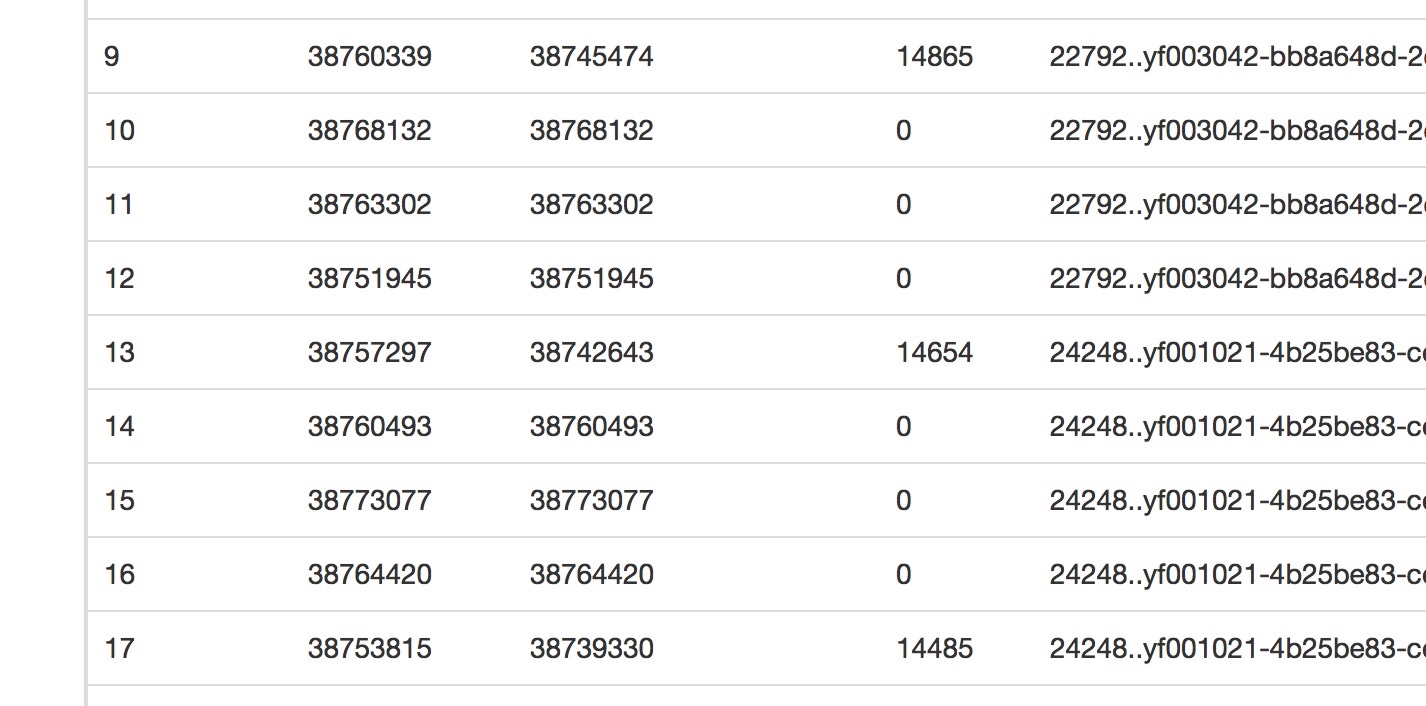

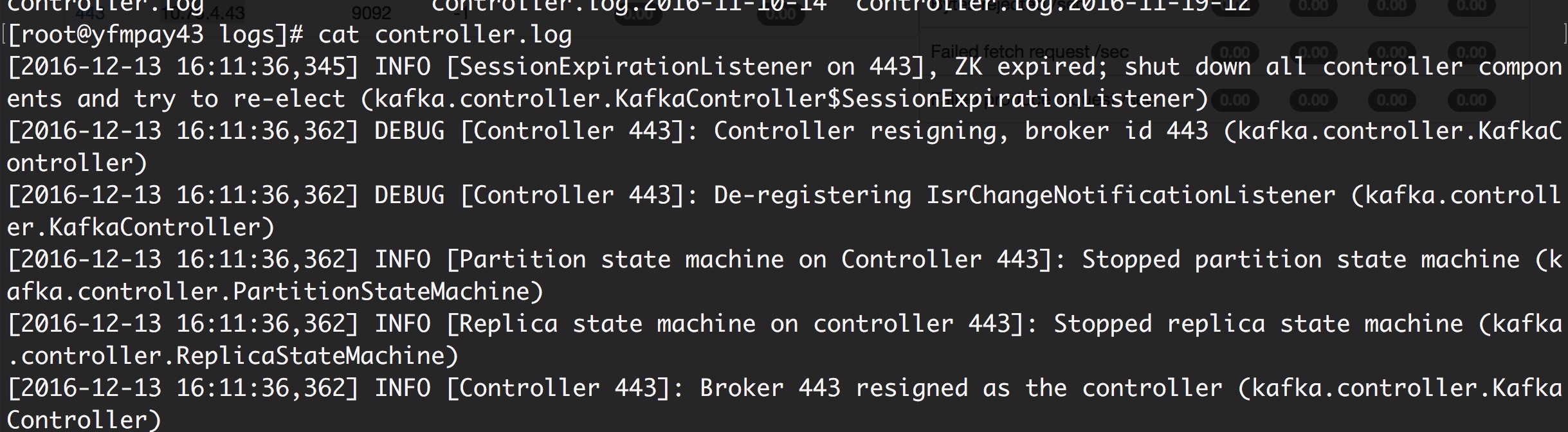

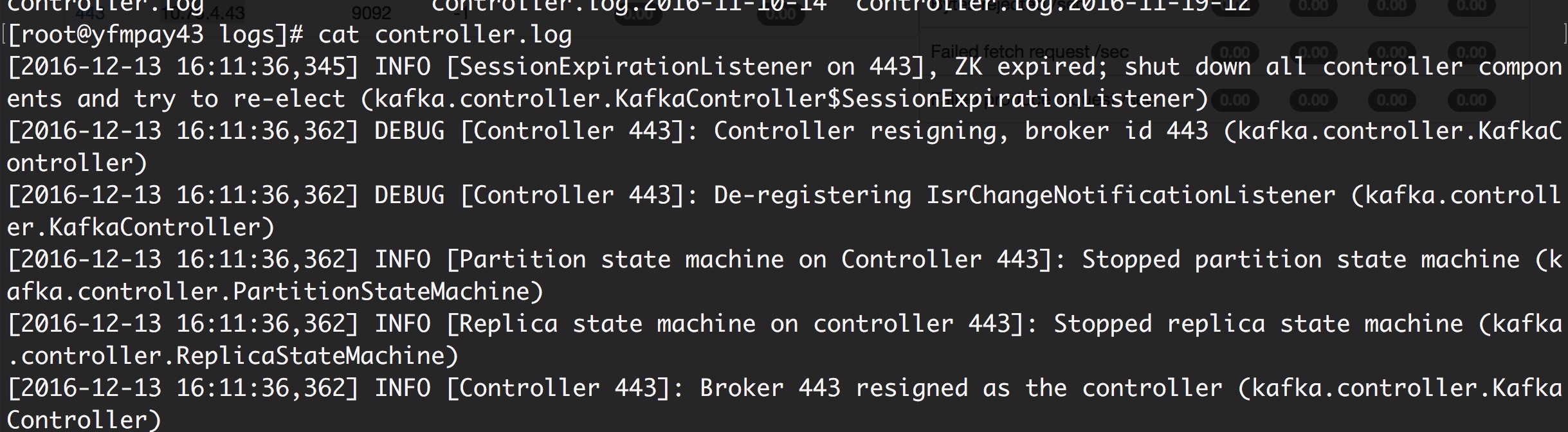

At this point the controller is 10.75.4.43.

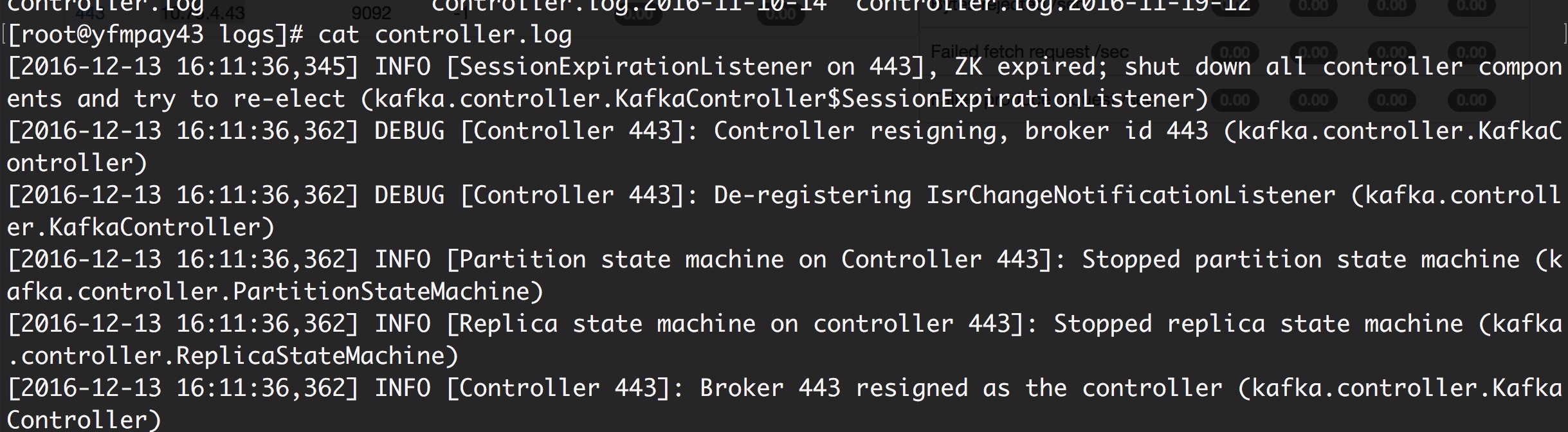

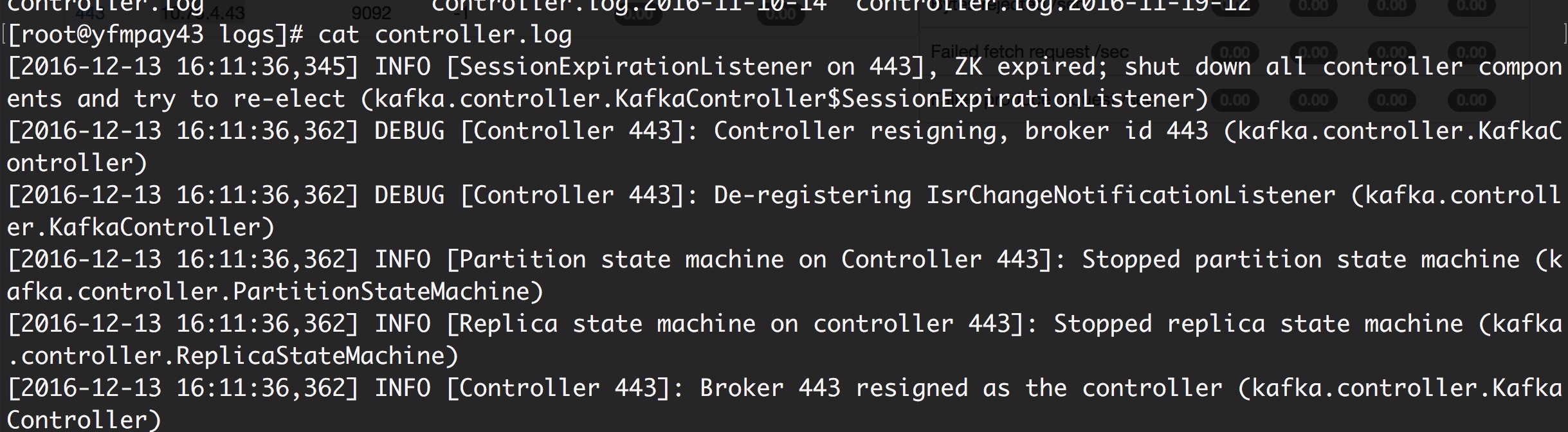

Controller log:

server.log:

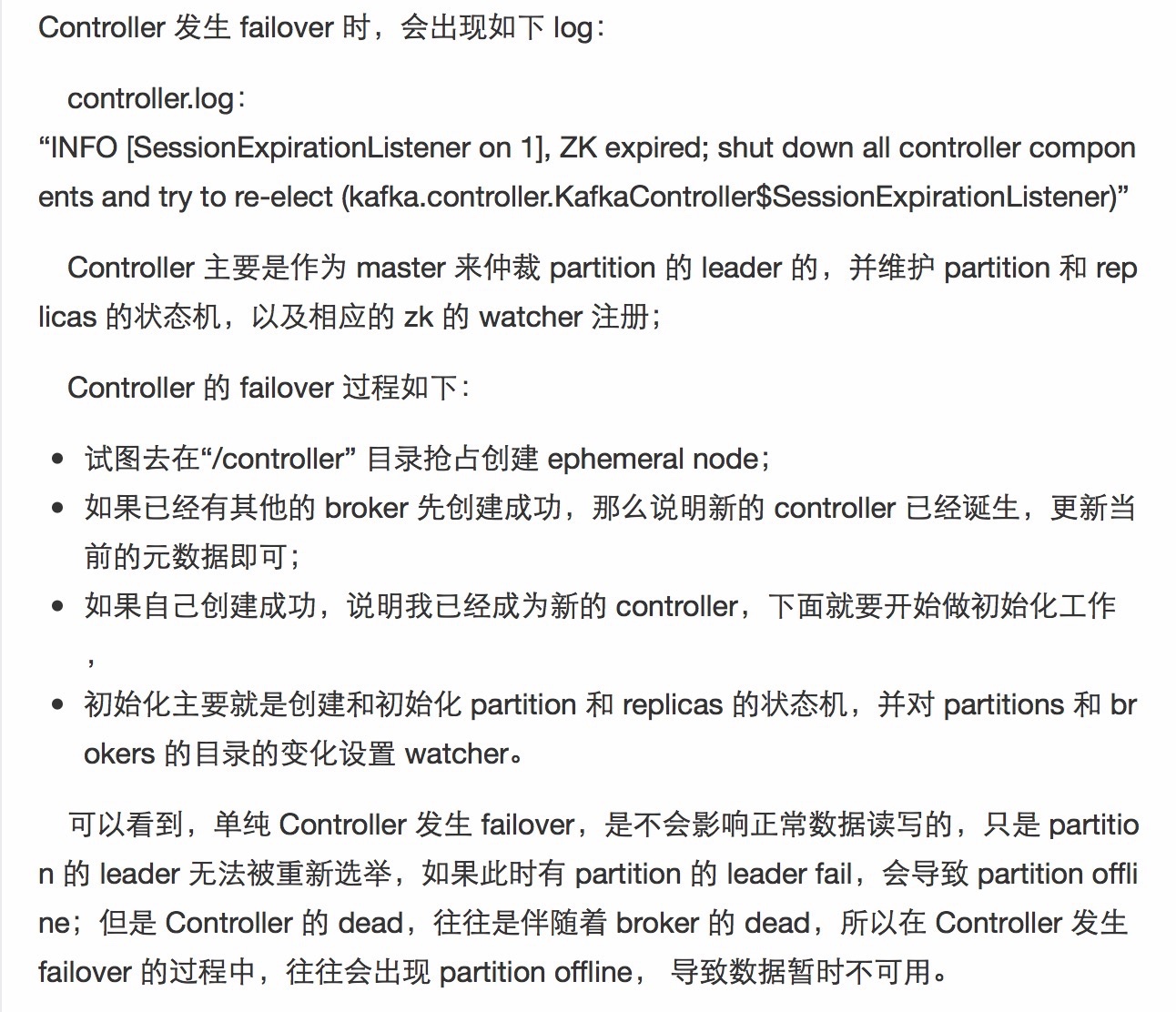

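Observation: the controller most likely failed over, and the leaders of some partitions prevented a new leader from being elected. Restarting fixed this and the cluster recovered.

Article link: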

http://www.tuicool.com/articles/rArMjmM

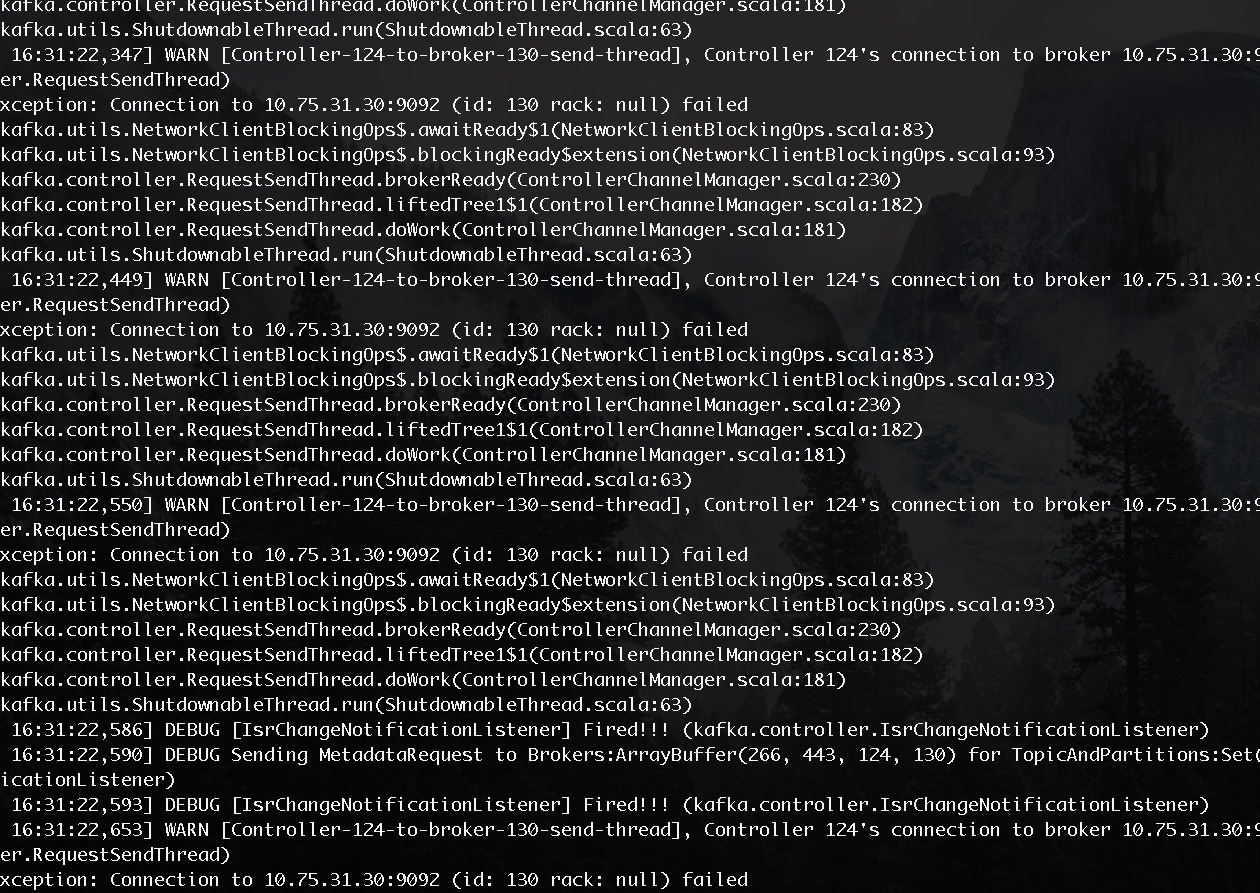



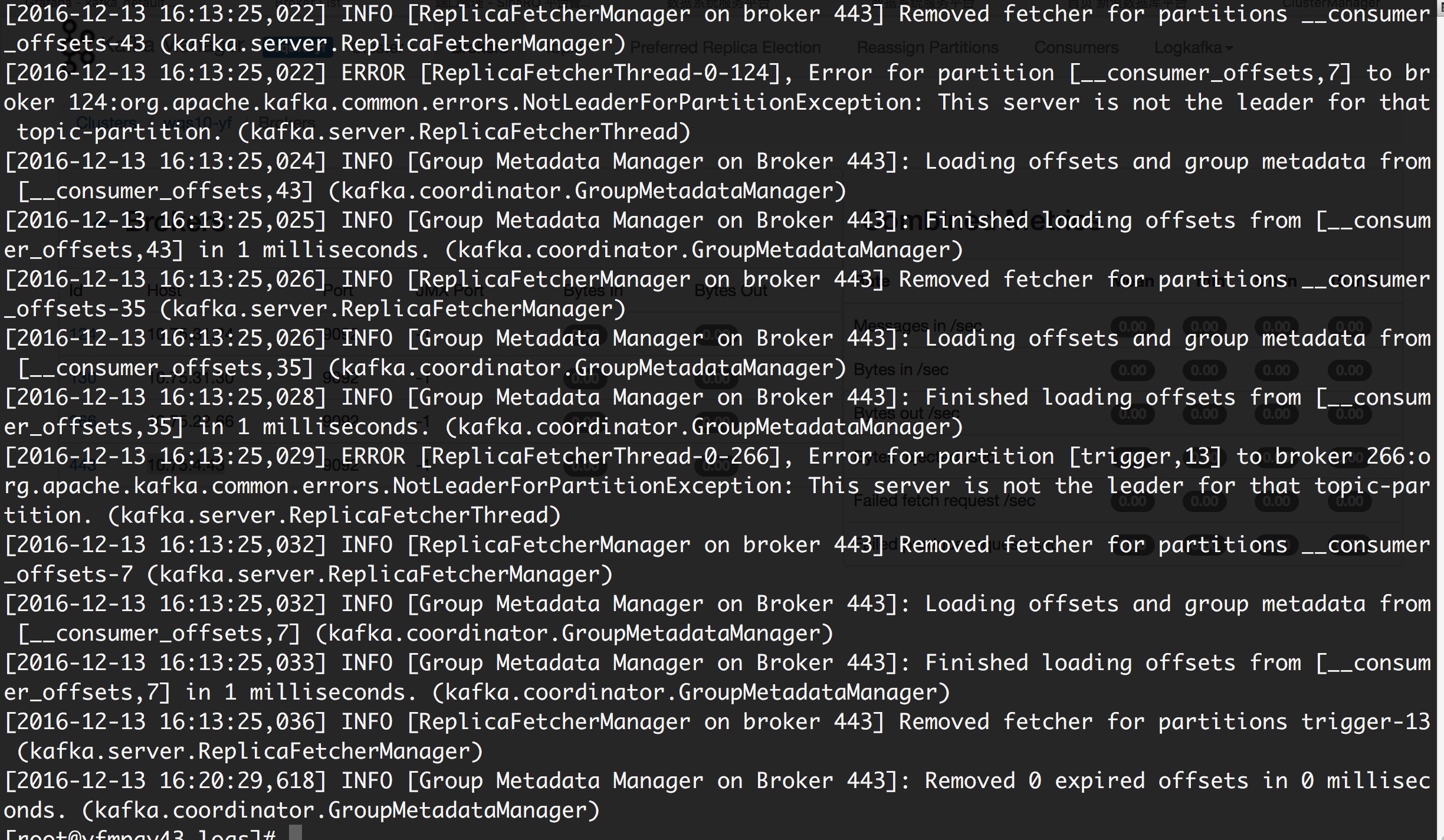

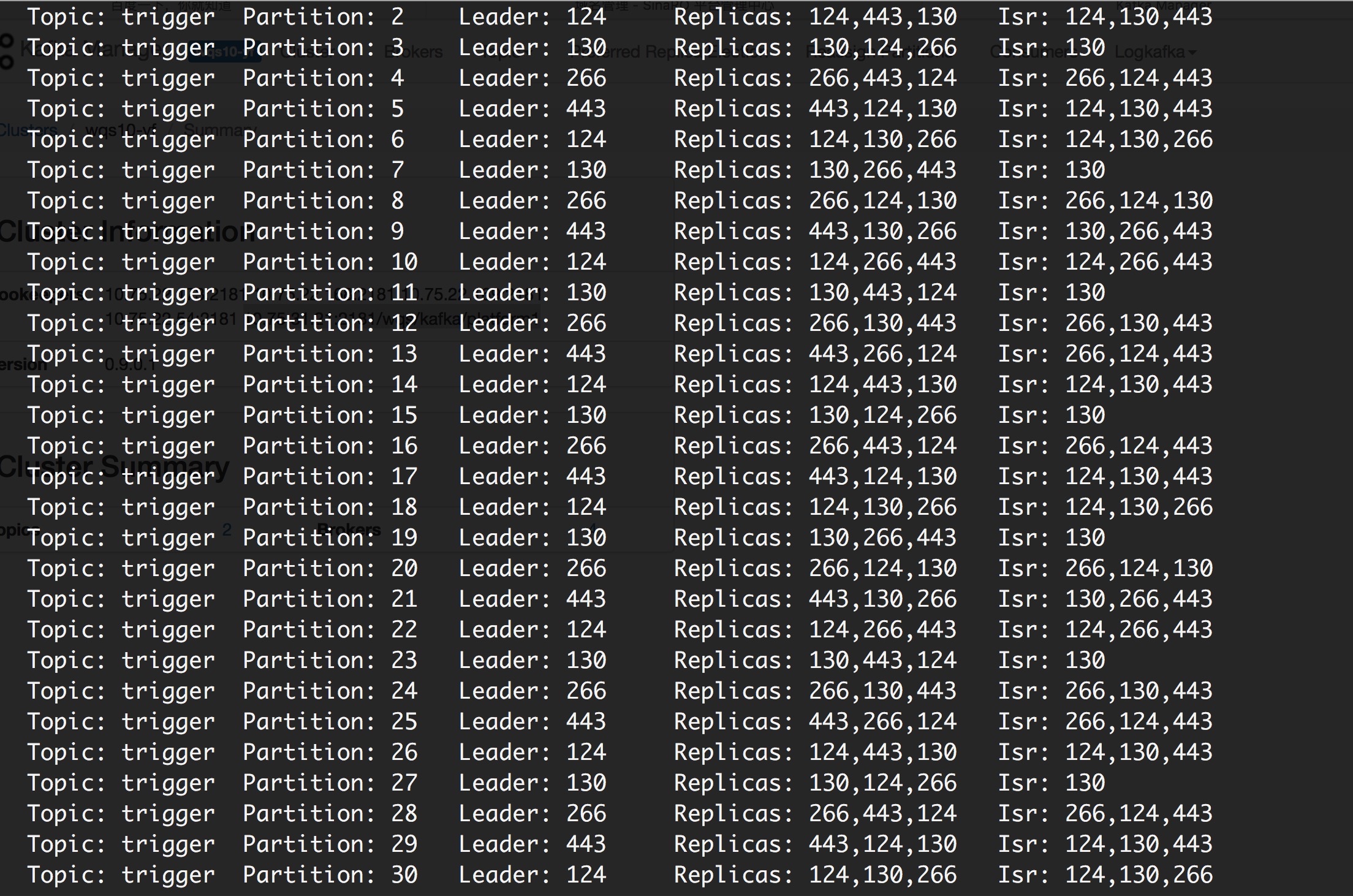

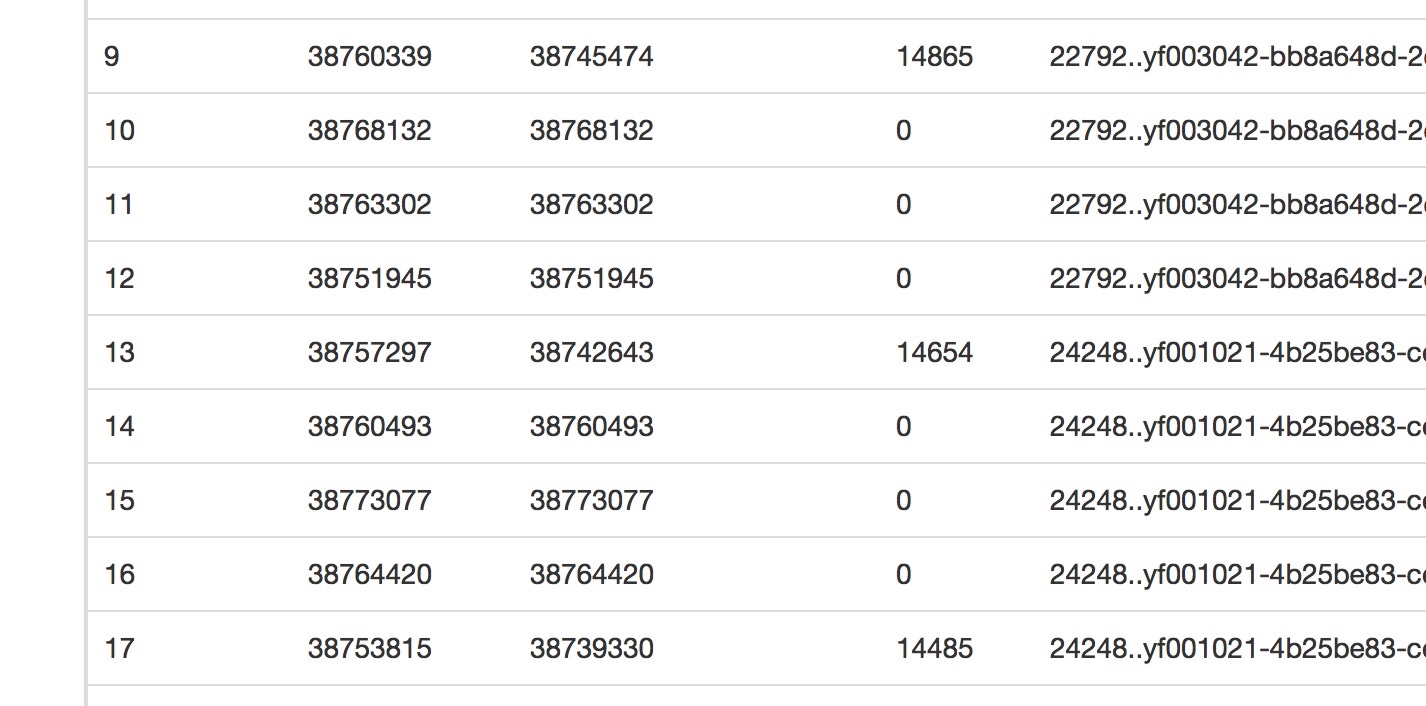

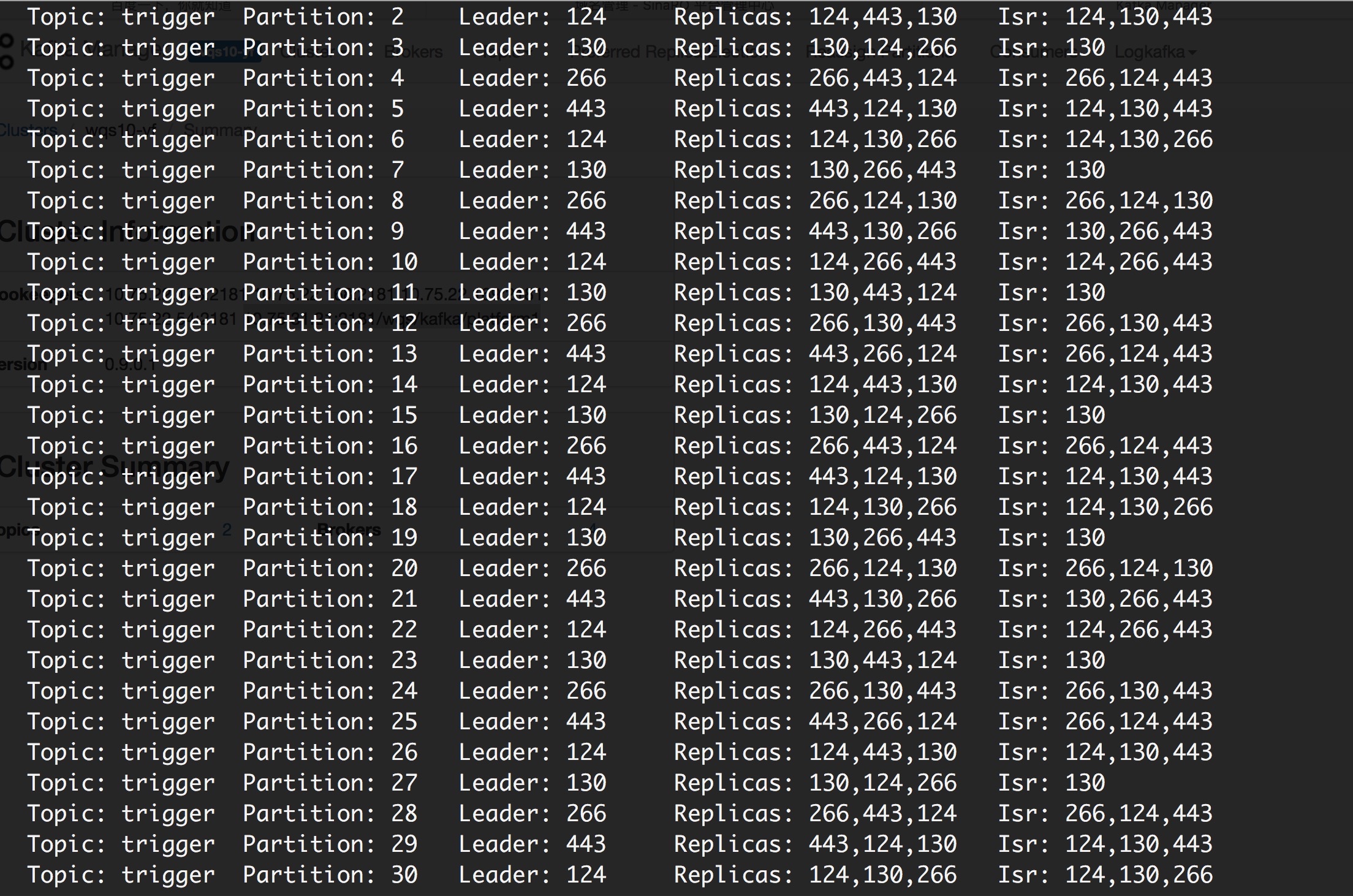

## Case 2

Symptoms:

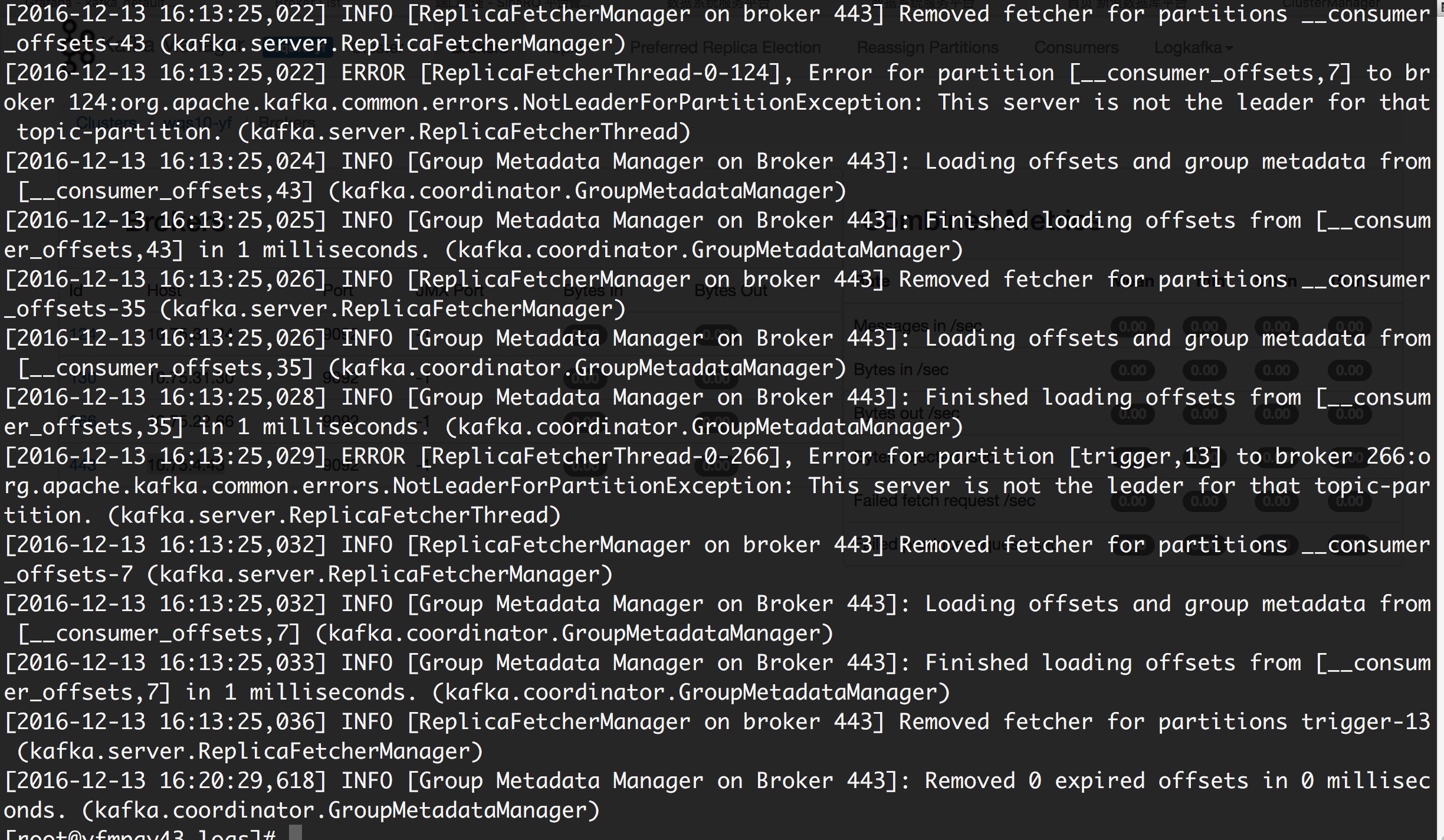

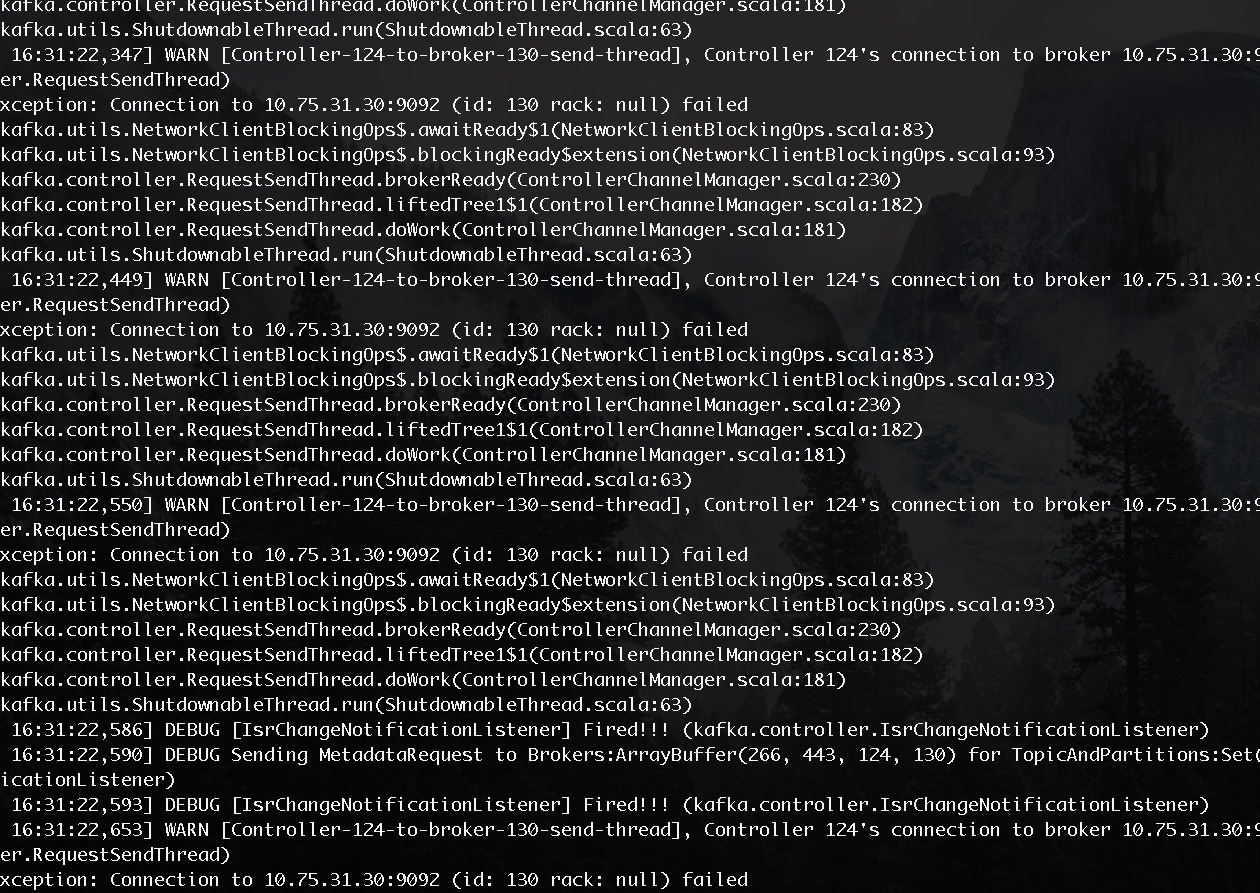

Controller log (the controller at this point is 10.75.31.24):

[2016-12-28 13:21:24,901] DEBUG [IsrChangeNotificationListener] Fired!!! (kafka.controller.IsrChangeNotificationListener)

[2016-12-28 13:21:24,917] DEBUG Sending MetadataRequest to Brokers:ArrayBuffer(266, 124, 443, 130) for TopicAndPartitions:Set([trigger,23], [__consumer_offsets,29], [__consumer_offsets,5], [trigger,31], [__consumer_offsets,33], [__consumer_offsets,37], [trigger,11]) (kafka.controller.IsrChangeNotificationListener)

[2016-12-28 13:21:24,925] INFO [Controller-124-to-broker-124-send-thread], Controller 124 connected to 10.75.31.24:9092 (id: 124 rack: null) for sending state change requests (kafka.controller.RequestSendThread)

[2016-12-28 13:21:24,925] INFO [Controller-124-to-broker-130-send-thread], Controller 124 connected to 10.75.31.30:9092 (id: 130 rack: null) for sending state change requests (kafka.controller.RequestSendThread)

[2016-12-28 13:21:24,926] INFO [Controller-124-to-broker-443-send-thread], Controller 124 connected to 10.75.4.43:9092 (id: 443 rack: null) for sending state change requests (kafka.controller.RequestSendThread)

[2016-12-28 13:21:24,926] INFO [Controller-124-to-broker-266-send-thread], Controller 124 connected to 10.75.22.66:9092 (id: 266 rack: null) for sending state change requests (kafka.controller.RequestSendThread)

[2016-12-28 13:21:24,928] DEBUG [IsrChangeNotificationListener] Fired!!! (kafka.controller.IsrChangeNotificationListener)

[2016-12-28 13:22:24,938] WARN [Controller-124-to-broker-130-send-thread], Controller 124 epoch 6 fails to send request {controller_id=124,controller_epoch=6,partition_states=[{topic=trigger,partition=31,controller_epoch=5,leader=130,leader_epoch=14,isr=[130],zk_version=30,replicas=[130,266,443]},{topic=__consumer_offsets,partition=5,controller_epoch=5,leader=130,leader_epoch=13,isr=[130],zk_version=28,replicas=[130,266,443]},{topic=__consumer_offsets,partition=37,controller_epoch=4,leader=130,leader_epoch=10,isr=[130],zk_version=22,replicas=[130,124,266]},{topic=__consumer_offsets,partition=29,controller_epoch=5,leader=130,leader_epoch=13,isr=[130],zk_version=28,replicas=[130,266,443]},{topic=trigger,partition=11,controller_epoch=5,leader=130,leader_epoch=14,isr=[130],zk_version=30,replicas=[130,443,124]},{topic=trigger,partition=23,controller_epoch=5,leader=130,leader_epoch=16,isr=[130],zk_version=38,replicas=[130,443,124]},{topic=__consumer_offsets,partition=33,controller_epoch=5,leader=130,leader_epoch=15,isr=[130],zk_version=36,replicas=[130,443,124]}],live_brokers=[{id=266,end_points=[{port=9092,host=10.75.22.66,security_protocol_type=0}],rack=null},{id=124,end_points=[{port=9092,host=10.75.31.24,security_protocol_type=0}],rack=null},{id=443,end_points=[{port=9092,host=10.75.4.43,security_protocol_type=0}],rack=null},{id=130,end_points=[{port=9092,host=10.75.31.30,security_protocol_type=0}],rack=null}]} to broker 10.75.31.30:9092 (id: 130 rack: null). Reconnecting to broker. (kafka.controller.RequestSendThread)

java.io.IOException: Connection to 130 was disconnected before the response was read

at kafka.utils.NetworkClientBlockingOps$$anonfun$blockingSendAndReceive$extension$1$$anonfun$apply$1.apply(NetworkClientBlockingOps.scala:115)

at kafka.utils.NetworkClientBlockingOps$$anonfun$blockingSendAndReceive$extension$1$$anonfun$apply$1.apply(NetworkClientBlockingOps.scala:112)

at scala.Option.foreach(Option.scala:257)

at kafka.utils.NetworkClientBlockingOps$$anonfun$blockingSendAndReceive$extension$1.apply(NetworkClientBlockingOps.scala:112)

at kafka.utils.NetworkClientBlockingOps$$anonfun$blockingSendAndReceive$extension$1.apply(NetworkClientBlockingOps.scala:108)

at kafka.utils.NetworkClientBlockingOps$.recursivePoll$1(NetworkClientBlockingOps.scala:137)

at kafka.utils.NetworkClientBlockingOps$.kafka$utils$NetworkClientBlockingOps$$pollContinuously$extension(NetworkClientBlockingOps.scala:143)

at kafka.utils.NetworkClientBlockingOps$.blockingSendAndReceive$extension(NetworkClientBlockingOps.scala:108)

at kafka.controller.RequestSendThread.liftedTree1$1(ControllerChannelManager.scala:190)

at kafka.controller.RequestSendThread.doWork(ControllerChannelManager.scala:181)

at kafka.utils.ShutdownableThread.run(ShutdownableThread.scala:63)

[2016-12-28 13:22:25,061] INFO [Controller-124-to-broker-130-send-thread], Controller 124 connected to 10.75.31.30:9092 (id: 130 rack: null) for sending state change requests (kafka.controller.RequestSendThread)

The log shows a problem on the connection between the controller and node 130.
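The WARN entry in the controller log above is hard to read by eye, so it can help to pull the `partition_states` entries out with a small script. The following is a minimal sketch, assuming the log line has the same field layout as the one shown here for 0.10.1.0; the function name `parse_partition_states` and the regular expression are illustrative and not part of Kafka.

```python
import re

# One partition_states entry, in the field order printed in the WARN line above.
# Assumption: other Kafka versions may print different fields or a different order.
PARTITION_STATE = re.compile(
    r"\{topic=(?P<topic>[^,]+),partition=(?P<partition>\d+),"
    r"controller_epoch=\d+,leader=(?P<leader>\d+),leader_epoch=\d+,"
    r"isr=\[(?P<isr>[\d,]*)\],zk_version=\d+,"
    r"replicas=\[(?P<replicas>[\d,]*)\]\}"
)


def parse_partition_states(log_line):
    """Return one dict per partition_states entry found in the log line."""
    states = []
    for m in PARTITION_STATE.finditer(log_line):
        states.append({
            "topic": m.group("topic"),
            "partition": int(m.group("partition")),
            "leader": int(m.group("leader")),
            "isr": [int(x) for x in m.group("isr").split(",") if x],
            "replicas": [int(x) for x in m.group("replicas").split(",") if x],
        })
    return states


# Two entries copied from the WARN line above, used as sample input.
sample = (
    "partition_states=[{topic=trigger,partition=31,controller_epoch=5,leader=130,"
    "leader_epoch=14,isr=[130],zk_version=30,replicas=[130,266,443]},"
    "{topic=__consumer_offsets,partition=37,controller_epoch=4,leader=130,"
    "leader_epoch=10,isr=[130],zk_version=22,replicas=[130,124,266]}]"
)

for s in parse_partition_states(sample):
    # An ISR smaller than the replica list means some followers have fallen out of sync.
    status = "ISR shrunk" if len(s["isr"]) < len(s["replicas"]) else "ISR full"
    print(s["topic"], s["partition"], "leader", s["leader"], "isr", s["isr"], status)
```

In the log above, all seven partitions in the failed request have leader=130 and isr=[130], which fits with node 130 being the broker at the center of the problem.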

Note:

[2016-12-18 09:53:42,235] DEBUG [IsrChangeNotificationListener] Fired!!! (kafka.controller.IsrChangeNotificationListener)

IsrChangeNotificationListener: listens for changes to a partition's leader/ISR state. When it fires, it updates the contents of the partitionLeadershipInfo collection and sends a metadata update request to all brokers.
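The two steps described for IsrChangeNotificationListener can be pictured with a toy model. This is only an illustrative sketch in Python, not Kafka's Scala implementation: the class `ToyIsrChangeListener`, its `fired` method, and the `sent` list are hypothetical names, and the "request" is recorded in memory instead of being sent over the network.

```python
class ToyIsrChangeListener:
    """Illustrative model of the listener's two steps; not Kafka's code."""

    def __init__(self, live_brokers):
        self.live_brokers = list(live_brokers)
        # (topic, partition) -> {"leader": broker_id, "isr": [broker_ids]}
        self.partition_leadership_info = {}
        # Stand-in for network I/O: one (broker_id, partitions) tuple per request.
        self.sent = []

    def fired(self, changed):
        """changed maps (topic, partition) to its latest leader/ISR state."""
        # Step 1: refresh the cached leadership info for the affected partitions.
        self.partition_leadership_info.update(changed)
        # Step 2: tell every live broker to update its metadata for them.
        partitions = sorted(changed)
        for broker_id in self.live_brokers:
            self.sent.append((broker_id, partitions))


# Broker ids and the partition are taken from the controller log in this case.
listener = ToyIsrChangeListener(live_brokers=[266, 124, 443, 130])
listener.fired({("trigger", 23): {"leader": 130, "isr": [130]}})
print(listener.partition_leadership_info)
print(listener.sent)
```

The model matches the pattern in the controller log, where each "Fired!!!" entry is followed by a "Sending MetadataRequest to Brokers" entry naming all four brokers.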

See also the corresponding bug:

Kafka cannot remove the problematic leader of the affected partition.

Action:

Restarting node 130 resolved the problem.
