ubuntu1404_64单机安装Hadoop2.7.3

2016-11-28 21:02

375 查看

JDK、Hadoop、Hive官网下载,Hive默认(嵌入式derby 模式)

http://hadoop.apache.org/releases.html

http://www.apache.org/dyn/closer.cgi/hive/

http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

参考文档

http://www.powerxing.com/install-hadoop/

创建用户和组,设置密码

切换hadoop用户后,配置SSH免密登录

验证

配置Java环境

Hadoop配置

core-site.xml:包括HDFS、MapReduce的I/O以及namenode节点的url(协议、主机名、端口)等核心配置,datanode在namenode上注册后,通过此url跟client交互

hdfs-site.xml: HDFS守护进程配置,包括namenode,secondary namenode,datanode

mapred-site.xml:MapReduce守护进程配置,包括jobtracker和tasktrackers

全局资源管理配置

http://www.cnblogs.com/gw811/p/4077318.html

配置与hadoop运行环境相关的变量

nameNode 格式化并启动,如果修改了hostname,/etc/hosts文件也需要添加本地解析,否则初始化会报错namenode unknown

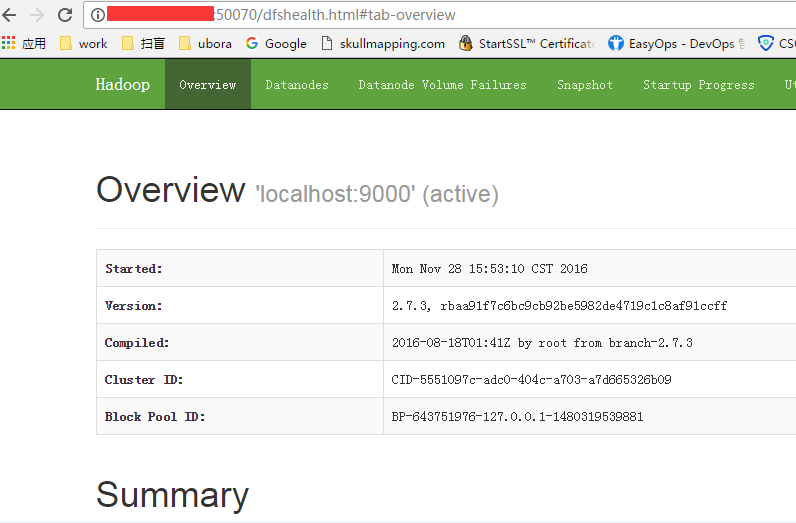

成功启动后,可访问web界面查看nameNode和datanode信息以及HDFS中的文件。

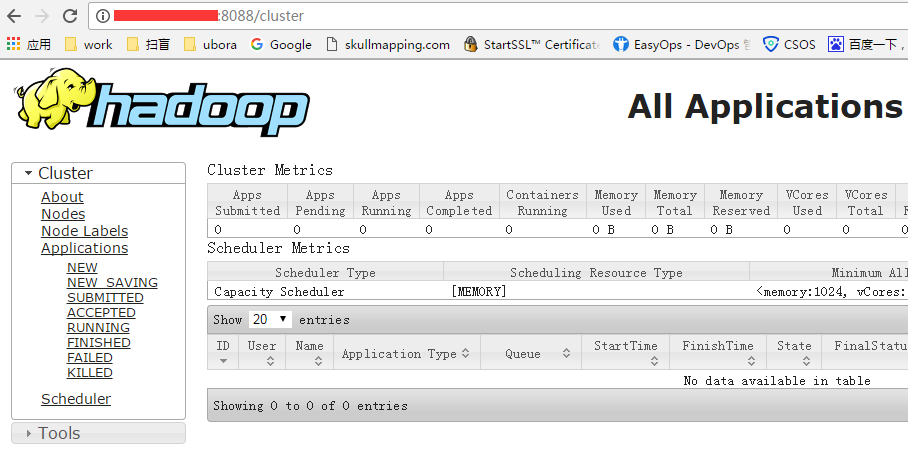

伪分布式启动 YARN为可选操作,启动后可以通过web界面查看任务运行情况

监听端口

解压Hive安装包,配置运行环境变量

HDFS上创建目录并设置权限

初始化数据库

测试

http://hadoop.apache.org/releases.html

http://www.apache.org/dyn/closer.cgi/hive/

http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

参考文档

http://www.powerxing.com/install-hadoop/

创建用户和组,设置密码

root@hive:~# useradd -m hadoop -s /bin/bash root@hive:~# passwd hadoop Enter new UNIX password: Retype new UNIX password: passwd: password updated successfully

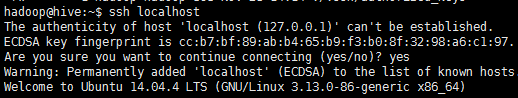

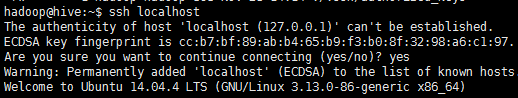

切换hadoop用户后,配置SSH免密登录

root@hive:~# su hadoop hadoop@hive:/root$ cd hadoop@hive:~$ ssh-keygen -t rsa -P '' #密钥默认存放在/home/hadoop/.ssh/目录下 hadoop@hive:~$ cat ./.ssh/id_rsa.pub >> ~/.ssh/authorized_keys hadoop@hive:~$ chmod 0600 !$ chmod 0600 ~/.ssh/authorized_keys

验证

配置Java环境

hadoop@hive:~# tar xvf jdk-8u111-linux-x64.tar.gz -C /usr/share/java/ hadoop@hive:~# vim .bash_profile hadoop@hive:~# cat !$ cat .bash_profile export JAVA_HOME=/usr/share/java/jdk1.8.0_111/ export PATH=$PATH:$JAVA_HOME/bin hadoop@hive:~# source !$ source .bash_profile hadoop@hive:~# java -version java version "1.8.0_111" Java(TM) SE Runtime Environment (build 1.8.0_111-b14) Java HotSpot(TM) 64-Bit Server VM (build 25.111-b14, mixed mode)

Hadoop配置

core-site.xml:包括HDFS、MapReduce的I/O以及namenode节点的url(协议、主机名、端口)等核心配置,datanode在namenode上注册后,通过此url跟client交互

hadoop@hive:~$ vim hadoop-2.7.3/etc/hadoop/core-site.xml <configuration> <property> <name>fs.defaultFS</name> <value>hdfs://localhost:9000</value> </property> </configuration>

hdfs-site.xml: HDFS守护进程配置,包括namenode,secondary namenode,datanode

hadoop@hive:~$ vim hadoop-2.7.3/etc/hadoop/hdfs-site.xml <configuration> <property> <name>dfs.replication</name> <value>1</value> </property> </configuration>

mapred-site.xml:MapReduce守护进程配置,包括jobtracker和tasktrackers

hadoop@hive:~$ vim hadoop-2.7.3/etc/hadoop/mapred-site.xml <configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> </configuration>

全局资源管理配置

http://www.cnblogs.com/gw811/p/4077318.html

hadoop@hive:~$ vim hadoop-2.7.3/etc/hadoop/yarn-site.xml <configuration> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_suffle</value> </property> </configuration>

配置与hadoop运行环境相关的变量

hadoop@hive:~$ vim hadoop-2.7.3/etc/hadoop/hadoop-env.sh export JAVA_HOME=/usr/share/java/jdk1.8.0_111/

nameNode 格式化并启动,如果修改了hostname,/etc/hosts文件也需要添加本地解析,否则初始化会报错namenode unknown

hadoop@hive:~$ hadoop-2.7.3/bin/hdfs namenode -format hadoop@hive:~$ hadoop-2.7.3/sbin/start-dfs.sh Starting namenodes on [localhost] localhost: starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-hive.out localhost: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-hive.out Starting secondary namenodes [0.0.0.0] 0.0.0.0: starting secondarynamenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-hive.out

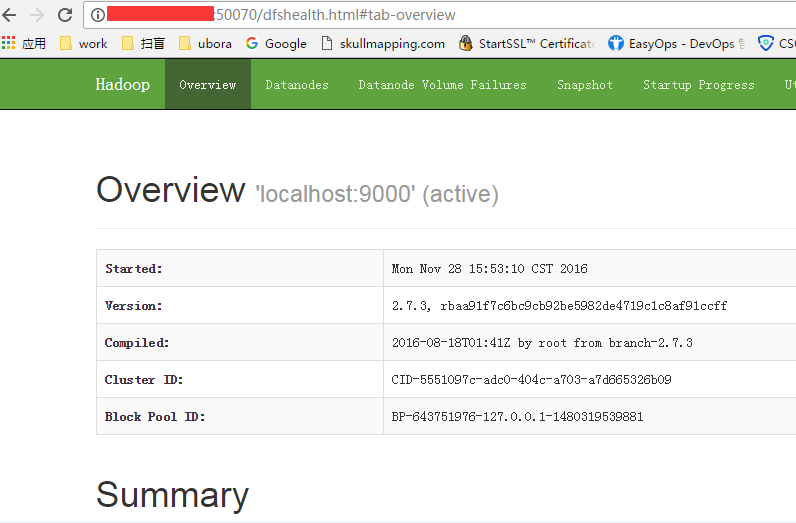

成功启动后,可访问web界面查看nameNode和datanode信息以及HDFS中的文件。

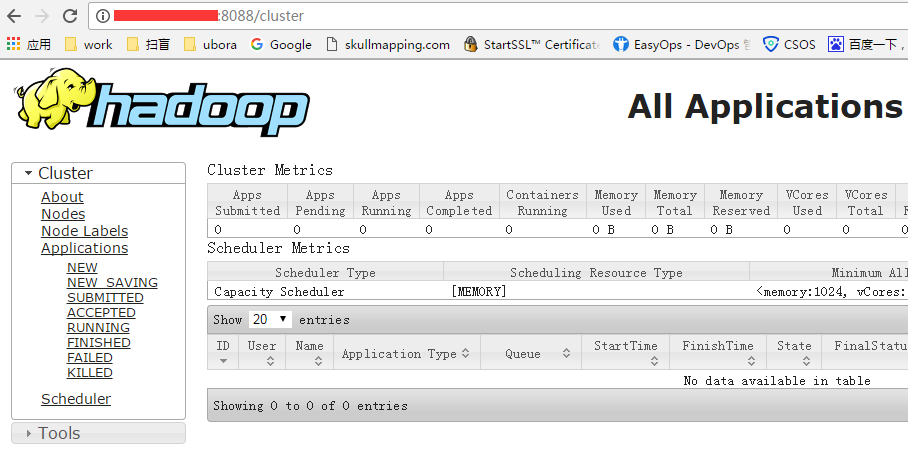

伪分布式启动 YARN为可选操作,启动后可以通过web界面查看任务运行情况

hadoop@hive:~$ hadoop-2.7.3/sbin/start-yarn.sh starting yarn daemons starting resourcemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-hive.out localhost: starting nodemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-hive.out root@hive:/home/hadoop# jps 5366 ResourceManager 5014 DataNode 4904 NameNode 7354 Jps 5214 SecondaryNameNode 7055 RunJar

监听端口

| listen | conf | description |

|---|---|---|

| 9000 | core-site.xml | NameNode RPC交互 |

| 9001 | mapred-site.xml | JobTracker交互 |

| 50030 | mapred-site.xml | Tracker Web管理 |

| 50060 | mapred-site.xml | TaskTracker HTTP |

| 50070 | hdfs-site.xml | NameNode Web管理 |

| 50010 | hdfs-site.xml | DataNode控制端口 |

| 50020 | hdfs-site.xml | DataNode RPC交互 |

| 50075 | hdfs-site.xml | DataNode HTTP |

| 50090 | hdfs-site.xml | Secondary NameNode Web管理 |

hadoop@hive:~$ tar xvf apache-hive-2.1.0-bin.tar.gz hadoop@hive:~$ tail -3 .bash_profile export HADDOP_HOME=/home/hadoop/hadoop-2.7.3/ export HIVE_HOME=/home/hadoop/apache-hive-2.1.0-bin/ export PATH=$PATH:$HADDOP_HOME/bin:$HADDOP_HOME/bin:$HIVE_HOME/bin hadoop@hive:~$ source !$ source .bash_profile

HDFS上创建目录并设置权限

hadoop@hive:~$ hadoop fs -mkdir -p /tmp hadoop@hive:~$ hadoop fs -mkdir -p /user/hive/warehouse hadoop@hive:~$ hadoop fs -chmod g+w /tmp hadoop@hive:~$ hadoop fs -chmod g+w /user/hive/warehouse

初始化数据库

hadoop@hive:~$ schematool -dbType derby -initSchema SLF4J: Class path contains multiple SLF4J bindings. .......... Starting metastore schema initialization to 2.1.0 Initialization script hive-schema-2.1.0.derby.sql Initialization script completed schemaTool completed

测试

hive> show databases; OK default Time taken: 0.014 seconds, Fetched: 1 row(s)

hive> CREATE TABLE ss7_traffic (DATA_DATE string, > CdPA_SSN int, CdPA_ID int, > CgPA_SSN int, CgPA_ID int, > otid string, dtid string, > OPCODE int, imsi string, > msisdn string, MSRN string, > MSCN string, VLRN string) > ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde' > WITH SERDEPROPERTIES ( "separatorChar" = ',',"quoteChar" = '"', "escapeChar" = '"' ) > STORED AS TEXTFILE; OK Time taken: 2.747 seconds hive> LOAD DATA LOCAL INPATH './data.csv' OVERWRITE INTO TABLE ss7_traffic; Loading data to table default.ss7_traffic OK Time taken: 2.552 seconds

hive> CREATE TABLE ss7_optype ( OPTYPE string, OPCODE int ) > ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde' > WITH SERDEPROPERTIES ( > "separatorChar" = ',',"quoteChar" = '"', "escapeChar" = '"' ) > STORED AS TEXTFILE; OK Time taken: 0.142 seconds hive> LOAD DATA LOCAL INPATH './OPTYPE.csv' OVERWRITE INTO TABLE ss7_optype; Loading data to table default.ss7_optype OK Time taken: 0.512 seconds

hive> CREATE TABLE ss7_gtlist ( GTN string, WB string ) > ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde' > WITH SERDEPROPERTIES ( "separatorChar" = ',', "quoteChar" = '"', > "escapeChar" = '"' ) STORED AS TEXTFILE; OK Time taken: 0.155 seconds

hive> SELECT t1.* FROM ss7_traffic t1 JOIN ss7_optype t2 ON t1.opcode = t2.opcode > AND t2.optype = 'intraPlmn' WHERE t1.CgPA_id NOT IN > ( SELECT gtn FROM ss7_gtlist WHERE wb = 'w');

相关文章推荐

- Hadoop安装教程_单机/伪分布式配置_Hadoop2.7.3/Ubuntu16.04

- Hadoop安装教程_单机/伪分布式配置_Hadoop2.6.0/Ubuntu14.04

- Hadoop安装全教程 Ubuntu16.04+Java1.8.0+Hadoop2.7.3

- Hadoop安装教程_单机/伪分布式配置_Hadoop2.6.0/Ubuntu14.04

- Ubuntu16安装Hadoop2.7.3完全分布式

- Hadoop安装教程_单机/伪分布式配置_Hadoop2.6.0/Ubuntu14.04

- ubuntu安装hadoop2.7.3集群

- ubuntu 下 Hadoop1.0.4 安装过程 (单机伪分布模式)

- Ubuntu 14.04编译安装hadoop 2.7.3

- Ubuntu 14.04编译安装hadoop 2.7.3

- 在Ubuntu上安装Hadoop(单机模式)

- Ubuntu16.04下Hadoop 2.7.3的安装与配置

- 【Hadoop入门学习系列之一】Ubuntu下安装Hadoop(单机模式+伪分布模式)

- Hadoop安装教程_单机配置_Hadoop1.2.1/Ubuntu16.04

- Hadoop安装教程_单机/伪分布式配置_Hadoop2.6.0/Ubuntu14.04

- Hadoop安装教程_单机/伪分布式配置_Hadoop2.6.0/Ubuntu14.04

- Hadoop安装教程_单机/伪分布式配置_Hadoop2.6.0/Ubuntu14.04(转)

- Hadoop单机模式安装入门(Ubuntu系统)

- Hadoop集群安装配置教程_Hadoop2.7.3Ubuntu/CentOS

- Ubuntu16.10下安装Hadoophadoop-2.8.0(单机模式)