READING NOTE: LCNN: Lookup-based Convolutional Neural Network

2016-11-24 08:20

TITLE: LCNN: Lookup-based Convolutional Neural Network

AUTHOR: Hessam Bagherinezhad, Mohammad Rastegari, Ali Farhadi

ASSOCIATION: University of Washington, Allen Institute for AI

FROM: arXiv:1611.06473

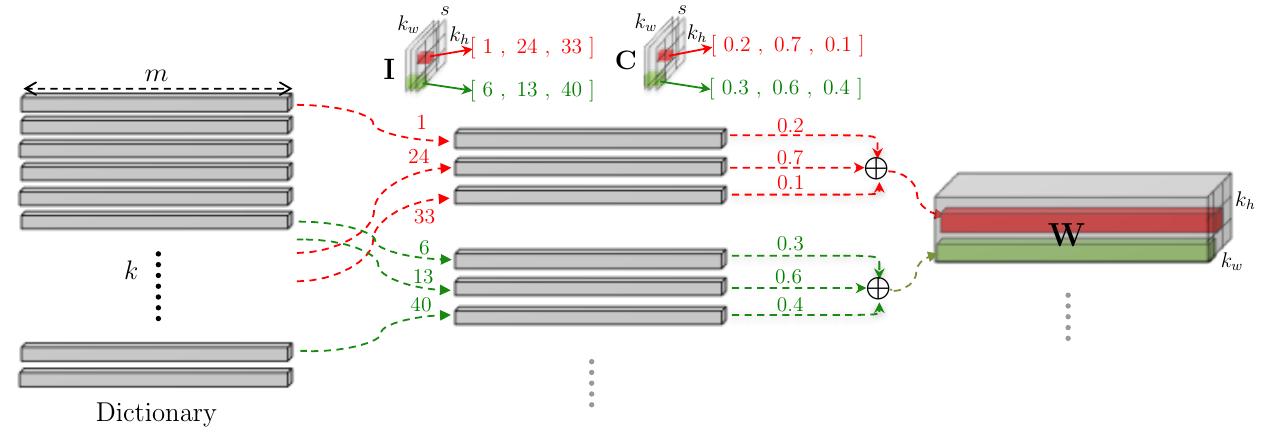

where k is the size of the dictionary D , m is the size of input channel. The weight tensor can be constructed by the linear combination of S words in dictionary D as follows:

W [:,r,c] =∑ t=1 S C [t,r,c] ⋅D [I [t,r,c] ,:] ∀r,c

where S is the size of number of components in the linear combinations. Then the convolution can be computed fast using a shared dictionary. we can convolve the input with all of the dictionary vectors, and then compute the output according to I and C . Since the dictionary D is shared among all weight filters in a layer, we can precompute the convolution between the input tensor X and all the dictionary vectors. Given S which is defined as:

S [i,:,:] =X∗D [i,:] ∀1≤i≤k

the convolution operation can be computed as

X∗W=S∗P

where P can be expressed by I and C :

P j,r,c ={C t,r,c 0 ∃t:I t,r,c =jotherwise

The idea can be illustrated in the following figure:

thus the the dictionary and the lookup parameters can be trained jointly.

Few-shot learning. The shared dictionary in LCNN allows a neural network to learn from very few training examples on novel categories

LCNN needs fewer iteration to train.

CONTRIBUTIONS

LCNN, a lookup-based convolutional neural network, is introduced. It encodes convolutions with a few lookups into a dictionary that is trained to cover the space of weights in CNNs.

METHOD

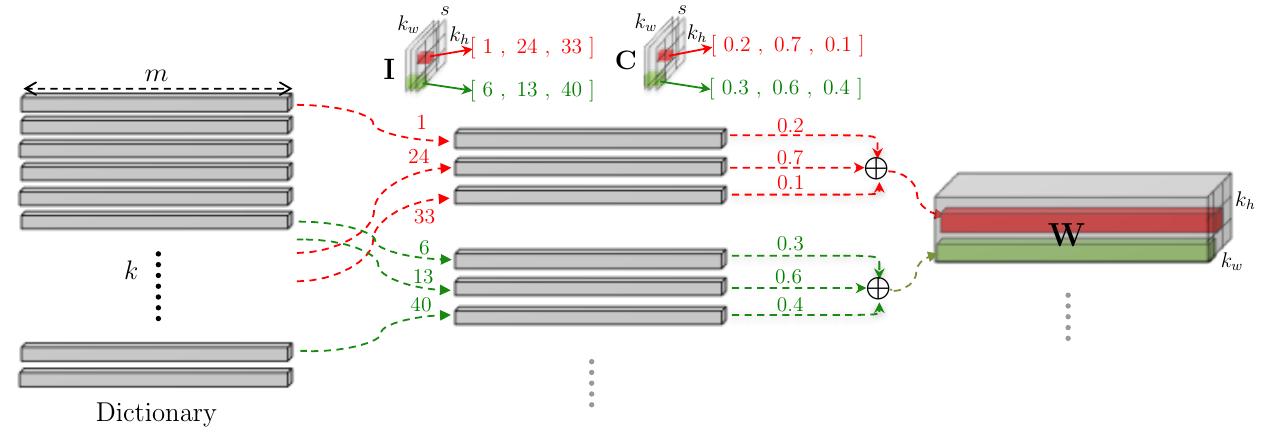

The main idea of the work is decoding the weights of the convolutional layer using a dictionary D and two tensors, I and C , like the following figure illustrated.

where k is the size of the dictionary D , m is the size of input channel. The weight tensor can be constructed by the linear combination of S words in dictionary D as follows:

W [:,r,c] =∑ t=1 S C [t,r,c] ⋅D [I [t,r,c] ,:] ∀r,c

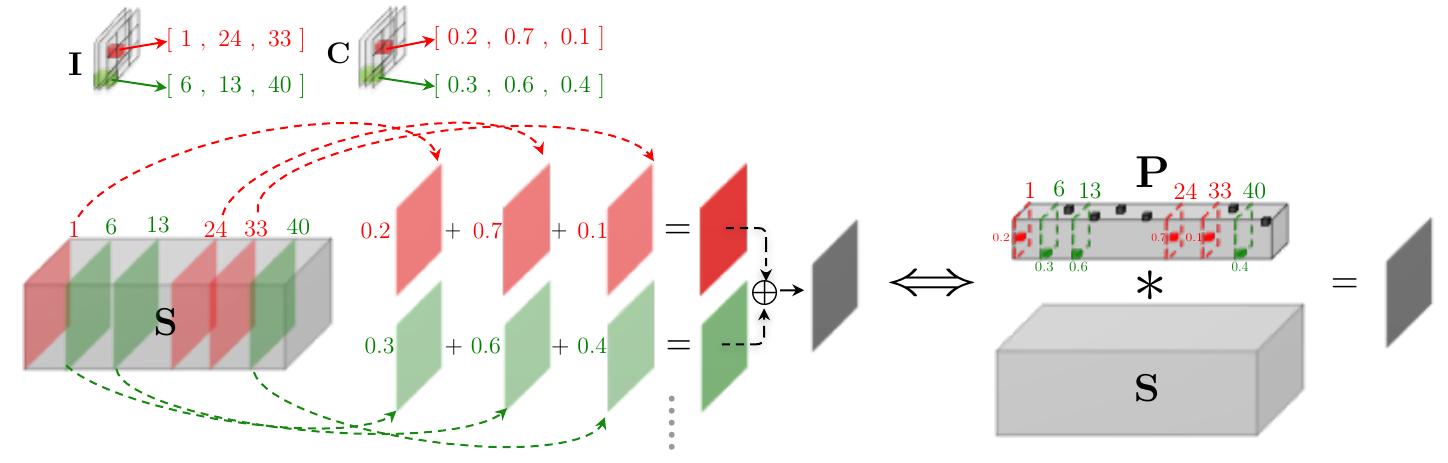

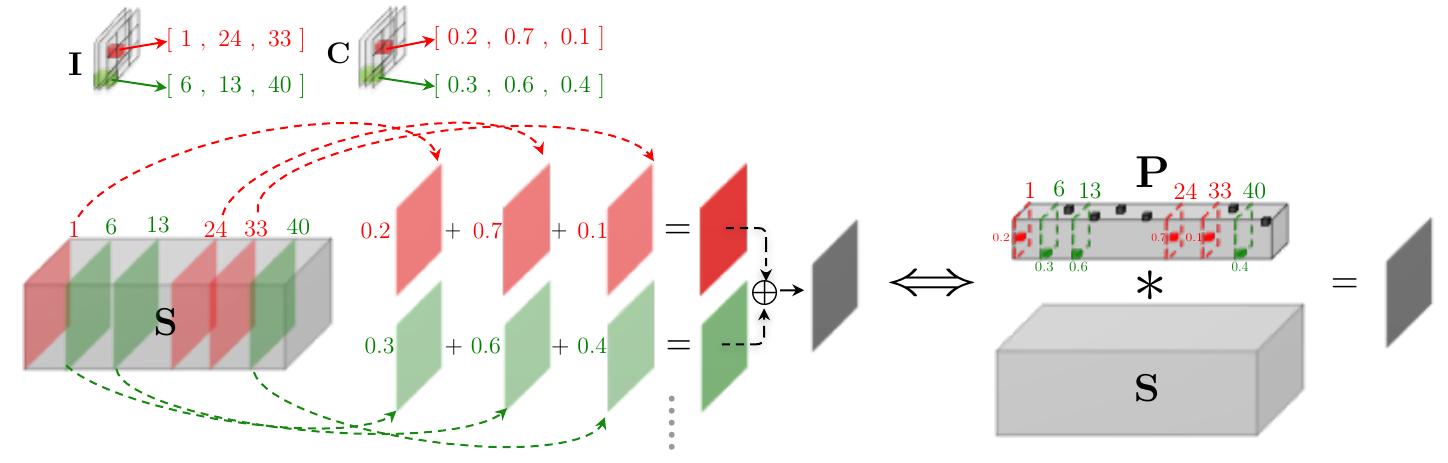

where S is the size of number of components in the linear combinations. Then the convolution can be computed fast using a shared dictionary. we can convolve the input with all of the dictionary vectors, and then compute the output according to I and C . Since the dictionary D is shared among all weight filters in a layer, we can precompute the convolution between the input tensor X and all the dictionary vectors. Given S which is defined as:

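$$S_{[i,:,:]} = X * D_{[i,:]} \quad \forall\, 1 \le i \le k$$

the convolution operation can be computed as

$$X * W = S * P$$

where P can be expressed by I and C:

$$P_{j,r,c} = \begin{cases} C_{t,r,c} & \exists t : I_{t,r,c} = j \\ 0 & \text{otherwise} \end{cases}$$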

The idea can be illustrated in the following figure:

Thus the dictionary and the lookup parameters can be trained jointly.
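The identity X∗W = S∗P can be checked numerically for a single filter. The following is a minimal NumPy sketch, not the authors' implementation: the tensor sizes, the helper name `correlate_valid`, and the use of unpadded cross-correlation are all assumptions made for illustration. It builds W from D, I and C, builds the sparse tensor P, and compares the direct convolution with the lookup-based one.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumptions, not values from the paper).
m, k, s = 8, 5, 2      # input channels, dictionary size, components per position
kw, kh = 3, 3          # filter width and height
w, h = 6, 6            # input width and height

X = rng.standard_normal((m, w, h))       # input tensor
D = rng.standard_normal((k, m))          # dictionary: one m-dimensional word per row
C = rng.standard_normal((s, kw, kh))     # coefficients
# Lookup indices, distinct along t at every (r, c) so that P is well defined.
I = np.stack([rng.permutation(k)[:s] for _ in range(kw * kh)], axis=1)
I = I.reshape(s, kw, kh)

# W[:, r, c] = sum_t C[t, r, c] * D[I[t, r, c], :]
W = np.einsum("trc,trcm->mrc", C, D[I])

# P[j, r, c] = C[t, r, c] if I[t, r, c] == j for some t, else 0
P = np.zeros((k, kw, kh))
for t in range(s):
    for r in range(kw):
        for c in range(kh):
            P[I[t, r, c], r, c] = C[t, r, c]


def correlate_valid(inp, filt):
    """Unpadded multi-channel cross-correlation producing one output map."""
    _, fw, fh = filt.shape
    out = np.empty((inp.shape[1] - fw + 1, inp.shape[2] - fh + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(inp[:, i:i + fw, j:j + fh] * filt)
    return out


# S[i, :, :] = X * D[i, :], i.e. a 1x1 convolution with each dictionary word.
S = np.einsum("km,mxy->kxy", D, X)

direct = correlate_valid(X, W)   # X * W
lookup = correlate_valid(S, P)   # S * P
print(np.allclose(direct, lookup))
```

Note that S depends only on X and D, so in a layer with many filters it would be computed once and reused, while each filter contributes only its own sparse P.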

ADVANTAGES

It speeds up inference.

Few-shot learning. The shared dictionary in LCNN allows a neural network to learn from very few training examples on novel categories.

LCNN needs fewer iterations to train.
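To get a rough feel for where the inference speed-up listed above comes from, one can count multiplications under the formulation in the METHOD section. This is a back-of-the-envelope sketch, not a measurement from the paper: the number of filters n, the layer sizes, and the assumption that the output map has the same w × h size as the input are all hypothetical, and real speed-ups depend on how efficiently the sparse lookups are implemented.

```python
def conv_multiplies(n, m, kw, kh, w, h):
    """Multiplications for a dense layer with n filters of size m x kw x kh."""
    return n * m * kw * kh * w * h


def lcnn_multiplies(n, m, kw, kh, w, h, k, s):
    """Multiplications for the lookup-based layer (assumed cost model)."""
    dictionary_pass = k * m * w * h          # S = X * D, shared by all n filters
    lookup_pass = n * s * kw * kh * w * h    # S * P, only s non-zeros per position
    return dictionary_pass + lookup_pass


# Hypothetical layer: 256 filters, 256 input channels, 3x3 kernels, 14x14 maps,
# a dictionary of 100 words and 3 components per position.
dense = conv_multiplies(256, 256, 3, 3, 14, 14)
lookup = lcnn_multiplies(256, 256, 3, 3, 14, 14, k=100, s=3)
print(dense, lookup, dense / lookup)
```

Under this model the saving grows as k becomes small relative to the number of filters n and as s becomes small relative to the number of input channels m, which is also where the approximation of the weights gets coarser.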

DISADVANTAGES

Accuracy is hurt because the weights are only approximated.