Face Recognition on Android with Face++

2016-11-17 00:01


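The recognition accuracy is very good, and the service returns a lot of information: the positions of the left and right eyes, the nose and the mouth, the size of the face, as well as age, gender, how much the person is smiling, and more.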

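First, register an account on the Face++ website so that you can obtain an API key and secret.

Then create an application.

Open the application's management page to view its key and secret.

Then download the SDK: http://www.faceplusplus.com.cn/dev-tools-sdks/

Next, create a project in Android Studio.

Import the SDK jar into the project.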
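To confirm the jar was really picked up before writing any Face++ code, a reflection check is enough. The sketch below is a plain-Java helper (`SdkCheck` and `isOnClasspath` are hypothetical names, not part of the Face++ SDK) that tries to load `com.facepp.http.HttpRequests`, the SDK class used later in this post. On Android you could call the same method from `onCreate` and log the result.

```java
public class SdkCheck {
    /** Returns true if the named class can be loaded, without initializing it. */
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, SdkCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // HttpRequests is the Face++ SDK class MainActivity uses to call the API
        boolean found = isOnClasspath("com.facepp.http.HttpRequests");
        System.out.println(found ? "Face++ SDK found" : "Face++ SDK jar is not on the classpath");
    }
}
```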

MainActivity.java

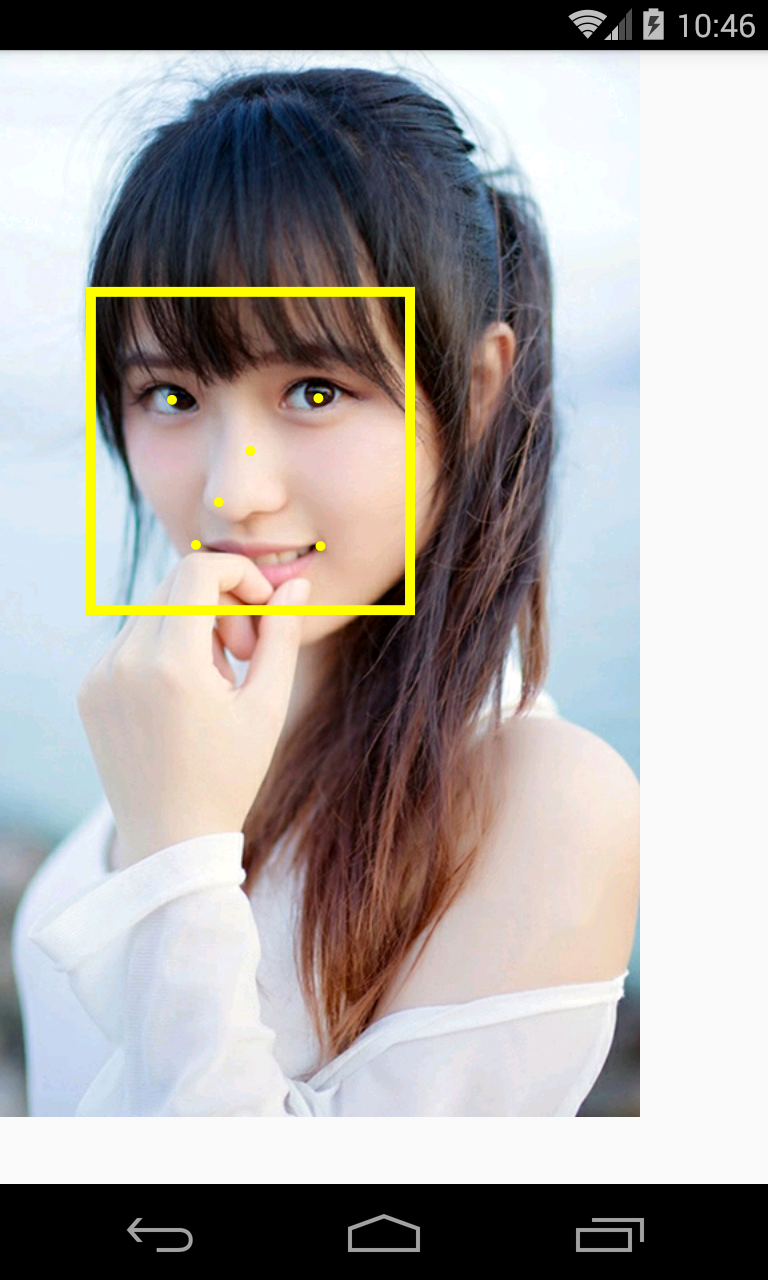

This is my test image, face.jpg:

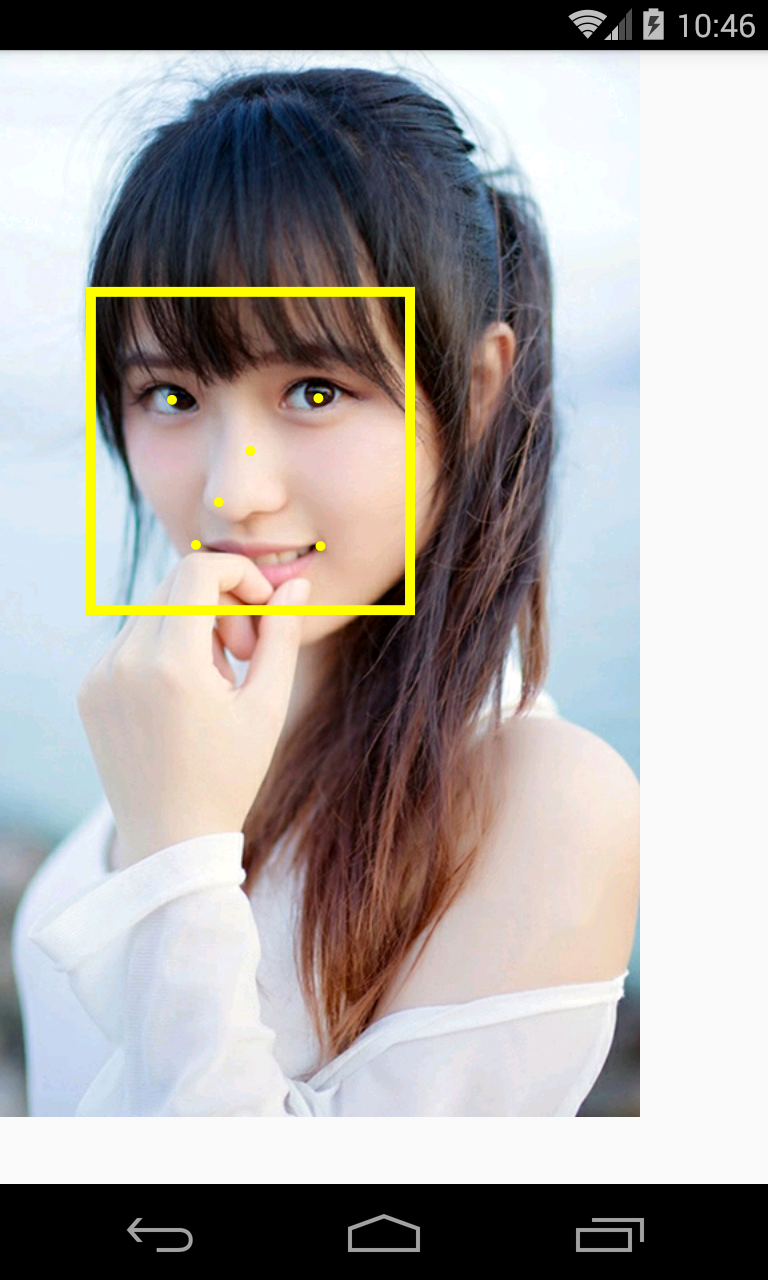

Result:

And this is the wrapper class I wrote, FacePlus.java:


MainActivity.java

```java
package com.example.facedemo; // hypothetical package name: use your app's own package so that R resolves

import android.app.Activity;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.graphics.Canvas;
import android.graphics.Color;
import android.graphics.Paint;
import android.os.Bundle;
import android.util.Log;
import android.view.ViewGroup;
import android.widget.ImageView;

import com.facepp.error.FaceppParseException;
import com.facepp.http.HttpRequests;
import com.facepp.http.PostParameters;

import org.json.JSONArray;
import org.json.JSONException;
import org.json.JSONObject;

import java.io.ByteArrayOutputStream;

public class MainActivity extends Activity {

    private static final String TAG = "MainActivity";
    // Replace with the key and secret shown on your Face++ application's management page
    private static final String API_KEY = "yourKey";
    private static final String API_SECRET = "yourSecret";

    private ImageView mIv;
    private Bitmap mFaceBitmap;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        // Create an ImageView and use it as the Activity's content view
        mIv = new ImageView(this);
        setContentView(mIv, new ViewGroup.LayoutParams(
                ViewGroup.LayoutParams.WRAP_CONTENT, ViewGroup.LayoutParams.WRAP_CONTENT));

        // The image to run face detection on
        mFaceBitmap = BitmapFactory.decodeResource(getResources(), R.mipmap.face);
        mIv.setImageBitmap(mFaceBitmap);

        // Network operations must not run on the main thread, so use a worker thread
        new Thread(new Runnable() {
            @Override
            public void run() {
                // Create the HTTP request client with the key and secret
                HttpRequests httpRequests = new HttpRequests(API_KEY, API_SECRET);

                // Write the bitmap into a byte stream as JPEG, then turn it into a byte array
                ByteArrayOutputStream out = new ByteArrayOutputStream();
                mFaceBitmap.compress(Bitmap.CompressFormat.JPEG, 100, out);
                byte[] bytes = out.toByteArray();

                // Parameters sent to the server: the image bytes
                PostParameters parameters = new PostParameters();
                parameters.setImg(bytes);

                try {
                    // The server's response arrives as a JSONObject
                    JSONObject jsonObject = httpRequests.detectionDetect(parameters);
                    Log.d(TAG, jsonObject.toString());

                    // Mark the returned points on a copy of the face image
                    final Bitmap bitmap = parseBitmap(mFaceBitmap, jsonObject);
                    if (bitmap == null) return;

                    // Views may only be updated on the UI thread
                    mIv.post(new Runnable() {
                        @Override
                        public void run() {
                            mIv.setImageBitmap(bitmap);
                        }
                    });
                } catch (FaceppParseException e) {
                    Log.e(TAG, "Face++ request failed", e);
                }
            }
        }).start();
    }

    /** Converts a coordinate given as a percentage (0-100) of an axis of `size` pixels to pixels. */
    private static float toPixels(double percent, int size) {
        return (float) (percent * size / 100);
    }

    /** Draws one named point (e.g. "eye_left") from a face's "position" object. */
    private static void drawLandmark(Canvas canvas, JSONObject position, String name,
                                     int width, int height, Paint paint) throws JSONException {
        JSONObject point = position.getJSONObject(name);
        canvas.drawPoint(toPixels(point.getDouble("x"), width),
                toPixels(point.getDouble("y"), height), paint);
    }

    /**
     * Draws the detected points and the face rectangle on a copy of the image.
     */
    private Bitmap parseBitmap(Bitmap source, JSONObject jsonObject) {
        if (source == null || jsonObject == null) return null;

        int w = source.getWidth();
        int h = source.getHeight();
        // Create an empty bitmap the same size as the source and draw on its canvas
        Bitmap bitmap = Bitmap.createBitmap(w, h, Bitmap.Config.ARGB_8888);
        Canvas canvas = new Canvas(bitmap);

        // Anti-aliased yellow paint, stroke width 10, round caps so points are drawn as dots
        Paint paint = new Paint(Paint.ANTI_ALIAS_FLAG);
        paint.setColor(Color.YELLOW);
        paint.setStrokeWidth(10);
        paint.setStrokeCap(Paint.Cap.ROUND);
        // STROKE so that drawRect draws only the outline of the face
        paint.setStyle(Paint.Style.STROKE);

        try {
            JSONArray faces = jsonObject.getJSONArray("face");
            // Draw the original image first
            canvas.drawBitmap(source, 0, 0, null);

            String[] landmarks = {"center", "eye_left", "eye_right", "nose", "mouth_left", "mouth_right"};
            for (int i = 0; i < faces.length(); i++) {
                // Detailed position information for this face
                JSONObject position = faces.getJSONObject(i).getJSONObject("position");

                // The values are not pixels but percentages of the image size, so they are converted
                for (String name : landmarks) {
                    drawLandmark(canvas, position, name, w, h, paint);
                }

                // Face rectangle: width and height are also percentages, centered on "center"
                JSONObject center = position.getJSONObject("center");
                float cx = toPixels(center.getDouble("x"), w);
                float cy = toPixels(center.getDouble("y"), h);
                float halfW = toPixels(position.getDouble("width"), w) / 2;
                float halfH = toPixels(position.getDouble("height"), h) / 2;
                canvas.drawRect(cx - halfW, cy - halfH, cx + halfW, cy + halfH, paint);
            }
            return bitmap;
        } catch (JSONException e) {
            Log.e(TAG, "Failed to parse the Face++ response", e);
            return null;
        }
    }
}
```

When the HTTP request object is created (`new HttpRequests(API_KEY, API_SECRET)`), pass in the key and secret you obtained above.
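The percentage-to-pixel conversion done in `parseBitmap` is the easiest part to get wrong, so here it is in isolation as plain Java that can be tested without a device. Like the code in this post, it assumes the `detectionDetect` response gives every point and the face `width`/`height` as percentages (0 to 100) of the image's width and height. The class and method names (`FaceBox`, `toPixels`, `faceRect`) are hypothetical.

```java
import java.util.Arrays;

public class FaceBox {
    /** Converts a percentage coordinate (0-100) to pixels along an axis of `size` pixels. */
    static float toPixels(double percent, int size) {
        return (float) (percent * size / 100.0);
    }

    /**
     * Returns {left, top, right, bottom} in pixels for a face whose center, width and height
     * are given as percentages of an imageWidth x imageHeight image.
     */
    static float[] faceRect(double centerX, double centerY, double width, double height,
                            int imageWidth, int imageHeight) {
        float cx = toPixels(centerX, imageWidth);
        float cy = toPixels(centerY, imageHeight);
        float halfW = toPixels(width, imageWidth) / 2;
        float halfH = toPixels(height, imageHeight) / 2;
        return new float[] {cx - halfW, cy - halfH, cx + halfW, cy + halfH};
    }

    public static void main(String[] args) {
        // A face centered in a 400 x 300 image, 25% as wide and 40% as tall as the image
        float[] r = faceRect(50, 50, 25, 40, 400, 300);
        System.out.println(Arrays.toString(r));
    }
}
```

For that example the center is (200, 150) in pixels and the face is 100 × 120 pixels, so the rectangle is left 150, top 90, right 250, bottom 210. The x values are always scaled by the image width and the y values by the image height, which matters for non-square images.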

