Setting up a Spark cluster in detail, with problems encountered and their fixes (Part 2)

2016-11-16 15:51

(2) Configuring passwordless SSH access between the cluster machines

On the master node:

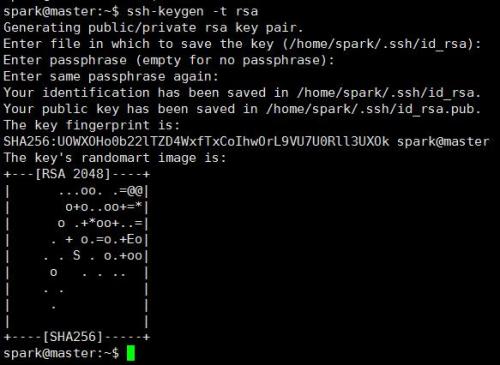

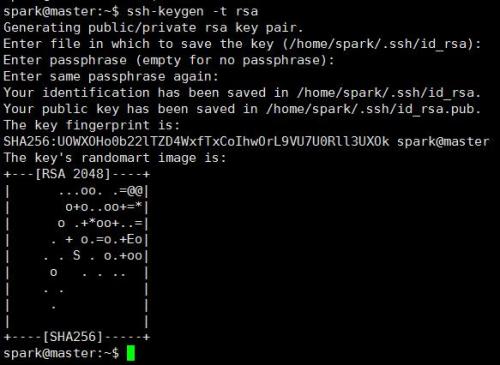

root@master:/home# su - spark

spark@master:~$ ssh-keygen -t rsa    # press Enter at every prompt to accept the defaults

spark@master:~$ cd .ssh/

spark@master:~/.ssh$ ls

id_rsa id_rsa.pub

spark@master:~/.ssh$ cat id_rsa.pub > authorized_keys

spark@master:~/.ssh$ scp spark@master:~/.ssh/id_rsa.pub ./master_rsa.pub

spark@master:~/.ssh$ ls

authorized_keys id_rsa id_rsa.pub known_hosts master_rsa.pub

spark@master:~/.ssh$ cat master_rsa.pub >>authorized_keys

On the worker1 node:

Follow the same procedure as on the master node.

On the worker2 node: follow the same procedure as on the master node.
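The per-node key generation above can also be done without any prompts. The following is a minimal sketch, not the article's own method: `setup_key` is a hypothetical helper name, and the `chmod` calls are an addition based on the assumption that `sshd` runs with its usual strict permission checks on `~/.ssh` and `authorized_keys`.

```shell
#!/bin/sh
# Hypothetical helper (not from the article): create an RSA key pair without
# prompts and authorize it for logins to this account.
# Usage: setup_key [DIR]    DIR defaults to ~/.ssh
setup_key() {
    dir="${1:-$HOME/.ssh}"
    mkdir -p "$dir" && chmod 700 "$dir"

    # -N "" sets an empty passphrase, -f names the key file, -q keeps it quiet.
    # An existing key is left untouched.
    [ -f "$dir/id_rsa" ] || ssh-keygen -t rsa -N "" -f "$dir/id_rsa" -q

    # Append the public key only if that exact line is not already present.
    touch "$dir/authorized_keys"
    grep -qxFf "$dir/id_rsa.pub" "$dir/authorized_keys" ||
        cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
    chmod 600 "$dir/authorized_keys"
}
```

Running `setup_key` as the `spark` user on each node would leave that node with a key pair and its own key authorized. Unlike `cat id_rsa.pub > authorized_keys`, the append does not overwrite keys that are already listed.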

After completing the steps above:

On the master node:

spark@master:~/.ssh$ scp spark@worker1:~/.ssh/id_rsa.pub ./worker1_rsa.pub

Note: run this from inside the ~/.ssh directory.

spark@master:~/.ssh$ cat worker1_rsa.pub >>authorized_keys

spark@master:~/.ssh$ scp spark@worker2:~/.ssh/id_rsa.pub ./worker2_rsa.pub

spark@master:~/.ssh$ cat worker2_rsa.pub >>authorized_keys

On the worker1 node:

spark@worker1:~/.ssh$ scp spark@worker2:~/.ssh/id_rsa.pub ./worker2_rsa.pub

spark@worker1:~/.ssh$ cat worker2_rsa.pub >>authorized_keys

On the worker2 node:

spark@worker2:~/.ssh$ scp spark@worker1:~/.ssh/id_rsa.pub ./worker1_rsa.pub

spark@worker2:~/.ssh$ cat worker1_rsa.pub >>authorized_keys

Verifying the configuration:
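Before trying the logins, it may be worth confirming that the copied public keys really ended up in `authorized_keys`. For a passwordless login from node A to node B, A's public key has to be listed in B's `authorized_keys`; as written, the worker steps do not show master's key being fetched, so a login from master that still asks for a password is a likely sign of a missing key. The sketch below uses a hypothetical helper, `check_keys`, and only checks the `*.pub` files that exist in the given directory.

```shell
#!/bin/sh
# Hypothetical helper (not from the article): report whether each *.pub file
# in a directory appears as a line in that directory's authorized_keys.
# Usage: check_keys [DIR]    DIR defaults to ~/.ssh
check_keys() {
    dir="${1:-$HOME/.ssh}"
    missing=0
    for pub in "$dir"/*.pub; do
        [ -f "$pub" ] || continue
        # -F fixed string, -x whole-line match, -q quiet, -f read pattern from file
        if grep -qxFf "$pub" "$dir/authorized_keys" 2>/dev/null; then
            echo "present: $(basename "$pub")"
        else
            echo "MISSING: $(basename "$pub")"
            missing=1
        fi
    done
    # Non-zero exit status when at least one key is missing
    return "$missing"
}
```

Calling `check_keys` on each node prints one line per public key file and returns a non-zero status if any of them is not yet authorized.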

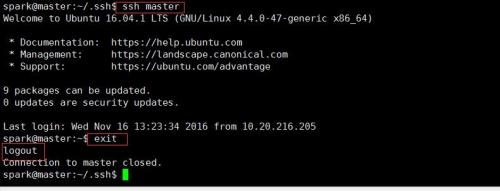

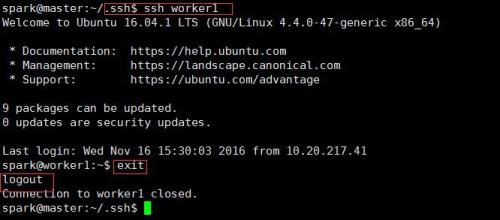

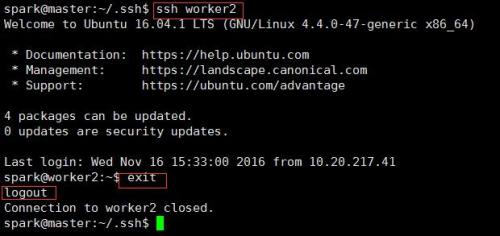

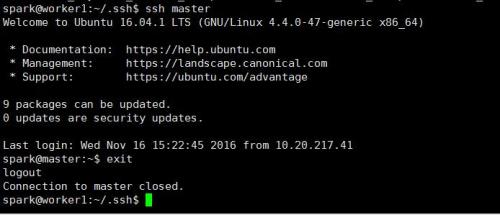

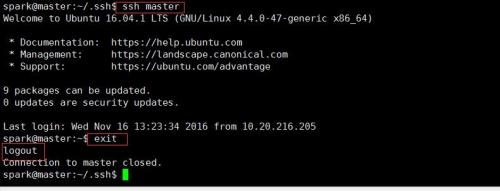

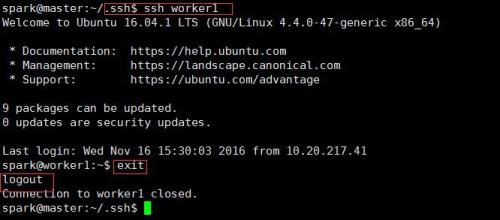

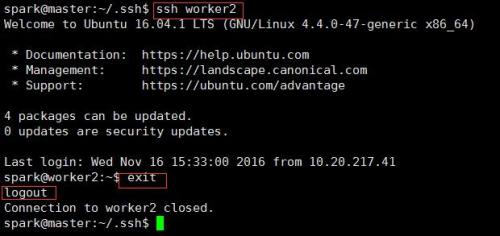

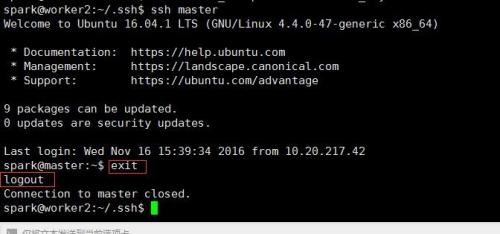

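From the master node, log in to master itself, worker1, and worker2 in turn. A password may be requested on the first attempt; after exiting and running ssh again, it should no longer be needed.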

spark@master:~/.ssh$ ssh master

spark@master:~/.ssh$ ssh worker1

spark@master:~/.ssh$ ssh worker2

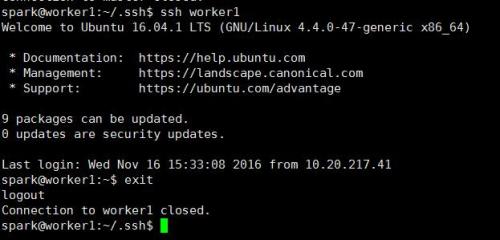

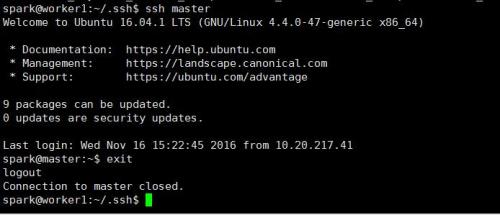

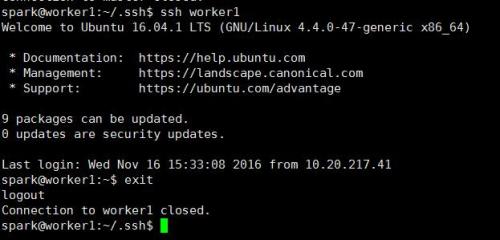

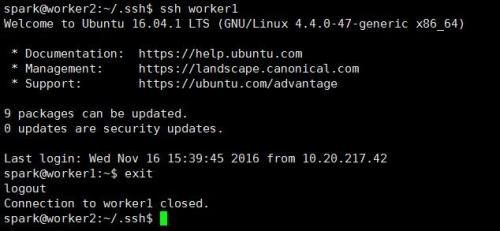

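From the worker1 node, log in to worker1 itself, master, and worker2. A password may be requested on the first attempt; after exiting and running ssh again, it should no longer be needed.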

spark@worker1:~/.ssh$ ssh master

spark@worker1:~/.ssh$ ssh worker1

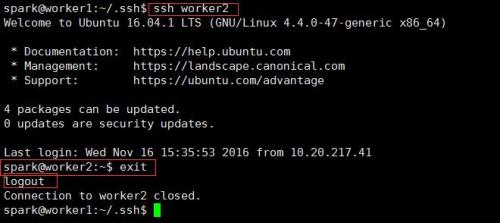

spark@worker1:~/.ssh$ ssh worker2

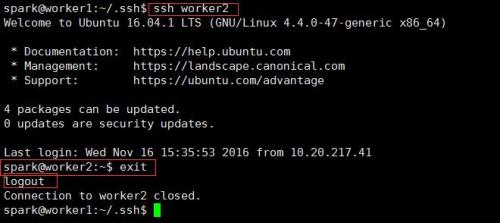

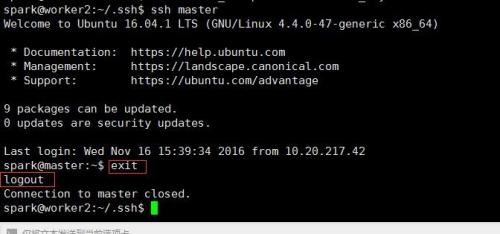

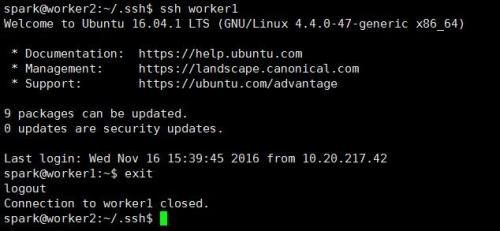

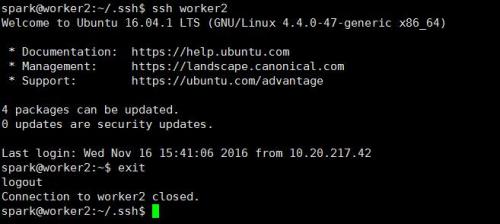

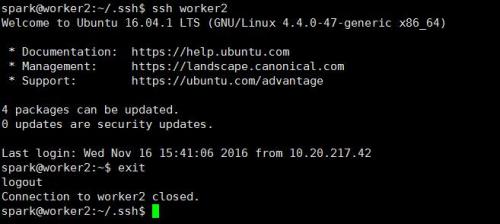

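From the worker2 node, log in to worker2 itself, master, and worker1. A password may be requested on the first attempt; after exiting and running ssh again, it should no longer be needed.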

spark@worker2:~/.ssh$ ssh master

spark@worker2:~/.ssh$ ssh worker1

spark@worker2:~/.ssh$ ssh worker2

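Note: after logging in to another node, always remember to exit before continuing, otherwise later commands will run on the wrong machine.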
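The manual logins above can also be checked in one pass. This is a minimal sketch under a few assumptions: `check_logins` is a hypothetical helper name, the host names are the article's three nodes, and each host key has already been accepted once interactively (with `BatchMode=yes`, ssh cannot ask to confirm an unknown host key, so such a host would also be reported as failed).

```shell
#!/bin/sh
# Hypothetical helper (not from the article): attempt a non-interactive login
# to each host and report whether it succeeded.
# Usage: check_logins [HOST...]    defaults to master worker1 worker2
check_logins() {
    [ "$#" -gt 0 ] || set -- master worker1 worker2
    failed=0
    for host in "$@"; do
        # -n: do not read stdin; BatchMode=yes: fail instead of prompting for a
        # password; ConnectTimeout=5: do not hang on an unreachable host.
        if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "ok: $host"
        else
            echo "FAILED: $host"
            failed=1
        fi
    done
    # Non-zero exit status when at least one login failed
    return "$failed"
}
```

Because each connection only runs `true` and returns, there is no remote session left open that would need an `exit`.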

The next part begins installing Hadoop.
