xtion pro live 单目视觉半直接法(SVO)实践

2016-07-20 10:30

441 查看

svo的下载编译可以查看教程:https://github.com/uzh-rpg/rpg_svo/wiki

跑完下作者提供的数据集后,我就想用自己手边的摄像头来看下实际效果。

但要用自己的摄像头首先得对摄像头进行标定,获得相机的参数,这里我采用的是

ATAN模型,标定的是xtion_pro_live 的rgb摄像头。

(相机标定:https://github.com/uzh-rpg/rpg_svo/wiki/Camera-Calibration)

要标定它的话,首先我们要下载PTAM,用ptam的cameracalibrator来标定。

下载ptam包,编译之前安装依赖项:

成功编译后,进入cameracalibrator.launch修改输入topic :

这里你会有疑问输入图像/mono_image从哪里来呢?

ptam的cameracalibrator需要的图像是单通道的灰度图像,而xtion_pro_live提供的/camera/rgb/image_raw是3通道的rgb图像,所以需要转换格式:/camera/rgb/image_raw –>/mono_image。所以/mono_image就是转换后的单通道灰度图像。

那怎么进行转换呢?不用担心,这里有转换程序:

你需要把这个程序放入ros的空间的某个包中编译,我放到了自己的workspace空间下的imu_dep_fusion包中来编译,生成节点convertimage。

好麻烦啊,我不想编译代码,可以啊。来个现成的,在launch中加入这句,就ok了:

好了搞定了输入数据,下面需要对配置参数进行修改:进入PtamFixParams.yaml文件第2、3行修改xtion_pro_live的图像分辨率为:

做完了这些,你还需要把ptam中的calib_pattern.pdf打印出来作为标定棋盘,你就可以运行校准程序了,在终端中输入:

cameracalibrator.launch具体内容:

效果如下:

校准的具体过程见Tutorials:

http://wiki.ros.org/ethzasl_ptam/Tutorials/camera_calibration

得到校准参数后,在 svo_ros 下的新建param/camera_xtion.yaml文件,将参数写如其中:

svo需要的灰度图像已经有了:/mono_image,svo需要的相机模型已经标定了:camera_xtion.yaml。下面就来用xtion_pro_live来运行下svo吧:

把svo下的live.launch运行起来:

live.launch 具体内容:

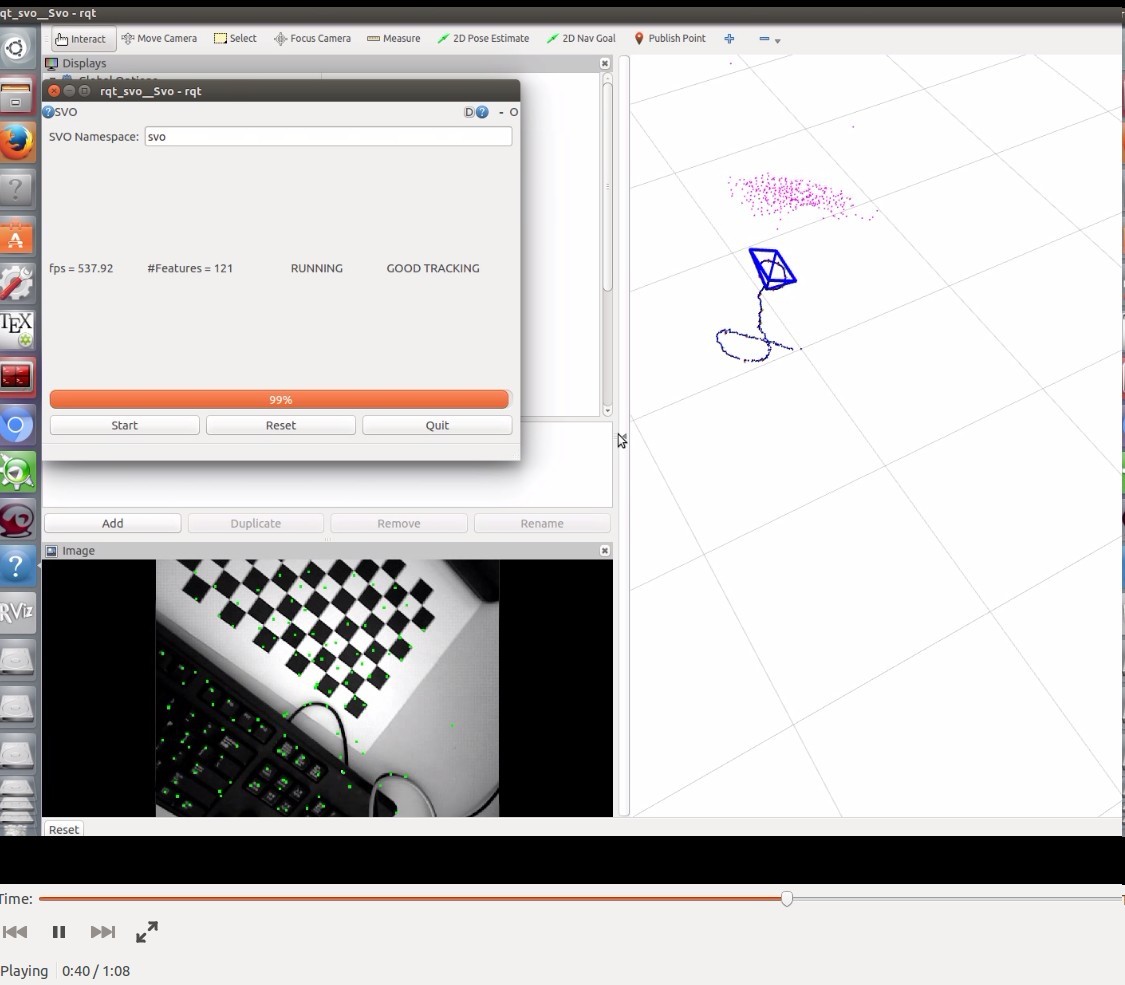

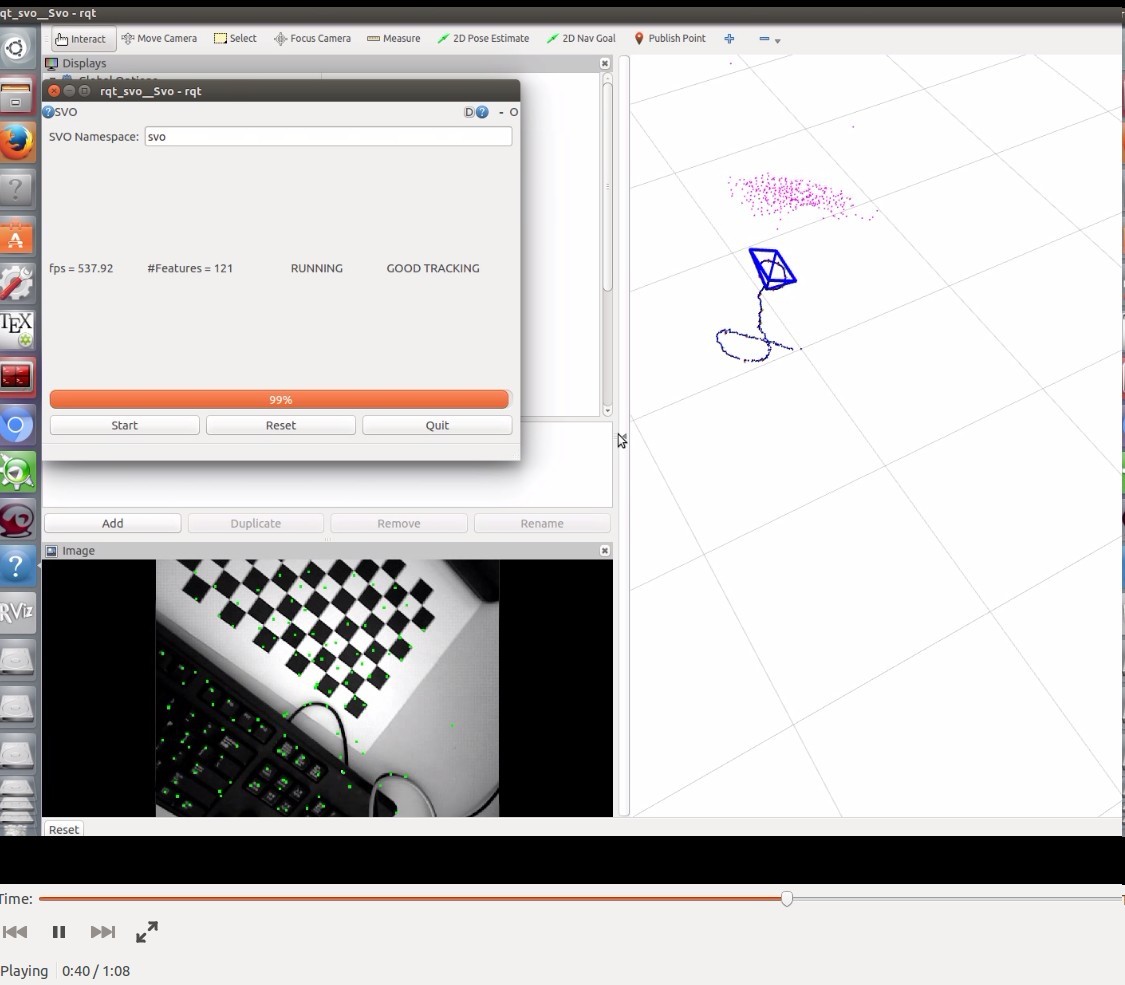

效果:

实验视频:

http://pan.baidu.com/s/1miuB0vu 密码:3rr6

进行到这里好像基本结束了,但有人可能会说xtion_pro_live不是针孔相机模型吗?( ⊙ o ⊙ )啊哦!好像是啊!这岂不是全错了????其实用atan和pinhole模型标定好像都可以,只不过效果上看好像atan的更鲁棒些,所以上面讲的是atan模型,下面给出pinhole模型标定过程:

先把标定程序下载下来:

跑完下作者提供的数据集后,我就想用自己手边的摄像头来看下实际效果。

但要用自己的摄像头首先得对摄像头进行标定,获得相机的参数,这里我采用的是

ATAN模型,标定的是xtion_pro_live 的rgb摄像头。

(相机标定:https://github.com/uzh-rpg/rpg_svo/wiki/Camera-Calibration)

要标定它的话,首先我们要下载PTAM,用ptam的cameracalibrator来标定。

下载ptam包,编译之前安装依赖项:

sudo apt-get install freeglut3-dev sudo apt-get install libblas-dev sudo apt-get install liblapacke-dev

成功编译后,进入cameracalibrator.launch修改输入topic :

<launch> <node name="cameracalibrator" pkg="ptam" type="cameracalibrator" clear_params="true" output="screen"> <remap from="image" to="/mono_image" /> <remap from="pose" to="pose"/> <rosparam file="$(find ptam)/PtamFixParams.yaml"/> </node> </launch>

这里你会有疑问输入图像/mono_image从哪里来呢?

ptam的cameracalibrator需要的图像是单通道的灰度图像,而xtion_pro_live提供的/camera/rgb/image_raw是3通道的rgb图像,所以需要转换格式:/camera/rgb/image_raw –>/mono_image。所以/mono_image就是转换后的单通道灰度图像。

那怎么进行转换呢?不用担心,这里有转换程序:

//rgb图像转换为灰度图像的程序

#include <ros/ros.h>

#include <opencv2/opencv.hpp>

#include <opencv/cv.h>

#include <opencv/highgui.h>

#include <image_transport/image_transport.h>

#include <cv_bridge/cv_bridge.h>

#include <sensor_msgs/image_encodings.h>

#include "ros/ros.h"

#include "std_msgs/String.h"

ros::Publisher image_pub ;

void chatterCallback(const sensor_msgs::ImageConstPtr& msg)

{

cv_bridge::CvImagePtr cv_ptr;

cv_ptr = cv_bridge::toCvCopy(msg,sensor_msgs::image_encodings::BGR8);

cv::Mat image_gray;

cv::cvtColor(cv_ptr->image, image_gray,CV_BGR2GRAY);//灰度化

cv_bridge::CvImage cvi;

sensor_msgs::Image ros_img;

ros::Time time=ros::Time::now();

cvi.header.stamp = time;

cvi.header.frame_id = "image";

cvi.encoding = "mono8";

cvi.image = image_gray;

cvi.toImageMsg(ros_img);

image_pub.publish(cvi.toImageMsg());

}

int main(int argc, char **argv)

{

ros::init(argc, argv, "img_tran");

ros::NodeHandle n;

ros::Subscriber sub = n.subscribe("/camera/rgb/image_raw", 1000, chatterCallback);

image_pub = n.advertise<sensor_msgs::Image>("/mono_image", 1000);

ros::spin();

return 0;

}你需要把这个程序放入ros的空间的某个包中编译,我放到了自己的workspace空间下的imu_dep_fusion包中来编译,生成节点convertimage。

好麻烦啊,我不想编译代码,可以啊。来个现成的,在launch中加入这句,就ok了:

<node name="image_proc" pkg="image_proc" type="image_proc" ns="camera"> <remap from="camera_info" to="/camera/rgb/image_info" /> <remap from="image_raw" to="/camera/rgb/image_raw" /> <remap from="image_mono" to="/mono_image" /> </node>

好了搞定了输入数据,下面需要对配置参数进行修改:进入PtamFixParams.yaml文件第2、3行修改xtion_pro_live的图像分辨率为:

ImageSizeX: 640 ImageSizeY: 480

做完了这些,你还需要把ptam中的calib_pattern.pdf打印出来作为标定棋盘,你就可以运行校准程序了,在终端中输入:

roslaunch ptam cameracalibrator.launch

cameracalibrator.launch具体内容:

<?xml version="1.0"?> <launch> <include file="$(find openni2_launch)/launch/openni2.launch" /> <node pkg="imu_dep_fusion" type="convertimage" name="convertimage" output="screen"/> //或者这样: <!--<node name="image_proc" pkg="image_proc" type="image_proc" ns="camera"> <remap from="camera_info" to="/camera/rgb/image_info" /> <remap from="image_raw" to="/camera/rgb/image_raw" /> <remap from="image_mono" to="/mono_image" />--> <node name="cameracalibrator" pkg="ptam" type="cameracalibrator" clear_params="true" output="screen"> <!-- <remap from="image" to="bluefox_ros_node/image_raw" /> --> <remap from="image" to="/mono_image" /> <remap from="pose" to="pose"/> <rosparam file="$(find ptam)/PtamFixParams.yaml"/> </node> </launch>

效果如下:

校准的具体过程见Tutorials:

http://wiki.ros.org/ethzasl_ptam/Tutorials/camera_calibration

得到校准参数后,在 svo_ros 下的新建param/camera_xtion.yaml文件,将参数写如其中:

cam_model: ATAN cam_width: 640 cam_height: 480 cam_fx: 0.832241 cam_fy: 1.10712 cam_cx: 0.488962 cam_cy: 0.492997 cam_d0: 0.066145

svo需要的灰度图像已经有了:/mono_image,svo需要的相机模型已经标定了:camera_xtion.yaml。下面就来用xtion_pro_live来运行下svo吧:

把svo下的live.launch运行起来:

roslaunch svo_ros live.launch

live.launch 具体内容:

<?xml version="1.0"?> <launch> <include file="$(find openni2_launch)/launch/openni2.launch" /> <node pkg="imu_dep_fusion" type="convertimage" name="convertimage" output="screen"/> //或者这样: <!--<node name="image_proc" pkg="image_proc" type="image_proc" ns="camera"> <remap from="camera_info" to="/camera/rgb/image_info" /> <remap from="image_raw" to="/camera/rgb/image_raw" /> <remap from="image_mono" to="/mono_image" />--> <node pkg="svo_ros" type="vo" name="svo" clear_params="true" output="screen"> <!-- Camera topic to subscribe to --> <param name="cam_topic" value="/mono_image" type="str" /> <!-- Camera calibration file --> <rosparam file="$(find svo_ros)/param/camera_xtion.yaml" /> <!-- Default parameter settings: choose between vo_fast and vo_accurate --> <rosparam file="$(find svo_ros)/param/vo_fast.yaml" /> </node> <node pkg="rqt_svo" type="rqt_svo" name="rqt_svo" /> <node pkg="rviz" type="rviz" name="odometry_rviz" args="-d $(find svo_ros)/rviz_config.rviz"/> </launch>

效果:

实验视频:

http://pan.baidu.com/s/1miuB0vu 密码:3rr6

进行到这里好像基本结束了,但有人可能会说xtion_pro_live不是针孔相机模型吗?( ⊙ o ⊙ )啊哦!好像是啊!这岂不是全错了????其实用atan和pinhole模型标定好像都可以,只不过效果上看好像atan的更鲁棒些,所以上面讲的是atan模型,下面给出pinhole模型标定过程:

先把标定程序下载下来:

git clone https://github.com/ros-perception/image_pipeline.git[/code]

别着急编译,去把最新的依赖文件vision_opencv下载下来:git clone https://github.com/ros-perception/vision_opencv.git

扔到空间内的src下编译,然后开始标定吧:roslaunch openni2_launch openni2.launch rosrun camera_calibration cameracalibrator.py --size 11x7 --square 0.02 image:=/camera/rgb/image camera:=/my_camera --no-service-check

有人会问了不是12x8个方格吗?对啊,所以有11x7内点啊。

有人会问camera:=/my_camera这是什么东东?相机device。因为有–no-service-check所以/my_camera可以随便写!!不检查。但不可以省略。下面的过程可以看下:

教程:http://wiki.ros.org/camera_calibration/Tutorials/MonocularCalibration

校准完了把参数写入camera_xtion_pinhole.yaml文件,在live.launch中将camera_xtion.yaml改为camera_pinhole.yaml。

标定参数:cam_model: Pinhole cam_width: 640 cam_height: 480 cam_fx: 534.609558 cam_fy: 536.659729 cam_cx: 325.287103 cam_cy: 240.129954 cam_d0: 0.037906 cam_d1: -0.101329 cam_d2: 0.000831 cam_d3: 0.005041

live.launch:<?xml version="1.0"?> <launch> <include file="$(find openni2_launch)/launch/openni2.launch" /> <node pkg="imu_dep_fusion" type="convertimage" name="convertimage" output="screen"/> //或者这样: <!--<node name="image_proc" pkg="image_proc" type="image_proc" ns="camera"> <remap from="camera_info" to="/camera/rgb/image_info" /> <remap from="image_raw" to="/camera/rgb/image_raw" /> <remap from="image_mono" to="/mono_image" />--> <node pkg="svo_ros" type="vo" name="svo" clear_params="true" output="screen"> <!-- Camera topic to subscribe to --> <param name="cam_topic" value="/mono_image" type="str" /> <!-- Camera calibration file --> <rosparam file="$(find svo_ros)/param/camera_xtion_pinhole.yaml" /> <!-- Default parameter settings: choose between vo_fast and vo_accurate --> <rosparam file="$(find svo_ros)/param/vo_fast.yaml" /> </node> <node pkg="rqt_svo" type="rqt_svo" name="rqt_svo" /> <node pkg="rviz" type="rviz" name="odometry_rviz" args="-d $(find svo_ros)/rviz_config.rviz"/> </launch>

下面就可以跑live.launch了。这是实验视频:

http://pan.baidu.com/s/1jIO1IkQ 密码:qq6n

相关文章推荐

- SLAM 介绍

- slam算法研究

- SVO深度解析(三)之深度滤波(建图部分)

- SVO深度解析(二)之跟踪部分

- SVO深度解析(一)之简介和评价

- 非滤波单目视觉SLAM系统研究

- SVO-REMODE编译运行方法

- Ubuntu14.04+ROS Indigo+SVO(Semi-direct Visual Odometry)

- svo的Supplementary matterial 推导过程

- ubuntu16.04 ROS环境下配置和运行SVO

- linux下安装lnmp环境

- 为mutable类型的容器(array,set等)添加kvo,有点麻烦,供参考和了解下吧

- ThinkPHP中initialize和construct的不同

- HDU 5724 Chess (博弈)

- Hbase基本使用示例

- 纯原生js改良版

- Spring使用Cache

- Java 定时器的使用:每天定时执行任务

- Android中Toolbar的使用 AppCompatActivity必备

- Java 定时器的使用:每天定时执行任务