A getting-started program for connecting from Windows 7 to a Hadoop 2.0 cluster on remote Linux servers, using Maven and Eclipse

2016-07-07 19:43


1. Create a standardized Java project with Maven


D:\workspace\java>mvn archetype:generate -DarchetypeGroupId=org.apache.maven.archetypes -DgroupId=siat.hadoop -DartifactId=TestHadoop -DpackageName=siat.hadoop -Dversion=1.0-SNAPSHOT -DinteractiveMode=false

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building Maven Stub Project (No POM) 1

[INFO] ------------------------------------------------------------------------

[INFO]

[INFO] >>> maven-archetype-plugin:2.2:generate (default-cli) > generate-sources @ standalone-pom >>>

[INFO]

[INFO] <<< maven-archetype-plugin:2.2:generate (default-cli) < generate-sources @ standalone-pom <<<

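To explain: once parameters such as -DgroupId=siat.hadoop -DartifactId=TestHadoop -DpackageName=siat.hadoop are given, the project directory and the package folders are created automatically; there is no need to create them by hand.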
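As a rough illustration of what "created automatically" means, the sketch below lists the files that maven-archetype-quickstart is expected to generate for the parameters used above. The class and method names (`QuickstartLayout`, `expectedFiles`) are made up for this example, and the layout (pom.xml, App.java, AppTest.java) is an assumption based on the quickstart archetype's usual output, not something the log above prints.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Sketch: lists the files maven-archetype-quickstart is expected to generate. */
final class QuickstartLayout {

    /** Resolves src/<kind>/java/<package as folders> under the project directory. */
    static Path sourceDir(Path projectDir, String kind, String packageName) {
        Path dir = projectDir.resolve("src").resolve(kind).resolve("java");
        // A package such as "siat.hadoop" becomes the folders siat/hadoop.
        for (String part : packageName.split("\\.")) {
            dir = dir.resolve(part);
        }
        return dir;
    }

    /** The artifactId names the project folder; the package names the source folders. */
    static List<Path> expectedFiles(Path baseDir, String artifactId, String packageName) {
        Path project = baseDir.resolve(artifactId);
        List<Path> files = new ArrayList<>();
        files.add(project.resolve("pom.xml"));
        files.add(sourceDir(project, "main", packageName).resolve("App.java"));
        files.add(sourceDir(project, "test", packageName).resolve("AppTest.java"));
        return files;
    }

    public static void main(String[] args) {
        // Values taken from the mvn archetype:generate command above.
        for (Path file : expectedFiles(Paths.get("."), "TestHadoop", "siat.hadoop")) {
            System.out.println(file);
        }
    }
}
```

Running it from D:\workspace\java after the archetype command should print paths that exist on disk; if they do not, the generation most likely did not finish.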

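If the command above runs cleanly, skip this part and go straight to step 2! It normally succeeds quickly. If an exception occurs, answer the prompts as shown below. (Note: in that case it is best to rerun the command above, otherwise hard-to-solve problems may appear later.)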

Choose a number or apply filter (format: [groupId:]artifactId, case sensitive contains): 493: 1 (Note: if a hint such as "enter or space is ..." follows, just press Enter or the space bar.)

Choose am.ik.archetype:spring-boot-blank-archetype version:

1: 0.9.0

2: 0.9.1

3: 0.9.2

Choose a number: 3: 3 (enter one of the version ids)

Downloading: https://repo.maven.apache.org/maven2/am/ik/archetype/spring-boot-blank-archetype/0.9.2/spring-boot-blank-archetype-0.9.2.jar

Downloaded: https://repo.maven.apache.org/maven2/am/ik/archetype/spring-boot-blank-archetype/0.9.2/spring-boot-blank-archetype-0.9.2.jar (6 KB at 3.5 KB/sec)

Downloading: https://repo.maven.apache.org/maven2/am/ik/archetype/spring-boot-blank-archetype/0.9.2/spring-boot-blank-archetype-0.9.2.pom

Downloaded: https://repo.maven.apache.org/maven2/am/ik/archetype/spring-boot-blank-archetype/0.9.2/spring-boot-blank-archetype-0.9.2.pom (3 KB at 5.7 KB/sec)

[INFO] Using property: groupId = org.conan.myhadoop.mr

Define value for property 'artifactId': : myHadoop (enter whatever name you need)

Define value for property 'version': 1.0-SNAPSHOT: : 1.0-SNAPSHOT (just type the value shown before it)

[INFO] Using property: package = org.conan.myhadoop.mr

Confirm properties configuration:

groupId: org.conan.myhadoop.mr

artifactId: myHadoop

version: 1.0-SNAPSHOT

package: org.conan.myhadoop.mr

Y: : Y (enter Y if everything is correct)

[INFO] ----------------------------------------------------------------------------

[INFO] Using following parameters for creating project from Archetype: spring-boot-blank-archetype:0.9.2

[INFO] ----------------------------------------------------------------------------

[INFO] Parameter: groupId, Value: org.conan.myhadoop.mr

[INFO] Parameter: artifactId, Value: myHadoop

[INFO] Parameter: version, Value: 1.0-SNAPSHOT

[INFO] Parameter: package, Value: org.conan.myhadoop.mr

[INFO] Parameter: packageInPathFormat, Value: org/conan/myhadoop/mr

[INFO] Parameter: version, Value: 1.0-SNAPSHOT

[INFO] Parameter: package, Value: org.conan.myhadoop.mr

[INFO] Parameter: groupId, Value: org.conan.myhadoop.mr

[INFO] Parameter: artifactId, Value: myHadoop

[WARNING] The directory D:\workspace\java\myHadoop already exists.

[INFO] project created from Archetype in dir: D:\workspace\java\myHadoop

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 12:05 min

[INFO] Finished at: 2014-10-23T14:23:02+08:00

[INFO] Final Memory: 10M/26M

[INFO] ------------------------------------------------------------------------

D:\workspace\java>

2. Enter the project directory and run mvn clean install (you can also run mvn clean first and then mvn install)

D:\workspace\java>cd TestHadoop

D:\workspace\java\TestHadoop>mvn clean install

...

[INFO] Installing D:\workspace\java\TestHadoop\target\TestHadoop-1.0-SNAPSHOT.jar to C:\Users\michael\.m2\repository\siat\hadoop\TestHadoop\1.0-SNAPSHOT\TestHadoop-1.0-SNAPSHOT.jar

[INFO] Installing D:\workspace\java\TestHadoop\pom.xml to C:\Users\michael\.m2\repository\siat\hadoop\TestHadoop\1.0-SNAPSHOT\TestHadoop-1.0-SNAPSHOT.pom

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 25.122 s

[INFO] Finished at: 2014-10-23T15:50:55+08:00

[INFO] Final Memory: 11M/28M

[INFO] ------------------------------------------------------------------------

D:\workspace\java\TestHadoop>

[INFO]

[INFO] --- maven-archetype-plugin:2.2:generate (default-cli) @ standalone-pom ---

[INFO] Generating project in Batch mode

[INFO] No archetype defined. Using maven-archetype-quickstart (org.apache.maven.archetypes:maven-archetype-quickstart:1.0)

Downloading: https://repo.maven.apache.org/maven2/org/apache/maven/archetypes/maven-archetype-quickstart/1.0/maven-archetype-quickstart-1.0.jar

Downloaded: https://repo.maven.apache.org/maven2/org/apache/maven/archetypes/maven-archetype-quickstart/1.0/maven-archetype-quickstart-1.0.jar (5 KB at 2.6 KB/sec)

Downloading: https://repo.maven.apache.org/maven2/org/apache/maven/archetypes/maven-archetype-quickstart/1.0/maven-archetype-quickstart-1.0.pom

Downloaded: https://repo.maven.apache.org/maven2/org/apache/maven/archetypes/maven-archetype-quickstart/1.0/maven-archetype-quickstart-1.0.pom (703 B at 1.5 KB/sec)

[INFO] ----------------------------------------------------------------------------

[INFO] Using following parameters for creating project from Old (1.x) Archetype: maven-archetype-quickstart:1.0

[INFO] ----------------------------------------------------------------------------

[INFO] Parameter: groupId, Value: siat.hadoop

[INFO] Parameter: packageName, Value: siat.hadoop

[INFO] Parameter: package, Value: siat.hadoop

[INFO] Parameter: artifactId, Value: TestHadoop

[INFO] Parameter: basedir, Value: D:\workspace\java

[INFO] Parameter: version, Value: 1.0-SNAPSHOT

[INFO] project created from Old (1.x) Archetype in dir: D:\workspace\java\TestHadoop

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 11.390 s

[INFO] Finished at: 2014-10-23T15:45:02+08:00

[INFO] Final Memory: 11M/27M

[INFO] ------------------------------------------------------------------------

D:\workspace\java>

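The error below only appears when step 1 did not run cleanly. I tried many of the fixes suggested online and none of them worked. If you hit it, start again from step 1! In other words, a clean run of step 1 is what keeps this step from failing.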

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.1:compile (default-compile) on project myHadoop: Fatal error compiling: invalid target release: 1.8 -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoExecutionException

D:\workspace\java\myHadoop>
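The original does not identify the cause of this error. One plausible explanation (an assumption here, not something the author confirmed) is that the JDK running Maven is older than Java 8 while the generated project asks the compiler for target 1.8. The sketch below, with made-up names (`JdkTargetCheck`, `canCompileFor`), prints the running Java version and checks that necessary condition. It only says something about Maven if it is run with the same JDK that `mvn -version` reports.

```java
/** Sketch: checks whether the running JDK is new enough for a given target release. */
final class JdkTargetCheck {

    /** Parses "1.7", "1.8", "9", "11" and so on into the feature number 7, 8, 9, 11. */
    static int featureVersion(String specVersion) {
        String[] parts = specVersion.trim().split("\\.");
        int first = Integer.parseInt(parts[0]);
        // Before Java 9 versions were written as 1.x, where x is the feature number.
        if (first == 1 && parts.length > 1) {
            return Integer.parseInt(parts[1]);
        }
        return first;
    }

    /** Necessary condition only: a JDK cannot compile for a release newer than itself. */
    static boolean canCompileFor(String runningSpecVersion, String targetRelease) {
        return featureVersion(runningSpecVersion) >= featureVersion(targetRelease);
    }

    public static void main(String[] args) {
        String running = System.getProperty("java.specification.version");
        System.out.println("java.home = " + System.getProperty("java.home"));
        System.out.println("java.specification.version = " + running);
        System.out.println("new enough for target 1.8: " + canCompileFor(running, "1.8"));
    }
}
```

If the last line prints false, pointing JAVA_HOME at a JDK 8 installation (or lowering the target in pom.xml) is the usual direction to look in.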

The pom.xml file contains:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.conan.myhadoop.mr</groupId>

<artifactId>myHadoop</artifactId>

<version>1.0-SNAPSHOT</version>

<packaging>jar</packaging>

<name>myHadoop</name>

<url>http://maven.apache.org</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.4.1</version>

<exclusions>

<exclusion>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.4.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.4.1</version>

</dependency>

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.8</version>

<scope>system</scope>

<systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.4</version>

<scope>test</scope>

</dependency>

</dependencies>

</project>

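Put core-site.xml, hdfs-site.xml, mapred-site.xml and the slaves file under the src directory; otherwise the code cannot read them. Alternatively, you can configure Eclipse instead, as follows:

Put the configuration files listed above in a conf directory. For the main class to pick them up at run time, you also need a Run Configuration:

Run As -> Run Configurations -> Java Application -> Classpath -> Bootstrap Entries -> Advanced -> Add Folders, then select the conf folder.

With that, the Hadoop program can be run!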

package cn.bigdata.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

import java.io.InputStream;
import java.net.URI;
import java.nio.charset.StandardCharsets;

public class Test {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // core-site.xml is loaded by default (it must be under src), so this line may be commented out.
        // The next two lines must stay, otherwise those files are not read.
        conf.addResource("core-site.xml");
        conf.addResource("hdfs-site.xml");
        conf.addResource("mapred-site.xml");

        // The user must be a user of the Hadoop cluster; otherwise files cannot be created,
        // even when dfs.permissions is set to false.
        FileSystem fs = FileSystem.get(new URI("/"), conf, "hadoop");

        // List the entries under /hbase.
        FileStatus[] statuses = fs.listStatus(new Path("/hbase"));
        for (FileStatus status : statuses) {
            System.out.println(status);
        }

        // Write a small file to HDFS.
        FSDataOutputStream os = fs.create(new Path("/hbase/test.log"));
        os.write("Hello World!".getBytes(StandardCharsets.UTF_8));
        os.flush();
        os.close();

        // Read the file back and copy it to standard output; "true" closes the streams afterwards.
        InputStream is = fs.open(new Path("/hbase/test.log"));
        IOUtils.copyBytes(is, System.out, 1024, true);
    }
}

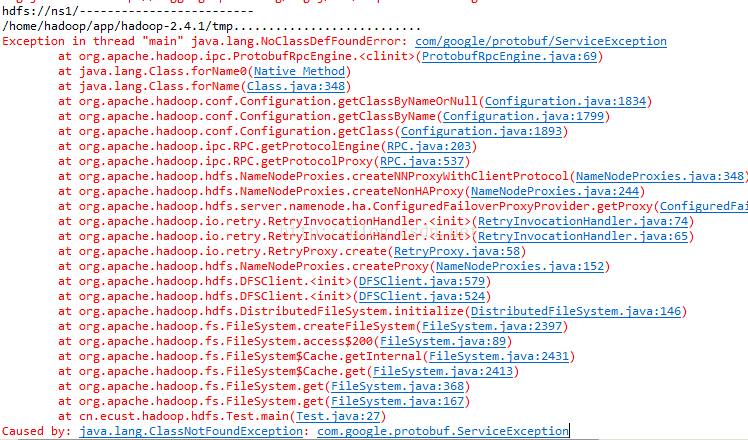
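Because the program only works when the configuration files are visible on the classpath (under src, or in the conf folder added through the Run Configuration), a quick check like the one below can save some guessing. It is a minimal sketch using only the JDK; the class and method names (`ConfigOnClasspath`, `missing`) are invented for this example.

```java
import java.net.URL;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Sketch: reports which Hadoop configuration files cannot be found on the classpath. */
final class ConfigOnClasspath {

    /** Returns the resource names that the given class loader cannot locate. */
    static List<String> missing(ClassLoader loader, List<String> names) {
        List<String> notFound = new ArrayList<>();
        for (String name : names) {
            URL url = loader.getResource(name);
            if (url == null) {
                notFound.add(name);
            } else {
                // Shows which copy of the file would actually be loaded.
                System.out.println(name + " -> " + url);
            }
        }
        return notFound;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("core-site.xml", "hdfs-site.xml", "mapred-site.xml");
        List<String> notFound = missing(ConfigOnClasspath.class.getClassLoader(), names);
        System.out.println("missing from classpath: " + notFound);
    }
}
```

Run it with the same Eclipse Run Configuration as the Hadoop program; an empty "missing" list suggests the files are being picked up.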

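If the error below is reported:

The cause is a problem with protobuf-2.5.0.jar: it is either missing or Maven downloaded it incorrectly.

Fix: delete the existing jar (use the path shown next to the jar to locate its folder, then delete everything in it) and let Maven download it again.

I previously ran into an incorrectly downloaded Hadoop core jar and fixed it the same way.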
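To decide whether a jar in the local repository is worth deleting, something like the following sketch can help. It assumes the default local repository location (~/.m2/repository, matching the C:\Users\michael\.m2\repository path in the log above) and assumes the jar in question is com.google.protobuf:protobuf-java:2.5.0; both are assumptions, and the class and method names are made up for illustration.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.zip.ZipFile;

/** Sketch: locates an artifact in the local Maven repository and checks that its jar opens. */
final class LocalRepoJarCheck {

    /** Directory of one artifact version: <repo>/<groupId as folders>/<artifactId>/<version>. */
    static Path artifactDir(Path repoRoot, String groupId, String artifactId, String version) {
        Path dir = repoRoot;
        for (String part : groupId.split("\\.")) {
            dir = dir.resolve(part);
        }
        return dir.resolve(artifactId).resolve(version);
    }

    /** True only if the file exists and can be opened as a non-empty zip archive. */
    static boolean isReadableJar(Path jar) {
        if (!Files.isRegularFile(jar)) {
            return false;
        }
        try (ZipFile zip = new ZipFile(jar.toFile())) {
            return zip.size() > 0;
        } catch (IOException e) {
            return false; // typically a truncated or corrupted download
        }
    }

    public static void main(String[] args) {
        Path repo = Paths.get(System.getProperty("user.home"), ".m2", "repository");
        Path dir = artifactDir(repo, "com.google.protobuf", "protobuf-java", "2.5.0");
        Path jar = dir.resolve("protobuf-java-2.5.0.jar");
        System.out.println(jar + " readable: " + isReadableJar(jar));
        System.out.println("if it is not readable, delete " + dir + " and rebuild");
    }
}
```

The check is deliberately shallow: a jar that opens as a zip can still be the wrong file, so deleting the folder and downloading again remains the fallback.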

If the following error is reported:

Exception in thread "main" org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category READ is not supported in state standby

at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:87)

at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:1639)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:1193)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getListingInt(FSNamesystem.java:4171)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getListing(FSNamesystem.java:4159)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getListing(NameNodeRpcServer.java:767)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getListing(ClientNamenodeProtocolServerSideTranslatorPB.java:570)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:585)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:928)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2013)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2009)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1556)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2007)

at org.apache.hadoop.ipc.Client.call(Client.java:1410)

at org.apache.hadoop.ipc.Client.call(Client.java:1363)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)

at com.sun.proxy.$Proxy9.getListing(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getListing(ClientNamenodeProtocolTranslatorPB.java:515)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:190)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:103)

at com.sun.proxy.$Proxy10.getListing(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.listPaths(DFSClient.java:1743)

at org.apache.hadoop.hdfs.DFSClient.listPaths(DFSClient.java:1726)

at org.apache.hadoop.hdfs.DistributedFileSystem.listStatusInternal(DistributedFileSystem.java:650)

at org.apache.hadoop.hdfs.DistributedFileSystem.access$600(DistributedFileSystem.java:102)

at org.apache.hadoop.hdfs.DistributedFileSystem$14.doCall(DistributedFileSystem.java:712)

at org.apache.hadoop.hdfs.DistributedFileSystem$14.doCall(DistributedFileSystem.java:708)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.listStatus(DistributedFileSystem.java:708)

at cn.ecust.hadoop.hdfs.Test.main(Test.java:45)

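The cause is that the active NameNode of the Hadoop cluster has failed, so the request reached a NameNode that is in standby state. Restarting the NameNode resolves it.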
