Spark Learning 13: Getting the Number of Partitions of an RDD

2016-05-22 16:07

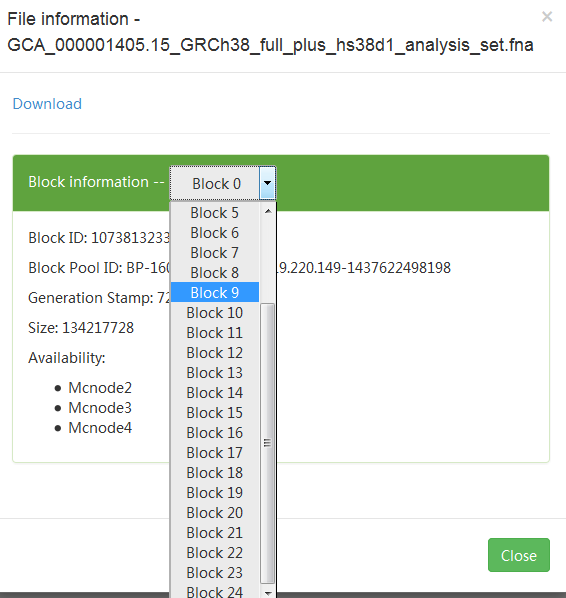

For more code, see: https://github.com/xubo245/SparkLearning

spark1.5.2

1解释

获取RDD的partitions数目和index信息

疑问:为什么纯文本的partitions数目与HDFS的block数目一样,但是.gz的压缩文件的partitions数目却为1?

2.代码:

spark1.6中可以直接获取:

3.结果:

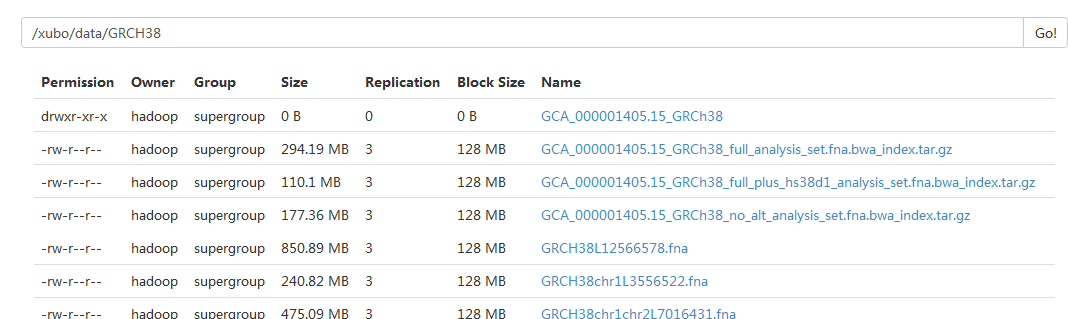

(1)第一个文件

partitions数:

详细信息:

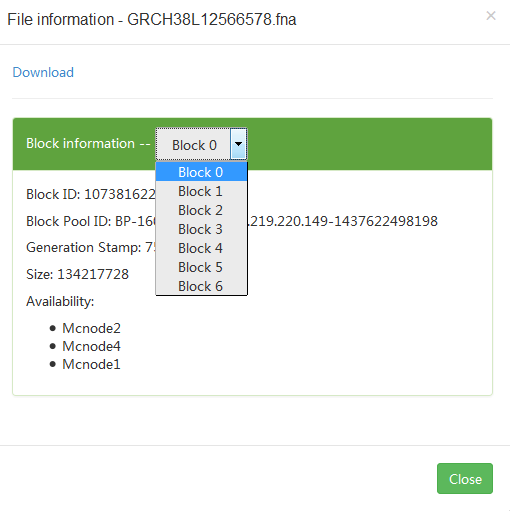

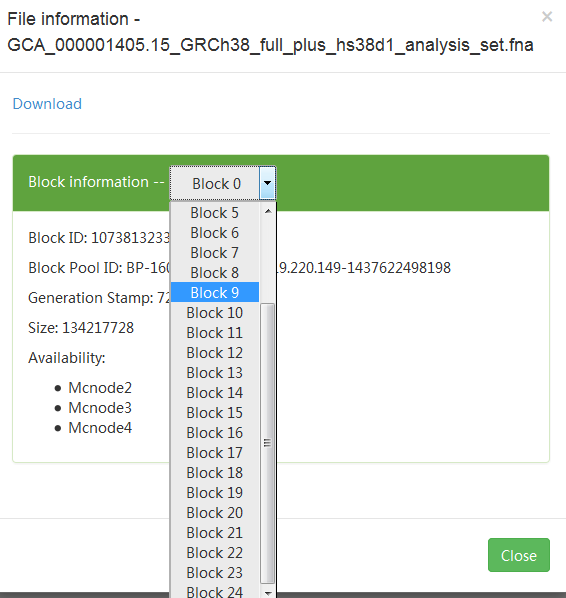

(2)第二个文件:

(3)第三个文件:

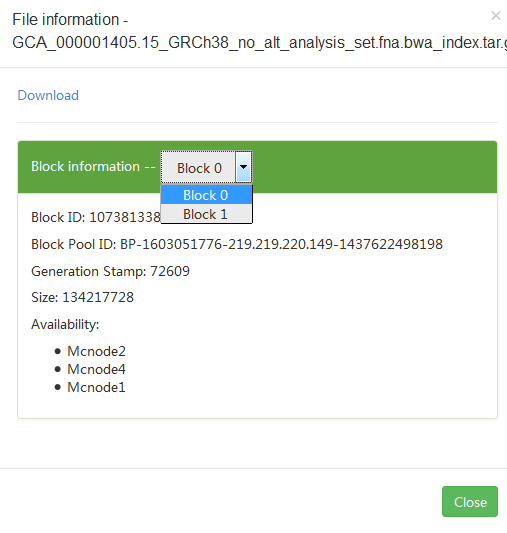

但是gz文件: 大小差不多,但是partition却为1

index:

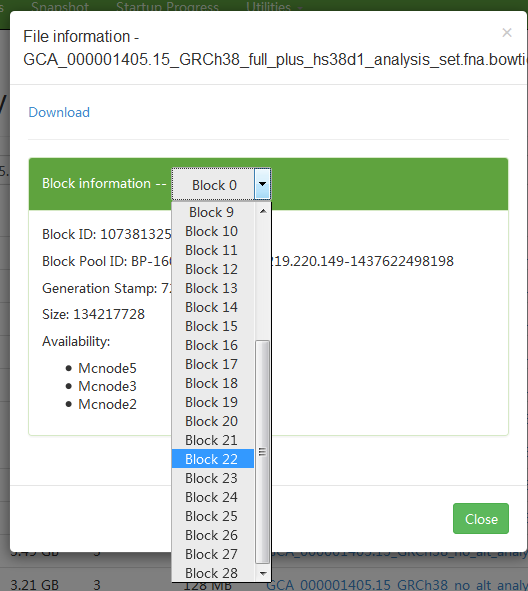

(4)大文件(3G),同样的:

4.本来想在RDD加一个获取partitions数量的函数或者属性,但是已看代码,1.6中有人加了:

目前不确定为什么blocks数一样,生成的partitions数不一样的原因,所以有待学习

参考

【1】http://spark.apache.org/docs/1.5.2/mllib-guide.html

【2】http://spark.apache.org/docs/1.5.2/mllib-collaborative-filtering.html#collaborative-filtering

【3】https://github.com/xubo245/SparkLearning

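Spark 1.5.2

1. Explanation

Get the number of partitions of an RDD and the index of each partition.

Question: why does a plain-text file get as many partitions as it has HDFS blocks, while a .gz compressed file gets only one partition?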

2. Code:

sc.textFile("/xubo/GRCH38Sub/GRCH38L12566578.fna").partitions.length

sc.textFile("/xubo/GRCH38Sub/GRCH38L12566578.fna.bwt").partitions.foreach(each=>println(each.index))

In Spark 1.6 the count can be obtained directly:

@Since("1.6.0")

final def getNumPartitions: Int = partitions.length

3. Results:

(1) First file

Number of partitions:

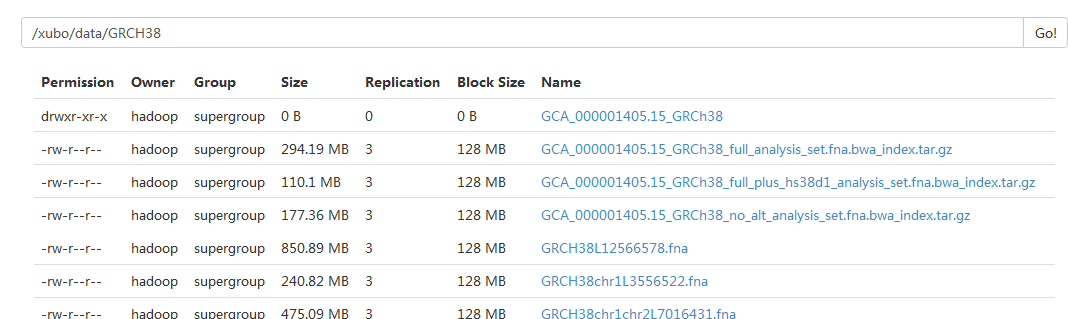

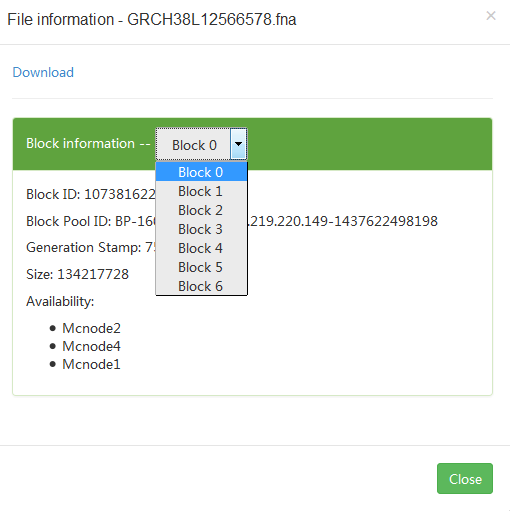

scala> sc.textFile("/xubo/GRCH38Sub/GRCH38L12566578.fna").partitions.length

res2: Int = 7

Details:

scala> sc.textFile("/xubo/GRCH38Sub/GRCH38L12566578.fna.bwt").partitions.foreach(each=>println(each.index))

0

1

2

3

4

5

6

(2) Second file:

scala> sc.textFile(file).partitions.foreach(each=>println(each.index))

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

(3) Third file:

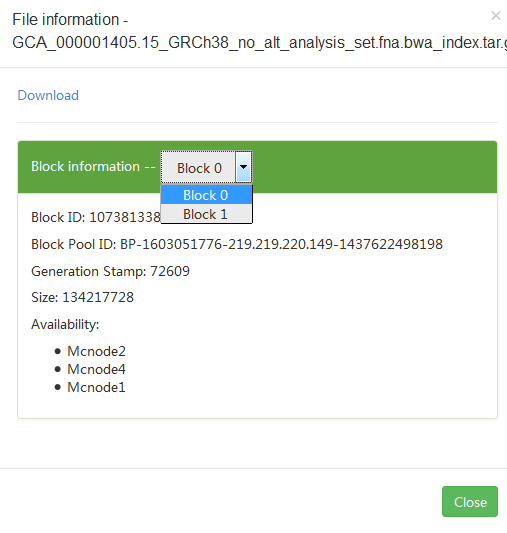

The .gz file, however, is about the same size but has only 1 partition:

scala> sc.textFile("/xubo/data/GRCH38/GCA_000001405.15_GRCh38_full_analysis_set.fna.bwa_index.tar.gz").partitions.length

res5: Int = 1

Index:

scala> sc.textFile("/xubo/data/GRCH38/GCA_000001405.15_GRCh38_full_analysis_set.fna.bwa_index.tar.gz").partitions.foreach(each=>println(each.index))

0

(4) A large file (3 GB) behaves the same way:

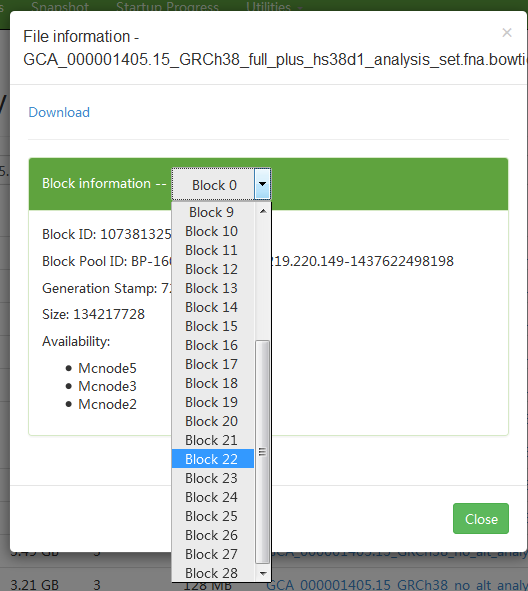

scala> val file="/xubo/data/GRCH38/GCA_000001405.15_GRCh38/seqs_for_alignment_pipelines.ucsc_ids/GCA_000001405.15_GRCh38_full_plus_hs38d1_analysis_set.fna.bowtie_index.tar.gz"

file: String = /xubo/data/GRCH38/GCA_000001405.15_GRCh38/seqs_for_alignment_pipelines.ucsc_ids/GCA_000001405.15_GRCh38_full_plus_hs38d1_analysis_set.fna.bowtie_index.tar.gz

scala> sc.textFile(file).partitions.foreach(each=>println(each.index))

0
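The contrast seen in these results can be reproduced on a small scale without HDFS. The following is a minimal sketch, assuming Spark is on the classpath and run in local mode; the object name `GzipPartitionDemo`, the temporary sample files, and the choice of `minPartitions = 4` are hypothetical and not part of the original experiment.

```scala
import java.io.{File, FileOutputStream, PrintWriter}
import java.nio.file.Files
import java.util.zip.GZIPOutputStream

import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical demo object; not taken from the SparkLearning repository.
object GzipPartitionDemo {

  // Writes the same lines to a plain-text file and to a gzip-compressed copy.
  def writeSamples(dir: File): (File, File) = {
    val plain = new File(dir, "sample.txt")
    val gzipped = new File(dir, "sample.txt.gz")
    val lines = (1 to 100000).map(i => s"line $i")

    val plainOut = new PrintWriter(plain, "UTF-8")
    try lines.foreach(l => plainOut.println(l)) finally plainOut.close()

    // Closing the writer also finishes and closes the gzip stream.
    val gzOut = new PrintWriter(new GZIPOutputStream(new FileOutputStream(gzipped)))
    try lines.foreach(l => gzOut.println(l)) finally gzOut.close()

    (plain, gzipped)
  }

  // Returns (partitions of the plain file, partitions of the .gz file).
  def partitionCounts(sc: SparkContext, minPartitions: Int): (Int, Int) = {
    val (plain, gzipped) = writeSamples(Files.createTempDirectory("partition-demo").toFile)
    val plainCount = sc.textFile(plain.toURI.toString, minPartitions).partitions.length
    val gzCount = sc.textFile(gzipped.toURI.toString, minPartitions).partitions.length
    (plainCount, gzCount)
  }

  def main(args: Array[String]): Unit = {
    // Local master is an assumption; the article's runs were against HDFS.
    val conf = new SparkConf().setAppName("GzipPartitionDemo").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val (plainCount, gzCount) = partitionCounts(sc, minPartitions = 4)
      println(s"plain text partitions: $plainCount")
      println(s"gzip partitions: $gzCount")
    } finally sc.stop()
  }
}
```

Both reads ask for the same minimum number of partitions, so any difference in the two counts comes from the file format rather than from the arguments. The gzip copy is expected to report a single partition, mirroring the .gz results above.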

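4. I had intended to add a function or property to RDD for getting the number of partitions, but on reading the code I found that someone had already added one in 1.6: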

/**

 * Returns the number of partitions of this RDD.

 */

@Since("1.6.0")

final def getNumPartitions: Int = partitions.length

I am not yet sure why files with the same number of blocks end up with different numbers of partitions, so this remains to be studied.
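One likely explanation: `sc.textFile` reads through Hadoop's `TextInputFormat`, which splits a file only when it is uncompressed or when its compression codec is splittable. A gzip stream cannot be decoded starting from an arbitrary offset, so the gzip codec is not splittable, and the whole file becomes one input split (and therefore one partition) no matter how many HDFS blocks it occupies. The sketch below is a simplified model of that split counting for a single file, not Hadoop's actual code; the object name `SplitCountSketch`, the function `splitCount`, and the file and block sizes in the usage lines are hypothetical and are not the sizes of the files in this article.

```scala
// Simplified, hypothetical model of how an old-API Hadoop FileInputFormat
// counts the input splits of one file. It ignores multi-file inputs and
// any configured minimum split size.
object SplitCountSketch {

  // The last split is allowed to be up to 10% larger than the split size.
  val SplitSlop = 1.1

  def splitCount(fileSize: Long, blockSize: Long, minPartitions: Int, splittable: Boolean): Int = {
    require(fileSize >= 0 && blockSize > 0 && minPartitions >= 0)
    if (fileSize == 0 || !splittable) {
      // Empty files and non-splittable files (such as .gz) yield a single split.
      1
    } else {
      val goalSize = fileSize / math.max(minPartitions, 1)
      val splitSize = math.max(1L, math.min(goalSize, blockSize))
      var remaining = fileSize
      var count = 0
      while (remaining.toDouble / splitSize > SplitSlop) {
        count += 1
        remaining -= splitSize
      }
      if (remaining != 0) count += 1
      count
    }
  }

  def main(args: Array[String]): Unit = {
    val mb = 1024L * 1024L
    // Illustrative numbers only: an 800 MB file stored in 128 MB blocks.
    println(splitCount(800 * mb, 128 * mb, minPartitions = 2, splittable = true))  // prints 7
    println(splitCount(800 * mb, 128 * mb, minPartitions = 2, splittable = false)) // prints 1
  }
}
```

Under this model a plain-text file gets roughly one split per block, which matches the plain-text results above, while a .gz file of any size gets exactly one.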
