Learning Python

2016-05-14 09:44


5.14...上次学python 好像是一个月前..写点东西记录下叭..现在在看李老大写的博客写..可能直接开抄代码...感觉自己写的总是爬不成功,之前写的爬豆瓣影评的爬虫还是残的...1.最简单的爬取一个网页 不懂啊解决了......

不懂啊解决了......

http://m.ithao123.cn/content-6589593.html

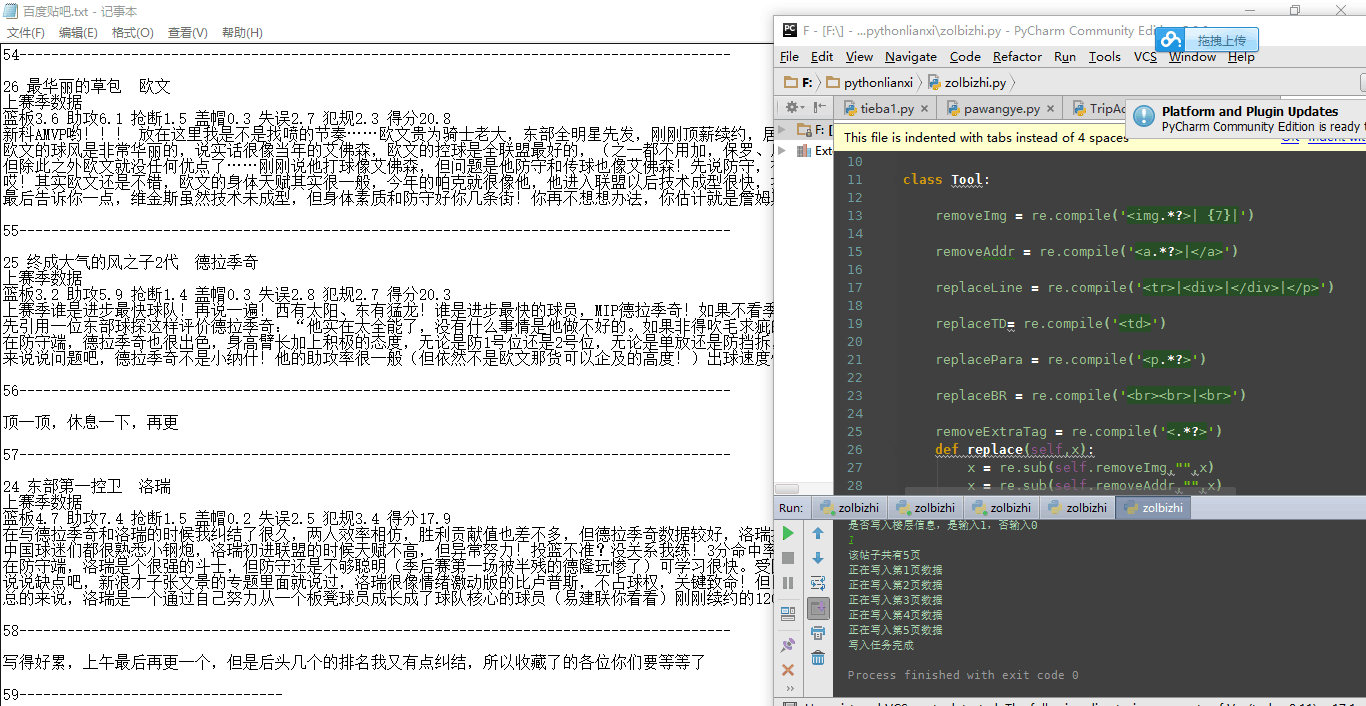

应该用 "wb" 去打开文件 5.15今天试了下李老大爬ZOL 壁纸 的代码,爬出来的文件夹里面是空的啊...而且文件名是乱码..不过李老大说了那个只适用于linux于是开始看 崔庆才 教程1.爬取贴吧帖子效果图

5.15今天试了下李老大爬ZOL 壁纸 的代码,爬出来的文件夹里面是空的啊...而且文件名是乱码..不过李老大说了那个只适用于linux于是开始看 崔庆才 教程1.爬取贴吧帖子效果图 然后在抄代码的过程中遇到三个问题1) print 中文的时候会报错一种解决方案是 这个

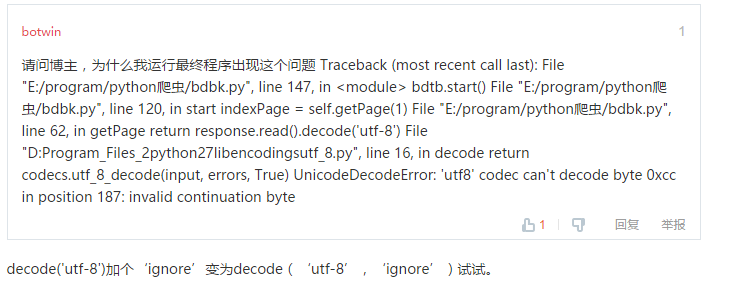

然后在抄代码的过程中遇到三个问题1) print 中文的时候会报错一种解决方案是 这个 解决办法就是图里面说的这样3.最后一个问题 就是 贴吧改版了要换成

解决办法就是图里面说的这样3.最后一个问题 就是 贴吧改版了要换成

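    import urllib2

    # Fetch the page and print its raw HTML
    html = urllib2.urlopen('http://music.163.com/')
    print html.read()

2. Saving the fetched page

It seems that because I used that web mapping tool earlier, the generated html now shows the contents of the current directory rather than the page I actually crawled... sigh.

    import urllib2

    response = urllib2.urlopen('http://music.163.com/')
    html = response.read()
    # Write the downloaded HTML to a local file
    open('testt.html', "w").write(html)

3. Crawling a single ZOL wallpaper

    import urllib2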

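    import re  # regular expression library

    # Address of the page that holds the picture we want to download
    url = 'http://desk.zol.com.cn/bizhi/6377_78500_2.html'
    response = urllib2.urlopen(url)
    # Read the page content
    html = response.read()
    # Regular expression that locates the address of the picture
    reg = re.compile(r'<img id="bigImg" src="(.*?jpg)" .*>')
    imgurl = reg.findall(html)[0]
    # Download the picture and save it as haha.jpg
    # ("w" opens the file in text mode, which is what goes wrong here)
    imgsrc = urllib2.urlopen(imgurl).read()
    open("haha.jpg", "w").write(imgsrc)

This is copied straight from Laoda's code, yet the wallpaper I got out of it did not display properly. I did not understand why...

Solved: http://m.ithao123.cn/content-6589593.html

The file should be opened with "wb".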
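Why the mode matters can be checked without downloading anything. The sketch below is written for Python 3 (an assumption, since the scripts in these notes are Python 2), and `save_bytes`, `load_bytes` and the sample payload are made-up names for illustration. It writes a few bytes that include a newline byte in "wb" mode and reads them back unchanged. In Python 2 text mode on Windows that newline byte would be rewritten as `\r\n`, which is enough to damage an image.

```python
import os
import tempfile


def save_bytes(path, data):
    """Write raw bytes to path with no newline translation."""
    with open(path, "wb") as f:
        f.write(data)


def load_bytes(path):
    """Read the file back as raw bytes."""
    with open(path, "rb") as f:
        return f.read()


# Stand-in for downloaded image data: a JPEG-like header plus a newline byte.
sample = b"\xff\xd8\xff\xe0" + b"\n" + b"\x00\x10"

tmp_dir = tempfile.mkdtemp()
target = os.path.join(tmp_dir, "haha.jpg")
save_bytes(target, sample)
round_trip = load_bytes(target)
print(round_trip == sample)
```

In Python 3 the mistake is harder to make, because writing bytes to a file opened with plain "w" raises a `TypeError` instead of silently changing the data.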

    import urllib2
    import re

    url = 'http://desk.zol.com.cn/bizhi/6377_78500_2.html'
    response = urllib2.urlopen(url)
    html = response.read()
    reg = re.compile(r'<img id="bigImg" src="(.*?jpg)" .*>')
    imgurl = reg.findall(html)[0]
    imgsrc = urllib2.urlopen(imgurl).read()
    # "wb" writes the bytes unchanged, which is what an image needs
    open("haha.jpg", "wb").write(imgsrc)

After that I could finally see the wallpaper. So moving!!!
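The regular expression used in the script can also be tried on its own. This is a minimal Python 3 sketch (an assumption, since the notes use Python 2). The HTML snippet, the example.com address and the helper `find_image_url` are invented for illustration and are not taken from the real ZOL page. The non-greedy `(.*?jpg)` stops at the first `jpg` that is followed by a closing quote and a space.

```python
import re

# Same pattern as in the wallpaper script.
BIG_IMG = re.compile(r'<img id="bigImg" src="(.*?jpg)" .*>')


def find_image_url(html):
    """Return the first bigImg address in html, or None if there is none."""
    matches = BIG_IMG.findall(html)
    return matches[0] if matches else None


# Hypothetical page fragment, only for trying the pattern offline.
sample_html = (
    '<div><img id="bigImg" src="http://example.com/pics/wall.jpg" '
    'width="960" height="600"></div>'
)

image_url = find_image_url(sample_html)
missing = find_image_url("<p>no picture here</p>")
print(image_url)
```

Returning `None` when nothing matches avoids the `IndexError` that `findall(html)[0]` would raise if the page layout changes.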

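5.15: Today I tried Li Laoda's code for crawling ZOL wallpapers. The folder it produced was empty, and the file names were garbled. Laoda did say that code only works on Linux, so I started following Cui Qingcai's (崔庆才) tutorial instead.

1. Crawling a Tieba post (result screenshot)

While copying the code I ran into three problems.

1) Printing Chinese raises an error. One fix, explained in this blog post, is to put these two lines at the top of the script:

    #!/usr/bin/python
    #coding:utf-8

2) After fixing the problem above, another error appears, like the one below. The fix is the one described in the image.

3) Tieba's page layout has changed, so the pattern has to be changed to

    <h3 class="core_title_txt".*?>(.*?)</h3>

The final code looks like this: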

    #!/usr/bin/python
    #coding:utf-8
    __author__ = 'CQC'

    import urllib
    import urllib2
    import re


    # Helper that strips HTML tags from a post body
    class Tool:
        # Remove img tags and runs of 7 spaces
        removeImg = re.compile('<img.*?>| {7}|')
        # Remove hyperlink tags
        removeAddr = re.compile('<a.*?>|</a>')
        # Tags that end a line are replaced with \n
        replaceLine = re.compile('<tr>|<div>|</div>|</p>')
        # Table cells are replaced with \t
        replaceTD = re.compile('<td>')
        # Paragraph openings become a newline plus a space
        replacePara = re.compile('<p.*?>')
        # Single or double line breaks become \n
        replaceBR = re.compile('<br><br>|<br>')
        # Remove any tag that is still left
        removeExtraTag = re.compile('<.*?>')

        def replace(self, x):
            x = re.sub(self.removeImg, "", x)
            x = re.sub(self.removeAddr, "", x)
            x = re.sub(self.replaceLine, "\n", x)
            x = re.sub(self.replaceTD, "\t", x)
            x = re.sub(self.replacePara, "\n ", x)
            x = re.sub(self.replaceBR, "\n", x)
            x = re.sub(self.removeExtraTag, "", x)
            return x.strip()


    # Crawler for a single Baidu Tieba post
    class BDTB:
        # baseUrl: post address; seeLZ: 1 to fetch only the original poster's replies;
        # floorTag: '1' to write a separator line before each floor
        def __init__(self, baseUrl, seeLZ, floorTag):
            self.baseURL = baseUrl
            self.seeLZ = '?see_lz=' + str(seeLZ)
            self.tool = Tool()
            self.file = None
            self.floor = 1
            self.defaultTitle = u"百度贴吧"
            self.floorTag = floorTag

        # Fetch one page of the post as unicode, or None if the request fails
        def getPage(self, pageNum):
            try:
                url = self.baseURL + self.seeLZ + '&pn=' + str(pageNum)
                request = urllib2.Request(url)
                response = urllib2.urlopen(request)
                return response.read().decode('utf-8', 'ignore')
            except urllib2.URLError, e:
                if hasattr(e, "reason"):
                    print u"连接百度贴吧失败,错误原因", e.reason
                return None

        # Extract the post title (pattern updated for the new Tieba layout)
        def getTitle(self, page):
            pattern = re.compile('<h3 class="core_title_txt".*?>(.*?)</h3>', re.S)
            result = re.search(pattern, page)
            if result:
                return result.group(1).strip()
            else:
                return None

        # Extract the total number of pages in the post
        def getPageNum(self, page):
            pattern = re.compile('<li class="l_reply_num.*?</span>.*?<span.*?>(.*?)</span>', re.S)
            result = re.search(pattern, page)
            if result:
                return result.group(1).strip()
            else:
                return None

        # Extract the body of every floor on the page as UTF-8 encoded text
        def getContent(self, page):
            pattern = re.compile('<div id="post_content_.*?>(.*?)</div>', re.S)
            items = re.findall(pattern, page)
            contents = []
            for item in items:
                content = "\n" + self.tool.replace(item) + "\n"
                contents.append(content.encode('utf-8'))
            return contents

        # Open the output file, named after the post title when there is one
        def setFileTitle(self, title):
            if title is not None:
                self.file = open(title + ".txt", "w+")
            else:
                self.file = open(self.defaultTitle + ".txt", "w+")

        # Write each floor to the file, with a separator line if requested
        def writeData(self, contents):
            for item in contents:
                if self.floorTag == '1':
                    floorLine = "\n" + str(self.floor) + u"-----------------------------------------------------------------------------------------\n"
                    self.file.write(floorLine)
                self.file.write(item)
                self.floor += 1

        def start(self):
            indexPage = self.getPage(1)
            pageNum = self.getPageNum(indexPage)
            title = self.getTitle(indexPage)
            self.setFileTitle(title)
            if pageNum is None:
                print "URL已失效,请重试"
                return
            try:
                print "该帖子共有" + str(pageNum) + "页"
                for i in range(1, int(pageNum) + 1):
                    print "正在写入第" + str(i) + "页数据"
                    page = self.getPage(i)
                    contents = self.getContent(page)
                    self.writeData(contents)
            except IOError, e:
                print "写入异常,原因" + e.message
            finally:
                print "写入任务完成"


    print u"请输入帖子代号"
    baseURL = 'http://tieba.baidu.com/p/' + str(raw_input(u'http://tieba.baidu.com/p/'))
    seeLZ = raw_input("是否只获取楼主发言,是输入1,否输入0\n")
    floorTag = raw_input("是否写入楼层信息,是输入1,否输入0\n")
    bdtb = BDTB(baseURL, seeLZ, floorTag)
    bdtb.start()

I still do not understand how it works, so I will go over it again.

5.18: A class for crawling Tieba content

    #!/usr/bin/python
    #coding:utf-8
    import urllib
    import urllib2
    import re


    class bdtb:
        def __init__(self, baseurl, seelz):
            self.baseurl = baseurl
            self.seelz = '?see_lz=' + str(seelz)

        def getPage(self, pagenum):
            try:
                url = self.baseurl + self.seelz + '&pn=' + str(pagenum)
                request = urllib2.Request(url)
                response = urllib2.urlopen(request)
                # Read once and keep the result; the response cannot be read a second time
                html = response.read()
                print html
                return html
            except urllib2.URLError, e:
                if hasattr(e, "reason"):
                    print u"连接百度贴吧失败,错误原因", e.reason
                return None


    baseurl = 'http://tieba.baidu.com/p/3138733512'
    bb = bdtb(baseurl, 1)
    bb.getPage(1)

5.19: Simulated login. The school's information portal only shows the grades in IE, but in IE I cannot see the form, that is, the form data. At that point I switched back to Sogou.
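The form data mentioned here is the set of name/value pairs the browser posts when the login form is submitted. Below is a hedged Python 3 sketch of how such pairs become a request body and can be read back. In Python 3 `urlencode` lives in `urllib.parse` rather than `urllib`. The field names `user` and `password`, their values and the helper `build_form_body` are hypothetical and not the portal's real fields.

```python
from urllib.parse import parse_qs, urlencode


def build_form_body(fields):
    """Encode a dict of form fields as a URL-encoded request body (bytes)."""
    return urlencode(fields).encode("ascii")


# Hypothetical fields; the values contain characters that need escaping.
form = {"user": "student 01", "password": "a&b=c"}

body = build_form_body(form)
# Decoding the body again shows that nothing was lost in the escaping.
decoded = parse_qs(body.decode("ascii"))
print(body)
```

Escaping matters because `&` and `=` separate the fields, so a value containing them would otherwise be split in the wrong place.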

    #coding=utf-8
    import urllib
    import urllib2
    import cookielib
    import re


    class CHD:
        def __init__(self):
            self.loginUrl = 'http://bksjw.chd.edu.cn/loginAction.do'
            # Cookie jar that keeps the session cookies returned by the server
            self.cookies = cookielib.CookieJar()
            # Form fields sent with the login request; the xxxx values are placeholders
            self.postdata = urllib.urlencode({
                'dllx': 'dldl',
                'zjh': 'xxxx',
                'mm': 'xxxx'
            })
            self.opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(self.cookies))

        def getPage(self):
            request = urllib2.Request(
                url=self.loginUrl,
                data=self.postdata)
            result = self.opener.open(request)
            # Decode the response as GBK before printing
            print result.read().decode('gbk')


    chd = CHD()
    chd.getPage()
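For reference, here is roughly the same structure in Python 3, where `urllib2` and `cookielib` became `urllib.request` and `http.cookiejar`. This is only a sketch under assumptions: the example.com address and the field values are placeholders, `build_login_request` is a made-up helper, and nothing is sent because the request is built but never opened.

```python
from http.cookiejar import CookieJar
from urllib.parse import urlencode
from urllib.request import HTTPCookieProcessor, Request, build_opener


def build_login_request(login_url, fields):
    """Build a POST request whose body is the URL-encoded form fields."""
    data = urlencode(fields).encode("ascii")
    # Passing data makes urllib send the request as POST instead of GET.
    return Request(login_url, data=data)


# The opener stores any cookies the server sets in this jar.
cookies = CookieJar()
opener = build_opener(HTTPCookieProcessor(cookies))

# Placeholder address and values, not a real login.
request = build_login_request(
    "http://example.com/loginAction.do",
    {"zjh": "xxxx", "mm": "xxxx"},
)
print(request.get_method())

# To actually send it one would call:
#     opener.open(request).read().decode("gbk")
```

Reusing the same `opener` for later requests is what lets the pages behind the login see the session cookie.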
