Pupil Localization and Tracking Program Based on Qt and OpenCV

2016-04-26 21:11


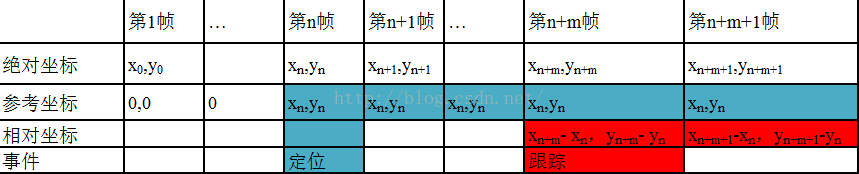

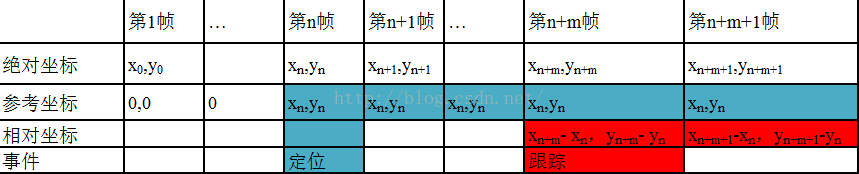

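This post builds on the previous post, "Precise Pupil Detection Program Based on OpenCV and Qt" (《基于opencv和QT的瞳孔精确检测程序》), and adds output of the pupil coordinates and position. It presents the complete pupil localization and tracking program. This is the author's independent work; when reposting, please credit the source: http://blog.csdn.net/zyx1990412/article/details/51254127.

How it works: as described in the previous post, we can run pupil detection on every frame and obtain precise pupil coordinates, which gives us pupil localization. To add tracking, a button press records the pupil coordinates of the current frame as the reference. The coordinates located in later frames are then compared against this reference to obtain each frame's relative coordinates. In other words, tracking here simply computes the position of the pupil in the current frame relative to the reference frame. The principle is illustrated in Figure 1.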
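The relative-position idea described above can be sketched in a few lines of C++. This is only a minimal illustration, not the post's actual implementation: the `Pupil` and `Offset` structs and the `relativeOffset` function are hypothetical names. Dividing by the reference radius mirrors what `eyeTack()` in the source listing does with `lp_bz.width`. The sketch assumes floating-point division and reports an invalid result while no reference has been recorded.

```cpp
// Hypothetical plain struct standing in for one detected pupil circle:
// center (x, y) and radius r, all in pixels of the eye image.
struct Pupil {
    float x;
    float y;
    float r;
};

// Offset of the current pupil from the reference pupil.
struct Offset {
    float dx;
    float dy;
    bool valid;  // false when no usable reference has been recorded
};

// Returns the displacement of `current` relative to `reference`,
// expressed in units of the reference radius.
Offset relativeOffset(const Pupil& current, const Pupil& reference) {
    Offset out = {0.0f, 0.0f, false};
    if (!(reference.r > 0.0f)) {
        return out;  // reference not set yet: avoid dividing by zero
    }
    out.dx = (current.x - reference.x) / reference.r;
    out.dy = (current.y - reference.y) / reference.r;
    out.valid = true;
    return out;
}
```

For example, with a reference pupil at (40, 30) with radius 10 and a current pupil at (45, 25), the function returns an offset of (0.5, -0.5).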

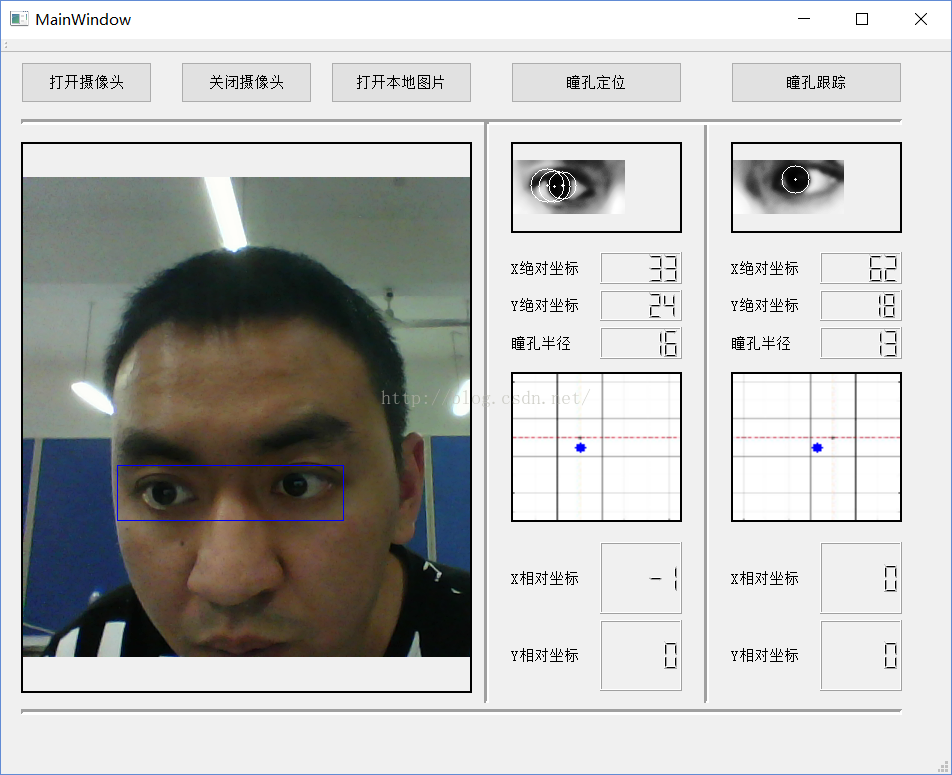

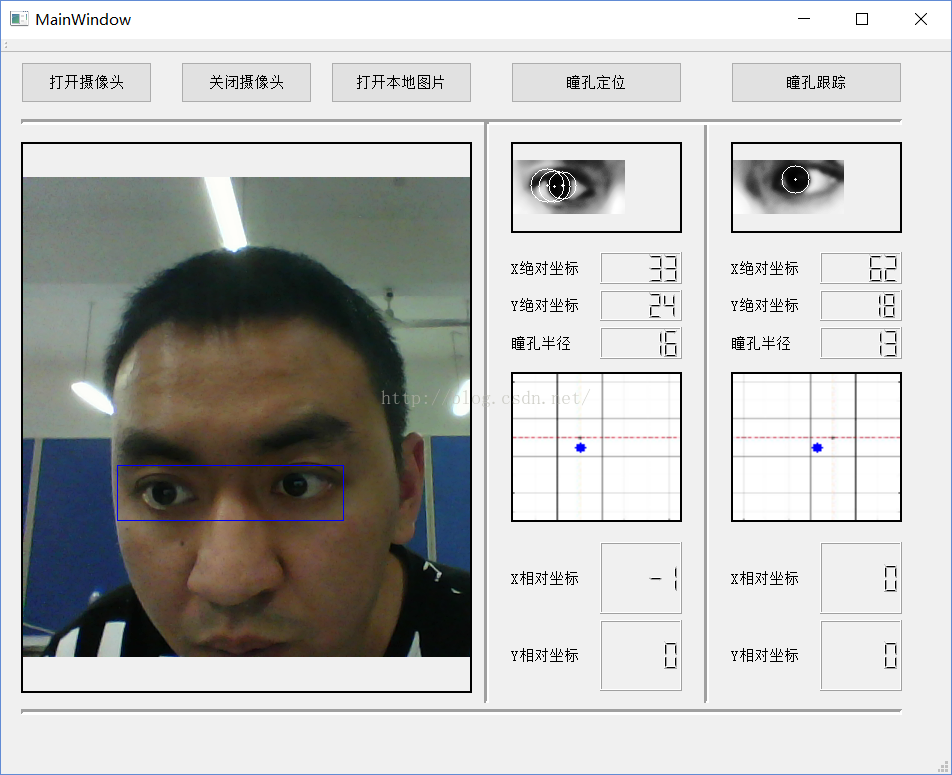


Figure 1

1. First, build a user interface with Qt, as shown in Figure 2.


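Figure 2

2. Implement reading and displaying the camera video. The method is described in detail in the post "A Camera (Local Image) Reading and Output Program Based on Qt and OpenCV" (《基于QT和opencv的摄像头(本地图片)读取并输出程序》).

3. Create an image processing class that processes every frame. Its main job is to detect the pupil centers, display the localization result image, and store the absolute pupil-center coordinates in Lcircles and Rcircles so that external functions can read them. The implementation of this class is given later in this post.

    class ImgProcess
    {
    private:
        Mat inimg;   // input image
        Mat outimg;  // output result
        Mat Leye;
        Mat Reye;
        Mat Leye_G;
        Mat Reye_G;
        CvRect drawing_box;

    public:
        vector<Vec3f> Lcircles;
        vector<Vec3f> Rcircles;
        ImgProcess(Mat image):inimg(image),drawing_box(cvRect(0, 0, 50, 20)){}
        void EyeDetect();   // eye-region detection
        Mat Outputimg();    // output result
        void DivideEye();   // split into left and right eye
        Mat OutLeye();      // output result
        Mat OutReye();
        Mat EdgeDetect(Mat &edgeimg);        // edge detection
        void EyeEdge();                      // run edge detection on the left and right eye separately
        vector<Vec3f> Hough(Mat &midImage);  // Hough transform
        void FindCenter();                   // locate the centers
        Mat PlotC(vector<Vec3f> circles,Mat &midImage);  // draw the Hough transform detection result
    };

4. Write slot functions in mainwindow that trigger the localization and tracking events.

    namespace Ui {
    class MainWindow;
    }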

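    class MainWindow : public QMainWindow
    {
        Q_OBJECT

    public:
        explicit MainWindow(QWidget *parent = 0);
        ~MainWindow();

    private slots:
        void on_pushButton_clicked();    // open the camera; detect and locate the pupils precisely
        void on_pushButton_2_clicked();  // open a local image
        void on_pushButton_3_clicked();  // locate the pupils and record the reference coordinates
        void on_pushButton_4_clicked();  // enable pupil tracking
        void on_pushButton_5_clicked();  // close the camera

    private:
        Ui::MainWindow *ui;
        CvRect lpc_box=cvRect( 0,0,0,0);  // pupil coordinates in the current frame
        CvRect rpc_box=cvRect( 0,0,0,0);
        CvRect lp_bz=cvRect( 0,0,0,0);    // reference pupil coordinates
        CvRect rp_bz=cvRect( 0,0,0,0);
        Mat pic1;  // coordinate chart
        Mat pic2;
        bool Lstop=false;
        bool Tack=false;
        void eyeTack();  // pupil tracking function
        //cv::Mat image;
    };

(1) After the camera is opened, the image processing class processes every frame, and the absolute pupil coordinates it produces are stored in lpc_box and rpc_box. For details, see "Precise Pupil Detection Program Based on OpenCV and Qt" (《基于opencv和QT的瞳孔精确检测程序》).

(2) When the pupil localization function is triggered, the absolute pupil coordinates from the image processing class are saved as the reference coordinates lp_bz and rp_bz, and the result is shown on the lcdNumber widgets.

(3) When the pupil tracking function is triggered, the relative coordinates are computed from the current frame's absolute coordinates and the reference coordinates, and the result is shown on the lcdNumber widgets.

(4) Closing the camera ends the loop.

Figure 3

The final result is shown in Figure 3. Because of the parameter settings and the limited accuracy of the functions used, localization is not yet optimal, and detection performs poorly when the subject wears glasses. The coordinate chart in the figure is a pre-drawn image loaded from disk (Figure 4). The blue dot is drawn on it with OpenCV's circle-drawing function according to the relative coordinates.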
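The blue dot mentioned above is placed by scaling the relative coordinates and shifting them to the center of the 100×100 chart, as `eyeTack()` in the source listing does with `ldx*10+50`. A minimal sketch of that mapping follows. The names `ChartPoint`, `toChart`, `chartSize` and `pixelsPerUnit` are hypothetical, and the rounding and the clamping to the chart bounds are additions of this sketch rather than part of the original code.

```cpp
#include <algorithm>
#include <cmath>

// Pixel position on the square coordinate chart.
struct ChartPoint {
    int x;
    int y;
};

// Maps a relative offset (dx, dy) to a pixel on a chartSize x chartSize image.
// An offset of (0, 0) lands on the chart center; one unit of offset moves the
// dot by pixelsPerUnit pixels. Results are clamped so the dot stays on the chart.
ChartPoint toChart(float dx, float dy, int chartSize = 100, float pixelsPerUnit = 10.0f) {
    const float center = chartSize / 2.0f;
    const int maxIndex = chartSize - 1;
    const int px = static_cast<int>(std::lround(dx * pixelsPerUnit + center));
    const int py = static_cast<int>(std::lround(dy * pixelsPerUnit + center));
    ChartPoint p;
    p.x = std::min(std::max(px, 0), maxIndex);
    p.y = std::min(std::max(py, 0), maxIndex);
    return p;
}
```

With the default arguments, an offset of (1, -2) maps to pixel (60, 30), and a very large offset is pinned to the chart edge instead of being drawn outside the image.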


Figure 4

PS: The pupil tracking function in this post is, at its core, real-time pupil detection and localization. It does not use an object tracking function in the conventional sense. The author's graduation project focuses on object tracking based on the MeanShift algorithm, improved for pupil tracking by adding frame differencing and LBP. This will be described in detail in future posts and verified in code with VS2013 + OpenCV.

Source code

mainwindow.h

    #ifndef MAINWINDOW_H
    #define MAINWINDOW_H

    #include <QMainWindow>
    #include "ui_mainwindow.h"
    #include "detectanddisplay.h"
    #include "imgprocess.h"
    #include <QLabel>
    #include "Mat2QImage.h"

    namespace Ui {
    class MainWindow;
    }

    class MainWindow : public QMainWindow
    {
        Q_OBJECT

    public:
        explicit MainWindow(QWidget *parent = 0);
        ~MainWindow();

    private slots:
        void on_pushButton_clicked();    // open the camera; detect and locate the pupils precisely
        void on_pushButton_2_clicked();  // open a local image
        void on_pushButton_3_clicked();  // locate the pupils and record the reference coordinates
        void on_pushButton_4_clicked();  // enable pupil tracking
        void on_pushButton_5_clicked();  // close the camera

    private:
        Ui::MainWindow *ui;
        CvRect lpc_box=cvRect( 0,0,0,0);  // pupil coordinates in the current frame
        CvRect rpc_box=cvRect( 0,0,0,0);
        CvRect lp_bz=cvRect( 0,0,0,0);    // reference pupil coordinates
        CvRect rp_bz=cvRect( 0,0,0,0);
        Mat pic1;  // coordinate chart
        Mat pic2;
        bool Lstop=false;
        bool Tack=false;
        void eyeTack();  // pupil tracking function
        //cv::Mat image;
    };

    #endif // MAINWINDOW_H

mainwindow.cpp

    #include "mainwindow.h"

    MainWindow::MainWindow(QWidget *parent) :
        QMainWindow(parent),
        ui(new Ui::MainWindow)
    {
        ui->setupUi(this);
    }

    MainWindow::~MainWindow()
    {
        delete ui;
    }

    void MainWindow::on_pushButton_clicked()  // open the camera; detect and locate the pupils precisely
    {
        VideoCapture cap(0);  // open the default camera
        if (!cap.isOpened())
        {
            cout << "no cap" << endl;
            return;  // nothing to process without a camera
        }
        Mat frame;      // image of the current frame
        Lstop = false;  // controls whether the detection loop keeps running
        while (!Lstop)
        {
            cap >> frame;  // read the current camera frame into frame
            if (frame.empty())
            {
                break;  // the camera delivered no frame
            }
            ImgProcess pro(frame);        // create the image processing object
            pro.EyeDetect();              // detect the eye region
            Mat image = pro.Outputimg();  // image with the detection result drawn on it
            imshow("camera", image);
            QImage img = Mat2QImage(image);                 // convert Mat to QImage
            ui->label->setPixmap(QPixmap::fromImage(img));  // show the result on the label
            //ui->label->setScaledContents(true);  // scale the image to the label size
            pro.DivideEye();   // split into left and right eye
            pro.EyeEdge();     // pupil edge detection
            pro.FindCenter();  // Hough transform to find the circle centers
            Mat mleye = pro.OutLeye();  // pupil localization result
            QImage qleye = Mat2QImage(mleye);
            ui->label_2->setPixmap(QPixmap::fromImage(qleye));
            //ui->label_2->setScaledContents(true);
            Mat mreye = pro.OutReye();
            QImage qreye = Mat2QImage(mreye);
            ui->label_3->setPixmap(QPixmap::fromImage(qreye));
            //ui->label_3->setScaledContents(true);
            if (pro.Lcircles.size() > 0)  // store the detection result
            {
                lpc_box.x = pro.Lcircles[0][0];
                lpc_box.y = pro.Lcircles[0][1];
                lpc_box.height = pro.Lcircles[0][2];
                lpc_box.width = pro.Lcircles[0][2];
            }
            ui->lcdNumber_6->display(lpc_box.x);  // display the coordinates
            ui->lcdNumber_5->display(lpc_box.y);
            ui->lcdNumber_9->display(lpc_box.height);
            if (pro.Rcircles.size() > 0)
            {
                rpc_box.x = pro.Rcircles[0][0];
                rpc_box.y = pro.Rcircles[0][1];
                rpc_box.height = pro.Rcircles[0][2];
                rpc_box.width = pro.Rcircles[0][2];
            }
            ui->lcdNumber_8->display(rpc_box.x);
            ui->lcdNumber_7->display(rpc_box.y);
            ui->lcdNumber_10->display(rpc_box.height);
            if (Tack == true)  // whether tracking is enabled
            {
                eyeTack();
            }
            int c = waitKey(30);  // 30 ms between frames
            if (c >= 0)           // any key pauses
            {
                waitKey(0);
            }
        }
        cvDestroyWindow("camera");
    }

    void MainWindow::on_pushButton_2_clicked()  // open a local image
    {
        Mat image0;
        image0 = cv::imread("IF.jpg");  // path of the image
        if (image0.empty())
        {
            return;  // the image could not be read
        }
        ImgProcess pro(image0);
        pro.EyeDetect();
        Mat image = pro.Outputimg();
        QImage img = Mat2QImage(image);
        ui->label->setPixmap(QPixmap::fromImage(img));
        // ui->label->setScaledContents(true);
        pro.DivideEye();
        pro.EyeEdge();
        pro.FindCenter();
        Mat mleye = pro.OutLeye();
        QImage qleye = Mat2QImage(mleye);
        ui->label_2->setPixmap(QPixmap::fromImage(qleye));
        //ui->label_2->setScaledContents(true);
        Mat mreye = pro.OutReye();
        QImage qreye = Mat2QImage(mreye);
        ui->label_3->setPixmap(QPixmap::fromImage(qreye));
        //ui->label_3->setScaledContents(true);
        waitKey(1000);
    }

    void MainWindow::on_pushButton_3_clicked()  // locate the pupils and record the reference coordinates
    {
        lp_bz = lpc_box;
        rp_bz = rpc_box;
        ui->lcdNumber->display(lpc_box.x);
        ui->lcdNumber_2->display(lpc_box.y);
        ui->lcdNumber_4->display(rpc_box.x);
        ui->lcdNumber_3->display(rpc_box.y);
        pic1 = cv::imread("zb.jpg");  // load the coordinate chart
        cv::resize(pic1, pic1, Size(100, 100));
        QImage zbimg1 = Mat2QImage(pic1);
        ui->label_4->setPixmap(QPixmap::fromImage(zbimg1));
        ui->label_4->setScaledContents(true);
        pic2 = cv::imread("zb1.jpg");
        cv::resize(pic2, pic2, cvSize(100, 100));
        QImage zbimg2 = Mat2QImage(pic2);
        ui->label_5->setPixmap(QPixmap::fromImage(zbimg2));
        ui->label_5->setScaledContents(true);
    }

    void MainWindow::on_pushButton_4_clicked()  // enable pupil tracking
    {
        Tack = true;
    }

    void MainWindow::eyeTack()  // pupil tracking function
    {
        if (lp_bz.width <= 0 || rp_bz.width <= 0)
        {
            return;  // no reference recorded yet; avoid dividing by zero
        }
        // Left/right pupil coordinates: d* are offsets relative to the reference,
        // p* are the positions on the coordinate chart.
        float ldx, ldy, rdx, rdy, plx, ply, prx, pry;
        // Cast to float first; plain int division would truncate the offsets.
        ldx = float(lpc_box.x - lp_bz.x) / lp_bz.width;
        ldy = float(lpc_box.y - lp_bz.y) / lp_bz.width;
        rdx = float(rpc_box.x - rp_bz.x) / rp_bz.width;
        rdy = float(rpc_box.y - rp_bz.y) / rp_bz.width;
        plx = ldx * 10 + 50;
        ply = ldy * 10 + 50;
        Point Lcenter(plx, ply);  // position of the pupil center on the coordinate chart
        pic1 = cv::imread("zb.jpg");
        cv::resize(pic1, pic1, Size(100, 100));
        circle(pic1, Lcenter, 3, Scalar(255, 0, 0), -1, 8);  // draw the pupil center
        QImage zbimg1 = Mat2QImage(pic1);
        ui->label_4->setPixmap(QPixmap::fromImage(zbimg1));
        ui->label_4->setScaledContents(true);
        prx = rdx * 10 + 50;  // right eye
        pry = rdy * 10 + 50;
        Point Rcenter(prx, pry);
        pic2 = cv::imread("zb1.jpg");
        cv::resize(pic2, pic2, Size(100, 100));
        circle(pic2, Rcenter, 3, Scalar(255, 0, 0), -1, 8);
        QImage zbimg2 = Mat2QImage(pic2);
        ui->label_5->setPixmap(QPixmap::fromImage(zbimg2));
        ui->label_5->setScaledContents(true);
        ui->lcdNumber->display(ldx);
        ui->lcdNumber_2->display(ldy);
        ui->lcdNumber_4->display(rdx);
        ui->lcdNumber_3->display(rdy);
    }

    void MainWindow::on_pushButton_5_clicked()  // close the camera
    {
        Lstop = true;
    }

imgprocess.h

    #ifndef IMGPROCESS_H

    #define IMGPROCESS_H

    #include <opencv2/opencv.hpp>
    #include <iostream>
    #include <stdio.h>
    #include "detectanddisplay.h"

    // image processing class
    class ImgProcess
    {
    private:
        Mat inimg;   // input image
        Mat outimg;  // output result
        Mat Leye;
        Mat Reye;
        Mat Leye_G;
        Mat Reye_G;
        CvRect drawing_box;

    public:
        vector<Vec3f> Lcircles;
        vector<Vec3f> Rcircles;
        ImgProcess(Mat image):inimg(image),drawing_box(cvRect(0, 0, 50, 20)){}
        void EyeDetect();   // eye-region detection
        Mat Outputimg();    // output result
        void DivideEye();   // split into left and right eye
        Mat OutLeye();      // output result
        Mat OutReye();
        Mat EdgeDetect(Mat &edgeimg);        // edge detection
        void EyeEdge();                      // run edge detection on the left and right eye separately
        vector<Vec3f> Hough(Mat &midImage);  // Hough transform
        void FindCenter();                   // locate the centers
        Mat PlotC(vector<Vec3f> circles,Mat &midImage);  // draw the Hough transform detection result
    };

    #endif // IMGPROCESS_H

imgprocess.cpp

    #include "imgprocess.h"

    void ImgProcess::EyeDetect()
    {
        detectAndDisplay(inimg, drawing_box);
        outimg = inimg;
    }

    Mat ImgProcess::Outputimg()
    {
        return outimg;
    }

    void ImgProcess::DivideEye()
    {
        if (drawing_box.width > 0)
        {
            CvRect leye_box;
            leye_box.x = drawing_box.x + 1;
            leye_box.y = drawing_box.y + 1;
            leye_box.height = drawing_box.height - 1;
            leye_box.width = floor(drawing_box.width / 2) - 1;
            CvRect reye_box;
            reye_box.x = leye_box.x + leye_box.width;
            reye_box.y = drawing_box.y + 1;
            reye_box.height = drawing_box.height - 1;
            reye_box.width = leye_box.width - 1;
            Leye = inimg(leye_box);
            Reye = inimg(reye_box);
            // imshow("L",Leye);
            // imshow("R",Reye);
        }
    }

    Mat ImgProcess::OutLeye()
    {
        return Leye;
    }

    Mat ImgProcess::OutReye()
    {
        return Reye;
    }

    Mat ImgProcess::EdgeDetect(Mat &edgeimg)
    {
        Mat edgeout;
        cvtColor(edgeimg, edgeimg, CV_BGR2GRAY);
        GaussianBlur(edgeimg, edgeimg, Size(9, 9), 2, 2);
        equalizeHist(edgeimg, edgeimg);
        // Arguments: input image, output image, low threshold, high threshold
        // (OpenCV suggests about 3x the low threshold), Sobel aperture size.
        Canny(edgeimg, edgeout, 100, 200, 3);
        return edgeout;
    }

    void ImgProcess::EyeEdge()
    {
        Leye_G = EdgeDetect(Leye);
        Reye_G = EdgeDetect(Reye);
        //imshow("L",Leye_G);
        //imshow("R",Reye_G);
    }

    vector<Vec3f> ImgProcess::Hough(Mat &midImage)
    {
        vector<Vec3f> circles;
        HoughCircles(midImage, circles, CV_HOUGH_GRADIENT, 1.5, 5, 100, 20,
                     drawing_box.height / 4, drawing_box.height / 3);
        return circles;
    }

    Mat ImgProcess::PlotC(vector<Vec3f> circles, Mat &midImage)
    {
        for (size_t i = 0; i < circles.size(); i++)
        {
            // cvRound rounds to the nearest integer
            Point center(cvRound(circles[i][0]), cvRound(circles[i][1]));
            int radius = cvRound(circles[i][2]);
            //cout<<i<<":"<<circles[i][0]<<","<<circles[i][1]<<","<<circles[i][2]<<endl;
            // draw the circle center
            circle(midImage, center, 1, Scalar(255, 0, 0), -1, 8);
            // draw the circle outline
            circle(midImage, center, radius, Scalar(255, 0, 0), 1, 8);
        }
        return midImage;
    }

    void ImgProcess::FindCenter()
    {
        Lcircles = Hough(Leye_G);
        Rcircles = Hough(Reye_G);
        Leye = PlotC(Lcircles, Leye);
        Reye = PlotC(Rcircles, Reye);
    }

detectanddisplay.h

    #ifndef DETECTANDDISPLAY_H
    #define DETECTANDDISPLAY_H

    #include <opencv2/opencv.hpp>
    #include <iostream>
    #include <stdio.h>

    using namespace std;
    using namespace cv;

    // detect the eye region
    void detectAndDisplay( Mat &frame,CvRect &box );

    #endif // DETECTANDDISPLAY_H

detectanddisplay.cpp

    #include "detectanddisplay.h"

    void detectAndDisplay( Mat &frame,CvRect &box )
    {
        string face_cascade_name = "haarcascade_mcs_eyepair_big.xml";  // pre-trained cascade file
        CascadeClassifier face_cascade;  // create the classifier
        string window_name = "camera";
        if (!face_cascade.load(face_cascade_name))
        {
            printf("[error] no cascade\n");
            return;  // cannot detect anything without the cascade
        }
        std::vector<Rect> faces;  // holds the detection results
        Mat frame_gray;
        cvtColor(frame, frame_gray, CV_BGR2GRAY);  // convert to grayscale
        equalizeHist(frame_gray, frame_gray);      // histogram equalization
        // run the eye detection
        face_cascade.detectMultiScale(frame_gray, faces, 1.1, 2, 0|CV_HAAR_SCALE_IMAGE, Size(30, 30));
        // draw the bounding boxes
        for (size_t i = 0; i < faces.size(); i++)
        {
            Point centera(faces[i].x, faces[i].y);
            Point centerb(faces[i].x + faces[i].width, faces[i].y + faces[i].height);
            rectangle(frame, centera, centerb, Scalar(255, 0, 0));
            box = faces[0];
        }
        //imshow( window_name, frame );
    }

Mat2QImage.h

    #ifndef MAT2QIMAGE_H
    #define MAT2QIMAGE_H

    #include <QtGui>
    #include <QDebug>
    #include <iostream>
    #include <opencv/cv.h>
    #include <opencv/highgui.h>

    using namespace cv;
    using namespace std;

    QImage Mat2QImage(const Mat&);  // convert Mat to QImage

    #endif

Mat2QImage.cpp

    #include "Mat2QImage.h"

    QImage Mat2QImage(const Mat& mat)
    {
        // 8-bit unsigned, number of channels = 1
        if (mat.type() == CV_8UC1)
        {
            // cout<<"1"<<endl;
            // Set the color table (used to translate colour indexes to qRgb values)
            QVector<QRgb> colorTable;
            for (int i = 0; i < 256; i++)
                colorTable.push_back(qRgb(i, i, i));
            // Copy input Mat
            const uchar *qImageBuffer = (const uchar*)mat.data;
            // Create QImage with same dimensions as input Mat
            QImage img(qImageBuffer, mat.cols, mat.rows, mat.step, QImage::Format_Indexed8);
            img.setColorTable(colorTable);
            return img;
        }
        // 8-bit unsigned, number of channels = 3
        if (mat.type() == CV_8UC3)
        {
            // cout<<"3"<<endl;
            // Copy input Mat
            const uchar *qImageBuffer = (const uchar*)mat.data;
            // Create QImage with same dimensions as input Mat
            QImage img(qImageBuffer, mat.cols, mat.rows, mat.step, QImage::Format_RGB888);
            return img.rgbSwapped();
        }
        else
        {
            qDebug() << "ERROR: Mat could not be converted to QImage.";
            return QImage();
        }
    }

main.cpp

    #include "mainwindow.h"
    #include <QApplication>

    int main(int argc, char *argv[])
    {
        QApplication a(argc, argv);
        MainWindow w;
        w.show();
        return a.exec();
    }

test0.pro

    #-------------------------------------------------
    #
    # Project created by QtCreator 2016-03-16T10:24:54
    #
    #-------------------------------------------------

    QT += core gui

    greaterThan(QT_MAJOR_VERSION, 4): QT += widgets

    TARGET = test0
    TEMPLATE = app

    SOURCES += main.cpp \
        mainwindow.cpp \
        imgprocess.cpp \
        detectanddisplay.cpp \
        others.cpp \
        Mat2QImage.cpp

    HEADERS += mainwindow.h \
        detectanddisplay.h \
        imgprocess.h \
        others.h \
        Mat2QImage.h

    FORMS += mainwindow.ui

    INCLUDEPATH += d:\opencv\build\include
    INCLUDEPATH += d:\opencv\build\include\opencv
    INCLUDEPATH += d:\opencv\build\include\opencv2

    CONFIG(debug,debug|release) {
    LIBS += -Ld:\opencv\build\x64\vc12\lib \
        -lopencv_core2410d \
        -lopencv_highgui2410d \
        -lopencv_imgproc2410d \
        -lopencv_features2d2410d \
        -lopencv_calib3d2410d \
        -lopencv_video2410d \
        -lopencv_objdetect2410d
    } else {
    LIBS += -Ld:\opencv\build\x64\vc12\lib \
        -lopencv_core2410 \
        -lopencv_highgui2410 \
        -lopencv_imgproc2410 \
        -lopencv_features2d2410 \
        -lopencv_calib3d2410 \
        -lopencv_video2410 \
        -lopencv_objdetect2410
    }
