RPM on the MSM Platform

2016-03-31 11:17


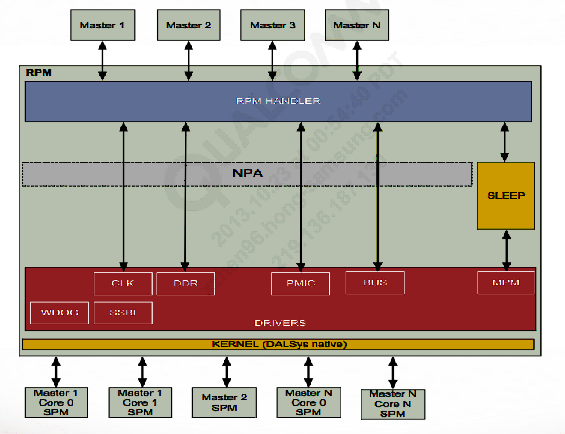

Software Component Block Diagram

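The RPM (Resource Power Manager) is an additional processor that Qualcomm adds to its MSM platforms. Although it is packaged together with the application processor (AP), it is an independent ARM core. Its purpose is to control all power-related shared resources, such as LDOs and clocks, and it works with the SPM and the MPM to put the whole system to sleep or wake it up. Below are the descriptions of each functional module taken from Qualcomm's documentation.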

- Kernel – DALSys-based lightweight kernel
- RPM handler – RPM handler abstracts the RPM message protocol away from other software
- Drivers – Drivers for each of the resources supported by the RPM will register with RPM handler to request notification when requests are received for the resource which the driver controls
- NPA – A driver may use the Node Power Architecture (NPA) to represent resources controlled by the driver
- Clock driver – RPM clock driver directly handles aggregating requests from each of the masters for any of the systemwide clock resources controlled by the RPM
- Bus arbitration driver – RPM bus arbiter driver takes bus arbiter settings as requests from the different masters in the system and aggregates them to represent the frequency-independent system settings
- PMIC – RPM PMIC driver directly aggregates requests from each of the masters for any of the systemwide PMIC resources controlled by the RPM
- Watch Dog driver – Watch Dog driver is a fail-safe for incorrect or stuck code
- MPM driver – Used to program the MPM hardware block during systemwide sleep
- RPM message driver – RPM message protocol driver abstracts the RPM message protocol away from other subsystem software

Messaging Masters

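Many kinds of masters can interact with the RPM through the shared memory region for dynamic and static resource/power management; see smd_channel_type in smd_type.h. In practice, however, only AP, MODEM, RIVA, and TZ currently interact with the RPM, as the settings in message_ram_malloc() show.

You can also infer which subsystems interact with the RPM from the variable SystemData temp_config_data in rpm_config.c.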

RPM Initialization

main.c calls the functions in init_fcns[] one by one to initialize the RPM. This includes initializing the resources described above.

```c
const init_fcn init_fcns[] =
{
    populate_image_header,
    npa_init,
#if (!defined(MSM8909_STUBS) )
    railway_init_v2,
#endif
    PlatformInfo_Init,        /* pm_init is using PlatformInfo APIs */
    pm_init,                  /* registers LDOs and other PMIC resources */
    acc_init,
#if (!defined(MSM8909_STUBS) )
    railway_init_early_proxy_votes,
#endif
    // xpu_init, /* cookie set here also indicates to SBL that railway is ready */
    (init_fcn)Clock_Init,     /* registers the clock resources */
    __init_stack_chk_guard,
    ddr_init,
    smem_init,
    init_smdl,
    version_init,             /* Needs to be after smem_init */
    rpmserver_init,
    rpm_server_init_done,
    railway_init_proxies_and_pins,
#if (!defined(MSM8909_STUBS) )
    rbcpr_init,
#endif
    svs_init,
    vmpm_init,
    sleep_init,
#if (!defined(MSM8909_STUBS) )
    QDSSInit,
#endif
    exceptions_enable,
    swevent_qdss_init,
    icb_init,
#if (!defined(MSM8909_STUBS) )
    debug_init,
    system_db_init,
    zqcal_task_init,
#endif
    rpm_settling_timer_init,
    gpio_toggle_init,
    rpm_set_changer_common_init,
};
```
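The table is consumed by a simple loop that calls each entry in order, so the position of an entry in init_fcns[] is its initialization order (which is why version_init must come after smem_init). A minimal sketch of that loop follows. It assumes init_fcn is a pointer to a function taking and returning nothing; the real typedef is not shown here. The three stub initializers are hypothetical stand-ins that only record that they ran.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed signature; the real init_fcn typedef in main.c is not shown. */
typedef void (*init_fcn)(void);

/* Hypothetical stubs standing in for npa_init, pm_init, Clock_Init, ...
 * Each one appends a tag so the call order can be checked afterwards. */
static char init_trace[8];
static size_t init_trace_len = 0;

static void record(char tag)
{
    assert(init_trace_len < sizeof init_trace);
    init_trace[init_trace_len++] = tag;
}

static void stub_npa_init(void)   { record('n'); }
static void stub_pm_init(void)    { record('p'); }
static void stub_clock_init(void) { record('c'); }

static const init_fcn demo_init_fcns[] = {
    stub_npa_init,
    stub_pm_init,
    stub_clock_init,
};

/* Call every initializer in table order, as main.c does with init_fcns[]. */
static void run_init_fcns(const init_fcn *fcns, size_t count)
{
    for (size_t i = 0; i < count; i++)
        fcns[i]();
}
```

Calling run_init_fcns(demo_init_fcns, 3) leaves init_trace holding "npc", which matches the table order.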

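1. pm_init: registers LDOs and other resources, which then receive rpm_message requests. Qualcomm does not provide the source for the rpm_message receive path, so it cannot be inspected directly. The RPM log does show that each message is received, processed, and answered: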

```text
107.764902: rpm_message_received (master: "APSS") (message id: 723)
107.764908: rpm_svs (mode: RPM_SVS_FAST) (reason: imminent processing)
107.764924: rpm_svs (mode: RPM_SVS_FAST) (reason: imminent processing)
107.764939: rpm_process_request (master: "APSS") (resource type: ldoa) (id: 14)
107.764942: rpm_xlate_request (resource type: ldoa) (resource id: 14)
107.764946: rpm_apply_request (resource type: ldoa) (resource id: 14)
107.765033: rpm_send_message_response (master: "APSS")
```

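ldoa corresponds to the resource type RPM_LDO_A_REQ, which is registered by pm_rpm_ldo_register_resources(RPM_LDO_A_REQ, num_of_ldoa); in pm_rom_device_init(). Following the code from there, the xlate and apply steps end up in pm_rpm_ldo_translation() and pm_rpm_ldo_apply(). pm_rpm_ldo_translation() parses the KVP content, and pm_rpm_ldo_apply() applies the requested settings. On the kernel side, the LDOs are defined in msm-pm8916-rpm-regulator.dtsi.

2. (init_fcn)Clock_Init: registers the clock resources. The process is much the same as for pm_init.

3. rpmserver_init: starts the task that receives messages.

For example, a request to set the voltage and current of LDO3 carries the following KVP content: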

```text
{
  "req\0" : {
    {"rsrc" : "ldo\0"}
    {"id" : 3}
    {"set" : 0}
    {"data" : {
      {"uv\0\0" : 1100000}
      {"mA\0\0" : 130}
    }
    }
  }
}
```
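The nested form above is a readable view of the request. In the payload itself, the data section is a flat run of key/length/value entries. The sketch below packs the LDO3 values, assuming each entry is a 32-bit little-endian key (the same four-character packing used by rpm_resource_type), then a 32-bit byte length, then the value. The helper names are hypothetical and are not the kernel's RPM API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Pack up to four ASCII characters into a little-endian 32-bit key,
 * e.g. "uv" -> 0x00007675, matching the 'uv\0\0' key above. */
static uint32_t kvp_key(const char *s)
{
    assert(s != NULL);
    uint32_t key = 0;
    for (size_t i = 0; i < 4 && s[i] != '\0'; i++)
        key |= (uint32_t)(uint8_t)s[i] << (8 * i);
    return key;
}

/* Store v in little-endian byte order regardless of host endianness. */
static void put_le32(uint8_t *p, uint32_t v)
{
    for (int i = 0; i < 4; i++)
        p[i] = (uint8_t)(v >> (8 * i));
}

/* Append one key/length/value entry carrying a 32-bit value.
 * Returns the new write offset, or 0 if the buffer is too small
 * (a successful append always returns at least 12). */
static size_t kvp_append_u32(uint8_t *buf, size_t cap, size_t off,
                             const char *key, uint32_t value)
{
    if (cap < off || cap - off < 12)
        return 0;
    put_le32(buf + off, kvp_key(key));
    put_le32(buf + off + 4, 4);      /* value length in bytes */
    put_le32(buf + off + 8, value);
    return off + 12;
}
```

Appending "uv" = 1100000 and then "mA" = 130 to an empty buffer yields 24 bytes: two 12-byte entries, each consisting of key, length 4, and value.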

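The RPM log shows the request coming from APSS (the application processor subsystem). On the kernel side, the ldoa resource with id 14 from that log is configured in msm-pm8916-rpm-regulator.dtsi: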

```dts
rpm-regulator-ldoa14 {
    compatible = "qcom,rpm-smd-regulator-resource";
    qcom,resource-name = "ldoa";
    qcom,resource-id = <14>;
    qcom,regulator-type = <0>;
    qcom,hpm-min-load = <5000>;
    status = "disabled";

    regulator-l14 {
        compatible = "qcom,rpm-smd-regulator";
        regulator-name = "8916_l14";
        qcom,set = <3>;
        status = "disabled";
    };
};
```

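The MSM regulator driver reads this node. When it needs to send a request, it builds the KVP payload from the node's properties and sends it to the RPM over the rpm_requests channel. Here qcom,set = <3> means that requests go to both the active set and the sleep set.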

On the RPM side, the rpm_requests channel is opened in Handler::init() in rpm_handler.cpp:

```cpp
void Handler::init()
{
    smdlPort_ = smdl_open("rpm_requests",
                          rpm->ees[ee_].edge,
                          SMDL_OPEN_FLAGS_MODE_PACKET,
                          rpm->ees[ee_].smd_fifo_sz,
                          (smdl_callback_t)rpm_smd_handler,
                          this);
}
```

The kernel opens the same channel on its end. See the following node in msm8916.dtsi:

```dts
rpm_bus: qcom,rpm-smd {
    compatible = "qcom,rpm-smd";
    rpm-channel-name = "rpm_requests";
    rpm-channel-type = <15>; /* SMD_APPS_RPM */
};
```

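The following covers the resource registration function and the resource types.

All resources, including clocks and LDOs, are registered through rpm_register_resource(). The resource types are defined as follows: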

```c
typedef enum
{
    RPM_TEST_REQ               = 0x74736574, // 'test' in little endian
    RPM_CLOCK_0_REQ            = 0x306b6c63, // 'clk0' in little endian; misc clocks [CXO, QDSS, dcvs.ena]
    RPM_CLOCK_1_REQ            = 0x316b6c63, // 'clk1' in little endian; bus clocks [pcnoc, snoc, sysmmnoc]
    RPM_CLOCK_2_REQ            = 0x326b6c63, // 'clk2' in little endian; memory clocks [bimc]
    RPM_CLOCK_QPIC_REQ         = 0x63697071, // 'qpic' in little endian; clock [qpic]
    RPM_BUS_SLAVE_REQ          = 0x766c7362, // 'bslv' in little endian
    RPM_BUS_MASTER_REQ         = 0x73616d62, // 'bmas' in little endian
    RPM_BUS_SPDM_CLK_REQ       = 0x63707362, // 'bspc' in little endian
    RPM_BUS_MASTER_LATENCY_REQ = 0x74616c62, // 'blat' in little endian
    RPM_SMPS_A_REQ             = 0x61706D73, // 'smpa' in little endian
    RPM_LDO_A_REQ              = 0x616F646C, // 'ldoa' in little endian
    RPM_NCP_A_REQ              = 0x6170636E, // 'ncpa' in little endian
    RPM_VS_A_REQ               = 0x617376,   // 'vsa' in little endian
    RPM_CLK_BUFFER_A_REQ       = 0x616B6C63, // 'clka' in little endian
    RPM_BOOST_A_REQ            = 0x61747362, // 'bsta' in little endian
    RPM_SMPS_B_REQ             = 0x62706D73, // 'smpb' in little endian
    RPM_LDO_B_REQ              = 0x626F646C, // 'ldob' in little endian
    RPM_NCP_B_REQ              = 0x6270636E, // 'ncpb' in little endian
    RPM_VS_B_REQ               = 0x627376,   // 'vsb' in little endian
    RPM_CLK_BUFFER_B_REQ       = 0x626B6C63, // 'clkb' in little endian
    RPM_BOOST_B_REQ            = 0x62747362, // 'bstb' in little endian
    RPM_SWEVENT_REQ            = 0x76657773, // 'swev' in little endian
    RPM_OCMEM_POWER_REQ        = 0x706d636f, // 'ocmp' in little endian
    RPM_RBCPR_REQ              = 0x727063,   // 'cpr' in little endian
    RPM_GPIO_TOGGLE_REQ        = 0x6F697067, // 'gpio' in little endian: [gpio0, gpio1, gpio2]
} rpm_resource_type;
```
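Each value is simply the ASCII tag stored little-endian, so the lowest byte holds the first character. When a raw type value turns up in a memory dump, it can be turned back into its tag by reading the bytes from least to most significant. The helper below is a small illustrative sketch; its name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Decode a little-endian four-character resource code such as
 * RPM_LDO_A_REQ (0x616F646C) back into its ASCII tag ("ldoa").
 * A zero byte (as in 'vsa' or 'cpr') ends the tag early.
 * out must hold at least 5 bytes. */
static const char *rpm_type_tag(uint32_t type, char out[5])
{
    assert(out != NULL);
    size_t n = 0;
    for (int i = 0; i < 4; i++) {
        char c = (char)((type >> (8 * i)) & 0xFF);
        if (c == '\0')
            break;
        out[n++] = c;
    }
    out[n] = '\0';
    return out;
}
```

For example, 0x706d636f decodes to "ocmp" and 0x626B6C63 decodes to "clkb".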

RPM Resource Registration

Search results for rpm_register_resource():

```text
Gpio_toggle.c (x:\j3_ctc\nhlos\rpm_proc\core\power\rpm\common): rpm_register_resource(RPM_GPIO_TOGGLE_REQ, 3, sizeof(gpio_toggle_inrep), gpio_toggle_xlate, gpio_toggle_apply, 0);
Icb_rpm_spdm_req.c (x:\j3_ctc\nhlos\rpm_proc\core\buses\icb\src\common): rpm_register_resource( RPM_BUS_SPDM_CLK_REQ,
Ocmem_resource.c (x:\j3_ctc\nhlos\rpm_proc\core\power\ocmem\src): rpm_register_resource(RPM_OCMEM_POWER_REQ, 3, sizeof(rpm_ocmem_vote_int_rep), rpm_ocmem_xlate, rpm_ocmem_apply, 0);
Pm_rpm_boost_trans_apply.c (x:\j3_ctc\nhlos\rpm_proc\core\systemdrivers\pmic\npa\src\rpm): rpm_register_resource(resourceType, num_npa_resources + 1 , sizeof(pm_npa_boost_int_rep), pm_rpm_boost_translation, pm_rpm_boost_apply, (void *)boost_data);
Pm_rpm_clk_buffer_trans_apply.c (x:\j3_ctc\nhlos\rpm_proc\core\systemdrivers\pmic\npa\src\rpm): rpm_register_resource(resourceType, num_npa_resources + 1,
Pm_rpm_ldo_trans_apply.c (x:\j3_ctc\nhlos\rpm_proc\core\systemdrivers\pmic\npa\src\rpm): rpm_register_resource(resourceType, num_npa_resources + 1, sizeof(pm_npa_ldo_int_rep), pm_rpm_ldo_translation, pm_rpm_ldo_apply, (void *)ldo_data);
Pm_rpm_smps_trans_apply.c (x:\j3_ctc\nhlos\rpm_proc\core\systemdrivers\pmic\npa\src\rpm): rpm_register_resource(resourceType, num_npa_resources + 1, sizeof(pm_npa_smps_int_rep), pm_rpm_smps_translation, pm_rpm_smps_apply, (void *)smps_data);
Pm_rpm_vs_trans_apply.c (x:\j3_ctc\nhlos\rpm_proc\core\systemdrivers\pmic\npa\src\rpm): rpm_register_resource(resourceType, num_npa_resources + 1, sizeof(pm_npa_vs_int_rep), pm_rpm_vs_translation, pm_rpm_vs_apply, (void *)vs_data);
Rpmserver.cpp (x:\j3_ctc\nhlos\rpm_proc\core\power\rpm\server):void rpm_register_resource
Rpmserver.h (x:\j3_ctc\nhlos\rpm_proc\core\api\power):void rpm_register_resource
Rpm_npa.cpp (x:\j3_ctc\nhlos\rpm_proc\core\power\rpm\server): rpm_register_resource(resource, num_npa_resources, sizeof(npa_request_data_t), rpm_npa_xlate, rpm_npa_apply, adapter);
Rpm_npa.cpp (x:\j3_ctc\nhlos\rpm_proc\core\power\rpm\server): rpm_register_resource(resource, num_npa_resources, sizeof(npa_request_data_t), rpm_npa_xlate, rpm_npa_apply, adapter);
Rpm_test_resource.cpp (x:\j3_ctc\nhlos\rpm_proc\core\power\rpm\server): rpm_register_resource(RPM_TEST_REQ, 1, 4, rpm_test_xlate, rpm_test_apply, 0);
Swevent.c (x:\j3_ctc\nhlos\rpm_proc\core\power\rpm\common): rpm_register_resource(RPM_SWEVENT_REQ, 1, sizeof(swevent_inrep), rpm_swevent_xlate, rpm_trace_control, 0);
```

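Every resource is registered through rpm_register_resource(). Resources such as RPM_BUS_SPDM_CLK_REQ and RPM_GPIO_TOGGLE_REQ call it directly. NPA-based resources also go through rpm_register_resource() (see Rpm_npa.cpp above), but an NPA driver does the actual resource registration and request handling.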
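To make the xlate/apply split concrete, here is a minimal, self-contained model of a registration table and of the dispatch step that a request eventually reaches. The parameter order of demo_register_resource() mirrors the rpm_register_resource() calls listed above: type, count, size of the internal representation, xlate, apply, and callback data. Everything else is a hypothetical simplification and not the RPM implementation: the callback types, the fixed-size table, the id range check, and the LDO-like example callbacks.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical callback types: xlate turns raw request data into an internal
 * representation (inrep); apply programs the hardware from that inrep. */
typedef void (*xlate_fn)(const void *raw, void *inrep, void *cb_data);
typedef void (*apply_fn)(uint32_t id, const void *inrep, void *cb_data);

typedef struct {
    uint32_t type;        /* e.g. 0x616F646C ('ldoa') */
    unsigned count;       /* number of resource ids of this type */
    size_t inrep_size;    /* size of the internal representation */
    xlate_fn xlate;
    apply_fn apply;
    void *cb_data;
} demo_resource;

#define DEMO_MAX_TYPES 8
static demo_resource demo_table[DEMO_MAX_TYPES];
static size_t demo_count = 0;

static void demo_register_resource(uint32_t type, unsigned count, size_t inrep_size,
                                   xlate_fn xlate, apply_fn apply, void *cb_data)
{
    assert(demo_count < DEMO_MAX_TYPES);
    demo_table[demo_count++] = (demo_resource){ type, count, inrep_size, xlate, apply, cb_data };
}

/* Route one request: find the type, translate, then apply.
 * Returns 0 on success, -1 for an unknown type, bad id, or small scratch. */
static int demo_dispatch(uint32_t type, uint32_t id, const void *raw,
                         void *scratch, size_t scratch_size)
{
    for (size_t i = 0; i < demo_count; i++) {
        demo_resource *r = &demo_table[i];
        if (r->type != type)
            continue;
        if (id >= r->count || r->inrep_size > scratch_size)
            return -1;
        r->xlate(raw, scratch, r->cb_data);
        r->apply(id, scratch, r->cb_data);
        return 0;
    }
    return -1;
}

/* Example LDO-like callbacks: the inrep is just a voltage in microvolts. */
typedef struct { uint32_t last_id; uint32_t last_uv; } demo_ldo_state;

static void demo_ldo_xlate(const void *raw, void *inrep, void *cb_data)
{
    (void)cb_data;
    *(uint32_t *)inrep = *(const uint32_t *)raw;
}

static void demo_ldo_apply(uint32_t id, const void *inrep, void *cb_data)
{
    demo_ldo_state *st = cb_data;
    st->last_id = id;
    st->last_uv = *(const uint32_t *)inrep;
}
```

Keeping translation and application separate lets the server parse and aggregate requests before touching hardware. This is also why the log above shows rpm_xlate_request and rpm_apply_request as distinct events.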

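The two NPA-related registration interfaces are rpm_create_npa_adapter() and rpm_create_npa_settling_adapter().

As the search results show, these two functions register the three clock-related resource types: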

```text
---- rpm_create_npa_adapter Matches (8 in 5 files) ----
ClockRPM.c : clk0_adapter = rpm_create_npa_adapter(RPM_CLOCK_0_REQ, 3); // Misc clocks: [CXO, QDSS, dcvs.ena]
ClockRPM.c : clk1_adapter = rpm_create_npa_adapter(RPM_CLOCK_1_REQ, 2); // Bus clocks: [pcnoc, snoc]
Rpmserver.h :rpm_npa_adapter rpm_create_npa_adapter(rpm_resource_type resource, unsigned num_npa_resources);
Rpm_npa.cpp :rpm_npa_adapter rpm_create_npa_adapter(rpm_resource_type resource, unsigned num_npa_resources)
---- rpm_create_npa_settling_adapter Matches (5 in 5 files) ----
ClockRPM.c : clk2_adapter = rpm_create_npa_settling_adapter(RPM_CLOCK_2_REQ, 1); // Memory clocks: [bimc ]
Rpmserver.h :rpm_npa_adapter rpm_create_npa_settling_adapter(rpm_resource_type resource, unsigned num_npa_resources);
Rpm_npa.cpp :rpm_npa_adapter rpm_create_npa_settling_adapter(rpm_resource_type resource, unsigned num_npa_resources)
```

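The NPA client creation function is npa_create_sync_client().

The function for issuing an NPA client request is npa_issue_required_request().

Before an NPA client can be created and request a resource, an NPA node like the one below must exist. It can be defined directly as shown and then used. The steps are:

1. Define the NPA node: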

```c
static npa_resource_definition sleep_uber_resource[] =
{
    {
        "/sleep/uber",          /* Name */
        "on/off",               /* Units */
        0x7,                    /* Max State */
        &npa_or_plugin,         /* Plugin */
        NPA_RESOURCE_DEFAULT,   /* Attributes */
        NULL,                   /* User Data */
    }
};

npa_node_definition sleep_uber_node =
{
    "/node/sleep/uber",         /* name */
    sleep_uber_driver,          /* driver_fcn */
    NPA_NODE_DEFAULT,           /* attributes */
    NULL,                       /* data */
    0, NULL,                    /* dependency count, dependency list */
    NPA_ARRAY(sleep_uber_resource)
};
```

2. Call npa_define_node(&sleep_uber_node, initial_state, NULL) to initialize the NPA node.

3. Create the client:

```c
uber_node_handle = npa_create_sync_client("/sleep/uber",
                                          "sleep",
                                          NPA_CLIENT_REQUIRED);
```

4. Issue the request with npa_issue_required_request(uber_node_handle, request).
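Because the node above uses npa_or_plugin, the resource's aggregated state is the bitwise OR of the requests currently held by its clients. One client voting 0x1 and another voting 0x4 yields 0x5, and dropping the first vote leaves 0x4. The model below illustrates only that aggregation rule. The struct and function names are hypothetical, and real NPA also handles client types, driver callbacks, and locking.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_MAX_CLIENTS 4

/* Hypothetical model of one NPA resource with an OR plugin:
 * each client slot holds its last required request (0 = no request). */
typedef struct {
    unsigned requests[DEMO_MAX_CLIENTS];
    unsigned state;
} demo_or_resource;

/* Aggregate like npa_or_plugin: the state is the OR of all requests. */
static unsigned demo_or_aggregate(const demo_or_resource *r)
{
    unsigned state = 0;
    for (size_t i = 0; i < DEMO_MAX_CLIENTS; i++)
        state |= r->requests[i];
    return state;
}

/* Stand-in for npa_issue_required_request(): record the client's vote
 * and recompute the aggregated resource state. */
static unsigned demo_issue_required_request(demo_or_resource *r, size_t client,
                                            unsigned request)
{
    assert(client < DEMO_MAX_CLIENTS);
    r->requests[client] = request;
    r->state = demo_or_aggregate(r);
    return r->state;
}
```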

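RPM Resource Handling

When a subsystem sends a request to the RPM through shared memory, the RPM processes the request and applies the settings. The processing flow is:

SMD IRQ -> smd_isr() -> rpm_smd_handler() [the SMD callback for the rpm_requests channel, registered in Handler::init()] -> Handler::queue() -> schedule_me() -> Handler::execute_until() -> Handler::processMessage() -> resource_ee_request() -> the xlate callback registered by each resource, followed by its apply callback.

The following example uses Qualcomm's DDR frequency (BIMC) control to show how the kernel sends requests to the RPM.

The device tree configuration is: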

```dts
cpubw: qcom,cpubw@0 {
    reg = <0 4>;
    compatible = "qcom,devbw";
    governor = "cpufreq";
    qcom,src-dst-ports = <1 512>;
    qcom,active-only;
    /* This table mirrors the BIMC frequency table in the RPM,
     * minus the 9.6 MHz and 50 MHz entries. */
    qcom,bw-tbl =
        /*   73   9.60 MHz */
        /*  381   50 MHz */
        < 762  /* 100 MHz */ >,
        < 1525 /* 200 MHz */ >,
        < 3051 /* 400 MHz */ >,
        < 4066 /* 533 MHz */ >;
};
```
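The bw-tbl values match the listed frequencies if each entry is the bandwidth of an 8-byte-wide interface in MiB/s (2^20 bytes per second), rounded down. For example, 100 MHz × 8 B = 800,000,000 B/s, and 800,000,000 / 1,048,576 ≈ 762.9, which gives 762. This interpretation is inferred from the numbers, not taken from Qualcomm documentation. The hypothetical helper below reproduces every entry under that assumption, including the two commented-out ones.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption inferred from the table: 8-byte bus width, result in MiB/s. */
#define DEMO_BUS_WIDTH_BYTES 8u

/* Convert a bus clock in kHz to a bw-tbl style entry (MiB/s, truncated). */
static uint32_t bw_tbl_entry_from_khz(uint32_t khz)
{
    uint64_t bytes_per_sec = (uint64_t)khz * 1000u * DEMO_BUS_WIDTH_BYTES;
    return (uint32_t)(bytes_per_sec >> 20);  /* divide by 2^20, round down */
}
```

Working in kHz keeps the 9.6 MHz entry exact as an integer (9600 kHz maps to 73).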

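The corresponding kernel code is in devfreq_devbw.c. Once the governor has computed the DDR bus frequency to use, the request reaches the RPM through the call sequence below. How the frequency is chosen is out of scope here; only the path the request takes to the RPM is traced.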

set_bw()->msm_bus_scale_client_update_request()->update_request_adhoc()[msm_bus_arb_adhoc.c]->update_path()->msm_bus_commit_data()->flush_bw_data()->send_rpm_msg()->msm_rpm_send_message()

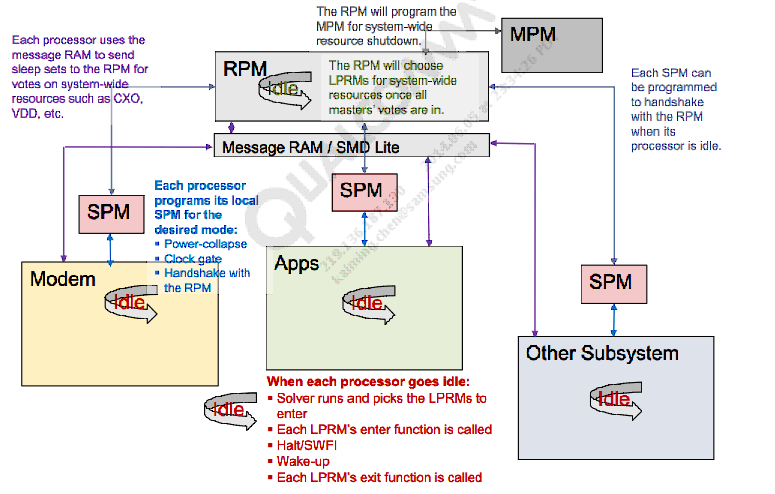

System Sleep Overview

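Besides managing resources such as clocks and LDOs, the RPM also manages system-wide sleep.

Sleep is not requested by sending a message to the RPM over shared memory. Instead, each subsystem programs its SPM, and the SPM raises the corresponding interrupt on the RPM.

On the kernel side, the SPM modes are defined as follows: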

```c
enum {
    MSM_SPM_MODE_DISABLED,
    MSM_SPM_MODE_CLOCK_GATING,
    MSM_SPM_MODE_RETENTION,
    MSM_SPM_MODE_GDHS,
    MSM_SPM_MODE_POWER_COLLAPSE, /* in this mode the SPM should raise the matching shutdown interrupt on the RPM */
    MSM_SPM_MODE_NR
};
```

8

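On the RPM side, rpm_config.c configures three things: which subsystems exchange messages with the RPM, which subsystems' SPMs the RPM interacts with, and which interrupt numbers are used for that interaction: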

```c
static SystemData temp_config_data =
{
    .num_ees = 4, // 4 EE's, [apps, modem, pronto, tz]
    .ees = (EEData[] ) {
        [0] = {
            .edge = SMD_APPS_RPM,
            .smd_fifo_sz = 1024,
            .ee_buflen = 256,
            .priority = 4,
            .wakeupInt = (1 << 5) | (1 << 7),
            .spm = {
                .numCores = 1,
                .bringupInts = (unsigned[]) { 15 },
                .bringupAcks = (unsigned[]) { 20 },
                .shutdownInts = (unsigned[]) { 14 }, // presumably the SPM sleep (shutdown) interrupt
                .shutdownAcks = (unsigned[]) { 4 },
            },
        },
        [1] = {
            .edge = SMD_MODEM_RPM,
            .smd_fifo_sz = 1024,
            .ee_buflen = 1024,
            .priority = 2,
            .wakeupInt = (1 << 13) | (1 << 15),
            .spm = {
                .numCores = 1,
                .bringupInts = (unsigned[]) { 25 },
                .bringupAcks = (unsigned[]) { 22 },
                .shutdownInts = (unsigned[]) { 24 },
                .shutdownAcks = (unsigned[]) { 6 },
            },
        },
        [2] = {
            .edge = SMD_RIVA_RPM,
            .smd_fifo_sz = 1024,
            .ee_buflen = 256,
            .priority = 1,
            .wakeupInt = (1 << 17) | (1 << 19),
            .spm = {
                .numCores = 1,
                .bringupInts = (unsigned[]) { 31 },
                .bringupAcks = (unsigned[]) { 23 },
                .shutdownInts = (unsigned[]) { 30 },
                .shutdownAcks = (unsigned[]) { 7 },
            },
        },
        [3] = {
            .edge = SMD_RPM_TZ,
            .smd_fifo_sz = 1024,
            .ee_buflen = 256,
            .priority = 5,
            .wakeupInt = 0,
            .spm = {
                .numCores = 0,
                .bringupInts = (unsigned[]) { 31 },
                .bringupAcks = (unsigned[]) { 23 },
                .shutdownInts = (unsigned[]) { 30 },
                .shutdownAcks = (unsigned[]) { 7 },
            },
        },
    },
    .supported_classes = SUPPORTED_CLASSES,
    .supported_resources = SUPPORTED_RESOURCES,
    .classes = (ResourceClassData[SUPPORTED_CLASSES]) { 0 },
    .resources = (ResourceData[SUPPORTED_RESOURCES]) { 0 },
    .resource_seeds = (int16_t[SUPPORTED_RESOURCES]) { 0 },
};
```

I have not yet worked out where these interrupt numbers come from, or why every interrupt list contains exactly one entry.

rpm_spm_init() reads these settings and configures the interrupts. The handler for the shutdown interrupts (shutdownInts) is rpm_spm_shutdown_high_isr().
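Once rpm_spm_init() has hooked up these interrupts, a shutdown interrupt number must be mapped back to the EE that owns it. Below is a minimal sketch of that reverse lookup over a trimmed copy of the table. The demo_spm_cfg struct and the lookup function are hypothetical; only the numbers come from temp_config_data. The sketch skips entries with numCores == 0, which presumably keeps TZ from claiming interrupt 30: TZ's SPM fields merely repeat the RIVA/Pronto values, and TZ has no cores for the RPM to track.

```c
#include <assert.h>
#include <stddef.h>

/* Trimmed, hypothetical view of one EE's SPM configuration. */
typedef struct {
    const char *name;
    unsigned num_cores;
    unsigned shutdown_int;   /* first (and only) entry of shutdownInts */
    unsigned bringup_int;    /* first (and only) entry of bringupInts */
} demo_spm_cfg;

/* Values copied from temp_config_data. */
static const demo_spm_cfg demo_ees[] = {
    { "apps",   1, 14, 15 },
    { "modem",  1, 24, 25 },
    { "pronto", 1, 30, 31 },
    { "tz",     0, 30, 31 },
};

/* Return the EE index whose SPM owns shutdown interrupt irq, or -1.
 * EEs without cores are skipped, so TZ never matches. */
static int demo_ee_for_shutdown_irq(unsigned irq)
{
    for (size_t i = 0; i < sizeof demo_ees / sizeof demo_ees[0]; i++) {
        if (demo_ees[i].num_cores == 0)
            continue;
        if (demo_ees[i].shutdown_int == irq)
            return (int)i;
    }
    return -1;
}
```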

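rpm_spm_shutdown_high_isr() calls rpm_spm_state_machine(), which drives the per-EE state machine. Depending on the state, it enters sleep or backs out of entering sleep: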

```cpp
void rpm_spm_state_machine(unsigned ee, rpm_spm_entry_reason reason)
{
    INTLOCK();

    bool changed_state = false;
    EEData *ee_state = &(rpm->ees[ee]);
    SetChanger *changer = ee_state->changer;

    do
    {
        switch(ee_state->subsystem_status)
        {
            case SPM_AWAKE:
                changed_state = FALSE;
                if(0 == ee_state->num_active_cores)
                {
                    // Only once every core is asleep may we move on to SPM_GOING_TO_SLEEP.
                    SPM_CHANGE_STATE(SPM_GOING_TO_SLEEP);
                }
                else
                {
                    // We're awake, so make sure we keep up with any incoming bringup reqs.
                    rpm_acknowledge_spm_handshakes(ee);
                }
                break;

            case SPM_GOING_TO_SLEEP:
                if(changed_state)
                {
                    // check for scheduled wakeup
                    uint64_t deadline = 0;
                    if(! rpm_get_wakeup_deadline(ee, deadline))
                    {
                        deadline = 0;
                    }
                    changer->setWakeTime (deadline);

                    // enqueue immediate set transition to sleep
                    changer->enqueue(RPM_SLEEP_SET, 0);
                }
                changed_state = FALSE;

                // When we've finished selecting the sleep set, we're officially asleep.
                if((SPM_TRANSITION_COMPLETE == reason) && (RPM_SLEEP_SET == changer->currentSet()))
                {
                    SPM_CHANGE_STATE(SPM_SLEEPING);
                }

                // However, we might get a wakeup request before we've made it all the way to sleep.
                if(SPM_BRINGUP_REQ == reason)
                {
                    // Set the preempt flag; this will force the set change to recycle if
                    // it's currently running. It will notice the processor has woken up
                    // and stop performing its work.
                    theSchedule().preempt();
                }
                break;

            case SPM_SLEEPING:
                if(changed_state)
                {
                    // check for scheduled wakeup
                    uint64_t deadline = changer->getWakeTime ();

                    // enqueue scheduled wakeup request
                    changer->enqueue(RPM_ACTIVE_SET, deadline);
                }
                changed_state = FALSE;

                if(ee_state->num_active_cores > 0)
                {
                    SPM_CHANGE_STATE(SPM_WAKING_UP);
                }
                break;

            case SPM_WAKING_UP:
                if(changed_state)
                {
                    // work our way back to the active set
                    if(RPM_SLEEP_SET == changer->currentSet() || changer->inTransition())
                    {
                        changer->enqueue(RPM_ACTIVE_SET, 0);
                    }
                }
                changed_state = FALSE;

                // check for completion
                if(RPM_ACTIVE_SET == changer->currentSet() && !changer->inTransition())
                {
                    SPM_CHANGE_STATE(SPM_AWAKE);
                }
                break;
        }
    } while(changed_state);

    INTFREE();
}
```

Every transition that requests a set change ultimately ends up in SetChanger::enqueue().
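Without the set-changer calls, the state machine is a four-state cycle. The sketch below keeps only the transition conditions visible in rpm_spm_state_machine(): the active core count, and whether the set changer has finished moving to the sleep set or the active set. The enum, struct, and function names are hypothetical. The real code also handles preemption, scheduled wakeups, and the SPM handshake acknowledgements. In this model the caller sets current_set by hand to stand in for the set changer completing its work.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { DEMO_AWAKE, DEMO_GOING_TO_SLEEP, DEMO_SLEEPING, DEMO_WAKING_UP } demo_spm_state;
typedef enum { DEMO_ACTIVE_SET, DEMO_SLEEP_SET } demo_set;

typedef struct {
    demo_spm_state state;
    unsigned active_cores;
    demo_set current_set;    /* set the modelled set changer has reached */
    bool in_transition;      /* a set change is still in progress */
} demo_ee;

/* Keep advancing until no transition applies, mirroring the do/while loop. */
static void demo_step(demo_ee *ee)
{
    bool changed;
    do {
        changed = false;
        switch (ee->state) {
        case DEMO_AWAKE:
            if (ee->active_cores == 0) { ee->state = DEMO_GOING_TO_SLEEP; changed = true; }
            break;
        case DEMO_GOING_TO_SLEEP:
            if (ee->current_set == DEMO_SLEEP_SET && !ee->in_transition) { ee->state = DEMO_SLEEPING; changed = true; }
            break;
        case DEMO_SLEEPING:
            if (ee->active_cores > 0) { ee->state = DEMO_WAKING_UP; changed = true; }
            break;
        case DEMO_WAKING_UP:
            if (ee->current_set == DEMO_ACTIVE_SET && !ee->in_transition) { ee->state = DEMO_AWAKE; changed = true; }
            break;
        }
    } while (changed);
}
```

The loop always terminates. AWAKE advances only when no cores are active, and SLEEPING advances only when some are, so the cycle cannot run all the way around in a single call.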

Interaction Between the RPM and the MPM

Reading RPM Status

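RPM status can be read from /d/rpm_stats, which shows the RPM state for the masters configured on the AP side, such as APSS, MPSS, and PRONTO. The data is read from memory shared with the RPM, at the addresses given in the reg properties below. The corresponding configuration is in msm8916-pm.dts: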

```dts
qcom,rpm-stats@29dba0 {
    compatible = "qcom,rpm-stats";
    reg = <0x29dba0 0x1000>;
    reg-names = "phys_addr_base";
    qcom,sleep-stats-version = <2>;
};

qcom,rpm-master-stats@60150 {
    compatible = "qcom,rpm-master-stats";
    reg = <0x60150 0x2030>;
    qcom,masters = "APSS", "MPSS", "PRONTO";
    qcom,master-stats-version = <2>;
    qcom,master-offset = <4096>;
};

qcom,rpm-rbcpr-stats@0x29daa0 {
    compatible = "qcom,rpmrbcpr-stats";
    reg = <0x29daa0 0x1a0000>;
    qcom,start-offset = <0x190010>;
};
```
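The rpm-master-stats node lists three masters with a master-offset of 4096 (0x1000). Assume that master i's record sits at base + i × master-offset. That is consistent with the region size 0x2030, which covers two full strides plus a 0x30-byte tail for the last master. Under that assumption, the per-master addresses work out as shown below. The helper and macro names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_MASTER_STATS_BASE   0x60150u  /* reg base of qcom,rpm-master-stats */
#define DEMO_MASTER_STATS_OFFSET 4096u     /* qcom,master-offset */

/* Address of master i's stats record, with i in [0, num_masters). */
static uint32_t demo_master_stats_addr(unsigned i, unsigned num_masters)
{
    assert(i < num_masters);
    return DEMO_MASTER_STATS_BASE + i * DEMO_MASTER_STATS_OFFSET;
}
```

With the order given in qcom,masters, APSS is at 0x60150, MPSS at 0x61150, and PRONTO at 0x62150.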
