Hive-1.2.0 Study Notes (1): Installation and Configuration

2015-09-11 14:47


Work notes of 鲁春利 (Lu Chunli): the faintest ink beats the best memory.

下载hive:http://hive.apache.org/index.html

Hive是基于Hadoop的一个数据仓库工具,提供了SQL查询功能,能够将SQL语句解析成MapReduce任务对存储在HDFS上的数据进行处理。

MySQ安装

Hive有三种运行模式:

1.内嵌模式:默认情况下,Hive元数据保存在内嵌的 Derby 数据库中,只能允许一个会话连接,只适合简单的测试;

2.本地模式:将元数据库保存在本地的独立数据库中(比如说MySQL),能够支持多会话和多用户连接;

3.远程模式:如果我们的Hive客户端比较多,在每个客户端都安装MySQL服务还是会造成一定的冗余和浪费,这种情况下,就可以更进一步,将MySQL也独立出来,将元数据保存在远端独立的MySQL服务中。

1、内嵌模式

通过上述方式直接运行,不做其他任何配置将会作为内嵌模式运行,Hive的元数据(metadata)信息存储在Derby数据库中(元数据是指的其他数据的描述信息,如MySQL的information_schema库)。

使用Derby存储元数据问题见:/article/4522514.html

查看hive-default.xml文件

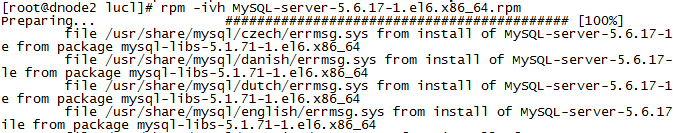

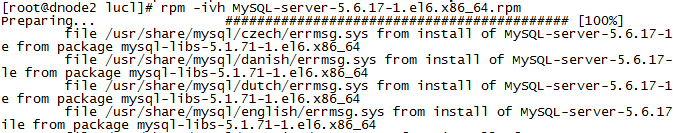

2、MySQL安装

MySQL5.6.17版本

由于版本冲突,卸载之前的MySQL5.1.71版本

再次安装MySQL就可以了。

安装后的目录说明:

4、验证安装

5、修改配置文件my.cnf

说明:不是必须的,可以采用默认配置。

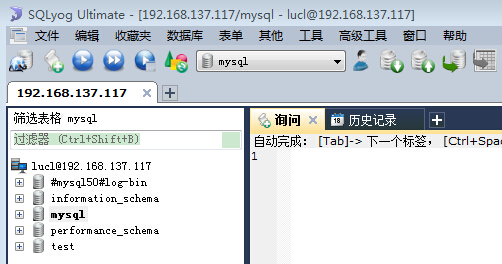

6、启用远程访问

至此,MySQL安装配置完成。

7、准备Hive用户

在MySQL为Hive创建一个库,并新建Hive连接MySQL时的用户。

查看新创建的hive用户的权限

Hive环境配置

1、解压

2、配置Hive环境变量

3、配置hive-env.sh

4、配置hive-site.xml

5、拷贝MySQL的jar到Hive的lib目录下

6、执行bin/hive

错误原因

Hive has upgraded to Jline2 but jline 0.94 exists in the Hadoop lib.

解决方式

将hive安装目录lib/jline-2.12.jar拷贝到hadoop-2.6.0/share/hadoop/yarn/lib/目录下。

再次执行

能够正确进入到hive的命令行,此时去查看MySQL的hive数据库,看都生成了那些表来存储元数据

通过dbs表能够看到实际上Hive对应的数据存储目录是我的hdfs集群

简单SQL语句操作

查看HDFS

通过MySQL确认元数据

This article is from the blog “闷葫芦的世界”. Please keep this source attribution: http://luchunli.blog.51cto.com/2368057/1693817

Download Hive: http://hive.apache.org/index.html

Hive is a data warehouse tool built on Hadoop. It provides SQL querying and translates SQL statements into MapReduce jobs that process data stored in HDFS.

MySQL installation

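Hive can run in three modes:

1. Embedded mode: by default, Hive keeps its metadata in an embedded Derby database. Only one session can connect at a time, so this mode is only suitable for simple testing.

2. Local mode: the metastore lives in a separate database on the local machine (MySQL, for example). This supports multiple sessions and multiple users.

3. Remote mode: with many Hive clients, installing a MySQL service on every client is redundant and wasteful. You can go one step further and move MySQL to its own machine, so that the metadata is kept in a remote, standalone MySQL service.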
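The sketch below makes the difference between the three modes concrete. It prints the `javax.jdo.option.ConnectionURL` each mode would use. The Derby URL is the default from hive-default.xml, and the MySQL URLs follow the form this article uses in hive-site.xml. The function `metastore_url` and its host argument are hypothetical and serve only as an illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the javax.jdo.option.ConnectionURL a given run
# mode would use. The optional host argument is only used in remote mode.
# Usage: metastore_url embedded|local|remote [mysql_host]
metastore_url() {
  local mode=$1 host=${2:-localhost}
  case "$mode" in
    embedded)
      # Default: embedded Derby, one session at a time.
      echo 'jdbc:derby:;databaseName=metastore_db;create=true' ;;
    local)
      # MySQL on the same machine as the Hive client.
      echo 'jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true' ;;
    remote)
      # MySQL on a separate machine shared by all Hive clients.
      echo "jdbc:mysql://${host}:3306/hive?createDatabaseIfNotExist=true" ;;
    *)
      echo "unknown mode: $mode" >&2
      return 1 ;;
  esac
}
```

For example, `metastore_url remote dbhost` points every client at a shared MySQL instance on `dbhost`, which is a placeholder name.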

1. Embedded mode

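If you run Hive as-is without any further configuration, it runs in embedded mode and stores its metadata in a Derby database. Metadata is data that describes other data, such as MySQL's information_schema database.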

For the problems with storing metadata in Derby, see: /article/4522514.html

The corresponding defaults in hive-default.xml:

```xml
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:derby:;databaseName=metastore_db;create=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>org.apache.derby.jdbc.EmbeddedDriver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>APP</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>mine</value>
    <description>password to use against metastore database</description>
  </property>
</configuration>
```

Note: Derby also has a network (client/server) mode. MySQL is far more common in real projects, though, so the local mode in this article is based on MySQL.
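The default URL has a practical consequence. `databaseName=metastore_db` is a relative name, so embedded Derby creates the `metastore_db` directory relative to the directory Hive was started from, unless `derby.system.home` is set. Starting Hive from another directory therefore gives you a new, empty metastore. The sketch below finds such leftover directories, assuming that default behavior. `find_derby_metastores` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list embedded-Derby metastore directories below a
# search root (default: $HOME). Each hit is typically left behind by a Hive
# session that was started from the hit's parent directory.
find_derby_metastores() {
  local root=${1:-$HOME}
  # -prune: report a metastore_db directory but do not descend into it.
  find "$root" -type d -name metastore_db -prune 2>/dev/null | sort
}
```

For example, `find_derby_metastores /home/hadoop` lists every `metastore_db` directory under that home directory.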

2. MySQL installation

MySQL version 5.6.17

```shell
[root@nnode lucl]# rpm -ivh MySQL-server-5.6.17-1.el6.x86_64.rpm
```

This fails because of a version conflict, so first uninstall the previously installed MySQL 5.1.71:

```shell
[root@nnode lucl]# yum -y remove mysql-libs-5.1.71*
```
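Before removing packages, it helps to see exactly which ones conflict. The sketch below assumes the conflicting package names start with `mysql-libs-5.1.`, as in the command above. `find_conflicting_mysql_libs` is a hypothetical filter meant to be fed from `rpm -qa`.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read package names on stdin (e.g. from `rpm -qa`)
# and print the ones from the old MySQL 5.1 client library package.
find_conflicting_mysql_libs() {
  # grep exits with 1 when nothing matches; treat "no conflict" as success.
  grep -E '^mysql-libs-5\.1\.' || true
}
```

`rpm -qa | find_conflicting_mysql_libs` should list the same package that the `yum -y remove` command above targets.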

After that, installing MySQL again succeeds.

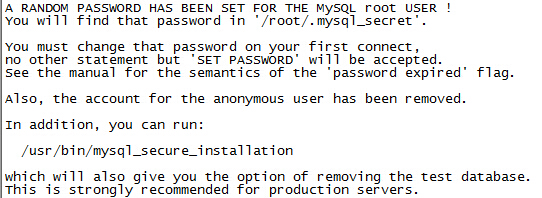

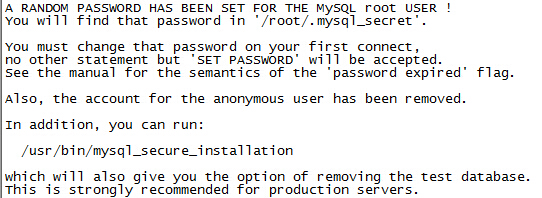

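```shell
[root@nnode lucl]# rpm -ivh MySQL-server-5.6.17-1.el6.x86_64.rpm
[root@nnode lucl]# rpm -ivh MySQL-client-5.6.17-1.el6.x86_64.rpm
```

Note: after MySQL is installed with rpm, a random root password is generated and written to a password file (/root/.mysql_secret, see the session below).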
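You can read the generated password from the file instead of copying it by hand. The sketch below assumes the `/root/.mysql_secret` layout shown in the session below: a `#` comment, then the password. The helper `read_mysql_secret` is hypothetical. It also accepts the password at the end of the comment line, in case your file puts it there.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the random root password from a .mysql_secret
# file. It takes the last whitespace-separated token of the last non-empty
# line, which works whether the password sits on its own line or at the end
# of the "# The random password ... (local time):" comment line.
read_mysql_secret() {
  local file=${1:-/root/.mysql_secret}
  grep -v '^[[:space:]]*$' "$file" | tail -n 1 | awk '{ print $NF }'
}
```

`read_mysql_secret` prints the password. You can then type it at the prompt of `mysql -uroot -p` instead of putting it on the command line.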

Directory layout after installation:

| Path | Purpose |
| --- | --- |
| /usr/bin | Client programs and scripts |
| /usr/sbin | The mysqld server |
| /var/lib/mysql | Data files |
| /usr/my.cnf | Configuration file |

4. Verify the installation

```shell
[root@nnode lucl]# service mysql start
Starting MySQL.. SUCCESS!
[root@nnode lucl]# service mysql status
SUCCESS! MySQL running (4564)
[root@nnode lucl]# cat /root/.mysql_secret
# The random password set for the root user at Tue Jan 19 15:20:09 2016 (local time):
IhuKPeHDw5Ro0tW5
[root@nnode lucl]# mysql -uroot -pIhuKPeHDw5Ro0tW5
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 1
Server version: 5.6.17

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;
ERROR 1820 (HY000): You must SET PASSWORD before executing this statement
mysql> set password=password("root");
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)

mysql> exit
Bye
[root@nnode lucl]# mysql -uroot -proot
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 2
Server version: 5.6.17 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| test               |
+--------------------+
4 rows in set (0.00 sec)

mysql> exit;
Bye
```
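The line `Warning: Using a password on the command line interface can be insecure.` appears because `-p<password>` ends up in the process list and the shell history. A common alternative is a client option file passed with `--defaults-extra-file`. The sketch below writes such a file so that only its owner can read it. The helper name and the file location are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a MySQL client option file so the password
# does not have to appear on the command line.
# Usage: write_mysql_client_cnf FILE USER PASSWORD
write_mysql_client_cnf() {
  local file=$1 user=$2 password=$3
  # Create the file with owner-only permissions before the secret goes in.
  ( umask 077 && : > "$file" ) || return 1
  chmod 600 "$file"   # also tightens a file that already existed
  cat > "$file" <<EOF
[client]
user=$user
password=$password
EOF
}
```

Usage: `write_mysql_client_cnf ~/.mysql-root.cnf root root`, then `mysql --defaults-extra-file=$HOME/.mysql-root.cnf`. Put the option-file option first on the command line.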

5. Edit the configuration file my.cnf

Note: this step is optional; the default configuration also works.

```ini
[mysql]
port=3306
socket=/var/lib/mysql/mysql.sock

[mysqld]
server-id=117
innodb_flush_log_at_trx_commit=2
port=3306
socket=/var/lib/mysql/mysql.sock
slave-skip-errors=all
lower_case_table_names=1
max_connections=300
innodb_flush_log_at_trx_commit=2
innodb_file_per_table=1
datadir=/var/lib/mysql

[mysqldump]
quick
max_allowed_packet = 16M
```

Note: `service mysql start` also starts the server through mysqld_safe, which runs it as the mysql user by default. The log directory log-bin must therefore be owned by user and group mysql:mysql.

```shell
[root@nnode mysql]# chown -R mysql:mysql log-bin/
```

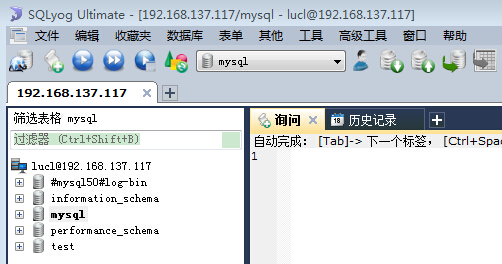
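The `[mysqld]` section above sets `innodb_flush_log_at_trx_commit=2` twice. That is harmless while both values agree, because the later setting of a repeated option is the one that takes effect. It becomes easy to miss once the values differ. The awk-based sketch below reports keys repeated within one section. `find_dup_cnf_keys` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "section key" for each key that occurs more
# than once inside the same [section] of a my.cnf-style file. Dashes and
# underscores in key names are treated as equivalent, as MySQL does.
find_dup_cnf_keys() {
  awk '
    /^[ \t]*[#;]/ || /^[ \t]*$/ { next }        # comments and blank lines
    /^[ \t]*\[/ {                                # [section] header
      section = $0
      sub(/^[ \t]*\[/, "", section)
      section = substr(section, 1, index(section, "]") - 1)
      next
    }
    {
      key = $0
      sub(/[ \t]*=.*/, "", key)                  # drop "= value"
      gsub(/^[ \t]+|[ \t]+$/, "", key)           # trim whitespace
      gsub(/-/, "_", key)                        # max-connections == max_connections
      if (++seen[section, key] == 2) print section, key
    }
  ' "$1"
}
```

Run against the file above, `find_dup_cnf_keys /usr/my.cnf` should print `mysqld innodb_flush_log_at_trx_commit`.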

6. Enable remote access

```shell
[root@nnode mysql]# mysql -uroot -proot
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 1
Server version: 5.6.17-log MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use mysql;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A

Database changed
mysql> select host, user, password, password_expired from user;
+-----------+------+-------------------------------------------+------------------+
| host      | user | password                                  | password_expired |
+-----------+------+-------------------------------------------+------------------+
| localhost | root | *81F5E21E35407D884A6CD4A731AEBFB6AF209E1B | N                |
| nnode     | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| 127.0.0.1 | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| ::1       | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
+-----------+------+-------------------------------------------+------------------+
4 rows in set (0.00 sec)

# Note: the host column shows which hosts may connect to this server; % means any host.
mysql> CREATE USER 'lucl'@'%' IDENTIFIED BY 'lucl';
Query OK, 0 rows affected (0.04 sec)

mysql> GRANT ALL PRIVILEGES ON *.* TO lucl@'%';
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)

mysql> select host, user, password, password_expired from user;
+-----------+------+-------------------------------------------+------------------+
| host      | user | password                                  | password_expired |
+-----------+------+-------------------------------------------+------------------+
| localhost | root | *81F5E21E35407D884A6CD4A731AEBFB6AF209E1B | N                |
| nnode     | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| 127.0.0.1 | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| ::1       | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| %         | lucl | *A5DC323CE3368879D65443454066316A01521C95 | N                |
+-----------+------+-------------------------------------------+------------------+
5 rows in set (0.00 sec)

mysql>
```
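The `host` column holds a pattern rather than a plain name. `%` matches any sequence of characters and `_` matches a single character, the same wildcards as SQL `LIKE`. That is why `'lucl'@'%'` accepts connections from anywhere. The bash sketch below models that matching rule. `host_matches` is hypothetical. It deliberately ignores netmask entries, case-insensitive host name comparison, and how MySQL chooses among several matching accounts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if CLIENT_HOST matches the MySQL account host
# pattern PATTERN. '%' matches any run of characters and '_' exactly one;
# every other character is matched literally (and case-sensitively here).
# Usage: host_matches CLIENT_HOST PATTERN
host_matches() {
  local client=$1 pattern=$2 glob='' ch i
  for (( i = 0; i < ${#pattern}; i++ )); do
    ch=${pattern:i:1}
    case "$ch" in
      %) glob+='*' ;;
      _) glob+='?' ;;
      *) glob+="[$ch]" ;;   # one-character bracket = literal match
    esac
  done
  [[ $client == $glob ]]    # unquoted right side: glob matching
}
```

For example, `host_matches 192.168.137.117 '192.168.137.%'` succeeds, while `host_matches nnode localhost` fails.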

This completes the MySQL installation and configuration.

7. Prepare the Hive user

In MySQL, create a database for Hive and the user that Hive will use to connect to MySQL.

```shell
[hadoop@nnode conf]$ mysql -uroot -proot
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 5
Server version: 5.6.17 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database hive;
Query OK, 1 row affected (0.01 sec)

mysql> CREATE USER 'hive'@'localhost' IDENTIFIED BY 'hive';
Query OK, 0 rows affected (0.01 sec)

mysql> CREATE USER 'hive'@'%' IDENTIFIED BY 'hive';
Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL PRIVILEGES ON hive.* TO hive@'localhost';
Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL PRIVILEGES ON hive.* TO hive@'%';
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)

mysql> exit
Bye
[hadoop@nnode conf]$
```
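You can keep the statements above as a script and replay them on another server. The sketch below only prints the SQL. It assumes the `hive` database, user, and password used in this article. The helper name is hypothetical, and the password must not contain a single quote.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SQL that prepares a MySQL database and
# accounts for the Hive metastore (same statements as the session above).
# Assumes the password contains no single quote.
# Usage: hive_metastore_sql [db] [user] [password] | mysql -uroot -p
hive_metastore_sql() {
  local db=${1:-hive} user=${2:-hive} password=${3:-hive} host
  echo "CREATE DATABASE ${db};"
  for host in localhost '%'; do
    echo "CREATE USER '${user}'@'${host}' IDENTIFIED BY '${password}';"
    echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}';"
  done
  echo "FLUSH PRIVILEGES;"
}
```

`hive_metastore_sql | mysql -uroot -p` applies the statements. The order differs slightly from the session above, because each account is granted right after it is created. The end result is the same.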

Check the privileges of the newly created hive user:

```shell
[hadoop@nnode conf]$ mysql -uhive -phive
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 8
Server version: 5.6.17 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show grants for hive@localhost;
+-------------------------------------------------------------------------------------------------------------+
| Grants for hive@localhost                                                                                   |
+-------------------------------------------------------------------------------------------------------------+
| GRANT USAGE ON *.* TO 'hive'@'localhost' IDENTIFIED BY PASSWORD '*4DF1D66463C18D44E3B001A8FB1BBFBEA13E27FC' |
| GRANT ALL PRIVILEGES ON `hive`.* TO 'hive'@'localhost'                                                      |
+-------------------------------------------------------------------------------------------------------------+
2 rows in set (0.00 sec)

mysql> show grants for hive@'%';
+-----------------------------------------------------------------------------------------------------+
| Grants for hive@%                                                                                   |
+-----------------------------------------------------------------------------------------------------+
| GRANT USAGE ON *.* TO 'hive'@'%' IDENTIFIED BY PASSWORD '*4DF1D66463C18D44E3B001A8FB1BBFBEA13E27FC' |
| GRANT ALL PRIVILEGES ON `hive`.* TO 'hive'@'%'                                                      |
+-----------------------------------------------------------------------------------------------------+
2 rows in set (0.00 sec)

mysql>
mysql> exit;
Bye
[hadoop@nnode conf]$
```

Hive environment configuration

1. Extract the archive

```shell
[hadoop@nnode lucl]$ tar -xzv -f apache-hive-1.2.0-bin.tar.gz
# rename the directory
[hadoop@nnode lucl]$ mv apache-hive-1.2.0-bin/ hive-1.2.0
```

2. Configure the Hive environment variables

```shell
[hadoop@nnode ~]$ vim .bash_profile
# Hive
export HIVE_HOME=/lucl/hive-1.2.0
export PATH=$HIVE_HOME/bin:$PATH
```
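Changes to `.bash_profile` only apply to new login shells, so run `source ~/.bash_profile` (or log in again) before you continue. The sketch below is a quick check that `$HIVE_HOME/bin` really ended up on the `PATH`. `path_has_dir` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if DIR is one of the entries of $PATH
# (a trailing slash on either side is ignored).
path_has_dir() {
  local dir=${1%/} entry IFS=:
  # With IFS=':' the unquoted $PATH splits into its individual entries.
  for entry in $PATH; do
    [[ ${entry%/} == "$dir" ]] && return 0
  done
  return 1
}
```

Usage: `path_has_dir "$HIVE_HOME/bin" && echo ok`.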

3. Configure hive-env.sh

```shell
[hadoop@nnode conf]$ cp hive-env.sh.template hive-env.sh
[hadoop@nnode conf]$ vim hive-env.sh
export JAVA_HOME=/lucl/jdk1.7.0_80
export HADOOP_HOME=/lucl/hadoop-2.6.0
export HIVE_HOME=/lucl/hive-1.2.0
export HIVE_CONF_DIR=/lucl/hive-1.2.0/conf
```
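A typo in one of these paths usually shows up later as a confusing startup error. The sketch below checks the variables after you source the file. `check_dir_vars` is a hypothetical helper that uses bash indirect expansion.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each variable NAME given, report it if it is
# unset/empty or does not point to an existing directory.
# Prints one line per problem and returns 1 if there was any.
check_dir_vars() {
  local name rc=0
  for name in "$@"; do
    if [[ -z ${!name:-} ]]; then          # ${!name}: value of the variable named $name
      echo "$name is not set"
      rc=1
    elif [[ ! -d ${!name} ]]; then
      echo "$name=${!name} is not a directory"
      rc=1
    fi
  done
  return "$rc"
}
```

`source $HIVE_HOME/conf/hive-env.sh && check_dir_vars JAVA_HOME HADOOP_HOME HIVE_HOME HIVE_CONF_DIR` prints nothing and returns 0 when all four variables point to existing directories.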

4. Configure hive-site.xml

```shell
[hadoop@nnode conf]$ cp hive-default.xml.template hive-site.xml
[hadoop@nnode conf]$ cat hive-site.xml
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- WARNING!!! This file is auto generated for documentation purposes ONLY! -->
  <!-- WARNING!!! Any changes you make to this file will be ignored by Hive. -->
  <!-- WARNING!!! You must make your changes in hive-site.xml instead. -->
  <!-- Hive Execution Parameters -->
  <!-- empty -->
</configuration>
[hadoop@nnode conf]$
```

Then configure hive-site.xml as follows:

```xml
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://nnode:3306/hive?createDatabaseIfNotExist=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
    <description>password to use against metastore database</description>
  </property>
</configuration>
```
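When several machines need the same hive-site.xml, generating it is less error-prone than editing it by hand. The sketch below writes the four properties above from a here-document. The function name and its arguments are hypothetical. Values are inserted unescaped, so they must not contain XML special characters. In particular, if you append more JDBC parameters to the URL, write each `&` between them as `&amp;` inside the XML.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal hive-site.xml that points the
# metastore at MySQL (same four properties as above, without descriptions).
# Values are not XML-escaped; they must not contain <, > or &.
# Usage: write_hive_site FILE MYSQL_HOST USER PASSWORD
write_hive_site() {
  local file=$1 host=$2 user=$3 password=$4
  cat > "$file" <<EOF
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://${host}:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>${user}</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>${password}</value>
  </property>
</configuration>
EOF
}
```

`write_hive_site $HIVE_HOME/conf/hive-site.xml nnode hive hive` reproduces the settings above.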

5. Copy the MySQL JDBC driver jar into Hive's lib directory

```shell
[hadoop@nnode conf]$ cp /mnt/hgfs/Share/mysql-connector-java-5.1.27.jar $HIVE_HOME/lib/
```
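Without the driver jar, Hive cannot load `com.mysql.jdbc.Driver`. The sketch below is a quick check that assumes the jar keeps its usual `mysql-connector-java-<version>.jar` file name. `find_mysql_connector` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list MySQL Connector/J jars in a Hive lib directory
# (default: $HIVE_HOME/lib); returns 1 if none is found.
find_mysql_connector() {
  local libdir=${1:-$HIVE_HOME/lib} jar found=1
  for jar in "$libdir"/mysql-connector-java-*.jar; do
    [[ -e $jar ]] || continue        # the glob matched nothing
    echo "${jar##*/}"                # print the file name only
    found=0
  done
  return "$found"
}
```

After the copy above, `find_mysql_connector $HIVE_HOME/lib` should print `mysql-connector-java-5.1.27.jar`.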

6. Run bin/hive

```shell
[hadoop@nnode hive-1.2.0]$ bin/hive

Logging initialized using configuration in jar:file:/usr/local/hive1.2.0/lib/hive-common-1.2.0.jar!/hive-log4j.properties
[ERROR] Terminal initialization failed; falling back to unsupported
java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected
    at jline.TerminalFactory.create(TerminalFactory.java:101)
    at jline.TerminalFactory.get(TerminalFactory.java:158)
    at jline.console.ConsoleReader.<init>(ConsoleReader.java:229)
    at jline.console.ConsoleReader.<init>(ConsoleReader.java:221)
    at jline.console.ConsoleReader.<init>(ConsoleReader.java:209)
    at org.apache.hadoop.hive.cli.CliDriver.setupConsoleReader(CliDriver.java:787)
    at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:721)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:681)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:621)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
```

Cause of the error

Hive has upgraded to JLine 2, but jline 0.9.94 still exists in the Hadoop lib directory.

Solution

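Rename the old jline-0.9.94.jar in hadoop-2.6.0/share/hadoop/yarn/lib/ to get it out of the way. Then copy lib/jline-2.12.jar from the Hive installation directory into that directory.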

```shell
[hadoop@nnode lucl]$ ll hive-1.2.0/lib/|grep jline
-rw-rw-r-- 1 hadoop hadoop 213854 Apr 30 2015 jline-2.12.jar
[hadoop@nnode lucl]$ ll hadoop-2.6.0/share/hadoop/yarn/lib/|grep jline
-rw-r--r-- 1 hadoop hadoop 87325 Nov 14 2014 jline-0.9.94.jar
[hadoop@nnode lucl]$ mv hadoop-2.6.0/share/hadoop/yarn/lib/jline-0.9.94.jar hadoop-2.6.0/share/hadoop/yarn/lib/jline-0.9.94.jar.backup
[hadoop@nnode lucl]$ cp hive-1.2.0/lib/jline-2.12.jar hadoop-2.6.0/share/hadoop/yarn/lib/
[hadoop@nnode lucl]$ ll hadoop-2.6.0/share/hadoop/yarn/lib/|grep jline
-rw-r--r-- 1 hadoop hadoop 87325 Nov 14 2014 jline-0.9.94.jar.backup
-rw-rw-r-- 1 hadoop
```
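The fix above comes down to two steps. First, move the old jline 0.x jar in Hadoop's YARN lib directory out of the way. Then copy in Hive's jline 2.x jar. Renaming the old jar with a `.backup` suffix presumably works because it no longer matches the `*.jar` files picked up on the classpath. The sketch below performs both steps, assuming the directory layout used here. `swap_jline` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rename jline 0.x jars in a Hadoop lib directory to
# *.backup and copy in the jline 2.x jar shipped in Hive's lib directory.
# Usage: swap_jline HIVE_LIB_DIR YARN_LIB_DIR
swap_jline() {
  local hive_lib=$1 yarn_lib=$2 jar
  local new_jars=( "$hive_lib"/jline-2.*.jar )
  if [[ ! -e ${new_jars[0]} ]]; then
    echo "no jline 2.x jar in $hive_lib" >&2
    return 1
  fi
  for jar in "$yarn_lib"/jline-0.*.jar; do
    [[ -e $jar ]] || continue        # no old jar present
    mv "$jar" "$jar.backup"          # keep the old jar, as in the session above
  done
  cp "${new_jars[0]}" "$yarn_lib"/
}
```

From `/lucl`, this corresponds to `swap_jline hive-1.2.0/lib hadoop-2.6.0/share/hadoop/yarn/lib`.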

Run it again:

```shell
[hadoop@nnode lucl]$ hive-1.2.0/bin/hive

Logging initialized using configuration in jar:file:/usr/local/hive1.2.0/lib/hive-common-1.2.0.jar!/hive-log4j.properties
hive> exit;
[hadoop@nnode lucl]$
```

The Hive command line now starts correctly. Next, look at the hive database in MySQL to see which tables were created to store the metadata:

```shell
[root@nnode ~]# mysql -uhive -phive
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 25
Server version: 5.6.17 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use hive;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A

Database changed
mysql> show tables;
+---------------------------+
| Tables_in_hive            |
+---------------------------+
| bucketing_cols            |
| cds                       |
| columns_v2                |
| database_params           |
| dbs                       |
| func_ru                   |
| funcs                     |
| global_privs              |
| part_col_stats            |
| partition_key_vals        |
| partition_keys            |
| partition_params          |
| partitions                |
| roles                     |
| sd_params                 |
| sds                       |
| sequence_table            |
| serde_params              |
| serdes                    |
| skewed_col_names          |
| skewed_col_value_loc_map  |
| skewed_string_list        |
| skewed_string_list_values |
| skewed_values             |
| sort_cols                 |
| tab_col_stats             |
| table_params              |
| tbls                      |
| version                   |
+---------------------------+
29 rows in set (0.00 sec)

mysql> select * from dbs;
+-------+-----------------------+------------------------------------+---------+------------+------------+
| DB_ID | DESC                  | DB_LOCATION_URI                    | NAME    | OWNER_NAME | OWNER_TYPE |
+-------+-----------------------+------------------------------------+---------+------------+------------+
|     1 | Default Hive database | hdfs://cluster/user/hive/warehouse | default | public     | ROLE       |
+-------+-----------------------+------------------------------------+---------+------------+------------+
1 row in set (0.00 sec)

mysql> select * from tbls;
Empty set (0.00 sec)

mysql> select * from version;
+--------+----------------+-----------------------------------------+
| VER_ID | SCHEMA_VERSION | VERSION_COMMENT                         |
+--------+----------------+-----------------------------------------+
|      1 | 1.2.0          | Set by MetaStore hadoop@192.168.137.117 |
+--------+----------------+-----------------------------------------+
1 row in set (0.00 sec)

mysql> exit;
Bye
[root@nnode ~]#
```

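The dbs table shows that Hive's data storage directory is on my HDFS cluster (hdfs://cluster/user/hive/warehouse). At this point the directory does not exist yet: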

```shell
[hadoop@nnode local]$ hdfs dfs -ls -R hdfs://cluster/user/hive
ls: `hdfs://cluster/user/hive': No such file or directory
[hadoop@nnode local]$
```
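`hdfs://cluster/user/hive/warehouse` consists of the scheme, the authority `cluster`, and the path. Because `cluster` is not a `host:port` pair, it is presumably the nameservice ID of an HA HDFS setup. The later listing uses only the path `/user/hive/`. That works if `fs.defaultFS` points at the same file system, which appears to be the case here. The sketch below splits such a URI with bash parameter expansion. The function is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split "scheme://authority/path" into scheme,
# authority and path (tab-separated) using bash parameter expansion.
split_fs_uri() {
  local uri=$1 scheme rest authority path=/
  scheme=${uri%%://*}               # text before "://"
  rest=${uri#*://}                  # text after "://"
  authority=${rest%%/*}             # up to the first "/"
  [[ $rest == */* ]] && path=/${rest#*/}
  printf '%s\t%s\t%s\n' "$scheme" "$authority" "$path"
}
```

For example, `split_fs_uri hdfs://cluster/user/hive/warehouse | cut -f3` prints `/user/hive/warehouse`.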

Simple SQL operations

```shell
[hadoop@nnode local]$ hive1.2.0/bin/hive

Logging initialized using configuration in jar:file:/usr/local/hive1.2.0/lib/hive-common-1.2.0.jar!/hive-log4j.properties
hive> create table student(sno int, sname varchar(50));
OK
Time taken: 4.054 seconds
hive> show tables;
OK
student
Time taken: 0.772 seconds, Fetched: 1 row(s)
# surprisingly, even the insert runs as a MapReduce job
hive> insert into student(sno, sname) values(100, 'zhangsan');
Query ID = hadoop_20151203234553_5f722629-2322-486b-97f2-f5b803e950ee
Total jobs = 3
Launching Job 1 out of 3
Number of reduce tasks is set to 0 since there's no reduce operator
Starting Job = job_1449147095699_0001, Tracking URL = http://nnode:8088/proxy/application_1449147095699_0001/
Kill Command = /usr/local/hadoop2.6.0/bin/hadoop job -kill job_1449147095699_0001
Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0
2015-12-03 23:47:07,405 Stage-1 map = 0%, reduce = 0%
2015-12-03 23:48:07,554 Stage-1 map = 0%, reduce = 0%
2015-12-03 23:48:51,645 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.01 sec
MapReduce Total cumulative CPU time: 5 seconds 10 msec
Ended Job = job_1449147095699_0001
Stage-4 is selected by condition resolver.
Stage-3 is filtered out by condition resolver.
Stage-5 is filtered out by condition resolver.
Moving data to: hdfs://cluster/user/hive/warehouse/student/.hive-staging_hive_2015-12-03_23-45-53_234_4559428877079063192-1/-ext-10000
Loading data to table default.student
Table default.student stats: [numFiles=1, numRows=1, totalSize=13, rawDataSize=12]
MapReduce Jobs Launched:
Stage-Stage-1: Map: 1 Cumulative CPU: 5.63 sec HDFS Read: 3697 HDFS Write: 84 SUCCESS
Total MapReduce CPU Time Spent: 5 seconds 630 msec
OK
Time taken: 183.786 seconds
# query the data
hive> select * from student;
OK
100 zhangsan
Time taken: 0.192 seconds, Fetched: 1 row(s)
hive> select sno from student;
OK
100
Time taken: 0.186 seconds, Fetched: 1 row(s)
hive> exit;
[hadoop@nnode local]$
```

Checking HDFS

```shell
# list the directory
[hadoop@nnode local]$ hdfs dfs -ls -R /user/hive/
drwxrw-rw- - hadoop hadoop 0 2015-12-03 23:42 /user/hive/warehouse
drwxrw-rw- - hadoop hadoop 0 2015-12-03 23:48 /user/hive/warehouse/student
-rwxrw-rw- 2 hadoop hadoop 13 2015-12-03 23:48 /user/hive/warehouse/student/000000_0
# view the file
[hadoop@nnode local]$ hdfs dfs -text /user/hive/warehouse/student/000000_0
100zhangsan
[hadoop@nnode local]$
```
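The file looks like `100zhangsan` because Hive's default text format separates fields with the invisible control character `\001` (Ctrl-A). The same delimiter accounts for `totalSize=13` in the insert log: 3 bytes for `100`, 1 for the delimiter, 8 for `zhangsan` and 1 for the newline. The sketch below rebuilds that line locally, without HDFS, so the delimiter can be made visible. `hive_text_row` is a hypothetical helper. On the cluster, piping `hdfs dfs -text ...` through `cat -v` should show the same `^A`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rebuild one row of a Hive text table, with fields
# joined by the default delimiter \001 (Ctrl-A) and a trailing newline.
hive_text_row() {
  local first=1 field
  for field in "$@"; do
    (( first )) || printf '\001'     # delimiter before every field but the first
    printf '%s' "$field"
    first=0
  done
  printf '\n'
}
```

`hive_text_row 100 zhangsan | cat -v` prints `100^Azhangsan`, and `hive_text_row 100 zhangsan | wc -c` counts 13 bytes, matching the file size in the listing above.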

Confirming the metadata in MySQL

```shell
# table information
mysql> SELECT tbl_id, tbl_name, tbl_type, create_time, db_id, owner, sd_id from tbls;
+--------+----------+---------------+-------------+-------+--------+-------+
| tbl_id | tbl_name | tbl_type      | create_time | db_id | owner  | sd_id |
+--------+----------+---------------+-------------+-------+--------+-------+
|      1 | student  | MANAGED_TABLE |  1449157379 |     1 | hadoop |     1 |
+--------+----------+---------------+-------------+-------+--------+-------+
1 row in set (0.00 sec)

# storage information
mysql> SELECT sd_id, input_format, output_format, location from sds;
+-------+------------------------------------------+------------------------------------------------------------+--------------------------------------------+
| sd_id | input_format                             | output_format                                              | location                                   |
+-------+------------------------------------------+------------------------------------------------------------+--------------------------------------------+
|     1 | org.apache.hadoop.mapred.TextInputFormat | org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat | hdfs://cluster/user/hive/warehouse/student |
+-------+------------------------------------------+------------------------------------------------------------+--------------------------------------------+
1 row in set (0.00 sec)

mysql>
```
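`create_time` in `tbls` is a Unix timestamp in seconds. The sketch below converts it, assuming GNU `date` syntax. In UTC+8, which this cluster appears to use, the result lines up with the 23:42 time of the warehouse directory in the HDFS listing. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a metastore create_time (seconds since the
# Unix epoch) into a readable timestamp in the given time zone.
# Requires GNU date (-d @SECONDS).
metastore_time() {
  local epoch=$1 tz=${2:-UTC}
  TZ=$tz date -d "@$epoch" '+%Y-%m-%d %H:%M:%S'
}
```

`metastore_time 1449157379` prints `2015-12-03 15:42:59`. `metastore_time 1449157379 Asia/Shanghai` prints `2015-12-03 23:42:59`, the minute shown for `/user/hive/warehouse` in the HDFS listing.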

