Scraping Movies of Every Genre from Tencent Video

2015-06-28 20:18


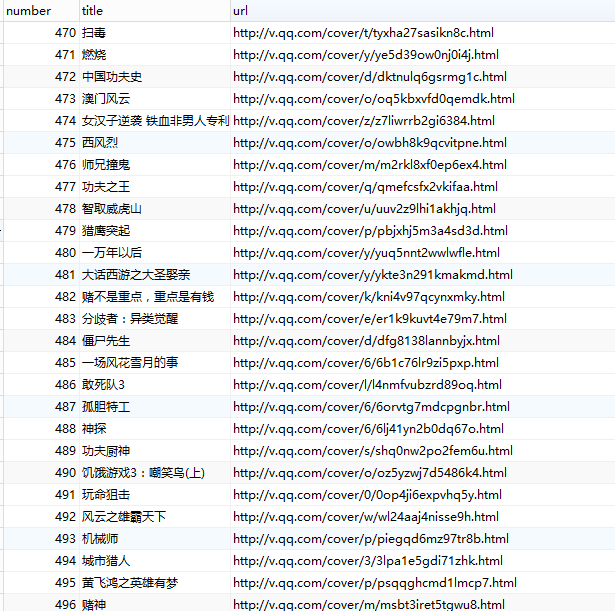

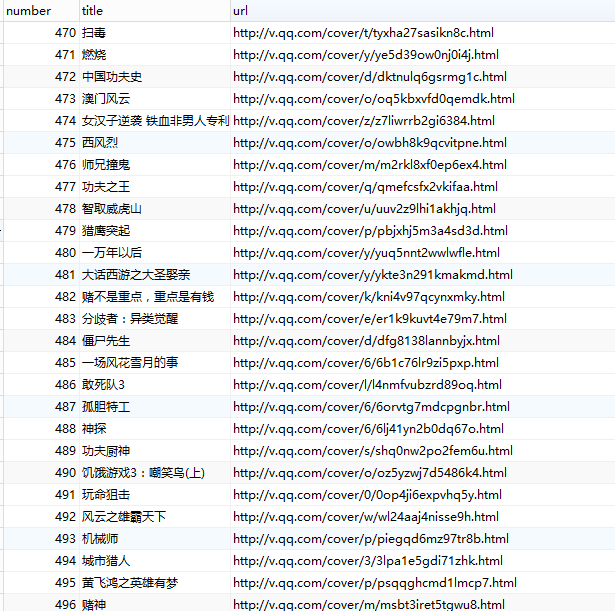

Scrape the Tencent Video movie listings and store them in a database.


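#coding: utf-8
import re
import urllib2
from bs4 import BeautifulSoup
import time
import MySQLdb
import sys

# Python 2 only: make utf-8 the default codec for implicit str/unicode conversion
reload(sys)
sys.setdefaultencoding('utf8')

NUM = 0          # global: running count of movies
m_type = u''     # global: current movie genre
m_site = u'qq'   # global: source site
movieStore = []  # collected movie rows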

# Fetch the page content for the given URL
def getHtml(url):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'}
    timeout = 30
    req = urllib2.Request(url, None, headers)
    response = urllib2.urlopen(req, None, timeout)
    return response.read()

# Extract the genres from the genre list page; returns {genre title: list URL}
def getTags(html):
    global m_type
    tagsURL = {}  # defined up front so the return below works even when nothing matches
    soup = BeautifulSoup(html)
    tags_all = soup.find_all('ul', {'class': 'clearfix _group', 'gname': 'mi_type'})
    # Sample link:
    # <a _hot="tag.sub" class="_gtag _hotkey" href="http://v.qq.com/list/1_0_-1_-1_1_0_0_20_0_-1_0.html" title="动作" tvalue="0">动作</a>
    reTags = r'<a _hot=\"tag\.sub\" class=\"_gtag _hotkey\" href=\"(.+?)\" title=\"(.+?)\" tvalue=\"(.+?)\">.+?</a>'
    pattern = re.compile(reTags, re.DOTALL)
    tags = pattern.findall(str(tags_all[0]))
    if tags:
        for tag in tags:
            tagURL = tag[0].decode('utf-8')
            m_type = tag[1].decode('utf-8')
            tagsURL[m_type] = tagURL
    else:
        print "Not Found"
    return tagsURL

# Get the number of pages for one genre
def getPages(tagUrl):
    tag_html = getHtml(tagUrl)
    soup = BeautifulSoup(tag_html)  # parse the genre page HTML
    # Pager container: <div class="mod_pagenav" id="pager">
    div_page = soup.find_all('div', {'class': 'mod_pagenav', 'id': 'pager'})
    # Sample page link:
    # <a _hot="movie.page2." class="c_txt6" href="http://v.qq.com/list/1_18_-1_-1_1_0_1_20_0_-1_0_-1.html" title="2"><span>2</span></a>
    re_pages = r'<a _hot=.+?><span>(.+?)</span></a>'
    p = re.compile(re_pages, re.DOTALL)
    pages = p.findall(str(div_page[0]))
    if len(pages) > 1:
        return pages[-2]  # second-to-last link text is taken as the last page number
    else:
        return 1

# Get the movie list from one listing page
def getMovieList(html):
    soup = BeautifulSoup(html)
    # Movie blocks: <ul class="mod_list_pic_130">
    divs = soup.find_all('ul', {'class': 'mod_list_pic_130'})
    for divHtml in divs:
        divHtml = str(divHtml).replace('\n', '')
        getMovie(divHtml)

# Extract each movie's URL and title from one <ul> block and append a row to movieStore
def getMovie(html):
    global NUM
    global m_type
    global m_site
    reMovie = r'<li><a _hot=\"movie\.image\.link\.1\.\" class=\"mod_poster_130\" href=\"(.+?)\" target=\"_blank\" title=\"(.+?)\">.+?</li>'
    p = re.compile(reMovie, re.DOTALL)
    movies = p.findall(html)
    if movies:
        for movie in movies:
            NUM += 1
            # Row layout: number, title, url, type
            eachMovie = [NUM, movie[1], movie[0], m_type]
            movieStore.append(eachMovie)

# Insert the rows into the database. Create the table yourself; its columns are: number, title, url, type
def db_insert(insert_list):
    try:
        conn = MySQLdb.connect(host="127.0.0.1", user="root", passwd="meimei1118", db="ctdata", charset='utf8')
        cursor = conn.cursor()
        # Clear the table and reset the auto-increment counter before a fresh load
        cursor.execute('delete from movies')
        cursor.execute('alter table movies AUTO_INCREMENT=1')
        cursor.executemany("INSERT INTO movies(number,title,url,type) VALUES(%s, %s, %s,%s)", insert_list)
        conn.commit()
        cursor.close()
        conn.close()
    except MySQLdb.Error as e:
        print "Mysql Error %d: %s" % (e.args[0], e.args[1])

if __name__ == "__main__":
    url = "http://v.qq.com/list/1_-1_-1_-1_1_0_0_20_0_-1_0.html"
    html = getHtml(url)
    tagUrls = getTags(html)
    for url in tagUrls.items():
        # url is a (genre title, list URL) pair. Set the global genre here;
        # otherwise every row keeps the last genre parsed by getTags.
        m_type = url[0]
        maxPage = int(getPages(str(url[1]).encode('utf-8')))
        print maxPage
        # Only the first 5 pages of each genre are fetched here; maxPage is just printed
        for x in range(0, 5):
            # Sample page URL: http://v.qq.com/list/1_18_-1_-1_1_0_0_20_0_-1_0_-1.html
            m_url = str(url[1]).replace('0_20_0_-1_0_-1.html', '')
            movie_url = "%s%d_20_0_-1_0_-1.html" % (m_url, x)
            movie_html = getHtml(movie_url.encode('utf-8'))
            getMovieList(movie_html)
            time.sleep(10)  # pause between page requests
    db_insert(movieStore)

Storing the data in MySQL:
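As a rough illustration of the storage step, here is a minimal sketch of the clear-then-bulk-insert pattern with a parameterized query. It is written for Python 3 and uses the standard-library `sqlite3` module as a stand-in so that it runs without a MySQL server. The helper name `store_movies`, the in-memory database, and the sample rows are all assumptions made for the example; note that `sqlite3` uses `?` placeholders where MySQLdb uses `%s`.

```python
import sqlite3


def store_movies(conn, rows):
    """Replace the contents of the movies table with rows (hypothetical helper)."""
    cur = conn.cursor()
    cur.execute(
        "CREATE TABLE IF NOT EXISTS movies ("
        "number INTEGER, title TEXT, url TEXT, type TEXT)"
    )
    # Clear old data, then insert every row with one parameterized statement
    cur.execute("DELETE FROM movies")
    cur.executemany(
        "INSERT INTO movies(number, title, url, type) VALUES (?, ?, ?, ?)",
        rows,
    )
    conn.commit()
    cur.close()


# In-memory database and made-up rows, used only for this illustration
conn = sqlite3.connect(":memory:")
sample_rows = [
    (1, "Movie A", "http://example.com/a.html", "action"),
    (2, "Movie B", "http://example.com/b.html", "comedy"),
]
store_movies(conn, sample_rows)
stored = conn.execute(
    "SELECT number, title, type FROM movies ORDER BY number"
).fetchall()
print(stored)
```

Passing the values separately from the SQL text lets the driver handle quoting, which matters here because movie titles can contain quotes.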

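#coding: utf-8
import re
import urllib2
import datetime
import Queue
from bs4 import BeautifulSoup
import time
import MySQLdb
import sys

# Python 2 only: make utf-8 the default codec for implicit str/unicode conversion
reload(sys)
sys.setdefaultencoding('utf8')

NUM = 0          # global: running count of movies
m_type = u''     # global: current movie genre
m_site = u'qq'   # global: source site
movieStore = []  # collected movie rows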

# Fetch the page content for the given URL
def getHtml(url):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'}
    timeout = 30
    req = urllib2.Request(url, None, headers)
    response = urllib2.urlopen(req, None, timeout)
    return response.read()

# Extract the genres from the genre list page; returns {genre title: list URL}
def getTags(html):
    global m_type
    tagsURL = {}  # defined up front so the return below works even when nothing matches
    soup = BeautifulSoup(html)
    tags_all = soup.find_all('ul', {'class': 'clearfix _group', 'gname': 'mi_type'})
    # Sample link:
    # <a _hot="tag.sub" class="_gtag _hotkey" href="http://v.qq.com/list/1_0_-1_-1_1_0_0_20_0_-1_0.html" title="动作" tvalue="0">动作</a>
    reTags = r'<a _hot=\"tag\.sub\" class=\"_gtag _hotkey\" href=\"(.+?)\" title=\"(.+?)\" tvalue=\"(.+?)\">.+?</a>'
    pattern = re.compile(reTags, re.DOTALL)
    tags = pattern.findall(str(tags_all[0]))
    if tags:
        for tag in tags:
            tagURL = tag[0].decode('utf-8')
            m_type = tag[1].decode('utf-8')
            tagsURL[m_type] = tagURL
    else:
        print "Not Found"
    return tagsURL

# Get the number of pages for one genre
def getPages(tagUrl):
    tag_html = getHtml(tagUrl)
    soup = BeautifulSoup(tag_html)  # parse the genre page HTML
    # Pager container: <div class="mod_pagenav" id="pager">
    div_page = soup.find_all('div', {'class': 'mod_pagenav', 'id': 'pager'})
    # Sample page link:
    # <a _hot="movie.page2." class="c_txt6" href="http://v.qq.com/list/1_18_-1_-1_1_0_1_20_0_-1_0_-1.html" title="2"><span>2</span></a>
    re_pages = r'<a _hot=.+?><span>(.+?)</span></a>'
    p = re.compile(re_pages, re.DOTALL)
    pages = p.findall(str(div_page[0]))
    if len(pages) > 1:
        return pages[-2]  # second-to-last link text is taken as the last page number
    else:
        return 1

# Get the movie list from one listing page
def getMovieList(html):
    soup = BeautifulSoup(html)
    # Movie blocks: <ul class="mod_list_pic_130">
    divs = soup.find_all('ul', {'class': 'mod_list_pic_130'})
    for divHtml in divs:
        divHtml = str(divHtml).replace('\n', '')
        getMovie(divHtml)

# Extract each movie from one <ul> block and insert it into MySQL right away.
# Relies on the module-level conn and cursor opened in the __main__ block.
def getMovie(html):
    global NUM
    global m_type
    global m_site
    reMovie = r'<li><a _hot=\"movie\.image\.link\.1\.\" class=\"mod_poster_130\" href=\"(.+?)\" target=\"_blank\" title=\"(.+?)\">.+?</li>'
    p = re.compile(reMovie, re.DOTALL)
    movies = p.findall(html)
    if movies:
        for movie in movies:
            NUM += 1
            # Row layout: number, title, url, time, type
            eachMovie = [NUM, movie[1], movie[0], datetime.datetime.now(), m_type]
            cursor.execute("INSERT INTO movies(number,title,url,time, type) VALUES(%s, %s, %s,%s,%s)", eachMovie)
            conn.commit()  # commit per row, so a crash keeps what was already scraped

# Bulk insert kept from the first version (not called in this script).
# Create the table yourself; its columns are: number, title, url, type
def db_insert(insert_list):
    try:
        conn = MySQLdb.connect(host="127.0.0.1", user="root", passwd="meimei1118", db="ctdata", charset='utf8')
        cursor = conn.cursor()
        cursor.execute('delete from movies')
        cursor.execute('alter table movies AUTO_INCREMENT=1')
        cursor.executemany("INSERT INTO movies(number,title,url,type) VALUES(%s, %s, %s,%s)", insert_list)
        conn.commit()
        cursor.close()
        conn.close()
    except MySQLdb.Error as e:
        print "Mysql Error %d: %s" % (e.args[0], e.args[1])

if __name__ == "__main__":
    url = "http://v.qq.com/list/1_-1_-1_-1_1_0_0_20_0_-1_0.html"
    html = getHtml(url)
    tagUrls = getTags(html)
    try:
        # conn and cursor are module-level names, so getMovie can use them
        conn = MySQLdb.connect(host="127.0.0.1", user="root", passwd="meimei1118", db="ctdata", charset='utf8')
        cursor = conn.cursor()
        cursor.execute('delete from movies')
        for url in tagUrls.items():
            # url is a (genre title, list URL) pair. Set the global genre here;
            # otherwise every row keeps the last genre parsed by getTags.
            m_type = url[0]
            maxPage = int(getPages(str(url[1]).encode('utf-8')))
            print maxPage
            for x in range(0, maxPage):
                # Sample page URL: http://v.qq.com/list/1_18_-1_-1_1_0_0_20_0_-1_0_-1.html
                m_url = str(url[1]).replace('0_20_0_-1_0_-1.html', '')
                movie_url = "%s%d_20_0_-1_0_-1.html" % (m_url, x)
                movie_html = getHtml(movie_url.encode('utf-8'))
                getMovieList(movie_html)
                time.sleep(10)  # pause between page requests
        print u"完成..."  # "Done..."
        cursor.close()
        conn.close()
    except MySQLdb.Error as e:
        print "Mysql Error %d: %s" % (e.args[0], e.args[1])
    #db_insert(movieStore)

The program above is then rewritten in an object-oriented style, and the part that fetches the movie lists is made multithreaded.

The code is split into two parts: a module of helper functions, methods, and the object-oriented rewrite.
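The one-thread-per-genre idea can be sketched independently of the scraping details. The following is a minimal Python 3 sketch, not the code of this post: the class name `GenreWorker`, the `fake_fetch_page` stand-in for the network fetch, and the lock around the shared list are all assumptions. Unlike the scripts here, it passes the genre to each worker explicitly instead of reading it from a global.

```python
import threading


class GenreWorker(threading.Thread):
    """One thread per genre; appends rows to a shared list under a lock."""

    def __init__(self, genre, page_count, fetch_page, store, lock):
        threading.Thread.__init__(self)
        self.genre = genre
        self.page_count = page_count
        self.fetch_page = fetch_page  # callable(genre, page) -> list of (title, url)
        self.store = store
        self.lock = lock

    def run(self):
        for page in range(self.page_count):
            rows = [(title, url, self.genre)
                    for title, url in self.fetch_page(self.genre, page)]
            # Guard the shared list so concurrent workers do not interleave updates
            with self.lock:
                self.store.extend(rows)


def fake_fetch_page(genre, page):
    # Hypothetical stand-in for "download one listing page and parse it"
    return [("%s-%d" % (genre, page), "http://example.com/%s/%d" % (genre, page))]


store, lock = [], threading.Lock()
workers = [GenreWorker(genre, pages, fake_fetch_page, store, lock)
           for genre, pages in [("action", 3), ("comedy", 2)]]
for w in workers:
    w.start()
for w in workers:
    w.join()  # wait for every genre to finish before using the results
print(len(store))
```

Carrying the genre inside each worker keeps the rows correctly labelled even when several genres are scraped at the same time.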

methods.py

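#coding: utf-8
import re
import urllib2
import datetime
from bs4 import BeautifulSoup
import sys

# Python 2 only: make utf-8 the default codec for implicit str/unicode conversion
reload(sys)
sys.setdefaultencoding('utf8')

NUM = 0
m_type = u''     # global: current movie genre
m_site = u'qq'   # global: source site
movieStore = []  # collected movie rows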

# Fetch the page content for the given URL
def getHtml(url):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'}
    timeout = 20
    req = urllib2.Request(url, None, headers)
    response = urllib2.urlopen(req, None, timeout)
    return response.read()

# Extract the genres from the genre list page; returns {genre title: list URL}
def getTags(html):
    global m_type
    tagsURL = {}  # defined up front so the return below works even when nothing matches
    soup = BeautifulSoup(html)
    tags_all = soup.find_all('ul', {'class': 'clearfix _group', 'gname': 'mi_type'})
    # Sample link:
    # <a _hot="tag.sub" class="_gtag _hotkey" href="http://v.qq.com/list/1_0_-1_-1_1_0_0_20_0_-1_0.html" title="动作" tvalue="0">动作</a>
    reTags = r'<a _hot=\"tag\.sub\" class=\"_gtag _hotkey\" href=\"(.+?)\" title=\"(.+?)\" tvalue=\"(.+?)\">.+?</a>'
    pattern = re.compile(reTags, re.DOTALL)
    tags = pattern.findall(str(tags_all[0]))
    if tags:
        for tag in tags:
            tagURL = tag[0].decode('utf-8')
            m_type = tag[1].decode('utf-8')
            tagsURL[m_type] = tagURL
            print m_type
    else:
        print "Not Found"
    return tagsURL

# Get the number of pages for one genre
def getPages(tagUrl):
    tag_html = getHtml(tagUrl)
    soup = BeautifulSoup(tag_html)  # parse the genre page HTML
    # Pager container: <div class="mod_pagenav" id="pager">
    div_page = soup.find_all('div', {'class': 'mod_pagenav', 'id': 'pager'})
    # Sample page link:
    # <a _hot="movie.page2." class="c_txt6" href="http://v.qq.com/list/1_18_-1_-1_1_0_1_20_0_-1_0_-1.html" title="2"><span>2</span></a>
    re_pages = r'<a _hot=.+?><span>(.+?)</span></a>'
    p = re.compile(re_pages, re.DOTALL)
    pages = p.findall(str(div_page[0]))
    if len(pages) > 1:
        return pages[-2]  # second-to-last link text is taken as the last page number
    else:
        return 1

# Extract the movie details from one movie block and append a row to movieStore
def getMovie(html):
    global NUM
    global m_type
    global m_site
    reMovie = r'<li><a _hot=\"movie\.image\.link\.1\.\" class=\"mod_poster_130\" href=\"(.+?)\" target=\"_blank\" title=\"(.+?)\">.+?</li>'
    p = re.compile(reMovie, re.DOTALL)
    movies = p.findall(html)
    if movies:
        for movie in movies:
            # Row layout: title, url, type, time (the number column is left to the database)
            eachMovie = [movie[1], movie[0], m_type, datetime.datetime.now()]
            print eachMovie
            movieStore.append(eachMovie)

# Get the movie list from one listing page
def getMovieList(html):
    soup = BeautifulSoup(html)
    # Movie blocks: <ul class="mod_list_pic_130">
    divs = soup.find_all('ul', {'class': 'mod_list_pic_130'})
    for divHtml in divs:
        divHtml = str(divHtml).replace('\n', '')
        getMovie(divHtml)

movies.py

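#coding: utf-8
import re
import urllib2
import datetime
import threading
from methods import *
import time
import MySQLdb
import sys

# Python 2 only: make utf-8 the default codec for implicit str/unicode conversion
reload(sys)
sys.setdefaultencoding('utf8')

NUM = 0  # global: running count of movies
movieTypeList = []  # one [genre title, list URL, page count] entry per genre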

# Collect every genre with its list URL and page count into movieTypeList
def getMovieType():
    url = "http://v.qq.com/list/1_-1_-1_-1_1_0_0_20_0_-1_0.html"
    html = getHtml(url)
    tagUrls = getTags(html)
    for url in tagUrls.items():
        # url is a (genre title, list URL) pair
        maxPage = int(getPages(str(url[1]).encode('utf-8')))
        typeList = [url[0], str(url[1]).encode('utf-8'), maxPage]
        movieTypeList.append(typeList)

# Fetch the movie list of each genre in its own thread
class GetMovies(threading.Thread):
    def __init__(self, movie):
        threading.Thread.__init__(self)
        self.movie = movie  # [genre title, list URL, page count]

    def getMovies(self):
        maxPage = int(self.movie[2])
        # NOTE: this m_type is local and only labels the log line below;
        # getMovie in methods.py still reads methods.m_type, which holds
        # the last genre parsed by getTags.
        m_type = self.movie[0]
        for x in range(0, maxPage):
            # Sample page URL: http://v.qq.com/list/1_18_-1_-1_1_0_0_20_0_-1_0_-1.html
            m_url = str(self.movie[1]).replace('0_20_0_-1_0_-1.html', '')
            movie_url = "%s%d_20_0_-1_0_-1.html" % (m_url, x)
            movie_html = getHtml(movie_url.encode('utf-8'))
            getMovieList(movie_html)
            time.sleep(5)  # pause between page requests
        print m_type + u"完成..."  # "<genre> done..."

    def run(self):
        self.getMovies()

# Insert the rows into the database; create the table yourself
def db_insert(insert_list):
    try:
        conn = MySQLdb.connect(host="127.0.0.1", user="root", passwd="meimei1118", db="ctdata", charset='utf8')
        cursor = conn.cursor()
        # Clear the table and reset the auto-increment counter before a fresh load
        cursor.execute('delete from test')
        cursor.execute('alter table test AUTO_INCREMENT=1')
        cursor.executemany("INSERT INTO test(title,url,type,time) VALUES (%s,%s,%s,%s)", insert_list)
        conn.commit()
        cursor.close()
        conn.close()
    except MySQLdb.Error as e:
        print "Mysql Error %d: %s" % (e.args[0], e.args[1])

if __name__ == "__main__":
    getThreads = []
    getMovieType()
    start = time.time()
    # One thread per genre
    for i in range(len(movieTypeList)):
        t = GetMovies(movieTypeList[i])
        getThreads.append(t)
    for i in range(len(getThreads)):
        getThreads[i].start()
    # Wait for all genres to finish before writing to the database
    for i in range(len(getThreads)):
        getThreads[i].join()
    t1 = time.time() - start
    print t1  # seconds spent scraping
    t = time.time()
    db_insert(movieStore)
    t2 = time.time() - t
    print t2  # seconds spent on the database insert
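The scripts above pull each movie's link and title out of stringified HTML with a regular expression, which depends on the exact attribute order in the markup. As an alternative, here is a minimal Python 3 sketch that reads the same two attributes with the standard-library `html.parser`. The class name `PosterLinkParser` and the sample markup are assumptions modelled on the `mod_poster_130` links shown in the comments, not the real page.

```python
from html.parser import HTMLParser


class PosterLinkParser(HTMLParser):
    """Collects (href, title) from <a> tags whose class list contains css_class."""

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)  # attribute order in the markup no longer matters
        classes = (attr.get("class") or "").split()
        if self.css_class in classes and attr.get("href") and attr.get("title"):
            self.links.append((attr["href"], attr["title"]))


# Made-up markup for illustration; the attribute order differs between items
sample_html = (
    '<ul class="mod_list_pic_130">'
    '<li><a class="mod_poster_130" target="_blank" title="Movie A" '
    'href="http://example.com/a.html"><img src="a.jpg"></a></li>'
    '<li><a href="http://example.com/b.html" class="mod_poster_130" '
    'title="Movie B"></a></li>'
    '<li><a class="other" href="http://example.com/c.html" title="Skip"></a></li>'
    '</ul>'
)
parser = PosterLinkParser("mod_poster_130")
parser.feed(sample_html)
parser.close()
print(parser.links)
```

Because the attributes are looked up by name, a change in their order or an extra attribute on the link would not break the extraction.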
