hadoop1.1.2集群详细安装实例

2014-12-08 13:43

435 查看

摘要: 最近因为公司准备将数据迁移到Hbase上,所以不得不学习hadoop,我们尝试过将数据迁移到mongodb上面,周末在家里做了一个小试验,使用java+mongodb做爬虫抓取数据,我将mongodb安装在centos6.3虚拟机上,分配1G的内存,开始抓数据,半小时后,虚拟机内存吃光,没有办法解决内存问题,网上很多人说没有32G的内存不要玩mongodb,这样的说法很搞笑,难道我将1T的数据都放在内存上,不是坑么,所以说这是我们为什么选择Hbase的原因之一。

最近因为公司准备将数据迁移到Hbase上,所以不得不学习hadoop,我们尝试过将数据迁移到mongodb上面,周末在家里做了一个小试验,使用java+mongodb做爬虫抓取数据,我将mongodb安装在centos6.3虚拟机上,分配1G的内存,开始抓数据,半小时后,虚拟机内存吃光,没有办法解决内存问题,网上很多人说没有32G的内存不要玩mongodb,这样的说法很搞笑,难道我将1T的数据都放在内存上,不是坑么,所以说这是我们为什么选择Hbase的原因之一。

使用ifconfig 查看IP,可以使用vi /etc/sysconfig/network-scripts/ifcfg-eth0 ,ifcfg-eth0为网卡,根据自己的需求配置,如下:

DEVICE=eth0

BOOTPROTO=static

ONBOOT=yes

IPADDR=192.168.1.110

NETMASK=255.255.255.0

TYPE=Ethernet

GATEWAY=192.168.1.1

里面MAC地址不需要修改。

2、配置主机名network与DNS

使用vi /etc/sysconfig/network,修改主机名与网关,这里的网关可填可不填,如下

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=master

GATEWAY=192.168.1.1

使用vi /etc/resolv.conf ,添加DNS,这里可以添加你省内常用的DNS,什么8.8.8.8就算了,太慢了,添加DNS为了使用yum安装程序,如下

nameserver 202.106.0.20

nameserver 192.168.1.1

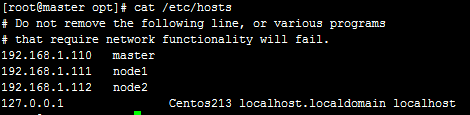

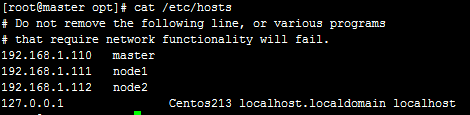

配置vi /etc/hosts 文件,如下

192.168.1.110 master

192.168.1.111 node1

192.168.1.112 node2

# yum -y install lrzsz gcc gcc-c++ libstdc++-devel ntp 安装配置文件

4、同步时间与地区

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

将地区配置为上海,再使用ntpdate更新时间,再使用crontab -e,添加如下

# 30 23 * * * /usr/sbin/ntpdate cn.pool.ntp.org ; hwclock -w

每天晚上23:30更新一下时间

5、关闭防火墙,SELINUX=disable

# service iptables stop

# vi /etc/selinux/config

SELINUX=disabled

将所有的软件包都放到/opt文件夹中

# chmod -R 777 /opt

# rpm -ivh jdk-7u45-linux-i586.rpm

[root@master opt]# vi /etc/profile.d/java_hadoop.sh

export JAVA_HOME=/usr/java/jdk1.7.0_45/

export PATH=$PATH:$JAVA_HOME/bin

[root@master opt]# source /etc/profile

[root@master opt]# echo $JAVA_HOME

/usr/java/jdk1.7.0_45/

2、添加hadoop用户,配置无ssh登录

# groupadd hadoop

# useradd hadoop -g hadoop

# su hadoop

$ ssh-keygen -t dsa -P '' -f /home/hadoop/.ssh/id_dsa

$ cp id_dsa.pub authorized_keys

$ chmod go-wx authorized_keys

[hadoop@master .ssh]$ ssh master

Last login: Sun Mar 23 23:16:00 2014 from 192.168.1.110

做到了以上工作,就可以开始安装hadoop了。

为要选择使用hadoop1,可以去看看51cto向磊的博客http://slaytanic.blog.51cto.com/2057708/1397396,就是因为产品比较成熟,稳定。

hadoop-1.1.2.tar.gz

hbase-0.96.1.1-hadoop1-bin.tar.gz

zookeeper-3.4.5-1374045102000.tar.gz

#将需要的文件与安装包上传,hbase要与hadoop版本一致,在hbase/lib/hadoop-core-1.1.2.jar,为正确。

[root@master opt]# mkdir -p /opt/modules/hadoop/

[root@master hadoop]# chown -R hadoop:hadoop /opt/modules/hadoop/*

[root@master hadoop]# ll

total 148232

-rwxrwxrwx 1 hadoop hadoop 61927560 Oct 29 11:16 hadoop-1.1.2.tar.gz

-rwxrwxrwx 1 hadoop hadoop 73285670 Mar 24 12:57 hbase-0.96.1.1-hadoop1-bin.tar.gz

-rwxrwxrwx 1 hadoop hadoop 16402010 Mar 24 12:57 zookeeper-3.4.5-1374045102000.tar.gz

#新建/opt/modules/hadoop/文件夹,把需要的软件包都复制到文件夹下,将文件夹的权限使用者配置为hadoop用户组和hadoop用户,其实这一步可以最后来做。

[root@master hadoop]# tar -zxvf hadoop-1.1.2.tar.gz

#解压hadoop-1.1.2.tar.gz文件

[root@master hadoop]# cat /etc/profile.d/java_hadoop.sh

export JAVA_HOME=/usr/java/jdk1.7.0_45/

export HADOOP_HOME=/opt/modules/hadoop/hadoop-1.1.2/

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin

#使用vi,新建一下HADOOP_HOME的变量,名称不能自定义(不然会很麻烦)。

[root@master opt]# source /etc/profile

#更新环境变量。

[root@master hadoop]# echo $HADOOP_HOME

/opt/modules/hadoop/hadoop-1.1.2

#打印一下$HADOOP_HOME,就输出了我们的地址。

[hadoop@master conf]$ vi /opt/modules/hadoop/hadoop-1.1.2/conf/hadoop-env.sh

export HADOOP_HEAPSIZE=64

#这里是内存大少的配置,我的虚拟机配置为64m。

[root@master conf]# mkdir -p /data/

#新建一下data文件夹,准备将所有的数据,存放在这个文件夹下。

[root@master conf]# mkdir -p /data/hadoop/hdfs/data

[root@master conf]# mkdir -p /data/hadoop/hdfs/name

[root@master conf]# mkdir -p /data/hadoop/hdfs/namesecondary/

[hadoop@master bin]$ mkdir -p /data/hadoop/mapred/mrlocal

[hadoop@master bin]$ mkdir -p /data/hadoop/mapred/mrsystem

[root@master conf]#su

#切换到root用户,不然是不可以修改权限的。

[root@master conf]# chown -R hadoop:hadoop /data/

#将data文件夹的权限使用者配置为hadoop用户组和hadoop用户

[root@master conf]# su hadoop

#切换到hadoop用户

[hadoop@master conf]$ chmod go-w /data/hadoop/hdfs/data/

#这里步非常重要,就是去除其他用户写入hdfs数据,可以配置为755

[hadoop@master conf]$ ll /data/hadoop/hdfs/

drwxr-xr-x 2 hadoop hadoop 4096 Mar 24 13:21 data

drwxrwxr-x 2 hadoop hadoop 4096 Mar 24 13:21 name

drwxrwxr-x 2 hadoop hadoop 4096 Mar 24 13:20 namesecondary

[hadoop@master conf]$ vi core-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>fs.checkpoint.dir</name>

<value>/data/hadoop/hdfs/namesecondary</value>

</property>

<property>

<name>fs.checkpoint.period</name>

<value>1800</value>

</property>

<property>

<name>fs.checkpoint.size</name>

<value>33554432</value>

</property>

<property>

<name>io.compression.codecs</name>

<value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache

.hadoop.io.compress.BZip2Codec</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>1440</value>

</property>

</configuration>

[hadoop@master conf]$ vi hdfs-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.name.dir</name>

<value>/data/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>/data/hadoop/hdfs/data</value>

<description> </description>

</property>

<property>

<name>dfs.http.address</name>

<value>master:50070</value>

</property>

<property>

<name>dfs.secondary.http.address</name>

<value>node1:50090</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.datanode.du.reserved</name>

<value>873741824</value>

</property>

<property>

<name>dfs.block.size</name>

<value>134217728</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

[hadoop@master conf]$ vi mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>master:9001</value>

</property>

<property>

<name>mapred.local.dir</name>

<value>/data/hadoop/mapred/mrlocal</value>

<final>true</final>

</property>

<property>

<name>mapred.system.dir</name>

<value>/data/hadoop/mapred/mrsystem</value>

<final>true</final>

</property>

<property>

<name>mapred.tasktracker.map.tasks.maximum</name>

<value>2</value>

<final>true</final>

</property>

<property>

<name>mapred.tasktracker.reduce.tasks.maximum</name>

<value>1</value>

<final>true</final>

</property>

<property>

<name>io.sort.mb</name>

<value>32</value>

<final>true</final>

</property>

<property>

<name>mapred.child.java.opts</name>

<value>-Xmx128M</value>

</property>

<property>

<name>mapred.compress.map.output</name>

<value>true</value>

</property>

</configuration>

#配置文件完成

#切换到master主机,开启

[root@master hadoop]# vi/opt/modules/hadoop/hadoop-1.1.2/conf/masters

node1

node2

[root@master hadoop]# vi/opt/modules/hadoop/hadoop-1.1.2/conf/slaves

master

node1

node2

#节点配置,这里很重要,masters文件不需要把masters加入

前面的网络的配置我就不说了:

#登录master,将authorized_keys,发过去

[root@master ~]# scp /home/hadoop/.ssh/authorized_keys root@node1:/home/hadoop/.ssh/

[root@master ~]# scp /home/hadoop/.ssh/authorized_keys root@node1:/home/hadoop/.ssh/

The authenticity of host 'node1 (192.168.1.111)' can't be established.

RSA key fingerprint is 0d:aa:04:89:28:44:b9:e8:bb:5e:06:d0:dc:de:22:85.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node1,192.168.1.111' (RSA) to the list of known hosts.

root@node1's password:

#切换到node1主机

[root@master ~]# su hadoop

[hadoop@master root]$ ssh master

Last login: Sun Mar 23 23:17:06 2014 from 192.168.1.110

[root@master hadoop]# vi/opt/modules/hadoop/hadoop-1.1.2/conf/masters

node1

node2

[root@master hadoop]# vi/opt/modules/hadoop/hadoop-1.1.2/conf/slaves

master

node1

node2

#切换到master主机,开启

[hadoop@master conf]$ hadoop namenode -format

Warning: $HADOOP_HOME is deprecated.

14/03/24 13:33:52 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/192.168.1.110

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.1.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.1 -r 1440782; compiled by 'hortonfo' on Thu Jan 31 02:03:24 UTC 2013

************************************************************/

Re-format filesystem in /data/hadoop/hdfs/name ? (Y or N) Y

14/03/24 13:33:54 INFO util.GSet: VM type = 32-bit

14/03/24 13:33:54 INFO util.GSet: 2% max memory = 0.61875 MB

14/03/24 13:33:54 INFO util.GSet: capacity = 2^17 = 131072 entries

14/03/24 13:33:54 INFO util.GSet: recommended=131072, actual=131072

14/03/24 13:33:55 INFO namenode.FSNamesystem: fsOwner=hadoop

14/03/24 13:33:55 INFO namenode.FSNamesystem: supergroup=supergroup

14/03/24 13:33:55 INFO namenode.FSNamesystem: isPermissionEnabled=false

14/03/24 13:33:55 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

14/03/24 13:33:55 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

14/03/24 13:33:55 INFO namenode.NameNode: Caching file names occuring more than 10 times

14/03/24 13:33:55 INFO common.Storage: Image file of size 112 saved in 0 seconds.

14/03/24 13:33:56 INFO namenode.FSEditLog: closing edit log: position=4, editlog=/data/hadoop/hdfs/name/current/edits

14/03/24 13:33:56 INFO namenode.FSEditLog: close success: truncate to 4, editlog=/data/hadoop/hdfs/name/current/edits

14/03/24 13:33:56 INFO common.Storage: Storage directory /data/hadoop/hdfs/name has been successfully formatted.

14/03/24 13:33:56 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.1.110

************************************************************/

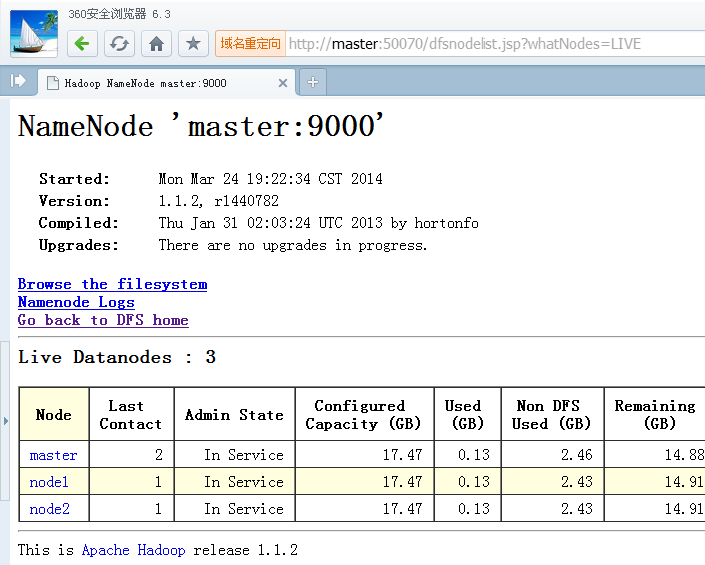

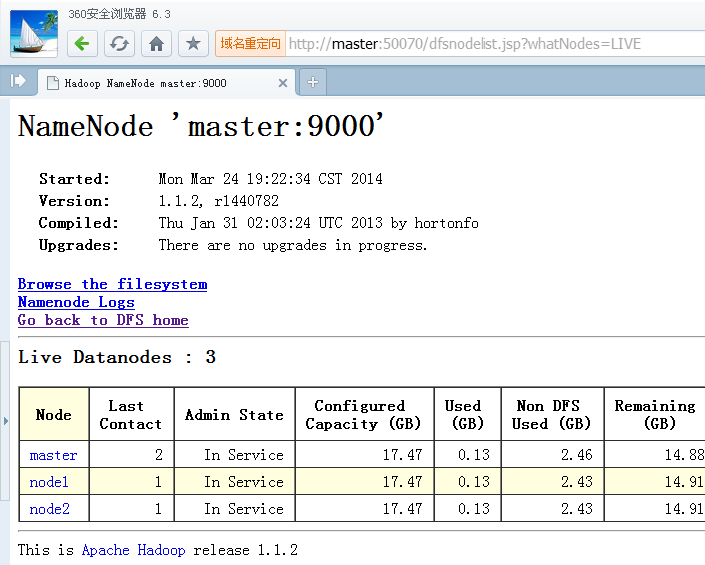

[hadoop@master bin]$ start-all.sh

[hadoop@master bin]$ jps

7603 TaskTracker

7241 DataNode

7119 NameNode

7647 Jps

7473 JobTracker

[root@master hadoop]# tar -zxvf zookeeper-3.4.5-1374045102000.tar.gz

[root@master hadoop]# chown -R hadoop:hadoop zookeeper-3.4.5

[root@master hadoop]# vi /opt/modules/hadoop/zookeeper-3.4.5/conf/zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/data/zookeeper

# the port at which the clients will connect

clientPort=2181

server.1=192.168.1.110:2888:3888

server.2=192.168.1.111:2888:3888

server.3=192.168.1.112:2888:3888

#新建文件myid(在zoo.cfg 配置的dataDir目录下,此处为/home/hadoop/zookeeper),使得myid中的值与server的编号相同,比如namenode上的myid: 1。datanode1上的myid:2。以此类推。

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance #

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

#开始配置。

[root@master hadoop]# mkdir -p /data/zookeeper/

[root@master hadoop]# chown -R hadoop:hadoop /data/zookeeper/

[root@master hadoop]# echo "1" > /data/zookeeper/myid

[root@master hadoop]# cat /data/zookeeper/myid

1

[root@node1 zookeeper-3.4.5]# chown -R hadoop:hadoop /data/zookeeper/*

[root@master hadoop]# scp -r /opt/modules/hadoop/zookeeper-3.4.5/ root@node1:/opt/modules/hadoop/

#将/opt/modules/hadoop/zookeeper-3.4.5发送到node1节点,新增一个myid为2

#切换到node1

[root@node1 data]# echo "2" > /data/zookeeper/myid

[root@node1 data]# cat /data/zookeeper/myid

2

[root@node1 zookeeper-3.4.5]# chown -R hadoop:hadoop /opt/modules/hadoop/zookeeper-3.4.5

[root@node1 zookeeper-3.4.5]# chown -R hadoop:hadoop /data/zookeeper/*

#切换到master

[root@master hadoop]# su hadoop

[hadoop@master hadoop]$ cd zookeeper-3.4.5

[hadoop@master bin]$ ./zkServer.sh start

JMX enabled by default

Using config: /opt/modules/hadoop/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@master bin]$ jps

5507 NameNode

5766 JobTracker

6392 Jps

6373 QuorumPeerMain

5890 TaskTracker

5626 DataNode

[root@node1 zookeeper-3.4.5]# su hadoop

[hadoop@node1 zookeeper-3.4.5]$ cd bin/

[hadoop@node1 bin]$ ./zkServer.sh start

JMX enabled by default

Using config: /opt/modules/hadoop/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@node1 bin]$ jps

5023 SecondaryNameNode

5120 TaskTracker

5445 Jps

4927 DataNode

5415 QuorumPeerMain

#两边开启之后,就测试一下Mode: follower代表正常

[hadoop@master bin]$ ./zkServer.sh status

JMX enabled by default

Using config: /opt/modules/hadoop/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: follower

-----------------------------------zookeeper-3.4.5配置结束-----------------------------------

[root@master ~]# su hadoop

[hadoop@master root]$ cd /opt/modules/hadoop/zookeeper-3.4.5/bin/

[hadoop@master bin]$ ./zkServer.sh start

#解压文件

[root@master hadoop]# vi /etc/profile.d/java_hadoop.sh

export JAVA_HOME=/usr/java/jdk1.7.0_45/

export HADOOP_HOME=/opt/modules/hadoop/hadoop-1.1.2/

export HBASE_HOME=/opt/modules/hadoop/hbase-0.96.1.1/

export HBASE_CLASSPATH=/opt/modules/hadoop/hadoop-1.1.2/conf/

export HBASE_MANAGES_ZK=true

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HBASE_HOME/bin

#配置环境变量。

[root@master hadoop]# source /etc/profile

[root@master hadoop]# echo $HBASE_CLASSPATH

/opt/modules/hadoop/hadoop-1.1.2/conf/

[root@master conf]# vi /opt/modules/hadoop/hbase-0.96.1.1/conf/hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,node1,node2</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/data/zookeeper</value>

</property>

</configuration>

[root@master conf]# cat /opt/modules/hadoop/hbase-0.96.1.1/conf/regionservers

master

node1

node2

[root@master conf]# chown -R hadoop:hadoop /opt/modules/hadoop/hbase-0.96.1.1

[root@master hadoop]# su hadoop

[hadoop@master hadoop]$ ll

total 148244

drwxr-xr-x 16 hadoop hadoop 4096 Mar 24 13:36 hadoop-1.1.2

-rwxrwxrwx 1 hadoop hadoop 61927560 Oct 29 11:16 hadoop-1.1.2.tar.gz

drwxr-xr-x 7 hadoop hadoop 4096 Mar 24 22:40 hbase-0.96.1.1

-rwxrwxrwx 1 hadoop hadoop 73285670 Mar 24 12:57 hbase-0.96.1.1-hadoop1-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 4096 Nov 5 2012 zookeeper-3.4.5

-rwxrwxrwx 1 hadoop hadoop 16402010 Mar 24 12:57 zookeeper-3.4.5-1374045102000.tar.gz

[root@master hadoop]$ scp -r hbase-0.96.1.1 node1:/opt/modules/hadoop

[root@master hadoop]$ scp -r hbase-0.96.1.1 node2:/opt/modules/hadoop

[root@node1 hadoop]# chown -R hadoop:hadoop /opt/modules/hadoop/hbase-0.96.1.1

[root@node2 hadoop]# chown -R hadoop:hadoop /opt/modules/hadoop/hbase-0.96.1.1

[root@node2 hadoop]# su hadoop

[hadoop@node2 bin]$ hbase shell

#进入hbase

[root@master conf]# jps

17616 QuorumPeerMain

20282 HRegionServer

20101 HMaster

9858 JobTracker

9712 DataNode

9591 NameNode

29655 Jps

9982 TaskTracker

第一次写这么多,我上传了一些文件,测试,详细的命令我就不写了,可能无法安装成功,权限是很重要的问题,准备录制一个视频,写成shell,给同事或网友学习。

所有的配置文件及安装包下载地址:http://pan.baidu.com/share/link?shareid=2478581294&uk=3607515896

最近因为公司准备将数据迁移到Hbase上,所以不得不学习hadoop,我们尝试过将数据迁移到mongodb上面,周末在家里做了一个小试验,使用java+mongodb做爬虫抓取数据,我将mongodb安装在centos6.3虚拟机上,分配1G的内存,开始抓数据,半小时后,虚拟机内存吃光,没有办法解决内存问题,网上很多人说没有32G的内存不要玩mongodb,这样的说法很搞笑,难道我将1T的数据都放在内存上,不是坑么,所以说这是我们为什么选择Hbase的原因之一。

软件环境

使用虚拟机安装,centos 6.3,jdk-7u45-linux-i586.rpm,hadoop-1.1.2.tar.gz,hbase-0.96.1.1-hadoop1-bin.tar.gz ,zookeeper-3.4.5-1374045102000.tar.gz第一步,配置网络

1、配置IP使用ifconfig 查看IP,可以使用vi /etc/sysconfig/network-scripts/ifcfg-eth0 ,ifcfg-eth0为网卡,根据自己的需求配置,如下:

DEVICE=eth0

BOOTPROTO=static

ONBOOT=yes

IPADDR=192.168.1.110

NETMASK=255.255.255.0

TYPE=Ethernet

GATEWAY=192.168.1.1

里面MAC地址不需要修改。

2、配置主机名network与DNS

使用vi /etc/sysconfig/network,修改主机名与网关,这里的网关可填可不填,如下

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=master

GATEWAY=192.168.1.1

使用vi /etc/resolv.conf ,添加DNS,这里可以添加你省内常用的DNS,什么8.8.8.8就算了,太慢了,添加DNS为了使用yum安装程序,如下

nameserver 202.106.0.20

nameserver 192.168.1.1

配置vi /etc/hosts 文件,如下

192.168.1.110 master

192.168.1.111 node1

192.168.1.112 node2

3、配置yum源与安装基础软件包

配置yum源,为了更方便的安装程序包,我使用的是163的源,国内比较快,当然你也可以不配置yum源,下载CentOS-Base.repo,地址:http://mirrors.163.com/.help/centos.html,上传yum文件夹中的文件到/etc/yum.repos.d中,覆盖文件,然后yum makecache 更新源,使用yum源安装:# yum -y install lrzsz gcc gcc-c++ libstdc++-devel ntp 安装配置文件

4、同步时间与地区

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

将地区配置为上海,再使用ntpdate更新时间,再使用crontab -e,添加如下

# 30 23 * * * /usr/sbin/ntpdate cn.pool.ntp.org ; hwclock -w

每天晚上23:30更新一下时间

5、关闭防火墙,SELINUX=disable

# service iptables stop

# vi /etc/selinux/config

SELINUX=disabled

第二步,安装java,配置ssh,添加用户

1、安装java1.7将所有的软件包都放到/opt文件夹中

# chmod -R 777 /opt

# rpm -ivh jdk-7u45-linux-i586.rpm

[root@master opt]# vi /etc/profile.d/java_hadoop.sh

export JAVA_HOME=/usr/java/jdk1.7.0_45/

export PATH=$PATH:$JAVA_HOME/bin

[root@master opt]# source /etc/profile

[root@master opt]# echo $JAVA_HOME

/usr/java/jdk1.7.0_45/

2、添加hadoop用户,配置无ssh登录

# groupadd hadoop

# useradd hadoop -g hadoop

# su hadoop

$ ssh-keygen -t dsa -P '' -f /home/hadoop/.ssh/id_dsa

$ cp id_dsa.pub authorized_keys

$ chmod go-wx authorized_keys

[hadoop@master .ssh]$ ssh master

Last login: Sun Mar 23 23:16:00 2014 from 192.168.1.110

做到了以上工作,就可以开始安装hadoop了。

为要选择使用hadoop1,可以去看看51cto向磊的博客http://slaytanic.blog.51cto.com/2057708/1397396,就是因为产品比较成熟,稳定。

开始安装:

[root@master opt]# lshadoop-1.1.2.tar.gz

hbase-0.96.1.1-hadoop1-bin.tar.gz

zookeeper-3.4.5-1374045102000.tar.gz

#将需要的文件与安装包上传,hbase要与hadoop版本一致,在hbase/lib/hadoop-core-1.1.2.jar,为正确。

[root@master opt]# mkdir -p /opt/modules/hadoop/

[root@master hadoop]# chown -R hadoop:hadoop /opt/modules/hadoop/*

[root@master hadoop]# ll

total 148232

-rwxrwxrwx 1 hadoop hadoop 61927560 Oct 29 11:16 hadoop-1.1.2.tar.gz

-rwxrwxrwx 1 hadoop hadoop 73285670 Mar 24 12:57 hbase-0.96.1.1-hadoop1-bin.tar.gz

-rwxrwxrwx 1 hadoop hadoop 16402010 Mar 24 12:57 zookeeper-3.4.5-1374045102000.tar.gz

#新建/opt/modules/hadoop/文件夹,把需要的软件包都复制到文件夹下,将文件夹的权限使用者配置为hadoop用户组和hadoop用户,其实这一步可以最后来做。

[root@master hadoop]# tar -zxvf hadoop-1.1.2.tar.gz

#解压hadoop-1.1.2.tar.gz文件

[root@master hadoop]# cat /etc/profile.d/java_hadoop.sh

export JAVA_HOME=/usr/java/jdk1.7.0_45/

export HADOOP_HOME=/opt/modules/hadoop/hadoop-1.1.2/

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin

#使用vi,新建一下HADOOP_HOME的变量,名称不能自定义(不然会很麻烦)。

[root@master opt]# source /etc/profile

#更新环境变量。

[root@master hadoop]# echo $HADOOP_HOME

/opt/modules/hadoop/hadoop-1.1.2

#打印一下$HADOOP_HOME,就输出了我们的地址。

[hadoop@master conf]$ vi /opt/modules/hadoop/hadoop-1.1.2/conf/hadoop-env.sh

export HADOOP_HEAPSIZE=64

#这里是内存大少的配置,我的虚拟机配置为64m。

[root@master conf]# mkdir -p /data/

#新建一下data文件夹,准备将所有的数据,存放在这个文件夹下。

[root@master conf]# mkdir -p /data/hadoop/hdfs/data

[root@master conf]# mkdir -p /data/hadoop/hdfs/name

[root@master conf]# mkdir -p /data/hadoop/hdfs/namesecondary/

[hadoop@master bin]$ mkdir -p /data/hadoop/mapred/mrlocal

[hadoop@master bin]$ mkdir -p /data/hadoop/mapred/mrsystem

[root@master conf]#su

#切换到root用户,不然是不可以修改权限的。

[root@master conf]# chown -R hadoop:hadoop /data/

#将data文件夹的权限使用者配置为hadoop用户组和hadoop用户

[root@master conf]# su hadoop

#切换到hadoop用户

[hadoop@master conf]$ chmod go-w /data/hadoop/hdfs/data/

#这里步非常重要,就是去除其他用户写入hdfs数据,可以配置为755

[hadoop@master conf]$ ll /data/hadoop/hdfs/

drwxr-xr-x 2 hadoop hadoop 4096 Mar 24 13:21 data

drwxrwxr-x 2 hadoop hadoop 4096 Mar 24 13:21 name

drwxrwxr-x 2 hadoop hadoop 4096 Mar 24 13:20 namesecondary

接下来就配置xml文件:

[hadoop@master conf]$ cd /opt/modules/hadoop/hadoop-1.1.2/conf[hadoop@master conf]$ vi core-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>fs.checkpoint.dir</name>

<value>/data/hadoop/hdfs/namesecondary</value>

</property>

<property>

<name>fs.checkpoint.period</name>

<value>1800</value>

</property>

<property>

<name>fs.checkpoint.size</name>

<value>33554432</value>

</property>

<property>

<name>io.compression.codecs</name>

<value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache

.hadoop.io.compress.BZip2Codec</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>1440</value>

</property>

</configuration>

[hadoop@master conf]$ vi hdfs-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.name.dir</name>

<value>/data/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>/data/hadoop/hdfs/data</value>

<description> </description>

</property>

<property>

<name>dfs.http.address</name>

<value>master:50070</value>

</property>

<property>

<name>dfs.secondary.http.address</name>

<value>node1:50090</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.datanode.du.reserved</name>

<value>873741824</value>

</property>

<property>

<name>dfs.block.size</name>

<value>134217728</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

[hadoop@master conf]$ vi mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>master:9001</value>

</property>

<property>

<name>mapred.local.dir</name>

<value>/data/hadoop/mapred/mrlocal</value>

<final>true</final>

</property>

<property>

<name>mapred.system.dir</name>

<value>/data/hadoop/mapred/mrsystem</value>

<final>true</final>

</property>

<property>

<name>mapred.tasktracker.map.tasks.maximum</name>

<value>2</value>

<final>true</final>

</property>

<property>

<name>mapred.tasktracker.reduce.tasks.maximum</name>

<value>1</value>

<final>true</final>

</property>

<property>

<name>io.sort.mb</name>

<value>32</value>

<final>true</final>

</property>

<property>

<name>mapred.child.java.opts</name>

<value>-Xmx128M</value>

</property>

<property>

<name>mapred.compress.map.output</name>

<value>true</value>

</property>

</configuration>

#配置文件完成

#切换到master主机,开启

[root@master hadoop]# vi/opt/modules/hadoop/hadoop-1.1.2/conf/masters

node1

node2

[root@master hadoop]# vi/opt/modules/hadoop/hadoop-1.1.2/conf/slaves

master

node1

node2

#节点配置,这里很重要,masters文件不需要把masters加入

hadoop1.1.2的node1,node2配置开始

前面的网络的配置我就不说了:#登录master,将authorized_keys,发过去

[root@master ~]# scp /home/hadoop/.ssh/authorized_keys root@node1:/home/hadoop/.ssh/

[root@master ~]# scp /home/hadoop/.ssh/authorized_keys root@node1:/home/hadoop/.ssh/

The authenticity of host 'node1 (192.168.1.111)' can't be established.

RSA key fingerprint is 0d:aa:04:89:28:44:b9:e8:bb:5e:06:d0:dc:de:22:85.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node1,192.168.1.111' (RSA) to the list of known hosts.

root@node1's password:

#切换到node1主机

[root@master ~]# su hadoop

[hadoop@master root]$ ssh master

Last login: Sun Mar 23 23:17:06 2014 from 192.168.1.110

[root@master hadoop]# vi/opt/modules/hadoop/hadoop-1.1.2/conf/masters

node1

node2

[root@master hadoop]# vi/opt/modules/hadoop/hadoop-1.1.2/conf/slaves

master

node1

node2

#切换到master主机,开启

[hadoop@master conf]$ hadoop namenode -format

Warning: $HADOOP_HOME is deprecated.

14/03/24 13:33:52 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/192.168.1.110

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.1.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.1 -r 1440782; compiled by 'hortonfo' on Thu Jan 31 02:03:24 UTC 2013

************************************************************/

Re-format filesystem in /data/hadoop/hdfs/name ? (Y or N) Y

14/03/24 13:33:54 INFO util.GSet: VM type = 32-bit

14/03/24 13:33:54 INFO util.GSet: 2% max memory = 0.61875 MB

14/03/24 13:33:54 INFO util.GSet: capacity = 2^17 = 131072 entries

14/03/24 13:33:54 INFO util.GSet: recommended=131072, actual=131072

14/03/24 13:33:55 INFO namenode.FSNamesystem: fsOwner=hadoop

14/03/24 13:33:55 INFO namenode.FSNamesystem: supergroup=supergroup

14/03/24 13:33:55 INFO namenode.FSNamesystem: isPermissionEnabled=false

14/03/24 13:33:55 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

14/03/24 13:33:55 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

14/03/24 13:33:55 INFO namenode.NameNode: Caching file names occuring more than 10 times

14/03/24 13:33:55 INFO common.Storage: Image file of size 112 saved in 0 seconds.

14/03/24 13:33:56 INFO namenode.FSEditLog: closing edit log: position=4, editlog=/data/hadoop/hdfs/name/current/edits

14/03/24 13:33:56 INFO namenode.FSEditLog: close success: truncate to 4, editlog=/data/hadoop/hdfs/name/current/edits

14/03/24 13:33:56 INFO common.Storage: Storage directory /data/hadoop/hdfs/name has been successfully formatted.

14/03/24 13:33:56 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.1.110

************************************************************/

[hadoop@master bin]$ start-all.sh

[hadoop@master bin]$ jps

7603 TaskTracker

7241 DataNode

7119 NameNode

7647 Jps

7473 JobTracker

zookeeper-3.4.5配置开始

#在master机器上安装,为namenode[root@master hadoop]# tar -zxvf zookeeper-3.4.5-1374045102000.tar.gz

[root@master hadoop]# chown -R hadoop:hadoop zookeeper-3.4.5

[root@master hadoop]# vi /opt/modules/hadoop/zookeeper-3.4.5/conf/zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/data/zookeeper

# the port at which the clients will connect

clientPort=2181

server.1=192.168.1.110:2888:3888

server.2=192.168.1.111:2888:3888

server.3=192.168.1.112:2888:3888

#新建文件myid(在zoo.cfg 配置的dataDir目录下,此处为/home/hadoop/zookeeper),使得myid中的值与server的编号相同,比如namenode上的myid: 1。datanode1上的myid:2。以此类推。

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance #

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

#开始配置。

[root@master hadoop]# mkdir -p /data/zookeeper/

[root@master hadoop]# chown -R hadoop:hadoop /data/zookeeper/

[root@master hadoop]# echo "1" > /data/zookeeper/myid

[root@master hadoop]# cat /data/zookeeper/myid

1

[root@node1 zookeeper-3.4.5]# chown -R hadoop:hadoop /data/zookeeper/*

[root@master hadoop]# scp -r /opt/modules/hadoop/zookeeper-3.4.5/ root@node1:/opt/modules/hadoop/

#将/opt/modules/hadoop/zookeeper-3.4.5发送到node1节点,新增一个myid为2

#切换到node1

[root@node1 data]# echo "2" > /data/zookeeper/myid

[root@node1 data]# cat /data/zookeeper/myid

2

[root@node1 zookeeper-3.4.5]# chown -R hadoop:hadoop /opt/modules/hadoop/zookeeper-3.4.5

[root@node1 zookeeper-3.4.5]# chown -R hadoop:hadoop /data/zookeeper/*

#切换到master

[root@master hadoop]# su hadoop

[hadoop@master hadoop]$ cd zookeeper-3.4.5

[hadoop@master bin]$ ./zkServer.sh start

JMX enabled by default

Using config: /opt/modules/hadoop/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@master bin]$ jps

5507 NameNode

5766 JobTracker

6392 Jps

6373 QuorumPeerMain

5890 TaskTracker

5626 DataNode

[root@node1 zookeeper-3.4.5]# su hadoop

[hadoop@node1 zookeeper-3.4.5]$ cd bin/

[hadoop@node1 bin]$ ./zkServer.sh start

JMX enabled by default

Using config: /opt/modules/hadoop/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@node1 bin]$ jps

5023 SecondaryNameNode

5120 TaskTracker

5445 Jps

4927 DataNode

5415 QuorumPeerMain

#两边开启之后,就测试一下Mode: follower代表正常

[hadoop@master bin]$ ./zkServer.sh status

JMX enabled by default

Using config: /opt/modules/hadoop/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: follower

-----------------------------------zookeeper-3.4.5配置结束-----------------------------------

[root@master ~]# su hadoop

[hadoop@master root]$ cd /opt/modules/hadoop/zookeeper-3.4.5/bin/

[hadoop@master bin]$ ./zkServer.sh start

hbase配置开始,三台机器都需要的

[root@master hadoop]# tar -zxvf hbase-0.96.1.1-hadoop1-bin.tar.gz#解压文件

[root@master hadoop]# vi /etc/profile.d/java_hadoop.sh

export JAVA_HOME=/usr/java/jdk1.7.0_45/

export HADOOP_HOME=/opt/modules/hadoop/hadoop-1.1.2/

export HBASE_HOME=/opt/modules/hadoop/hbase-0.96.1.1/

export HBASE_CLASSPATH=/opt/modules/hadoop/hadoop-1.1.2/conf/

export HBASE_MANAGES_ZK=true

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HBASE_HOME/bin

#配置环境变量。

[root@master hadoop]# source /etc/profile

[root@master hadoop]# echo $HBASE_CLASSPATH

/opt/modules/hadoop/hadoop-1.1.2/conf/

[root@master conf]# vi /opt/modules/hadoop/hbase-0.96.1.1/conf/hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,node1,node2</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/data/zookeeper</value>

</property>

</configuration>

[root@master conf]# cat /opt/modules/hadoop/hbase-0.96.1.1/conf/regionservers

master

node1

node2

[root@master conf]# chown -R hadoop:hadoop /opt/modules/hadoop/hbase-0.96.1.1

[root@master hadoop]# su hadoop

[hadoop@master hadoop]$ ll

total 148244

drwxr-xr-x 16 hadoop hadoop 4096 Mar 24 13:36 hadoop-1.1.2

-rwxrwxrwx 1 hadoop hadoop 61927560 Oct 29 11:16 hadoop-1.1.2.tar.gz

drwxr-xr-x 7 hadoop hadoop 4096 Mar 24 22:40 hbase-0.96.1.1

-rwxrwxrwx 1 hadoop hadoop 73285670 Mar 24 12:57 hbase-0.96.1.1-hadoop1-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 4096 Nov 5 2012 zookeeper-3.4.5

-rwxrwxrwx 1 hadoop hadoop 16402010 Mar 24 12:57 zookeeper-3.4.5-1374045102000.tar.gz

[root@master hadoop]$ scp -r hbase-0.96.1.1 node1:/opt/modules/hadoop

[root@master hadoop]$ scp -r hbase-0.96.1.1 node2:/opt/modules/hadoop

[root@node1 hadoop]# chown -R hadoop:hadoop /opt/modules/hadoop/hbase-0.96.1.1

[root@node2 hadoop]# chown -R hadoop:hadoop /opt/modules/hadoop/hbase-0.96.1.1

[root@node2 hadoop]# su hadoop

[hadoop@node2 bin]$ hbase shell

#进入hbase

[root@master conf]# jps

17616 QuorumPeerMain

20282 HRegionServer

20101 HMaster

9858 JobTracker

9712 DataNode

9591 NameNode

29655 Jps

9982 TaskTracker

第一次写这么多,我上传了一些文件,测试,详细的命令我就不写了,可能无法安装成功,权限是很重要的问题,准备录制一个视频,写成shell,给同事或网友学习。

所有的配置文件及安装包下载地址:http://pan.baidu.com/share/link?shareid=2478581294&uk=3607515896

相关文章推荐

- hadoop1.1.2集群详细安装实例

- hadoop 1.x 三节点集群安装配置详细实例

- Hadoop 三节点集群安装配置详细实例

- hadoop 三节点集群安装配置详细实例

- 如何查看hadoop集群是否安装成功(用jps命令和实例进行验证)

- Hadoop 集群安装详细步骤

- Hadoop集群监测工具——ganglia安装实例

- hadoop集群里数据收集工具Chukwa的安装详细步骤

- 在Hadoop分布式集群环境下Mahout安装和运行K-means、协同过滤实例

- 从VMware虚拟机安装到hadoop集群环境配置详细说明(第一期)

- 集群分布式 Hadoop安装详细步骤

- 图文讲解基于centos虚拟机的Hadoop集群安装,并且使用Mahout实现贝叶斯分类实例 (1)

- 从VMware虚拟机安装到hadoop集群环境配置详细说明

- 图文讲解基于centos虚拟机的Hadoop集群安装,并且使用Mahout实现贝叶斯分类实例 (7)

- 图文讲解基于centos虚拟机的Hadoop集群安装,并且使用Mahout实现贝叶斯分类实例 (5)

- windows下Eclipse安装hadoop1.1.2插件连接hadoop集群

- 图文讲解基于centos虚拟机的Hadoop集群安装,并且使用Mahout实现贝叶斯分类实例 (3)

- 图文讲解基于centos虚拟机的Hadoop集群安装,并且使用Mahout实现贝叶斯分类实例 (2)

- Hadoop集群Hadoop安装配置详细说明+SSH+KEY登陆

- Hadoop集群安装详细步骤|Hadoop安装配置