Writing Your Own Lucene Analyzer, Principles: A Brief Look at CJKAnalyzer

2013-10-14 01:43


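CJK here refers to CJK Unified Ideographs. The goal of unification is to assign a single code in ISO 10646 and Unicode to ideographs from Chinese, Japanese, Korean, and Vietnamese that share the same origin and meaning and have the same or only slightly different shapes. These are mainly Han characters, but they also include Han-style characters such as Japanese kokuji, characters unique to Korea, and Vietnamese chữ Nôm. CJK is an abbreviation of Chinese, Japanese, and Korean, and as the name suggests, the analyzer supports these three languages.

In practice, CJKAnalyzer handles Chinese, Japanese, and Korean text, including the North Korean written form.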
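As a small illustration of the script categories involved, the following sketch uses only the JDK's `Character.UnicodeScript` to classify a few sample characters. It is not Lucene code; the class name `ScriptSketch` and the helper `scriptOf` are made up for this example.

```java
public class ScriptSketch {
    // Hypothetical helper: returns the Unicode script name of the first code point.
    static String scriptOf(String ch) {
        return Character.UnicodeScript.of(ch.codePointAt(0)).name();
    }

    public static void main(String[] args) {
        // U+4E2D (Han ideograph), U+3042 (Hiragana), U+30AB (Katakana),
        // U+D55C (Hangul syllable), and a Latin letter for contrast.
        String[] samples = {"\u4E2D", "\u3042", "\u30AB", "\uD55C", "A"};
        for (String ch : samples) {
            System.out.println(ch + " -> " + scriptOf(ch));
        }
    }
}
```

A unified ideograph such as U+4E2D is reported as `HAN` no matter which language the surrounding text is written in, while kana and Hangul have scripts of their own.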

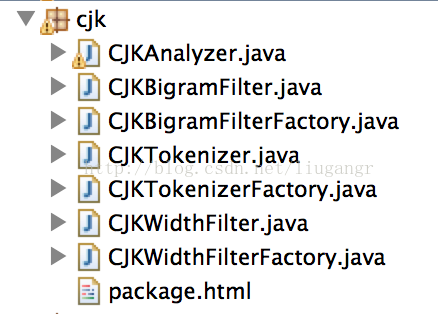

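The classes that make up CJKAnalyzer:

CJKAnalyzer is the main class.

CJKWidthFilter normalizes character width, mainly folding halfwidth Katakana variants into the equivalent kana.

CJKBigramFilter forms overlapping two-character tokens (bigrams) from adjacent CJK characters. Any run of CJK characters is split pairwise: ABC -> AB, BC.

CJKTokenizer is the tokenizer kept for compatibility with older versions.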


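1. The core of CJKAnalyzer. As the code shows, it first checks the version. Older versions use CJKTokenizer directly. Versions from Lucene 3.6 onward first run StandardTokenizer, which emits characters other than Latin letters and digits one at a time. CJKWidthFilter then normalizes the width of CJK characters, LowerCaseFilter lowercases Latin text, and CJKBigramFilter joins adjacent CJK characters into overlapping pairs. In both branches a StopFilter is applied last.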

```java
protected TokenStreamComponents createComponents(String fieldName,
    Reader reader) {
  if (matchVersion.onOrAfter(Version.LUCENE_36)) {
    final Tokenizer source = new StandardTokenizer(matchVersion, reader);
    // run the widthfilter first before bigramming, it sometimes combines characters.
    TokenStream result = new CJKWidthFilter(source);
    result = new LowerCaseFilter(matchVersion, result);
    result = new CJKBigramFilter(result);
    return new TokenStreamComponents(source, new StopFilter(matchVersion, result, stopwords));
  } else {
    final Tokenizer source = new CJKTokenizer(reader);
    return new TokenStreamComponents(source, new StopFilter(matchVersion, source, stopwords));
  }
}
```

2. CJKWidthFilter mainly folds halfwidth Katakana variants into the equivalent kana. This is similar to converting fullwidth symbols to halfwidth ones: the meaning is the same and only the representation differs. It also folds fullwidth ASCII variants into basic Latin. The filter is aimed mainly at Japanese text.
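To make the width folding concrete, here is a minimal sketch that uses the JDK's `java.text.Normalizer` with NFKC normalization. This is an assumption-laden stand-in, not Lucene's implementation: CJKWidthFilter covers only a practical subset of what NFKC does, so NFKC changes more characters than the filter would. The class name `WidthFoldSketch` and the method `fold` are hypothetical.

```java
import java.text.Normalizer;

public class WidthFoldSketch {
    // Hypothetical helper: approximates width folding with full NFKC normalization.
    // NFKC is a superset of what CJKWidthFilter does.
    static String fold(String s) {
        return Normalizer.normalize(s, Normalizer.Form.NFKC);
    }

    public static void main(String[] args) {
        // Halfwidth KA (U+FF76) followed by the halfwidth voiced sound mark (U+FF9E)
        // folds into the single full-size Katakana GA (U+30AC).
        String halfwidthGa = "\uFF76\uFF9E";
        System.out.println(fold(halfwidthGa).equals("\u30AC"));

        // Fullwidth Latin letters fold into basic Latin.
        String fullwidthLucene = "\uFF2C\uFF55\uFF43\uFF45\uFF4E\uFF45";
        System.out.println(fold(fullwidthLucene));
    }
}
```

The first case shows two input characters collapsing into one, which is why the analyzer runs the width filter before forming bigrams.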

3. CJKBigramFilter. This filter can either emit single-character tokens (unigrams) alongside the bigrams, or emit bigrams only.

```java
public boolean incrementToken() throws IOException {
  while (true) {
    // Check whether enough CJK codepoints were buffered earlier to form a bigram.
    if (hasBufferedBigram()) {
      // case 1: we have multiple remaining codepoints buffered,
      // so we can emit a bigram here.

      // If unigram output was requested as well:
      if (outputUnigrams) {
        // when also outputting unigrams, we output the unigram first,
        // then rewind back to revisit the bigram.
        // so an input of ABC is A + (rewind)AB + B + (rewind)BC + C
        // the logic in hasBufferedUnigram ensures we output the C,
        // even though it did actually have adjacent CJK characters.
        if (ngramState) {
          flushBigram(); // emit the bigram
        } else {
          flushUnigram(); // emit the unigram, then step back one codepoint (rewind)
          index--;
        }
        // Toggle the state so the next pass takes the other branch above;
        // this is what alternates unigram and bigram output.
        ngramState = !ngramState;
      } else {
        flushBigram();
      }
      return true;
    } else if (doNext()) {
      // case 2: look at the token type. should we form any n-grams?
      String type = typeAtt.type();

      // Check the token type: is it one of the CJK scripts?
      if (type == doHan || type == doHiragana || type == doKatakana || type == doHangul) {
        // acceptable CJK type: we form n-grams from these.
        // as long as the offsets are aligned, we just add these to our current buffer.
        // otherwise, we clear the buffer and start over.
        if (offsetAtt.startOffset() != lastEndOffset) { // unaligned, clear queue
          if (hasBufferedUnigram()) {
            // we have a buffered unigram, and we peeked ahead to see if we could form
            // a bigram, but we can't, because the offsets are unaligned. capture the state
            // of this peeked data to be revisited next time thru the loop, and dump our unigram.
            loneState = captureState();
            flushUnigram();
            return true;
          }
          index = 0;
          bufferLen = 0;
        }
        refill();
      } else {
        // not a CJK type: we just return these as-is.
        if (hasBufferedUnigram()) {
          // we have a buffered unigram, and we peeked ahead to see if we could form
          // a bigram, but we can't, because its not a CJK type. capture the state
          // of this peeked data to be revisited next time thru the loop, and dump our unigram.
          loneState = captureState();
          flushUnigram();
          return true;
        }
        return true;
      }
    } else {
      // case 3: we have only zero or 1 codepoints buffered,
      // so not enough to form a bigram. But, we also have no
      // more input. So if we have a buffered codepoint, emit
      // a unigram, otherwise, its end of stream.
      if (hasBufferedUnigram()) {
        flushUnigram(); // flush our remaining unigram
        return true;
      }
      return false;
    }
  }
}
```
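The output order described in the comments above (ABC becomes A, AB, B, BC, C with unigrams, or AB, BC without) can be reproduced with a much simpler stand-alone sketch. This is not the Lucene algorithm: it works on a plain string instead of a token stream, ignores offsets and buffering, and decides what counts as CJK with `Character.UnicodeScript`. The names `BigramSketch`, `isCjk`, and `bigrams` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class BigramSketch {
    // Assumption: "CJK" means the four scripts the filter checks for.
    static boolean isCjk(int cp) {
        Character.UnicodeScript s = Character.UnicodeScript.of(cp);
        return s == Character.UnicodeScript.HAN
            || s == Character.UnicodeScript.HIRAGANA
            || s == Character.UnicodeScript.KATAKANA
            || s == Character.UnicodeScript.HANGUL;
    }

    static List<String> bigrams(String text, boolean outputUnigrams) {
        List<String> out = new ArrayList<>();
        int[] cps = text.codePoints().toArray();
        int i = 0;
        while (i < cps.length) {
            int start = i;
            if (!isCjk(cps[i])) {
                // Non-CJK run: pass through as-is, split on whitespace only.
                while (i < cps.length && !isCjk(cps[i])) i++;
                for (String w : new String(cps, start, i - start).trim().split("\\s+")) {
                    if (!w.isEmpty()) out.add(w);
                }
                continue;
            }
            // CJK run: find its end, then emit overlapping pairs.
            while (i < cps.length && isCjk(cps[i])) i++;
            if (i - start == 1) {
                // A lone CJK character cannot form a bigram; emit it alone.
                out.add(new String(cps, start, 1));
                continue;
            }
            for (int j = start; j < i; j++) {
                if (outputUnigrams) out.add(new String(cps, j, 1));
                if (j + 1 < i) out.add(new String(cps, j, 2));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(bigrams("\u4E2D\u65E5\u97E9", false)); // bigrams only
        System.out.println(bigrams("\u4E2D\u65E5\u97E9", true));  // unigrams interleaved
        System.out.println(bigrams("lucene\u4E2D\u6587", false)); // mixed Latin and CJK
    }
}
```

The real filter produces the same interleaving by alternating `flushUnigram()` and `flushBigram()` and rewinding `index`, which lets it work incrementally without knowing the length of the run in advance.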
