MySQL LIMIT optimization

2013-03-01 12:06


limit

http://www.zhenhua.org/article.asp?id=200

select * from table LIMIT 5,10; # returns rows 6-15

select * from table LIMIT 5; # returns the first 5 rows

select * from table LIMIT 0,5; # returns the first 5 rows
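The offset and count semantics above can be checked with a small script. This is only a sketch: it uses Python's built-in sqlite3 module as a stand-in for MySQL (SQLite accepts the same `LIMIT offset, count` form), and the table name `demo` is hypothetical. An ORDER BY is added because row order is otherwise not guaranteed.

```python
import sqlite3

# In-memory SQLite stands in for MySQL here; the table name `demo` is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE demo (id INTEGER PRIMARY KEY)")
conn.executemany("INSERT INTO demo (id) VALUES (?)", [(i,) for i in range(1, 21)])


def ids(sql):
    """Run a query and return the id column as a plain list."""
    return [row[0] for row in conn.execute(sql)]


# LIMIT offset, count: skip 5 rows, then return 10 (rows 6-15).
page = ids("SELECT id FROM demo ORDER BY id LIMIT 5, 10")
# LIMIT count: return the first 5 rows.
first_five = ids("SELECT id FROM demo ORDER BY id LIMIT 5")
# LIMIT 0, count behaves the same as LIMIT count.
also_first_five = ids("SELECT id FROM demo ORDER BY id LIMIT 0, 5")

print(page)
print(first_five == also_first_five)
```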

Performance optimization:

The high performance of LIMIT in MySQL 5.0 has made me rethink how to paginate data.

1.

Select * From cyclopedia Where ID>=(

Select Max(ID) From (

Select ID From cyclopedia Order By ID limit 90001

) As tmp

) limit 100;

2.

Select * From cyclopedia Where ID>=(

Select Max(ID) From (

Select ID From cyclopedia Order By ID limit 90000,1

) As tmp

) limit 100;

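Both statements fetch the 100 records that follow the first 90,000. Which is faster, statement 1 or statement 2?

Statement 1 first fetches the first 90,001 records, takes the largest ID among them as the starting marker, and then uses that ID to quickly locate the next 100 records.

Statement 2 instead fetches only the single record after the first 90,000 and uses its ID as the starting marker to locate the next 100 records.

Result of statement 1: 100 rows in set (0.23) sec

Result of statement 2: 100 rows in set (0.19) sec

Statement 2 clearly wins. It looks as though LIMIT does not simply scan the whole table and return offset+length records, as I had assumed, so LIMIT appears to perform noticeably better than TOP in MS-SQL.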

In fact, statement 2 can be simplified to

Select * From cyclopedia Where ID>=(

Select ID From cyclopedia limit 90000,1

)limit 100;

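This uses the ID of the record at offset 90,000 directly, without going through Max. In theory this should be slightly more efficient, but in practice the difference is almost invisible: the ID lookup already returns a single record, so Max has almost nothing to do. Still, this form is clearer and drops a superfluous step.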
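As a rough check that the subquery form returns the same page as a plain offset query, here is a minimal sketch. It assumes SQLite (via Python's sqlite3) in place of MySQL, a made-up `body` column, and a scaled-down offset of 900 instead of 90000, so it demonstrates equivalence of results only, not the timings quoted above. Both queries carry an explicit ORDER BY ID, since without it the row at a given offset is not guaranteed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# The `body` column is a hypothetical payload; only ID appears in the article.
conn.execute("CREATE TABLE cyclopedia (ID INTEGER PRIMARY KEY, body TEXT)")
# IDs have gaps (multiples of 3), so a row's position and its ID value differ.
conn.executemany(
    "INSERT INTO cyclopedia (ID, body) VALUES (?, ?)",
    [(i * 3, "row %d" % i) for i in range(1, 1001)],
)

OFFSET, PAGE = 900, 10  # scaled-down stand-ins for 90000 and 100

# Plain offset pagination over full rows.
plain = conn.execute(
    "SELECT * FROM cyclopedia ORDER BY ID LIMIT ? OFFSET ?", (PAGE, OFFSET)
).fetchall()

# Seek variant: find the starting ID on the key only, then range-scan from it.
seek = conn.execute(
    """
    SELECT * FROM cyclopedia
    WHERE ID >= (SELECT ID FROM cyclopedia ORDER BY ID LIMIT 1 OFFSET ?)
    ORDER BY ID
    LIMIT ?
    """,
    (OFFSET, PAGE),
).fetchall()

print(plain == seek, seek[0])
```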

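But since MySQL's LIMIT can directly control which records are fetched, why not simply use Select * From cyclopedia limit 90000,1? Wouldn't that be more concise?

That would be a mistake, as a quick test shows. The result is: 1 row in set (8.88) sec. Frightening, isn't it? It reminded me of the even worse "high score" I got yesterday on 4.1. Select * should not be used casually; select only what you actually need. The more columns you select and the larger the column data, the slower the query. Either of the two pagination approaches above is far better than this single statement. Although they appear to run more queries, they trade a small cost for much better performance, which is well worth it.

The first approach also works in MS-SQL, and may well be the best option there, because locating the starting point by the primary key ID is always the fastest.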

Select Top 100 * From cyclopedia Where ID>=(

Select Max(ID) From (

Select Top 90001 ID From cyclopedia Order By ID

) As tmp

)

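But whether this is implemented in a stored procedure or directly in application code, the bottleneck is always that MS-SQL's TOP has to return the first N records. This is barely noticeable with small data sets, but with millions or tens of millions of rows the efficiency is bound to suffer. By comparison, MySQL's LIMIT has a clear advantage. Executing: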

Select ID From cyclopedia limit 90000

Select ID From cyclopedia limit 90000,1

gives, respectively:

90000 rows in set (0.36) sec

1 row in set (0.06) sec

MS-SQL, on the other hand, can only use Select Top 90000 ID From cyclopedia, which takes 390 ms; even for the same operation it does not match MySQL's 360 ms.

http://explainextended.com/2009/10/23/mysql-order-by-limit-performance-late-row-lookups/


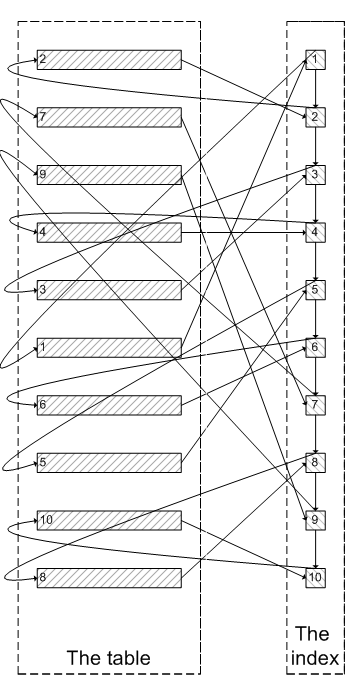

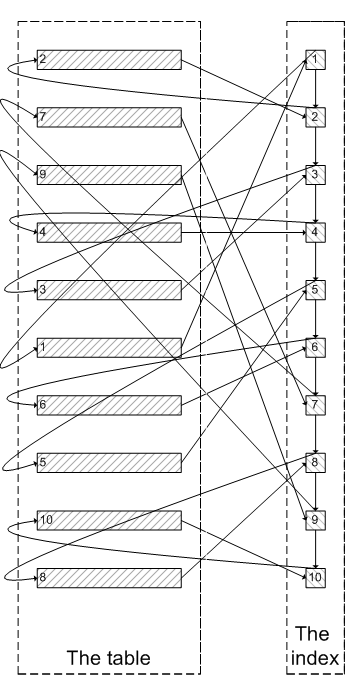


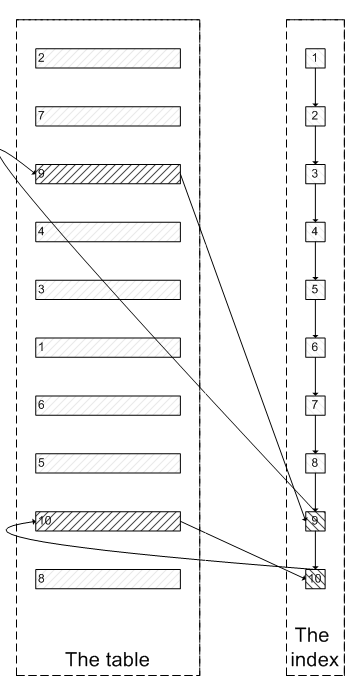

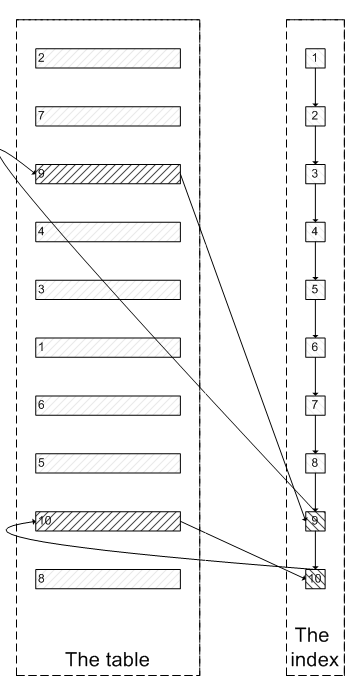



From Stack Overflow:

When I run an SQL command like the one below, it takes more than 15 seconds:

SELECT *
FROM news
WHERE cat_id = 4
ORDER BY id DESC
LIMIT 150000, 10

EXPLAIN shows that it's using where and the index on (cat_id, id).

LIMIT 20, 10 on the same query only takes several milliseconds.

This task can be reformulated like this: take the last 150,010 rows in id order and return the first 10 of them.

It means that though we only need 10 records we still need to count off the first 150,000.

The table has an index which keeps the records ordered. This allows us not to use a filesort. However, the query is still far from being efficient: 15 seconds for 150,000 records (which are already ordered) is way too much.

To better understand the reason behind the low performance let’s look into this picture:

As we already said before, there is an index created on the table. Logically, an index is a part of a table which is not even visible from the SQL side: all queries are issued against the table, not the index, and the optimizer decides whether to use the index or not.

However, physically, an index is a separate object in the database.

An index is a shadow copy of the table which stores some subset of the table’s data:

- Index key, i.e. the columns which the index is created on.

- Table pointer, that is, some value that uniquely identifies the row the record reflects. In InnoDB, it is the value of the PRIMARY KEY; in MyISAM, it is an offset in the .MYD file.

The index records are stored in a B-Tree structure which makes ref and range searching on them super fast.

However, the index itself does not contain all table’s data: only the subset we described above. To find the actual table values while preserving order, one needs to join the index and the table. That is, for each index record the engine should find the corresponding table record (using the row pointer) and return all non-indexed values from the table itself.

Here’s how it looks:

The process of fetching the table records corresponding to the index records is called row lookup. It is pictured by the curvy arrows connecting the index and the table.

Since the index records and the table records are located far away from each other in memory and on disk, the row lookup leads to many more page misses, cache misses and disk seeks than sequential access and is therefore quite costly. It takes much time to traverse all the connectors on the picture above.

If we do a plain query which returns all the records we of course need to fetch all the records and each row lookup is necessary.

But do we really need it when we use a LIMIT clause with the offset greater than 0?

If we did something like LIMIT 8, 2 we could just skip the first 8 values using the index and then return the remaining two:

We see that this is a much more efficient algorithm that will save us lots of row lookups.

This is called late row lookup: the engine should look a row up only if there is no way to avoid it. If there is a chance that a row will be filtered out by using the indexed fields only, it should be done before the rows are looked up in the actual MySQL table. There is no point in fetching the records out of the table just to discard them.
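The difference between the two strategies can be illustrated with a toy model. The sketch below is not how any storage engine is implemented: the `table` dict, the `index` list and both function names are made up for illustration, and the only thing it measures is how many row lookups each strategy performs for LIMIT 8, 2.

```python
# Toy model: the "table" maps a row pointer to a full row, and the "index"
# is a list of (key, row pointer) pairs kept in key order.
table = {ptr: {"id": ptr, "stuffing": "x" * 200} for ptr in range(1, 11)}
index = sorted((row["id"], ptr) for ptr, row in table.items())


def early_lookup(offset, count):
    """Fetch the table row for every index entry, then discard the offset."""
    lookups = 0
    rows = []
    for _key, ptr in index[: offset + count]:
        rows.append(table[ptr])  # row lookup happens before skipping
        lookups += 1
    return rows[offset:], lookups


def late_lookup(offset, count):
    """Skip the offset on the index alone, then fetch only the kept rows."""
    lookups = 0
    rows = []
    for _key, ptr in index[offset : offset + count]:
        rows.append(table[ptr])  # row lookup only for rows that are returned
        lookups += 1
    return rows, lookups


early_rows, early_count = early_lookup(8, 2)
late_rows, late_count = late_lookup(8, 2)
print(early_count, late_count)  # 10 lookups versus 2
```

Both functions return the same two rows; only the number of table accesses differs, which is the cost the article attributes to early row lookups.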

However, MySQL always does early row lookup: it searches for a row prior to checking values in the index, even if the optimizer decided to use the index.

Let’s create a sample table and try to reproduce this behavior:


This MyISAM table contains 200,000 records and has a PRIMARY KEY index on id. Each record is filled with 200 bytes of stuffing data.

Here’s the query to select values from 150,001 to 150,010:

SELECT id, value, LENGTH(stuffing) AS len
FROM t_limit
ORDER BY id
LIMIT 150000, 10

This query runs for almost 6 seconds, which is way too long.

It, however, uses a filesort which the optimizer considered more efficient than using the index. This would make sense if not for the stuffing field which is too long to be sorted efficiently. In this very case traversing the index would be faster.

Let’s try to force the index:

SELECT id, value, LENGTH(stuffing) AS len
FROM t_limit FORCE INDEX (PRIMARY)
ORDER BY id
LIMIT 150000, 10

Now it is only 1.23 seconds but still too long due to the early row lookups.

We, however, can trick MySQL into using late row lookups.

We will only select the id in the subquery with an ORDER BY and LIMIT and then join the original table back on id.

This will make each individual row lookup less efficient, since each join will require looking up the index value again. However, this is not much of a deal, and the total number of lookups will be reduced greatly, so an overall performance increase is expected:

SELECT l.id, value, LENGTH(stuffing) AS len
FROM (
    SELECT id
    FROM t_limit
    ORDER BY id
    LIMIT 150000, 10
) o
JOIN t_limit l
ON l.id = o.id
ORDER BY l.id

This is only 75 ms, or 16 times as fast as the previous query.

Note that we put an additional ORDER BY to the end of the query, since the order is not guaranteed to be preserved after the join. This resulted in an extra filesort in the plan. However, the actual plan used outputs the values already sorted and this filesort, therefore, will require only a single pass over 10 values which is instant.
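To confirm that the join rewrite returns exactly the same rows as the straightforward query, a small sketch follows. It runs on SQLite through Python's sqlite3 rather than on MySQL/MyISAM, and because the original table creation script is not shown, the column types and the reduced size (2,000 rows, offset 1,500) are assumptions. SQLite stores rows differently from MyISAM, so this checks the equivalence of the results only and says nothing about the timings above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical stand-in for t_limit: the original creation script is not shown,
# so the column types and the 2,000-row size are assumptions.
conn.execute(
    "CREATE TABLE t_limit (id INTEGER PRIMARY KEY, value TEXT, stuffing TEXT)"
)
conn.executemany(
    "INSERT INTO t_limit (id, value, stuffing) VALUES (?, ?, ?)",
    [(i, "Value %d" % i, "*" * 200) for i in range(1, 2001)],
)

# Straightforward form: offset and limit applied to the full rows.
direct = conn.execute(
    """
    SELECT id, value, LENGTH(stuffing) AS len
    FROM t_limit
    ORDER BY id
    LIMIT 1500, 10
    """
).fetchall()

# Deferred join: page through ids only, then join back for the other columns.
deferred = conn.execute(
    """
    SELECT l.id, value, LENGTH(stuffing) AS len
    FROM (
        SELECT id
        FROM t_limit
        ORDER BY id
        LIMIT 1500, 10
    ) o
    JOIN t_limit l ON l.id = o.id
    ORDER BY l.id
    """
).fetchall()

print(direct == deferred, deferred[0])
```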
