Installing OpenStack from Source, Step by Step, on CentOS 6.2

2012-10-19 16:41


Update May 3: added instructions for installing a compute node (originally shown in blue).

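OpenStack's Essex release is finally out, but it will probably be a while before it can be installed with yum on CentOS. So let's install OpenStack from source together; this also gives a clearer picture of how OpenStack fits together. I recently spent a long time installing and configuring the nova component and hit one error after another, which was very frustrating. Worst of all, I was testing OpenStack on CentOS 6.2 inside VMware Workstation, and CentOS 6.2 cannot use hardware virtualization inside a virtual machine (Ubuntu can). So I had to reinstall CentOS directly on the computer and start over. Nova is now installed, so I'm writing the process up here to share it.

This first part covers installing the operating system and its dependency packages. The next part covers installing each component from source, followed by configuring, running and testing the components. Enough talk. Let's Go!!!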

1. Operating system installation:

When installing the system, give the server a hostname; otherwise rabbitmq-server may fail to start later. See the figure below:

For the installation type, choose Desktop and select "Customize now" below it, as shown in the figure below:

Under Applications, select only Internet Browser. Among that group's optional packages, select only firefox, as shown below:

Leave Base System at its defaults. Under Databases, check both MySQL items; for the client, select only the python optional package, as shown below:

A compute node needs only the MySQL client, not the MySQL server.

Under Virtualization, check the top three items and deselect the optional packages of the second item, as shown below:

Leave everything not mentioned here at its defaults. After installation, log in and create your user and password; mine is ugyn.

2. Install dependency packages from the installation DVD

2.1 Add the current user to sudoers

[ugyn@cc ~]$ su

Password:

[root@cc ugyn]# echo 'ugyn ALL=(ALL) NOPASSWD:ALL' >> /etc/sudoers

[root@cc ugyn]# su ugyn

[ugyn@cc ~]$

2.2 Add the CentOS DVD image as a yum repo:

[ugyn@cc ~]$ sudo cp /etc/yum.repos.d/CentOS-Media.repo /etc/yum.repos.d/iso.repo

[ugyn@cc ~]$ sudo vim /etc/yum.repos.d/iso.repo

My final iso.repo contains:

[iso]

name=iso

baseurl=file:///media/iso/

gpgcheck=1

enabled=0

gpgkey=file:///media/iso/RPM-GPG-KEY-CentOS-6

Mount the image:

[ugyn@cc ~]$ sudo mkdir /media/iso

[ugyn@cc ~]$ sudo mount -o loop -t iso9660 Downloads/CentOS-6.2-x86_64-bin-DVD1.iso /media/iso

2.3 Remove python-crypto:

The installed version is too old. If it is not removed, the glance service will fail to start later.

[ugyn@cc ~]$ sudo yum --enablerepo=iso --disablerepo=base,updates,extras remove python-crypto

2.4 Install the dependencies available on the DVD:

[ugyn@cc ~]$ sudo yum --enablerepo=iso --disablerepo=base,updates,extras install gcc gcc-c++ python-devel python-setuptools libxml2-devel libxslt-devel mod_wsgi numpy

mod_wsgi is required for horizon and numpy is required for noVNC; a compute node needs neither package.

3. Install dependency packages from EPEL:

The qpid bug has been fixed; for details see:

https://review.openstack.org/#/c/6760/

https://github.com/openstack/glance/commit/5bed23cbc962d3c6503f0ff93e6d1e326efbd49d

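I will use qpid. This avoids installing the EPEL repository and the 60-odd packages that rabbitmq depends on. python-sqlite2, python-greenlet-devel and its dependency python-greenlet can simply be downloaded and installed by hand; I will bundle these files for download. If you use qpid, install python-qpid, python-sqlite2, python-greenlet-devel and python-greenlet, then go straight to step 4.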

Download page: http://dl.fedoraproject.org/pub/epel/6/x86_64/repoview/letter_p.group.html

Install:

sudo yum --enablerepo=iso --disablerepo=base,updates,extras install python-qpid

sudo rpm -ihv rpms/python-sqlite2-2.3.5-2.el6.x86_64.rpm rpms/python-greenlet-0.3.1-6.el6.x86_64.rpm rpms/python-greenlet-devel-0.3.1-6.el6.x86_64.rpm

3.1 Install the EPEL repository:

[ugyn@cc ~]$ sudo rpm -i http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-5.noarch.rpm

3.2 Install rabbitmq-server and the two other dependencies from EPEL:

[ugyn@cc ~]$ sudo yum install rabbitmq-server python-sqlite2 python-greenlet-devel

3.3 Start rabbitmq-server:

First stop qpidd. OpenStack can use qpidd, but in my tests uploading an image through glance failed with it (see here for details), while rabbitmq had no such problem.

[ugyn@cc ~]$ sudo chkconfig qpidd off && sudo service qpidd stop

[ugyn@cc ~]$ sudo chkconfig rabbitmq-server on && sudo service rabbitmq-server start

Set the password:

[ugyn@cc ~]$ sudo rabbitmqctl change_password guest service123

Note: make sure the hostname is set, otherwise rabbit may fail to start.

None of the following steps are needed when installing a compute node!!!

4. Start httpd:

[ugyn@cc ~]$ sudo chkconfig httpd on && sudo service httpd start

Starting httpd: httpd: apr_sockaddr_info_get() failed for cc.sigsit.org

httpd: Could not reliably determine the server's fully qualified domain name, using 127.0.0.1 for ServerName

[ OK ]

[ugyn@cc ~]$ sudo service httpd status

httpd (pid 28460) is running...

5. Start mysql:

[ugyn@cc ~]$ sudo chkconfig mysqld on && sudo service mysqld start

Edit the configuration:

[ugyn@cc ~]$ sudo vim /etc/my.cnf

Add the following two lines under the [mysqld] section:

character-set-server=utf8

default-storage-engine=InnoDB

Add the following two lines at the end of the file:

[mysql]

default-character-set=utf8

Restart mysql:

sudo service mysqld restart

Log in to mysql, delete the anonymous users and set the root password:

mysql -uroot

mysql> use mysql

mysql> delete from user where User="";

mysql> update user set Password=password('service123') where User='root';

Drop the test database:

mysql> drop database test;

Create the database for keystone:

mysql> create database keystone;

mysql> grant all privileges on keystone.* to 'keystone'@'%' identified by 'keystone';

Create the database for nova:

mysql> create database nova;

mysql> grant all privileges on nova.* to 'nova'@'%' identified by 'nova';

Create the database for glance:

mysql> create database glance;

mysql> grant all privileges on glance.* to 'glance'@'%' identified by 'glance';

Create the database for horizon:

mysql> create database horizon;

mysql> grant all privileges on horizon.* to 'horizon'@'%' identified by 'horizon';

Reload the privilege tables:

mysql> flush privileges;

mysql> quit
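The four create/grant pairs above differ only in the service name, so they could also be generated in one pass. This is a minimal sketch. It assumes, as in the steps above, that each service's database, MySQL user and password all equal the service name. `gen_openstack_sql` is a hypothetical helper name.

```shell
# Print CREATE DATABASE / GRANT statements for each service named on the
# command line, then FLUSH PRIVILEGES. Assumes database = user = password =
# service name, matching the manual steps above.
gen_openstack_sql() {
    for svc in "$@"; do
        printf "CREATE DATABASE %s;\n" "$svc"
        # %% prints a literal % (the MySQL "any host" wildcard)
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$svc" "$svc" "$svc"
    done
    printf "FLUSH PRIVILEGES;\n"
}

# Usage (prompts for the root password set above):
# gen_openstack_sql keystone nova glance horizon | mysql -uroot -p
```

Printing the SQL first, instead of running it directly, lets you review the statements before piping them into mysql.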

6. Configure the network bridge:

See here: /article/7944205.html

That's about it for the operating system. Now run virsh and virt-manager to check whether the host can use virtualization:

[ugyn@cc ~]$ sudo virsh version

Compiled against library: libvir 0.9.4

Using library: libvir 0.9.4

Using API: QEMU 0.9.4

Running hypervisor: QEMU 0.12.1

Note: virt-manager must be run from a graphical session logged in as root.
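This whole series depends on hardware virtualization (which is why I moved off VMware), so it can help to check the CPU flags first. This is a minimal sketch. Intel VT-x appears as the `vmx` flag and AMD-V as `svm` in /proc/cpuinfo. A missing flag means KVM cannot work here; the flag being present does not by itself prove that virtualization is enabled in the BIOS. `has_hw_virt` is a hypothetical helper name.

```shell
# Report whether the CPU advertises hardware virtualization.
# vmx = Intel VT-x, svm = AMD-V. Reads /proc/cpuinfo unless another file is given.
has_hw_virt() {
    cpuinfo="${1:-/proc/cpuinfo}"
    if grep -Eqw 'vmx|svm' "$cpuinfo"; then
        echo "yes"
    else
        echo "no"
    fi
}

# Usage: has_hw_virt
```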

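With the operating system ready, the next step is installing the components. In this part I describe how to install keystone, glance, nova, horizon and swift on a single machine. The steps are: download the source code, download and install each component's dependencies, then install each component.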

1. Download and extract the source code

Download the source code from the official sites:

https://launchpad.net/nova/

https://launchpad.net/glance/

https://launchpad.net/keystone/

https://launchpad.net/horizon/

https://launchpad.net/swift/

My directory layout is as follows:

[ugyn@cc install]$ ls -l

total 5984

-rw-r--r--. 1 ugyn ugyn 298898 Apr 8 14:18 glance-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 606289 Apr 10 19:15 horizon-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 186851 Apr 10 19:15 keystone-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 4359338 Apr 8 14:15 nova-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 95197 Mar 27 18:26 pip-1.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 48335 Apr 10 19:15 python-keystoneclient-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 85322 Apr 8 14:16 python-novaclient-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 421496 Apr 8 14:20 swift-1.4.8.tar.gz

-rw-r--r--. 1 ugyn ugyn 202 Apr 13 16:10 test

Extract:

[ugyn@cc install]$ tar zxpf keystone-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf glance-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf nova-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf python-novaclient-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf python-keystoneclient-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf horizon-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf swift-1.4.8.tar.gz
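The seven tar commands above can be collapsed into a loop. This is a minimal sketch, meant to run on the same install directory. It skips pip-1.1.tar.gz because pip is extracted and installed separately in the next step. `extract_sources` is a hypothetical helper name.

```shell
# Extract every *.tar.gz in DIR (default: current directory) into DIR,
# skipping pip, which is unpacked and installed on its own in step 2.
extract_sources() {
    dir="${1:-.}"
    for f in "$dir"/*.tar.gz; do
        [ -e "$f" ] || continue          # no tarballs: the glob stayed unexpanded
        case "$(basename "$f")" in
            pip-*) continue ;;
        esac
        tar zxpf "$f" -C "$dir"
    done
}

# Usage: cd ~/install && extract_sources
```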

2. Install pip:

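pip is a handy tool for installing Python libraries. Overall, installing OpenStack from source is fairly simple because every component has a similar layout. Each source tree contains a tools/pip-requires file listing the Python libraries the component depends on, so before installing a component, use pip to install those libraries.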

Download pip: http://pypi.python.org/packages/source/p/pip/pip-1.1.tar.gz#md5=62a9f08dd5dc69d76734568a6c040508

Install:

[ugyn@cc install]$ tar zxpf pip-1.1.tar.gz

[ugyn@cc install]$ cd pip-1.1 && sudo python setup.py install

3. Merge the pip-requires files and download the Python libraries:

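My goal is to be able to install OpenStack even without network access, so I want to download all the required libraries and install them locally. To do that, I merge the pip-requires files of all the components into one file, remove the duplicates, and then use pip to download every library. I will provide all of these files for download; if you use my download, you can skip this step.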

[ugyn@cc pip-1.1]$ cd ..

[ugyn@cc install]$ cat keystone-2012.1/tools/pip-requires glance-2012.1/tools/pip-requires nova-2012.1/tools/pip-requires horizon-2012.1/tools/pip-requires python-novaclient-2012.1/tools/pip-requires python-keystoneclient-2012.1/tools/pip-requires | grep "^[a-zA-Z]" | sort -u > pip-requires

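Edit pip-requires to remove duplicates. Where a library appears more than once, keep only the entry with the most specific version requirement. Also remove the components we are installing here ourselves, such as glance and swift.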
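Spotting duplicates by eye in a long list is error-prone. This is a minimal sketch that prints every package name appearing more than once in the merged file, ignoring case and version specifiers. It only reports the duplicates; choosing which line to keep is still the manual decision described above. `find_dup_reqs` is a hypothetical helper name.

```shell
# Print package names that occur more than once in a requirements file.
# Version specifiers (==, >=, <=, !=, <, >) are stripped and names are
# lower-cased first, so "WebOb==1.0.8" and "webob" count as duplicates.
find_dup_reqs() {
    sed -e 's/[<>=!].*//' -e 's/[[:space:]]*$//' "$1" \
        | tr '[:upper:]' '[:lower:]' \
        | sort | uniq -d
}

# Usage: find_dup_reqs pip-requires
```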

Download the dependencies once so they can be reused:

[ugyn@cc install]$ mkdir pipdowns

[ugyn@cc install]$ pip install -r pip-requires -d pipdowns --no-install

4. Install the dependency libraries:

Install the following ones first, because other libraries need them during installation:

[ugyn@cc install]$ sudo pip install ./pipdowns/Markdown-2.1.1.tar.gz ./pipdowns/nose-1.1.2.tar.gz ./pipdowns/pycrypto-2.3.tar.gz ./pipdowns/six-1.1.0.tar.gz ./pipdowns/Tempita-0.5.1.tar.gz

Install the remaining libraries:

[ugyn@cc install]$ sudo pip install ./pipdowns/*

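Check that everything is installed. test is a simple script I wrote: no output means all is well, otherwise it prints the names of the missing libraries. I will package all the software for download; my goal is to be able to install OpenStack on a machine with no network access at all.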

[ugyn@cc install]$ ./test
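The author's test script itself is not shown here. As a rough stand-in, this sketch compares the names in pip-requires with a saved `pip freeze` listing and prints any that are missing. Like the original, it prints nothing when everything is installed. It assumes that package names in `pip freeze` match those in pip-requires apart from case, which will not hold for every package (for example `-` versus `_`). `missing_reqs` is a hypothetical helper name.

```shell
# Print requirements from REQFILE that do not appear in FREEZEFILE
# (a saved "pip freeze" listing). Names are compared case-insensitively
# after stripping version specifiers; no output means everything is installed.
missing_reqs() {
    reqfile="$1"; freezefile="$2"
    installed=$(sed -e 's/[<>=!].*//' "$freezefile" | tr '[:upper:]' '[:lower:]')
    sed -e 's/[<>=!].*//' -e 's/[[:space:]]*$//' "$reqfile" | while read -r name; do
        [ -n "$name" ] || continue
        lower=$(printf '%s' "$name" | tr '[:upper:]' '[:lower:]')
        printf '%s\n' "$installed" | grep -qxF "$lower" || echo "$name"
    done
}

# Usage:
# pip freeze > /tmp/installed.txt
# missing_reqs pip-requires /tmp/installed.txt
```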

5. Install each component:

[ugyn@cc install]$ cd keystone-2012.1 && sudo python setup.py install

[ugyn@cc keystone-2012.1]$ cd ../glance-2012.1 && sudo python setup.py install

[ugyn@cc glance-2012.1]$ cd ../nova-2012.1 && sudo python setup.py install

[ugyn@cc nova-2012.1]$ cd ../python-novaclient-2012.1 && sudo python setup.py install

[ugyn@cc python-novaclient-2012.1]$ cd ../python-keystoneclient-2012.1 && sudo python setup.py install

[ugyn@cc python-keystoneclient-2012.1]$ cd ../horizon-2012.1 && sudo python setup.py install

[ugyn@cc horizon-2012.1]$ cd ../swift-1.4.8 && sudo python setup.py install

That completes the installation. Next I'll describe configuring, running and testing each component...

Much of this article is the same as my earlier post on installing keystone; if you have read that one, you can just skim this.

1. Configuration

You can also follow http://docs.openstack.org/trunk/openstack-compute/install/content/keystone-conf-file.html for the configuration.

1.1 Copy the default configuration files:

[ugyn@cc swift-1.4.8]$ cd ../keystone-2012.1 && sudo cp -R etc /etc/keystone

[ugyn@cc keystone-2012.1]$ sudo chown -R ugyn:ugyn /etc/keystone

[ugyn@cc keystone-2012.1]$ mv /etc/keystone/logging.conf.sample /etc/keystone/logging.conf

1.2 Edit /etc/keystone/keystone.conf:

Generate a random token:

[ugyn@cc keystone-2012.1]$ openssl rand -hex 10

7d97448231c0a2bac8a3

[ugyn@cc keystone-2012.1]$ vim /etc/keystone/keystone.conf

Set admin_token to the generated token.

Change: #log_config = ./etc/logging.conf.sample

to:

log_config = /etc/keystone/logging.conf

Change:

[sql]

connection = sqlite:///keystone.db

idle_timeout = 200

to:

[sql]

connection = mysql://keystone:keystone@localhost/keystone

idle_timeout = 200

min_pool_size = 5

max_pool_size = 10

pool_timeout = 200

Change:

[catalog]

driver = keystone.catalog.backends.templated.TemplatedCatalog

template_file = ./etc/default_catalog.templates

to:

[catalog]

driver = keystone.catalog.backends.sql.Catalog

Change:

[token]

driver = keystone.token.backends.kvs.Tokens

to:

[token]

driver = keystone.token.backends.sql.Token

Change:

[ec2]

driver = keystone.contrib.ec2.backends.kvs.Ec2

to:

[ec2]

driver = keystone.contrib.ec2.backends.sql.Ec2

To make running client commands easier, create the following file (~/.openstackrc) and source it:

export SERVICE_TOKEN=7d97448231c0a2bac8a3

export SERVICE_ENDPOINT=http://127.0.0.1:35357/v2.0

export OS_USERNAME=nova

export OS_PASSWORD=service123

export OS_TENANT_NAME=service

export OS_AUTH_URL=http://127.0.0.1:5000/v2.0

Note: these user credentials are created by the script in section 2.3 below. With SERVICE_TOKEN and SERVICE_ENDPOINT set, any keystone command can be run, so be careful.

Run:

[ugyn@cc keystone-2012.1]$ source ~/.openstackrc
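The same token has to appear in both keystone.conf and ~/.openstackrc, so the two edits can be scripted together. This is a minimal sketch with several assumptions:

- The admin_token line in keystone.conf may be commented out or set to a placeholder. If the file has no such line at all, the sed leaves it unchanged.
- The token comes from `od` reading /dev/urandom, which gives 20 hex characters, the same length as `openssl rand -hex 10`.
- The credentials written to the rc file are the ones used throughout this guide.

`setup_admin_token` is a hypothetical helper name.

```shell
# Generate a 20-hex-character admin token, write it into keystone.conf,
# and write a matching client rc file. Paths default to the ones used above.
setup_admin_token() {
    conf="${1:-/etc/keystone/keystone.conf}"
    rc="${2:-$HOME/.openstackrc}"
    token=$(od -An -tx1 -N10 /dev/urandom | tr -d ' \n')

    # Replace an existing (possibly commented-out) admin_token line.
    sed -i "s/^#\{0,1\} *admin_token *=.*/admin_token = $token/" "$conf"

    cat > "$rc" <<EOF
export SERVICE_TOKEN=$token
export SERVICE_ENDPOINT=http://127.0.0.1:35357/v2.0
export OS_USERNAME=nova
export OS_PASSWORD=service123
export OS_TENANT_NAME=service
export OS_AUTH_URL=http://127.0.0.1:5000/v2.0
EOF
    chmod 600 "$rc"    # the token grants full keystone admin access
    echo "$token"
}

# Usage: setup_admin_token && source ~/.openstackrc
```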

2. Running

2.1 On the first run, create the database tables:

[ugyn@cc keystone-2012.1]$ keystone-manage db_sync

2.2 Run keystone:

Open a new terminal or run it in the background:

[ugyn@cc Desktop]$ keystone-all

2.3 Create the initial tenants, users, roles, services and endpoints:

Edit tools/sample_data.sh and add the following at the top of the file:

[ugyn@cc keystone-2012.1]$ vim tools/sample_data.sh

# Set the admin password:

ADMIN_PASSWORD=admin123

# Set the service password:

SERVICE_PASSWORD=service123

# To create endpoints, add:

ENABLE_ENDPOINTS=true

# To create the swift-related user and service:

ENABLE_SWIFT=true

# To create the quantum-related user and service, add:

#ENABLE_QUANTUM=true

Run the script:

[ugyn@cc keystone-2012.1]$ sudo tools/sample_data.sh

3. Testing

3.1 List the users just created

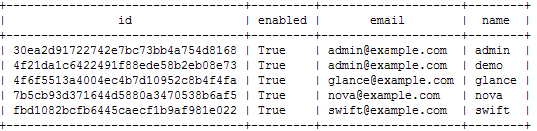

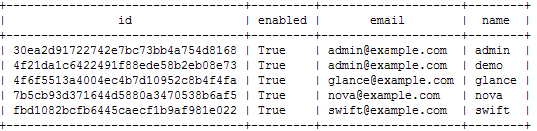

[ugyn@cc keystone-2012.1]$ keystone user-list

3.2 List the tenants just created

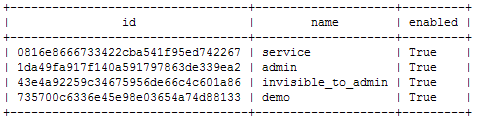

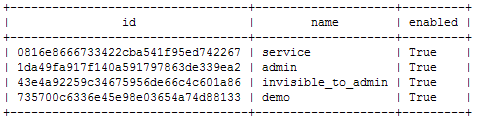

[ugyn@cc keystone-2012.1]$ keystone tenant-list

For more operations, run the following command and experiment on your own:

[ugyn@cc keystone-2012.1]$ keystone help

Most of this article repeats my earlier post on installing and configuring glance; if you have read that one, just skim this.

1. Configuration

1.1 Copy the default configuration files:

[ugyn@cc keystone-2012.1]$ cd ../glance-2012.1 && sudo cp -R etc /etc/glance

[ugyn@cc glance-2012.1]$ sudo chown -R ugyn:ugyn /etc/glance

1.2 Configure glance to use keystone authentication:

In /etc/glance/glance-api-paste.ini, set the following options to the values configured in keystone:

[filter:authtoken]

admin_tenant_name = service

admin_user = glance

admin_password = service123

Append to the end of /etc/glance/glance-api.conf:

[paste_deploy]

flavor = keystone

In /etc/glance/glance-registry-paste.ini, set the following options to the values configured in keystone:

[filter:authtoken]

admin_tenant_name = service

admin_user = glance

admin_password = service123

Append to the end of /etc/glance/glance-registry.conf:

[paste_deploy]

flavor = keystone
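It is easy to append this section twice by accident. This is a minimal sketch that adds [paste_deploy] to each file only if the file does not already contain that section. `add_paste_flavor` is a hypothetical helper name.

```shell
# Append "[paste_deploy] / flavor = keystone" to each given glance config file,
# skipping files that already contain a [paste_deploy] section.
add_paste_flavor() {
    for conf in "$@"; do
        if grep -q '^\[paste_deploy\]' "$conf"; then
            echo "skip: $conf already has [paste_deploy]"
            continue
        fi
        printf '\n[paste_deploy]\nflavor = keystone\n' >> "$conf"
    done
}

# Usage:
# add_paste_flavor /etc/glance/glance-api.conf /etc/glance/glance-registry.conf
```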

1.3 Set glance's MySQL connection:

In /etc/glance/glance-registry.conf, set:

sql_connection = mysql://glance:glance@localhost/glance

1.4 Configure glance's messaging server:

In /etc/glance/glance-api.conf, change:

notifier_strategy = noop

to:

notifier_strategy = rabbit

Also change:

rabbit_password = guest

to:

rabbit_password = service123

I now use qpid as the messaging server, so I set notifier_strategy = qpid and left the other qpid options unchanged.

1.5 Configure glance storage:

Leave this unchanged for now and use the default file store; I will change it once swift is configured.

1.6 Create directories:

[ugyn@cc glance-2012.1]$ sudo mkdir /var/log/glance

[ugyn@cc glance-2012.1]$ sudo mkdir /var/lib/glance

[ugyn@cc glance-2012.1]$ sudo chown -R ugyn:ugyn /var/log/glance

[ugyn@cc glance-2012.1]$ sudo chown -R ugyn:ugyn /var/lib/glance

2. Running

[ugyn@cc glance-2012.1]$ sudo glance-control all start

Replace start with restart or stop to restart or stop glance.

3. Testing

3.1 Upload images:

Download the image: http://smoser.brickies.net/ubuntu/ttylinux-uec/ttylinux-uec-amd64-12.1_2.6.35-22_1.tar.gz

Extract:

[ugyn@cc glance-2012.1]$ cd ~/image

[ugyn@cc image]$ tar xzvf ttylinux-uec-amd64-12.1_2.6.35-22_1.tar.gz

Upload the kernel:

[ugyn@cc image]$ glance add name="tty-linux-kernel" disk_format=aki container_format=aki < ttylinux-uec-amd64-12.1_2.6.35-22_1-vmlinuz

Output:

Uploading image 'tty-linux-kernel'

===============================================================================================[100%] 13.9M/s, ETA 0h 0m 0s

Added new image with ID: d83d5227-64ba-4541-adce-4c5442d9eecc

Upload the initrd (ramdisk):

[ugyn@cc image]$ glance add name="tty-linux-ramdisk" disk_format=ari container_format=ari < ttylinux-uec-amd64-12.1_2.6.35-22_1-loader

Output:

Uploading image 'tty-linux-ramdisk'

=========================================================================================[100%] 935.456035K/s, ETA 0h 0m 0s

Added new image with ID: b7b783f9-7007-453b-9236-242765136898

Upload the image. Note that kernel_id and ramdisk_id here are the IDs returned by the previous two uploads.

[ugyn@cc image]$ glance add name="tty-linux" disk_format=ami container_format=ami kernel_id=d83d5227-64ba-4541-adce-4c5442d9eecc ramdisk_id=b7b783f9-7007-453b-9236-242765136898 < ttylinux-uec-amd64-12.1_2.6.35-22_1.img

Output:

Uploading image 'tty-linux'

===============================================================================================[100%] 79.6M/s, ETA 0h 0m 0s

Added new image with ID: fbd05c73-7bea-4af6-a0d5-9c7581569792
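Copying the kernel and ramdisk IDs by hand is the error-prone part of this step. This is a minimal sketch that extracts the ID from the "Added new image with ID:" line shown in the outputs above, so the three uploads can be chained. It assumes the glance client prints exactly that line. `image_id` is a hypothetical helper name.

```shell
# Extract the image ID from "glance add" output read on stdin.
image_id() {
    sed -n 's/^Added new image with ID: *//p'
}

# Usage (same files and options as above):
# P=ttylinux-uec-amd64-12.1_2.6.35-22_1
# KID=$(glance add name="tty-linux-kernel" disk_format=aki container_format=aki < $P-vmlinuz | image_id)
# RID=$(glance add name="tty-linux-ramdisk" disk_format=ari container_format=ari < $P-loader | image_id)
# glance add name="tty-linux" disk_format=ami container_format=ami \
#     kernel_id=$KID ramdisk_id=$RID < $P.img
```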

3.2 View the uploaded images:

For other glance operations, see glance --help.

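That's it for glance. Next I'll cover configuring, running and testing nova. I ran into all kinds of errors along the way, but fortunately I got it running in the end. After that I'll tidy up the related files and upload them to CSDN for everyone to download.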

1. Configuration

1.1 Copy the default configuration files:

[ugyn@cc image]$ cd ~/install/nova-2012.1

[ugyn@cc nova-2012.1]$ sudo cp -R etc/nova /etc/nova

[ugyn@cc nova-2012.1]$ sudo chown -R ugyn:ugyn /etc/nova

1.2 Create /etc/nova/nova.conf

Copy the configuration from http://docs.openstack.org/trunk/openstack-compute/install/content/nova-conf-file.html into it and adjust the relevant options.

My nova.conf is as follows:

[DEFAULT]

# LOGS/STATE

verbose=True

# AUTHENTICATION

auth_strategy=keystone

# SCHEDULER

compute_scheduler_driver=nova.scheduler.filter_scheduler.FilterScheduler

# VOLUMES

volume_group=nova-volumes

volume_name_template=volume-%08x

iscsi_helper=tgtadm

# DATABASE

sql_connection=mysql://nova:nova@10.17.23.95/nova

# COMPUTE

libvirt_type=kvm

connection_type=libvirt

instances_path=/home/ugyn/instances

instance_name_template=instance-%08x

api_paste_config=/etc/nova/api-paste.ini

allow_resize_to_same_host=True

# APIS

osapi_compute_extension=nova.api.openstack.compute.contrib.standard_extensions

ec2_dmz_host=10.17.23.95

s3_host=10.17.23.95

# RABBITMQ

rabbit_host=10.17.23.95

rabbit_userid=guest

rabbit_password=service123

# GLANCE

image_service=nova.image.glance.GlanceImageService

glance_api_servers=10.17.23.95:9292

# NETWORK

network_manager=nova.network.manager.FlatDHCPManager

force_dhcp_release=True

dhcpbridge_flagfile=/etc/nova/nova.conf

firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

my_ip=10.17.23.95

public_interface=br100

vlan_interface=eth0

flat_network_bridge=br100

flat_interface=eth0

fixed_range=10.0.0.0/24

# NOVNC CONSOLE

novncproxy_base_url=http://10.17.23.95:6080/vnc_auto.html

xvpvncproxy_base_url=http://10.17.23.95:6081/console

vncserver_proxyclient_address=127.0.0.1

vncserver_listen=127.0.0.1

bindir=/usr/bin

Besides the usual changes, I added two parameters. instances_path points at a directory in my home folder. bindir is important: without it, nova-network has problems starting.

Create that directory: [ugyn@cc nova-2012.1]$ mkdir ~/instances

By default nova uses rabbitmq as its messaging server. To use qpid instead, replace the RABBITMQ options with:

#QPID

rpc_backend=nova.rpc.impl_qpid

I left the other qpid options at their defaults. If you need to set them, see the following part of the sample configuration file:

1.3 Configure keystone authentication for nova:

[ugyn@cc nova-2012.1]$ vim /etc/nova/api-paste.ini

Change:

admin_tenant_name = %SERVICE_TENANT_NAME%

admin_user = %SERVICE_USER%

admin_password = %SERVICE_PASSWORD%

to:

admin_tenant_name = service

admin_user = nova

admin_password = service123

1.4 Create the volume group for storage volumes:

[ugyn@cc nova-2012.1]$ mkdir /home/ugyn/novaimages

[ugyn@cc nova-2012.1]$ dd if=/dev/zero of=/home/ugyn/novaimages/nova-volumes.img bs=1M seek=100k count=0

[ugyn@cc nova-2012.1]$ sudo vgcreate nova-volumes $(sudo losetup --show -f /home/ugyn/novaimages/nova-volumes.img)
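The dd command above writes no data. With count=0 it only seeks, so bs=1M seek=100k produces a sparse file. GNU dd reads the k suffix as 1024, so the apparent size is 100 × 1024 × 1 MiB = 100 GiB, while almost no disk space is used until volumes are written. The sketch below reproduces the effect at a small scale, on a temporary file rather than the real volume image, as a quick sanity check.

```shell
# Create a 10 MiB sparse file the same way as the nova-volumes image:
# count=0 copies nothing and seek=10 moves 10 x 1 MiB past the start,
# so the apparent size is 10 MiB while almost no blocks are allocated.
img=$(mktemp)
dd if=/dev/zero of="$img" bs=1M seek=10 count=0 2>/dev/null
size=$(wc -c < "$img" | tr -d ' ')
echo "apparent size: $size bytes"    # 10 * 1024 * 1024
rm -f "$img"
```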

1.5 Initialize the database:

[ugyn@cc nova-2012.1]$ nova-manage db sync

1.6 Fixing timeout errors when starting nova-network and nova-volume:

https://lists.launchpad.net/openstack/msg02565.html

2. Running

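First run each of nova-api, nova-cert, nova-compute, nova-network, nova-objectstore, nova-scheduler and nova-volume in its own terminal and check that each one runs normally. Once they all work, stop them and start them together with nova-all.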

3. Testing

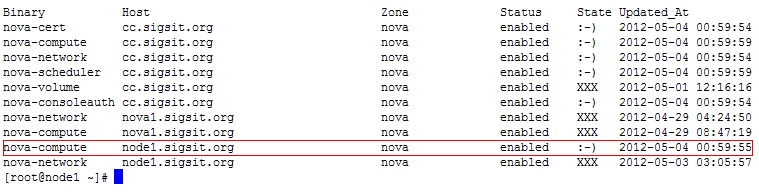

3.1 Check the status of each service:

[ugyn@cc nova-2012.1]$ nova-manage service list

2012-04-20 17:11:26 DEBUG nova.utils [req-12fde12c-11e2-4861-8e53-03af10832e4b None None] backend <module 'nova.db.sqlalchemy.api' from '/usr/lib/python2.6/site-packages/nova-2012.1-py2.6.egg/nova/db/sqlalchemy/api.pyc'> from (pid=1095) __get_backend /usr/lib/python2.6/site-packages/nova-2012.1-py2.6.egg/nova/utils.py:658

2012-04-20 17:11:26 WARNING nova.utils [req-12fde12c-11e2-4861-8e53-03af10832e4b None None] /usr/lib64/python2.6/site-packages/sqlalchemy/pool.py:681: SADeprecationWarning: The 'listeners' argument to Pool (and create_engine()) is deprecated. Use event.listen().

Pool.__init__(self, creator, **kw)

2012-04-20 17:11:26 WARNING nova.utils [req-12fde12c-11e2-4861-8e53-03af10832e4b None None] /usr/lib64/python2.6/site-packages/sqlalchemy/pool.py:159: SADeprecationWarning: Pool.add_listener is deprecated. Use event.listen()

self.add_listener(l)

Binary Host Zone Status State Updated_At

nova-cert cc.sigsit.org nova enabled :-) 2012-04-20 09:11:24

nova-compute cc.sigsit.org nova enabled :-) 2012-04-20 09:11:22

nova-network cc.sigsit.org nova enabled :-) 2012-04-20 09:11:23

nova-scheduler cc.sigsit.org nova enabled :-) 2012-04-20 09:11:23

nova-volume cc.sigsit.org nova enabled :-) 2012-04-20 09:11:21

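Note: :-) means the service is running. XXX means the service started but then failed and stopped. A service that has never started successfully does not appear in this list at all.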
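With many services, an XXX in the table is easy to miss. This is a minimal sketch that filters the output above and prints only the services whose State column is not :-). It assumes the column layout shown (Binary Host Zone Status State Updated_At) and skips the log lines and header that precede the table. `down_services` is a hypothetical helper name.

```shell
# Read "nova-manage service list" output on stdin and print
# "binary@host" for every service whose State column is not ":-)".
down_services() {
    awk '$1 ~ /^nova-/ && NF >= 5 && $5 != ":-)" { print $1 "@" $2 }'
}

# Usage: nova-manage service list 2>/dev/null | down_services
```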

3.2 Create the network:

[ugyn@cc nova-2012.1]$ nova-manage network create private 10.0.0.0/24 1 256 --bridge=br100

3.3 View information:

For more, run nova help to see the available commands.

3.4 Launch an instance:

Create a key pair:

[ugyn@cc nova-2012.1]$ cd

Disable SELinux first, otherwise the key pair cannot be created:

[ugyn@cc ~]$ sudo vim /etc/sysconfig/selinux

Set: SELINUX=disabled
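The edit above can also be made non-interactively. On CentOS 6, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config. GNU `sed -i` replaces a symlink with a regular file unless `--follow-symlinks` is given, so this sketch edits the real file, as the compute-node section later does. `disable_selinux_config` is a hypothetical helper name; its path argument exists only so it can be tried on a copy first.

```shell
# Set SELINUX=disabled in the SELinux config file (takes effect after a reboot).
# ^SELINUX= does not match the SELINUXTYPE= line.
disable_selinux_config() {
    conf="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage (as root): disable_selinux_config && setenforce 0
```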

To apply it without rebooting, run:

[ugyn@cc ~]$ sudo setenforce 0

[ugyn@cc ~]$ nova keypair-add mykey > oskey.priv

[ugyn@cc ~]$ chmod 600 oskey.priv

Create the instance:

[ugyn@cc ~]$ nova boot myserver --flavor 1 --key_name mykey --image tty-linux

Check the instance status with nova show myserver or nova list. Once the status is ACTIVE, you can connect to it via ssh.
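Instead of re-running nova show by hand, the status can be polled. This is a minimal sketch. It assumes `nova show` prints its usual two-column table containing a `status` row. `wait_for_active` and its default retry count and interval are hypothetical choices.

```shell
# Poll "nova show NAME" until its status row reads ACTIVE.
# Returns 0 on ACTIVE, 1 on ERROR or after TRIES attempts.
wait_for_active() {
    name="$1"; tries="${2:-30}"; interval="${3:-5}"
    i=0
    while [ "$i" -lt "$tries" ]; do
        status=$(nova show "$name" | awk -F'|' '$2 ~ /^ *status *$/ { gsub(/ /, "", $3); print $3 }')
        case "$status" in
            ACTIVE) echo "$name is ACTIVE"; return 0 ;;
            ERROR)  echo "$name is in ERROR state" >&2; return 1 ;;
        esac
        i=$((i + 1))
        sleep "$interval"
    done
    echo "timed out waiting for $name" >&2
    return 1
}

# Usage: wait_for_active myserver && nova list
```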

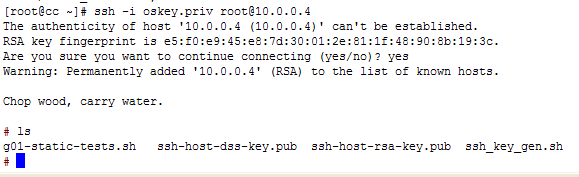

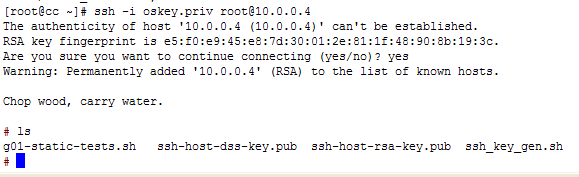

Log in to the instance via ssh:

[ugyn@cc ~]$ ssh -i oskey.priv root@10.0.0.2

View the instance's console log:

[ugyn@cc ~]$ nova console-log myserver

Delete the instance:

[ugyn@cc ~]$ nova delete myserver

Confirm that it has been deleted:

[ugyn@cc ~]$ nova list

Update May 4: added noVNC configuration

1. Configuration

1.1 Create the configuration file:

[ugyn@cc ~]$ vim install/horizon-2012.1/openstack_dashboard/local/local_settings.py

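Copy the sample configuration from http://docs.openstack.org/trunk/openstack-compute/install/content/local-settings-py-file.html into it, change the database settings, and add the following line below USE_SSL = False:

SECRET_KEY = 'elj1IWiLoWHgcyYxVLj7cMrGOxWl0'

Change some of its characters at random.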
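Instead of changing a few characters of the sample key by hand, you can generate a fresh random key. This is a minimal sketch. The 50-character length and the alphanumeric alphabet are my own choices, not requirements taken from the horizon documentation. `gen_secret_key` is a hypothetical helper name.

```shell
# Print a random 50-character alphanumeric string for SECRET_KEY.
gen_secret_key() {
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c 50
    echo
}

# Usage: echo "SECRET_KEY = '$(gen_secret_key)'"
```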

Copy the file into the horizon installation directory:

[ugyn@cc ~]$ sudo cp install/horizon-2012.1/openstack_dashboard/local/local_settings.py /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/openstack_dashboard/local/local_settings.py

1.2 Create the horizon configuration file for httpd

[ugyn@cc ~]$ sudo vim /etc/httpd/conf.d/horizon.conf

Contents:


<VirtualHost *:80>

WSGIScriptAlias / /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/openstack_dashboard/wsgi/django.wsgi

WSGIDaemonProcess horizon user=apache group=apache processes=3 threads=10 home=/usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg

SetEnv APACHE_RUN_USER apache

SetEnv APACHE_RUN_GROUP apache

WSGIProcessGroup horizon

DocumentRoot /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/.blackhole/

Alias /media /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/openstack_dashboard/static

<Directory />

Options FollowSymLinks

AllowOverride None

</Directory>

<Directory /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/>

Options Indexes FollowSymLinks MultiViews

AllowOverride None

Order allow,deny

allow from all

</Directory>

ErrorLog /var/log/httpd/horizon_error.log

LogLevel warn

CustomLog /var/log/httpd/horizon_access.log combined

</VirtualHost>

WSGISocketPrefix /var/run/httpd

Create the directory: sudo mkdir /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/.blackhole

1.3 Initialize the database:

[ugyn@cc ~]$ install/horizon-2012.1/manage.py syncdb

DEBUG:openstack_dashboard.settings:Running in debug mode without debug_toolbar.

/usr/lib/python2.6/site-packages/django/conf/__init__.py:75: DeprecationWarning: The ADMIN_MEDIA_PREFIX setting has been removed; use STATIC_URL instead.

"use STATIC_URL instead.", DeprecationWarning)

/usr/lib/python2.6/site-packages/django/conf/__init__.py:110: DeprecationWarning: The SECRET_KEY setting must not be empty.

warnings.warn("The SECRET_KEY setting must not be empty.", DeprecationWarning)

/usr/lib/python2.6/site-packages/django/utils/translation/__init__.py:63: DeprecationWarning: Translations in the project directory aren't supported anymore. Use the LOCALE_PATHS setting instead.

DeprecationWarning)

/usr/lib/python2.6/site-packages/django/core/cache/__init__.py:82: DeprecationWarning: settings.CACHE_* is deprecated; use settings.CACHES instead.

DeprecationWarning

/usr/lib/python2.6/site-packages/nose/plugins/manager.py:405: UserWarning: Module wsgiref was already imported from /usr/lib64/python2.6/wsgiref/__init__.pyc, but /usr/lib/python2.6/site-packages is being added to sys.path

import pkg_resources

DEBUG:nose.plugins.manager:DefaultPluginManager load plugin xcover = nosexcover:XCoverage

DEBUG:django.db.backends:(0.000) SHOW TABLES; args=()

Creating tables ...

Creating table django_session

DEBUG:django.db.backends:(0.096) CREATE TABLE `django_session` (

`session_key` varchar(40) NOT NULL PRIMARY KEY,

`session_data` longtext NOT NULL,

`expire_date` datetime NOT NULL

)

;; args=()

Installing custom SQL ...

Installing indexes ...

DEBUG:django.db.backends:(0.176) CREATE INDEX `django_session_c25c2c28` ON `django_session` (`expire_date`);; args=()

DEBUG:django.db.backends:(0.000) SET foreign_key_checks=0; args=()

DEBUG:django.db.backends:(0.000) SET foreign_key_checks=1; args=()

Installed 0 object(s) from 0 fixture(s)

2. Running

Restart httpd

[ugyn@cc ~]$ sudo service httpd restart

Stopping httpd: [ OK ]

Starting httpd: Warning: DocumentRoot [/usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/.blackhole/] does not exist

httpd: apr_sockaddr_info_get() failed for cc.sigsit.org

httpd: Could not reliably determine the server's fully qualified domain name, using 127.0.0.1 for ServerName

[ OK ]

Stop nova-api and start it again

3. Testing

Login page:

Image list:

Creating an instance:

4. Configure noVNC

Reference: http://docs.openstack.org/trunk/openstack-compute/install/content/ch_install-dashboard.html

4.1 Configuration

The VNC settings live in nova.conf; the following was already added near the end of the file:

# NOVNC CONSOLE

novncproxy_base_url=http://10.61.2.88:6080/vnc_auto.html

xvpvncproxy_base_url=http://10.61.2.88:6081/console

vncserver_proxyclient_address=10.61.2.88

vncserver_listen=10.61.2.88

On a compute node, vncserver_listen must be set to the compute node's own IP. Otherwise instances fail to start with the error libvirtError: operation failed: failed to retrieve chardev info in qemu with 'info chardev'.

4.2 Start nova-consoleauth

noVNC relies on nova-consoleauth to validate tokens, and nova-all does not start nova-consoleauth, so it has to be started separately.

4.3 Install the noVNC proxy

The Essex release does not include nova-novncproxy, so we have to download and install it ourselves.

Source: https://github.com/cloudbuilders/noVNC

Extract it to: /var/lib/noVNC

Make sure numpy is installed; if it is not, run:

sudo yum --enablerepo=iso --disablerepo=base,updates,extras install numpy

Change to the directory: /var/lib/noVNC

Run: utils/nova-novncproxy --flagfile=/etc/nova/nova.conf

You must run it from /var/lib/noVNC, because it uses the current directory as its web root; otherwise the paths will be wrong.

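That's all that is needed, but the results on my setup were not ideal. Sometimes there was only a black box with nothing visible; sometimes the content showed but mouse and keyboard input did not work.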

(7) Installing and configuring the compute node

Update May 4: added compute-node-related software

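Update Sept 25: the qemu I compiled turned out to be incompatible; for details see Installing OpenStack from Source, Step by Step, on CentOS 6.2 (7): Installing and Configuring the Compute Node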

Download:

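http://download.csdn.net/detail/ugyn109/4258774 (please choose the updated version below instead; if you have already downloaded this version, you can download just the separate noderpms package)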

Updated version:

http://download.csdn.net/detail/ugyn109/4276300 This version mainly adds the qemu-related packages needed on a compute node (in the noderpms folder) and the noVNC software.

To avoid downloading everything again, I also provide noderpms separately: http://download.csdn.net/detail/ugyn109/4275380

Goal:

Provide all the software needed to install the complete OpenStack stack on machines with no network access at all.

Notes:

The download package contains the following:

where:

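etc: all the configuration files from my currently working setup

pipdowns: all the Python libraries the components depend on

rpms: the three packages needed from the EPEL repository. rabbitmq and its dependencies are not included; if you plan to use rabbitmq rather than qpid as the AMQP messaging server, download and install them yourself as described in my earlier notes

*.gz: source code of the OpenStack components

pip-requires: the list of Python libraries the components depend on, used by pip to download and install them; see Installing OpenStack from Source, Step by Step, on CentOS 6.2 (2): Component Installation

test: a script that checks whether everything in pipdowns has been installed

noderpms: added in the updated version; packages for installing the qemu-related software on a compute node. The compute node needs qemu-nbd, which is part of qemu-common, but CentOS does not ship that package. I therefore built the RPMs from Fedora 13, whose qemu version is closest to the one in CentOS. For installation see Installing OpenStack from Source, Step by Step, on CentOS 6.2 (7): Installing and Configuring the Compute Node

noVNC: the software for the nova-novncproxy service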

Update Aug 28:

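The qemu-common installation in step 5 is problematic: the qemu I built from the F13 source is not very compatible. First, a Windows VM I created with virt-manager fails with an error as soon as it tries to start after creation. Second, suspending an instance from the dashboard fails with an error.

I therefore recommend using guestfs for metadata injection. It only needs three packages, libguestfs, libguestfs-mount and fuse, which are in the "Virtualization Tools" group. They are easy to install and you don't need to build the nbd module, so steps 4 and 5 can be skipped. Reportedly, however, guestfs is slower than nbd. If you still want to use nbd, see http://jamyy.dyndns.org/blog/2012/02/3582.html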

1. Preparing the operating system

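For preparing the operating system, see Installing OpenStack from Source, Step by Step, on CentOS 6.2 (1): Operating System Preparation. The parts relevant to the compute node are marked in blue there.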

2. Time synchronization, firewall and SELinux

2.1 Time synchronization

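The compute node has to communicate with the controller node, so keeping their clocks in sync is important. This requires configuring an NTP server.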

2.1.1 Configure and start ntp on the controller node

[root@cc ~]# vim /etc/ntp.conf

Below:

# Hosts on local network are less restricted.

#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

add the network you want to serve time to. Mine is:

restrict 10.61.2.0 mask 255.255.255.0 nomodify notrap

Uncomment the following two lines:

server 127.127.1.0 # local clock

fudge 127.127.1.0 stratum 10

[root@cc ~]# vim /etc/sysconfig/ntpd

Append:

SYNC_HWCLOCK=yes

so that the time is also written to the hardware clock (BIOS).

Start ntp:

[root@cc ~]# chkconfig ntpd on && service ntpd start

2.1.2 Configure and start ntp on the compute node

[root@node1 ~]# vim /etc/ntp.conf

Replace these three lines:

server 0.centos.pool.ntp.org

server 1.centos.pool.ntp.org

server 2.centos.pool.ntp.org

with:

server 10.61.2.88

This IP is your controller node's IP.

[root@node1 ~]# vim /etc/sysconfig/ntpd

Append:

SYNC_HWCLOCK=yes

so that the time is also written to the hardware clock (BIOS).

Start ntp:

[root@node1 ~]# chkconfig ntpd on && service ntpd start
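To check whether a node has actually synchronized, look at `ntpq -p`: ntpq marks the peer ntpd is currently synchronized to with a leading `*`, and it can take a few minutes before one appears. This is a minimal sketch that prints that peer. It assumes the standard two header lines of `ntpq -p` output. `ntp_sync_peer` is a hypothetical helper name.

```shell
# Print the peer ntpd is currently synchronized to, i.e. the line that
# "ntpq -p" marks with a leading '*'. Prints nothing if not yet synced.
ntp_sync_peer() {
    awk 'NR > 2 && substr($0, 1, 1) == "*" { print substr($1, 2) }'
}

# Usage: ntpq -pn | ntp_sync_peer    # on node1, expect the controller's IP
```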

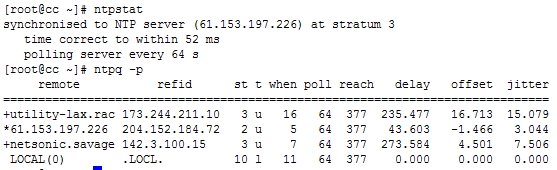

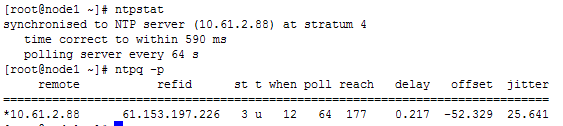

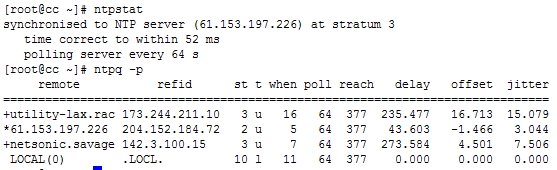

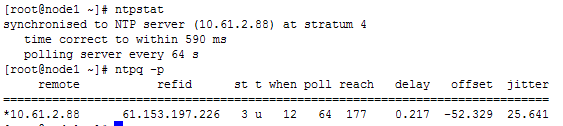

2.1.3 Check the synchronization after a while

Controller node:

Compute node:

2.2 Firewall configuration

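Each of my machines uses a single NIC on the local network 10.61.2.0/24, so for simplicity I accept all packets from local IPs. CentOS loads the filter rules in /etc/sysconfig/iptables at boot, so I edit that file to add one simple rule. This must be done on both the controller and the compute nodes.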

[root@node1 ~]# vim /etc/sysconfig/iptables

After:

-A INPUT -m state --state NEW -m tcp -p tcp --dport 22 -j ACCEPT

add:

-A INPUT -s 10.61.2.0/24 -j ACCEPT

The file is only read at boot, so this rule takes effect after a reboot. To apply it right away, run:

[root@node1 ~]# iptables -I INPUT 9 -s 10.61.2.0/24 -j ACCEPT

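The number is the position at which the rule is inserted. I placed it right after this rule:

ACCEPT tcp -- anywhere anywhere state NEW tcp dpt:ssh

To check where that rule sits, run:

[root@node1 ~]# iptables -L

Positions are counted from 1.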
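`iptables -L INPUT -n --line-numbers` prints the position numbers directly. This is a minimal sketch that finds the SSH rule in that listing and prints the position to pass to `iptables -I INPUT`. It assumes the rule looks like the one above; with -n the port appears as dpt:22 rather than dpt:ssh. `pos_after_ssh` is a hypothetical helper name.

```shell
# Read "iptables -L INPUT -n --line-numbers" on stdin and print the position
# just after the "tcp dpt:22" ACCEPT rule (its line number + 1).
pos_after_ssh() {
    awk '$2 == "ACCEPT" && /tcp dpt:22( |$)/ { print $1 + 1; exit }'
}

# Usage:
# pos=$(iptables -L INPUT -n --line-numbers | pos_after_ssh)
# iptables -I INPUT "$pos" -s 10.61.2.0/24 -j ACCEPT
```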

2.3 Disable SELinux

SELinux causes some permission problems, so I chose to disable it:

[root@node1 ~]# setenforce 0

[root@node1 ~]# vim /etc/selinux/config

Set SELINUX=disabled

3. Component installation

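For installing the components, see Installing OpenStack from Source, Step by Step, on CentOS 6.2 (2): Component Installation. On the compute node we install only nova, glance and python-novaclient; the latter two are dependencies of nova.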

4. Build the nbd module

Reference: http://jamyy.dyndns.org/blog/2012/02/3582.html

Install kernel-devel and kernel-headers:

yum --enablerepo=iso --disablerepo=base,updates,extras install kernel-devel kernel-headers

Install the kernel source code:

wget ftp://ftp.redhat.com/pub/redhat/linux/enterprise/6ComputeNode/en/os/SRPMS/kernel-2.6.32-220.el6.src.rpm

rpm -ihv kernel-2.6.32-220.el6.src.rpm

Back up the existing tree:

cd /usr/src/kernels/

mv $(uname -r) $(uname -r)-old

Extract the source:

tar jxpf /root/rpmbuild/SOURCES/linux-2.6.32-220.el6.tar.bz2 -C ./

mv linux-2.6.32-220.el6 $(uname -r)

Build:

cd $(uname -r)

make mrproper

cp ../$(uname -r)-old/Module.symvers ./

cp /boot/config-$(uname -r) ./.config

make oldconfig

make prepare

make scripts

make CONFIG_BLK_DEV_NBD=m M=drivers/block

cp drivers/block/nbd.ko /lib/modules/$(uname -r)/kernel/drivers/block/

depmod -a

Note: the nbd module is not loaded automatically at boot; it has to be loaded with modprobe nbd. How can it be loaded automatically at boot?

To load it at boot, append this line to /etc/rc.d/rc.local: modprobe nbd
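If this step is repeated, for example while retrying the build, rc.local can end up with several copies of the line. This is a minimal sketch that appends a line only when it is not already present. `append_once` is a hypothetical helper name. It assumes the target file ends with a newline, as rc.local normally does.

```shell
# Append LINE to FILE only if that exact line is not already present.
append_once() {
    line="$1"; file="$2"
    grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root): append_once 'modprobe nbd' /etc/rc.d/rc.local
```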

5. Install qemu-common

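The compute node also needs the qemu-nbd tool, which is part of qemu-common. Unfortunately CentOS does not include qemu-common, so I took the qemu source RPM from F13, whose version is closest to CentOS's, and built it myself. Installing qemu-common still conflicted with the installed qemu-kvm, so I first removed qemu-kvm and qemu-img and then reinstalled them. The installation then turned out to depend on seabios-bin, which also had to be built from a source RPM...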

5.1 Build qemu and seabios

I provide prebuilt packages for download; if you use them, go straight to 5.3. The download details are in the post OpenStack-Related Software Downloads and Notes.

5.1.1 Install the build dependencies

Some packages are on CentOS-6.2-x86_64-bin-DVD2.iso, so mount that image as well and update iso.repo:

[root@node1 ~]# mkdir /media/iso2

[root@node1 ~]# mount -o loop -t iso9660 ~/CentOS-6.2-x86_64-bin-DVD2.iso /media/iso2

[root@node1 ~]# vim /etc/yum.repos.d/iso.repo

[iso]

name=iso

baseurl=file:///media/iso/
        file:///media/iso2/

gpgcheck=1

enabled=0

gpgkey=file:///media/iso/RPM-GPG-KEY-CentOS-6

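The only addition is the file:///media/iso2/ line (originally highlighted in red). It is a continuation of baseurl, so it must be indented and must directly follow the baseurl line.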

Install the dependencies:

[root@node1 ~]# yum --enablerepo=iso --disablerepo=base,updates,extras install rpm-build glib2-devel SDL-devel texi2html gnutls-devel cyrus-sasl-devel libaio-devel pciutils-devel pulseaudio-libs-devel ncurses-devel iasl

5.2 Install the qemu and seabios source RPMs and build them

Reference: http://wiki.xen.org/wiki/RHEL6_Xen4_Tutorial

Download:

[root@node1 ~]# wget http://archives.fedoraproject.org/pub/archive/fedora/linux/releases/13/Everything/source/SRPMS/qemu-0.12.3-8.fc13.src.rpm

[root@node1 ~]# wget ftp://ftp.redhat.com/pub/redhat/linux/enterprise/6ComputeNode/en/os/SRPMS/seabios-0.6.1.2-8.el6.src.rpm (building this one did not produce seabios-bin, so I switched to the SRPM below)

[root@node1 ~]# wget http://archives.fedoraproject.org/pub/fedora/linux/releases/16/Everything/source/SRPMS/seabios-0.6.2-3.fc16.src.rpm

Install:

[root@node1 ~]# rpm -ihv qemu-0.12.3-8.fc13.src.rpm

[root@node1 ~]# rpm -ihv seabios-0.6.2-3.fc16.src.rpm

Build:

[root@node1 ~]# cd rpmbuild/SPECS/

[root@node1 SPECS]# rpmbuild -bb qemu.spec

Build output:

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-kvm-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-img-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-common-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-user-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-system-x86-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-system-ppc-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-system-sparc-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-system-arm-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-system-mips-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-system-cris-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-system-m68k-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-system-sh4-0.12.3-8.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/x86_64/qemu-kvm-tools-0.12.3-8.el6.x86_64.rpm

[root@node1 SPECS]# rpmbuild -bb seabios.spec

Build output:

Wrote: /root/rpmbuild/RPMS/x86_64/seabios-0.6.2-3.el6.x86_64.rpm

Wrote: /root/rpmbuild/RPMS/noarch/seabios-bin-0.6.2-3.el6.noarch.rpm

5.3 Reinstall qemu

Remove the old versions:

[root@node1 SPECS]# yum --enablerepo=iso --disablerepo=base,updates,extras remove qemu-img qemu-kvm seabios

Install the new versions:

[root@node1 SPECS]# rpm -ihv /root/rpmbuild/RPMS/noarch/seabios-bin-0.6.2-3.el6.noarch.rpm

[root@node1 SPECS]# rpm -ihv /root/rpmbuild/RPMS/x86_64/seabios-0.6.2-3.el6.x86_64.rpm

[root@node1 SPECS]# rpm -ihv /root/rpmbuild/RPMS/x86_64/qemu-common-0.12.3-8.el6.x86_64.rpm

[root@node1 SPECS]# rpm -ihv /root/rpmbuild/RPMS/x86_64/qemu-system-x86-0.12.3-8.el6.x86_64.rpm

[root@node1 SPECS]# rpm -Uhv /root/rpmbuild/RPMS/x86_64/qemu-img-0.12.3-8.el6.x86_64.rpm

[root@node1 SPECS]# rpm -Uhv /root/rpmbuild/RPMS/x86_64/qemu-kvm-0.12.3-8.el6.x86_64.rpm

[root@node1 SPECS]# yum --enablerepo=iso --disablerepo=base,updates,extras install libvirt virt-who

Start libvirtd:

[root@node1 SPECS]# chkconfig libvirtd on && service libvirtd start

6. Run nova-compute

The compute node only needs to run nova-compute. You can also run nova-network there, but then you need to add a policy.json file under /etc/nova.

Copy nova.conf from the controller node to /etc/nova/nova.conf.

In it, set my_ip to the compute node's IP.

In it, set vncserver_listen to the compute node's IP.
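The two edits above can be applied to the copied file in one step. This is a minimal sketch that rewrites only the my_ip and vncserver_listen lines and leaves everything else, such as rabbit_host and sql_connection, pointing at the controller. `set_node_ip` is a hypothetical helper name, and `<compute-node-ip>` in the usage line is a placeholder.

```shell
# Point my_ip and vncserver_listen in a copied nova.conf at this node's IP.
set_node_ip() {
    ip="$1"; conf="${2:-/etc/nova/nova.conf}"
    sed -i -e "s/^my_ip=.*/my_ip=$ip/" \
           -e "s/^vncserver_listen=.*/vncserver_listen=$ip/" "$conf"
}

# Usage (as root): set_node_ip <compute-node-ip>
```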

I haven't set up swift yet, so instances are stored on the local file system and a local directory is needed. It is specified in nova.conf with instances_path=/home/instances:

[root@node1 ~]# mkdir /home/instances

Run:

[root@node1 ~]# nova-compute

7. Testing

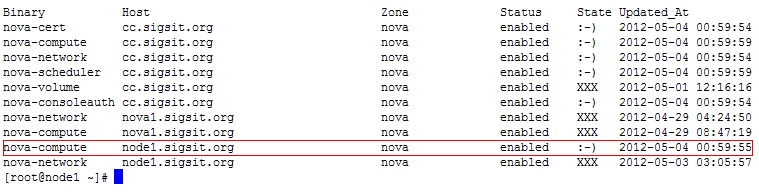

7.1 Check whether nova-compute has started

[root@node1 ~]# nova-manage service list

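Note: on the controller node I start the services with nova-all, but nova-all does not start nova-compute or nova-consoleauth. To create instances on the controller node you have to start nova-compute separately, and to access instances over VNC you also have to start nova-consoleauth for authentication. My controller node did not mount nova-volumes after a reboot, so nova-volume did not start, but that does not matter here. The two nova-compute entries and the nova-network entry on host node1 can be ignored; they are left over from a previous installation.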

7.2 Create an instance through horizon

You can now connect to the instance over ssh from the controller node:

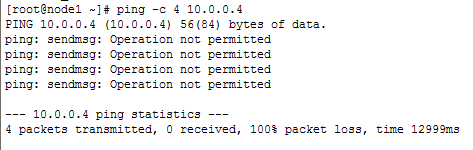

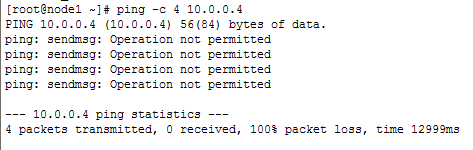

However, I could not ping the instance from my compute node:

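OpenStack Installation and Configuration

Preparing the environment

OS: Ubuntu 12.04 64-bit

During OS installation, install ssh and dns

Set the system time: ntpdate <IP or NTP server hostname>, then hwclock -w

Firewall: ufw disable

Change the system language: edit /etc/default/locale, then reboot

Starting the OpenStack installation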

#Update the machine using the following commands

sudo apt-get update

sudo apt-get upgrade

#Install bridge-utils

sudo apt-get install bridge-utils

#Network configuration (/etc/network/interfaces)

#The primary network interface

auto eth0

iface eth0 inet static

address 192.168.3.97

netmask 255.255.255.0

gateway 192.168.3.1

# Bridge network interface for VM networks

auto br100

iface br100 inet static

address 192.168.100.1

netmask 255.255.255.0

bridge_stp off

bridge_fd 0

brctl addbr br100

/etc/init.d/networking restart

#NTP Server

apt-get install ntp

vi /etc/ntp.conf

server ntp.ubuntu.com

server 127.127.1.0

fudge 127.127.1.0 stratum 10

service ntp restart

#Mysql

apt-get install mysql-server python-mysqldb

#Create the root password for mysql. The password used in this guide is "password"

vi /etc/mysql/my.cnf

bind-address = 0.0.0.0

service mysql restart

#Creating Databases

#about nova databases

mysql -uroot -ppassword -e 'CREATE DATABASE nova;'

mysql -uroot -ppassword -e 'CREATE USER nova;'

mysql -uroot -ppassword -e "GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%';"

mysql -uroot -ppassword -e "SET PASSWORD FOR 'nova'@'%' =PASSWORD('password');"

#about glance databases

mysql -uroot -ppassword -e 'CREATE DATABASE glance;'

mysql -uroot -ppassword -e 'CREATE USER glance;'

mysql -uroot -ppassword -e "GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%';"

mysql -uroot -ppassword -e "SET PASSWORD FOR 'glance'@'%' =PASSWORD('password');"

#about keystone databases

mysql -uroot -ppassword -e 'CREATE DATABASE keystone;'

mysql -uroot -ppassword -e 'CREATE USER keystone;'

mysql -uroot -ppassword -e "GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%';"

mysql -uroot -ppassword -e "SET PASSWORD FOR 'keystone'@'%' =PASSWORD('password');"
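The four statements above are repeated for nova, glance and keystone (and again for dash further down). A small sketch that prints them for any service name, assuming the same root password and per-service password "password" used in this guide; service_db_sql is a hypothetical helper and only prints SQL, it does not run mysql:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the CREATE/GRANT/SET PASSWORD statements used above
# for one service. $1 = database and user name, $2 = that user's password.
service_db_sql() {
    local name="$1" pass="$2"
    cat <<EOF
CREATE DATABASE ${name};
CREATE USER ${name};
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'%';
SET PASSWORD FOR '${name}'@'%' = PASSWORD('${pass}');
EOF
}

# Example (not executed here): feed all three services to one mysql session.
#   for svc in nova glance keystone; do service_db_sql "$svc" password; done \
#       | mysql -uroot -ppassword
```

Piping everything into a single mysql session means the root password is typed once instead of twelve times.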

#Keystone

apt-get install keystone python-keystone python-keystoneclient

vi /etc/keystone/keystone.conf

admin_token = admin

connection = mysql://keystone:password@192.168.3.97/keystone

service keystone restart

keystone-manage db_sync

export SERVICE_ENDPOINT="http://localhost:35357/v2.0"

export SERVICE_TOKEN=admin

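#Optionally put the two exports above in /etc/profile or /root/.bashrc so they persist across logins, then reload:

source /etc/profile

source /root/.bashrc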

#Creating Tenants

keystone tenant-create --name admin

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | None |

| enabled | True |

| id | 71afa7f265a043baaf35c94c526f6fe6 |

| name | admin |

+-------------+----------------------------------+

keystone tenant-create --name service

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | None |

| enabled | True |

| id | 9b154b36eba44e6faa243cbe31cd505e |

| name | service |

+-------------+----------------------------------+

#create users

keystone user-create --name admin --pass admin --email admin@foobar.com

keystone user-create --name nova --pass nova --email nova@foobar.com

keystone user-create --name glance --pass glance --email glance@foobar.com

keystone user-create --name swift --pass swift --email swift@foobar.com

The output is as follows:

root@oak-controller:~# keystone user-create --name admin --pass admin --email admin@foobar.com

+----------+-------------------------------------------------------------------------------------------------------------------------+

| Property | Value |

+----------+-------------------------------------------------------------------------------------------------------------------------+

| email | admin@foobar.com |

| enabled | True |

| id | e7865fd421764554a15418467d530d9f |

| name | admin |

| password | $6$rounds=40000$Tl1Ll7lr2A7PKV2w$Jf4VNEPGpIQg2xefYHTCU1Ou4eKpvTpVtdbJ8q2WJ426hdS2onr4YQcdkGJOvyVtR6tA3KN.TiL57Rdlo.B2M1 |

| tenantId | None |

+----------+-------------------------------------------------------------------------------------------------------------------------+

root@oak-controller:~# keystone user-create --name nova --pass nova --email nova@foobar.com

+----------+-------------------------------------------------------------------------------------------------------------------------+

| Property | Value |

+----------+-------------------------------------------------------------------------------------------------------------------------+

| email | nova@foobar.com |

| enabled | True |

| id | 16b969fb9f3a470581141d601e61beeb |

| name | nova |

| password | $6$rounds=40000$2hIzWJsygWb9ebna$TtXRFGcGsBoJSE9rO1R5Dg9o27EGwvK7LDZuNVSsA.vVgcriyXkBzqIrfm8pF3qNfgaImbvxqdBNtWYbbejpt. |

| tenantId | None |

+----------+-------------------------------------------------------------------------------------------------------------------------+

root@oak-controller:~# keystone user-create --name glance --pass glance --email glance@foobar.com

+----------+-------------------------------------------------------------------------------------------------------------------------+

| Property | Value |

+----------+-------------------------------------------------------------------------------------------------------------------------+

| email | glance@foobar.com |

| enabled | True |

| id | 0846f49915e34af2b2711daa2276600b |

| name | glance |

| password | $6$rounds=40000$9n/XT3tA4Va/Yw5q$A9YHhm5.A4I5sf8o55FZHZinRoNPfb/7jNpEYpizG2Pfa3r97faysrGePtXaaaST46CrTaJWbUCCG49wBjt6n1 |

| tenantId | None |

+----------+-------------------------------------------------------------------------------------------------------------------------+

root@oak-controller:~# keystone user-create --name swift --pass swift --email swift@foobar.com

+----------+-------------------------------------------------------------------------------------------------------------------------+

| Property | Value |

+----------+-------------------------------------------------------------------------------------------------------------------------+

| email | swift@foobar.com |

| enabled | True |

| id | 89d985c3654a49edbbaf098b044d4d97 |

| name | swift |

| password | $6$rounds=40000$1S5h0kE6JpNxx73b$.Nf8wwfRO3/UFI58WAo77ASdp93vtEiyrWyL160prVWyPnp5VMlUnNaJKLyxnahagxBXX87Y4KbhfvWU87E2d0 |

| tenantId | None |

+----------+-------------------------------------------------------------------------------------------------------------------------+

# Creating Roles

keystone role-create --name admin

keystone role-create --name Member

The output is as follows:

root@oak-controller:~# keystone role-create --name admin

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| id | 54f31e5da56e4c26ad4febce9ff7b017 |

| name | admin |

+----------+----------------------------------+

root@oak-controller:~# keystone role-create --name Member

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| id | 6d763be492464f9396bbaf02fbfff753 |

| name | Member |

+----------+----------------------------------+

#Listing Tenants, Users and Roles

root@oak-controller:~# keystone tenant-list

+----------------------------------+---------+---------+

| id | name | enabled |

+----------------------------------+---------+---------+

| 71afa7f265a043baaf35c94c526f6fe6 | admin | True |

| 9b154b36eba44e6faa243cbe31cd505e | service | True |

+----------------------------------+---------+---------+

root@oak-controller:~# keystone user-list

+----------------------------------+---------+-------------------+--------+

| id | enabled | email | name |

+----------------------------------+---------+-------------------+--------+

| 0846f49915e34af2b2711daa2276600b | True | glance@foobar.com | glance |

| 16b969fb9f3a470581141d601e61beeb | True | nova@foobar.com | nova |

| 89d985c3654a49edbbaf098b044d4d97 | True | swift@foobar.com | swift |

| e7865fd421764554a15418467d530d9f | True | admin@foobar.com | admin |

+----------------------------------+---------+-------------------+--------+

root@oak-controller:~# keystone role-list

+----------------------------------+--------+

| id | name |

+----------------------------------+--------+

| 54f31e5da56e4c26ad4febce9ff7b017 | admin |

| 6d763be492464f9396bbaf02fbfff753 | Member |

+----------------------------------+--------+

#command example

keystone user-role-add --user $USER_ID --role $ROLE_ID --tenant_id $TENANT_ID

#To add a role of 'admin' to the user 'admin' of the tenant 'admin'

keystone user-role-add --user e7865fd421764554a15418467d530d9f --role 54f31e5da56e4c26ad4febce9ff7b017 --tenant_id 71afa7f265a043baaf35c94c526f6fe6

#The following commands will add a role of 'admin' to the users 'nova', 'glance' and 'swift' of the tenant 'service'.

keystone user-role-add --user 0846f49915e34af2b2711daa2276600b --role 54f31e5da56e4c26ad4febce9ff7b017 --tenant_id 9b154b36eba44e6faa243cbe31cd505e

keystone user-role-add --user 16b969fb9f3a470581141d601e61beeb --role 54f31e5da56e4c26ad4febce9ff7b017 --tenant_id 9b154b36eba44e6faa243cbe31cd505e

keystone user-role-add --user 89d985c3654a49edbbaf098b044d4d97 --role 54f31e5da56e4c26ad4febce9ff7b017 --tenant_id 9b154b36eba44e6faa243cbe31cd505e

#add a role 'Member' to user 'admin' of tenant 'admin'

keystone user-role-add --user e7865fd421764554a15418467d530d9f --role 6d763be492464f9396bbaf02fbfff753 --tenant_id 71afa7f265a043baaf35c94c526f6fe6
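Copying the 32-character IDs by hand is error-prone. The following is a small sketch, assuming the ASCII-table layout printed by keystone tenant-list, user-list and role-list above; keystone_id_by_name is a hypothetical helper that only parses text and does not call keystone itself:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a keystone tenant-list / user-list / role-list
# table on stdin and print the id of the row whose "name" column equals $1.
# It locates the "id" and "name" columns from the header row, so it copes
# with user-list putting "name" last.
keystone_id_by_name() {
    awk -F'|' -v want="$1" '
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        /^\|/ {
            if (!idcol) {                  # first "|" row is the header
                for (i = 2; i < NF; i++) {
                    h = trim($i)
                    if (h == "id") idcol = i
                    if (h == "name") namecol = i
                }
                next
            }
            if (namecol && trim($namecol) == want) print trim($idcol)
        }'
}

# Example (not executed here):
#   USER_ID=$(keystone user-list | keystone_id_by_name admin)
#   ROLE_ID=$(keystone role-list | keystone_id_by_name admin)
#   TENANT_ID=$(keystone tenant-list | keystone_id_by_name admin)
#   keystone user-role-add --user $USER_ID --role $ROLE_ID --tenant_id $TENANT_ID
```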

# Creating Services

#command example

keystone service-create --name service_name --type service_type --description 'Description of the service'

keystone service-create --name nova --type compute --description 'OpenStack Compute Service'

keystone service-create --name volume --type volume --description 'OpenStack Volume Service'

keystone service-create --name glance --type image --description 'OpenStack Image Service'

keystone service-create --name swift --type object-store --description 'OpenStack Storage Service'

keystone service-create --name keystone --type identity --description 'OpenStack Identity Service'

keystone service-create --name ec2 --type ec2 --description 'EC2 Service'

keystone service-list

root@oak-controller:~# keystone service-list

+----------------------------------+----------+--------------+----------------------------+

| id | name | type | description |

+----------------------------------+----------+--------------+----------------------------+

| 368283bcbbf2488d94c8e8f8f6899ee4 | keystone | identity | OpenStack Identity Service |

| 50ab784afa0e4583ad78578d089678e9 | swift | object-store | OpenStack Storage Service |

| 63b3a3c91869439cabfc2fdd9a793dde | volume | volume | OpenStack Volume Service |

| b07ac3f65cb34bbe97d717be3f60c45a | glance | image | OpenStack Image Service |

| dcf501c58c314e06a99b3639782d5cee | ec2 | ec2 | EC2 Service |

| e828bd8bc67b490eaa10911168f82c3b | nova | compute | OpenStack Compute Service |

+----------------------------------+----------+--------------+----------------------------+

#Creating Endpoints

#command example

keystone endpoint-create --region region_name --service_id service_id --publicurl public_url --adminurl admin_url --internalurl internal_url

#nova-compute

keystone endpoint-create --region myregion --service_id e828bd8bc67b490eaa10911168f82c3b --publicurl 'http://192.168.3.97:8774/v2/$(tenant_id)s' --adminurl 'http://192.168.3.97:8774/v2/$(tenant_id)s' --internalurl 'http://192.168.3.97:8774/v2/$(tenant_id)s'

#nova-volume

keystone endpoint-create --region myregion --service_id 63b3a3c91869439cabfc2fdd9a793dde --publicurl 'http://192.168.3.97:8776/v1/$(tenant_id)s' --adminurl 'http://192.168.3.97:8776/v1/$(tenant_id)s' --internalurl 'http://192.168.3.97:8776/v1/$(tenant_id)s'

#glance

keystone endpoint-create --region myregion --service_id b07ac3f65cb34bbe97d717be3f60c45a --publicurl 'http://192.168.3.97:9292/v1' --adminurl 'http://192.168.3.97:9292/v1' --internalurl 'http://192.168.3.97:9292/v1'

#swift

keystone endpoint-create --region myregion --service_id 50ab784afa0e4583ad78578d089678e9 --publicurl 'http://192.168.3.97:8080/v1/AUTH_$(tenant_id)s' --adminurl 'http://192.168.3.97:8080/v1' --internalurl 'http://192.168.3.97:8080/v1/AUTH_$(tenant_id)s'

#keystone

keystone endpoint-create --region myregion --service_id 368283bcbbf2488d94c8e8f8f6899ee4 --publicurl http://192.168.3.97:5000/v2.0 --adminurl http://192.168.3.97:35357/v2.0 --internalurl http://192.168.3.97:5000/v2.0

#ec2

keystone endpoint-create --region myregion --service_id dcf501c58c314e06a99b3639782d5cee --publicurl http://192.168.3.97:8773/services/Cloud --adminurl http://192.168.3.97:8773/services/Admin --internalurl http://192.168.3.97:8773/services/Cloud

#Glance

apt-get install glance glance-api glance-client glance-common glance-registry python-glance

#Glance Configuration

vi /etc/glance/glance-api-paste.ini

admin_tenant_name = service

admin_user = glance

admin_password = glance

vi /etc/glance/glance-registry-paste.ini

admin_tenant_name = service

admin_user = glance

admin_password = glance

vi /etc/glance/glance-registry.conf

sql_connection = mysql://glance:password@192.168.3.97/glance

[paste_deploy]

flavor = keystone

vi /etc/glance/glance-api.conf

[paste_deploy]

flavor = keystone

glance-manage version_control 0

glance-manage db_sync

restart glance-api

restart glance-registry

export SERVICE_TOKEN=admin

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_AUTH_URL="http://localhost:5000/v2.0/"

export SERVICE_ENDPOINT=http://localhost:35357/v2.0

source .bashrc

glance index

echo $?

###Nova

apt-get install nova-api nova-cert nova-compute nova-compute-kvm nova-doc nova-network nova-objectstore nova-scheduler nova-volume rabbitmq-server novnc nova-consoleauth

vi /etc/nova/nova.conf

--dhcpbridge_flagfile=/etc/nova/nova.conf

--dhcpbridge=/usr/bin/nova-dhcpbridge

--logdir=/var/log/nova

--state_path=/var/lib/nova

--lock_path=/run/lock/nova

--allow_admin_api=true

--use_deprecated_auth=false

--auth_strategy=keystone

--scheduler_driver=nova.scheduler.simple.SimpleScheduler

--s3_host=192.168.3.97

--ec2_host=192.168.3.97

--rabbit_host=192.168.3.97

--cc_host=192.168.3.97

--nova_url=http://192.168.3.97:8774/v1.1/

--routing_source_ip=192.168.3.97

--glance_api_servers=192.168.3.97:9292

--image_service=nova.image.glance.GlanceImageService

--iscsi_ip_prefix=192.168.100

--sql_connection=mysql://nova:password@192.168.3.97/nova

--ec2_url=http://192.168.3.97:8773/services/Cloud

--keystone_ec2_url=http://192.168.3.97:5000/v2.0/ec2tokens

--api_paste_config=/etc/nova/api-paste.ini

--libvirt_type=kvm

--libvirt_use_virtio_for_bridges=true

--start_guests_on_host_boot=true

--resume_guests_state_on_host_boot=true

# vnc specific configuration

--novnc_enabled=true

--novncproxy_base_url=http://192.168.3.97:6080/vnc_auto.html

--vncserver_proxyclient_address=192.168.3.97

--vncserver_listen=192.168.3.97

# network specific settings

--network_manager=nova.network.manager.FlatDHCPManager

--public_interface=eth0

--flat_interface=eth0

--flat_network_bridge=br100

--fixed_range=192.168.100.1/24

# fixed_range defines the internal network used by the VMs; set here to 192.168.100.1/24

--floating_range=192.168.3.129/25

# floating_range defines the external network used by the VMs; set here to 192.168.3.129/25

--network_size=256

# network_size defines the size of the internal network; set here to 256

--flat_network_dhcp_start=192.168.100.2

--flat_injected=False

--force_dhcp_release

--iscsi_helper=tgtadm

--connection_type=libvirt

--root_helper=sudo nova-rootwrap

--verbose

root@oak-controller:~# pvcreate /dev/sda4

Physical volume "/dev/sda4" successfully created

root@oak-controller:~# vgcreate nova-volumes /dev/sda4

Volume group "nova-volumes" successfully created

chown -R nova:nova /etc/nova

chmod 644 /etc/nova/nova.conf

vi /etc/nova/api-paste.ini

admin_tenant_name = service

admin_user = nova

admin_password = nova

nova-manage db sync

nova-manage network create private --fixed_range_v4=192.168.100.0/24 --num_networks=1 --bridge=br100 --bridge_interface=eth0 --network_size=256

nova-manage floating create --ip_range=192.168.3.128/25

#Run this again if needed; the address pool is determined by what is defined in my nova.conf
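A note on the ranges used here: nova.conf sets fixed_range=192.168.100.1/24 and floating_range=192.168.3.129/25, while the nova-manage commands use 192.168.100.0/24 and 192.168.3.128/25. Once the host bits are masked off, each pair describes the same CIDR block. The sketch below makes that concrete in pure bash; the helper names are hypothetical, and it only does the address arithmetic, it says nothing about how nova itself parses these values:

```shell
#!/usr/bin/env bash
# Hypothetical helpers (IPv4 only, no input validation): show which addresses
# a CIDR block covers. cidr_range prints "<network> <last address> <count>".
ip_to_int() {
    local IFS=.
    local -a o=($1)                  # split "a.b.c.d" on dots
    echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

int_to_ip() {
    local n=$1
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

cidr_range() {
    local addr=${1%/*} bits=${1#*/}
    local size=$(( 1 << (32 - bits) ))
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    local net=$(( $(ip_to_int "$addr") & mask ))   # clear the host bits
    echo "$(int_to_ip "$net") $(int_to_ip $(( net + size - 1 ))) $size"
}
```

For example, cidr_range 192.168.3.129/25 prints 192.168.3.128 192.168.3.255 128, so the floating pool covers .128 to .255, and cidr_range 192.168.100.1/24 prints 192.168.100.0 192.168.100.255 256, which matches network_size=256.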

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_AUTH_URL="http://localhost:5000/v2.0/"

service libvirt-bin restart

service nova-network restart

service nova-api restart

service nova-objectstore restart

service nova-scheduler restart

service nova-consoleauth restart

service nova-cert restart

service nova-volume restart

service nova-compute restart

root@oak-controller:~# nova-manage service list

2012-07-26 14:37:28 DEBUG nova.utils [req-8d4b9794-c2bb-4e35-b3a0-d1f9796c784e None None] backend <module 'nova.db.sqlalchemy.api' from '/usr/lib/python2.7/dist-packages/nova/db/sqlalchemy/api.pyc'> from (pid=25877) __get_backend /usr/lib/python2.7/dist-packages/nova/utils.py:658

Binary           Host             Zone   Status    State   Updated_At
nova-network     oak-controller   nova   enabled           2012-07-26 06:37:25
nova-compute     oak-controller   nova   enabled           2012-07-26 06:37:25
nova-scheduler   oak-controller   nova   enabled           2012-07-26 06:37:18
nova-consoleauth oak-controller   nova   enabled           2012-07-26 06:37:22
nova-cert        oak-controller   nova   enabled           2012-07-26 06:37:20
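To spot services that have stopped reporting, the list can be filtered. A minimal sketch, assuming the Essex-era nova-manage format in which the State column shows ":-)" for a live service and "XXX" for one whose last heartbeat is too old (the State values did not survive in the output above); down_services is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "nova-manage service list" output on stdin and
# print "<binary> on <host>" for every service whose State column is "XXX".
# Columns: Binary Host Zone Status State Updated_At. Log lines are ignored
# because only rows whose first field starts with "nova-" are considered.
down_services() {
    awk '$1 ~ /^nova-/ && $5 == "XXX" { print $1 " on " $2 }'
}

# Example (not executed here):
#   nova-manage service list 2>/dev/null | down_services
```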

apt-get install git-core

apt-get install -y memcached libapache2-mod-wsgi openstack-dashboard

#create database dash

mysql -uroot -ppassword -e 'CREATE DATABASE dash;'

mysql -uroot -ppassword -e 'CREATE USER dash;'

mysql -uroot -ppassword -e "GRANT ALL PRIVILEGES ON dash.* TO 'dash'@'%';"

mysql -uroot -ppassword -e "SET PASSWORD FOR 'dash'@'%' =PASSWORD('password');"

vi /etc/openstack-dashboard/local_settings.py

LOCAL_PATH = os.path.dirname(os.path.abspath(__file__))

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.mysql',

'NAME': 'dash',

'USER': 'dash',

'PASSWORD': 'password',

'HOST': '192.168.3.97',

'default-character-set': 'utf8'

},

}

/usr/share/openstack-dashboard/manage.py syncdb

mkdir -p /var/lib/dash/.blackhole

/etc/init.d/apache2 restart

restart nova-api


Download page for python-sqlite2, python-greenlet and python-greenlet-devel (EPEL): http://dl.fedoraproject.org/pub/epel/6/x86_64/repoview/letter_p.group.html

Install:

sudo yum --enablerepo=iso --disablerepo=base,updates,extras install python-qpid

sudo rpm -ihv rpms/python-sqlite2-2.3.5-2.el6.x86_64.rpm rpms/python-greenlet-0.3.1-6.el6.x86_64.rpm rpms/python-greenlet-devel-0.3.1-6.el6.x86_64.rpm

3.1 Install the EPEL repository:

[ugyn@cc ~]$ sudo rpm -i http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-5.noarch.rpm

3.2 Install rabbitmq-server and the two other dependencies from EPEL:

[ugyn@cc ~]$ sudo yum install rabbitmq-server python-sqlite2 python-greenlet-devel

3.3 Start rabbitmq-server:

First stop qpidd. OpenStack can use qpidd, but in my tests uploading an image through glance failed with it (details here), whereas rabbitmq had no such problem.

[ugyn@cc ~]$ sudo chkconfig qpidd off && sudo service qpidd stop

[ugyn@cc ~]$ sudo chkconfig rabbitmq-server on && sudo service rabbitmq-server start

Set the password:

[ugyn@cc ~]$ sudo rabbitmqctl change_password guest service123

Note: make sure the hostname has been set, otherwise rabbitmq may fail to start.
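A quick way to check this before starting rabbitmq-server is to look for the hostname in /etc/hosts. My understanding is that rabbitmq's default node name is rabbit@<short hostname>, so that name has to resolve; this sketch only checks /etc/hosts, not DNS, and in_hosts_file is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 appears as a hostname or alias in a
# hosts-format file ($2, default /etc/hosts). Comments are stripped first.
in_hosts_file() {
    local name="$1" file="${2:-/etc/hosts}"
    awk -v n="$name" '
        { sub(/#.*/, "") }                         # drop comments
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"
}

# Example (not executed here):
#   in_hosts_file "$(hostname -s)" || echo "add $(hostname -s) to /etc/hosts first"
```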

None of the following steps are needed when installing a compute node!

4. Start httpd:

[ugyn@cc ~]$ sudo chkconfig httpd on && sudo service httpd start

Starting httpd: httpd: apr_sockaddr_info_get() failed for cc.sigsit.org

httpd: Could not reliably determine the server's fully qualified domain name, using 127.0.0.1 for ServerName

[ OK ]

[ugyn@cc ~]$ sudo service httpd status

httpd (pid 28460) is running...

5. Start mysql:

[ugyn@cc ~]$ sudo chkconfig mysqld on && sudo service mysqld start

Edit the configuration:

[ugyn@cc ~]$ sudo vim /etc/my.cnf

Add the following two lines under the [mysqld] section:

character-set-server=utf8

default-storage-engine=InnoDB

Add the following two lines at the end of the file:

[mysql]

default-character-set=utf8

Restart mysql:

sudo service mysqld restart

Log in to mysql, delete the anonymous users and set the root password:

mysql -uroot

mysql> use mysql

mysql> delete from user where User="";

mysql> update user set Password=password('service123') where User='root';

Drop the test database:

mysql> drop database test;

Create the database for keystone:

mysql> create database keystone;

mysql> grant all privileges on keystone.* to 'keystone'@'%' identified by 'keystone';

Create the database for nova:

mysql> create database nova;

mysql> grant all privileges on nova.* to 'nova'@'%' identified by 'nova';

Create the database for glance:

mysql> create database glance;

mysql> grant all privileges on glance.* to 'glance'@'%' identified by 'glance';

Create the database for horizon:

mysql> create database horizon;

mysql> grant all privileges on horizon.* to 'horizon'@'%' identified by 'horizon';

Flush privileges:

mysql> flush privileges;

mysql> quit

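6. Configure the bridge:

See here: /article/7944205.html

That's about it for the operating system. Next, run virsh and virt-manager to check whether the host can use hardware virtualization.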

[ugyn@cc ~]$ sudo virsh version

Compiled against library: libvir 0.9.4

Using library: libvir 0.9.4

Using API: QEMU 0.9.4

Running hypervisor: QEMU 0.12.1

Note: virt-manager can only be run after logging in to the graphical desktop as root.
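Besides virsh version, the CPU flags show directly whether hardware virtualization is available for libvirt_type=kvm: vmx means Intel VT-x and svm means AMD-V. A minimal sketch; cpu_virt_flag is a hypothetical helper. Keep in mind that the flag can be present while VT is disabled in the BIOS, and that it is usually absent inside a virtual machine unless nested virtualization is exposed:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which hardware virtualization flag the CPU
# advertises. Reads /proc/cpuinfo unless another file is given.
# Prints "vmx" (Intel VT-x), "svm" (AMD-V) or "none" (and returns 1).
cpu_virt_flag() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    if grep -qw vmx "$cpuinfo"; then
        echo vmx
    elif grep -qw svm "$cpuinfo"; then
        echo svm
    else
        echo none
        return 1
    fi
}

# Example (not executed here): also confirm the kvm device node exists.
#   cpu_virt_flag && ls -l /dev/kvm
```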

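With the operating system ready, the next step is to install the components. In this post I describe how to install keystone, glance, nova, horizon and swift on a single machine. The steps are roughly: download the source code, download and install each component's dependency libraries, and then install each component.

1. Download and unpack the source code

Download the source code from the official sites: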

https://launchpad.net/nova/

https://launchpad.net/glance/

https://launchpad.net/keystone/

https://launchpad.net/horizon/

https://launchpad.net/swift/

My directory layout is as follows:

[ugyn@cc install]$ ls -l

total 5984

-rw-r--r--. 1 ugyn ugyn 298898 Apr 8 14:18 glance-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 606289 Apr 10 19:15 horizon-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 186851 Apr 10 19:15 keystone-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 4359338 Apr 8 14:15 nova-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 95197 Mar 27 18:26 pip-1.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 48335 Apr 10 19:15 python-keystoneclient-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 85322 Apr 8 14:16 python-novaclient-2012.1.tar.gz

-rw-r--r--. 1 ugyn ugyn 421496 Apr 8 14:20 swift-1.4.8.tar.gz

-rw-r--r--. 1 ugyn ugyn 202 Apr 13 16:10 test

Unpack:

[ugyn@cc install]$ tar zxpf keystone-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf glance-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf nova-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf python-novaclient-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf python-keystoneclient-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf horizon-2012.1.tar.gz

[ugyn@cc install]$ tar zxpf swift-1.4.8.tar.gz

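2. Install pip:

pip is a handy tool for installing Python libraries. On the whole, installing OpenStack from source is fairly simple: the components share a similar layout, and each package has a tools/pip-requires file listing the Python libraries the component depends on, so before installing a component you first install those libraries with pip.

Download pip: http://pypi.python.org/packages/source/p/pip/pip-1.1.tar.gz#md5=62a9f08dd5dc69d76734568a6c040508

Install: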

[ugyn@cc install]$ tar zxpf pip-1.1.tar.gz

[ugyn@cc install]$ cd pip-1.1 && sudo python setup.py install

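3. Merge the pip-requires files and download the Python libraries:

My goal is to be able to install OpenStack even without network access, so I want to download all the related libraries and install them locally. To do this, I merge the pip-requires files of all components into one file, remove the duplicates, and then use pip to download all the libraries. I will provide a download of all the files; if you use it, you can skip this step.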

[ugyn@cc pip-1.1]$ cd ..

[ugyn@cc install]$ cat keystone-2012.1/tools/pip-requires glance-2012.1/tools/pip-requires nova-2012.1/tools/pip-requires horizon-2012.1/tools/pip-requires python-novaclient-2012.1/tools/pip-requires python-keystoneclient-2012.1/tools/pip-requires | grep "^[a-zA-Z]" | sort -u > pip-requires

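Edit pip-requires to remove duplicate libraries: when a library appears more than once, keep only the entry whose version requirement is the most specific. Also remove the components we are installing here ourselves, such as glance and swift.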
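To help with this manual clean-up, the merged file can be scanned for names that still appear more than once. A minimal sketch, assuming simple requirement lines such as name, name==1.0 or name>=1.0; PyPI names are compared case-insensitively, so WebOb and webob count as the same. dup_requirements is a hypothetical helper and it only reports, you still choose which line to keep:

```shell
#!/usr/bin/env bash
# Hypothetical helper: list requirement names that occur more than once in a
# pip-requires style file ($1, default ./pip-requires), followed by every
# line seen for that name, so the most specific one can be kept by hand.
dup_requirements() {
    awk '
        /^[A-Za-z]/ {
            name = tolower($0)
            sub(/#.*/, "", name)          # drop trailing comments
            sub(/[<>=!].*/, "", name)     # drop the version specifier
            gsub(/[ \t]/, "", name)
            specs[name] = specs[name] " " $0
            count[name]++
        }
        END { for (n in count) if (count[n] > 1) print n ":" specs[n] }
    ' "${1:-pip-requires}" | sort
}
```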

Download the dependencies so they can be reused:

[ugyn@cc install]$ mkdir pipdowns

[ugyn@cc install]$ pip install -r pip-requires -d pipdowns --no-install

4. Install the dependency libraries:

Install the following first, because other libraries depend on them during installation:

[ugyn@cc install]$ sudo pip install ./pipdowns/Markdown-2.1.1.tar.gz ./pipdowns/nose-1.1.2.tar.gz ./pipdowns/pycrypto-2.3.tar.gz ./pipdowns/six-1.1.0.tar.gz ./pipdowns/Tempita-0.5.1.tar.gz

Install the remaining libraries:

[ugyn@cc install]$ sudo pip install ./pipdowns/*

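Check whether everything is installed. This is a simple script I wrote myself: no output means everything is fine, otherwise it prints the names of the libraries that are missing. I will package all the software for download later; my goal is to be able to install OpenStack on a machine with no network access at all.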

[ugyn@cc install]$ ./test
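The contents of ./test are not shown here. As a rough stand-in, this hypothetical sketch tries to import each Python module named on the command line and prints the ones that fail, giving the same "no output means OK" behaviour. Note that it takes import names (for example sqlalchemy, webob, routes), which do not always match the pip package names in pip-requires:

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for ./test: print each module name that the Python
# interpreter cannot import. Uses $PYTHON if set, else python, else python3.
missing_modules() {
    local py="${PYTHON:-$(command -v python || command -v python3)}"
    local mod
    for mod in "$@"; do
        "$py" -c "import ${mod}" > /dev/null 2>&1 || echo "$mod"
    done
}

# Example (not executed here): no output means every module imported.
#   missing_modules eventlet kombu sqlalchemy lxml webob routes paste
```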

5. Install each component:

[ugyn@cc install]$ cd keystone-2012.1 && sudo python setup.py install

[ugyn@cc keystone-2012.1]$ cd ../glance-2012.1 && sudo python setup.py install

[ugyn@cc glance-2012.1]$ cd ../nova-2012.1 && sudo python setup.py install

[ugyn@cc nova-2012.1]$ cd ../python-novaclient-2012.1 && sudo python setup.py install

[ugyn@cc python-novaclient-2012.1]$ cd ../python-keystoneclient-2012.1 && sudo python setup.py install

[ugyn@cc python-keystoneclient-2012.1]$ cd ../horizon-2012.1 && sudo python setup.py install

[ugyn@cc horizon-2012.1]$ cd ../swift-1.4.8 && sudo python setup.py install

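That completes the installation. Next I will cover configuring, running and testing each component.

Much of this post is the same as my earlier post on installing keystone; if you have read that one, you can just skim this.

1. Configuration

You can also follow this guide for the configuration: http://docs.openstack.org/trunk/openstack-compute/install/content/keystone-conf-file.html

1.1 Copy the default configuration files: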

[ugyn@cc swift-1.4.8]$ cd ../keystone-2012.1 && sudo cp -R etc /etc/keystone

[ugyn@cc keystone-2012.1]$ sudo chown -R ugyn:ugyn /etc/keystone

[ugyn@cc keystone-2012.1]$ mv /etc/keystone/logging.conf.sample /etc/keystone/logging.conf

1.2 Edit /etc/keystone/keystone.conf:

Generate a random token:

[ugyn@cc keystone-2012.1]$ openssl rand -hex 10

7d97448231c0a2bac8a3

[ugyn@cc keystone-2012.1]$ vim /etc/keystone/keystone.conf

Replace the value of admin_token with the generated token.

Change: #log_config = ./etc/logging.conf.sample

to:

log_config = /etc/keystone/logging.conf

Change:

[sql]

connection = sqlite:///keystone.db

idle_timeout = 200

to:

[sql]

connection = mysql://keystone:keystone@localhost/keystone

idle_timeout = 200

min_pool_size = 5

max_pool_size = 10

pool_timeout = 200

Change:

[catalog]

driver = keystone.catalog.backends.templated.TemplatedCatalog

template_file = ./etc/default_catalog.templates

to:

[catalog]

driver = keystone.catalog.backends.sql.Catalog

Change:

[token]

driver = keystone.token.backends.kvs.Tokens

to:

[token]

driver = keystone.token.backends.sql.Token

Change:

[ec2]

driver = keystone.contrib.ec2.backends.kvs.Ec2

to:

[ec2]

driver = keystone.contrib.ec2.backends.sql.Ec2
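Editing these sections by hand is easy to get wrong when repeating the setup. A minimal sketch of a helper that sets key = value inside a given [section], assuming a plain ini file like keystone.conf; ini_set is hypothetical (tools such as crudini do the same job more robustly). It only replaces or adds keys, so it does not delete lines such as template_file, and values pass through awk -v, so backslashes in them would be interpreted:

```shell
#!/usr/bin/env bash
# Hypothetical helper: ini_set FILE SECTION KEY VALUE
# Replaces the first uncommented "KEY =" line inside [SECTION]; if the key is
# missing it is added just before the next section header (or at the end of
# the file), and a missing section is appended to the end of the file.
ini_set() {
    local file="$1" section="$2" key="$3" value="$4" tmp
    tmp=$(mktemp) || return 1
    awk -v sec="[$section]" -v key="$key" -v val="$value" '
        function emit() { print key " = " val; done = 1 }
        /^\[/ {
            if (insec && !done) emit()     # key absent: add before next section
            insec = ($0 == sec)
        }
        insec && !done && ($0 ~ ("^" key "[ \t]*=")) { emit(); next }
        { print }
        END { if (!done) { if (!insec) print sec; emit() } }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

For example, ini_set /etc/keystone/keystone.conf sql connection mysql://keystone:keystone@localhost/keystone, and likewise for the catalog, token and ec2 driver lines above.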

To make running the client commands easier, create ~/.openstackrc with the following content and source it:

export SERVICE_TOKEN=7d97448231c0a2bac8a3

export SERVICE_ENDPOINT=http://127.0.0.1:35357/v2.0

export OS_USERNAME=nova

export OS_PASSWORD=service123

export OS_TENANT_NAME=service

export OS_AUTH_URL=http://127.0.0.1:5000/v2.0

Note: the user data here is created by the script in step 2.3 below. With SERVICE_TOKEN and SERVICE_ENDPOINT set you can run any keystone command, so be careful.

Run:

[ugyn@cc keystone-2012.1]$ source ~/.openstackrc

2. Running

2.1 On the first run, create the database tables:

[ugyn@cc keystone-2012.1]$ keystone-manage db_sync

2.2 Run keystone:

Run it in a new terminal or in the background:

[ugyn@cc Desktop]$ keystone-all

2.3 Create the initial tenants, users, roles, services and endpoints:

Edit tools/sample_data.sh and add the following at the beginning of the file:

[ugyn@cc keystone-2012.1]$ vim tools/sample_data.sh

#Set the admin password:

ADMIN_PASSWORD=admin123

#Set the service password:

SERVICE_PASSWORD=service123

#To create endpoints, add:

ENABLE_ENDPOINTS=true

#To create the swift-related user and service, add:

ENABLE_SWIFT=true

#To create the quantum-related user and service, add:

#ENABLE_QUANTUM=true

Run the script:

[ugyn@cc keystone-2012.1]$ sudo tools/sample_data.sh

3. Testing

3.1 List the users just created

[ugyn@cc keystone-2012.1]$ keystone user-list

3.2 List the tenants just created

[ugyn@cc keystone-2012.1]$ keystone tenant-list

For more operations, run the following command and try them yourself

[ugyn@cc keystone-2012.1]$ keystone help

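This post largely repeats my earlier article on installing and configuring glance; if you have already read that one, you can skim this.

1. Configuration

1.1 Copy the default configuration files: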

[ugyn@cc keystone-2012.1]$ cd ../glance-2012.1 && sudo cp -R etc /etc/glance

[ugyn@cc glance-2012.1]$ sudo chown -R ugyn:ugyn /etc/glance

1.2 Configure glance to use keystone authentication:

In /etc/glance/glance-api-paste.ini, set the following options to the values configured in keystone:

[filter:authtoken]

admin_tenant_name = service

admin_user = glance

admin_password = service123

Append to the end of /etc/glance/glance-api.conf:

[paste_deploy]

flavor = keystone

In /etc/glance/glance-registry-paste.ini, set the following options to the values configured in keystone:

[filter:authtoken]

admin_tenant_name = service

admin_user = glance

admin_password = service123

Append to the end of /etc/glance/glance-registry.conf:

[paste_deploy]

flavor = keystone

1.3 Set glance's MySQL connection:

In /etc/glance/glance-registry.conf, set:

sql_connection = mysql://glance:glance@localhost/glance

1.4 Configure glance's messaging server:

In /etc/glance/glance-api.conf, change:

notifier_strategy = noop

to:

notifier_strategy = rabbit

and change:

rabbit_password = guest

to:

rabbit_password = service123

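I now use qpid as the messaging server instead, so I set notifier_strategy = qpid and left the other qpid options unchanged.

1.5 Configure glance's storage:

No changes here for now: use the default file store, and change it later once swift is configured.

1.6 Create directories: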

[ugyn@cc glance-2012.1]$ sudo mkdir /var/log/glance

[ugyn@cc glance-2012.1]$ sudo mkdir /var/lib/glance

[ugyn@cc glance-2012.1]$ sudo chown -R ugyn:ugyn /var/log/glance

[ugyn@cc glance-2012.1]$ sudo chown -R ugyn:ugyn /var/lib/glance

2. Running

[ugyn@cc glance-2012.1]$ sudo glance-control all start

Replace start with restart or stop to restart or stop glance.

3. Testing

3.1 Upload images:

Download the image: http://smoser.brickies.net/ubuntu/ttylinux-uec/ttylinux-uec-amd64-12.1_2.6.35-22_1.tar.gz

Unpack:

[ugyn@cc glance-2012.1]$ cd ~/image

[ugyn@cc image]$ tar xzvf ttylinux-uec-amd64-12.1_2.6.35-22_1.tar.gz

Upload the kernel:

[ugyn@cc image]$ glance add name="tty-linux-kernel" disk_format=aki container_format=aki < ttylinux-uec-amd64-12.1_2.6.35-22_1-vmlinuz

Output:

Uploading image 'tty-linux-kernel'

===============================================================================================[100%] 13.9M/s, ETA 0h 0m 0s

Added new image with ID: d83d5227-64ba-4541-adce-4c5442d9eecc

Upload the initrd:

[ugyn@cc image]$ glance add name="tty-linux-ramdisk" disk_format=ari container_format=ari < ttylinux-uec-amd64-12.1_2.6.35-22_1-loader

Output:

Uploading image 'tty-linux-ramdisk'

=========================================================================================[100%] 935.456035K/s, ETA 0h 0m 0s

Added new image with ID: b7b783f9-7007-453b-9236-242765136898

Upload the image. Note that kernel_id and ramdisk_id here are the IDs generated by the two previous uploads:

[ugyn@cc image]$ glance add name="tty-linux" disk_format=ami container_format=ami kernel_id=d83d5227-64ba-4541-adce-4c5442d9eecc ramdisk_id=b7b783f9-7007-453b-9236-242765136898 < ttylinux-uec-amd64-12.1_2.6.35-22_1.img

Output:

Uploading image 'tty-linux'

===============================================================================================[100%] 79.6M/s, ETA 0h 0m 0s

Added new image with ID: fbd05c73-7bea-4af6-a0d5-9c7581569792
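Copying the kernel and ramdisk IDs by hand is easy to get wrong. The Python sketch below chains the three uploads by parsing the "Added new image with ID:" line shown in the output above. The helper names are mine. The sketch assumes the glance client of this era accepts the same `key=value` arguments used above, with the image data on stdin. Because I am not sure which stream the ID line goes to, it searches both stdout and stderr.

```python
import re
import subprocess
from typing import List

# glance prints this line after a successful upload (see the output above).
ID_RE = re.compile(r"Added new image with ID: ([0-9a-f-]{36})")


def parse_image_id(output: str) -> str:
    """Extract the image UUID from the output of `glance add`."""
    match = ID_RE.search(output)
    if match is None:
        raise ValueError("no image ID found in glance output")
    return match.group(1)


def glance_add_args(name: str, fmt: str, **properties: str) -> List[str]:
    """Build the argument list for `glance add` (image data goes on stdin)."""
    args = ["glance", "add", f"name={name}",
            f"disk_format={fmt}", f"container_format={fmt}"]
    args += [f"{key}={value}" for key, value in properties.items()]
    return args


def upload(name: str, fmt: str, image_path: str, **properties: str) -> str:
    """Run `glance add` with the file on stdin and return the new image ID."""
    with open(image_path, "rb") as image:
        result = subprocess.run(glance_add_args(name, fmt, **properties),
                                stdin=image, capture_output=True,
                                text=True, check=True)
    return parse_image_id(result.stdout + result.stderr)
```

Usage: `kernel = upload("tty-linux-kernel", "aki", "ttylinux-uec-amd64-12.1_2.6.35-22_1-vmlinuz")`, then the same with `"ari"` and the `-loader` file for the ramdisk, and finally `upload("tty-linux", "ami", "ttylinux-uec-amd64-12.1_2.6.35-22_1.img", kernel_id=kernel, ramdisk_id=ramdisk)`.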

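3.2 List the uploaded images:

For other glance operations, run glance --help.

That's all for glance. Next I will cover configuring, running and testing nova. I hit all kinds of errors along the way, but in the end I got it running. After that I will tidy up the related files and upload them to CSDN for everyone to download.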

1. Configuration

1.1 Copy the default configuration files:

[ugyn@cc image]$ cd ~/install/nova-2012.1

[ugyn@cc nova-2012.1]$ sudo cp -R etc/nova /etc/nova

[ugyn@cc nova-2012.1]$ sudo chown -R ugyn:ugyn /etc/nova

1.2 Create /etc/nova/nova.conf

Copy the configuration from http://docs.openstack.org/trunk/openstack-compute/install/content/nova-conf-file.html into it and adjust the relevant options.

My nova.conf looks like this:

[DEFAULT]

# LOGS/STATE

verbose=True

# AUTHENTICATION

auth_strategy=keystone

# SCHEDULER

compute_scheduler_driver=nova.scheduler.filter_scheduler.FilterScheduler

# VOLUMES

volume_group=nova-volumes

volume_name_template=volume-%08x

iscsi_helper=tgtadm

# DATABASE

sql_connection=mysql://nova:nova@10.17.23.95/nova

# COMPUTE

libvirt_type=kvm

connection_type=libvirt

instances_path=/home/ugyn/instances

instance_name_template=instance-%08x

api_paste_config=/etc/nova/api-paste.ini

allow_resize_to_same_host=True

# APIS

osapi_compute_extension=nova.api.openstack.compute.contrib.standard_extensions

ec2_dmz_host=10.17.23.95

s3_host=10.17.23.95

# RABBITMQ

rabbit_host=10.17.23.95

rabbit_userid=guest

rabbit_password=service123

# GLANCE

image_service=nova.image.glance.GlanceImageService

glance_api_servers=10.17.23.95:9292

# NETWORK

network_manager=nova.network.manager.FlatDHCPManager

force_dhcp_release=True

dhcpbridge_flagfile=/etc/nova/nova.conf

firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

my_ip=10.17.23.95

public_interface=br100

vlan_interface=eth0

flat_network_bridge=br100

flat_interface=eth0

fixed_range=10.0.0.0/24

# NOVNC CONSOLE

novncproxy_base_url=http://10.17.23.95:6080/vnc_auto.html

xvpvncproxy_base_url=http://10.17.23.95:6081/console

vncserver_proxyclient_address=127.0.0.1

vncserver_listen=127.0.0.1

bindir=/usr/bin

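Besides the usual changes, I added two parameters. instances_path points to a directory in my home folder. bindir is very important: without it, nova-network runs into problems when it starts.

Create that directory:

[ugyn@cc nova-2012.1]$ mkdir ~/instances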
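Before starting any nova service, it can help to check that nova.conf parses and contains the options this setup depends on. The Python sketch below does that. The list in `REQUIRED_KEYS` is my own choice: the two options called out above plus the database and glance settings. One detail is easy to miss. Values such as `volume-%08x` contain `%`, and ConfigParser's default interpolation raises an error when such a value is read, so the parser must be created with `interpolation=None`.

```python
import configparser
import os
from typing import Dict, Iterable, List

# Keys this sketch treats as mandatory (an illustrative choice, not nova's own list).
REQUIRED_KEYS = ("instances_path", "bindir", "sql_connection", "glance_api_servers")


def load_nova_conf(text: str) -> Dict[str, str]:
    """Parse the [DEFAULT] section of a nova.conf-style file."""
    # interpolation=None: read values like volume-%08x literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return dict(parser["DEFAULT"])


def problems(conf: Dict[str, str], required: Iterable[str] = REQUIRED_KEYS) -> List[str]:
    """List missing keys and flag an instances_path that does not exist yet."""
    issues = [f"missing {key}" for key in required if not conf.get(key)]
    path = conf.get("instances_path")
    if path and not os.path.isdir(os.path.expanduser(path)):
        issues.append(f"instances_path {path} does not exist")
    return issues
```

An empty list from `problems(load_nova_conf(open("/etc/nova/nova.conf").read()))` means none of these checks failed.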

By default nova uses RabbitMQ as its messaging server. To use qpid instead, replace the RABBITMQ options with the following:

#QPID

rpc_backend=nova.rpc.impl_qpid

I kept the other qpid options at their defaults. To set them, refer to this section of the sample configuration file:

###### (IntOpt) Seconds between connection keepalive heartbeats
# qpid_heartbeat=5

###### (StrOpt) Qpid broker hostname
# qpid_hostname="localhost"

###### (StrOpt) Password for qpid connection
# qpid_password=""

###### (StrOpt) Qpid broker port
# qpid_port="5672"

###### (StrOpt) Transport to use, either 'tcp' or 'ssl'
# qpid_protocol="tcp"

###### (BoolOpt) Automatically reconnect
# qpid_reconnect=true

###### (IntOpt) Equivalent to setting max and min to the same value
# qpid_reconnect_interval=0

###### (IntOpt) Maximum seconds between reconnection attempts
# qpid_reconnect_interval_max=0

###### (IntOpt) Minimum seconds between reconnection attempts
# qpid_reconnect_interval_min=0

###### (IntOpt) Max reconnections before giving up
# qpid_reconnect_limit=0

###### (IntOpt) Reconnection timeout in seconds
# qpid_reconnect_timeout=0

###### (StrOpt) Space separated list of SASL mechanisms to use for auth
# qpid_sasl_mechanisms=""

###### (BoolOpt) Disable Nagle algorithm
# qpid_tcp_nodelay=true

###### (StrOpt) Username for qpid connection
# qpid_username=""
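Switching nova from RabbitMQ to qpid as described above means deleting the `rabbit_*` lines and adding `rpc_backend=nova.rpc.impl_qpid`. A minimal Python sketch of that text transformation follows. It assumes nova.conf contains only a `[DEFAULT]` section, as in the file shown above, so appending at the end keeps the new options in that section. The function name is hypothetical. The optional `qpid_hostname` corresponds to the sample option listed above and is only needed when the broker is not on localhost.

```python
from typing import Optional


def switch_to_qpid(text: str, qpid_hostname: Optional[str] = None) -> str:
    """Replace the RABBITMQ block of a nova.conf with the qpid backend."""
    kept = [
        line for line in text.splitlines()
        # Drop rabbit_* settings and the '# RABBITMQ' comment header.
        if not line.strip().startswith("rabbit_") and line.strip() != "# RABBITMQ"
    ]
    kept += ["#QPID", "rpc_backend=nova.rpc.impl_qpid"]
    if qpid_hostname:
        # qpid_hostname defaults to localhost, so set it only for a remote broker.
        kept.append(f"qpid_hostname={qpid_hostname}")
    return "\n".join(kept) + "\n"
```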

1.3 Configure keystone authentication for nova:

[ugyn@cc nova-2012.1]$ vim /etc/nova/api-paste.ini

Change:

admin_tenant_name = %SERVICE_TENANT_NAME%

admin_user = %SERVICE_USER%

admin_password = %SERVICE_PASSWORD%

to:

admin_tenant_name = service

admin_user = nova

admin_password = service123
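The api-paste.ini edit above replaces the `%SERVICE_...%` placeholders with real values. A Python sketch of the same substitution follows. It also refuses to leave any `%NAME%` placeholder unfilled, which catches a forgotten one before nova-api fails to authenticate. The values are the ones used in this post. The helper name and the leftover check are my own additions. The pattern `%[A-Z_]+%` deliberately does not match paste-style `%(here)s` references.

```python
import re
from typing import Dict

# Values used in this post; change them to match your keystone setup.
SERVICE_VALUES = {
    "%SERVICE_TENANT_NAME%": "service",
    "%SERVICE_USER%": "nova",
    "%SERVICE_PASSWORD%": "service123",
}


def fill_placeholders(text: str, values: Dict[str, str] = SERVICE_VALUES) -> str:
    """Substitute %NAME% placeholders and refuse to leave any unfilled."""
    for placeholder, value in values.items():
        text = text.replace(placeholder, value)
    leftover = sorted(set(re.findall(r"%[A-Z_]+%", text)))
    if leftover:
        raise ValueError(f"unfilled placeholders: {', '.join(leftover)}")
    return text
```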

1.4 Create the storage volume (an LVM volume group named nova-volumes, backed by a loop-mounted file):

[ugyn@cc nova-2012.1]$ mkdir /home/ugyn/novaimages

[ugyn@cc nova-2012.1]$ dd if=/dev/zero of=/home/ugyn/novaimages/nova-volumes.img bs=1M seek=100k count=0

[ugyn@cc nova-2012.1]$ sudo vgcreate nova-volumes $(sudo losetup --show -f /home/ugyn/novaimages/nova-volumes.img)
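The `dd` command above writes no data (`count=0`). It only seeks 100k blocks of 1 MiB and sets the file length there, so nova-volumes.img is a sparse file with an apparent size of 100 GiB that takes real disk space only as LVM writes to it. The Python sketch below does the equivalent with `truncate` and shows the size arithmetic. It assumes GNU dd semantics, where the `k` suffix multiplies by 1024 and `M` means 1,048,576 bytes. The `losetup` and `vgcreate` steps still have to be run as shown above.

```python
import os

MIB = 1024 * 1024  # dd's bs=1M means 1,048,576 bytes


def dd_size(bs: int, seek_blocks: int) -> int:
    """File size left by `dd ... bs=BS seek=N count=0`: N blocks of BS bytes."""
    return bs * seek_blocks


def create_sparse_file(path: str, size: int) -> None:
    """Create (or truncate) path and extend it to size bytes without writing data."""
    with open(path, "wb") as f:
        f.truncate(size)


if __name__ == "__main__":
    # seek=100k is 100 * 1024 = 102,400 blocks of 1 MiB.
    print(dd_size(MIB, 100 * 1024))  # bytes in the 100 GiB nova-volumes.img
```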

1.5 Initialize the database:

[ugyn@cc nova-2012.1]$ nova-manage db sync

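1.6 Fixing timeout errors when starting nova-network and nova-volume:

https://lists.launchpad.net/openstack/msg02565.html

2. Running

First, run nova-api, nova-cert, nova-compute, nova-network, nova-objectstore, nova-scheduler and nova-volume, each in its own new terminal, and check that every program runs correctly. Once they all work, close them and start all of them at once with nova-all.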

3. Testing

3.1 Check the status of each service:

[ugyn@cc nova-2012.1]$ nova-manage service list

2012-04-20 17:11:26 DEBUG nova.utils [req-12fde12c-11e2-4861-8e53-03af10832e4b None None] backend <module 'nova.db.sqlalchemy.api' from '/usr/lib/python2.6/site-packages/nova-2012.1-py2.6.egg/nova/db/sqlalchemy/api.pyc'> from (pid=1095) __get_backend /usr/lib/python2.6/site-packages/nova-2012.1-py2.6.egg/nova/utils.py:658

2012-04-20 17:11:26 WARNING nova.utils [req-12fde12c-11e2-4861-8e53-03af10832e4b None None] /usr/lib64/python2.6/site-packages/sqlalchemy/pool.py:681: SADeprecationWarning: The 'listeners' argument to Pool (and create_engine()) is deprecated. Use event.listen().

Pool.__init__(self, creator, **kw)

2012-04-20 17:11:26 WARNING nova.utils [req-12fde12c-11e2-4861-8e53-03af10832e4b None None] /usr/lib64/python2.6/site-packages/sqlalchemy/pool.py:159: SADeprecationWarning: Pool.add_listener is deprecated. Use event.listen()

self.add_listener(l)

Binary Host Zone Status State Updated_At

nova-cert cc.sigsit.org nova enabled :-) 2012-04-20 09:11:24

nova-compute cc.sigsit.org nova enabled :-) 2012-04-20 09:11:22

nova-network cc.sigsit.org nova enabled :-) 2012-04-20 09:11:23

nova-scheduler cc.sigsit.org nova enabled :-) 2012-04-20 09:11:23

nova-volume cc.sigsit.org nova enabled :-) 2012-04-20 09:11:21

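Note: :-) means the service is running. XXX means the service hit an error on startup and has stopped. A service that has never started successfully does not appear in this list at all.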
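When several services are running, a quick script can report any whose state is not `:-)`. The Python sketch below parses `nova-manage service list` output in the layout shown above. It skips the DEBUG/WARNING log lines printed before the `Binary Host Zone ...` header and treats the last two fields of each row as the Updated_At date and time. The function names are hypothetical, and the column layout is taken from the sample output above, not from a documented format.

```python
from typing import Dict, List

COLUMNS = ("binary", "host", "zone", "status", "state")


def parse_service_list(output: str) -> List[Dict[str, str]]:
    """Parse the table printed by `nova-manage service list`.

    Log lines printed before the 'Binary Host Zone ...' header are skipped.
    """
    rows: List[Dict[str, str]] = []
    in_table = False
    for line in output.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "Binary":
            in_table = True
            continue
        if in_table and len(fields) >= len(COLUMNS):
            row = dict(zip(COLUMNS, fields))
            # Updated_At is a date plus a time, i.e. the remaining fields.
            row["updated_at"] = " ".join(fields[len(COLUMNS):])
            rows.append(row)
    return rows


def unhealthy(rows: List[Dict[str, str]]) -> List[str]:
    """Binaries whose state is not ':-)' (for example 'XXX')."""
    return [row["binary"] for row in rows if row["state"] != ":-)"]
```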

3.2 Create a network:

[ugyn@cc nova-2012.1]$ nova-manage network create private 10.0.0.0/24 1 256 --bridge=br100
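The command above creates one network, labelled private, with 256 addresses in 10.0.0.0/24. My reading of the Essex-era positional arguments is label, CIDR, number of networks and network size; that reading is an assumption. The Python sketch below uses the standard `ipaddress` module to check two things: that the arguments agree with each other, and that the range lies inside the `fixed_range` set in nova.conf. The function name is hypothetical. The "should cover exactly" check fits this single-network setup.

```python
import ipaddress


def check_network_create(cidr: str, num_networks: int, network_size: int,
                         fixed_range: str) -> dict:
    """Sanity-check the CIDR, count and size passed to `nova-manage network create`."""
    net = ipaddress.ip_network(cidr)
    return {
        # N networks of SIZE addresses should exactly cover the CIDR here.
        "size_matches": net.num_addresses == num_networks * network_size,
        # The network should sit inside fixed_range from nova.conf.
        "inside_fixed_range": net.subnet_of(ipaddress.ip_network(fixed_range)),
    }


if __name__ == "__main__":
    print(check_network_create("10.0.0.0/24", 1, 256, "10.0.0.0/24"))
    # The instance address used later for ssh lies inside this range.
    print(ipaddress.ip_address("10.0.0.2") in ipaddress.ip_network("10.0.0.0/24"))
```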

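3.3 View information:

For more, run nova help to see the available commands.

3.4 Launch an instance:

Create a key pair:

[ugyn@cc nova-2012.1]$ cd

Disable SELinux first, otherwise the key pair cannot be created. To do so: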

[ugyn@cc ~]$ sudo vim /etc/sysconfig/selinux

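Set SELINUX=disabled in that file.

If you do not want to reboot, run the following command, which switches SELinux to permissive mode for the current boot: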

[ugyn@cc ~]$ sudo setenforce 0

[ugyn@cc ~]$ nova keypair-add mykey > oskey.priv

[ugyn@cc ~]$ chmod 600 oskey.priv
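With `> oskey.priv` followed by `chmod 600`, the file briefly exists with the default umask permissions (typically 0644), so other users can read the private key until chmod runs. The Python sketch below creates the key file with mode 0600 from the start. The function names are my own. `add_keypair` assumes, as the shell command above does, that `nova keypair-add` prints the private key on stdout.

```python
import os
import subprocess


def save_private_key(path: str, key_text: str) -> None:
    """Write key_text to path, readable and writable by the owner only (0600)."""
    # Creating the file with mode 0o600 avoids the window in which
    # `> file` followed by chmod leaves the key readable by other users.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        f.write(key_text)
    # os.open applies the mode only to new files, so enforce it for existing ones too.
    os.chmod(path, 0o600)


def add_keypair(name: str, path: str) -> None:
    """Run `nova keypair-add NAME` and store the private key it prints."""
    result = subprocess.run(["nova", "keypair-add", name],
                            capture_output=True, text=True, check=True)
    save_private_key(path, result.stdout)
```

`add_keypair("mykey", "oskey.priv")` then replaces the two shell commands above.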

Create the instance:

[ugyn@cc ~]$ nova boot myserver --flavor 1 --key_name mykey --image tty-linux

Check the instance's state with nova show myserver or nova list. Once the state is active, you can connect over ssh.
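Rather than rerunning nova list by hand, you can poll until the instance becomes active. The Python sketch below does this. It assumes the nova client prints a `| ... |` table with `Name` and `Status` columns, which matches the client of that era as far as I know; check it against your own `nova list` output. The status comparison ignores case, since the post writes the state as "active". All function names are hypothetical, and `fetch` is a parameter so that the polling logic can be exercised without a running cloud.

```python
import subprocess
import time
from typing import Callable, Dict, List, Optional


def parse_table(output: str) -> List[Dict[str, str]]:
    """Parse a '| col | col |' table as printed by the nova client."""
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in output.splitlines()
        if line.strip().startswith("|")  # skips the +----+ border lines
    ]
    if not rows:
        return []
    header, body = rows[0], rows[1:]
    return [dict(zip(header, row)) for row in body]


def server_status(output: str, name: str) -> Optional[str]:
    """Return the Status column for the server called name, if listed."""
    for row in parse_table(output):
        if row.get("Name") == name:
            return row.get("Status")
    return None


def wait_until_active(name: str, fetch: Callable[[], str],
                      timeout: float = 300, interval: float = 5) -> bool:
    """Poll fetch() until the server is ACTIVE (True) or ERROR/timeout (False)."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        status = (server_status(fetch(), name) or "").upper()
        if status == "ACTIVE":
            return True
        if status == "ERROR":
            return False
        time.sleep(interval)
    return False


def nova_list() -> str:
    """Return the raw output of `nova list`."""
    return subprocess.run(["nova", "list"], capture_output=True,
                          text=True, check=True).stdout
```

Usage: `wait_until_active("myserver", nova_list)`.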

Log in to the instance over ssh:

[ugyn@cc ~]$ ssh -i oskey.priv root@10.0.0.2

View the instance's console log:

[ugyn@cc ~]$ nova console-log myserver

Delete the instance:

[ugyn@cc ~]$ nova delete myserver

Confirm that it was deleted:

[ugyn@cc ~]$ nova list

Update, May 4: added the noVNC configuration

1. Configuration

1.1 Create the configuration file:

[ugyn@cc ~]$ vim install/horizon-2012.1/openstack_dashboard/local/local_settings.py

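Copy the sample configuration file from http://docs.openstack.org/trunk/openstack-compute/install/content/local-settings-py-file.html into it, change the database settings, and add the following line below USE_SSL = False:

SECRET_KEY = 'elj1IWiLoWHgcyYxVLj7cMrGOxWl0'

Changing a few of its characters to anything you like is enough.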
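Instead of editing a few characters of the sample key, you can generate a fresh random value. Django only needs SECRET_KEY to be a long, unpredictable string. The Python sketch below uses the standard `secrets` module. The 50-character length and the letters-and-digits alphabet are my own choices; they keep the value free of quotes so it pastes safely between single quotes.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits


def make_secret_key(length: int = 50) -> str:
    """Return a random string suitable for Django's SECRET_KEY setting."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    # Paste the printed line into local_settings.py below USE_SSL = False.
    print(f"SECRET_KEY = '{make_secret_key()}'")
```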

Copy this file into the horizon installation directory:

[ugyn@cc ~]$ sudo cp install/horizon-2012.1/openstack_dashboard/local/local_settings.py /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/openstack_dashboard/local/local_settings.py

1.2 Create a horizon configuration file for httpd

[ugyn@cc ~]$ sudo vim /etc/httpd/conf.d/horizon.conf

Its contents:


<VirtualHost *:80>

WSGIScriptAlias / /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/openstack_dashboard/wsgi/django.wsgi

WSGIDaemonProcess horizon user=apache group=apache processes=3 threads=10 home=/usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg

SetEnv APACHE_RUN_USER apache

SetEnv APACHE_RUN_GROUP apache

WSGIProcessGroup horizon

DocumentRoot /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/.blackhole/

Alias /media /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/openstack_dashboard/static

<Directory />

Options FollowSymLinks

AllowOverride None

</Directory>

<Directory /usr/lib/python2.6/site-packages/horizon-2012.1-py2.6.egg/>

Options Indexes FollowSymLinks MultiViews

AllowOverride None

Order allow,deny

allow from all

</Directory>