LVS + Keepalived, a new approach: merging the load-balancer layer with the real-server layer

2012-09-05 15:47

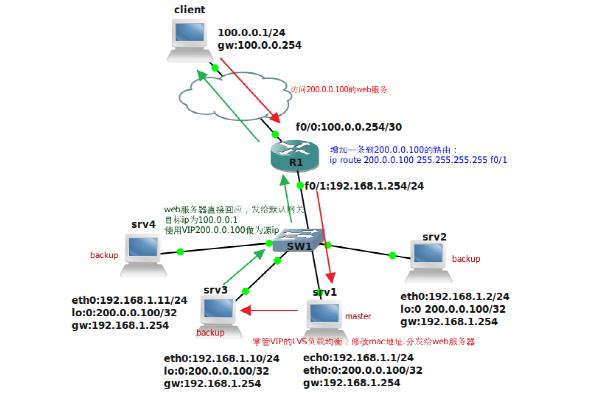

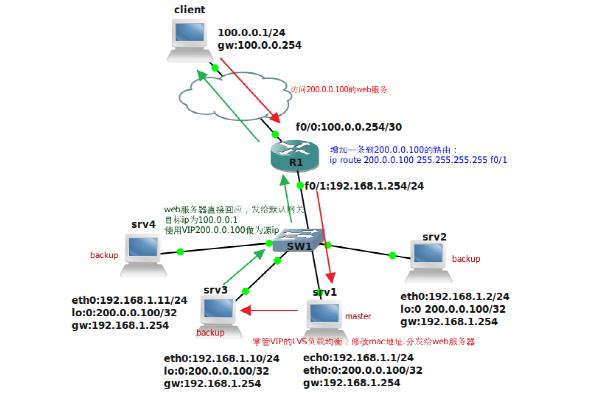

拓扑:

目的:充分利用硬件资源,LVS_DR服务器利用的资源相对低,通过keepalived配合,实现备用的LVS_DR成为web服务器的负载均衡群集.。

原理:

四台服务器上安装keepalived和lvs,开放80端口提供web服务,通过keepalived竞选master接管VIP并开启LVS功能,为web服务器负载分发,同时自己也是web服务器,权重低些。

当master挂掉后,后面web服务器通过keepalived竞选新的master,并开启LVS功能。

注意:

我的vip和rip是不同网段的,主要是想实现一个公网ip组lvs_dr模式。

其实也可以改成同一网段,把VIP改成192.168.1.X

realserver回应出去时的源ip是VIP。

这里我在路由器上增加了一条到vip的静态路由:

参考文章:http://lustlost.blog.51cto.com/2600869/929915

软件:

ipvsadm-1.24.tar.gz

keepalived-1.1.19.tar.gz

安装keepalived 和ipvs

网上大把

需要的文件和脚本:

/etc/keepalived/keepalived.conf 默认的keepalived启动时的配置文件

/etc/keepalived/lvs.conf 当成为master时加入lvs功能的配置文件

/opt/shell/lvs_rsrv.sh 成为realserver用的脚本,

四台机器都安装keepalived和lvs,放置脚本和配置文件,

每台机修改的只是keepalived.conf文件和lvs.conf的少许部分!

先把需要的脚本文件放到/opt/shell下

/opt/shell/lvs_rsrv.sh 借用netseek大大的脚本,内容如下:

记得给运行权限:

开始配置keepalived:

默认启动的/etc/keepalived/keepalived.conf文件如下:

LVS功能的/etc/keepalived/lvs.conf配置文件如下:

文件都设置好了复制到每台机器上面

Server 2改的地方

/etc/keepalived/keepalived.conf文件:

/etc/keepalived/lvs.conf文件:

Server3 和server4修改的地方与server2雷同,都是修改标示,优先级和本机web服务的权重

快速修改命令:,yx为优先级的变量。

启动你的web服务后再启动keepalived

开始测试:

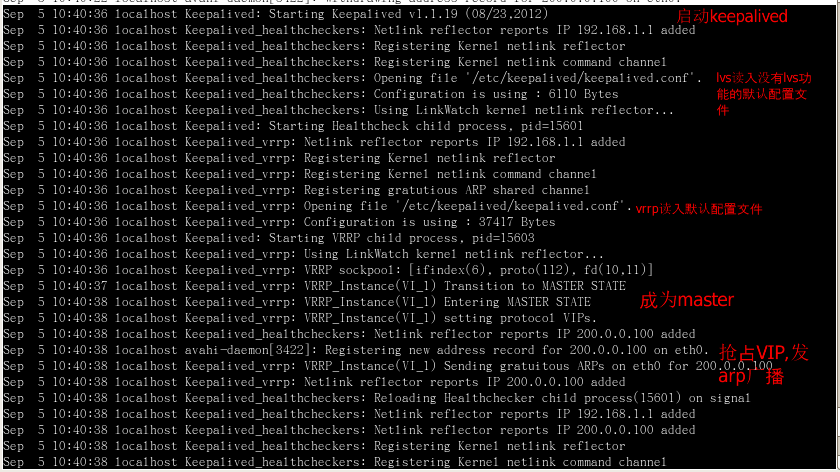

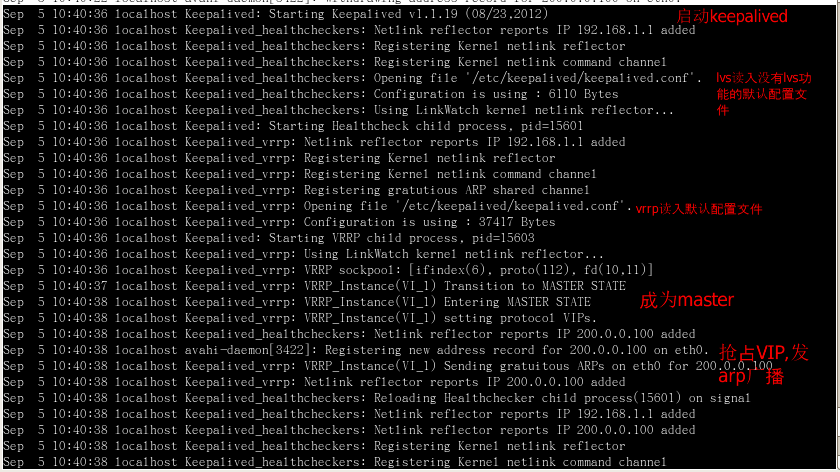

在server1上启动keepalived,查看/var/log/message日志。

接上:

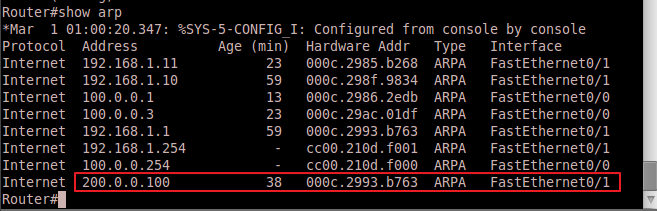

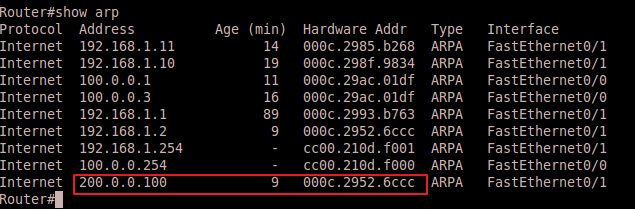

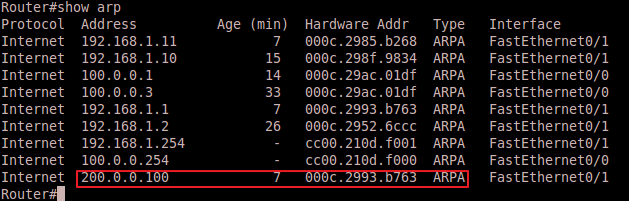

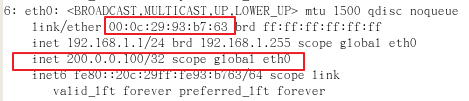

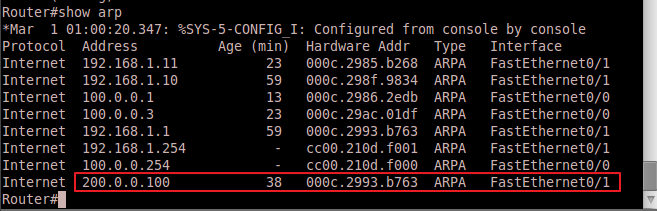

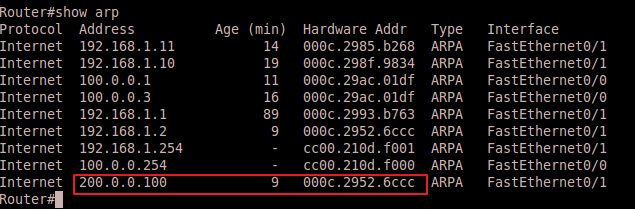

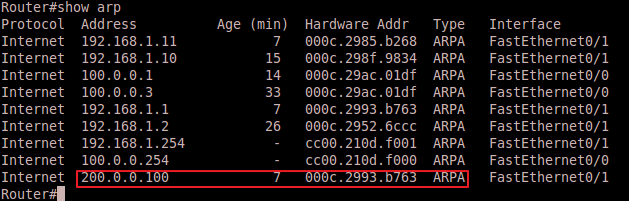

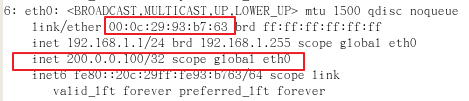

在路由器查看VIP的arp对应为server1的网卡mac

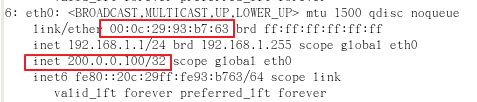

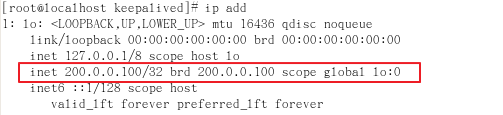

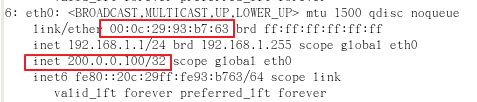

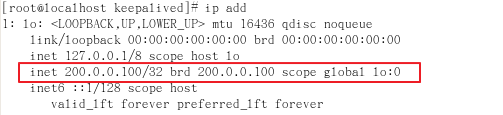

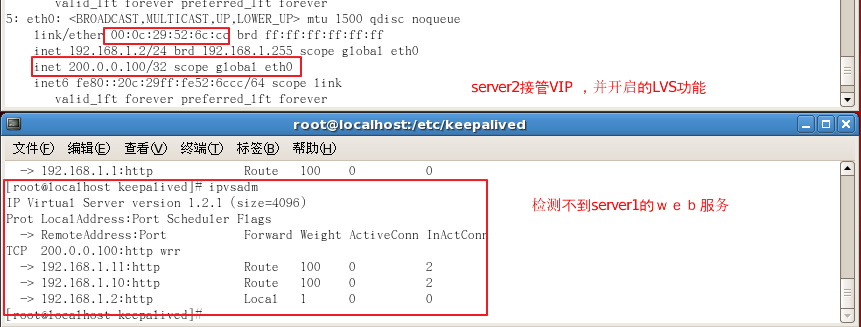

查看 server1的ip地址情况:mac地址对应

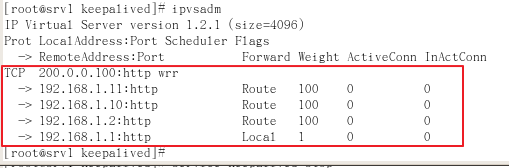

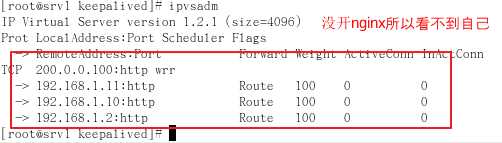

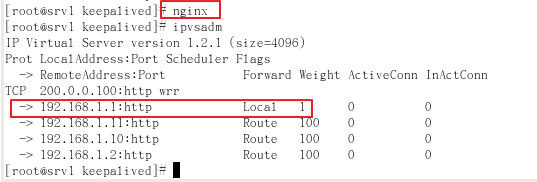

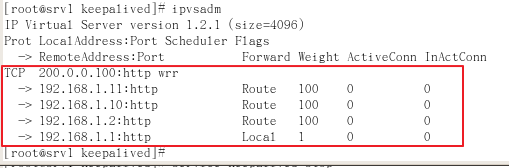

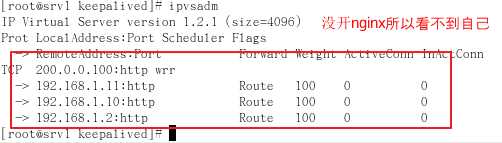

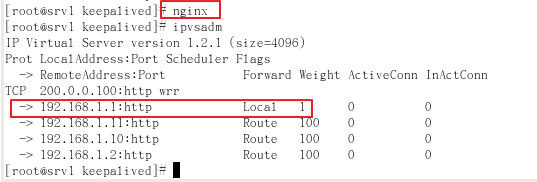

查看lvs功能:

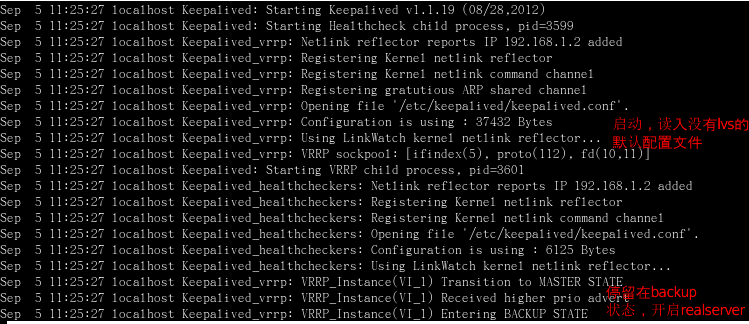

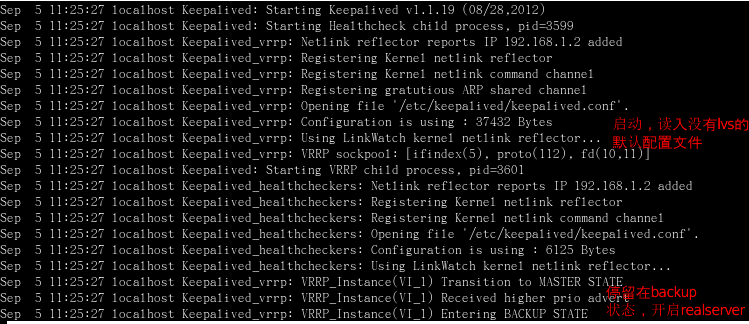

启动server2的keepalived,查看/var/log/message日志:

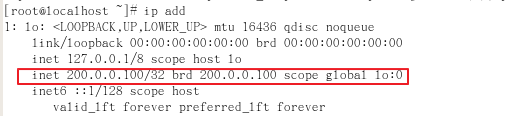

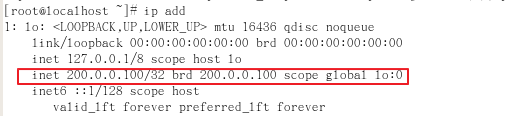

查看ip地址,lo:0口增加的vip

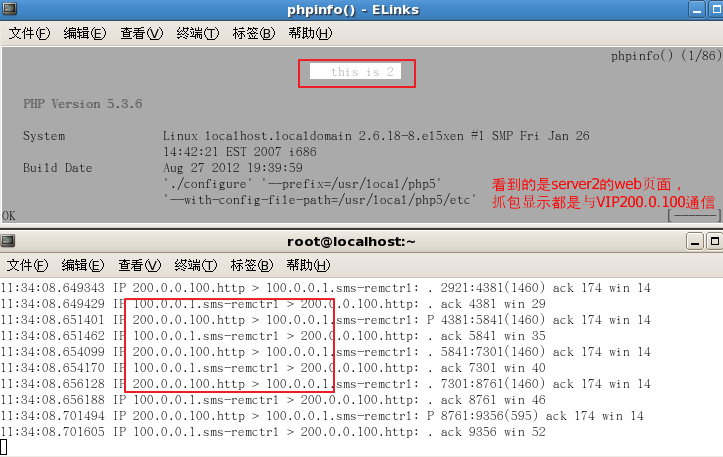

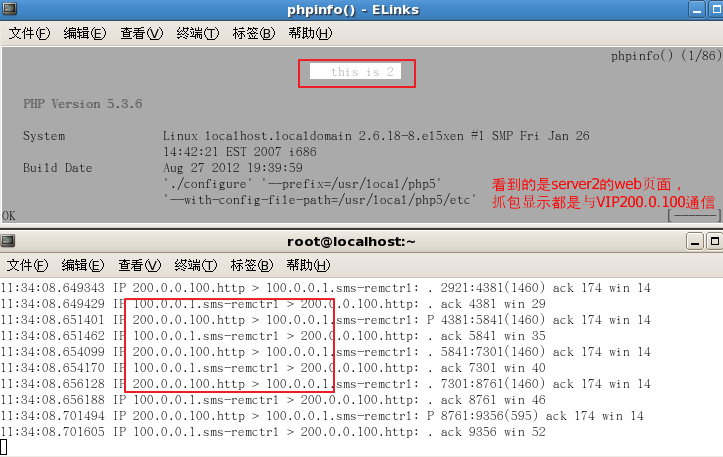

浏览测试:

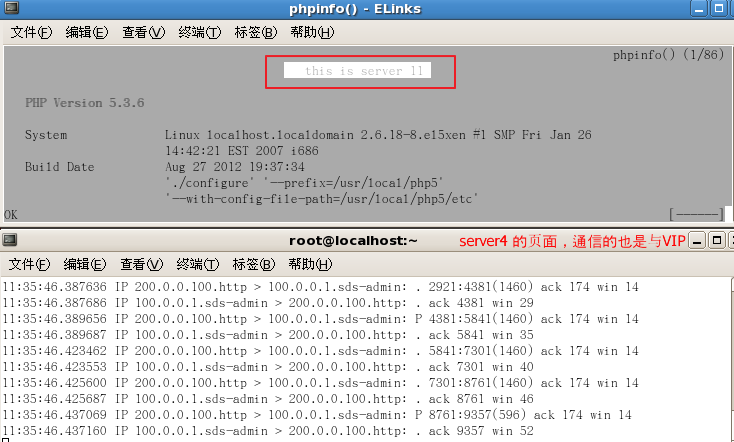

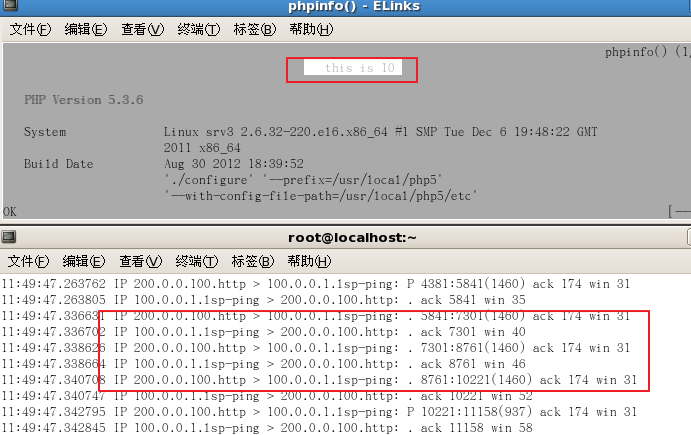

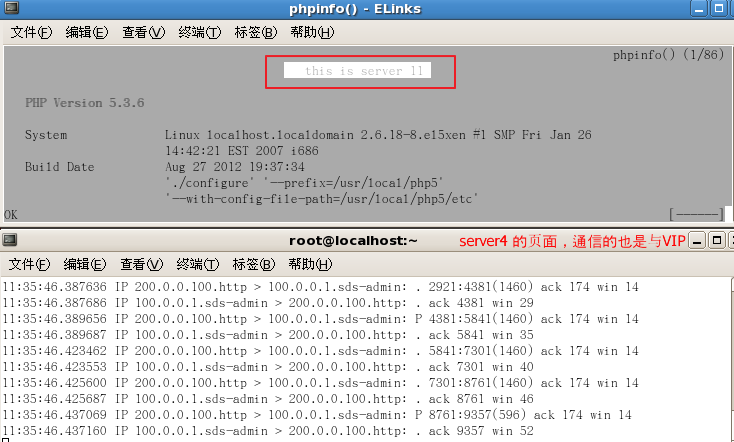

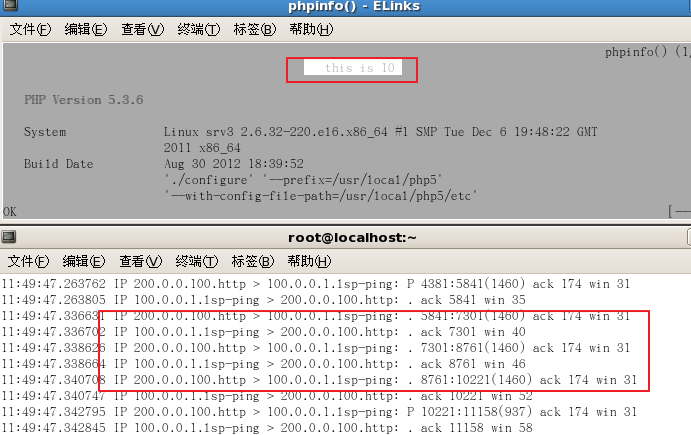

客户机地址为100.0.0.1/24 默认网关为100.0.0.254

使用elinks http://200.0.0.100/命令

切换测试:关闭server1的keepalived和nginx进程

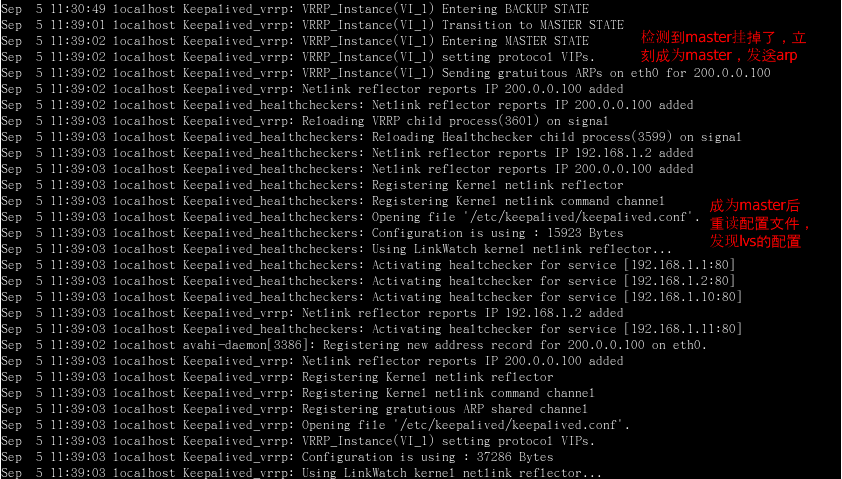

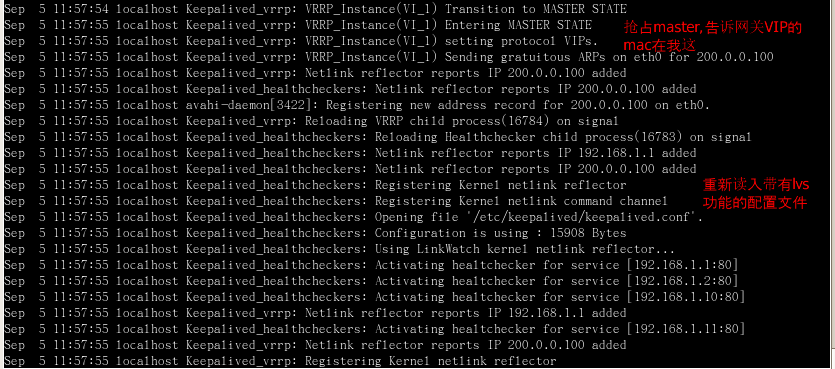

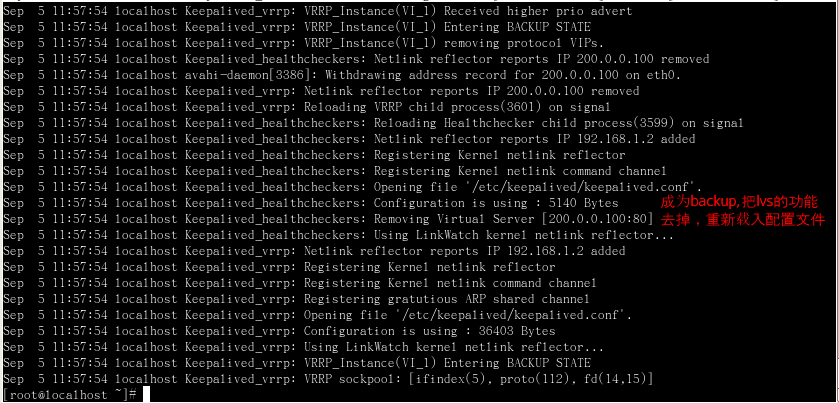

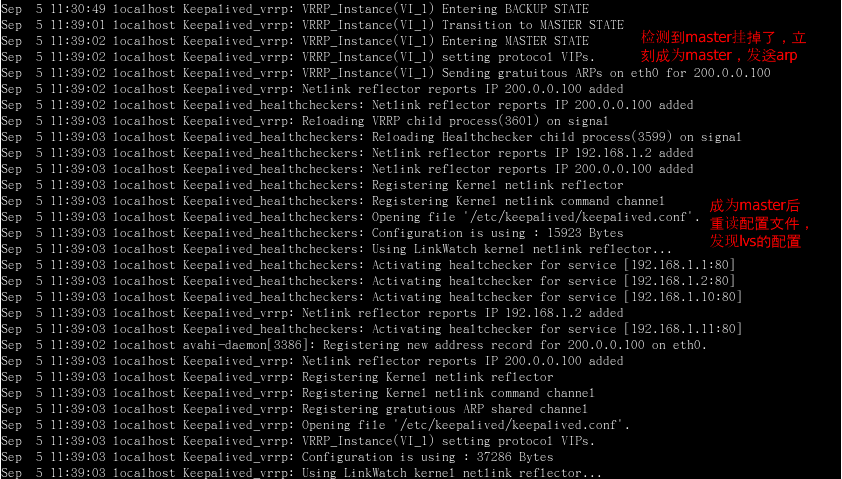

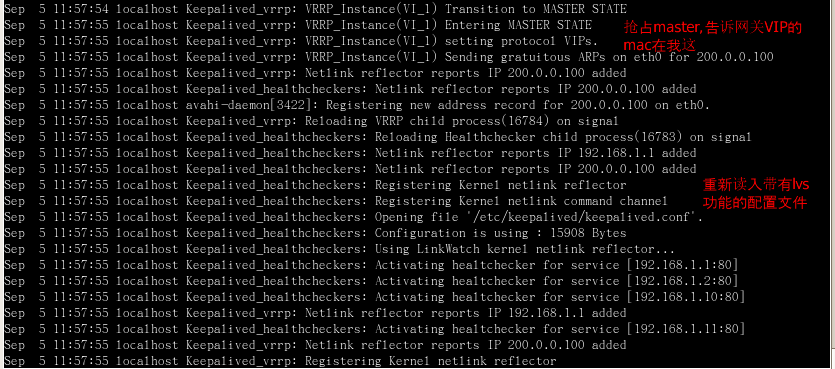

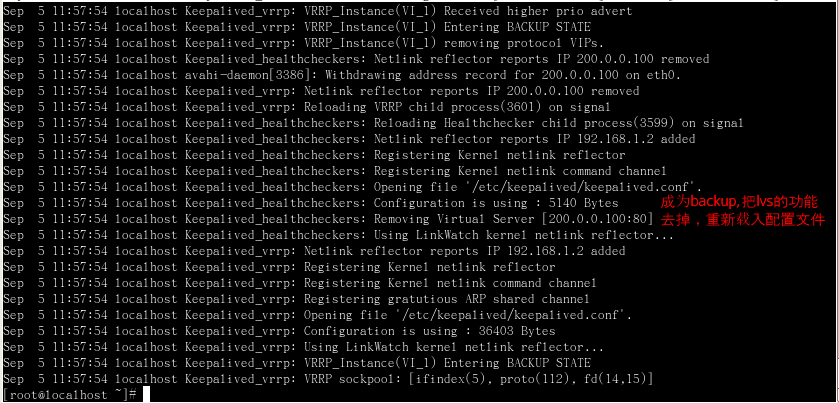

看server2接管过程:cat /var/log/message

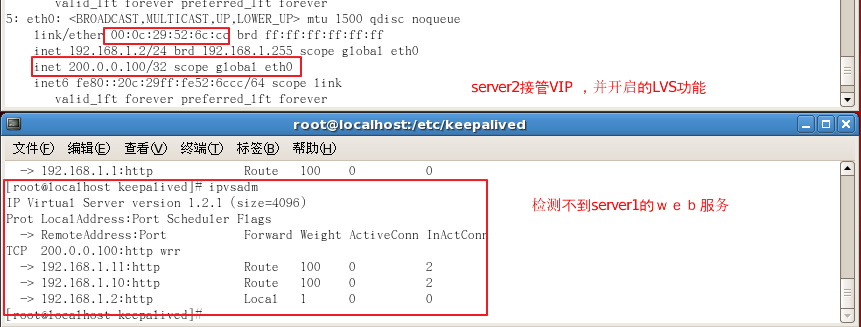

查看lvs功能是否开启:

查看路由器VIP对应的mac地址,与server2的网卡地址一样。

客户机继续浏览到页面,并与VIP通信。

抢占测试:(如果不想抢占只需在keepalived.cof文件#nopreempt 注释去掉)

这时重新启动server1进程,但不启动nginx:

抢占过程如下:启动keepalived 服务,读入没有lvs功能的配置文件,抢占master,成为mastter把lvs功能的配置加入到keepalived.conf文件里,重新载入,开启lvs功能。

查看server1的日志:

查看lvs功能:

查看路由VIP的mac地址:

查看server1的地址:

开启nginx再次查看lvs:

查看server2是否降为backup状态并成为realserver

查看是否成为realserver

可以看到,54秒时server1抢占为master,server2在54一秒内就降为backup状态变成realserver,55秒时server1重新载入带有lvs功能的配置文件并开启

实验结束----------

结语:

整个群集里,如果负载LVS的master挂掉,就会有机器会成为master,接管LVS任务,哪个web服务出现问题,LVS都能自动检测,并添加删除。做到超高可用,理论上也适合其他服务.

提示:

keepalived.conf文件这一句,

把里面的stop修改成start,即关闭keepalived时也成为realserver,方便测试各节点切换。

本文出自 “记忆” 博客,请务必保留此出处http://xzregg.blog.51cto.com/3829250/982746

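Topology:

Goal: make full use of the hardware. An LVS_DR director uses relatively few resources, so with keepalived's help the standby LVS_DR machines double as web servers in the load-balanced cluster.

How it works:

All four servers have keepalived and LVS installed and serve web traffic on port 80. Through keepalived they elect a master, which takes over the VIP and enables LVS to distribute requests to the web servers. The master is itself a web server too, just with a lower weight.

When the master goes down, the remaining web servers elect a new master through keepalived, and that node enables LVS.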
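The election described above can be pictured with a toy model. This is only a sketch: the function name `elect_master` and the `NAME:PRIORITY` argument format are made up for illustration, and real VRRP elections happen through advertisements between the nodes rather than a sorted list.

```shell
# elect_master NAME:PRIORITY...
# Prints the name of the node with the highest priority.
# Illustrative only: ties are not handled the way VRRP handles them.
elect_master() {
    printf '%s\n' "$@" | sort -t: -k2,2nr | head -n 1 | cut -d: -f1
}

# Priorities used in this article: server1 = 100, server2 = 99.
elect_master server1:100 server2:99
# After server1 fails, the election runs among the remaining nodes.
elect_master server2:99
```

The first call prints server1; once server1 is gone, the node with the next highest priority wins.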

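Notes:

My VIP and RIPs are on different subnets, mainly because I wanted LVS_DR mode with a single public IP.

You can also put them on the same subnet by changing the VIP to 192.168.1.X.

When a realserver replies, the source IP of the response is the VIP.

Here I added a static route to the VIP on the router: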
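The router command itself did not survive in this copy of the article. As a rough sketch only, assuming a Linux-based router whose LAN-facing interface is called eth1 (both are assumptions, not taken from the original), an on-link host route would make the router resolve the VIP by ARP on the server LAN. The helper below only prints the command so it can be reviewed before running it.

```shell
# vip_route_cmd VIP LAN_DEV
# Print (do not run) a host route for the VIP on a Linux router.
# LAN_DEV is a hypothetical interface name chosen for illustration.
vip_route_cmd() {
    vip=$1
    lan_dev=$2
    echo "ip route add $vip/32 dev $lan_dev"
}

vip_route_cmd 200.0.0.100 eth1
```

On other router platforms the syntax differs; the point is only that the VIP must be reachable on the segment where the servers live.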

Reference article: http://lustlost.blog.51cto.com/2600869/929915

Software:

ipvsadm-1.24.tar.gz

keepalived-1.1.19.tar.gz

Installing keepalived and ipvs

Plenty of guides are available online.
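Whichever installation guide you follow, it helps to confirm afterwards that both tools are on the PATH of every node. A minimal sketch; the helper name `missing_tools` is made up for this example.

```shell
# missing_tools CMD...
# Print each command name that cannot be found on the PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Prints nothing when both tools are installed.
missing_tools ipvsadm keepalived
```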

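Required files and scripts:

/etc/keepalived/keepalived.conf: the default configuration file keepalived starts with

/etc/keepalived/lvs.conf: the LVS configuration that is added when a node becomes master

/opt/shell/lvs_dr_rsrv.sh: the script that turns a node into a realserver (the notify commands in keepalived.conf call it by this name)

Install keepalived and LVS on all four machines and put the scripts and configuration files in place.

On each machine only a few parts of keepalived.conf and lvs.conf need to be changed.

First put the required script under /opt/shell:

    mkdir -p /opt/shell

/opt/shell/lvs_dr_rsrv.sh, borrowed from NetSeek's script, has the following contents: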

    #!/bin/bash
    # Written by NetSeek
    # description: Config realserver lo and apply noarp

    # Loopback alias that holds the VIP on a realserver
    eth="lo:0"
    # VIP
    WEB_VIP=200.0.0.100

    . /etc/rc.d/init.d/functions

    case "$1" in
    start)
        # Bind the VIP to lo:0 with a /32 mask and add a host route for it
        ifconfig $eth $WEB_VIP netmask 255.255.255.255 broadcast $WEB_VIP
        /sbin/route add -host $WEB_VIP dev $eth
        # Stop this node from answering or announcing ARP for the VIP
        # (${eth%%:0} expands to "lo")
        echo "1" >/proc/sys/net/ipv4/conf/${eth%%:0}/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/${eth%%:0}/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p >/dev/null 2>&1
        echo "RealServer Start OK"
        ;;
    stop)
        # Remove the VIP and restore the default ARP behaviour
        ifconfig $eth down
        route del $WEB_VIP >/dev/null 2>&1
        echo "0" >/proc/sys/net/ipv4/conf/${eth%%:0}/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/${eth%%:0}/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "RealServer Stopped"
        ;;
    status)
        # Status of LVS-DR real server.
        islothere=`/sbin/ifconfig $eth | grep $WEB_VIP`
        # The routing table lists the base device (lo), not the alias
        isrothere=`netstat -rn | grep "${eth%%:0}" | grep $WEB_VIP`
        if [ ! "$islothere" -o ! "$isrothere" ]; then
            echo "LVS-DR real server Stopped."
        else
            echo "LVS-DR Running."
        fi
        ;;
    *)
        # Invalid entry.
        echo "$0: Usage: $0 {start|status|stop}"
        ;;
    esac

Remember to make it executable:

    chmod +x /opt/shell/*
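After running the script with start, one way to confirm that ARP suppression took effect is to read the four kernel settings back. The sketch below is not part of NetSeek's script; the function name `check_noarp` and its optional directory argument (useful for dry runs against a scratch tree) are assumptions for illustration.

```shell
# check_noarp [CONF_ROOT]
# Succeeds when both "lo" and "all" have arp_ignore=1 and arp_announce=2,
# the values the start branch of the realserver script writes.
# CONF_ROOT defaults to the live /proc tree.
check_noarp() {
    root=${1:-/proc/sys/net/ipv4/conf}
    for iface in lo all; do
        [ "$(cat "$root/$iface/arp_ignore" 2>/dev/null)" = "1" ] || return 1
        [ "$(cat "$root/$iface/arp_announce" 2>/dev/null)" = "2" ] || return 1
    done
    return 0
}

if check_noarp; then
    echo "ARP suppression is active"
else
    echo "ARP suppression is not active"
fi
```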

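Now configure keepalived.

The default startup file /etc/keepalived/keepalived.conf is as follows:

    # ! Configuration File for keepalived
    global_defs {
        router_id LVS_1             # identifier for the machine running keepalived
    }

    # VIP
    vrrp_instance VI_1 {
        state MASTER                # initial state, MASTER or BACKUP; use the same value on every node
        interface eth0              # NIC the instance is bound to
        virtual_router_id 51        # VRID (1-255); must be identical on all nodes of the same instance
        priority 100                # priority
        advert_int 1                # advertisement interval in seconds, default 1
        # nopreempt                 # uncomment to disable preemption (keepalived honors this only when state is BACKUP)
        authentication {            # authentication
            auth_type PASS          # authentication type, PASS or AH
            auth_pass 1111          # authentication password
        }
        virtual_ipaddress {         # floating address (VIP)
            200.0.0.100
        }
        track_interface {
            eth0                    # extra interfaces to monitor; if any of them fails the instance enters the FAULT state
        }

        # The commands below are what switch the LVS function on and off.

        # On becoming master: undo the realserver settings, append the LVS settings to keepalived.conf, reload.
        notify_master "/opt/shell/lvs_dr_rsrv.sh stop;/bin/cat /etc/keepalived/lvs.conf >>/etc/keepalived/keepalived.conf;/sbin/service keepalived reload"

        # On becoming backup: apply the realserver settings, then look for the LVS settings in keepalived.conf;
        # if they are found, delete them and reload, otherwise do not reload.
        notify_backup "/opt/shell/lvs_dr_rsrv.sh start;/bin/grep '^#lvs_set' /etc/keepalived/keepalived.conf && /bin/sed -i '/^#lvs_set/,$d' /etc/keepalived/keepalived.conf && /sbin/service keepalived reload"

        # When keepalived is stopped: delete the LVS settings from keepalived.conf and undo the realserver settings.
        # This can also be changed to start, so that a node with keepalived stopped still acts as a realserver.
        notify_stop "/opt/shell/lvs_dr_rsrv.sh stop;/bin/sed -i '/^#lvs_set/,$d' /etc/keepalived/keepalived.conf"

        # Command to run on a fault (for example when eth0 goes down)
        notify_fault "/sbin/service keepalived stop"
    }

The LVS configuration file /etc/keepalived/lvs.conf is as follows: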

    #lvs_set                            # marker line, do not delete
    virtual_server 200.0.0.100 80 {     # VIP and port
        delay_loop 2                    # check real_server health every 2 seconds
        lb_algo wrr                     # LVS scheduling algorithm, weighted round robin here; options: rr|wrr|lc|wlc|lblc|sh|dh
        lb_kind DR                      # forwarding method: NAT|DR|TUN
        # persistence_timeout 60        # session persistence time
        protocol TCP                    # protocol, TCP or UDP
        real_server 192.168.1.1 80 {
            weight 1                    # weight; this is the local machine, which also runs LVS, so keep it low.
                                        # It can be set to 0 so nothing is dispatched locally
                                        # (it then stays unused even if the other web servers fail).
            TCP_CHECK {                 # TCP health check
                connect_timeout 10      # connection timeout
                nb_get_retry 3          # number of retries
                delay_before_retry 3    # delay between retries
                connect_port 80         # port to check
            }
        }
        real_server 192.168.1.2 80 {
            weight 100                  # weight
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 192.168.1.10 80 {
            weight 100                  # weight
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 192.168.1.11 80 {
            weight 100                  # weight
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

Once the files are all set, copy them to every machine:

    scp /etc/keepalived/*.conf 192.168.1.2:/etc/keepalived/
    scp -r /opt/shell 192.168.1.2:/opt/
    ...

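Changes on Server 2

In /etc/keepalived/keepalived.conf:

    router_id LVS_192.168.1.2   # identifier for the machine running keepalived
    priority 99                 # slightly lower priority

In /etc/keepalived/lvs.conf:

    real_server 192.168.1.1 80 {
        weight 100              # change server1's weight
    ...
    real_server 192.168.1.2 80 {
        weight 1                # lower this machine's own weight
    ...

Server3 and server4 are changed the same way as server2: the identifier, the priority, and the weight of the local web service.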
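To get a feel for what these weights mean: with wrr, each real server's long-run share of new connections is roughly its weight divided by the sum of all weights. A small arithmetic sketch, assuming all four nodes pass their health checks; the helper name `wrr_share` is made up for this example.

```shell
# wrr_share WEIGHT TOTAL
# Print WEIGHT as a percentage of TOTAL, rounded to two decimals.
wrr_share() {
    awk -v w="$1" -v t="$2" 'BEGIN { printf "%.2f\n", 100 * w / t }'
}

total=$((1 + 100 + 100 + 100))   # one local entry at weight 1, three others at weight 100
wrr_share 1 "$total"             # share handled by the master itself
wrr_share 100 "$total"           # share handled by each other node
```

With these numbers the master serves about 0.33% of requests and each of the other three about 33.22%, which is why the director role costs the master very little web capacity.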

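Quick-edit commands (yx is the priority variable):

    yx=99
    # IP of eth0, parsed from the "inet addr:" line printed by the old net-tools ifconfig
    ip=`ifconfig eth0 | awk -F"[ :]+" 'NR==2{print $4}'`
    sed -i "/router_id LVS_1/{s/LVS_1/LVS_$ip/}" /etc/keepalived/keepalived.conf
    sed -i "/priority 100/{s/100/$yx/}" /etc/keepalived/keepalived.conf
    # Reset every weight to 100, then lower the weight of this machine's own real_server entry
    sed -i 's/weight 1.*/weight 100/' /etc/keepalived/lvs.conf
    sed -i "/real_server $ip 80/{N;s/weight 100/weight 1/}" /etc/keepalived/lvs.conf

Start your web service first, then start keepalived:

    nginx
    service keepalived start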
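Because the web service should already be answering before keepalived comes up, a quick port probe can be run in between. This is a sketch, not something from the original article: the helper name `port_open` is made up, and it relies on bash's /dev/tcp redirection and the coreutils timeout command. It is also roughly what the TCP_CHECK health check does: try to open a TCP connection and treat failure as "down".

```shell
# port_open HOST PORT
# Succeeds if a TCP connection to HOST:PORT can be opened within 2 seconds.
port_open() {
    timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

if port_open 127.0.0.1 80; then
    echo "web service is listening, keepalived can be started"
else
    echo "nothing is listening on port 80 yet"
fi
```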

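Testing:

Start keepalived on server1 and check the /var/log/messages log.

Continued:

On the router, the ARP entry for the VIP maps to the MAC address of server1's NIC.

Check the IP addresses on server1; the MAC address matches:

    ip addr

Check the LVS function: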
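The screenshot for this step is not available here. The usual way to inspect the LVS table is `ipvsadm -Ln`, which lists each virtual service followed by one `->` line per real server. As a sketch, the filter below reduces that listing to address and weight; the function name `rs_weights` is made up for this example.

```shell
# rs_weights
# Read `ipvsadm -Ln` output on stdin and print "address:port weight"
# for every real server line (lines whose first field is "->").
rs_weights() {
    awk '$1 == "->" && $2 ~ /^[0-9]/ { print $2, $4 }'
}

# Usage on the current master (needs root and ipvsadm):
#   ipvsadm -Ln | rs_weights
```

On the master you would expect the local address with the low weight and the other nodes with weight 100, matching lvs.conf.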

Start keepalived on server2 and check the /var/log/messages log:

Check the IP addresses; the VIP has been added on lo:0.
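Where the VIP sits tells you which role a node currently plays: on the loopback it is a realserver, on a regular NIC it is the director. A minimal sketch that derives the role from `ip -o -4 addr` output; the function name `vip_role` and the role labels are made up for illustration.

```shell
# vip_role VIP
# Read `ip -o -4 addr` output on stdin and report where VIP is configured:
#   on lo            -> realserver
#   on any other NIC -> director
#   not present      -> none
vip_role() {
    awk -v vip="$1" '
        { split($4, a, "/") }
        a[1] == vip { role = ($2 == "lo") ? "realserver" : "director" }
        END { print (role ? role : "none") }
    '
}

# Usage:
#   ip -o -4 addr | vip_role 200.0.0.100
```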

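Browsing test:

The client's address is 100.0.0.1/24 with default gateway 100.0.0.254.

Use the command elinks http://200.0.0.100/

Failover test: stop the nginx and keepalived processes on server1:

    killall nginx
    killall keepalived

Watch server2 take over: cat /var/log/messages

Check whether the LVS function is enabled:

On the router, the MAC address for the VIP is now the same as the address of server2's NIC.

The client can still browse the page and keeps talking to the VIP.

Preemption test (if you do not want preemption, just uncomment #nopreempt in keepalived.conf):

Now start keepalived on server1 again, but do not start nginx:

    service keepalived start

The preemption sequence is as follows: the keepalived service starts and reads the configuration file without the LVS section, preempts the master role, and once it is master appends the LVS configuration to keepalived.conf, reloads, and enables LVS.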
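The append-then-strip mechanism behind this sequence can be tried safely on scratch files. The sketch below mirrors what the notify commands do with the #lvs_set marker; the function names are made up, and the guard in `lvs_enable` that skips the append when the marker is already present is an addition for illustration, not part of the original notify_master command.

```shell
MARKER='#lvs_set'

# lvs_enable MAIN LVS
# Append the LVS section to MAIN unless the marker is already there.
lvs_enable() {
    grep -q "^$MARKER" "$1" || cat "$2" >>"$1"
}

# lvs_disable MAIN
# Delete everything from the marker line to the end of MAIN (GNU sed).
lvs_disable() {
    sed -i "/^$MARKER/,\$d" "$1"
}

# Dry run on scratch copies instead of /etc/keepalived.
work=$(mktemp -d)
printf 'vrrp_instance VI_1 {\n}\n' >"$work/keepalived.conf"
printf '#lvs_set\nvirtual_server 200.0.0.100 80 {\n}\n' >"$work/lvs.conf"
lvs_enable "$work/keepalived.conf" "$work/lvs.conf"
grep -c virtual_server "$work/keepalived.conf" || true   # count after becoming master
lvs_disable "$work/keepalived.conf"
grep -c virtual_server "$work/keepalived.conf" || true   # count after dropping to backup
rm -rf "$work"
```

Because everything after the marker is deleted, the marker has to stay the first line of lvs.conf and nothing else may be placed after the appended section.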

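Check the log on server1:

Check the LVS function:

Check the MAC address of the VIP on the router:

Check server1's addresses:

Start nginx and check LVS again:

Check whether server2 has dropped to the backup state and become a realserver:

Check whether it has become a realserver:

As you can see, server1 preempted the master role at second 54, server2 dropped to the backup state and became a realserver within that same second, and at second 55 server1 reloaded the configuration file containing the LVS section and enabled it.

End of the experiment.

Conclusion:

Across the whole cluster, if the master handling LVS goes down, another machine becomes master and takes over the LVS job. Whenever a web service has a problem, LVS detects it automatically and removes or re-adds the server. This gives very high availability, and in theory the approach suits other services as well.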

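Tip:

In keepalived.conf, take this line:

    notify_stop "/opt/shell/lvs_dr_rsrv.sh stop;/bin/sed -i '/^#lvs_set/,$d' /etc/keepalived/keepalived.conf"

Changing stop to start in it makes a node become a realserver when keepalived is shut down as well, which is convenient for testing failover between nodes.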
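If you want to apply that change on several nodes, it can be scripted. A minimal sketch, assuming GNU sed; the function name `realserver_on_stop` is made up, and the edit is restricted to the notify_stop line so that notify_master is left alone.

```shell
# realserver_on_stop CONF
# On the notify_stop line only, rewrite "lvs_dr_rsrv.sh stop"
# to "lvs_dr_rsrv.sh start".
realserver_on_stop() {
    sed -i '/^[[:space:]]*notify_stop/s/lvs_dr_rsrv\.sh stop/lvs_dr_rsrv.sh start/' "$1"
}

# Usage (back up the file first):
#   realserver_on_stop /etc/keepalived/keepalived.conf
```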

This article comes from the "记忆" blog; please keep this attribution: http://xzregg.blog.51cto.com/3829250/982746
